Abstract

An arbitrage portfolio provides, at zero cost, a cash flow that can never be negative. We define the weaker concept of a “desirable portfolio”, which delivers cash flows with negative risk at zero cost. Although these are not completely risk-free investments and depend on the risk measure used, they can provide attractive investment opportunities for investors. We investigate in detail the theoretical aspects of this portfolio selection procedure and the existence of such opportunities in fixed income markets. Then, we present two applications of the theory: one in analyzing the market integration problem and the other in gauging the credit quality of defaultable bonds in a portfolio. We also discuss the model calibration and provide some numerical illustrations.

1. Introduction

We introduce a methodology, based on risk measures, that can detect more general risk-return tradeoff opportunities than classical arbitrage ones. An arbitrage portfolio provides, at zero cost, a cash flow that can never be negative. We define a weaker concept, a portfolio delivering cash flows with negative risk at zero cost, which we call a desirable portfolio or opportunity.

We use optimization techniques to quantitatively measure and detect the existence of desirable opportunities (or optimal portfolios from a financial point of view) which we define through convex risk measures. Then, we apply the theory to analyze market integration and to study the credit quality of bonds issued in defaultable markets. In the following, we give a short review of risk measures, as they are essential tools in our analysis.

Risk measures are popular tools for gauging and mitigating the risk of financial positions. In particular, they are helpful in determining the capital requirements necessary to maintain the solvency of a company. For a thorough review of risk measures and the many useful references therein, we refer to Föllmer and Weber (2015). Most of the popular risk measures are either coherent or convex.¹ The former were introduced in Artzner et al. (1999), while, for the latter, we refer to Föllmer and Schied (2011).

Coherent risk measures are convex but not the other way around. Convex risk measures are more convenient when dealing with optimization. In particular, this is the case when the constraints of admissible assets of an optimization problem form a convex set rather than a cone. This is argued in Weber et al. (2013) and Föllmer and Weber (2015). Hence, the main results here are presented according to a class of convex risk measures covering a wide range of practical risk measures such as CVaR.

We use convex risk measures and ignore transaction costs, which implies a linear pricing functional. Within this setting, we formulate and detect desirable opportunities in markets by forming a static optimal portfolio, in a sense to be made precise.

We study two optimization problems. In the first one, we simply maximize profit subject to constraints, determined through a convex risk measure, that require the risk to be non-positive. In the second problem, we maximize the profit and minimize the risk simultaneously, subject to the sequential arbitrage constraints introduced in Balbás and López (2008). These optimization problems are used to quantify and detect desirable portfolios in markets.

We present two applications of this portfolio optimization theory. In the first one, we use the theory to build an indicator that measures market integration and identifies inefficiencies in the market. The second, and main, application of this work is a numerically implementable procedure to gauge the credit quality of bonds in a defaultable market that is assumed not to provide any desirable opportunities. For the numerical implementation, as an example, we use the CVaR risk measure.

Typically, a bond price is driven by two main factors. The first is the price computed from an interest rate model under the assumption that there are no premiums to compensate for embedded options or other contingencies such as default risk. The second is a premium for the embedded options, the risk of default, or other contingencies. To reflect the risk of default, most bonds in the market are rated by rating agencies; typically, the most creditworthy bonds are those issued by governments. Our goal is to measure this credit premium and, more broadly, to study the credit quality of defaultable bonds. Although other types of risk may affect the price of a bond, here we assume that the risk of default is the dominant one.

The outline of the paper is as follows. In Section 2, we discuss some basic facts and preliminaries. In Section 3, the concept of desirable portfolios, based on optimization theory and risk measures, is defined. A dual problem plays a critical role in providing Karush-Kuhn-Tucker-like conditions. The model calibration and numerical implementations are explained in Section 4. In Section 5, we apply the model to market integration, and see how the dual solution allows us to measure “potential pricing errors” and market inefficiencies. Finally, in Section 6, we show the application of the theory to measure the credit quality of defaultable bonds.

2. Preliminaries

Assume that uncertainty in a market is modeled by the probability space $(\Omega, \mathcal{F}, \mathbb{P})$, and that $A = (a_{ij})$ is an $m \times n$ matrix representing a portfolio of n bonds with possible future cash flows at times $t_1 < t_2 < \cdots < t_m$. The column j of matrix A, denoted by $A_j$, represents the future cash flows of bond j in the portfolio, i.e., $a_{1j}, \ldots, a_{mj}$ at the future dates $t_1, \ldots, t_m$, while row i represents the total cash flows of these n bonds at time $t_i$. Here, $T = t_m$ represents the maturity date of the cash flows, the last time when a payment is made in the portfolio.² In addition, suppose that $p_j$, with $j = 1, \ldots, n$, is the current price of the j-th bond.

Assume that any future cash flow of a bond, or in general of a portfolio, is reinvested, and denote the accumulated wealth generated by this cash flow by $W$. Here, we assume that this reinvestment is in fixed income markets. Although the cash flows are predetermined, because of fluctuations in interest rates, the accumulated wealth is a random variable at the maturity time T. For now, we assume that this is the only source of randomness that makes $W$ uncertain. This motivates the definition of the accumulated wealth function $W: \mathbb{R}^m \to L^0(\Omega, \mathcal{F}, \mathbb{P})$, where

$$W(c) = \sum_{i=1}^{m} c_i \exp\left(\int_{t_i}^{T} r_s \, ds\right),$$

$r_s$ denotes the instantaneous short rate, and $c = (c_1, \ldots, c_m)$ is a vector in $\mathbb{R}^m$.
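To make the accumulated wealth function concrete, the following minimal sketch evaluates $W(c)$ along a single short-rate path, assuming $W(c) = \sum_i c_i \exp(\int_{t_i}^T r_s\,ds)$, i.e., every cash flow is reinvested at the short rate until $T$. The helper name `accumulated_wealth`, the time grid, and the trapezoidal rule for the integral are illustrative choices, not part of the text.

```python
import numpy as np


def accumulated_wealth(cash_flows, pay_times, grid, rate_path):
    """W(c) = sum_i c_i * exp(int_{t_i}^T r_s ds) along one short-rate path.

    Hypothetical helper: T is taken as grid[-1], and the integral is
    approximated with the trapezoidal rule on the time grid.
    """
    c = np.asarray(cash_flows, dtype=float)
    grid = np.asarray(grid, dtype=float)
    r = np.asarray(rate_path, dtype=float)
    # Running integral I(t) = int_0^t r_s ds evaluated on the grid.
    steps = 0.5 * (r[1:] + r[:-1]) * np.diff(grid)
    running = np.concatenate(([0.0], np.cumsum(steps)))
    # int_{t_i}^T r_s ds = I(T) - I(t_i), with I interpolated at the payment dates.
    to_maturity = running[-1] - np.interp(pay_times, grid, running)
    return float(np.sum(c * np.exp(to_maturity)))


# Usage: a 2-year bond paying a coupon of 1 at t = 0.5, 1.0, 1.5 and 101 at T = 2,
# reinvested at a hypothetical constant short rate of 3%.
grid = np.linspace(0.0, 2.0, 201)
rates = np.full_like(grid, 0.03)
wealth = accumulated_wealth([1, 1, 1, 101], [0.5, 1.0, 1.5, 2.0], grid, rates)
```

With a constant rate the result reduces to $e^{0.045} + e^{0.03} + e^{0.015} + 101$, and the function is linear in the cash-flow vector, as assumed for $W$ in the text.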

Suppose that $W(\mathbb{R}^m) \subseteq Y$, where Y is a Banach space of random variables equipped with a norm $\|\cdot\|$, and with the dual space Z. By ignoring transaction costs, we can assume that $W$ is a linear function.

To control the risk over the space Y, we use risk measures. In our work, this space is interpreted as the space of all future gains. In general, a risk measure $\rho$ can be defined over the space $L^0(\Omega, \mathcal{F}, \mathbb{P})$, the set of all real-valued random variables. For any random variable X belonging to $L^0(\Omega, \mathcal{F}, \mathbb{P})$, the quantity $\rho(X)$ can be interpreted as the risk associated with the future wealth or gain X over a period of time. First, we review some axioms of risk measures.

Suppose that X and Y represent gains of two investments and consider the following properties:

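- Subadditivity: For all $X, Y \in L^0$, $\rho(X + Y) \le \rho(X) + \rho(Y)$.

- Positive homogeneity: For all $X \in L^0$ and $\lambda \ge 0$, $\rho(\lambda X) = \lambda \rho(X)$.

- Translation invariance: For all $X \in L^0$ and all $c \in \mathbb{R}$, $\rho(X + c) = \rho(X) - c$.

- Monotonicity: For all $X, Y \in L^0$, if $X \le Y$ then $\rho(X) \ge \rho(Y)$.

- Convexity: For all $X, Y \in L^0$ and $\lambda \in [0, 1]$, $\rho(\lambda X + (1 - \lambda) Y) \le \lambda \rho(X) + (1 - \lambda) \rho(Y)$.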

A risk measure is called coherent if it satisfies the subadditivity, positive homogeneity, translation invariance, and monotonicity. A risk measure that satisfies monotonicity, translation invariance, and convexity is called a convex risk measure. Thus, coherent risk measures are convex, but not the other way around.

Artzner et al. (1999) define coherent risk measures; however, in their paper, the random variable X represents a loss. They also find a representation theorem on a finite probability space.

Since the work of Artzner et al. (1999), their results have been extended in a variety of ways to define different types of risk measures. For instance, deviation measures and expectation-bounded risk measures were introduced by Rockafellar et al. (2006). Their paper provides some insights into the structure of the sub-gradient sets associated with risk measures. Distortion risk measures were introduced by Wang (2000), and their properties are analyzed in Balbás et al. (2009). All these risk measures are defined on a probability space. There are also empirical risk measures that are defined on data sets; these are discussed in Section 4. We review two important and well-known risk measures. The value-at-risk of X at the confidence level $\alpha \in (0, 1)$ is given by

$$\mathrm{VaR}_\alpha(X) = -\inf\{x \in \mathbb{R} : F_X(x) > 1 - \alpha\},$$

where $F_X$ is the distribution function of X. The conditional value-at-risk (CVaR) is given by

$$\mathrm{CVaR}_\alpha(X) = -\mathbb{E}\left[X \mid X \le -\mathrm{VaR}_\alpha(X)\right], \qquad (1)$$

when $F_X$ is continuous at $-\mathrm{VaR}_\alpha(X)$.
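For sampled gains, both quantities can be estimated from the empirical distribution. The sketch below assumes N equally likely observations. Its CVaR estimator averages the worst $(1-\alpha)$ fraction of outcomes and splits the boundary observation when $N(1-\alpha)$ is not an integer, which follows the discrete definition of Acerbi and Tasche (2002) rather than the continuous form in Equation (1). The function names are hypothetical.

```python
import numpy as np


def _tail_mass(n, alpha):
    """Number of observations in the (1 - alpha) tail, possibly fractional."""
    m = n * (1.0 - alpha)
    # Snap round-off such as 5.000000000000004 back to the intended integer.
    return float(round(m)) if abs(m - round(m)) < 1e-9 else m


def empirical_var(gains, alpha):
    """Empirical VaR_alpha of a gain sample: -inf{x : F_N(x) > 1 - alpha}."""
    x = np.sort(np.asarray(gains, dtype=float))
    k = int(np.floor(_tail_mass(len(x), alpha)))
    return -x[min(k, len(x) - 1)]


def empirical_cvar(gains, alpha):
    """Empirical CVaR_alpha: minus the average of the worst (1 - alpha) fraction
    of outcomes, with the boundary observation weighted fractionally if needed."""
    x = np.sort(np.asarray(gains, dtype=float))
    m = _tail_mass(len(x), alpha)
    k = int(np.floor(m))
    tail_sum = x[:k].sum()
    if k < len(x):
        tail_sum += (m - k) * x[k]
    return -tail_sum / m


gains = np.arange(1, 101)  # hypothetical sample of 100 gains: 1, 2, ..., 100
print(empirical_var(gains, 0.95), empirical_cvar(gains, 0.95))  # -6.0 -3.0
```

Both values are negative here because every sampled gain is positive, and CVaR is never smaller than VaR, in line with the comparison made in the text.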

Note that VaR is not a subadditive risk measure, and hence it can penalize diversification in a portfolio. However, in practice, when the probability level is high enough, VaR is typically subadditive, see Daníelsson et al. (2013), and its practical advantages may outweigh its theoretical deficiencies. Moreover, Dhaene et al. (2008) warn against blind adherence to coherent risk measures and point out that whether subadditivity is a desirable property also depends on the precise business context at hand.

It is shown in Artzner et al. (1999) that VaR is not a convex risk measure. Moreover, since VaR is smaller than CVaR, VaR might ignore the risk of large losses, and because it is neither convex nor subadditive, it is harder to use in optimization problems. Our theory is not applicable to non-convex risk measures such as VaR.
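The failure of subadditivity can be seen on a standard two-bond example with hypothetical numbers: each bond loses 100 with probability 4% and otherwise gains 0, and the two defaults are independent. At $\alpha = 0.95$, each bond alone has VaR 0, since its default probability is below 5%, but the pair has VaR 100; CVaR stays subadditive, at about 103.2 for the pair against 80 for each bond. The sketch below computes both measures exactly on the discrete distributions; the function names are illustrative.

```python
import numpy as np


def discrete_var(outcomes, probs, alpha):
    """VaR_alpha of a discrete gain distribution: -inf{x : F(x) > 1 - alpha}."""
    order = np.argsort(outcomes)
    x = np.asarray(outcomes, dtype=float)[order]
    cdf = np.cumsum(np.asarray(probs, dtype=float)[order])
    # First support point where the CDF strictly exceeds 1 - alpha
    # (the small tolerance guards against round-off in the cumulative sum).
    idx = int(np.argmax(cdf > (1.0 - alpha) + 1e-12))
    return -x[idx]


def discrete_cvar(outcomes, probs, alpha):
    """CVaR_alpha: minus the average gain over the worst (1 - alpha) of probability mass."""
    order = np.argsort(outcomes)
    x = np.asarray(outcomes, dtype=float)[order]
    p = np.asarray(probs, dtype=float)[order]
    budget, total = 1.0 - alpha, 0.0
    for xi, pi in zip(x, p):
        take = min(pi, budget)
        total += take * xi
        budget -= take
        if budget <= 1e-15:
            break
    return -total / (1.0 - alpha)


# Hypothetical bond: loses 100 with probability 4%, otherwise gains 0.
single = ([-100.0, 0.0], [0.04, 0.96])
# Two independent copies of the bond held together.
pair = ([a + b for a in single[0] for b in single[0]],
        [p * q for p in single[1] for q in single[1]])

alpha = 0.95
var_single, var_pair = discrete_var(*single, alpha), discrete_var(*pair, alpha)
cvar_single, cvar_pair = discrete_cvar(*single, alpha), discrete_cvar(*pair, alpha)
# VaR of the pair exceeds the sum of the individual VaRs; CVaR does not.
print(var_pair, 2 * var_single)
print(cvar_pair, 2 * cvar_single)
```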

While the definition of VaR is consistent throughout the literature, CVaR appears under several names and definitions; terms such as Average Value at Risk (AVaR), Tail Value at Risk, and Expected Shortfall are also used.

The representation in Equation (1) is introduced in Rockafellar and Uryasev (2000), and it requires a continuous distribution. In Acerbi and Tasche (2002), another form of CVaR, called AVaR, is defined in terms of VaR without requiring the distribution to be continuous. In Rockafellar and Uryasev (2002), CVaR is defined for general distributions, including discrete ones. For the different definitions of CVaR and how they relate to each other, we refer to Föllmer and Schied (2011).

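The composition of $\rho$ and $W$, denoted by $\rho_W = \rho \circ W$, defines a risk measure from $\mathbb{R}^m$ into $\mathbb{R}$ that satisfies subadditivity, positive homogeneity, and convexity if the risk measure $\rho$ does so. If $\rho$ is a convex risk measure, then the linearity of $W$ leads to the convexity of $\rho_W$, i.e., for all cash flows $c, d \in \mathbb{R}^m$ and for all $\lambda \in [0, 1]$, we have:

$$\rho_W(\lambda c + (1 - \lambda) d) \le \lambda \rho_W(c) + (1 - \lambda) \rho_W(d). \qquad (2)$$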
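The convexity inequality for $\rho_W = \rho \circ W$ can be spot-checked on scenarios. The sketch assumes equally likely scenarios of the growth factors $\exp(\int_{t_i}^T r_s\,ds)$, generated here from hypothetical random rates, and takes $\rho$ to be an empirical CVaR, so that $\rho_W(c)$ is the CVaR of the scenario vector of $W(c)$. All names and parameters are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
n_scen, m = 1000, 4
# Hypothetical scenarios: growth[k, i] plays the role of exp(int_{t_i}^T r_s ds)
# in scenario k, built from a random rate times the time to maturity T - t_i = 4, 3, 2, 1.
growth = np.exp(rng.normal(0.02, 0.01, size=(n_scen, m)) * np.arange(m, 0, -1))


def rho_W(c, alpha=0.95):
    """rho_W(c) = CVaR_alpha(W(c)), with W(c) = growth @ c over equally likely scenarios."""
    wealth = growth @ np.asarray(c, dtype=float)
    k = int(round(n_scen * (1 - alpha)))  # assumes n_scen * (1 - alpha) is an integer
    return -np.sort(wealth)[:k].mean()


# Spot-check the convexity inequality on random cash flows c, d and weights lam.
for _ in range(100):
    c, d = rng.normal(size=m), rng.normal(size=m)
    lam = rng.uniform()
    lhs = rho_W(lam * c + (1 - lam) * d)
    rhs = lam * rho_W(c) + (1 - lam) * rho_W(d)
    assert lhs <= rhs + 1e-10
```

Because $W$ is linear and the empirical CVaR is a maximum of linear functions of the scenario vector, the inequality holds up to rounding in every draw.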

The domain of $\rho_W$ can be considered as a set of cash flows. For this reason, it would be more appropriate to call $\rho_W$ a risk statistic, defined in more detail in Section 4. In addition, due to the effect of interest rates, $\rho_W$ in our model is not necessarily translation invariant, even if $\rho$ is; we defer the discussion about this to Section 4.

Throughout this paper, without loss of generality, we assume that the risk measure $\rho$ is normalized, i.e., $\rho(0) = 0$, and so we conclude that $\rho_W(0) = 0$. A suitable representation of $\rho$ or $\rho_W$ plays an important role in the next few sections. In the following, we specify the type of representation that we are going to use.

Recall that Y (which includes $W(\mathbb{R}^m)$) is a Banach space equipped with the norm $\|\cdot\|$, and with the dual space Z.

Assumption 1.

Suppose that is any risk measure on Banach space Y that admits the following representation. There exists such that for every :

where

is compact with respect to the product topology of and the usual topology on .

It is easy to see that satisfies convexity, and it is also continuous with respect to the norm topology defined by .

The properties of a risk measure under Assumption 1 are discussed in Balbás et al. (2010a) for . This assumption is met by many convex and coherent risk measures such as downside semi-deviations, CVaR, Wang’s distortion measure, the absolute deviation, and the standard deviation.

If is any continuous risk measure satisfying convexity, then Theorem 3.1 of Okhrati and Assa (2017) shows that

Therefore, we can take the representation in Equation (3) of Assumption 1 as our primal hypothesis on , in the sense that any convex continuous risk measure either satisfies it or can be approximated by such risk measures with a compact sub-gradient set.³ In particular, the above approximation can be applied to where the dual space of Y is and . Throughout Section 3, we suppose that and Assumption 1 is in force.

If satisfies convexity in Equation (2), then, for every , we have:

where

and is the Euclidean inner product on .

Using the previous representation or directly applying Fenchel’s duality theorem, see Theorem A.62 of Föllmer and Schied (2011), we can further show the following.

Corollary 1.

Assume that satisfies subadditivity and positive homogeneity, then

where

and is the Euclidean inner product on .

Remark 1.

By max in the above corollary or in the rest of the section, we implicitly mean that the maximum is attained. For example, in Corollary 1, it turns out that there exists such that

3. Measurement of Desirable Portfolios

3.1. First Problem: Maximizing the Income

In this section, we determine our optimal desirable portfolios according to Definition 1. We also provide a necessary and sufficient condition for the existence of such opportunities in a market. Remember that, in this section, we use a risk measure that satisfies Assumption 1 for .

Definition 1.

(Desirable Portfolio) Assume that represents a portfolio consisting of units of bond j, for . Then, is said to be a desirable portfolio if

or, equivalently, , where , and , for

To interpret the above definition, note that is the current price of the portfolio, while for any , the sum is the total cash flow of the portfolio at time . The above definition simply says that there is no cost for the portfolio and, at the same time, the risk measure for the portfolio cash flow is non-positive. The terminology “desirable” is similar to the definition of “desirable claims” in Černý and Hodges (2002) where a desirable position is a member of a convex set disjoint from the origin.
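Definition 1 combines two checks: the current price $\sum_j p_j x_j$ and the risk of the accumulated cash flows $\sum_j a_{ij} x_j$. The sketch below evaluates both for a given portfolio, assuming equally likely scenarios of the growth factors $\exp(\int_{t_i}^T r_s\,ds)$, an empirical CVaR as the risk measure, and reading "no cost" as a non-positive price. The function names, bond data, and scenarios are hypothetical.

```python
import numpy as np


def portfolio_summary(A, p, x, growth, alpha=0.95):
    """Price, time-t_i cash flows and scenario risk of a bond portfolio x.

    A: (m, n) cash-flow matrix, column j = bond j; p: (n,) prices;
    growth: (N, m) hypothetical scenarios of exp(int_{t_i}^T r_s ds).
    Risk is the empirical CVaR_alpha of the accumulated wealth W(Ax).
    """
    A, p, x = (np.asarray(v, dtype=float) for v in (A, p, x))
    price = p @ x                # current price sum_j p_j x_j
    flows = A @ x                # sum_j a_ij x_j for each date t_i
    wealth = np.asarray(growth, dtype=float) @ flows
    k = max(1, int(round(len(wealth) * (1 - alpha))))
    risk = -np.sort(wealth)[:k].mean()
    return price, flows, risk


def is_desirable(A, p, x, growth, alpha=0.95):
    # Assumption: "no cost" is read as price <= 0, together with risk <= 0.
    price, _, risk = portfolio_summary(A, p, x, growth, alpha)
    return bool(price <= 0 and risk <= 0)


# Two hypothetical bonds with identical cash flows (5 at t_1, 105 at t_2) but different prices.
A = [[5.0, 5.0], [105.0, 105.0]]
p = [99.0, 100.0]
growth = np.array([[1.03, 1.0], [1.01, 1.0], [1.05, 1.0], [1.02, 1.0]])
print(is_desirable(A, p, [1, -1], growth, alpha=0.75))
```

Buying the cheaper bond and selling the dearer one gives a negative price and zero cash flows; this is an arbitrage and, in particular, passes the desirable-portfolio check.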

Note that a desirable portfolio is subject to investors’ risk trade-off preference summarized in their chosen risk measure, and it could still exist in the absence of arbitrage. Thus, a desirable portfolio is attractive only up to investors’ choices of a risk measure and their subjective tolerance level. Furthermore, a non-positive risk of a desirable portfolio in the previous definition indicates either no risk within the portfolio or some mispriced bonds.

Considering the fact that, in a desirable portfolio, is the income, we propose the following optimization problem that leads to the main result of this section:

where are the decision variables. As mentioned above, represents the portfolio composition. If it respects the above constraints, can be interpreted as an upper bound portfolio for purchases, whose total price cannot be larger than one unit. This is a portfolio optimization problem, and we briefly review the related literature.

The optimization problem in Equation (4) differs in two ways from the mean-variance approach first proposed by Markowitz (1952). First, in Markowitz's approach, the objective is to minimize the variance of the loss distribution (subject to some constraints), while here we maximize the income. Second, in both the objective function and the constraints of Markowitz's problem, both tails of the loss distribution are taken into account through the variance, while, in the optimization problem in Equation (4), the effect and the weights of the tails are handled by the risk measure, which provides more flexibility.

In Rockafellar and Uryasev (2000), a portfolio optimization problem is considered that minimizes CVaR or the variance of the loss associated with the portfolio, over a set of constraints determined by the mean of the loss of the portfolio. This was later extended by Ben-Tal and Teboulle (2007) to the “Optimized Certainty Equivalent”, which generates a corresponding convex risk measure.

The problem in Equation (4) is similar to the CVaR optimization approach in Krokhmal et al. (2002), in which a CVaR constraint is imposed and the objective is to minimize the expected loss at the end of the period or maturity. In the optimization problem in Equation (4), by contrast, the objective is to maximize the current income subject to a non-positive risk of the future gains of the portfolio at maturity.

From now on, we assume that the supremum in Equation (4) is attained. Using Assumption 1 for , this optimization problem is equivalent to

We call this the primal problem. By using a similar approach to that of Balbás et al. (2010b) and constrained optimization theory, as presented in Chapter 8 of Luenberger (1969), the dual of our primal problem in Equation (5) is

where and is a positive cone of the space of inner regular real-valued sigma-additive measures on the Borel sigma-algebra of .

Then, following Chapter 8 of Luenberger (1969), is the solution of the primal problem if and only if there exists such that

A simple calculation shows that these are equivalent to

In the literature, these are called Karush-Kuhn-Tucker (KKT) conditions. The dual problem in Equation (6) and optimality equations in Equation (7) can be further simplified. To do this, we use a mean-value theorem from Balbás et al. (2010a) and simplify the integrals in both the dual problem in Equation (6) and the KKT conditions in Equation (7). First, we make the following assumption.

Assumption 2.

Assume that the primal problem in Equation (5) is finite and attains its optimal value, so that optimal solutions exist.

Theorem 1.

Under Assumption 2, we have the following:

- The equivalent dual form of the primal problem is

- and solve problems in Equations (5) and (8), respectively, if and only if they satisfy the following KKT conditions

The following lemma links the optimal solution of the primal problem to the existence of desirable portfolios. Its proof highlights the advantage of working with the risk measure $\rho_W$, defined on $\mathbb{R}^m$, rather than $\rho$, as the arguments take place in a deterministic space.

Lemma 1.

Suppose that Assumption 2 is satisfied and that is the optimal value of the primal problem. Then, the market does not provide any desirable portfolio if and only if and .

Proof.

Suppose that is the optimal solution of problem in Equation (4). The solution must be feasible, so , and if the market does not provide any desirable portfolio, then Obviously, is feasible, so which means that the optimal solution of problem in Equation (4) is zero. From Assumption 2 and Theorem 1, we conclude that . Note that which leads to , and so , . Since , then and , and the result follows.

On the other hand, suppose that and , which concludes that the optimal solution of the problem in Equation (4) is zero. We show that there is no desirable portfolio. Let and define and where . Using the convexity of , one can show that . It is also clear that , , and . Therefore, is feasible and so or which leads to . This shows that there is no desirable portfolio in the market. ☐

Finally, combining this lemma with Theorem 1 provides a necessary and sufficient condition for the existence of desirable portfolios.

Corollary 2.

Suppose that Assumption 2 is satisfied. The market does not provide any desirable portfolios (or opportunities) if and only if there exist optimal values such that

where and is the j-th column of matrix A.

Proof.

By Lemma 1, the non-existence of a desirable portfolio is equivalent to and . If , then by the second and last conditions of Part 2 of Theorem 1, we have , and therefore the first condition gives that .

On the other hand, if there exists such that and for every j, , the first and third conditions of Part 2 of Theorem 1 give and hence , respectively. Thus, the optimal value of the primal problem is zero and, consequently, . Now, the result follows from Lemma 1. ☐

3.2. Second Problem: Minimizing the Risk and Maximizing the Income

The problem investigated in Section 3.1 can be further improved. In Section 3.1, we focus on maximizing the portfolio income subject to the first constraint of the problem in Equation (4), i.e., the risk constraint. Another perspective is to maximize the income and minimize the risk simultaneously. In other words, we want to maximize the objective vector function over a constraint set that will be specified in this section. In the following, we assume that the maximal point is attained. This is a multi-objective optimization problem, and its solutions are Pareto optimal, see Sawaragi et al. (1985). In simple terms, this means finding a solution that reduces the risk without reducing the income: a middle ground in which both risk management and income generation are carried out at a satisfactory level, and neither is penalized in favor of the other.

Note that by maximizing , the risk decreases. Maximizing this objective vector function is equivalent to . For the main constraint, we select strong sequential arbitrage (SSA), i.e. , for as in Section 2, any and where is the matrix

Therefore, we consider the following vector optimization problem:

Since is a convex function, for every optimal solution of Equation (10) there exists the non-zero vector that solves the scalar optimization problem

Conversely, if , then every solution of Equation (11) is also a solution of Equation (10). Then, the set of solutions of Equation (11), over arbitrary non-zero vectors , covers the whole solution set of Equation (10), with possibly some additional points. Hence, we fix a non-zero vector and focus on problem in Equation (11).

To analyze this problem, we follow the same steps as for the problem in Equation (4). Inspired by Lemma 1, the following definition and lemma give sufficient motivation to study the problem in Equation (11).

Definition 2.

The market does not provide any desirable opportunity if and only if the optimal solution of Equation (11) for is equal to zero.

Lemma 2.

If there is a strong sequential arbitrage (SSA) opportunity in the market, then there is also a desirable opportunity.

Equivalently, if a market is free of any desirable opportunity, then it is SSA free as well.

It can be shown that solves Equation (11) and if and only if solves the following problem:

Note that , hence and is a feasible point for the problem in Equation (11). Thus, the optimal solutions of Equations (11) and (12) must be smaller than or equal to zero, or, in other words, .

By the representation of Assumption 1, problem in Equation (12) is equivalent to:

Similar arguments as those of Section 3.1 show that the dual problem of Equation (11) is

where , , and is the j-th column of matrix A.

It is easy to check that Equation (13) is equivalently represented as

In the following, the equivalent forms of the KKT conditions for the problem in Equation (14) are given in parentheses.

Now, by using the mean-value theorem of Balbás et al. (2010a), the dual problem in Equation (13) and KKT conditions in Equation (15) can be further simplified. The simplified dual problem is

and the modified KKT conditions are

By analogy with Theorem 1, we now have the following result.

Theorem 2.

Assume that the primal problem in Equation (12) is always finite and that it attains its optimal value, then:

- The equivalent form of the dual problem is given by Equation (16);

- Furthermore, and solve problems in Equations (12) and (16), respectively, if and only if they satisfy the KKT conditions in Equation (17).

Similar to Corollary 2, we also obtain the following result.

Corollary 3.

Assume that the primal problem in Equation (12) is always finite and that it attains its optimal value, then there is no desirable opportunity in the market, if and only if there exist optimal values and such that

This extends the result of Balbás and López (2008), and, as in their work, one can also obtain envelopes for the term structure of interest rates. Lemma 2 indicates that the credit premiums and the term-structure envelopes obtained from desirable opportunities should be more accurate than those obtained from SSA.

4. Model Calibration

The numerical implementation of optimization problem in Equation (8) and hence the primal problem in Equation (5) relies on the closed form of the sub-gradient set . The sub-gradient set of some risk measures has already been derived. For example, the sub-gradient of is given in closed form by Rockafellar et al. (2006). Thus, one can propose two approaches for the numerical implementations. One approach is to identify and then solve the optimization problems accordingly, but the structure of a sub-gradient set is not known in general. Alternatively, one can concentrate on and its sub-gradient set instead, which we discuss in the following. However, we must first make sure that risk measures are consistent with the theory developed in Section 3.

Remark 2.

In Equation (4), instead of the representation of Assumption 1, one could use a representation of such as that of Corollary 1. The arguments of Section 3 are still valid for the risk measure ; however, the results and the optimization problems depend on the representation of and its sub-gradient set.

The structure of the sub-gradient set of the risk measure $\rho_W$, which is built on $\rho$, can be totally different from that of $\rho$. This can be observed in the following simple model.

Assume that the probability space includes only two scenarios, i.e., with , . Take the risk measure to be , for some To model the evolution of the interest rate, we use the following one period simple tree model

Since is , from Rockafellar et al. (2006), it can be proven that the sub-gradient set of is the following,

Using this sub-gradient, and the representation provided by Corollary 1, after some manipulations, one can show that the sub-gradient set of is given by

While the sub-gradient set of $\rho$ is given by Equation (18), the sub-gradient set of $\rho_W$ in Equation (19) is different. Even in this simple example, finding the sub-gradient set requires some manipulations that do not necessarily carry over to real portfolios. Therefore, we need a tractable and practical approach to identify the sub-gradient set in a numerical implementation. In the following, we address this problem by using statistical risk measures, see Kou et al. (2013) for more details.
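For CVaR, the sub-gradient set of Rockafellar et al. (2006) has a simple form on a finite probability space: the densities $z$ with $0 \le z \le 1/(1-\alpha)$ and $\mathbb{E}[z] = 1$, and, in the gain convention used here, $\mathrm{CVaR}_\alpha(X)$ is the maximum of $-\mathbb{E}[Xz]$ over that set. The sketch below is only an illustration of how such a representation is evaluated numerically, not the derivation of Equations (18) and (19): it solves the underlying linear program on a hypothetical two-scenario space by putting the largest allowed weight on the worst outcomes first (a fractional-knapsack argument). The function name is hypothetical.

```python
import numpy as np


def cvar_dual(outcomes, probs, alpha):
    """CVaR_alpha(X) = max{-E[X z] : 0 <= z <= 1/(1 - alpha), E[z] = 1} on a finite space.

    Assumes strictly positive scenario probabilities. Returns the value and a
    maximizing density z, found by allocating the largest allowed weight to
    the worst gains first.
    """
    x = np.asarray(outcomes, dtype=float)
    p = np.asarray(probs, dtype=float)
    cap = 1.0 / (1.0 - alpha)
    z = np.zeros_like(x)
    remaining = 1.0                       # part of E[z] = 1 not yet allocated
    for k in np.argsort(x):               # worst gains first
        z[k] = min(cap, remaining / p[k])
        remaining -= z[k] * p[k]
        if remaining <= 1e-15:
            break
    return -np.sum(p * x * z), z


# Two-scenario example: gain -10 with probability 0.3, gain 5 with probability 0.7.
for alpha in (0.9, 0.5):
    value, z = cvar_dual([-10.0, 5.0], [0.3, 0.7], alpha)
    print(alpha, value, z)
```

At $\alpha = 0.9$ the whole tail lies in the bad scenario and the value is 10; at $\alpha = 0.5$ the density is capped at 2 in the bad scenario, the remaining mass goes to the good one, and the value is 4.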

A special feature of the risk measure $\rho_W$ is that its domain, $\mathbb{R}^m$, is not a space of random variables. One can think of $\rho_W$ as being defined on a data set; in other words, each vector in $\mathbb{R}^m$ can be interpreted as a set of data. This is the idea behind risk statistics, as defined in Kou et al. (2013), which they use to define some risk measures and prove their representation theorems. We first review their definitions of risk statistics.

Definition 3.

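The function $\hat{\rho}: \mathbb{R}^m \to \mathbb{R}$ is a natural risk statistic if it satisfies the following conditions:

- C(1) For all $x \in \mathbb{R}^m$ and $b \in \mathbb{R}$, $\hat{\rho}(x + b\mathbf{1}) = \hat{\rho}(x) - b$, where $\mathbf{1}$ is the m-dimensional vector $(1, \ldots, 1)$ (translation invariance).

- C(2) For all $x \in \mathbb{R}^m$ and $a \ge 0$, $\hat{\rho}(a x) = a \hat{\rho}(x)$ (positive homogeneity).

- C(3) For all vectors $x$ and $y$ in $\mathbb{R}^m$, if $x \le y$, then $\hat{\rho}(x) \ge \hat{\rho}(y)$ (monotonicity).

- C(4) For all vectors $x$ and $y$ in $\mathbb{R}^m$, if $(x_i - x_j)(y_i - y_j) \ge 0$ for $1 \le i < j \le m$, then $\hat{\rho}(x + y) \le \hat{\rho}(x) + \hat{\rho}(y)$ (comonotonic subadditivity).

- C(5) For any permutation $(i_1, \ldots, i_m)$ of $(1, \ldots, m)$, we have $\hat{\rho}(x_1, \ldots, x_m) = \hat{\rho}(x_{i_1}, \ldots, x_{i_m})$ (permutation invariance).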

Definition 4.

The function $\hat{\rho}: \mathbb{R}^m \to \mathbb{R}$ is a coherent risk statistic if it satisfies conditions C(1), C(2), C(3), and subadditivity.

Definition 5.

The function $\hat{\rho}: \mathbb{R}^m \to \mathbb{R}$ is a law-invariant coherent risk statistic if it satisfies conditions C(1), C(2), C(3), C(5), and subadditivity.

In Kou et al. (2013), the representation theorem of these risk measures is obtained. In numerical implementation using risk statistics, we first need to specify a sub-gradient set. For simplicity, we take the maximal sub-gradient set, for instance , of a natural risk statistic. However, numerical implementations of natural, coherent, and law-invariant coherent risk statistics in our model lead to both conceptual and practical problems. We already mentioned that risk statistics are compatible with our framework, but, in numerical implementations, we must also check the consistency of their axioms with the set of the available data.

Here, the data used are a set of cash flows of a bond portfolio. Kou et al. (2013) use static data at a fixed time, while here the data are time dependent; therefore, certain axioms of the above risk measures are not consistent with our setup. For example, the translation invariance property is not valid in our framework due to the effect of the interest rate on cash flows at different times.

The same problem occurs for axiom C(5). For instance, consider a one-period maturity; then, by C(5), $\rho_W(1, 0) = \rho_W(0, 1)$. However, by definition, $\rho_W(1, 0)$ is equal to $\rho\left(\exp\left(\int_{t_1}^{T} r_s \, ds\right)\right)$, which represents the risk associated with the future wealth of a portfolio that pays one unit of currency at time $t_1$ and nothing at time $t_2$. Of course, due to the uncertainty of factors such as random interest rates, this risk is different from $\rho_W(0, 1) = \rho(1)$. In the following, we provide a representation theorem based on subadditivity, positive homogeneity, and monotonicity. All of these axioms are consistent with the portfolio data and with the framework in Section 2.
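The failure of permutation invariance can be checked numerically. As a hypothetical setup, take two payment dates $t_1 = 0.5$ and $T = t_2 = 1$, constant-rate scenarios between 1% and 5%, and $\rho$ equal to an empirical CVaR; the sketch then compares $\rho_W(1, 0)$, the risk of one unit paid at $t_1$ and reinvested until $T$, with $\rho_W(0, 1) = \rho(1) = -1$.

```python
import numpy as np

t1, t2 = 0.5, 1.0                                  # hypothetical payment dates, T = t2
rates = np.linspace(0.01, 0.05, 1000)              # hypothetical constant-rate scenarios
growth = np.column_stack([np.exp(rates * (t2 - t1)),   # exp(int_{t1}^{T} r ds)
                          np.ones_like(rates)])         # exp(int_{T}^{T} r ds) = 1


def rho_W(c, alpha=0.95):
    """Empirical CVaR_alpha of W(c) over the equally likely rate scenarios."""
    wealth = growth @ np.asarray(c, dtype=float)
    k = int(round(len(wealth) * (1 - alpha)))
    return -np.sort(wealth)[:k].mean()


print(rho_W([1, 0]), rho_W([0, 1]))
```

Since every scenario has a positive rate, the unit paid at $t_1$ grows before $T$, so $\rho_W(1, 0) < -1 = \rho_W(0, 1)$, even though the two vectors are permutations of each other.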

Theorem 3.

- If is a risk statistic that satisfies subadditivity and positive homogeneity, then where is the subset of

- If, in addition, the risk measure satisfies monotonicity, then

This theorem is a consequence of Fenchel’s duality theorem, and it can be proved following Ahmed et al. (2008). Regarding the above theorem, it is also important to recall what , , and mean. For instance, recall that $\rho_W(1, \ldots, 1) = \rho(W(1, \ldots, 1))$; in simple words, this is the current risk of the future income at time T obtained by investing one dollar at time $t_1$, one dollar at time $t_2$, …, and one dollar at time $t_m$. There are similar interpretations for and . In our numerical example, we use the following special version of the above risk statistic.

Definition 6.

The function is the risk statistic DF (default free) if it satisfies the properties of subadditivity, positive homogeneity, monotonicity, and admits the following representation:

Remark 3.

Despite our simple data set and the simple representation in Definition 6, the numerical implementations lead to meaningful results, in particular reasonable credit premiums, as explained in Section 6. However, a more sophisticated implementation would use an empirical distribution or a set of simulated data for . As it turns out, this is in fact possible.

The risk statistic in Theorem 3 has a general structure. As in Definition 6, a more specific choice of leads to new risk measures. In Assa and Morales (2010), it is shown that, under some conditions, a specific with a single member is an estimator for . This estimator is built using an empirical distribution, and the main criterion is that the original risk measure ρ should be distribution-based. On the other hand, Krätschmer et al. (2014) show that this estimator is a plug-in estimator and that it is strongly consistent, which means that the estimator converges to almost surely. From this perspective, the risk statistic DF with specified weights , can be considered as an estimator of . We determine the weights through the dual optimization problem.

To apply the risk measure DF, we need to specify the original risk measure and then estimate , , , and for all .

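Suppose that $\rho = \mathrm{CVaR}_\alpha$ (here $\alpha$ is the confidence level), and let the instantaneous short rate be modeled by the stochastic process $(r_t)_{t \ge 0}$ on the filtered probability space $(\Omega, \mathcal{F}, (\mathcal{F}_t)_{t \ge 0}, \mathbb{P})$ satisfying the usual conditions. Then, we obtain $\rho_W(1, \ldots, 1) = \mathrm{CVaR}_\alpha\left(\sum_{i=1}^{m} \exp\left(\int_{t_i}^{T} r_s \, ds\right)\right)$, and similar expressions for and . Therefore, after choosing an interest rate model, we need to calibrate the model and estimate the previous expressions. We do this in two steps.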

Step 1. Calibrating an interest rate model: for simplicity of computation, we use the Vasicek model for the interest rate, $dr_t = a(b - r_t)\,dt + \sigma\,dB_t$, where $a$, $b$, and $\sigma$ are constants and $B_t$ is a standard Brownian motion.⁴ Here, we use the generalized method of moments (GMM) to estimate the parameters, see Hansen (1982).
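The text calibrates the Vasicek parameters by GMM. As a simpler stand-in, and not the authors' procedure, the sketch below fits $(a, b, \sigma)$ by ordinary least squares on the exact AR(1) discretization $r_{k+1} = b + (r_k - b)e^{-a\Delta} + \varepsilon_k$, with $\mathrm{Var}(\varepsilon_k) = \sigma^2(1 - e^{-2a\Delta})/(2a)$, and checks it on a simulated daily path. The parameter values are hypothetical and unrelated to the calibration results of the text.

```python
import numpy as np


def simulate_vasicek(r0, a, b, sigma, dt, n_steps, rng):
    """Exact simulation of dr = a(b - r)dt + sigma dB on a uniform grid."""
    phi = np.exp(-a * dt)
    sd = sigma * np.sqrt((1 - phi**2) / (2 * a))
    r = np.empty(n_steps + 1)
    r[0] = r0
    shocks = rng.standard_normal(n_steps)
    for k in range(n_steps):
        r[k + 1] = b + (r[k] - b) * phi + sd * shocks[k]
    return r


def fit_vasicek_ols(r, dt):
    """Fit (a, b, sigma) by regressing r_{k+1} on r_k (AR(1) form of the exact discretization)."""
    x, y = r[:-1], r[1:]
    phi, c = np.polyfit(x, y, 1)          # y ~ phi * x + c, with c = b * (1 - phi)
    resid = y - (phi * x + c)
    a = -np.log(phi) / dt
    b = c / (1 - phi)
    sigma = resid.std(ddof=2) * np.sqrt(2 * a / (1 - phi**2))
    return a, b, sigma


rng = np.random.default_rng(42)
path = simulate_vasicek(0.03, 2.0, 0.03, 0.01, 1 / 252, 100_000, rng)
a_hat, b_hat, sigma_hat = fit_vasicek_ols(path, 1 / 252)
```

The mean-reversion speed $a$ is the least precisely estimated parameter, since it depends on how far the fitted AR(1) coefficient sits below one; $b$ and $\sigma$ are recovered much more tightly.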

It is worth mentioning that there are alternative interest rate models. For a discussion and comparison of interest rate models, we refer to Boero and Torricelli (1996). In addition, the inversion of the yield curve is another popular approach to estimate the parameters, see Chapter 24 of Björk (2009).

The calibration results, based on the one-month US daily Treasury yield rates from 2 January 2013 to 30 September 2014 (footnote 5) and the GMM method, are: , , , and .
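The text calibrates the Vasicek model by GMM (Hansen 1982) but does not state which moment conditions were used. The minimal sketch below makes three assumptions: the parametrization dr_t = a(b − r_t)dt + σ dW_t, rates in decimal form, and a step of Δ = 1/252 years per trading day. It uses three moment conditions taken from the model's exact discretization. With as many conditions as parameters, GMM reduces to solving the sample moment equations, and here those equations have a closed form. The sketch is checked only on synthetic data and does not attempt to reproduce the paper's calibrated values.

```python
import numpy as np


def simulate_vasicek_exact(a, b, sigma, r0, dt, n, rng):
    """Exact-transition path of dr = a(b - r) dt + sigma dW (assumed parametrization)."""
    beta = np.exp(-a * dt)
    sd = sigma * np.sqrt((1.0 - beta**2) / (2.0 * a))   # conditional std. dev. per step
    r = np.empty(n + 1)
    r[0] = r0
    z = rng.standard_normal(n)
    for k in range(n):
        r[k + 1] = b + (r[k] - b) * beta + sd * z[k]
    return r


def estimate_vasicek_mom(r, dt):
    """Just-identified moment estimates of (a, b, sigma) from equally spaced rates.

    With eps_t = r_{t+1} - c - beta * r_t, the sample moments
    E[eps] = 0 and E[eps * r_t] = 0 give the OLS coefficients (c, beta), and
    E[eps^2] = v gives the residual variance. The exact discretization maps
    beta = exp(-a dt), c = b (1 - beta), v = sigma^2 (1 - beta^2) / (2 a).
    """
    x, y = r[:-1], r[1:]
    beta = np.mean((x - x.mean()) * (y - y.mean())) / np.var(x)
    if not 0.0 < beta < 1.0:
        raise ValueError("slope outside (0, 1): no mean reversion to invert")
    c = y.mean() - beta * x.mean()
    v = np.mean((y - c - beta * x) ** 2)
    a = -np.log(beta) / dt
    b = c / (1.0 - beta)
    sigma = np.sqrt(2.0 * a * v / (1.0 - beta**2))
    return a, b, sigma


# Synthetic check with hypothetical parameters a = 0.5, b = 0.03, sigma = 0.01.
rng = np.random.default_rng(0)
path = simulate_vasicek_exact(0.5, 0.03, 0.01, 0.02, 1 / 252, 50_000, rng)
print(estimate_vasicek_mom(path, 1 / 252))
```

Daily data identify σ very precisely, but they identify the mean-reversion speed a poorly. That is why the check uses a long synthetic sample. On a window of under two years, such as the one above, the estimate of a should be read with caution.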

Step 2. Estimating , , and for all : using Itô’s formula and Fubini’s theorem for stochastic processes, see Theorem 64 of Chapter 4 of Protter (2004), one can show that:

where and . Therefore, by Itô’s isometry, has a normal distribution with mean and variance . Hence, the random variable has a lognormal distribution. In general, a sum of such lognormal random variables has a complicated distribution, especially when the summands are dependent, as is the case for . The distribution of this random variable has no closed form. In the following, we overcome this challenge by using simulation to estimate , , and for all .
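For reference, assume the same parametrization dr_t = a(b − r_t)dt + σ dW_t with r_0 known. Then the integrated rate I_T = ∫_0^T r_s ds is Gaussian, with the mean and variance computed below (standard Vasicek results), so a single discount factor exp(−I_T) is lognormal. The sketch covers one maturity only. As the text notes, the dependent sum over payment dates has no closed form.

```python
import math


def integrated_rate_moments(a, b, sigma, r0, T):
    """Mean and variance of I_T = int_0^T r_s ds under dr = a(b - r) dt + sigma dW.

    I_T is Gaussian, so the discount factor exp(-I_T) is lognormal.
    """
    B = (1.0 - math.exp(-a * T)) / a
    mean = b * T + (r0 - b) * B
    var = (sigma / a) ** 2 * (T - 2.0 * B + (1.0 - math.exp(-2.0 * a * T)) / (2.0 * a))
    return mean, var


def expected_discount_factor(a, b, sigma, r0, T):
    """E[exp(-I_T)] via the lognormal mean formula exp(-mean + var / 2)."""
    m, v = integrated_rate_moments(a, b, sigma, r0, T)
    return math.exp(-m + 0.5 * v)


print(integrated_rate_moments(0.5, 0.03, 0.01, 0.02, 2.0))
print(expected_discount_factor(0.5, 0.03, 0.01, 0.02, 2.0))
```

Algebraically, exp(−mean + var/2) matches the textbook Vasicek zero-coupon bond formula. Because the dynamics here are under the physical measure (footnote 4), however, it is an expectation under that measure rather than a risk-neutral price.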

We only explain how to estimate , as a similar procedure can be carried out for and . First, by using Fubini’s theorem for stochastic processes, we can deduce that

Equation (21) has an advantage over Equation (20): its right-hand side contains no integral term, so the simulation is simpler. Using Euler’s discretization scheme, one can simulate the processes and , and thus, for a fixed T, simulate , . Hence, we can generate simulated values of the random variable using Equation (21). By generating a large number of such values, we can estimate the cumulative distribution function of Z and hence approximate , for .
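Below is a minimal sketch of the simulation step, again assuming dr_t = a(b − r_t)dt + σ dW_t. Summing the Euler recursion gives ∫_0^t r_s ds ≈ (abt + σW_t − (r_t − r_0))/a, an expression with no integral term, which is the kind of identity the text relies on. We derive it for this parametrization only and do not claim that it is the paper's Equation (21). This section does not fully specify Z, so purely for illustration we take Z to be the discounted value Σ_k c_k exp(−∫_0^{t_k} r_s ds) of a hypothetical cash-flow vector c. We then estimate its empirical CDF and a lower quantile.

```python
import numpy as np


def simulate_discount_factors(a, b, sigma, r0, times, n_paths, steps_per_year, rng):
    """Euler scheme for dr = a(b - r) dt + sigma dW (assumed parametrization).

    Returns an (n_paths, len(times)) array of exp(-int_0^t r ds). The integral
    is recovered from the summed Euler recursion,
    int_0^t r ds ~= (a*b*t + sigma*W_t - (r_t - r0)) / a,
    which equals the left-point Riemann sum of the simulated path exactly.
    """
    dt = 1.0 / steps_per_year
    grid = np.rint(np.asarray(times, dtype=float) * steps_per_year).astype(int)
    if np.any(np.diff(grid) < 0):
        raise ValueError("times must be sorted in increasing order")
    r = np.full(n_paths, float(r0))
    w = np.zeros(n_paths)
    out = np.empty((n_paths, grid.size))
    step = 0
    for j, target in enumerate(grid):
        while step < target:                   # advance all paths to the next payment date
            dw = np.sqrt(dt) * rng.standard_normal(n_paths)
            r = r + a * (b - r) * dt + sigma * dw
            w = w + dw
            step += 1
        t = target * dt
        integral = (a * b * t + sigma * w - (r - r0)) / a
        out[:, j] = np.exp(-integral)
    return out


def empirical_cdf(sample):
    """Return F(z) = share of simulated values <= z."""
    xs = np.sort(np.asarray(sample))
    return lambda z: np.searchsorted(xs, z, side="right") / xs.size


# Hypothetical semiannual cash flows (not the bonds of Table 1).
rng = np.random.default_rng(1)
times = [0.5, 1.0, 1.5, 2.0]
cash_flows = np.array([2.0, 2.0, 2.0, 102.0])
D = simulate_discount_factors(0.5, 0.03, 0.01, 0.02, times, 20_000, 252, rng)
Z = D @ cash_flows
F = empirical_cdf(Z)
q01 = np.quantile(Z, 0.01)                     # lower 1% quantile of Z
print(q01, F(q01))
```

The loop keeps only the current rate and Brownian level for each path rather than storing whole paths, so memory grows with the number of payment dates, not with the number of time steps.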

Next, we consider data from a real portfolio composed of six bonds taken from FINRA.6 The profiles of these bonds are given in Table 1. Based on these bonds, we have and the cash flow times can be determined accordingly. The estimation results at the 99% confidence level, i.e., are as follows: , , and part of the result for is shown in Table 2, to four decimal places, where each entry represents the value for . Note that, for example, corresponds to the first coupon payment time, which is , and corresponds to the last payment time, which is ; therefore, we have 15 payment times in total. At payment times such as , there are two coupons, one from Bond 1 and one from Bond 2, but at there is only one coupon, from Bond 3. In the next example, we provide the solutions of the dual and primal problems.

Table 1.

Treasury Bonds 1, 2, 3, 4, 5; Corporate Bond 6.

Table 2.

The values of .
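The bookkeeping behind the payment times and the matrix A, whose j-th column holds the cash flows of bond j, can be sketched as follows. The bonds here are hypothetical, not the Table 1 bonds. The sketch assumes a face value of 100 and coupons paid on a regular schedule counted back from maturity, and it ignores day-count conventions and accrued interest. Exact fractions prevent floating-point rounding from splitting payment dates that different bonds share.

```python
from fractions import Fraction


def bond_schedule(rate, maturity, freq):
    """Cash flows {time: amount} of a hypothetical bullet bond with face value 100.

    rate and maturity are given as strings or Fractions (e.g. "0.04", "1.5")
    so that payment times are exact; freq is the number of coupons per year.
    """
    coupon = 100 * Fraction(rate) / freq
    step = Fraction(1, freq)
    t = Fraction(maturity)
    flows = {}
    while t > 0:                       # count coupon dates back from maturity
        flows[t] = coupon
        t -= step
    flows[Fraction(maturity)] += 100   # principal repaid with the last coupon
    return flows


def cash_flow_matrix(bonds):
    """Sorted payment times t_k and matrix A with A[k][j] = cash flow of bond j at t_k."""
    schedules = [bond_schedule(*bond) for bond in bonds]
    times = sorted(set().union(*schedules))
    A = [[s.get(t, Fraction(0)) for s in schedules] for t in times]
    return times, A


# Two hypothetical semiannual bonds sharing their first two payment dates.
times, A = cash_flow_matrix([("0.04", "1", 2), ("0.02", "1.5", 2)])
for t, row in zip(times, A):
    print(float(t), [float(x) for x in row])
```

In this toy example, the first two payment times carry coupons from both bonds, and the last one carries a cash flow from the second bond only. This mirrors the situation described for the Table 1 portfolio.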

In the following, we take the first approach discussed in Section 3.1. However, the numerical examples and discussions can easily be adapted to the second approach explained in Section 3.2.

Example 1.

For the portfolio consisting of Bonds 1 to 4 in Table 1, the optimal value of the problem in Equation (6), using the risk statistic DF and the above parameter estimates, is zero. Next, we add Bond 5 to this portfolio; in this case, the optimal value is approximately equal to .

- The solution of the primal problem is equal to , , , , .

- The solution of the dual problem is equal to , , , , , , , , , …

This example shows that Bonds 1 and 3 have no effect on maximizing the income within this set of bonds, as the primal solution yields . In addition, it shows that by selling one unit of Bond 2 and buying units of Bond 4, the estimated income is 7.8, as in the primal solution , and .

Remark 4.

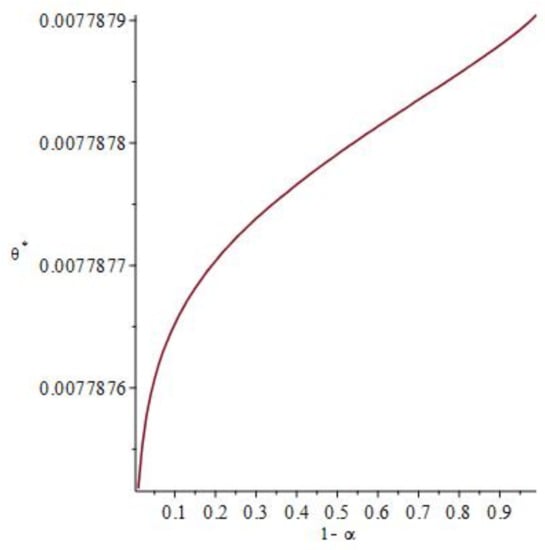

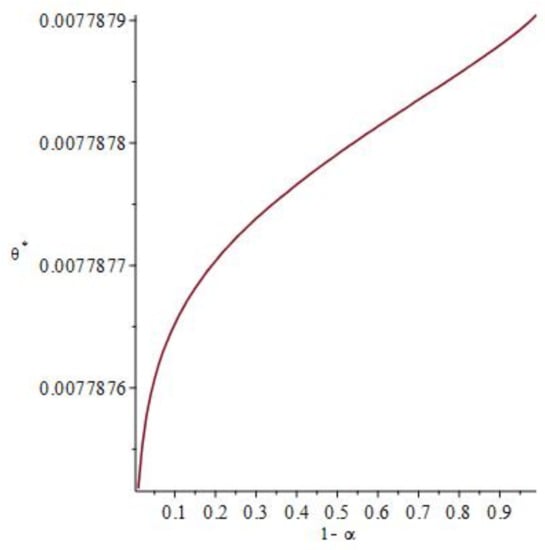

At this point, it is worth investigating the sensitivity of the solution to changes in the confidence level α. This is illustrated in Figure 1, about which we make two observations. First, as the confidence level decreases (i.e., the tolerance level increases), the degree of risk aversion also decreases and the investor is willing to take more risk. In this case, the optimal value, or income, increases to reward the extra risk taken. Second, despite the increase in the tolerance level , the model is robust: although α varies between 0 and 1, the values of change only marginally. In other words, the optimal desirable portfolio value is robust with respect to changes in α.

Figure 1.

Optimal values as a function of the tolerance level .

5. Application to Market Integration

Integrated markets should not provide agents with opportunities to make risk-free profits at no cost. The existence of classical arbitrage opportunities is one of the signs that indicate inefficiency in markets. This problem has been investigated in Chen and Knez (1995), and also in Kempf and Korn (1998).

As an alternative to the classical methods, we introduce the indicator , i.e., the optimal value of the dual problem, to measure a more general type of market inefficiency. From the primal problem, it is clear that ; a higher value of this quantity indicates a less integrated market, in the sense that it leads to opportunities with larger income. By Lemma 1, the indicator takes a lower value in an ideal market; otherwise, the potentially positive income of the portfolio would indicate a market inefficiency. To further motivate this indicator, we investigate its relation to classical arbitrage opportunities.

By Corollary 2, the existence of a desirable portfolio is linked to solving the following system of equations for ,

where and is the j-th column of matrix A. If the optimal values satisfy this system of equations then the existence of a desirable opportunity (for a specific risk measure ) is guaranteed.

Now suppose that the solution set is empty. Then, by Corollary 2, for any risk measure , there is a desirable portfolio; in other words, for such risk measures , the existence of desirable portfolios is guaranteed. In this case, it is not difficult to prove that classical arbitrage also exists; see the following corollary.

Corollary 4.

Suppose that the solution set of the following system of equations is empty for ,

where and is the j-th column of matrix A. Then, the existence of classical arbitrage in the market is guaranteed.

Example 2.

From Example 1 and the available data, one can conclude that Bonds 1 to 4 are consistently priced and that no desirable portfolio can be built from them. The non-zero for Bonds 1 to 5 indicates inconsistent pricing, an embedded option, or other hidden risks. Referring to the profiles of the bonds in Table 1, one observes that the fifth bond is an inflation-indexed note, and the non-zero value in the previous example indeed reflects the option embedded in this bond. In the next section, specifically in Remark 5, we show how to obtain a lower bound for the price of this embedded option.

6. Application to Credit Premium Measurement

In this section, we discuss the second application of the theory: measuring the credit risk premium of corporate bonds. As the credit risk literature is extensive, we only mention the following popular books, which contain many useful references for the interested reader: Bluhm et al. (2010) and Duffie and Singleton (2012). Nevertheless, to the best of our knowledge, the following methodology for measuring the credit risk premium using risk measures is new.

Suppose that are the current prices of default-free bonds (with no embedded option or risk premium) that we want to use as a benchmark to calculate the credit premium of the defaultable bond (with no embedded options) with current price . Since is defaultable, we have , where is the price of the bond in the absence of default risk, and is the credit premium of the bond that we want to estimate. Suppose that our subjective measure of risk is fixed and according to ; then the following proposition provides an approximation (a lower bound) of the credit premium. Recall that we have taken the first approach of Section 3.1.

Proposition 1.

If the set of the prices does not provide any desirable portfolio, then , where is the optimal value of the dual problem in Equation (6) for this set of bonds.

The proof of this proposition is omitted, as it follows the same methodology as Balbás and López (2008), in particular Propositions 3.1 and 4.1 of their paper. Although their work is not based on a probability model, the proofs can be adapted to the setup of this proposition.

As mentioned earlier, any non-zero optimal value could be due to the existence of a desirable portfolio or to other embedded risk premiums, such as default risk. However, if we assume that the market is free of desirable opportunities, then the above proposition provides an estimate of the credit premiums. Note that this procedure relies on Corollary 2 to estimate the credit premiums; the same procedure can be carried out using Corollary 3. Indeed, applying the latter should provide more accurate credit premiums than Corollary 2, because it also accounts for the inconsistencies arising from strong sequential arbitrage opportunities in the market.

To implement the numerical procedure, we use the risk measure with a confidence level of , the estimated parameters of Section 4, and the risk statistic DF explained in the last section. We choose a small portfolio as our market.

Remark 5.

The above methodology can also be used to analyze the price of bonds with embedded options. Suppose that is the price of a default-free bond with an embedded option that has a price of . Hence, , where is the price of an equivalent bond without any embedded option. Then, similarly to Proposition 1, one can obtain lower bounds for .

Example 3.

Suppose that we want to estimate the creditworthiness of Bond 6 in Table 1 with the available data. We already observed in Examples 1 and 2 that Bonds 1 to 4 do not create a desirable portfolio. This shows that these bonds are consistently priced with respect to the interest rate model. Under the assumption that there is no desirable opportunity in the market, they can therefore be used as a benchmark, according to Proposition 1, to measure the credit quality of Bond 6 within the available data. For Bonds 1, 2, 3, 4, and 6, the optimization problem in Equation (8) produces an optimal value of . Therefore, by Proposition 1, a lower bound for the credit premium of Bond 6 is approximately 0.44.

7. Conclusions

A more general type of opportunity than classical arbitrage is introduced to build optimal desirable portfolios. Optimization methods and duality theory, together with the properties of risk measures, are used to create an indicator that measures this inefficiency in markets. A type of risk statistic is constructed to implement the model numerically in bond markets. The randomness in the bond market is described by an interest rate model. After calibrating the interest rate model with US daily Treasury yield rates, we use simulations to estimate the sub-gradient set of the risk statistic. Finally, we provide numerical examples based on real data in which both market inefficiencies and the credit quality of a defaultable bond are measured.

Acknowledgments

The first author gratefully acknowledges the financial support of the Natural Sciences and Engineering Research Council (NSERC) of Canada grant 36860–2017. The authors are grateful to three anonymous reviewers for their constructive comments. We are also indebted to Alejandro Balbás for many useful comments and discussions, in particular regarding insights into Assumption 1. This research was partially completed during a sabbatical visit of the first author to the University Carlos III of Madrid.

Author Contributions

Both authors jointly identified the problem, conceived the model and wrote the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Acerbi, Carlo, and Dirk Tasche. 2002. On the coherence of expected shortfall. Journal of Banking & Finance 26: 1487–503.

- Ahmed, Shabbir, Damir Filipović, and Gregor Svindland. 2008. A note on natural risk statistics. Operations Research Letters 36: 662–64.

- Artzner, Philippe, Freddy Delbaen, Jean-Marc Eber, and David Heath. 1999. Coherent measures of risk. Mathematical Finance 9: 203–28.

- Assa, Hirbod, and Manuel Morales. 2010. Risk measures on the space of infinite sequences. Mathematics and Financial Economics 2: 253–75.

- Balbás, Alejandro, Beatriz Balbás, and Raquel Balbás. 2010a. Minimizing measures of risk by saddle point conditions. Journal of Computational and Applied Mathematics 234: 2924–31.

- Balbás, Alejandro, Raquel Balbás, and José Garrido. 2010b. Extending pricing rules with general risk functions. European Journal of Operational Research 201: 23–33.

- Balbás, Alejandro, José Garrido, and Silvia Mayoral. 2009. Properties of distortion risk measures. Methodology and Computing in Applied Probability 11: 385–99.

- Balbás, Alejandro, and Susana López. 2008. Sequential arbitrage measurements and interest rate envelopes. Journal of Optimization Theory and Applications 138: 361–74.

- Ben-Tal, Aharon, and Marc Teboulle. 2007. An old-new concept of convex risk measures: The optimized certainty equivalent. Mathematical Finance 17: 449–76.

- Björk, Tomas. 2009. Arbitrage Theory in Continuous Time. New York: Oxford University Press Inc.

- Bluhm, Christian, Ludger Overbeck, and Christoph Wagner. 2010. Introduction to Credit Risk Modeling. Boca Raton: CRC Press.

- Boero, Gianna, and Costanza Torricelli. 1996. A comparative evaluation of alternative models of the term structure of interest rates. European Journal of Operational Research 93: 205–23.

- Černý, Aleš, and Stewart D. Hodges. 2002. The theory of good-deal pricing in incomplete markets. In Mathematical Finance, Bachelier Congress 2000. Berlin: Springer, pp. 175–202.

- Chen, Zhiwu, and Peter J. Knez. 1995. Measurement of market integration and arbitrage. The Review of Financial Studies 8: 287–325.

- Daníelsson, Jón, Bjørn N. Jorgensen, Gennady Samorodnitsky, Mandira Sarma, and Casper G. de Vries. 2013. Fat tails, VaR and subadditivity. Journal of Econometrics 172: 283–91.

- Dhaene, Jan, Roger J. A. Laeven, Steven Vanduffel, Gregory Darkiewicz, and Marc J. Goovaerts. 2008. Can a coherent risk measure be too subadditive? Journal of Risk and Insurance 75: 365–86.

- Duffie, Darrell, and Kenneth J. Singleton. 2012. Credit Risk: Pricing, Measurement, and Management. Princeton: Princeton University Press.

- Föllmer, Hans, and Alexander Schied. 2011. Stochastic Finance: An Introduction in Discrete Time. Berlin: Walter de Gruyter GmbH & Co. KG.

- Föllmer, Hans, and Stefan Weber. 2015. The axiomatic approach to risk measures for capital determination. Annual Review of Financial Economics 7: 301–37.

- Hansen, Lars Peter. 1982. Large sample properties of generalized method of moments estimators. Econometrica 50: 1029–54.

- Kempf, Alexander, and Olaf Korn. 1998. Trading system and market integration. Journal of Financial Intermediation 7: 220–39.

- Kou, Steven, Xianhua Peng, and Chris C. Heyde. 2013. External risk measures and Basel accords. Mathematics of Operations Research 38: 393–417.

- Krätschmer, Volker, Alexander Schied, and Henryk Zähle. 2014. Comparative and qualitative robustness for law-invariant risk measures. Finance and Stochastics 18: 271–95.

- Krokhmal, Pavlo, Jonas Palmquist, and Stanislav Uryasev. 2002. Portfolio optimization with conditional value-at-risk objective and constraints. Journal of Risk 4: 43–68.

- Luenberger, David G. 1969. Optimization by Vector Space Methods. New York: John Wiley & Sons.

- Markowitz, Harry. 1952. Portfolio selection. Journal of Finance 7: 77–91.

- Okhrati, Ramin, and Hirbod Assa. 2017. Representation and approximation of convex dynamic risk measures with respect to strong-weak topologies. Stochastic Analysis and Applications 35: 604–14.

- Protter, Philip E. 2004. Stochastic Integration and Differential Equations. Berlin: Springer-Verlag.

- Rockafellar, R. Tyrrell, and Stanislav Uryasev. 2000. Optimization of conditional value-at-risk. Journal of Risk 2: 21–42.

- Rockafellar, R. Tyrrell, and Stanislav Uryasev. 2002. Conditional value-at-risk for general loss distributions. Journal of Banking & Finance 26: 1443–71.

- Rockafellar, R. Tyrrell, Stan Uryasev, and Michael Zabarankin. 2006. Generalized deviations in risk analysis. Finance and Stochastics 10: 51–74.

- Sawaragi, Yoshikazu, Hirotaka Nakayama, and Tetsuzo Tanino. 1985. Theory of Multiobjective Optimization (Mathematics in Science and Engineering). Orlando: Academic Press.

- Wang, Shaun S. 2000. A class of distortion operators for financial and insurance risks. Journal of Risk and Insurance 67: 15–36.

- Weber, Stefan, William Anderson, A.-M. Hamm, Thomas Knispel, Maren Liese, and Thomas Salfeld. 2013. Liquidity-adjusted risk measures. Mathematics and Financial Economics 7: 69–91.

| 1 | VaR is a risk measure that is neither coherent nor convex; see the properties listed before and the comment on VaR after Equation (1). |

| 2 | Note that the maturity T could be replaced by a date even earlier than the earliest maturity, and no essential modifications of the paper would be required. However, the numerical examples in the paper assume that T is the longest maturity among the bonds of the portfolio. |

| 3 | This refers to the set in Assumption 1. |

| 4 | This dynamic is under the physical measure. |

| 5 | The data are taken from the US Department of the Treasury available at: http://www.treasury.gov/resource-center/data-chart-center/interest-rates/Pages/TextView.aspx?data=yield. |

| 6 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).