Application of Standard Machine Learning Models for Medicare Fraud Detection with Imbalanced Data

Abstract

1. Introduction

2. Literature Review

2.1. The Challenges of Class Imbalance in Medicare Fraud Detection

2.2. The Role of Machine Learning in Medicare Fraud Detection

2.3. Graph-Based Approaches to Fraud Detection

2.4. The Impact of Feature Selection and Dimensionality Reduction

2.5. Addressing Model Evaluation and Validation in Real-World Applications

2.6. The Role of Predictive Analytics in Healthcare

2.7. The Economic and Social Impact of Medicare Fraud

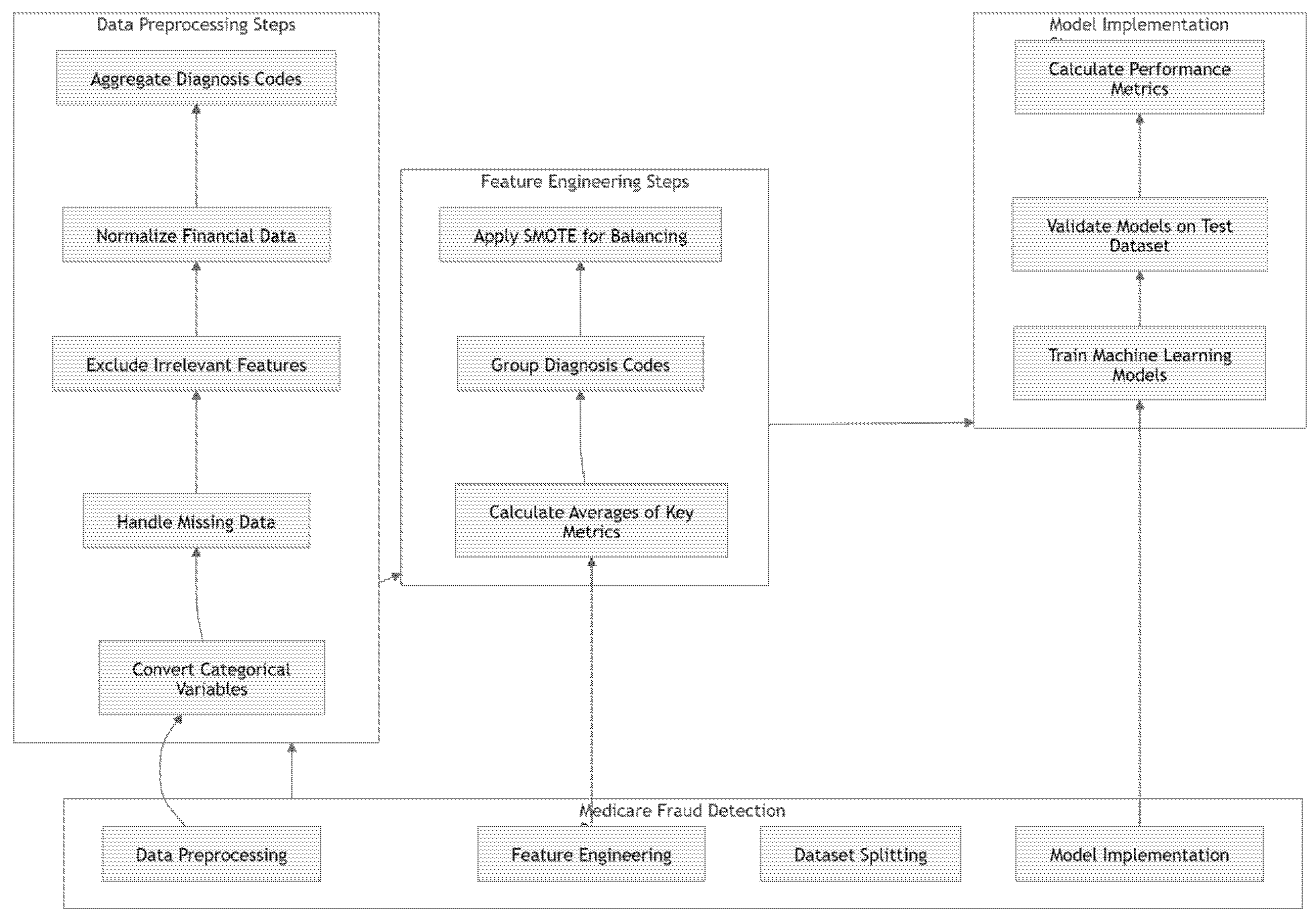

3. Materials and Methods

3.1. Data Preprocessing

3.2. Feature Engineering

3.3. Resampling Methods

4. Results

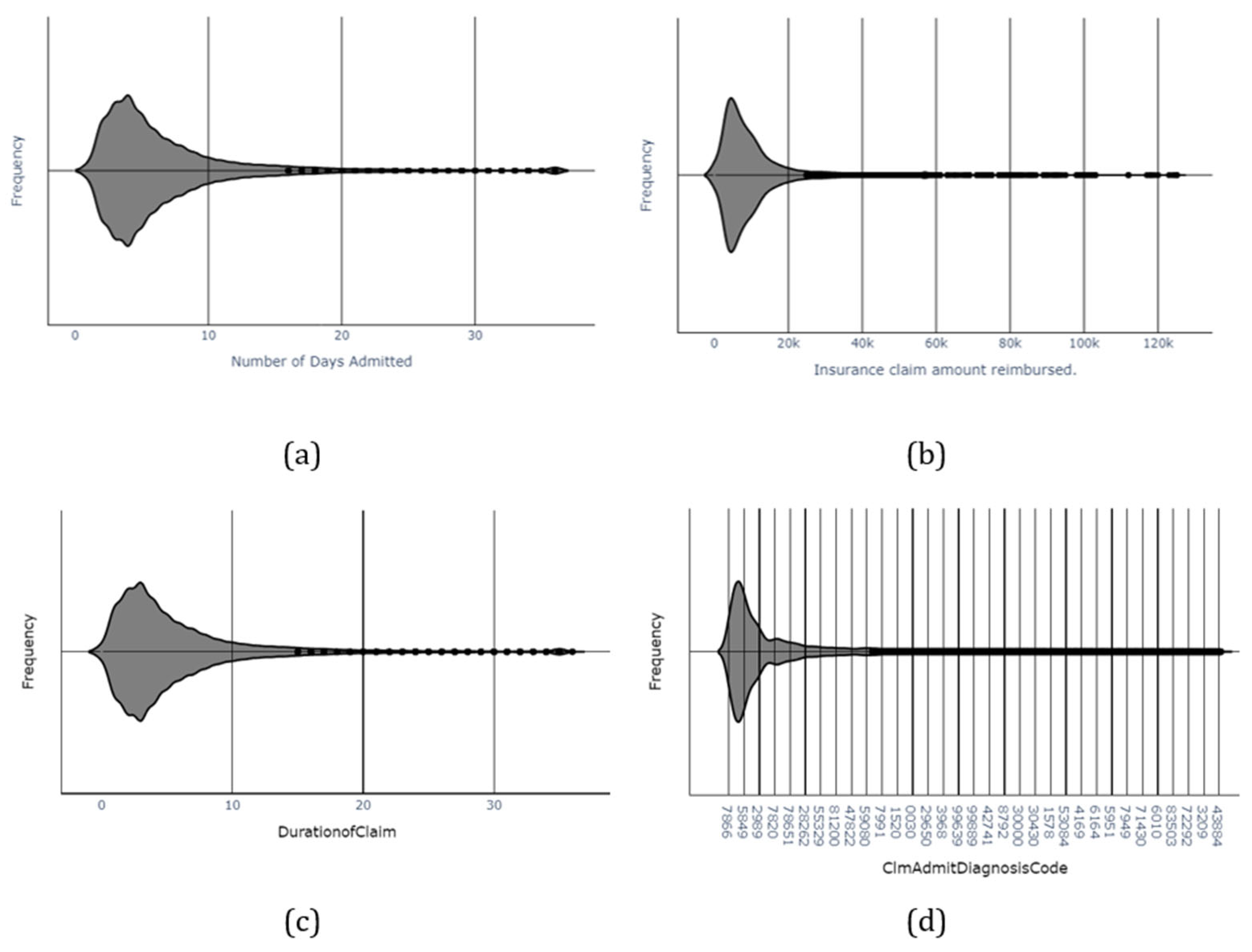

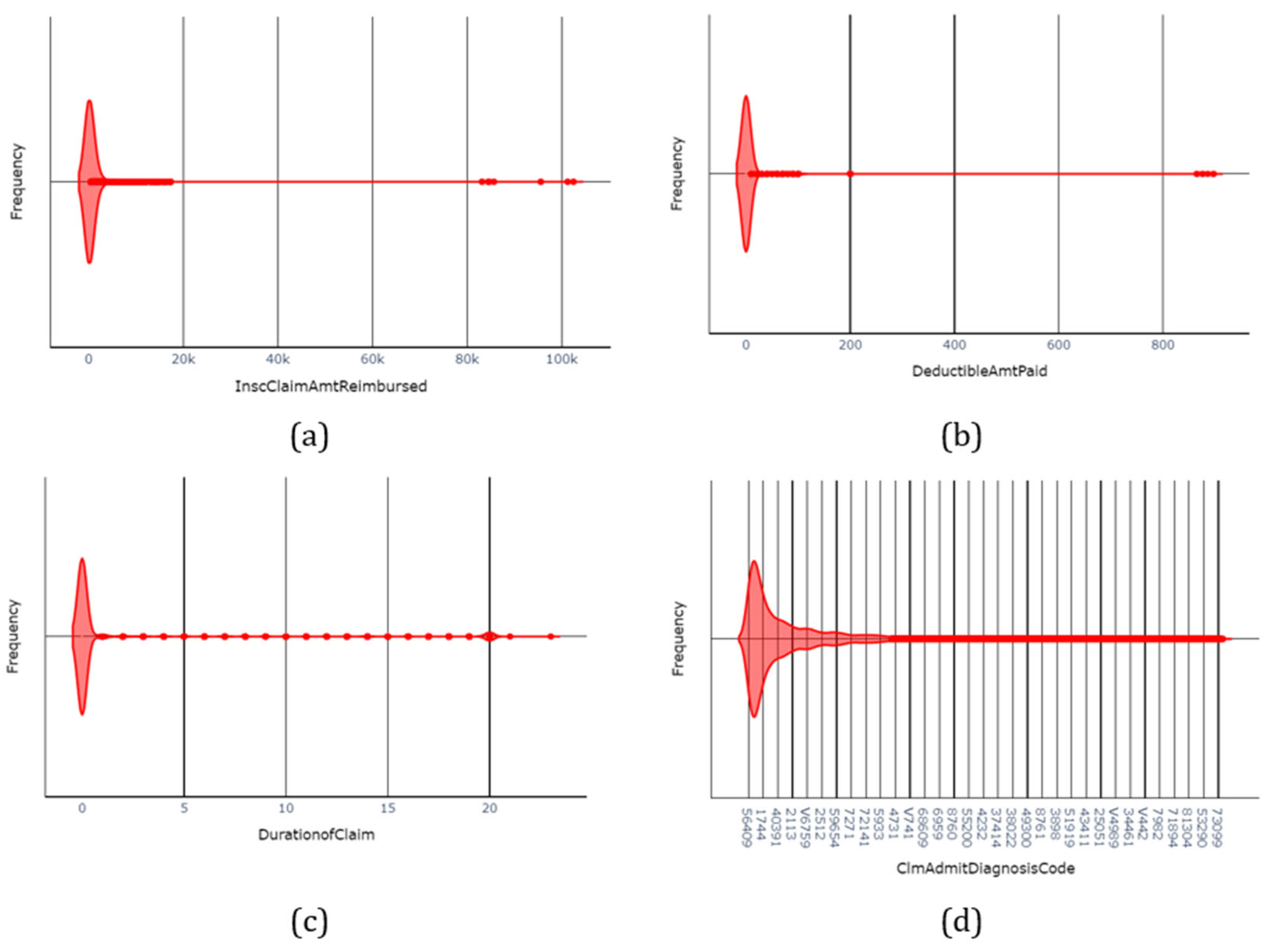

4.1. Data Collection

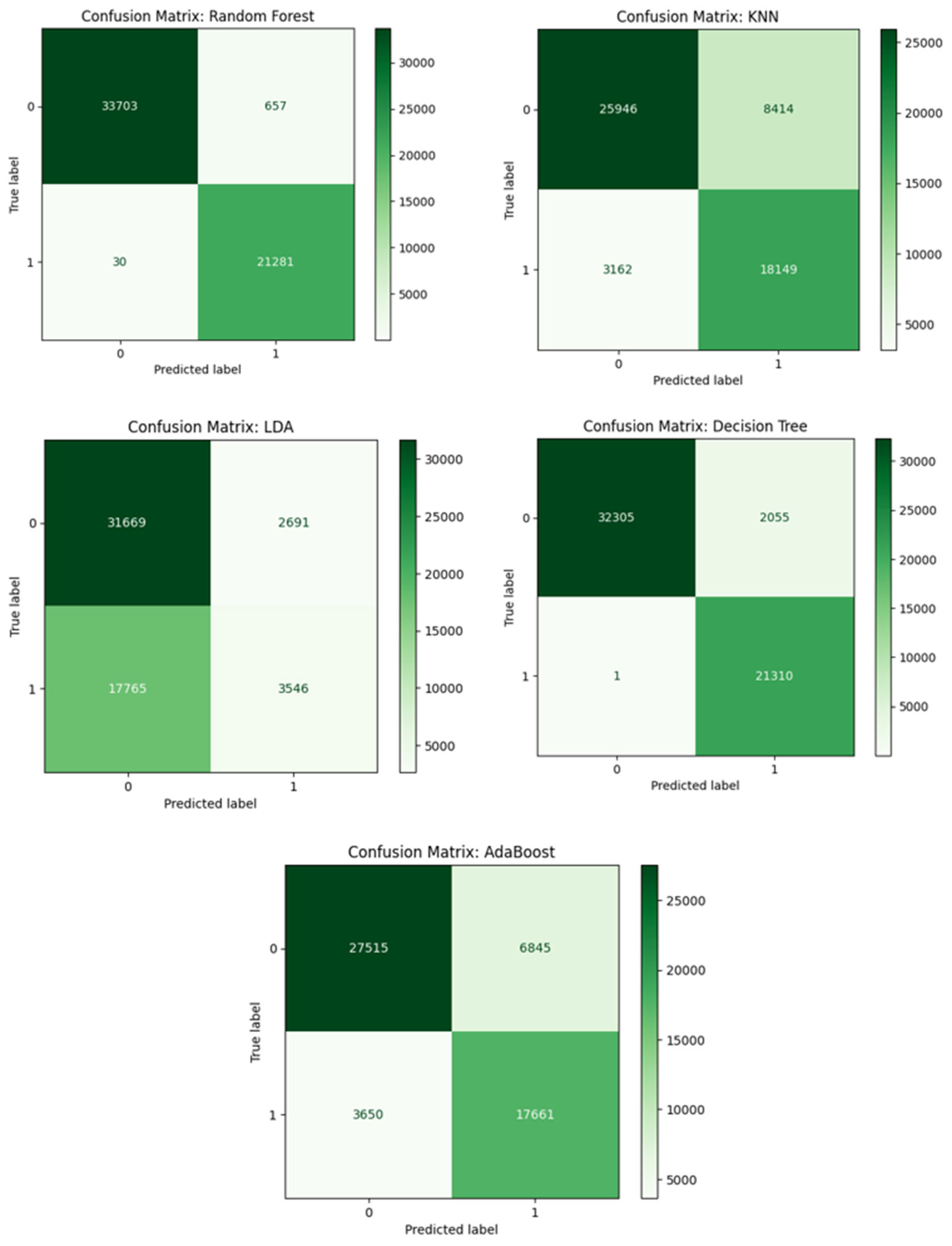

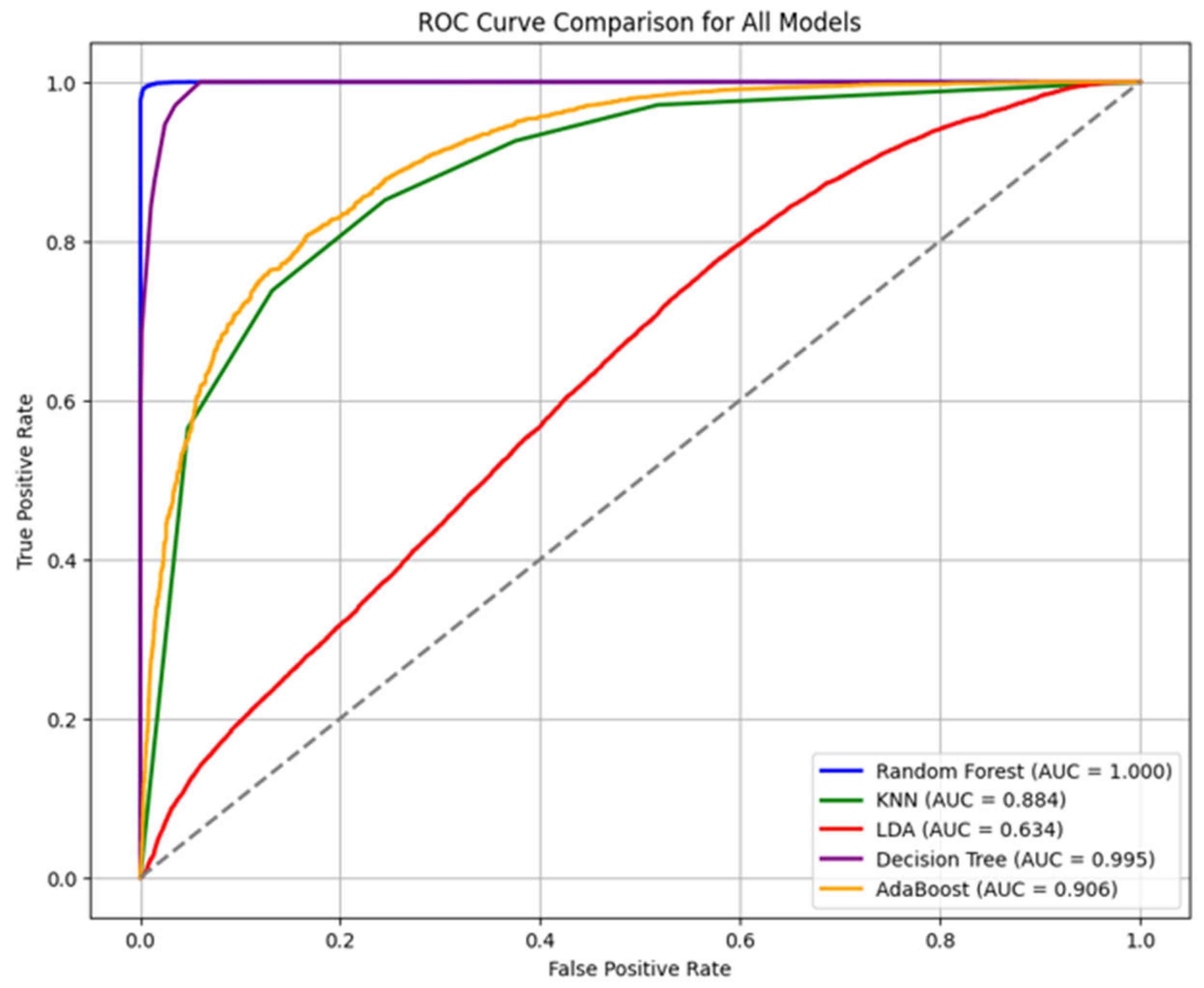

4.2. Findings

5. Discussion

6. Conclusions

Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ahmadi, Mohsen, Matin Khajavi, Abbas Varmaghani, Ali Ala, Kasra Danesh, and Danial Javaheri. 2025. Leveraging Large Language Models for Cybersecurity: Enhancing SMS Spam Detection with Robust and Context-Aware Text Classification. arXiv:2502.11014.

- Arockiasamy, Jesu Marcus Immanuvel, and Gowrishankar Bhoopathi. 2025. A dual-model machine learning approach to medicare fraud detection: Combining unsupervised anomaly detection with supervised learning. Computer Science and Information Technologies 6: 245–52.

- Arunkumar, C., Srijha Kalyan, and Hamsini Ravishankar. 2021. Fraudulent Detection in Healthcare Insurance. Paper presented at International Conference on Advances in Electrical and Computer Technologies, Coimbatore, India, October 1–2; Singapore: Springer Nature, pp. 1–9.

- Babaei, G., and P. Giudici. 2025. Correlation Metrics for Safe Artificial Intelligence. Risks 13: 178.

- Bauder, Richard A., and Taghi M. Khoshgoftaar. 2020. A study on rare fraud predictions with big Medicare claims fraud data. Intelligent Data Analysis 24: 141–61.

- Bauder, Richard A., Matthew Herland, and Taghi M. Khoshgoftaar. 2019. Evaluating model predictive performance: A medicare fraud detection case study. Paper presented at 2019 IEEE International Conference on Information Reuse and Integration for Data Science (IRI), Los Angeles, CA, USA, July 30–August 1; pp. 9–14.

- Bauder, Richard A., Taghi M. Khoshgoftaar, and Tawfiq Hasanin. 2018. Data sampling approaches with severely imbalanced big data for medicare fraud detection. Paper presented at International Conference on Tools with Artificial Intelligence (ICTAI), Volos, Greece, November 5–7; pp. 137–42.

- Bottou, Léon. 2010. Large-scale machine learning with stochastic gradient descent. Paper presented at COMPSTAT’2010: 19th International Conference on Computational Statistics, Paris, France, August 22–27; 2010 Keynote, Invited and Contributed Papers. Heidelberg: Physica-Verlag HD, pp. 177–86.

- Bounab, Rayene, Bouchra Guelib, and Karim Zarour. 2024. A Novel Machine Learning Approach For handling Imbalanced Data: Leveraging SMOTE-ENN and XGBoost. Paper presented at PAIS 2024, 6th International Conference on Pattern Analysis and Intelligent Systems, El Oued, Algeria, April 24–25.

- Chirchi, Khushi E., and B. Kavya. 2024. Unraveling Patterns in Healthcare Fraud through Comprehensive Analysis. Paper presented at 18th INDIAcom; 2024 11th International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, February 28–March 1; pp. 585–91.

- Copeland, Katrice Bridges. 2023. Health Care Fraud and the Erosion of Trust. Northwestern University Law Review 118: 89.

- Farhadi Nia, Masoumeh, Mohsen Ahmadi, and E. Irankhah. 2025. Transforming dental diagnostics with artificial intelligence: Advanced integration of ChatGPT and large language models for patient care. Frontiers in Dental Medicine 5: 1456208.

- Giudici, Paolo. 2024. Safe machine learning. Statistics 58: 473–77.

- Hancock, John T., Richard A. Bauder, Huanjing Wang, and Taghi M. Khoshgoftaar. 2023. Explainable machine learning models for Medicare fraud detection. Journal of Big Data 10: 154.

- Hasanin, Tawfiq, Taghi M. Khoshgoftaar, Joffrey L. Leevy, and Richard A. Bauder. 2019. Severely imbalanced Big Data challenges: Investigating data sampling approaches. Journal of Big Data 6: 107.

- He, Haibo, Yang Bai, Edwardo A. Garcia, and Shutao Li. 2008. ADASYN: Adaptive synthetic sampling approach for imbalanced learning. Paper presented at 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, June 1–8; pp. 1322–28.

- Herland, Matthew, Richard A. Bauder, and Taghi M. Khoshgoftaar. 2017. Medical provider specialty predictions for the detection of anomalous medicare insurance claims. Paper presented at 2017 IEEE International Conference on Information Reuse and Integration (IRI), San Diego, CA, USA, August 4–6; pp. 579–88.

- Herland, Matthew, Richard A. Bauder, and Taghi M. Khoshgoftaar. 2019. The effects of class rarity on the evaluation of supervised healthcare fraud detection models. Journal of Big Data 6: 21.

- Johnson, Justin M., and Taghi M. Khoshgoftaar. 2019a. Deep learning and thresholding with class-imbalanced big data. Paper presented at 18th IEEE International Conference on Machine Learning and Applications (ICMLA), Boca Raton, FL, USA, December 16–19; pp. 755–62.

- Johnson, Justin M., and Taghi M. Khoshgoftaar. 2019b. Medicare fraud detection using neural networks. Journal of Big Data 6: 63.

- Johnson, Justin M., and Taghi M. Khoshgoftaar. 2020. Semantic Embeddings for Medical Providers and Fraud Detection. Paper presented at 2020 IEEE 21st International Conference on Information Reuse and Integration for Data Science (IRI), Las Vegas, NV, USA, August 11–13; pp. 224–30.

- Johnson, Justin M., and Taghi M. Khoshgoftaar. 2022. Encoding High-Dimensional Procedure Codes for Healthcare Fraud Detection. SN Computer Science 3: 362.

- Johnson, Justin M., and Taghi M. Khoshgoftaar. 2023. Data-Centric AI for Healthcare Fraud Detection. SN Computer Science 4: 389.

- Karthik, Konduru Praveen, Taduvai Satvik Gupta, Doradla Kaushik, and T. K. Ramesh. 2025. Analysis of ML Models & Data Balancing Techniques for Medicare Fraud Using PySpark. Paper presented at 2025 Fifth IEEE International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT), Bhilai, India, January 9–10; pp. 1–6.

- Kumaraswamy, Nishamathi, Mia K. Markey, Tahir Ekin, Jamie C. Barner, and Karen Rascati. 2022. Healthcare Fraud Data Mining Methods: A Look Back and Look Ahead. Perspectives in Health Information Management 19: 1i. Available online: https://www.scopus.com/inward/record.uri?eid=2-s2.0-85128798400&partnerID=40&md5=f18fa3d0dc719c381f4cdd25d073b597 (accessed on 1 July 2025).

- LeCun, Yann, Yoshua Bengio, and Geoffrey Hinton. 2015. Deep learning. Nature 521: 436–44.

- Leevy, Joffrey L., John Hancock, Taghi M. Khoshgoftaar, and Azadeh Abdollah Zadeh. 2023. Investigating the effectiveness of one-class and binary classification for fraud detection. Journal of Big Data 10: 157.

- Leevy, Joffrey L., Taghi M. Khoshgoftaar, Richard A. Bauder, and Naeem Seliya. 2020. Investigating the relationship between time and predictive model maintenance. Journal of Big Data 7: 36.

- Matschak, Tizian, Christoph Prinz, Florian Rampold, and Simon Trang. 2022. Show Me Your Claims and I’ll Tell You Your Offenses: Machine Learning-Based Decision Support for Fraud Detection on Medical Claim Data. Paper presented at Annual Hawaii International Conference on System Sciences, Maui, HI, USA, January 4–7; pp. 3729–37. Available online: https://www.scopus.com/inward/record.uri?eid=2-s2.0-85152140476&partnerID=40&md5=a75099b153fd5b0f43a66bb1c8d51c04 (accessed on 1 July 2025).

- Mayaki, Mansour Zoubeirou A., and Michel Riveill. 2022. Multiple Inputs Neural Networks for Fraud Detection. Paper presented at 2022 International Conference on Machine Learning, Control, and Robotics (MLCR), Suzhou, China, October 29–31; pp. 8–13.

- Nabrawi, Eman, and Abdullah Alanazi. 2023. Fraud detection in healthcare insurance claims using machine learning. Risks 11: 160.

- Obodoekwe, Nnaemeka, and Dustin Terence van der Haar. 2019. A Comparison of Machine Learning Methods Applicable to Healthcare Claims Fraud Detection. Paper presented at International Conference on Information Technology & Systems, Quito, Ecuador, February 6–8; pp. 548–57.

- Qazi, Nadeem, and Kamran Raza. 2012. Effect of feature selection, Synthetic Minority Over-sampling (SMOTE) and under-sampling on class imbalance classification. Paper presented at 2012 14th International Conference on Modelling and Simulation, UKSim 2012, Cambridge, UK, March 28–30; pp. 145–50.

- Rohitrox. 2024. Healthcare Provider Fraud Detection Analysis. San Francisco: Kaggle.

- Sakil, Mohammad Balayet Hossain, Md Amit Hasan, Md Shahin Alam Mozumder, Md Rokibul Hasan, Shafiul Ajam Opee, M. F. Mridha, and Zeyar Aung. 2025. Enhancing Medicare Fraud Detection with a CNN-Transformer-XGBoost Framework and Explainable AI. IEEE Access 13: 79609–22.

- Sharma, Ritik, Sugandhi Midha, and Amit Semwal. 2022. Predictive Analysis on Multimodal Medicare Application. Paper presented at International Conference on Cyber Resilience (ICCR), Dubai, United Arab Emirates, October 6–7.

- Veale, Michael, Max Van Kleek, and Reuben Binns. 2018. Fairness and accountability design needs for algorithmic support in high-stakes public sector decision-making. Paper presented at 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, April 21–27; pp. 1–14.

- Wang, Huanjing, John T. Hancock, and Taghi M. Khoshgoftaar. 2023. Improving medicare fraud detection through big data size reduction techniques. Paper presented at 2023 IEEE International Conference on Service-Oriented System Engineering (SOSE), Athens, Greece, July 17–20; pp. 208–17.

- Yoo, Yeeun, Jinho Shin, and Sunghyon Kyeong. 2023. Medicare fraud detection using graph analysis: A comparative study of machine learning and graph neural networks. IEEE Access 11: 88278–94.

| Author | Year | Aim | Result |
|---|---|---|---|
| Bounab et al. (2024) | 2024 | Proposed a hybrid method combining SMOTE with ENN to address data imbalance in healthcare fraud detection. | SMOTE-ENN with XGBoost improved efficiency in detecting fraud, outperforming traditional ML techniques. |
| Chirchi and Kavya (2024) | 2024 | Studied healthcare fraud detection using advanced ML models to predict provider fraud in Medicare. | SMOTE and advanced models improved fraud detection accuracy and addressed class imbalance issues. |
| Yoo et al. (2023) | 2023 | Investigated Medicare fraud detection using graph analysis to improve detection accuracy. | Graph neural networks outperformed traditional ML models in detecting Medicare fraud. |
| Wang et al. (2023) | 2023 | Tackled high dimensionality and class imbalance in Medicare fraud detection using feature selection and RUS. | Feature selection improved classification accuracy, addressing class imbalance and high dimensionality. |
| Johnson and Khoshgoftaar (2023) | 2023 | Introduced a data-centric approach to improve healthcare fraud classification performance using Medicare claims data. | Constructed large labeled datasets from CMS data, improving fraud detection reliability. |
| Hancock et al. (2023) | 2023 | Developed explainable ML models for Medicare fraud detection using feature selection techniques. | Feature selection reduced dimensionality while maintaining accuracy, improving transparency in fraud detection. |
| Mayaki and Riveill (2022) | 2022 | Examined the use of neural networks with autoencoders to predict fraudulent Medicare claims. | Deep neural network architecture improved classification accuracy for detecting Medicare fraud. |
| Matschak et al. (2022) | 2022 | Presented a CNN-based approach for detecting health insurance claim fraud. | Achieved an AUC of 0.7 for selected fraud types. |
| Kumaraswamy et al. (2022) | 2022 | Reviewed data mining methods for healthcare fraud detection, focusing on digital systems. | Highlighted challenges in implementing digital fraud detection systems in healthcare. |
| Johnson and Khoshgoftaar (2022) | 2022 | Studied encoding high-dimensional procedure codes for healthcare fraud detection. | Binary tree decomposition and hashing improved classification accuracy for fraud detection. |
| Bauder and Khoshgoftaar (2020) | 2020 | Investigated the effects of class rarity on binary classification problems in Big Data fraud detection. | Oversampling and undersampling techniques improved model accuracy and reduced bias. |
| Johnson and Khoshgoftaar (2020) | 2020 | Explored the influence of medical provider specialty and semantic embeddings in detecting fraudulent providers. | Dense semantic embeddings improved model performance for detecting fraud. |
| Arunkumar et al. (2021) | 2021 | Investigated hybrid clustering and classification methods for healthcare insurance fraud detection. | Hybrid clustering and classification approaches outperformed other algorithms in classifying fraud. |
| Johnson and Khoshgoftaar (2019b) | 2019 | Applied neural networks to detect Medicare fraud with publicly available claims data. | Improved existing ML models for Medicare fraud detection, contributing to automated fraud detection. |
| Bauder et al. (2019) | 2019 | Evaluated ML model performance for real-world Medicare fraud detection. | Stressed the importance of validating ML models on new input data for real-world applications. |
| Obodoekwe and van der Haar (2019) | 2019 | Compared ML methods for detecting healthcare claims fraud. | Ensemble methods and neural networks were the most effective, while logistic regression performed poorly. |
| Hasanin et al. (2019) | 2019 | Analyzed the impact of class imbalance on Big Data analytics using ML algorithms. | Emphasized the importance of data sampling strategies to mitigate class imbalance in Big Data. |
| Herland et al. (2019) | 2019 | Investigated class rarity in supervised healthcare fraud detection models using Medicare data. | Detecting fraudulent activity could recover up to $350 billion in financial losses. |
| Herland et al. (2017) | 2017 | Developed an anomaly detection model to identify potential healthcare fraud. | Improved anomaly detection model performance through feature selection and specialty grouping. |
| Karthik et al. (2025) | 2025 | Proposed ML models with PySpark and balancing techniques (RUS, SMOTE) for large-scale fraud detection. | Achieved scalable performance, with a highest specificity of 73% using 1:5 balanced datasets. |
| Johnson and Khoshgoftaar (2019a) | 2019 | Applied deep learning and imbalance handling techniques (ROS, RUS, ROS-RUS) to Medicare fraud detection. | Hybrid methods improved AUC and efficiency, reducing imbalance challenges. |
| Arockiasamy and Bhoopathi (2025) | 2025 | Proposed a dual-model approach (unsupervised anomaly detection + supervised classification) for fraud detection. | Reduced false positives by 63% and improved AUC to 88.3%. |
| Chirchi and Kavya (2024) | 2024 | Analyzed provider fraud using SMOTE, Random Forest, and Logistic Regression. | Identified provider behavior patterns and improved model robustness with SMOTE. |
| Herland et al. (2019) | 2019 | Investigated class rarity in supervised healthcare fraud detection. | Found performance drops with class rarity; proposed undersampling to mitigate imbalance. |
| Sakil et al. (2025) | 2025 | Proposed a CNN-Transformer-XGBoost framework with explainable AI for Medicare fraud detection. | Achieved an F1-score of 0.95 and an AUC-ROC of up to 0.98, surpassing Random Forest and SVM. |
| He et al. (2008) | 2008 | Proposed ADASYN (Adaptive Synthetic Sampling) to improve class balance by focusing on minority samples that are harder to classify. | Improved detection of complex patterns in imbalanced datasets by adaptively generating synthetic data. |
| LeCun et al. (2015) | 2015 | Presented deep feature extraction using autoencoders and deep neural networks. | Enabled automatic identification of relevant features in high-dimensional datasets, outperforming manual feature engineering. |
| Bottou (2010) | 2010 | Applied online learning with stochastic gradient descent (SGD) for large-scale ML. | Allowed models to update continuously with streaming data, enhancing responsiveness to evolving fraud patterns. |
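Several of the studies summarized above rely on resampling (RUS, ROS, SMOTE, SMOTE-ENN, ADASYN). As an illustration only, the following minimal sketch in plain Python shows two of those ideas: random undersampling of the majority class and SMOTE-style interpolation between minority neighbours. It is not the pipeline used in this study; the function names are hypothetical, labels are assumed to be 0 (non-fraud) and 1 (fraud), and neither the ENN cleaning step of SMOTE-ENN nor ADASYN's difficulty-based weighting is implemented. Tested libraries such as imbalanced-learn are the usual choice in practice.

```python
import random


def random_undersample(X, y, ratio=1.0, seed=0):
    """Random undersampling (RUS): keep every minority row (label 1) and a
    random subset of majority rows (label 0) so that
    n_majority <= ratio * n_minority."""
    rng = random.Random(seed)
    minority = [i for i, label in enumerate(y) if label == 1]
    majority = [i for i, label in enumerate(y) if label == 0]
    n_keep = min(len(majority), int(ratio * len(minority)))
    kept = sorted(minority + rng.sample(majority, n_keep))  # keep original row order
    return [X[i] for i in kept], [y[i] for i in kept]


def smote_like_oversample(X_min, n_new, k=5, seed=0):
    """SMOTE-style synthesis: each synthetic point lies on the line segment
    between a random minority sample and one of its k nearest minority
    neighbours. Requires at least two minority samples."""
    rng = random.Random(seed)

    def sq_dist(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(X_min))
        base = X_min[i]
        # k nearest minority neighbours of `base`, excluding the point itself
        others = [X_min[j] for j in range(len(X_min)) if j != i]
        neighbours = sorted(others, key=lambda p: sq_dist(base, p))[:k]
        nb = rng.choice(neighbours)
        gap = rng.random()  # uniform in [0, 1)
        synthetic.append([b + gap * (n - b) for b, n in zip(base, nb)])
    return synthetic


# Toy data: 4 minority rows out of 20.
X = [[float(i), float(i % 3)] for i in range(20)]
y = [1 if i < 4 else 0 for i in range(20)]
X_rus, y_rus = random_undersample(X, y, ratio=2)  # at most 2 majority rows per minority row
print(len(y_rus), sum(y_rus))  # 12 4

X_min = [x for x, label in zip(X, y) if label == 1]
new_rows = smote_like_oversample(X_min, n_new=8, k=2)
print(len(new_rows))  # 8
```

The `ratio` parameter expresses a target minority-to-majority proportion such as 1:5. Resampling of this kind is normally applied to the training split only, so that validation metrics still reflect the original class distribution.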

| Variable Name | Description |
|---|---|
| BeneID | Unique identifier for each beneficiary. |
| DOB | Date of birth of the beneficiary. |
| DOD | Date of death of the beneficiary. |
| Gender | Gender of the beneficiary. |
| Race | Race of the beneficiary. |
| RenalDiseaseIndicator | Indicator of whether the beneficiary has renal disease. |
| State | State code where the beneficiary resides. |
| County | County code where the beneficiary resides. |
| NoOfMonths_PartACov | Number of months the beneficiary was covered under Medicare Part A. |
| NoOfMonths_PartBCov | Number of months the beneficiary was covered under Medicare Part B. |
| ChronicCond_Alzheimer | Indicator of whether the beneficiary has Alzheimer’s disease. |
| ChronicCond_Heartfailure | Indicator of whether the beneficiary has heart failure. |
| ChronicCond_KidneyDisease | Indicator of whether the beneficiary has kidney disease. |
| ChronicCond_Cancer | Indicator of whether the beneficiary has cancer. |
| ChronicCond_ObstrPulmonary | Indicator of whether the beneficiary has obstructive pulmonary disease. |
| ChronicCond_Depression | Indicator of whether the beneficiary has depression. |
| ChronicCond_Diabetes | Indicator of whether the beneficiary has diabetes. |
| ChronicCond_IschemicHeart | Indicator of whether the beneficiary has ischemic heart disease. |
| ChronicCond_Osteoporasis | Indicator of whether the beneficiary has osteoporosis. |
| ChronicCond_RheumatoidArthritis | Indicator of whether the beneficiary has rheumatoid arthritis. |
| ChronicCond_Stroke | Indicator of whether the beneficiary has experienced a stroke. |
| IPAnnualReimbursementAmt | Annual inpatient reimbursement amount. |
| IPAnnualDeductibleAmt | Annual inpatient deductible amount. |
| OPAnnualReimbursementAmt | Annual outpatient reimbursement amount. |
| OPAnnualDeductibleAmt | Annual outpatient deductible amount. |
| ClaimID | Unique identifier for each claim. |
| ClaimStartDt | Start date of the claim. |
| ClaimEndDt | End date of the claim. |
| Provider | Unique identifier for the healthcare provider. |
| InscClaimAmtReimbursed | Insurance claim amount reimbursed. |
| AttendingPhysician | Identifier for the attending physician. |
| OperatingPhysician | Identifier for the operating physician. |
| OtherPhysician | Identifier for any other physician linked to the claim. |
| ClmDiagnosisCode_1 to 10 | Up to 10 diagnosis codes for a single claim. |
| ClmProcedureCode_1 to 6 | Up to 6 procedure codes for medical procedures performed during the claim period. |
| DeductibleAmtPaid | Deductible amount paid by the beneficiary for the claim. |
| ClmAdmitDiagnosisCode | Admission diagnosis code for inpatient claims. |
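Several model inputs are derived from these raw columns rather than read directly. The sketch below shows one hypothetical way to compute a few such features from single claim and beneficiary records; the exact definitions used in the study are not given here, so the following are assumptions: dates are ISO strings (YYYY-MM-DD), claim duration is the number of calendar days from ClaimStartDt to ClaimEndDt, and a chronic-condition flag counts as present only when its value is 1.

```python
from datetime import date

# Chronic-condition flag columns, named as in the variable table above.
CHRONIC_COLUMNS = [
    "ChronicCond_Alzheimer",
    "ChronicCond_Heartfailure",
    "ChronicCond_KidneyDisease",
    "ChronicCond_Cancer",
    "ChronicCond_ObstrPulmonary",
    "ChronicCond_Depression",
    "ChronicCond_Diabetes",
    "ChronicCond_IschemicHeart",
    "ChronicCond_Osteoporasis",
    "ChronicCond_RheumatoidArthritis",
    "ChronicCond_Stroke",
]


def claim_duration_days(claim: dict) -> int:
    """Assumed definition: calendar days from ClaimStartDt to ClaimEndDt,
    with both dates stored as ISO strings (YYYY-MM-DD)."""
    start = date.fromisoformat(claim["ClaimStartDt"])
    end = date.fromisoformat(claim["ClaimEndDt"])
    return (end - start).days


def chronic_condition_count(beneficiary: dict) -> int:
    """Number of chronic-condition flags set; assumes the value 1 means 'present'."""
    return sum(1 for col in CHRONIC_COLUMNS if beneficiary.get(col) == 1)


def diagnosis_code_count(claim: dict) -> int:
    """Number of non-empty diagnosis code fields ClmDiagnosisCode_1 to ClmDiagnosisCode_10."""
    return sum(1 for i in range(1, 11) if claim.get(f"ClmDiagnosisCode_{i}"))


# Toy records with placeholder values (not taken from the dataset).
claim = {"ClaimStartDt": "2009-04-12", "ClaimEndDt": "2009-04-18",
         "ClmDiagnosisCode_1": "D1", "ClmDiagnosisCode_2": "D2"}
beneficiary = {"ChronicCond_Diabetes": 1, "ChronicCond_Stroke": 2}
print(claim_duration_days(claim))            # 6
print(diagnosis_code_count(claim))           # 2
print(chronic_condition_count(beneficiary))  # 1
```

Working on plain dictionaries keeps the logic visible; on the full claims table the same rules would typically be applied column-wise with a dataframe library.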

| Variable | Count | Mean | Std. Dev. |
|---|---|---|---|
| Potential Fraud | 556,703 | 0.381 | 0.486 |
| Insurance Claim Amt Reimbursed | 556,703 | 996.936 | 3819.692 |
| Deductible Amt Paid | 556,703 | 78.428 | 273.809 |
| Admitted | 556,703 | 0.073 | 0.259 |
| Duration of Claim | 556,703 | 1.728 | 4.905 |
| Number of Days Admitted | 556,703 | 0.483 | 2.299 |
| Renal Disease Indicator | 556,703 | 0.197 | 0.397 |
| No. of Months Part A Cov | 556,703 | 11.931 | 0.890 |
| No. of Months Part B Cov | 556,703 | 11.939 | 0.786 |
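Because Potential Fraud is a 0/1 label, its mean is the share of records labeled 1, and its standard deviation should be close to the Bernoulli value sqrt(p(1 - p)). The short helper below (a hypothetical name, not part of the study) turns the reported mean into approximate class counts, the negative-to-positive ratio, and "balanced" class weights computed with the same formula scikit-learn documents for `class_weight="balanced"`, namely n / (n_classes × n_c). Counts are approximate because the mean is rounded to three decimals.

```python
import math


def class_summary(n_total, positive_rate):
    """Approximate class counts and balanced weights for a 0/1 label whose
    mean (positive_rate) is reported rounded, so counts are approximate."""
    n_pos = round(n_total * positive_rate)
    n_neg = n_total - n_pos
    return {
        "positives": n_pos,
        "negatives": n_neg,
        "neg_to_pos_ratio": n_neg / n_pos,
        # Same formula as scikit-learn's class_weight="balanced": n / (n_classes * n_c)
        "weight_pos": n_total / (2 * n_pos),
        "weight_neg": n_total / (2 * n_neg),
        # Standard deviation of a Bernoulli(p) variable, to compare with the table
        "bernoulli_std": math.sqrt(positive_rate * (1 - positive_rate)),
    }


summary = class_summary(556_703, 0.381)
for key, value in summary.items():
    print(f"{key}: {value:.3f}" if isinstance(value, float) else f"{key}: {value}")
```

With the table's values this gives roughly 1.6 non-fraud records per fraud-labeled record, and sqrt(0.381 × 0.619) ≈ 0.486 matches the reported standard deviation, which is consistent with the label being binary. The balanced weights make both classes contribute equally to a weighted loss, an alternative to resampling.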

| Model | Data Split | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| Random Forest | Train | 0.992 | 0.985 | 1.000 | 0.992 |
| Random Forest | Validation | 0.988 | 0.970 | 0.999 | 0.984 |
| KNN | Train | 0.895 | 0.853 | 0.955 | 0.901 |
| KNN | Validation | 0.792 | 0.683 | 0.852 | 0.758 |
| LDA | Train | 0.665 | 0.841 | 0.408 | 0.549 |
| LDA | Validation | 0.633 | 0.569 | 0.166 | 0.257 |
| Decision Tree | Train | 0.968 | 0.944 | 0.996 | 0.969 |
| Decision Tree | Validation | 0.963 | 0.912 | 1.000 | 0.954 |
| AdaBoost | Train | 0.836 | 0.816 | 0.866 | 0.840 |
| AdaBoost | Validation | 0.811 | 0.721 | 0.829 | 0.771 |
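F1 is the harmonic mean of precision and recall, F1 = 2PR / (P + R), so the F1 column of the results table can be checked against its precision and recall columns. The minimal consistency check below, which is not part of the original analysis, recomputes F1 for every row and prints the train-minus-validation F1 gap as a rough indicator of overfitting. It uses only numbers copied from the table; accuracy is not recomputed because that would require the underlying confusion matrices.

```python
def f1_score(precision, recall):
    """F1 = harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) per model and split, copied from the results table.
RESULTS = {
    "Random Forest": {"Train": (0.985, 1.000, 0.992), "Validation": (0.970, 0.999, 0.984)},
    "KNN": {"Train": (0.853, 0.955, 0.901), "Validation": (0.683, 0.852, 0.758)},
    "LDA": {"Train": (0.841, 0.408, 0.549), "Validation": (0.569, 0.166, 0.257)},
    "Decision Tree": {"Train": (0.944, 0.996, 0.969), "Validation": (0.912, 1.000, 0.954)},
    "AdaBoost": {"Train": (0.816, 0.866, 0.840), "Validation": (0.721, 0.829, 0.771)},
}

for model, splits in RESULTS.items():
    for split, (p, r, reported) in splits.items():
        recomputed = f1_score(p, r)
        # Reported values are rounded to 3 decimals, so allow a small tolerance.
        status = "ok" if abs(recomputed - reported) < 0.0015 else "MISMATCH"
        print(f"{model:13s} {split:10s} F1={recomputed:.3f} (reported {reported:.3f}) {status}")
    gap = splits["Train"][2] - splits["Validation"][2]
    print(f"{model:13s} train-validation F1 gap = {gap:.3f}")
```

With these values every row agrees to three decimals. The largest gaps appear for LDA (driven by its drop in validation recall) and KNN, while Random Forest and Decision Tree change little between splits.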

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Farahmandazad, D.; Danesh, K.; Abadi, H.F.N. Application of Standard Machine Learning Models for Medicare Fraud Detection with Imbalanced Data. Risks 2025, 13, 198. https://doi.org/10.3390/risks13100198
