The Illusion of Control: How Knowledge and Expertise Misclassify Uncertainty as Risk

Abstract

1. Introduction

2. Literature Review

2.1. Knight and the Risk and Uncertainty Distinction

2.2. Keynes and Uncertainty

2.3. Expected Utility Theory

2.4. Rationality

2.5. Contemporary Definitions: Modern Perspectives on Risk and Uncertainty

2.6. Examples of Behavioural and Cognitive Biases in Different Fields

2.7. Critiques of Risk Management Methods

3. Methodology

3.1. Linking Knowledge, Uncertainty and Risk

3.2. Classification Grid for Decision Contexts

4. Analysis of Knowledge and Expertise

4.1. The Definition and Relation of Knowledge and Expertise

4.2. Knowledge and Expertise as a Risk Reduction Tool

4.3. Limitations of Expertise and Knowledge

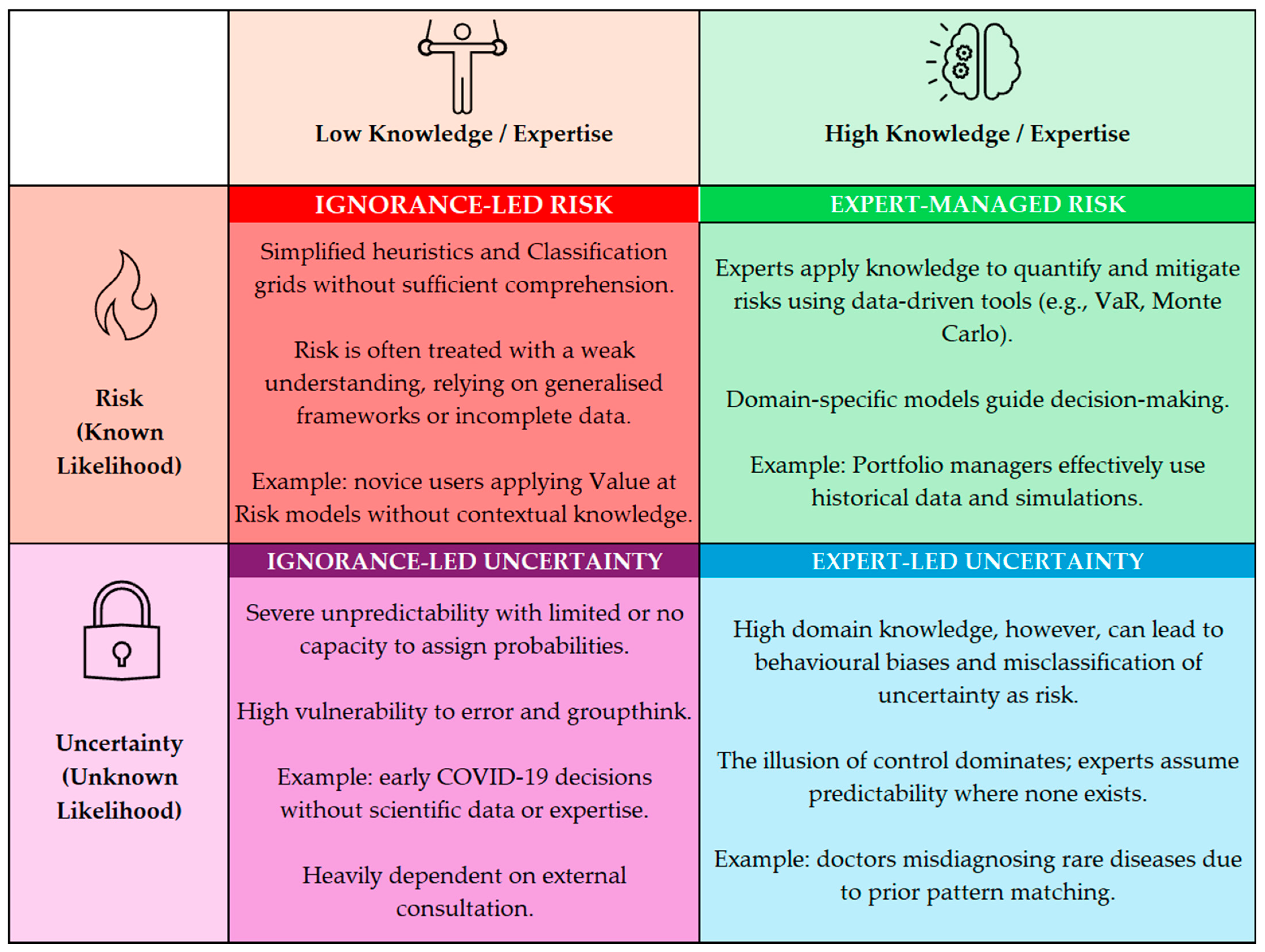

5. Construction of the Matrix

5.1. A Fundamental Method of Knowledge and Classification Grids

5.2. Matrices on Risk and Knowledge

5.3. Risk–Uncertainty and Knowledge–Expertise Matrix

5.4. Discussion of the Matrix

6. Study Novelty, Limitations and Future Research

6.1. Study Novelty and Contribution

6.2. Study Limitations

6.3. Future Research

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Faccia, A.; Petratos, P.; Manni, F. The Illusion of Control: How Knowledge and Expertise Misclassify Uncertainty as Risk. Risks 2025, 13, 188. https://doi.org/10.3390/risks13100188