Artificial Intelligence Reporting Guidelines’ Adherence in Nephrology for Improved Research and Clinical Outcomes

Abstract

1. Introduction

2. Methods

3. Common Ground for AI-Based Clinical Guidelines via FAIR Common Data Models

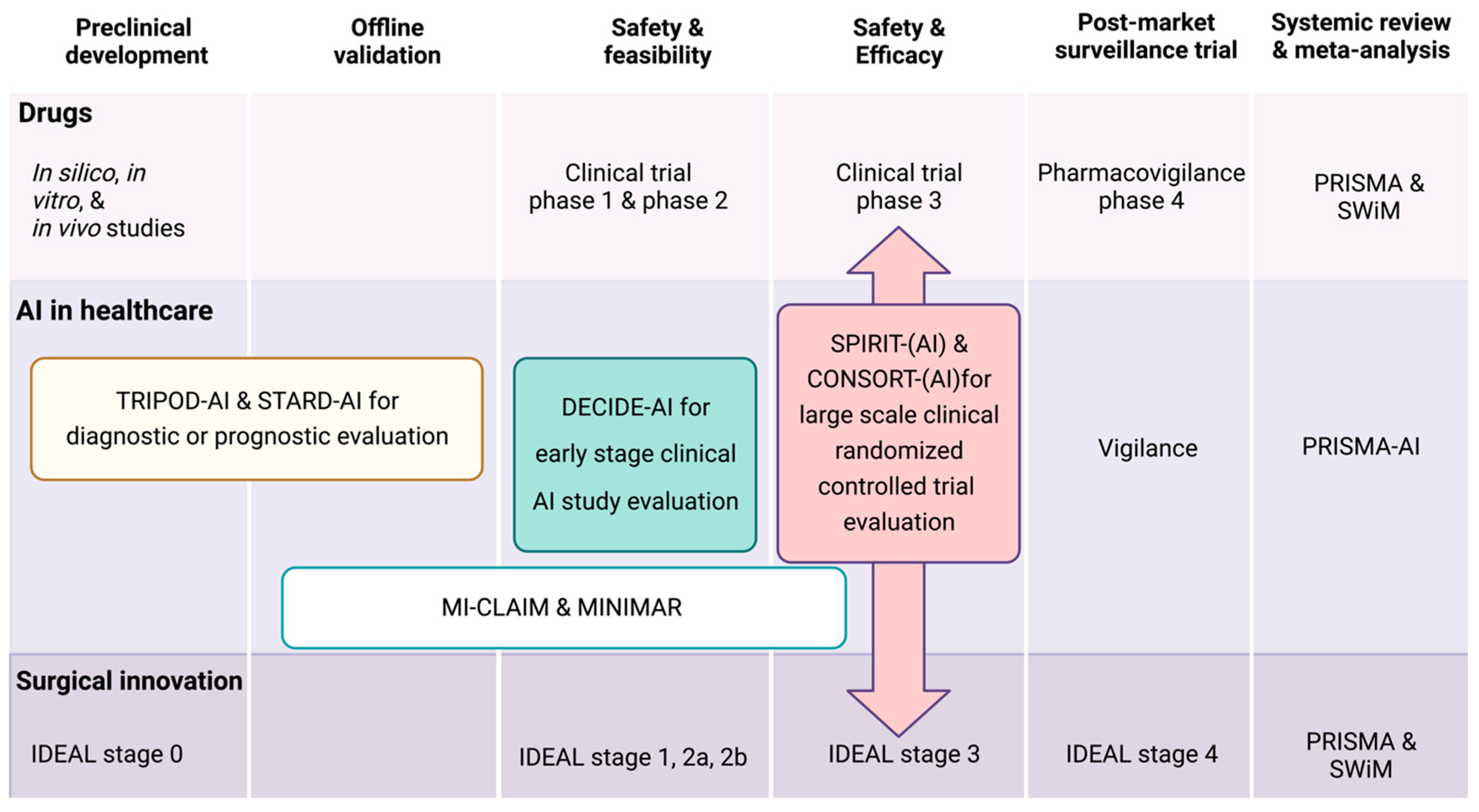

4. What Are AI Clinical Research Reporting Guidelines?

5. Why Do We Need an AI Reporting Guideline in General?

6. Which AI Reporting Guideline Should I Use for Nephrological Study?

7. What Are the Minimal Requirements for AI Reporting Guidelines?

8. What Else Could Be Done for an Improved Guideline Adherence and the Use of AI Models in Nephrology?

- Synthetic data as a digital twin of real-world patient data: Synthetic data is computer-generated data that mimics real-world data while preserving its statistical properties [69,70]. It can therefore enable researchers to share and collaborate on nephrology-related studies without exposing sensitive patient information. By sharing synthetic data, researchers can access larger and more diverse datasets, leading to more robust and generalizable findings. This approach fosters collaboration between institutions and researchers, accelerating advances in the understanding, diagnosis, and treatment of kidney diseases, among others, while maintaining patient privacy and complying with regulatory requirements [70].

- Predictive modeling: Synthetic data can be used to create large, diverse datasets for developing predictive models of various kidney diseases. These models can help clinicians predict which patients are at high risk of developing kidney disease or experiencing complications. Such datasets can also help researchers identify patterns and trends that may not be evident in smaller, less diverse datasets.

- Development of software requiring patient data: Synthetic data can also be used to develop software that requires patient data, such as clinical decision support systems that assist clinicians in making treatment decisions for patients with specific kidney diseases. For instance, a decision support system could be trained on synthetic data to recommend the best treatment options for patients based on their clinical characteristics.

- User-centered design: The design of the AI system should be centered on the needs of the clinicians who will use it. The system should be intuitive, with a user interface that is easy to navigate. For example, AI-based support systems can be used to develop and implement clinical decision rules in nephrology [73]. These decision rules can help clinicians make more timely decisions, such as when to initiate dialysis or refer a patient for kidney transplantation.

- Integration with clinical workflow: The AI system should be integrated into the clinical workflow in a way that minimizes disruption and maximizes efficiency [74]. This may involve integrating the system into existing electronic health record (EHR) systems or other clinical tools already in place. In addition, diagnostic procedures in nephrology depend on the ability to integrate data from sources beyond the EHR, such as laboratory test results, imaging data, or clinical trials. Integrated systems can, for example, predict the risk of developing related complications, such as progression to kidney failure [74].

- Training and education: Clinicians need to be trained on how to use the AI system effectively [75]. This may involve providing training on the system itself, as well as on the underlying data and algorithms, because clinicians need to understand how the AI system works and how it arrives at its recommendations. The system should be transparent and provide clear explanations of its recommendations, so that clinicians can make informed decisions.

- Healthcare regulators' workplan: In line with its ongoing commitment to developing and applying innovative strategies for overseeing medical device software and other digital health technologies, the FDA released the “Proposed Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD)-Discussion Paper and Request for Feedback” in April 2019. This document outlined the FDA’s groundwork for a potential approach to the premarket review of modifications to AI/ML-driven software. More recently, the European Medicines Agency (EMA) and the Heads of Medicines Agencies (HMA) released a comprehensive AI roadmap through 2028, outlining a united and coordinated approach to maximize the benefits of AI for stakeholders while mitigating the associated risks. In parallel, the Common European Data Spaces are a key EU initiative aimed at unlocking the potential of data-driven innovation. They will facilitate the secure and trustworthy exchange of data across the EU, allowing businesses, public administrations, educational institutions, and individuals to keep control over their own data while benefiting from a safe framework for sharing it for innovative purposes [76]. This initiative is crucial for the development of new data-driven products and services and could form an integral part of a connected and competitive European data economy. Complementing these data spaces, the European Commission is also addressing the risks associated with specific AI uses through a set of complementary, proportionate, and flexible rules, aiming to establish Europe as a global leader in setting AI standards.
This legal framework for AI, known as the AI Act, brings clarity to AI developers, deployers, and users by focusing on areas not covered by existing national and EU legislation [77]. It categorizes AI risks into four levels (minimal, high, unacceptable, and specific transparency risk) and introduces dedicated rules for general-purpose AI models. Together, these measures may represent a comprehensive approach to fostering a safer, more trustworthy, and more innovative data and AI landscape in Europe. However, the current challenges and rapid developments in the AI healthcare industry call for more decisive action from regulatory authorities such as the FDA, EMA, and PMDA to develop unified regulatory guidelines.
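The synthetic-data bullet above says that generated data should preserve the statistical properties of the real data. The simplest way to make that concrete is a parametric generator: fit the column means and covariance matrix of numeric variables, then sample new records from a multivariate normal distribution with those parameters. The sketch below assumes continuous, roughly Gaussian variables, and the function names are hypothetical. It is not the method of the cited works, which discuss more sophisticated generators. It also offers no formal privacy guarantee on its own.

```python
import math
import random


def column_means(rows):
    """Mean of each numeric column in a list of equal-length records."""
    n = len(rows)
    return [sum(r[j] for r in rows) / n for j in range(len(rows[0]))]


def covariance_matrix(rows, means):
    """Sample covariance matrix (n - 1 denominator) of the columns."""
    n, d = len(rows), len(means)
    cov = [[0.0] * d for _ in range(d)]
    for r in rows:
        for i in range(d):
            for j in range(d):
                cov[i][j] += (r[i] - means[i]) * (r[j] - means[j])
    return [[c / (n - 1) for c in row] for row in cov]


def cholesky(a):
    """Lower-triangular L with L @ L^T == a; a must be positive definite."""
    d = len(a)
    low = [[0.0] * d for _ in range(d)]
    for i in range(d):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                diag = a[i][i] - s
                if diag <= 0:
                    raise ValueError("covariance matrix is not positive definite")
                low[i][j] = math.sqrt(diag)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low


def synthesize(rows, n_samples, seed=0):
    """Draw synthetic records from a multivariate normal fitted to `rows`.

    Only the column means and covariances of the input are reused;
    no input record is copied into the output.
    """
    rng = random.Random(seed)
    means = column_means(rows)
    low = cholesky(covariance_matrix(rows, means))
    d = len(means)
    synthetic = []
    for _ in range(n_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(d)]
        # x = mu + L z has mean mu and covariance L L^T = Sigma
        synthetic.append([means[i] + sum(low[i][k] * z[k] for k in range(i + 1))
                          for i in range(d)])
    return synthetic


# Illustrative usage with a toy two-column cohort (made-up values, not patient data)
if __name__ == "__main__":
    toy_rng = random.Random(1)
    toy_real = []
    for _ in range(500):
        x1 = toy_rng.gauss(50, 10)
        toy_real.append([x1, 2 * x1 + toy_rng.gauss(0, 1)])
    toy_synthetic = synthesize(toy_real, 4000, seed=7)
    print("real means:     ", column_means(toy_real))
    print("synthetic means:", column_means(toy_synthetic))
```

Because only summary statistics leave the source data, this design is easy to audit. The trade-off is that it cannot reproduce skewed distributions, categorical variables, or non-linear relationships. Capturing those is why more expressive generators are used in practice.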

9. A Perspective of Generative Language Processing Utilization in Nephrology

10. Conclusions and Future Perspectives

Author Contributions

Funding

Conflicts of Interest

References

- Ngiam, K.Y.; Khor, I.W. Big data and machine learning algorithms for health-care delivery. Lancet Oncol. 2019, 20, e262–e273.

- Shamshirband, S.; Fathi, M.; Dehzangi, A.; Chronopoulos, A.T.; Alinejad-Rokny, H. A review on deep learning approaches in healthcare systems: Taxonomies, challenges, and open issues. J. Biomed. Inf. 2021, 113, 103627.

- Shmatko, A.; Ghaffari Laleh, N.; Gerstung, M.; Kather, J.N. Artificial intelligence in histopathology: Enhancing cancer research and clinical oncology. Nat. Cancer 2022, 3, 1026–1038.

- Kowalewski, K.F.; Egen, L.; Fischetti, C.E.; Puliatti, S.; Juan, G.R.; Taratkin, M.; Ines, R.B.; Sidoti, A.M.A.; Mühlbauer, J.; Wessels, F.; et al. Artificial intelligence for renal cancer: From imaging to histology and beyond. Asian J. Urol. 2022, 9, 243–252.

- Najia, A.; Yuan, P.; Markus, W.; Michéle, Z.; Martin, S. OMOP CDM Can Facilitate Data-Driven Studies for Cancer Prediction: A Systematic Review. Int. J. Mol. Sci. 2022, 23, 11834.

- Cho, S.; Sin, M.; Tsapepas, D.; Dale, L.A.; Husain, S.A.; Mohan, S.; Natarajan, K. Content Coverage Evaluation of the OMOP Vocabulary on the Transplant Domain Focusing on Concepts Relevant for Kidney Transplant Outcomes Analysis. Appl. Clin. Inform. 2020, 11, 650–658.

- Hinton, G. Deep Learning—A Technology with the Potential to Transform Health Care. JAMA 2018, 320, 1101–1102.

- Nicholson, P.W. Big data and black-box medical algorithms. Sci. Transl. Med. 2018, 10, eaao5333.

- Reinecke, I.; Zoch, M.; Reich, C.; Sedlmayr, M.; Bathelt, F. The Usage of OHDSI OMOP—A Scoping Review. Stud. Health Technol. Inform. 2021, 283, 95–103.

- Belenkaya, R.; Gurley, M.J.; Golozar, A.; Dymshyts, D.; Miller, R.T.; Williams, A.E.; Ratwani, S.; Siapos, A.; Korsik, V.; Warner, J.; et al. Extending the OMOP Common Data Model and Standardized Vocabularies to Support Observational Cancer Research. JCO Clin. Cancer Inform. 2021, 5, 12–20.

- Seneviratne, M.G.; Banda, J.M.; Brooks, J.D.; Shah, N.H.; Hernandez-Boussard, T.M. Identifying Cases of Metastatic Prostate Cancer Using Machine Learning on Electronic Health Records. AMIA Annu. Symp. Proc. 2018, 2018, 1498–1504.

- Ahmadi, N.; Nguyen, Q.V.; Sedlmayr, M.; Wolfien, M. A comparative patient-level prediction study in OMOP CDM: Applicative potential and insights from synthetic data. Sci. Rep. 2024, 14, 2287.

- Park, C.; You, S.C.; Jeon, H.; Jeong, C.W.; Choi, J.W.; Park, R.W. Development and Validation of the Radiology Common Data Model (R-CDM) for the International Standardization of Medical Imaging Data. Yonsei Med. J. 2022, 63, S74.

- i2b2: Informatics for Integrating Biology & the Bedside. Available online: https://www.i2b2.org/resrcs/ (accessed on 13 May 2023).

- Pcornet—The National Patient-Centered Clinical Research Network. Available online: https://pcornet.org/data/ (accessed on 13 May 2023).

- CDISC/SDTM. Available online: https://www.cdisc.org/standards/foundational/sdtm (accessed on 13 May 2023).

- Niel, O.; Boussard, C.; Bastard, P. Artificial Intelligence Can Predict GFR Decline during the Course of ADPKD. Am. J. Kidney Dis. Off. J. Natl. Kidney Found. 2018, 71, 911–912.

- Goel, A.; Shih, G.; Riyahi, S.; Jeph, S.; Dev, H.; Hu, R.; Romano, D.; Teichman, K.; Blumenfeld, J.D.; Barash, I.; et al. Deployed Deep Learning Kidney Segmentation for Polycystic Kidney Disease MRI. Radiology. Artif. Intell. 2022, 4, e210205.

- Beetz, N.L.; Geisel, D.; Shnayien, S.; Auer, T.A.; Globke, B.; Ollinger, R.; Trippel, T.D.; Schachtner, T.; Fehrenbach, U. Effects of Artificial Intelligence-Derived Body Composition on Kidney Graft and Patient Survival in the Eurotransplant Senior Program. Biomedicines 2022, 10, 554.

- Nematollahi, M.; Akbari, R.; Nikeghbalian, S.; Salehnasab, C. Classification Models to Predict Survival of Kidney Transplant Recipients Using Two Intelligent Techniques of Data Mining and Logistic Regression. Int. J. Organ Transpl. Med. 2017, 8, 119–122.

- Niel, O.; Bastard, P. Artificial Intelligence in Nephrology: Core Concepts, Clinical Applications, and Perspectives. Am. J. Kidney Dis. 2019, 74, 803–810.

- Xu, M.; Yoon, S.; Fuentes, A.; Park, D.S. A Comprehensive Survey of Image Augmentation Techniques for Deep Learning. Pattern Recognit. 2022, 137, 109347.

- Saptarshi, B.; Kristian, S.; Prashant, S.; Markus, W.; Olaf, W. A Multi-Schematic Classifier-Independent Oversampling Approach for Imbalanced Datasets. IEEE Access 2021, 9, 123358–123374.

- Nagendran, M.; Chen, Y.; Lovejoy, C.A.; Gordon, A.C.; Komorowski, M.; Harvey, H.; Topol, E.J.; Ioannidis, J.P.A.; Collins, G.S.; Maruthappu, M. Artificial intelligence versus clinicians: Systematic review of design, reporting standards, and claims of deep learning studies. BMJ 2020, 368, m689.

- Deborah, P.; Shun, L.; Alyssa, A.G.; Anurag, S.; Joseph, J.Y.S.; Benjamin, H.K. Randomized Clinical Trials of Machine Learning Interventions in Health Care: A Systematic Review. JAMA Netw. Open 2023, 5, e2233946.

- Inau, E.T.; Sack, J.; Waltemath, D.; Zeleke, A.A. Initiatives, Concepts, and Implementation Practices of FAIR (Findable, Accessible, Interoperable, and Reusable) Data Principles in Health Data Stewardship Practice: Protocol for a Scoping Review. JMIR Res. Protoc. 2021, 10, e22505.

- Reinecke, I.; Zoch, M.; Wilhelm, M.; Sedlmayr, M.; Bathelt, F. Transfer of Clinical Drug Data to a Research Infrastructure on OMOP—A FAIR Concept. Stud. Health Technol. Inform. 2021, 287, 63–67.

- Correa, R.; Shaan, M.; Trivedi, H.; Patel, B.; Celi, L.A.G.; Gichoya, J.W.; Banerjee, I. A Systematic Review of ‘Fair’ AI Model Development for Image Classification and Prediction. J. Med. Biol. Eng. 2022, 42, 816–827.

- What is a Reporting Guideline? EQUATOR Network. Available online: https://www.equator-network.org/about-us/what-is-a-reporting-guideline/ (accessed on 20 February 2024).

- Kim, J.E.; Choi, Y.J.; Oh, S.W.; Kim, M.G.; Jo, S.K.; Cho, W.Y.; Ahn, S.Y.; Kwon, Y.J.; Ko, G.-J. The Effect of Statins on Mortality of Patients with Chronic Kidney Disease Based on Data of the Observational Medical Outcomes Partnership Common Data Model (OMOP-CDM) and Korea National Health Insurance Claims Database. Front. Nephrol. 2022, 1, 821585.

- Bluemke, D.A.; Moy, L.; Bredella, M.A.; Ertl-Wagner, B.B.; Fowler, K.J.; Goh, V.J.; Halpern, E.F.; Hess, C.P.; Schiebler, M.L.; Weiss, C.R. Assessing Radiology Research on Artificial Intelligence: A Brief Guide for Authors, Reviewers, and Readers-From the Radiology Editorial Board. Radiology 2020, 294, 487–489.

- Liu, X.; Faes, L.; Kale, A.U.; Wagner, S.K.; Fu, D.J.; Bruynseels, A.; Mahendiran, T.; Moraes, G.; Shamdas, M.; Kern, C.; et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: A systematic review and meta-analysis. Lancet Digit. Health 2019, 1, e271–e297.

- Kim, D.; Jang, H.Y.; Kim, K.W.; Shin, Y.; Park, S.H. Design Characteristics of Studies Reporting the Performance of Artificial Intelligence Algorithms for Diagnostic Analysis of Medical Images: Results from Recently Published Papers. Korean J. Radiol. 2019, 20, 405–410.

- Yusuf, M.; Atal, I.; Li, J.; Smith, P.; Ravaud, P.; Fergie, M.; Callaghan, M.; Selfe, J. Reporting quality of studies using machine learning models for medical diagnosis: A systematic review. BMJ Open 2020, 10, e034568.

- Bozkurt, S.; Cahan, E.M.; Seneviratne, M.G.; Sun, R.; Lossio-Ventura, J.A.; Ioannidis, J.P.A.; Hernandez-Boussard, T. Reporting of demographic data and representativeness in machine learning models using electronic health records. J. Am. Med. Inform. Assoc. 2020, 27, 1878–1884.

- Luo, W.; Phung, D.; Tran, T.; Gupta, S.; Rana, S.; Karmakar, C.; Shilton, A.; Yearwood, J.; Dimitrova, N.; Ho, T.B.; et al. Guidelines for Developing and Reporting Machine Learning Predictive Models in Biomedical Research: A Multidisciplinary View. J. Med. Internet Res. 2016, 18, e323.

- Loftus, T.J.; Shickel, B.; Ozrazgat-Baslanti, T.; Ren, Y.; Glicksberg, B.S.; Cao, J.; Singh, K.; Chan, L.; Nadkarni, G.N.; Bihorac, A. Artificial intelligence-enabled decision support in nephrology. Nat. Rev. Nephrol. 2022, 18, 452–465.

- Xie, G.; Chen, T.; Li, Y.; Chen, T.; Li, X.; Liu, Z. Artificial Intelligence in Nephrology: How Can Artificial Intelligence Augment Nephrologists’ Intelligence? Kidney Dis. 2020, 6, 1–6.

- Kers, J.; Bulow, R.D.; Klinkhammer, B.M.; Breimer, G.E.; Fontana, F.; Abiola, A.A.; Hofstraat, R.; Corthals, G.L.; Peters-Sengers, H.; Djudjaj, S.; et al. Deep learning-based classification of kidney transplant pathology: A retrospective, multicentre, proof-of-concept study. Lancet Digit. Health 2022, 4, e18–e26.

- Farris, A.B.; Vizcarra, J.; Amgad, M.; Cooper, L.A.D.; Gutman, D.; Hogan, J. Artificial intelligence and algorithmic computational pathology: An introduction with renal allograft examples. Histopathology 2021, 78, 791–804.

- Yi, Z.; Salem, F.; Menon, M.C.; Keung, K.; Xi, C.; Hultin, S.; Haroon Al Rasheed, M.R.; Li, L.; Su, F.; Sun, Z.; et al. Deep learning identified pathological abnormalities predictive of graft loss in kidney transplant biopsies. Kidney Int. 2022, 101, 288–298.

- Decruyenaere, A.; Decruyenaere, P.; Peeters, P.; Vermassen, F.; Dhaene, T.; Couckuyt, I. Prediction of delayed graft function after kidney transplantation: Comparison between logistic regression and machine learning methods. BMC Med. Inf. Decis. Mak. 2015, 15, 83.

- Kawakita, S.; Beaumont, J.L.; Jucaud, V.; Everly, M.J. Personalized prediction of delayed graft function for recipients of deceased donor kidney transplants with machine learning. Sci. Rep. 2020, 10, 18409.

- Costa, S.D.; de Andrade, L.G.M.; Barroso, F.V.C.; de Oliveira, C.M.C.; Daher, E.D.F.; Fernandes, P.F.C.B.C.; Esmeraldo, R.d.M.; de Sandes-Freitas, T.V. The impact of deceased donor maintenance on delayed kidney allograft function: A machine learning analysis. PLoS ONE 2020, 15, e0228597.

- Raynaud, M.; Aubert, O.; Divard, G.; Reese, P.P.; Kamar, N.; Yoo, D.; Chin, C.S.; Bailly, E.; Buchler, M.; Ladriere, M.; et al. Dynamic prediction of renal survival among deeply phenotyped kidney transplant recipients using artificial intelligence: An observational, international, multicohort study. Lancet Digit. Health 2021, 3, e795–e805.

- Ginley, B.; Lutnick, B.; Jen, K.Y.; Fogo, A.B.; Jain, S.; Rosenberg, A.; Walavalkar, V.; Wilding, G.; Tomaszewski, J.E.; Yacoub, R.; et al. Computational Segmentation and Classification of Diabetic Glomerulosclerosis. J. Am. Soc. Nephrol. 2019, 30, 1953–1967.

- Hara, S.; Haneda, E.; Kawakami, M.; Morita, K.; Nishioka, R.; Zoshima, T.; Kometani, M.; Yoneda, T.; Kawano, M.; Karashima, S.; et al. Evaluating tubulointerstitial compartments in renal biopsy specimens using a deep learning-based approach for classifying normal and abnormal tubules. PLoS ONE 2022, 17, e0271161.

- Korfiatis, P.; Denic, A.; Edwards, M.E.; Gregory, A.V.; Wright, D.E.; Mullan, A.; Augustine, J.; Rule, A.D.; Kline, T.L. Automated Segmentation of Kidney Cortex and Medulla in CT Images: A Multisite Evaluation Study. J. Am. Soc. Nephrol. 2022, 33, 420–430.

- Sounderajah, V.; Ashrafian, H.; Golub, R.M.; Shetty, S.; De, F.J.; Hooft, L.; Moons, K.; Collins, G.; Moher, D.; Bossuyt, P.M.; et al. Developing a reporting guideline for artificial intelligence-centred diagnostic test accuracy studies: The STARD-AI protocol. BMJ Open 2021, 11, e047709.

- Bossuyt, P.M.; Reitsma, J.B.; Bruns, D.E.; Gatsonis, C.A.; Glasziou, P.P.; Irwig, L.; Lijmer, J.G.; Moher, D.; Rennie, D.; de Vet, H.C.W.; et al. STARD 2015: An updated list of essential items for reporting diagnostic accuracy studies. BMJ 2015, 351, h5527.

- Sounderajah, V.; Ashrafian, H.; Aggarwal, R.; De Fauw, J.; Denniston, A.K.; Greaves, F.; Karthikesalingam, A.; King, D.; Liu, X.; Markar, S.R.; et al. Developing specific reporting guidelines for diagnostic accuracy studies assessing AI interventions: The STARD-AI Steering Group. Nat. Med. 2020, 26, 807–808.

- Vasey, B.; Nagendran, M.; Campbell, B.; Clifton, D.A.; Collins, G.S.; Denaxas, S.; Denniston, A.K.; Faes, L.; Geerts, B.; Ibrahim, M.; et al. Reporting guideline for the early-stage clinical evaluation of decision support systems driven by artificial intelligence: DECIDE-AI. Nat. Med. 2022, 28, 924–933.

- Collins, G.S.; Moons, K.G.M. Reporting of artificial intelligence prediction models. Lancet 2019, 393, 1577–1579.

- Tripod Statement. Available online: https://www.tripod-statement.org/ (accessed on 20 February 2024).

- Finlayson, S.G.; Subbaswamy, A.; Singh, K.; Bowers, J.; Kupke, A.; Zittrain, J.; Kohane, I.S.; Saria, S. The Clinician and Dataset Shift in Artificial Intelligence. N. Engl. J. Med. 2021, 385, 283–286.

- Kelly, C.J.; Karthikesalingam, A.; Suleyman, M.; Corrado, G.; King, D. Key challenges for delivering clinical impact with artificial intelligence. BMC Med. 2019, 17, 195.

- Moher, D.; Hopewell, S.; Schulz, K.F.; Montori, V.; Gøtzsche, P.C.; Devereaux, P.J.; Elbourne, D.; Egger, M.; Altman, D.G. CONSORT 2010 explanation and elaboration: Updated guidelines for reporting parallel group randomised trials. Int. J. Surg. 2012, 10, 28–55.

- Yang, S.; Zhu, F.; Ling, X.; Liu, Q.; Zhao, P. Intelligent Health Care: Applications of Deep Learning in Computational Medicine. Front. Genet. 2021, 12, 607471.

- Liu, X.; Cruz, R.S.; Moher, D.; Calvert, M.J.; Denniston, A.K. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: The CONSORT-AI extension. Lancet Digit. Health 2020, 2, e537–e548.

- Kendall, T.J.; Robinson, M.; Brierley, D.J.; Lim, S.J.; O’Connor, D.J.; Shaaban, A.M.; Lewis, I.; Chan, A.W.; Harrison, D.J. Guidelines for cellular and molecular pathology content in clinical trial protocols: The SPIRIT-Path extension. Lancet Oncol. 2021, 22, e435–e445.

- Si, L.; Zhong, J.; Huo, J.; Xuan, K.; Zhuang, Z.; Hu, Y.; Wang, Q.; Zhang, H.; Yao, W. Deep learning in knee imaging: A systematic review utilizing a Checklist for Artificial Intelligence in Medical Imaging (CLAIM). Eur. Radiol. 2022, 32, 1353–1361.

- Belue, M.J.; Harmon, S.A.; Lay, N.S.; Daryanani, A.; Phelps, T.E.; Choyke, P.L.; Turkbey, B. The Low Rate of Adherence to Checklist for Artificial Intelligence in Medical Imaging Criteria Among Published Prostate MRI Artificial Intelligence Algorithms. J. Am. Coll. Radiol. 2023, 20, 134–145.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, 71.

- Cacciamani, G.E.; Chu, T.N.; Sanford, D.I.; Abreu, A.; Duddalwar, V.; Oberai, A.; Kuo, C.-C.J.; Liu, X.; Denniston, A.K.; Vasey, B.; et al. PRISMA AI reporting guidelines for systematic reviews and meta-analyses on AI in healthcare. Nat. Med. 2023, 29, 14–15.

- Campbell, M.; McKenzie, J.E.; Sowden, A.; Katikireddi, S.V.; Brennan, S.E.; Ellis, S.; Hartmann-Boyce, J.; Ryan, R.; Shepperd, S.; Thomas, J.; et al. Synthesis without meta-analysis (SWiM) in systematic reviews: Reporting guideline. BMJ 2020, 368, l6890.

- Hernandez-Boussard, T.; Bozkurt, S.; Ioannidis, J.P.A.; Shah, N.H. MINIMAR (MINimum Information for Medical AI Reporting): Developing reporting standards for artificial intelligence in health care. J. Am. Med. Inform. Assoc. 2020, 27, 2011–2015.

- Norgeot, B.; Quer, G.; Beaulieu-Jones, B.K.; Torkamani, A.; Dias, R.; Gianfrancesco, M.; Arnaout, R.; Kohane, I.S.; Saria, S.; Topol, E.; et al. Minimum information about clinical artificial intelligence modeling: The MI-CLAIM checklist. Nat. Med. 2020, 26, 1320–1324.

- Badrouchi, S.; Bacha, M.M.; Hedri, H.; Ben Abdallah, T.; Abderrahim, E. Toward generalizing the use of artificial intelligence in nephrology and kidney transplantation. J. Nephrol. 2022, 36, 1087–1100.

- Gonzales, A.; Guruswamy, G.; Smith, S.R. Synthetic data in health care: A narrative review. PLOS Digit. Health 2023, 2, e0000082.

- Guillaudeux, M.; Rousseau, O.; Petot, J.; Bennis, Z.; Dein, C.-A.; Goronflot, T.; Vince, N.; Limou, S.; Karakachoff, M.; Wargny, M.; et al. Patient-centric synthetic data generation, no reason to risk re-identification in biomedical data analysis. NPJ Digit. Med. 2023, 6, 37.

- AlQudah, A.A.; Al-Emran, M.; Shaalan, K. Technology Acceptance in Healthcare: A Systematic Review. Appl. Sci. 2021, 11, 10537.

- Choudhury, A.; Elkefi, S. Acceptance, initial trust formation, and human biases in artificial intelligence: Focus on clinicians. Front. Digit. Health 2022, 4, 966174.

- Goldstein, B.A.; Bedoya, A.D. Guiding Clinical Decisions Through Predictive Risk Rules. JAMA Netw. Open 2020, 3, e2013101.

- Sandhu, S.; Lin, A.L.; Brajer, N.; Sperling, J.; Ratliff, W.; Bedoya, A.D.; Balu, S.; O’Brien, C.; Sendak, M.P. Integrating a Machine Learning System into Clinical Workflows: Qualitative Study. J. Med. Internet Res. 2020, 22, e22421.

- Balczewski, E.A.; Cao, J.; Singh, K. Risk Prediction and Machine Learning: A Case-Based Overview. Clin. J. Am. Soc. Nephrol. 2023, 18, 524–526.

- Paranjape, K.; Schinkel, M.; Nannan, P.R.; Car, J.; Nanayakkara, P. Introducing Artificial Intelligence Training in Medical Education. JMIR Med. Educ. 2019, 5, e16048.

- A European Approach to Artificial Intelligence. Available online: https://digital-strategy.ec.europa.eu/en/policies/european-approach-artificial-intelligence (accessed on 20 February 2024).

- Meskó, B.; Topol, E.J. The imperative for regulatory oversight of large language models (or generative AI) in healthcare. NPJ Digit. Med. 2023, 6, 120.

| Key Box |
|---|
| Artificial intelligence: Artificial intelligence (AI) is a general term for the use of computers to model intelligent behavior with minimal human intervention. |
| Machine learning: Machine learning is a branch of AI that uses data and algorithms to imitate the way humans learn, gradually improving its accuracy. |
| Deep learning: Deep learning is a type of machine learning that uses artificial neural networks, which can learn extremely complex relationships between features and labels and have been shown to exceed human performance on some complex tasks [7]. |
| Ground truth: The correct or “true” answer to a specific problem or question. In the biomedical field, it is a “gold standard” guideline, expert opinion, or clinically proven outcome against which model results can be compared and evaluated. |
| Black box algorithms: Algorithms whose results cannot readily be explained or justified; for example, the outcomes identified by a trained neural network are often hard to explain even when its predictions are highly accurate [8]. |
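The ground-truth entry implies a concrete evaluation step: model outputs are compared case by case with reference labels. Below is a minimal sketch for a binary outcome. It assumes labels coded as 1 (condition present) and 0 (absent), and the names `BinaryMetrics` and `evaluate_against_ground_truth` are hypothetical. Published studies would additionally report confidence intervals and whatever further metrics the chosen reporting guideline requires.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BinaryMetrics:
    sensitivity: float  # true positives / all ground-truth positives
    specificity: float  # true negatives / all ground-truth negatives
    accuracy: float     # correct predictions / all cases


def evaluate_against_ground_truth(truth, predicted):
    """Compare binary model predictions with ground-truth labels (1 = condition present)."""
    if len(truth) != len(predicted):
        raise ValueError("truth and predicted must have the same length")
    if not truth:
        raise ValueError("at least one case is required")
    pairs = list(zip(truth, predicted))
    tp = sum(1 for t, p in pairs if t and p)
    tn = sum(1 for t, p in pairs if not t and not p)
    fp = sum(1 for t, p in pairs if not t and p)
    fn = sum(1 for t, p in pairs if t and not p)
    # NaN when a class is absent from the ground truth, so the metric is undefined
    sensitivity = tp / (tp + fn) if tp + fn else float("nan")
    specificity = tn / (tn + fp) if tn + fp else float("nan")
    return BinaryMetrics(sensitivity, specificity, (tp + tn) / len(pairs))


# Hypothetical example: reference labels vs. model output for 8 cases
labels = [1, 1, 1, 0, 0, 0, 0, 0]
model_output = [1, 1, 0, 0, 0, 1, 0, 0]
print(evaluate_against_ground_truth(labels, model_output))
```

In this toy example, the model detects 2 of the 3 ground-truth positives and correctly rejects 4 of the 5 negatives. The quality of such numbers therefore depends directly on how the ground truth itself was established.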

| Name | Stage of Study | Application in Nephrology or Other Healthcare Fields | EQUATOR Reporting Guideline |
|---|---|---|---|
| TRIPOD-AI | Preclinical and clinical development | Extension of the TRIPOD guideline, used to report the development, validation, and updating of (diagnostic or prognostic) prediction models. | Yes |
| STARD-AI | Preclinical and clinical development | Extension of the STARD guideline, used to report diagnostic test accuracy studies or prediction model evaluations. | Yes |
| DECIDE-AI | Early-stage clinical evaluation | Used to report the early evaluation of AI systems as an intervention in live clinical settings (small-scale, formative evaluation), independent of the study design and AI system modality (diagnostic, prognostic, and/or therapeutic). | Yes |
| SPIRIT-AI | Comparative prospective evaluation | Extension of the SPIRIT guideline, used for clinical trial protocols. | Yes |
| CONSORT-AI | Comparative prospective evaluation | Extension of the CONSORT guideline, used to report clinical trials, mainly randomized trials. | Yes |
| PRISMA-AI | Systematic review analysis | Extension of the PRISMA guideline, used for systematic reviews and meta-analyses. | Yes |
| CLAIM | Medical image analysis | Extension of the STARD reporting guideline. CLAIM is used in AI medical imaging evaluations that include classification, image reconstruction, text analysis, and workflow optimization. CT and MRI images are widely used in AI studies of autosomal dominant polycystic kidney disease and renal cancer, but these studies have generally not adhered to the CLAIM guidelines. | Yes |
| MI-CLAIM | Minimal clinical AI modeling research | Designed to inform readers and users about how the AI algorithm was developed, validated, and comprehensively reported. The guideline is split into six parts: (1) study design; (2) separation of data into partitions for model training and model testing; (3) optimization and final model selection; (4) performance evaluation; (5) model examination; and (6) reproducible pipeline. | Yes |
| MINIMAR | Minimal healthcare AI modeling studies | The MINIMAR reporting guideline rests on four essential components: (1) study population and setting; (2) patient demographics; (3) model architecture; and (4) model evaluation. It can be applied to almost all healthcare studies. | No |
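As a rough aid for choosing among the guidelines in the table, the stage-to-guideline mapping can be encoded as a lookup, with a simple completeness check against the components of the two minimal checklists. This is a hypothetical sketch. The names `Stage`, `suggest_guidelines`, and `missing_items` are illustrative, and the stage labels paraphrase the table. Attaching MI-CLAIM and MINIMAR to every suggestion is an assumption of this sketch, based on their description as minimal checklists. The official checklists contain far more items than the component headings listed here, so this does not replace them.

```python
from enum import Enum


class Stage(Enum):
    """Study stages, paraphrasing the 'Stage of Study' column of the table."""
    DEVELOPMENT = "preclinical and clinical development"
    EARLY_CLINICAL = "early-stage clinical evaluation"
    COMPARATIVE = "comparative prospective evaluation"
    SYSTEMATIC_REVIEW = "systematic review analysis"
    IMAGING = "medical image analysis"


# Stage-specific guidelines, transcribed from the table
GUIDELINES_BY_STAGE = {
    Stage.DEVELOPMENT: ["TRIPOD-AI", "STARD-AI"],
    Stage.EARLY_CLINICAL: ["DECIDE-AI"],
    Stage.COMPARATIVE: ["SPIRIT-AI", "CONSORT-AI"],
    Stage.SYSTEMATIC_REVIEW: ["PRISMA-AI"],
    Stage.IMAGING: ["CLAIM"],
}

# Component headings of the minimal checklists, as listed in the table
MINIMAL_CHECKLISTS = {
    "MI-CLAIM": [
        "study design",
        "data partitions for training and testing",
        "optimization and final model selection",
        "performance evaluation",
        "model examination",
        "reproducible pipeline",
    ],
    "MINIMAR": [
        "study population and setting",
        "patient demographics",
        "model architecture",
        "model evaluation",
    ],
}


def suggest_guidelines(stages):
    """Stage-specific guidelines (in order, without duplicates) plus the minimal checklists."""
    suggestions = []
    for stage in stages:
        for name in GUIDELINES_BY_STAGE[stage]:
            if name not in suggestions:
                suggestions.append(name)
    # Assumption of this sketch: minimal checklists are always suggested
    return suggestions + list(MINIMAL_CHECKLISTS)


def missing_items(checklist, reported_items):
    """Checklist components not found (case-insensitively) among the reported items."""
    reported = {item.strip().lower() for item in reported_items}
    return [item for item in MINIMAL_CHECKLISTS[checklist] if item not in reported]


if __name__ == "__main__":
    # Hypothetical study: an imaging-based prediction model still in development
    print(suggest_guidelines([Stage.IMAGING, Stage.DEVELOPMENT]))
    print(missing_items("MINIMAR", ["Patient demographics", "Model evaluation"]))
```

A study can span several stages, which is why `suggest_guidelines` accepts a list and removes duplicates while preserving the order in which guidelines are first encountered.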

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Salybekov, A.A.; Wolfien, M.; Hahn, W.; Hidaka, S.; Kobayashi, S. Artificial Intelligence Reporting Guidelines’ Adherence in Nephrology for Improved Research and Clinical Outcomes. Biomedicines 2024, 12, 606. https://doi.org/10.3390/biomedicines12030606
