Early Prediction of Dementia Using Feature Extraction Battery (FEB) and Optimized Support Vector Machine (SVM) for Classification

Abstract

1. Introduction

Aim of Study

2. Materials and Methods

- Individuals who had a dementia diagnosis at the beginning of the study or before the ten-year mark.

- Individuals with missing data in the outcome column.

- Individuals with more than 10% of their data missing.

- Individuals who died well before the end of the ten-year study period.
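The four exclusion criteria above can be expressed as a single filtering pass. The sketch below is illustrative only: the source does not describe its data layout, so the field names `dx_before_10y`, `dementia_10y` and `died_well_before_10y` are hypothetical, each participant is assumed to be a dict, and `None` is assumed to mark a missing value.

```python
# Hypothetical field names: the source does not describe the data layout.
DX_BEFORE_10Y = "dx_before_10y"      # dementia diagnosed at baseline or before the ten-year mark
OUTCOME = "dementia_10y"             # outcome column (None = missing)
DIED_EARLY = "died_well_before_10y"  # died well before the end of the study period


def missing_fraction(record, feature_names):
    """Share of the listed features that are missing (None) in one record."""
    missing = sum(1 for name in feature_names if record.get(name) is None)
    return missing / len(feature_names)


def apply_exclusion_criteria(records, feature_names, max_missing=0.10):
    """Return only the records that pass all four exclusion criteria."""
    kept = []
    for record in records:
        if record.get(DX_BEFORE_10Y):
            continue  # already diagnosed at baseline or before the ten-year mark
        if record.get(OUTCOME) is None:
            continue  # missing outcome
        if missing_fraction(record, feature_names) > max_missing:
            continue  # more than 10% of the data missing
        if record.get(DIED_EARLY):
            continue  # died well before the end of the study period
        kept.append(record)
    return kept


# Toy example with ten features, so at most one missing value is tolerated.
features = [f"f{i}" for i in range(10)]
complete = {name: 1.0 for name in features}
records = [
    {"id": "a", OUTCOME: 0, **complete},
    {"id": "b", OUTCOME: 1, DX_BEFORE_10Y: True, **complete},
    {"id": "c", OUTCOME: None, **complete},
    {"id": "d", OUTCOME: 0, **complete, "f0": None, "f1": None},  # 20% missing
    {"id": "e", OUTCOME: 1, **complete, "f0": None},              # 10% missing
    {"id": "f", OUTCOME: 0, DIED_EARLY: True, **complete},
]
print([r["id"] for r in apply_exclusion_criteria(records, features)])  # ['a', 'e']
```

Note that the 10% threshold is applied as "strictly more than", matching the wording of the criterion, so a participant with exactly 10% missing values is kept.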

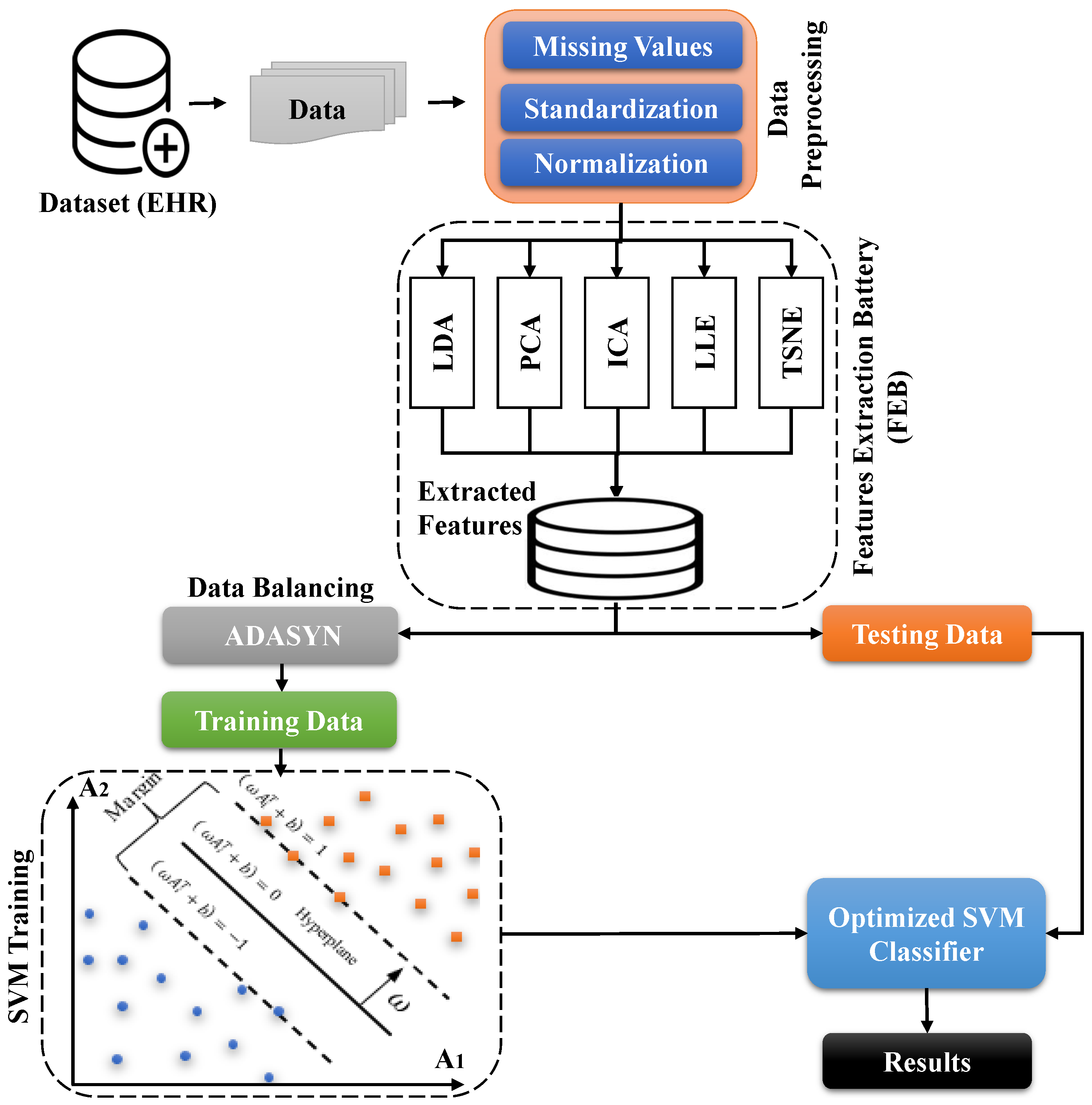

Proposed Work

3. Validation and Evaluation

4. Experimental Results

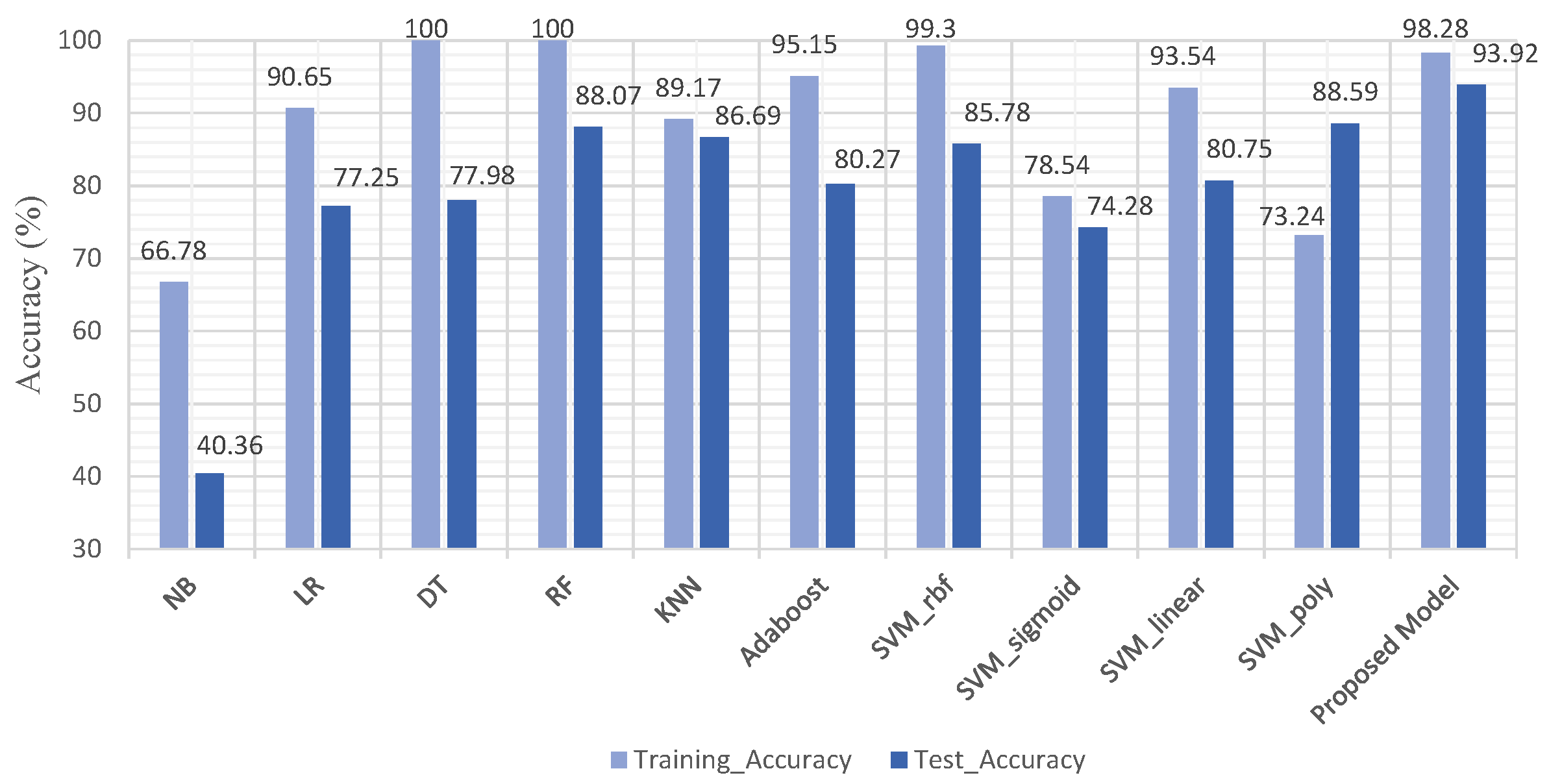

4.1. Experiment No. 1: Performance of ML Models Using All Features

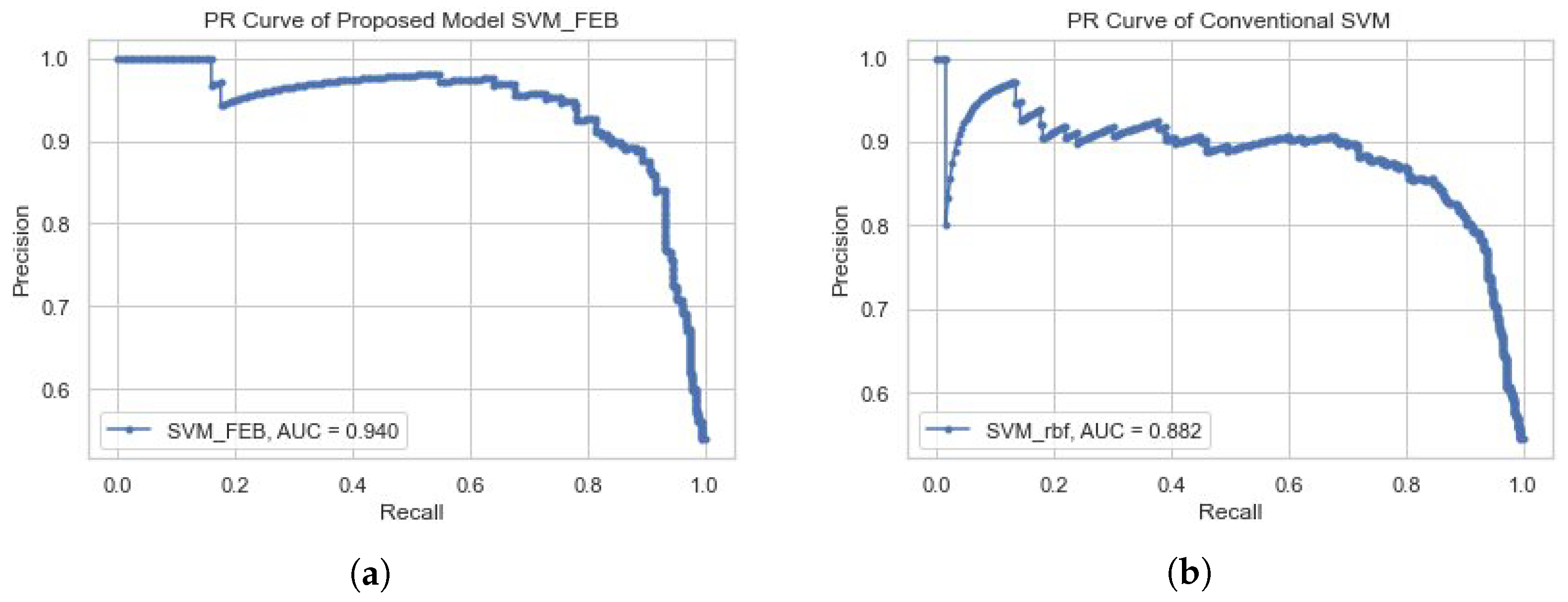

4.2. Experiment No. 2: Performance of the Proposed SVM-FEB Model

4.3. Experiment No. 3: Performance of ML Models Based on FEB

4.4. Comparison of Dementia Prediction Methods

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Lo, R.Y. The borderland between normal aging and dementia. Tzu-Chi Med. J. 2017, 29, 65.

2. Vrijsen, J.; Matulessij, T.; Joxhorst, T.; de Rooij, S.E.; Smidt, N. Knowledge, health beliefs and attitudes towards dementia and dementia risk reduction among the Dutch general population: A cross-sectional study. BMC Public Health 2021, 21, 857.

3. World Health Organization. Dementia. Available online: https://www.who.int/news-room/fact-sheets/detail/dementia (accessed on 21 December 2022).

4. Duchesne, S.; Caroli, A.; Geroldi, C.; Barillot, C.; Frisoni, G.B.; Collins, D.L. MRI-based automated computer classification of probable AD versus normal controls. IEEE Trans. Med. Imaging 2008, 27, 509–520.

5. Lai, C.L.; Lin, R.T.; Liou, L.M.; Liu, C.K. The role of event-related potentials in cognitive decline in Alzheimer’s disease. Clin. Neurophysiol. 2010, 121, 194–199.

6. Patel, T.; Polikar, R.; Davatzikos, C.; Clark, C.M. EEG and MRI data fusion for early diagnosis of Alzheimer’s disease. In Proceedings of the 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–25 August 2008; pp. 1757–1760.

7. Patnode, C.D.; Perdue, L.A.; Rossom, R.C.; Rushkin, M.C.; Redmond, N.; Thomas, R.G.; Lin, J.S. Screening for cognitive impairment in older adults: Updated evidence report and systematic review for the US Preventive Services Task Force. JAMA 2020, 323, 764–785.

8. Javeed, A.; Rizvi, S.S.; Zhou, S.; Riaz, R.; Khan, S.U.; Kwon, S.J. Heart risk failure prediction using a novel feature selection method for feature refinement and neural network for classification. Mob. Inf. Syst. 2020, 2020, 884315.

9. Javeed, A.; Zhou, S.; Yongjian, L.; Qasim, I.; Noor, A.; Nour, R. An intelligent learning system based on random search algorithm and optimized random forest model for improved heart disease detection. IEEE Access 2019, 7, 180235–180243.

10. Javeed, A.; Khan, S.U.; Ali, L.; Ali, S.; Imrana, Y.; Rahman, A. Machine learning-based automated diagnostic systems developed for heart failure prediction using different types of data modalities: A systematic review and future directions. Comput. Math. Methods Med. 2022, 2022, 9288452.

11. Ali, L.; Zhu, C.; Golilarz, N.A.; Javeed, A.; Zhou, M.; Liu, Y. Reliable Parkinson’s disease detection by analyzing handwritten drawings: Construction of an unbiased cascaded learning system based on feature selection and adaptive boosting model. IEEE Access 2019, 7, 116480–116489.

12. Akbar, W.; Wu, W.-p.; Saleem, S.; Farhan, M.; Saleem, M.A.; Javeed, A.; Ali, L. Development of Hepatitis Disease Detection System by Exploiting Sparsity in Linear Support Vector Machine to Improve Strength of AdaBoost Ensemble Model. Mob. Inf. Syst. 2020, 2020, 8870240.

13. Javeed, A.; Ali, L.; Mohammed Seid, A.; Ali, A.; Khan, D.; Imrana, Y. A Clinical Decision Support System (CDSS) for Unbiased Prediction of Caesarean Section Based on Features Extraction and Optimized Classification. Comput. Intell. Neurosci. 2022, 2022, 1901735.

14. Salem, F.A.; Chaaya, M.; Ghannam, H.; Al Feel, R.E.; El Asmar, K. Regression based machine learning model for dementia diagnosis in a community setting. Alzheimers Dement. 2021, 17, e053839.

15. Dallora, A.L.; Minku, L.; Mendes, E.; Rennemark, M.; Anderberg, P.; Sanmartin Berglund, J. Multifactorial 10-year prior diagnosis prediction model of dementia. Int. J. Environ. Res. Public Health 2020, 17, 6674.

16. Garcia-Gutierrez, F.; Delgado-Alvarez, A.; Delgado-Alonso, C.; Díaz-Álvarez, J.; Pytel, V.; Valles-Salgado, M.; Gil, M.J.; Hernández-Lorenzo, L.; Matías-Guiu, J.; Ayala, J.L.; et al. Diagnosis of Alzheimer’s disease and behavioural variant frontotemporal dementia with machine learning-aided neuropsychological assessment using feature engineering and genetic algorithms. Int. J. Geriatr. Psychiatry 2022, 5667.

17. Mirzaei, G.; Adeli, H. Machine learning techniques for diagnosis of Alzheimer disease, mild cognitive disorder, and other types of dementia. Biomed. Signal Process. Control 2022, 72, 103293.

18. Hsiu, H.; Lin, S.K.; Weng, W.L.; Hung, C.M.; Chang, C.K.; Lee, C.C.; Chen, C.T. Discrimination of the Cognitive Function of Community Subjects Using the Arterial Pulse Spectrum and Machine-Learning Analysis. Sensors 2022, 22, 806.

19. Shahzad, A.; Dadlani, A.; Lee, H.; Kim, K. Automated Prescreening of Mild Cognitive Impairment Using Shank-Mounted Inertial Sensors Based Gait Biomarkers. IEEE Access 2022, 10, 15835.

20. World Health Organization. Dementia: A Public Health Priority; World Health Organization: Geneva, Switzerland, 2012.

21. Lagergren, M.; Fratiglioni, L.; Hallberg, I.R.; Berglund, J.; Elmstahl, S.; Hagberg, B.; Holst, G.; Rennemark, M.; Sjolund, B.M.; Thorslund, M.; et al. A longitudinal study integrating population, care and social services data. The Swedish National study on Aging and Care (SNAC). Aging Clin. Exp. Res. 2004, 16, 158–168.

22. Nunes, B.; Silva, R.D.; Cruz, V.T.; Roriz, J.M.; Pais, J.; Silva, M.C. Prevalence and pattern of cognitive impairment in rural and urban populations from Northern Portugal. BMC Neurol. 2010, 10, 42.

23. Killin, L.O.; Starr, J.M.; Shiue, I.J.; Russ, T.C. Environmental risk factors for dementia: A systematic review. BMC Geriatr. 2016, 16, 175.

24. Yu, J.T.; Xu, W.; Tan, C.C.; Andrieu, S.; Suckling, J.; Evangelou, E.; Pan, A.; Zhang, C.; Jia, J.; Feng, L.; et al. Evidence-based prevention of Alzheimer’s disease: Systematic review and meta-analysis of 243 observational prospective studies and 153 randomised controlled trials. J. Neurol. Neurosurg. Psychiatry 2020, 91, 1201–1209.

25. Arvanitakis, Z.; Shah, R.C.; Bennett, D.A. Diagnosis and management of dementia. JAMA 2019, 322, 1589–1599.

26. Zhang, S. Nearest neighbor selection for iteratively kNN imputation. J. Syst. Softw. 2012, 85, 2541–2552.

27. Loughrey, J.; Cunningham, P. Overfitting in wrapper-based feature subset selection: The harder you try the worse it gets. In Proceedings of the International Conference on Innovative Techniques and Applications of Artificial Intelligence, 13 December 2004; Springer: Cambridge, UK, 2004; pp. 33–43.

28. Pourtaheri, Z.K.; Zahiri, S.H. Ensemble classifiers with improved overfitting. In Proceedings of the 2016 1st Conference on Swarm Intelligence and Evolutionary Computation (CSIEC), Bam, Iran, 9–11 March 2016; pp. 93–97.

29. Alpaydin, E. Introduction to Machine Learning; MIT Press: Cambridge, MA, USA, 2020.

30. He, H.; Bai, Y.; Garcia, E.A.; Li, S. ADASYN: Adaptive synthetic sampling approach for imbalanced learning. In Proceedings of the 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–8 June 2008; pp. 1322–1328.

31. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

32. Javeed, A.; Dallora, A.L.; Berglund, J.S.; Anderberg, P. An Intelligent Learning System for Unbiased Prediction of Dementia Based on Autoencoder and Adaboost Ensemble Learning. Life 2022, 12, 1097.

33. Cho, P.C.; Chen, W.H. A double layer dementia diagnosis system using machine learning techniques. In Proceedings of the International Conference on Engineering Applications of Neural Networks, London, UK, 20–23 September 2012; pp. 402–412.

34. Gurevich, P.; Stuke, H.; Kastrup, A.; Stuke, H.; Hildebrandt, H. Neuropsychological testing and machine learning distinguish Alzheimer’s disease from other causes for cognitive impairment. Front. Aging Neurosci. 2017, 9, 114.

35. Stamate, D.; Alghamdi, W.; Ogg, J.; Hoile, R.; Murtagh, F. A machine learning framework for predicting dementia and mild cognitive impairment. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 671–678.

36. Visser, P.J.; Lovestone, S.; Legido-Quigley, C. A Metabolite-Based Machine Learning Approach to Diagnose Alzheimer-Type Dementia in Blood: Results from the European Medical Information Framework for Alzheimer Disease Biomarker Discovery Cohort; Wiley Online Library: Hoboken, NJ, USA, 2019.

37. Karaglani, M.; Gourlia, K.; Tsamardinos, I.; Chatzaki, E. Accurate blood-based diagnostic biosignatures for Alzheimer’s disease via automated machine learning. J. Clin. Med. 2020, 9, 3016.

38. Ryzhikova, E.; Ralbovsky, N.M.; Sikirzhytski, V.; Kazakov, O.; Halamkova, L.; Quinn, J.; Zimmerman, E.A.; Lednev, I.K. Raman spectroscopy and machine learning for biomedical applications: Alzheimer’s disease diagnosis based on the analysis of cerebrospinal fluid. Spectrochim. Acta Part A Mol. Biomol. Spectrosc. 2021, 248, 119188.

39. Javeed, A.; Dallora, A.L.; Berglund, J.S.; Arif, A.A.; Liaqata, L.; Anderberg, P. Machine Learning for Dementia Prediction: A Systematic Review and Future Research Directions. J. Med. Syst. 2023, 47, 1573.

40. Antonovsky, A. The structure and properties of the sense of coherence scale. Soc. Sci. Med. 1993, 36, 725–733.

41. Wechsler, D. WAIS-III Administration and Scoring Manual; The Psychological Corporation: San Antonio, TX, USA, 1997.

42. Livingston, G.; Blizard, B.; Mann, A. Does sleep disturbance predict depression in elderly people? A study in inner London. Br. J. Gen. Pract. 1993, 43, 445–448.

43. Brooks, R.; EuroQol Group. EuroQol: The current state of play. Health Policy 1996, 37, 53–72.

44. Katz, S. Assessing Self-maintenance: Activities of Daily Living, Mobility, and Instrumental Activities of Daily Living. J. Am. Geriatr. Soc. 1983, 31, 721–727.

45. Lawton, M.P.; Brody, E.M. Assessment of Older People: Self-Maintaining and Instrumental Activities of Daily Living. Gerontologist 1969, 9, 179–186.

46. Folstein, M.F.; Folstein, S.E.; McHugh, P.R. “Mini-Mental State”. A Practical Method for Grading the Cognitive State of Patients for the Clinician. J. Psychiatr. Res. 1975, 12, 189–198.

47. Agrell, B.; Dehlin, O. The clock-drawing test. Age Ageing 1998, 27, 399–403.

48. Jenkinson, C.; Layte, R. Development and testing of the UK SF-12 (short form health survey). J. Health Serv. Res. Policy 1997, 2, 14–18.

49. Montgomery, S.A.; Åsberg, M. A New Depression Scale Designed to be Sensitive to Change. Br. J. Psychiatry 1979, 134, 382–389.

| Age group (years) | Male | Female | Healthy subjects | Dementia cases |
|---|---|---|---|---|
| 60 | 82 | 82 | 164 | 2 |
| 66 | 75 | 95 | 170 | 6 |
| 72 | 50 | 74 | 124 | 10 |
| 78 | 41 | 50 | 91 | 17 |
| 81 | 35 | 46 | 81 | 19 |
| 84 | 26 | 42 | 68 | 22 |
| 87 | 4 | 19 | 23 | 14 |
| 90+ | 0 | 5 | 5 | 1 |
| Total | 313 | 413 | 726 | 91 |
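The table shows a strong class imbalance: 91 dementia cases against 726 in the healthy-subject column, roughly one case for every eight. The reference list cites ADASYN (He et al. [30]), and the comparison of methods marks the proposed model as balanced, but the text available here does not confirm which balancing procedure was applied. As an assumption, the following is a minimal stdlib sketch of the ADASYN idea: minority samples surrounded by more majority neighbours receive more synthetic neighbours, each created by interpolating towards a random minority neighbour. The function names and the uniform-weight fallback are choices made for this sketch.

```python
import math
import random


def k_nearest(idx, data, k):
    """Indices of the k rows of `data` closest to data[idx] (Euclidean), excluding idx."""
    others = [j for j in range(len(data)) if j != idx]
    others.sort(key=lambda j: math.dist(data[idx], data[j]))
    return others[:k]


def adasyn(X, y, minority=1, k=5, beta=1.0, seed=0):
    """Generate synthetic minority samples in the spirit of ADASYN (He et al., 2008)."""
    rng = random.Random(seed)
    min_idx = [i for i, label in enumerate(y) if label == minority]
    n_min, n_maj = len(min_idx), len(y) - len(min_idx)
    total_new = int((n_maj - n_min) * beta)  # G in He et al.
    if total_new <= 0 or n_min < 2:
        return []

    # Difficulty ratio r_i: share of majority samples among the k nearest neighbours in X.
    ratios = []
    for i in min_idx:
        neigh = k_nearest(i, X, k)
        ratios.append(sum(1 for j in neigh if y[j] != minority) / len(neigh))
    norm = sum(ratios)
    # Sketch-specific assumption: use uniform weights if every ratio is zero.
    weights = [r / norm for r in ratios] if norm > 0 else [1.0 / n_min] * n_min

    # Interpolate between each minority point and random minority neighbours.
    X_min = [X[i] for i in min_idx]
    k_min = min(k, n_min - 1)
    synthetic = []
    for pos, weight in enumerate(weights):
        neigh = k_nearest(pos, X_min, k_min)
        for _ in range(round(weight * total_new)):
            z = X_min[rng.choice(neigh)]
            lam = rng.random()  # interpolation factor drawn uniformly from [0, 1)
            synthetic.append([a + lam * (b - a) for a, b in zip(X_min[pos], z)])
    return synthetic


# Toy data: eight majority (label 0) and three minority (label 1) points.
X = [[0, 0], [0, 1], [1, 0], [1, 1], [0.5, 0.5], [0.2, 0.8], [0.8, 0.2], [0.3, 0.3],
     [0.9, 0.9], [5, 5], [5, 6]]
y = [0] * 8 + [1] * 3
new_points = adasyn(X, y, k=3)
print(len(new_points), new_points[:2])
```

Because per-sample counts are rounded, the number of synthetic points only approximately equals the class difference. Synthetic points should be added to the training split only; oversampling before the train/test split would leak interpolated copies of test individuals into training.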

| Feature class | Features | Count |
|---|---|---|
| Demographic | Age, Gender | 2 |
| Lifestyle | Light Exercise, Alcohol Consumption, Alcohol Quantity, Work Status, Physical Workload, Present Smoker, Past Smoker, Number of Cigarettes a Day, Social Activities, Physically Demanding Activities, Leisure Activities | 11 |
| Social | Education, Religious Belief, Religious Activities, Voluntary Association, Social Network, Support Network, Loneliness | 7 |
| Physical Examination | Body Mass Index (BMI), Pain in the last 4 weeks, Heart Rate Sitting, Heart Rate Lying, Blood Pressure on the Right Arm, Hand Strength in Right Arm in a 10 s Interval, Hand Strength in Left Arm in a 10 s Interval, Feeling of Safety from Rising from a Chair, Assessment of Rising from a Chair, Single-Leg Standing with Right Leg, Single-Leg Standing with Left Leg, Dental Prosthesis, Number of Teeth | 13 |
| Psychological | Memory Loss, Memory Decline, Memory Decline 2, Abstract Thinking, Personality Change, Sense of Identity | 6 |
| Health Instruments | Sense of Coherence, Digit Span Test, Backwards Digit Span Test, Livingston Index, EQ5D Test, Activities of Daily Living, Instrumental Activities of Daily Living, Mini-Mental State Examination, Clock Drawing Test, Mental Composite Score of the SF-12 Health Survey, Physical Composite Score of the SF-12 Health Survey, Comprehensive Psychopathological Rating Scale | 12 |
| Medical History | Number of Medications, Family History of Importance, Myocardial Infarction, Arrhythmia, Heart Failure, Stroke, TIA/RIND, Diabetes Type 1, Diabetes Type 2, Thyroid Disease, Cancer, Epilepsy, Atrial Fibrillation, Cardiovascular Ischemia, Parkinson’s Disease, Depression, Other Psychiatric Diseases, Snoring, Sleep Apnea, Hip Fracture, Head Trauma, Developmental Disabilities, High Blood Pressure | 22 |
| Biochemical Test | Hemoglobin Analysis, C-Reactive Protein Analysis | 2 |
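The exclusion criteria still admit individuals with up to 10% of their data missing, so some of the features listed above can contain gaps. The reference list includes a kNN imputation method (Zhang [26]); the source text available here does not confirm how gaps were filled. As an assumption, the sketch below shows plain k-nearest-neighbour imputation, not the iterative variant of [26]: each missing value is replaced by the mean of that feature over the k closest rows that observe it. It assumes numeric, already-scaled features with `None` marking a gap.

```python
import math


def knn_impute(rows, k=3):
    """Return a copy of `rows` with None entries replaced by the mean of k donor rows."""
    filled = [list(row) for row in rows]
    for i, row in enumerate(rows):
        missing = [c for c, v in enumerate(row) if v is None]
        if not missing:
            continue
        candidates = []
        for j, other in enumerate(rows):
            # A donor must be a different row that observes every feature missing here.
            if j == i or any(other[c] is None for c in missing):
                continue
            shared = [c for c, v in enumerate(row) if v is not None and other[c] is not None]
            if not shared:
                continue
            # Root-mean-square distance over the features both rows observe.
            dist = math.sqrt(sum((row[c] - other[c]) ** 2 for c in shared) / len(shared))
            candidates.append((dist, j))
        candidates.sort()
        donors = [j for _, j in candidates[:k]]
        if donors:  # rows without any donor are left unchanged
            for c in missing:
                filled[i][c] = sum(rows[j][c] for j in donors) / len(donors)
    return filled


data = [
    [1.0, 2.0, 3.0],
    [1.1, 2.1, None],
    [0.9, 1.9, 2.9],
    [10.0, 20.0, 30.0],
]
print(knn_impute(data, k=2)[1])
```

Here the two nearest donors of the second row are the first and third rows, so the gap is filled with the mean of 3.0 and 2.9. Scaling matters because unscaled features with large ranges would dominate the distance.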

| Model | Train accuracy (%) | Test accuracy (%) | Precision (%) | Recall (%) | F1 score (%) | MCC | 95% CI |
|---|---|---|---|---|---|---|---|
| NB | 66.78 | 40.36 | 56.00 | 63.00 | 40.00 | 0.1695 | 0.77, 0.87 |
| LR | 90.65 | 77.25 | 55.00 | 57.00 | 77.00 | 0.1124 | 0.82, 0.91 |
| DT | 100 | 77.98 | 49.00 | 49.00 | 78.00 | 0.1157 | 0.78, 0.88 |
| RF | 100 | 88.07 | 55.00 | 51.00 | 88.00 | 0.4058 | 0.85, 0.93 |
| KNN | 89.17 | 86.69 | 51.00 | 50.00 | 87.00 | 0.3851 | 0.82, 0.92 |
| AdaBoost | 95.15 | 80.27 | 66.00 | 51.00 | 80.00 | 0.1834 | 0.83, 0.91 |
| SVM_rbf | 99.30 | 85.78 | 88.00 | 56.00 | 86.00 | 0.2131 | 0.84, 0.93 |
| SVM_sigmoid | 78.54 | 74.28 | 56.00 | 63.00 | 74.00 | 0.1986 | 0.83, 0.92 |
| SVM_linear | 93.54 | 80.75 | 58.00 | 61.00 | 81.00 | 0.2042 | 0.82, 0.91 |
| SVM_poly | 73.24 | 88.59 | 45.00 | 50.00 | 89.00 | 0.2331 | 0.83, 0.92 |
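The metric columns in the table follow the usual confusion-matrix definitions, with MCC = (TP·TN − FP·FN) / √((TP+FP)(TP+FN)(TN+FP)(TN+FN)). The sketch below computes them for a binary task, assuming dementia is the positive class (label 1). The source does not state whether precision, recall and F1 are binary, macro-averaged or weighted scores, nor which statistic the 95% CI column describes or how it was obtained; the percentile bootstrap included here is one common choice and is an assumption of this sketch.

```python
import math
import random


def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FP, TN, FN) for a binary task."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, F1 and MCC for the positive class (0 when undefined)."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred, positive)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall,
            "f1": f1, "mcc": mcc}


def bootstrap_ci(y_true, y_pred, metric="accuracy", n_boot=1000, alpha=0.05,
                 seed=0, positive=1):
    """Percentile bootstrap interval for one metric, resampling test cases with replacement."""
    rng = random.Random(seed)
    n = len(y_true)
    stats = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        sample = binary_metrics([y_true[i] for i in idx], [y_pred[i] for i in idx], positive)
        stats.append(sample[metric])
    stats.sort()
    lower = stats[int(alpha / 2 * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
print(binary_metrics(y_true, y_pred))
print(bootstrap_ci(y_true, y_pred))
```

MCC is reported because, with roughly eight healthy subjects per dementia case, accuracy alone can look high for a model that rarely predicts the minority class; MCC stays near zero in that situation.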

| Model | Hyperparameters | Train accuracy (%) | Test accuracy (%) | Precision (%) | Recall (%) | F1 score (%) | MCC |
|---|---|---|---|---|---|---|---|
| SVM_rbf | C: 100, G: 0.1 | 99.77 | 90.88 | 92.39 | 87.62 | 89.50 | 0.4178 |
| SVM_rbf | C: 10, G: 0.1 | 97.48 | 91.16 | 92.81 | 86.59 | 90.00 | 0.4216 |
| SVM_rbf | C: 100, G: 0.1 | 99.88 | 91.79 | 92.22 | 85.56 | 91.00 | 0.4487 |
| SVM_rbf | C: 10, G: 1 | 98.28 | 91.83 | 91.80 | 86.59 | 89.12 | 0.4387 |
| SVM_rbf | C: 10, G: 1 | 98.50 | 92.46 | 89.67 | 85.05 | 90.00 | 0.4725 |
| SVM_rbf | C: 300, G: 0.01 | 95.46 | 92.02 | 93.88 | 87.11 | 92.00 | 0.4747 |
| SVM_rbf | C: 10, G: 1 | 100 | 92.70 | 89.37 | 95.36 | 92.00 | 0.4852 |
| SVM_rbf | C: 10, G: 0.01 | 98.74 | 92.95 | 93.82 | 86.08 | 92.50 | 0.4810 |
| SVM_rbf | C: 100, G: 0.1 | 98.41 | 93.31 | 92.34 | 87.11 | 93.00 | 0.4853 |
| SVM_rbf | C: 10, G: 0.1 | 98.28 | 93.92 | 91.80 | 86.59 | 89.12 | 0.4987 |
| SVM_linear | C: 0.1, G: 1 | 84.54 | 82.99 | 96.15 | 77.31 | 85.71 | 0.3630 |
| SVM_sigmoid | C: 10, G: 0.001 | 83.23 | 82.97 | 96.52 | 71.64 | 82.00 | 0.3359 |
| SVM_poly | C: 1, G: 1 | 88.23 | 84.57 | 95.75 | 90.28 | 84.00 | 0.3732 |
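The SVM rows vary two hyperparameters, C and G. Assuming G denotes the kernel coefficient γ (the naming used by common SVM libraries; the source does not define the abbreviation), both enter the standard soft-margin SVM of Cortes and Vapnik [31] with an RBF kernel as shown below, where φ is the implicit feature map, ξ_i are slack variables and α_i are the dual coefficients:

$$
\min_{\mathbf{w},\,b,\,\boldsymbol{\xi}} \; \frac{1}{2}\lVert \mathbf{w} \rVert^{2} + C \sum_{i=1}^{n} \xi_i
\quad \text{s.t.} \quad y_i\bigl(\mathbf{w}^{\top}\phi(\mathbf{x}_i) + b\bigr) \ge 1 - \xi_i,\quad \xi_i \ge 0
$$

$$
K(\mathbf{x}_i, \mathbf{x}_j) = \phi(\mathbf{x}_i)^{\top}\phi(\mathbf{x}_j) = \exp\bigl(-\gamma \lVert \mathbf{x}_i - \mathbf{x}_j \rVert^{2}\bigr)
$$

$$
f(\mathbf{x}) = \operatorname{sign}\Bigl(\sum_{i=1}^{n} \alpha_i y_i K(\mathbf{x}_i, \mathbf{x}) + b\Bigr), \qquad 0 \le \alpha_i \le C
$$

A larger C penalises margin violations ξ_i more heavily, and a larger γ makes each kernel term more local; both let the decision boundary follow the training data more closely, which tends to raise training accuracy. For a linear kernel, γ plays no role in common implementations such as scikit-learn's `SVC`, so if such an implementation was used, the G value in the SVM_linear row would have no effect.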

| Model | Hyperparameters | Train accuracy (%) | Test accuracy (%) | Precision (%) | Recall (%) | F1 score (%) | MCC |
|---|---|---|---|---|---|---|---|
| NB | Var: 0.006 | 79.40 | 75.22 | 62.00 | 77.00 | 75.00 | 0.3642 |
| LR | C: 100, S: newton, p: l2 | 84.46 | 78.36 | 64.00 | 79.00 | 78.00 | 0.3954 |
| DT | D: 3, E: 4 | 87.80 | 86.00 | 96.02 | 74.74 | 85.00 | 0.3374 |
| RF | D: 10, Ne: 100, E: 1 | 97.95 | 90.15 | 94.79 | 84.53 | 89.00 | 0.4286 |
| KNN | Lf: 1, K: 1, P: 2 | 100 | 90.54 | 92.98 | 81.95 | 87.00 | 0.4324 |
| AdaBoost | Lr: 1, Ne: 10 | 86.77 | 85.63 | 79.38 | 86.76 | 86.00 | 0.3567 |
| SVM_linear | C: 0.1, G: 1 | 84.54 | 84.99 | 96.15 | 77.31 | 85.71 | 0.3630 |
| SVM_sigmoid | C: 10, G: 0.001 | 83.23 | 82.97 | 96.52 | 71.64 | 82.00 | 0.3359 |
| SVM_poly | C: 1, G: 1 | 88.23 | 84.57 | 95.75 | 90.28 | 84.00 | 0.3732 |
| SVM_rbf | C: 10, G: 0.1 | 98.28 | 93.92 | 91.80 | 86.59 | 89.12 | 0.4987 |
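The tables report tuned hyperparameters for every model, but the text available here does not describe the search procedure. The sketch below shows one common option, exhaustive grid search scored by k-fold cross-validation, using only the standard library. `fit_predict` is a hypothetical callback standing in for any model (for an SVM it would receive a grid such as `{"C": [...], "gamma": [...]}`), and the folds are contiguous and unshuffled for simplicity; with only 91 dementia cases, a real run would shuffle and stratify the folds so each one contains positive cases.

```python
from itertools import product


def kfold_indices(n, k):
    """Split range(n) into k contiguous folds whose sizes differ by at most one."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cv_accuracy(X, y, params, fit_predict, k=5):
    """Pooled accuracy over k folds; every sample is predicted exactly once."""
    correct = 0
    for test_idx in kfold_indices(len(y), k):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(y)) if i not in held_out]
        preds = fit_predict(
            [X[i] for i in train_idx], [y[i] for i in train_idx],
            [X[i] for i in test_idx], params,
        )
        correct += sum(1 for p, i in zip(preds, test_idx) if p == y[i])
    return correct / len(y)


def grid_search(X, y, param_grid, fit_predict, k=5):
    """Evaluate every combination in param_grid; return (best_params, best_score)."""
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = cv_accuracy(X, y, params, fit_predict, k)
        if score > best_score:  # strict '>' keeps the first combination on ties
            best_params, best_score = params, score
    return best_params, best_score


# Toy stand-in for a real model: a fixed threshold "t" on the first feature.
def threshold_model(X_train, y_train, X_test, params):
    return [1 if x[0] > params["t"] else 0 for x in X_test]


X = [[i] for i in range(10)]
y = [1 if i >= 5 else 0 for i in range(10)]
print(grid_search(X, y, {"t": [2, 4.5, 7]}, threshold_model))  # ({'t': 4.5}, 1.0)
```

Selecting hyperparameters on the same split that is later reported as the test score makes that score optimistic, so the held-out test set should stay outside the search.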

| Study (year) | Method | Accuracy (%) | Balancing |
|---|---|---|---|
| P. C. Cho and W. H. Chen (2012) [33] | PNNs | 83.00 | No |
| P. Gurevich et al. (2017) [34] | SVM | 89.00 | Yes |
| D. Stamate et al. (2018) [35] | Gradient Boosting | 88.00 | Yes |
| Visser et al. (2019) [36] | XGBoost + RF | 88.00 | No |
| Dallora et al. (2020) [15] | DT | 74.50 | Yes |
| M. Karaglani et al. (2020) [37] | RF | 84.60 | No |
| E. Ryzhikova et al. (2021) [38] | ANN + SVM | 84.00 | No |
| F. A. Salem et al. (2021) [14] | RF | 88.00 | Yes |
| F. Garcia-Gutierrez et al. (2022) [16] | GA | 84.00 | No |
| G. Mirzaei and H. Adeli (2022) [17] | MLP | 70.32 | No |
| A. Shahzad et al. (2022) [19] | SVM | 71.67 | No |
| A. Javeed et al. (2022) [32] | Autoencoder + AdaBoost | 90.23 | Yes |
| Proposed model (2023) | FEB + SVM | 93.92 | Yes |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Javeed, A.; Dallora, A.L.; Berglund, J.S.; Idrisoglu, A.; Ali, L.; Rauf, H.T.; Anderberg, P. Early Prediction of Dementia Using Feature Extraction Battery (FEB) and Optimized Support Vector Machine (SVM) for Classification. Biomedicines 2023, 11, 439. https://doi.org/10.3390/biomedicines11020439
