A Systematic Review of Deep-Learning Methods for Intracranial Aneurysm Detection in CT Angiography

Abstract

1. Introduction

2. Materials and Methods

2.1. Literature Search

2.2. Selection Criteria

2.3. Data Extraction and Quality Assessment

2.4. Data Synthesis and Statistical Analysis

3. Results

3.1. Characteristics of Included Studies

3.2. Test Dataset Characteristics

3.3. Sensitivity Analysis

3.4. Specificity Analysis

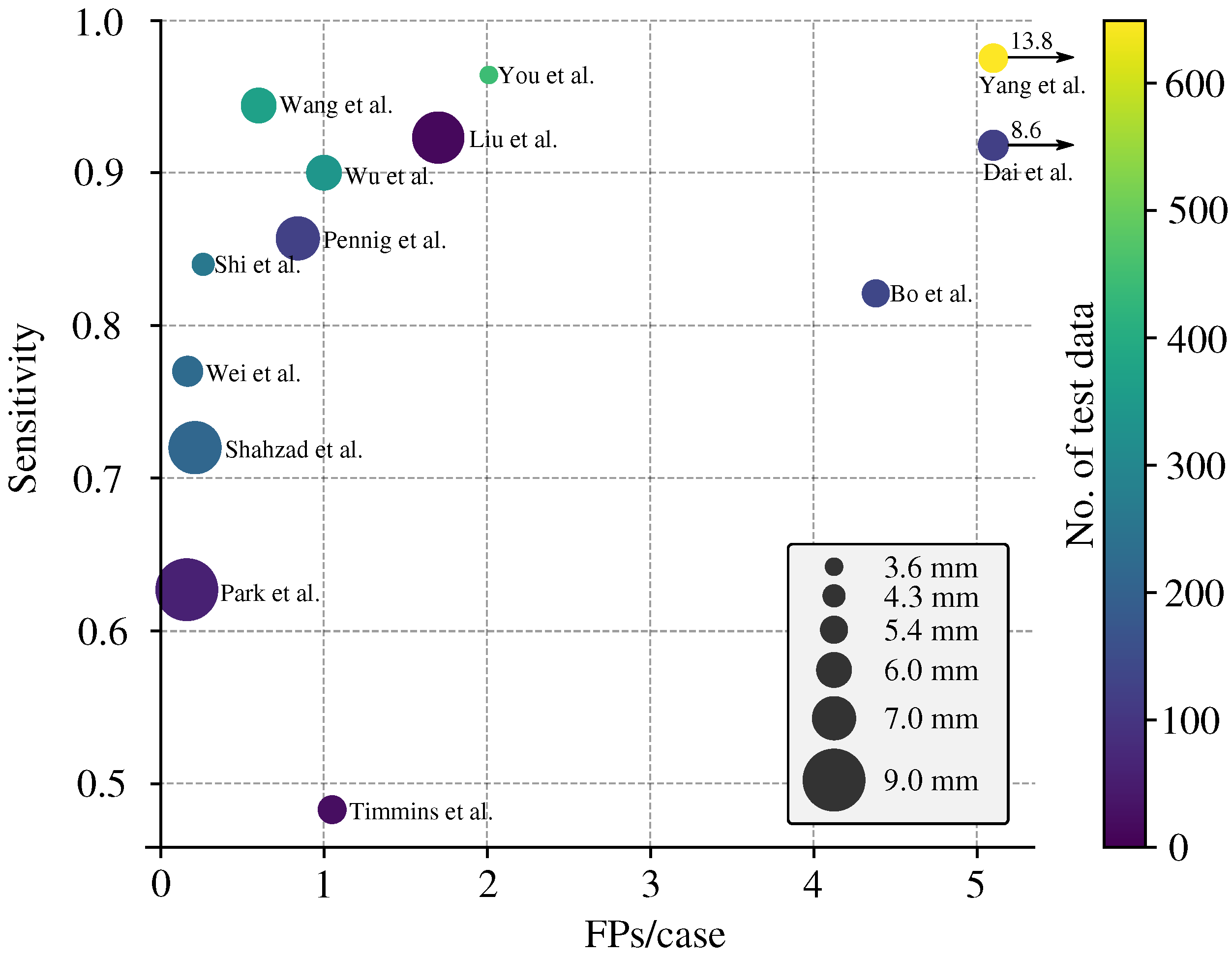

3.5. Enhanced FROC Curve

4. Discussion

4.1. Summary of Findings

4.2. Limitations of Reviewed Studies

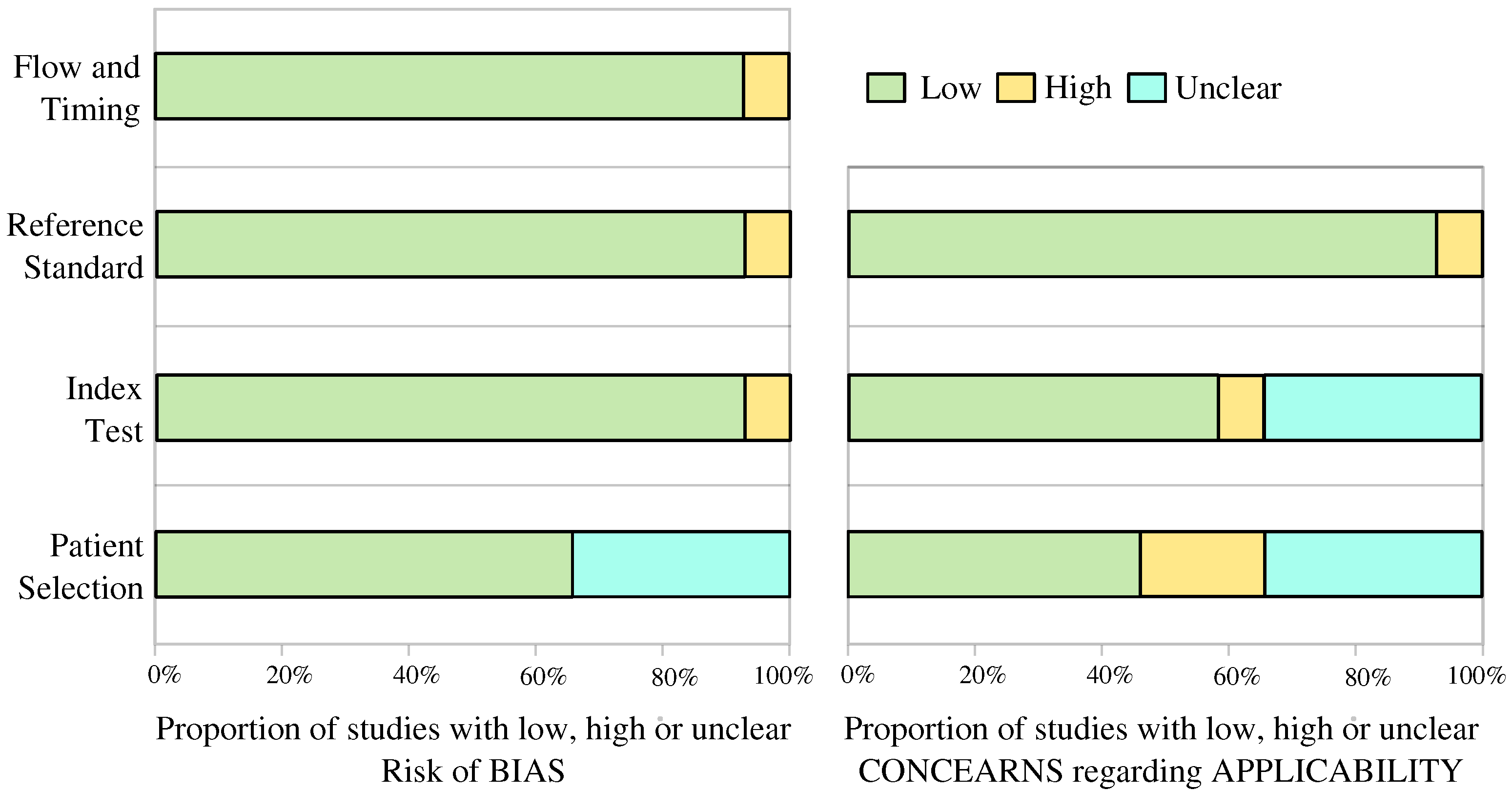

4.3. Bias and Applicability Assessment

4.4. Current State of the Art Performance

4.5. Size-Based Lesion-Level Sensitivity

4.6. Guidelines on Evaluation Metrics

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DLM | Deep-learning model |
| CTA | Computed tomography angiography |
| MRA | Magnetic resonance angiography |
| DSA | Digital subtraction angiography |
| FPs/image | False positives per image |
| FROC | Free-response receiver operating characteristic |
| IA | Intracranial aneurysm |

Appendix A

Appendix B

Appendix C

| Study | Risk of Bias: Patient Selection | Risk of Bias: Index Test | Risk of Bias: Reference Standard | Risk of Bias: Flow and Timing | Applicability Concerns: Patient Selection | Applicability Concerns: Index Test | Applicability Concerns: Reference Standard |
|---|---|---|---|---|---|---|---|
| Wang et al. [19] |  |  |  |  |  | ? |  |
| Liu et al. [20] |  |  |  |  | ? |  |  |
| You et al. [21] |  |  |  |  |  |  |  |
| Wu et al. [22] | ? |  |  |  | ? |  |  |
| Wei et al. [23] | ? |  |  |  | ? |  |  |
| Bo et al. [24] | ? |  |  |  |  |  |  |
| Pennig et al. [9] |  |  |  |  | ? |  |  |
| Meng et al. [31] |  |  |  |  |  |  |  |
| Yang et al. [8] |  |  |  |  |  |  |  |
| Shahzad et al. [25] |  |  |  |  | ? |  |  |
| Shi et al. [26] |  |  |  |  |  |  |  |
| Dai et al. [27] | ? |  |  |  |  | ? |  |
| Park et al. [28] |  |  |  |  |  | ? |  |
| Timmins et al. [29] | ? |  |  |  |  | ? |  |
| Heit et al. [30] |  |  |  |  |  | ? |  |

Low Risk; ? Unclear Risk; High Risk.

References

1. Van Gijn, J.; Kerr, R.S.; Rinkel, G.J. Subarachnoid haemorrhage. Lancet 2007, 369, 306–318.
2. Etminan, N.; Chang, H.S.; Hackenberg, K.; De Rooij, N.K.; Vergouwen, M.D.; Rinkel, G.J.; Algra, A. Worldwide incidence of aneurysmal subarachnoid hemorrhage according to region, time period, blood pressure, and smoking prevalence in the population: A systematic review and meta-analysis. JAMA Neurol. 2019, 76, 588–597.
3. Hoh, B.L.; Ko, N.U.; Amin-Hanjani, S.; Chou, S.H.Y.; Cruz-Flores, S.; Dangayach, N.S.; Derdeyn, C.P.; Du, R.; Hänggi, D.; Hetts, S.W.; et al. 2023 Guideline for the management of patients with aneurysmal subarachnoid hemorrhage: A guideline from the American Heart Association/American Stroke Association. Stroke 2023, 54, e314–e370.
4. Siyanaki, M.R.H.; Lucke-Wold, B.; Khan, M. Exploration of treatments for subarachnoid hemorrhage. J. Biomed Res. 2022, 3, 48.
5. Rodriguez-Régent, C.; Edjlali-Goujon, M.; Trystram, D.; Boulouis, G.; Ben Hassen, W.; Godon-Hardy, S.; Nataf, F.; Machet, A.; Legrand, L.; Ladoux, A.; et al. Non-invasive diagnosis of intracranial aneurysms. Diagn. Interv. Imaging 2014, 95, 1163–1174.
6. Li, M.H.; Li, Y.D.; Gu, B.X.; Cheng, Y.S.; Wang, W.; Tan, H.Q.; Chen, Y.C. Accurate Diagnosis of Small Cerebral Aneurysms ≤5 mm in Diameter with 3.0-T MR Angiography. Radiology 2014, 271, 553–560.
7. Din, M.; Agarwal, S.; Grzeda, M.; Wood, D.A.; Modat, M.; Booth, T.C. Detection of cerebral aneurysms using artificial intelligence: A systematic review and meta-analysis. J. Neurointerv. Surg. 2023, 15, 262–271.
8. Yang, J.; Xie, M.; Hu, C.; Alwalid, O.; Xu, Y.; Liu, J.; Jin, T.; Li, C.; Tu, D.; Liu, X.; et al. Deep learning for detecting cerebral aneurysms with CT angiography. Radiology 2021, 298, 155–163.
9. Pennig, L.; Hoyer, U.C.I.; Krauskopf, A.; Shahzad, R.; Jünger, S.T.; Thiele, F.; Laukamp, K.R.; Grunz, J.P.; Perkuhn, M.; Schlamann, M.; et al. Deep learning assistance increases the detection sensitivity of radiologists for secondary intracranial aneurysms in subarachnoid hemorrhage. Neuroradiology 2021, 27, 1281–1286.
10. McDonald, R.J.; Schwartz, K.M.; Eckel, L.J.; Diehn, F.E.; Hunt, C.H.; Bartholmai, B.J.; Erickson, B.J.; Kallmes, D.F. The effects of changes in utilization and technological advancements of cross-sectional imaging on radiologist workload. Acad. Radiol. 2015, 22, 1191–1198.
11. Arimura, H.; Li, Q.; Korogi, Y.; Hirai, T.; Abe, H.; Yamashita, Y.; Katsuragawa, S.; Ikeda, R.; Doi, K. Automated computerized scheme for detection of unruptured intracranial aneurysms in three-dimensional magnetic resonance angiography. Acad. Radiol. 2004, 11, 1093–1104.
12. Liu, X.; Faes, L.; Kale, A.U.; Wagner, S.K.; Fu, D.J.; Bruynseels, A.; Mahendiran, T.; Moraes, G.; Shamdas, M.; Kern, C.; et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: A systematic review and meta-analysis. Lancet Digit. Health 2019, 1, e271–e297.
13. Gu, F.; Wu, X.; Wu, W.; Wang, Z.; Yang, X.; Chen, Z.; Wang, Z.; Chen, G. Performance of deep learning in the detection of intracranial aneurysm: A systematic review and meta-analysis. Eur. J. Radiol. 2022, 155, 110457.
14. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Int. J. Surg. 2021, 88, 105906.
15. Bossuyt, P.; Davenport, C.; Deeks, J.; Hyde, C.; Leeflang, M.; Scholten, R. Cochrane Handbook for Systematic Reviews of Diagnostic Test Accuracy; Cochrane Collab: London, UK, 2013.
16. Macaskill, P.; Gatsonis, C.; Deeks, J.; Harbord, R.; Takwoingi, Y. Cochrane Handbook for Systematic Reviews of Diagnostic Test Accuracy; John Wiley & Sons: Chichester, UK, 2010.
17. Wang, J.; Zhu, H.; Wang, S.H.; Zhang, Y.D. A review of deep learning on medical image analysis. Mob. Netw. Appl. 2021, 26, 351–380.
18. Whiting, P.F.; Rutjes, A.W.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.; Sterne, J.A.; Bossuyt, P.M.; QUADAS-2 Group. QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536.
19. Wang, J.; Sun, J.; Xu, J.; Lu, S.; Wang, H.; Huang, C.; Zhang, F.; Yu, Y.; Gao, X.; Wang, M.; et al. Detection of intracranial aneurysms using multiphase CT angiography with a deep learning model. Acad. Radiol. 2023, 30, 2477–2486.
20. Liu, X.; Mao, J.; Sun, N.; Yu, X.; Chai, L.; Tian, Y.; Wang, J.; Liang, J.; Tao, H.; Yuan, L.; et al. Deep Learning for Detection of Intracranial Aneurysms from Computed Tomography Angiography Images. J. Digit. Imaging 2023, 36, 114–123.
21. You, W.; Sun, Y.; Feng, J.; Wang, Z.; Li, L.; Chen, X.; Lv, J.; Tang, Y.; Deng, D.; Wei, D.; et al. Protocol and Preliminary Results of the Establishment of Intracranial Aneurysm Database for Artificial Intelligence Application Based on CTA Images. Front. Neurol. 2022, 13, 932933.
22. Wu, K.; Gu, D.; Qi, P.; Cao, X.; Wu, D.; Chen, L.; Qu, G.; Wang, J.; Pan, X.; Wang, X.; et al. Evaluation of an automated intracranial aneurysm detection and rupture analysis approach using cascade detection and classification networks. Comput. Med. Imaging Graph. 2022, 102, 102126.
23. Wei, X.; Jiang, J.; Cao, W.; Yu, H.; Deng, H.; Chen, J.; Bai, S.; Zhou, Z. Artificial intelligence assistance improves the accuracy and efficiency of intracranial aneurysm detection with CT angiography. Eur. J. Radiol. 2022, 149, 110169.
24. Bo, Z.H.; Qiao, H.; Tian, C.; Guo, Y.; Li, W.; Liang, T.; Li, D.; Liao, D.; Zeng, X.; Mei, L.; et al. Toward human intervention-free clinical diagnosis of intracranial aneurysm via deep neural network. Patterns 2021, 2, 100197.
25. Shahzad, R.; Pennig, L.; Goertz, L.; Thiele, F.; Kabbasch, C.; Schlamann, M.; Krischek, B.; Maintz, D.; Perkuhn, M.; Borggrefe, J. Fully automated detection and segmentation of intracranial aneurysms in subarachnoid hemorrhage on CTA using deep learning. Sci. Rep. 2020, 10, 21799.
26. Shi, Z.; Miao, C.; Schoepf, U.J.; Savage, R.H.; Dargis, D.M.; Pan, C.; Chai, X.; Li, X.L.; Xia, S.; Zhang, X.; et al. A clinically applicable deep-learning model for detecting intracranial aneurysm in computed tomography angiography images. Nat. Commun. 2020, 11, 6090.
27. Dai, X.; Huang, L.; Qian, Y.; Xia, S.; Chong, W.; Liu, J.; Di Ieva, A.; Hou, X.; Ou, C. Deep learning for automated cerebral aneurysm detection on computed tomography images. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 715–723.
28. Park, A.; Chute, C.; Rajpurkar, P.; Lou, J.; Ball, R.L.; Shpanskaya, K.; Jabarkheel, R.; Kim, L.H.; McKenna, E.; Tseng, J.; et al. Deep learning–assisted diagnosis of cerebral aneurysms using the HeadXNet model. JAMA Netw. Open 2019, 2, e195600.
29. Timmins, K.M.; Van der Schaaf, I.C.; Vos, I.N.; Ruigrok, Y.M.; Velthuis, B.K.; Kuijf, H.J. Geometric deep learning using vascular surface meshes for modality-independent unruptured intracranial aneurysm detection. IEEE Trans. Med. Imaging 2023.
30. Heit, J.J.; Honce, J.M.; Yedavalli, V.S.; Baccin, C.E.; Tatit, R.T.; Copeland, K.; Timpone, V.M. RAPID Aneurysm: Artificial intelligence for unruptured cerebral aneurysm detection on CT angiography. J. Stroke Cerebrovasc. Dis. 2022, 31, 106690.
31. Meng, C.; Yang, D.; Chen, D. Cerebral aneurysm image segmentation based on multi-modal convolutional neural network. Comput. Methods Programs Biomed. 2021, 208, 106285.
32. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
33. Shafiq, M.; Gu, Z. Deep Residual Learning for Image Recognition: A Survey. Appl. Sci. 2022, 12, 8972.
34. Kamnitsas, K.; Ledig, C.; Newcombe, V.F.; Simpson, J.P.; Kane, A.D.; Menon, D.K.; Rueckert, D.; Glocker, B. Efficient multi-scale 3D CNN with fully connected CRF for accurate brain lesion segmentation. Med. Image Anal. 2017, 36, 61–78.

| Study | Publication Year | Modalities | Data Source | Deep Learning Model | Control Group | Data Availability |
|---|---|---|---|---|---|---|
| Wang et al. [19] | 2023 | CTA | multicenter | DAResUNet | yes | no |
| Liu et al. [20] | 2023 | CTA | multicenter | DeepMedic | no | U.R. |
| You et al. [21] | 2022 | CTA | multicenter | 3D-UNet | no | U.R. |
| Wu et al. [22] | 2022 | CTA | multicenter | Dual-channel ResNet | no | U.R. |
| Wei et al. [23] | 2022 | CTA | single center | ResUNet | no | no |
| Bo et al. [24] | 2021 | CTA | multicenter | GLIA-NET | yes | yes |
| Pennig et al. [9] | 2021 | CTA | single center | DeepMedic | no | U.R. |
| Meng et al. [31] | 2021 | CTA | single center | N.R. | no | no |
| Yang et al. [8] | 2021 | CTA | multicenter | 3D DLN-OR | yes | no |
| Shahzad et al. [25] | 2020 | CTA | single center | DeepMedic | no | no |
| Shi et al. [26] | 2020 | DSA, CTA | multicenter | DAResUNet | yes | no |
| Dai et al. [27] | 2020 | CTA | single center | ResNet | no | no |
| Park et al. [28] | 2019 | CTA | single center | HeadXNet | yes | no |
| Timmins et al. [29] | 2023 | CTA, MRA | single center | MESH CNN | no | no |
| Heit et al. [30] | 2022 | CTA | multicenter | N.R. | no | no |

| Study | No. of CTA Scans | No. of IA (Train/Test) | Lesion-Level Sensitivity | Patient-Level Specificity | FPs per Image | Average Size | Size Split |
|---|---|---|---|---|---|---|---|
| Wang et al. [19] | 1547 | 2037 (1667/175 + 195 ★) | 0.944 | N.R. | 0.6 | N.R. | yes |
| Liu et al. [20] | 90 | 112 (98/13) | 0.923 | N.R. | 1.7 | 7.9 | yes |
| You et al. [21] | 2272 | 2938 (2492/446) | 0.964 | N.R. | 2.01 | 3.6 | yes |
| Wu et al. [22] | 1508 | 1710 (1370/340) | 0.900 | N.R. | 1 | 6.0 | no |
| Wei et al. [23] | 212 | 224 (/224) | 0.77 | N.R. | 0.165 | 5.4 | yes |
| Bo et al. [24] | 1476 | 1590 (1363/126 + 101 ★) | 0.821 | N.R. | 4.38 | 5.0 | yes |
| Pennig et al. [9] | 172 | 205 (79/126) | 0.857 | N.R. | 0.84 | R.V. | no |
| Meng et al. [31] | 100 | N.R. | N.R. | N.R. | N.R. | N.R. | no |
| Yang et al. [8] | 1068 | 1543 (688/649 + 206 ★) | 0.975 | N.R. | 13.8 | 5.2 | yes |
| Shahzad et al. [25] | 253 | 294 (79/215) | 0.72 | N.R. | 0.21 | R.V. | no |
| Shi et al. [26] | 1313 | 1676 (1099/314 + 263 ★) | 0.84 | 0.71 | 0.26 | 4.3 | no |
| Dai et al. [27] | 311 | 344 (222/122) | 0.918 | N.R. | 8.6 | 5.4 | yes |
| Park et al. [28] | 818 | 328 (269/59) | 0.627 | 0.06 | 0.16 | no | no |
| Timmins et al. [29] | 20 | 25 (†/25) | 0.483 | N.R. | 1.05 | 5.1 | yes |
| Heit et al. [30] | 51 | 60 (0/60) | 0.95 | N.R. | N.R. | 5.4 | no |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Bizjak, Ž.; Špiclin, Ž. A Systematic Review of Deep-Learning Methods for Intracranial Aneurysm Detection in CT Angiography. Biomedicines 2023, 11, 2921. https://doi.org/10.3390/biomedicines11112921
