Digital Analysis of Sit-to-Stand in Masters Athletes, Healthy Old People, and Young Adults Using a Depth Sensor

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

2.1.1. Participants

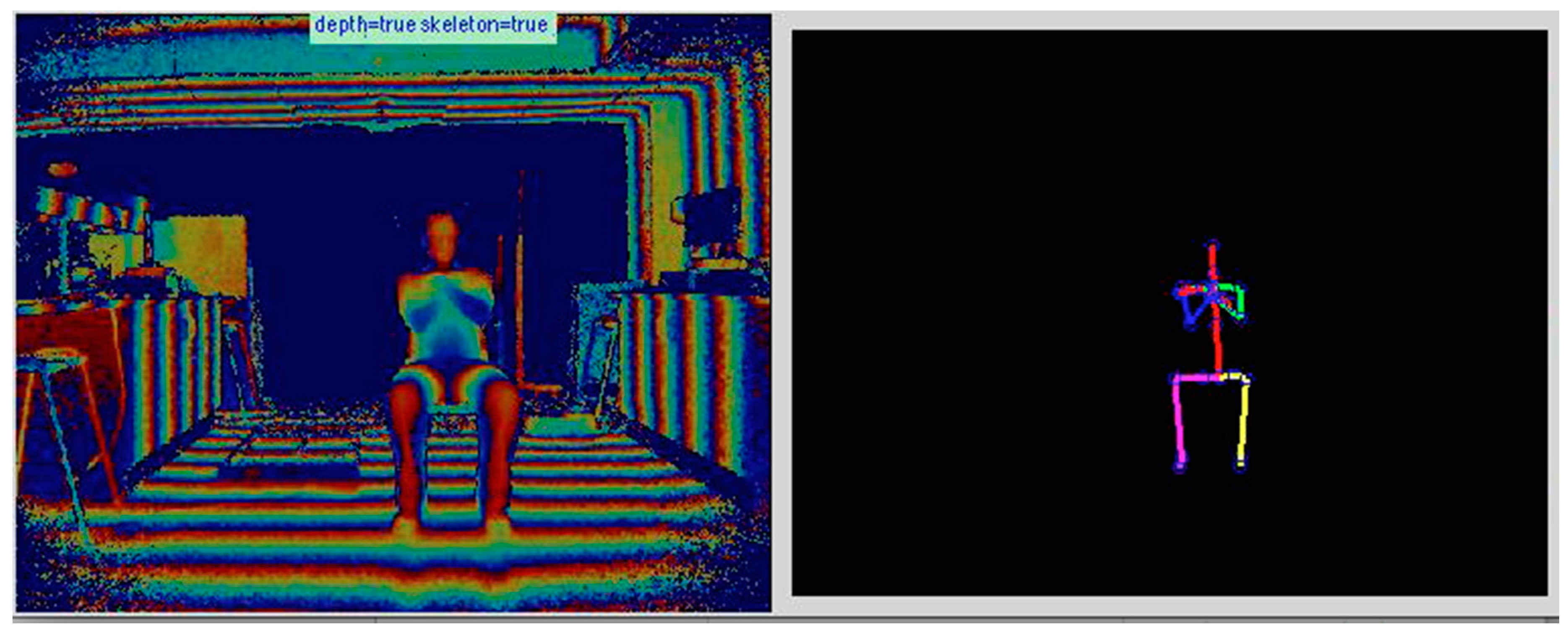

2.1.2. Data Acquisition

2.1.3. Data Labelling

- Peak of each sit-to-stand phase: The coder was asked to locate the local minimum and maximum of each standing and sitting repetition.

- Start and end of each sit-to-stand phase: The coder was asked to identify where they judged the start of the sit-to-stand and the end of the stand-to-sit to be.

- Outlier frames: The K3Da dataset labels each frame as either ‘good’ or ‘outlier’; coders reassessed these labels to check agreement.
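As an illustration of how agreement between the dataset's frame labels and a coder's labels could be quantified, the sketch below computes raw percent agreement and Cohen's kappa. This section does not state which agreement statistic was used, so the choice of kappa, the function names, and the example labels are all assumptions made for illustration.

```python
from collections import Counter


def percent_agreement(a, b):
    """Fraction of frames on which two label sequences agree."""
    if len(a) != len(b) or not a:
        raise ValueError("label sequences must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Chance-corrected agreement between two label sequences."""
    po = percent_agreement(a, b)
    n = len(a)
    counts_a, counts_b = Counter(a), Counter(b)
    # Expected agreement if both raters labelled independently.
    pe = sum(counts_a[k] * counts_b[k] for k in set(counts_a) | set(counts_b)) / (n * n)
    if pe == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (po - pe) / (1.0 - pe)


# Hypothetical labels for four frames (not taken from K3Da).
dataset_labels = ["good", "good", "outlier", "good"]
coder_labels = ["good", "outlier", "outlier", "good"]

agreement = percent_agreement(dataset_labels, coder_labels)
kappa = cohens_kappa(dataset_labels, coder_labels)
```

Percent agreement is easy to read but is inflated when one label dominates, which is likely when most frames are 'good'; kappa corrects for that.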

2.2. Assessment Framework

2.2.1. Phase 1: Outlier Detection

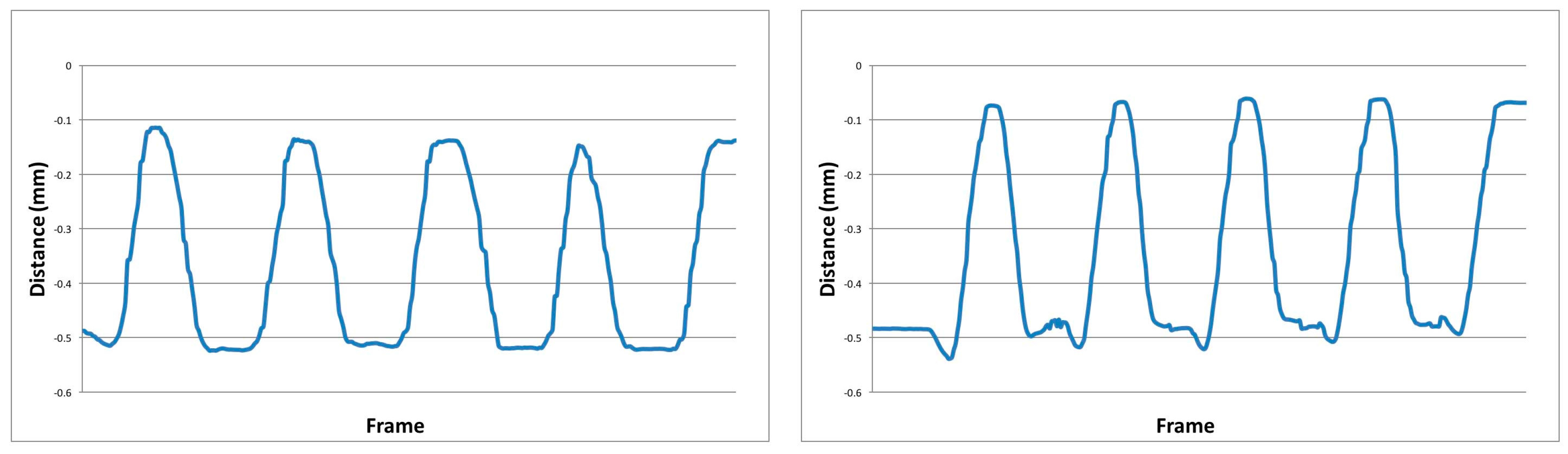

2.2.2. Phase 2: Feature Generation

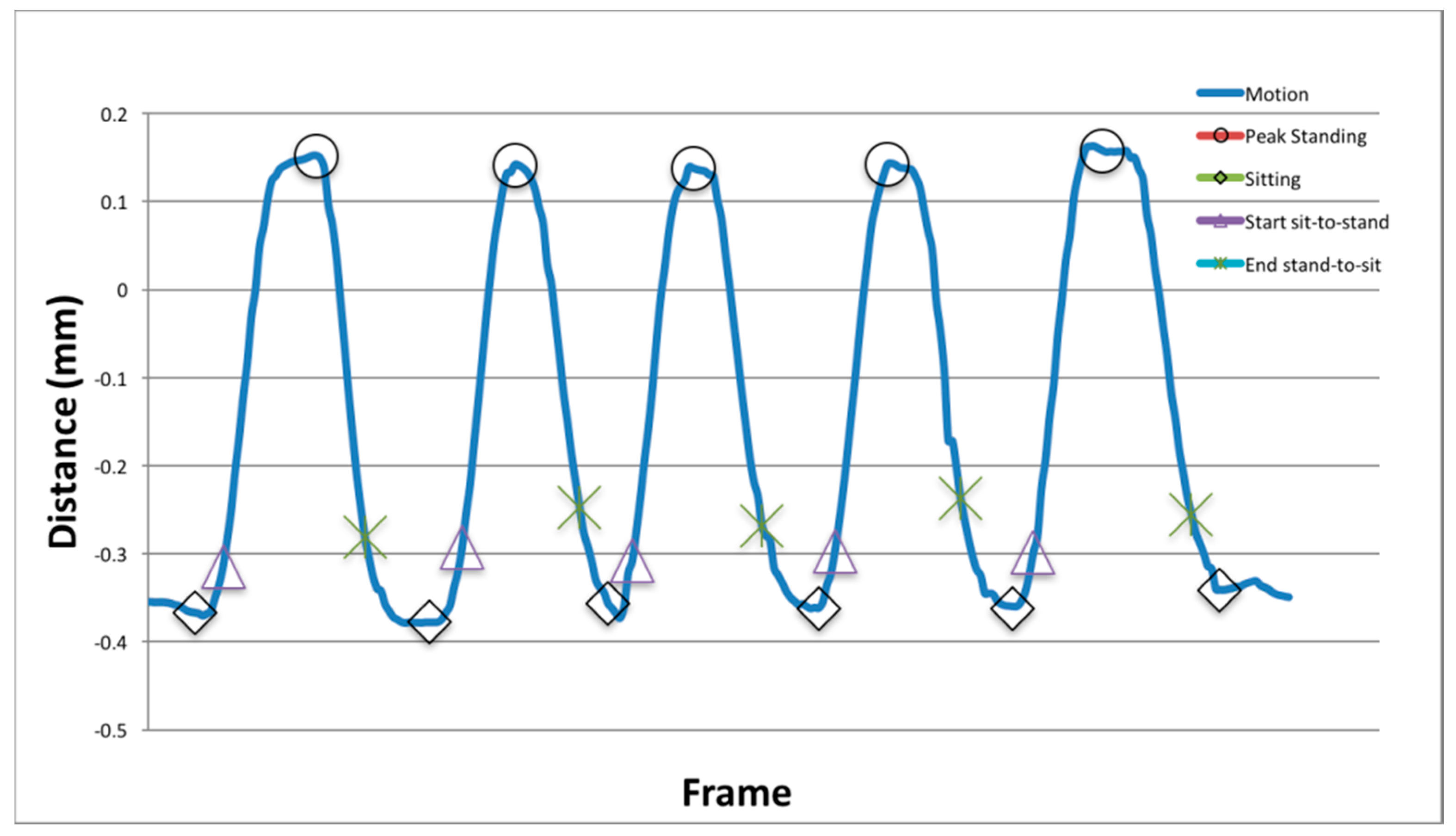

2.2.3. Phase 3: Transition Detection

- Peak-standing and sitting: The y-axis (vertical) component of the centre-of-mass (CoM) feature was low-pass filtered using a Butterworth filter with a normalised cut-off frequency of six frames. Peak standing and peak sitting points were then detected as the local maxima and minima of the filtered vector, with the minima located as maxima of the inverted signal.

- Start and end of each sit-to-stand phase: The start of the sit-to-stand motion was defined as the first vertical increase above a threshold set at the vertical mean plus 15%. The end was defined as the final stand-to-sit decrease below the vertical mean minus 15%.

- Limitation: The maximum number of completed transitions was set at five, the number required for the sit-to-stand test, meaning that any repetitions a participant performed beyond the fifth were not used in computation.
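The three steps above can be sketched as follows. This is a minimal illustration, not the authors' implementation. It assumes a second-order Butterworth filter applied forward and backward, a cut-off `wn` given as a fraction of the Nyquist frequency (the value 0.2 is a placeholder, not a mapping of the "six frames" setting), and thresholds computed as the mean multiplied by 1.15 and 0.85. The function names and the synthetic signal are hypothetical.

```python
import math


def butterworth_lowpass(x, wn):
    """Zero-phase second-order Butterworth low-pass filter.

    wn is the cut-off as a fraction of the Nyquist frequency (0 < wn < 1).
    The filter order and the forward-backward pass are assumptions.
    """
    k = math.tan(math.pi * wn / 2.0)
    norm = 1.0 / (1.0 + math.sqrt(2.0) * k + k * k)
    b0 = k * k * norm
    b1, b2 = 2.0 * b0, b0
    a1 = 2.0 * (k * k - 1.0) * norm
    a2 = (1.0 - math.sqrt(2.0) * k + k * k) * norm

    def one_pass(s):
        # Initialise the state as if the first sample had been held forever.
        x1 = x2 = y1 = y2 = s[0]
        out = []
        for xn in s:
            yn = b0 * xn + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
            x2, x1 = x1, xn
            y2, y1 = y1, yn
            out.append(yn)
        return out

    # Filter forward, then backward, so the phase delays cancel.
    return one_pass(one_pass(list(x))[::-1])[::-1]


def local_maxima(x):
    """Indices of interior samples strictly higher than both neighbours."""
    return [i for i in range(1, len(x) - 1) if x[i - 1] < x[i] > x[i + 1]]


def detect_transitions(com_y, wn=0.2, margin=0.15, max_reps=5):
    y = butterworth_lowpass(com_y, wn)
    standing = local_maxima(y)[:max_reps]    # keep at most five repetitions
    sitting = local_maxima([-v for v in y])  # minima via the inverted signal
    mean = sum(y) / len(y)
    upper, lower = mean * (1 + margin), mean * (1 - margin)
    # First upward crossing of the upper threshold.
    start = next((i for i in range(1, len(y)) if y[i - 1] <= upper < y[i]), None)
    # Last downward crossing of the lower threshold.
    end = next((i for i in range(len(y) - 1, 0, -1) if y[i - 1] >= lower > y[i]), None)
    return {"standing": standing, "sitting": sitting, "start": start, "end": end}


# Synthetic vertical CoM: five repetitions of 60 frames, 0.6 m seated, 1.0 m standing.
com_y = [0.8 - 0.2 * math.cos(2 * math.pi * i / 60) for i in range(301)]
result = detect_transitions(com_y)
```

Note that `local_maxima` only inspects interior samples, so the seated positions at the very first and last frame are not reported as peak-sitting points in this sketch. A percentage of the mean also behaves poorly when the vertical coordinate is close to zero or negative, so the threshold definition would need care with raw sensor coordinates.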

2.3. Statistical Analyses

- Stand Time (s): The time taken from each peak-sitting to the following peak-standing.

- Sit Time (s): The time taken from each peak-standing to the following peak-sitting.

- CoM Stand ML (cm) and AP (cm): The mediolateral (ML) and anteroposterior (AP) CoM movement observed from each peak-sitting to peak-standing.

- CoM Sit ML (cm) and AP (cm): The mediolateral (ML) and anteroposterior (AP) CoM movement observed from each peak-standing to peak-sitting.

- Stand UfV (m/s): The velocity observed from each peak-sitting to peak-standing.

- Sit UfV (m/s): The velocity observed from each peak-standing to peak-sitting.

- Stand UBFA (deg): The angle of the torso observed from each peak-sitting to peak-standing.

- Sit UBFA (deg): The angle of the torso observed from each peak-standing to peak-sitting.

- Total Time (s): The time from the first peak-sitting to the last peak-sitting (five repetitions).
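The timing features in this list follow directly from the detected peak indices. The sketch below shows one way to compute them, assuming a frame rate of 30 frames per second (not stated in this section) and six peak-sitting points bracketing five peak-standing points. The velocity here is a mean vertical speed between peaks; whether that matches the UfV definition is an assumption, and all names are hypothetical.

```python
import math

FPS = 30.0  # assumed frame rate; not stated in this section


def sts_features(sitting, standing, com_y, fps=FPS):
    """Timing features from alternating peak indices.

    sitting:  peak-sitting frame indices (one more than standing)
    standing: peak-standing frame indices
    com_y:    vertical CoM per frame, in metres
    """
    if not standing or len(sitting) != len(standing) + 1:
        raise ValueError("expected one more peak-sitting than peak-standing")
    stand_times, sit_times, stand_vel, sit_vel = [], [], [], []
    for k, up in enumerate(standing):
        s0, s1 = sitting[k], sitting[k + 1]
        if not s0 < up < s1:
            raise ValueError("peaks must alternate sit, stand, sit")
        t_stand = (up - s0) / fps  # peak-sitting to peak-standing
        t_sit = (s1 - up) / fps    # peak-standing to peak-sitting
        stand_times.append(t_stand)
        sit_times.append(t_sit)
        # Mean vertical speed: height change divided by elapsed time.
        stand_vel.append((com_y[up] - com_y[s0]) / t_stand)
        sit_vel.append((com_y[up] - com_y[s1]) / t_sit)

    def mean(values):
        return sum(values) / len(values)

    return {
        "stand_time_s": mean(stand_times),
        "sit_time_s": mean(sit_times),
        "stand_speed_m_s": mean(stand_vel),
        "sit_speed_m_s": mean(sit_vel),
        "total_time_s": (sitting[-1] - sitting[0]) / fps,
    }


# Synthetic example: five 60-frame repetitions between 0.6 m and 1.0 m.
com_y = [0.8 - 0.2 * math.cos(2 * math.pi * i / 60) for i in range(301)]
features = sts_features(
    sitting=[0, 60, 120, 180, 240, 300],
    standing=[30, 90, 150, 210, 270],
    com_y=com_y,
)
```

Averaging per-repetition values gives one number per participant, which is the form needed for the group comparison tables.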

3. Results

3.1. Outlier Detection

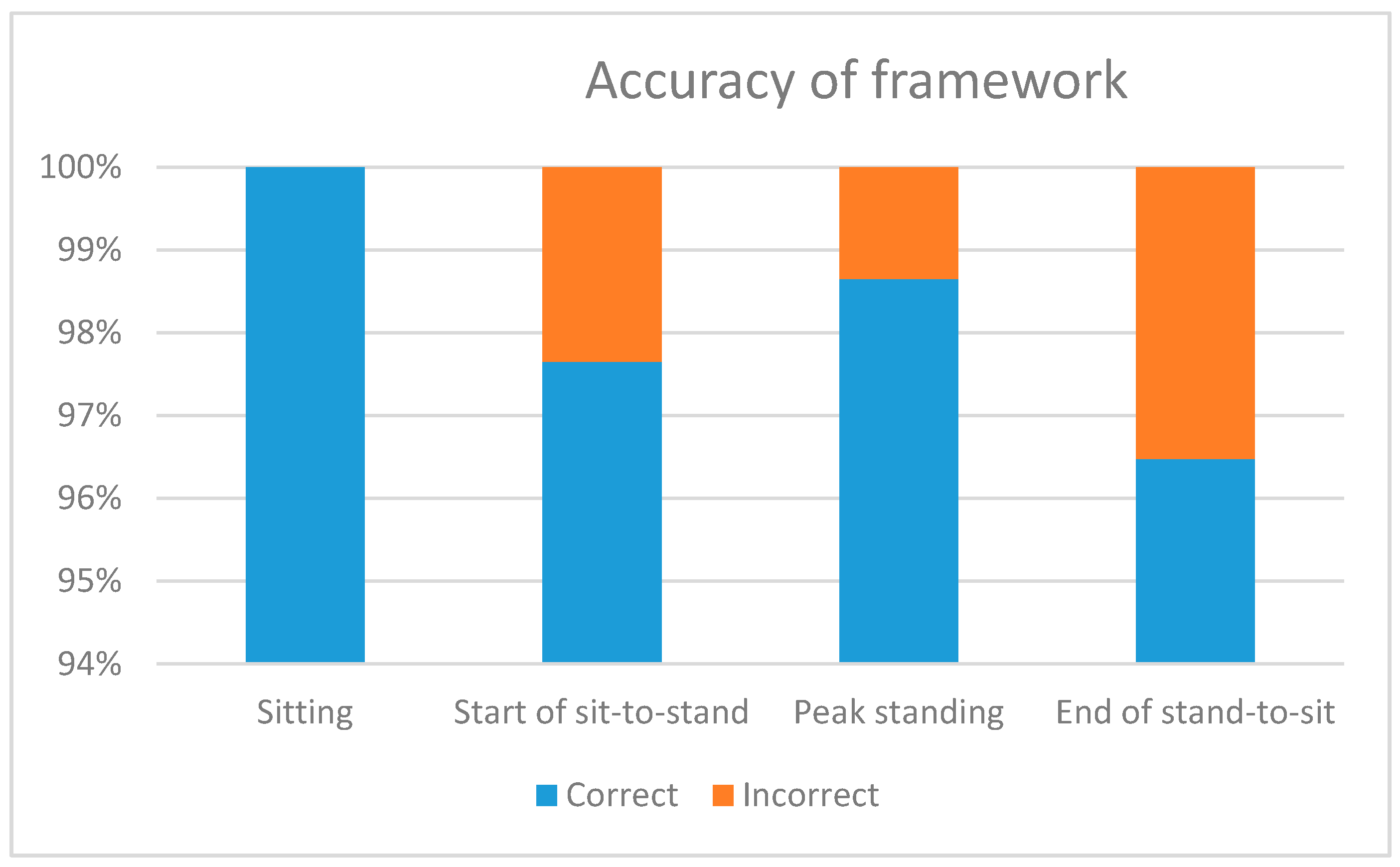

3.2. Transition Detection

3.3. Identifying Subtle Differences

4. Discussion and Conclusions

Author Contributions

Conflicts of Interest


| Parameter, mean (±SD) | Young Adults | Healthy Old | Masters Athletes | p-Value |
|---|---|---|---|---|
| Age, years | 26.40 (±3.16) | 74.90 (±4.11) | 66.93 (±5.03) a,b | 0.00 |
| Height, cm | 176.47 (±8.59) | 170.30 (±5.97) c | 166.01 (±10.07) | 0.04 |
| Weight, kg | 77.93 (±18.11) | 80.25 (±15.32) | 61.90 (±9.39) | 0.594 |
| Body mass index | 23.01 (±5.70) b,c | 22.65 (±5.38) | 19.14 (±2.11) | 0.04 |

| Parameter | Average Detection Rate (±SD) |
|---|---|
| Sitting | 6 (±0) |
| Start of sit-to-stand | 4.76 (±0.48) |
| Peak standing | 4.93 (±0.35) |
| End of stand-to-sit | 4.34 (±0.63) |

| Parameter, mean (±SD) | Young Adults | Healthy Old | Masters Athletes | p-Value |
|---|---|---|---|---|
| Stand Time (s) | 1.02 (±0.18) | 2.02 (±0.21) | 1.51 (±0.19) a | 0.02 |
| CoM Stand ML (cm) | 0.24 (±0.05) | 0.03 (±0.26) | 0.17 (±0.06) | 0.56 |
| CoM Stand AP (cm) | 0.21 (±0.01) b | 0.01 (±0.19) | 0.04 (±0.14) | 0.04 |
| Stand UBFA (deg) | 12 (±2.86) | 18 (±4.09) | 14 (±3.58) | 0.62 |
| Stand UfV (m/s) | 0.82 (±0.19) | 0.71 (±0.38) | 0.73 (±0.19) | 0.16 |
| Sit Time (s) | 0.92 (±0.23) | 1.47 (±0.73) | 0.98 (±0.35) | 0.23 |
| CoM Sit ML (cm) | 0.22 (±0.06) | 0.04 (±0.28) a,c | 0.22 (±0.09) | 0.00 |
| CoM Sit AP (cm) | 0.22 (±0.03) | 0.03 (±0.17) | 0.05 (±0.16) | 0.53 |
| Sit UBFA (deg) | 17 (±3.19) | 16 (±3.71) a | 10 (±2.38) a | 0.05 |
| Sit UfV (m/s) | 0.98 (±0.19) | 0.78 (±0.58) | 0.83 (±0.21) a | 0.04 |
| Total Time (s) | 7.98 (±2.09) | 12.18 (±3.76) | 9.28 (±0.94) | 0.24 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Leightley, D.; Yap, M.H. Digital Analysis of Sit-to-Stand in Masters Athletes, Healthy Old People, and Young Adults Using a Depth Sensor. Healthcare 2018, 6, 21. https://doi.org/10.3390/healthcare6010021
