Bridging the Gap: From AI Success in Clinical Trials to Real-World Healthcare Implementation—A Narrative Review

Abstract

1. Introduction

Purpose and Objective of the Study

- What are the key barriers hindering the translation of AI from controlled clinical trials to real-world healthcare?

- How can methodological, ethical, and operational challenges be mitigated?

- What structured framework can support the scalable and equitable integration of AI in diverse clinical settings?

2. Results

2.1. Discrepancy Between Clinical Trial Outcomes and Real-World Implementation

2.2. Challenges in Real-World Implementation

2.3. Ethical and Societal Implications of Artificial Intelligence in Healthcare

- Bias and Inequity

- Accountability and Transparency

- Privacy and Consent

2.4. Methodological Rigor and Reporting Standards

2.5. Operational and Practical Challenges

2.6. Scalability of AI Solutions in Healthcare

3. Discussion

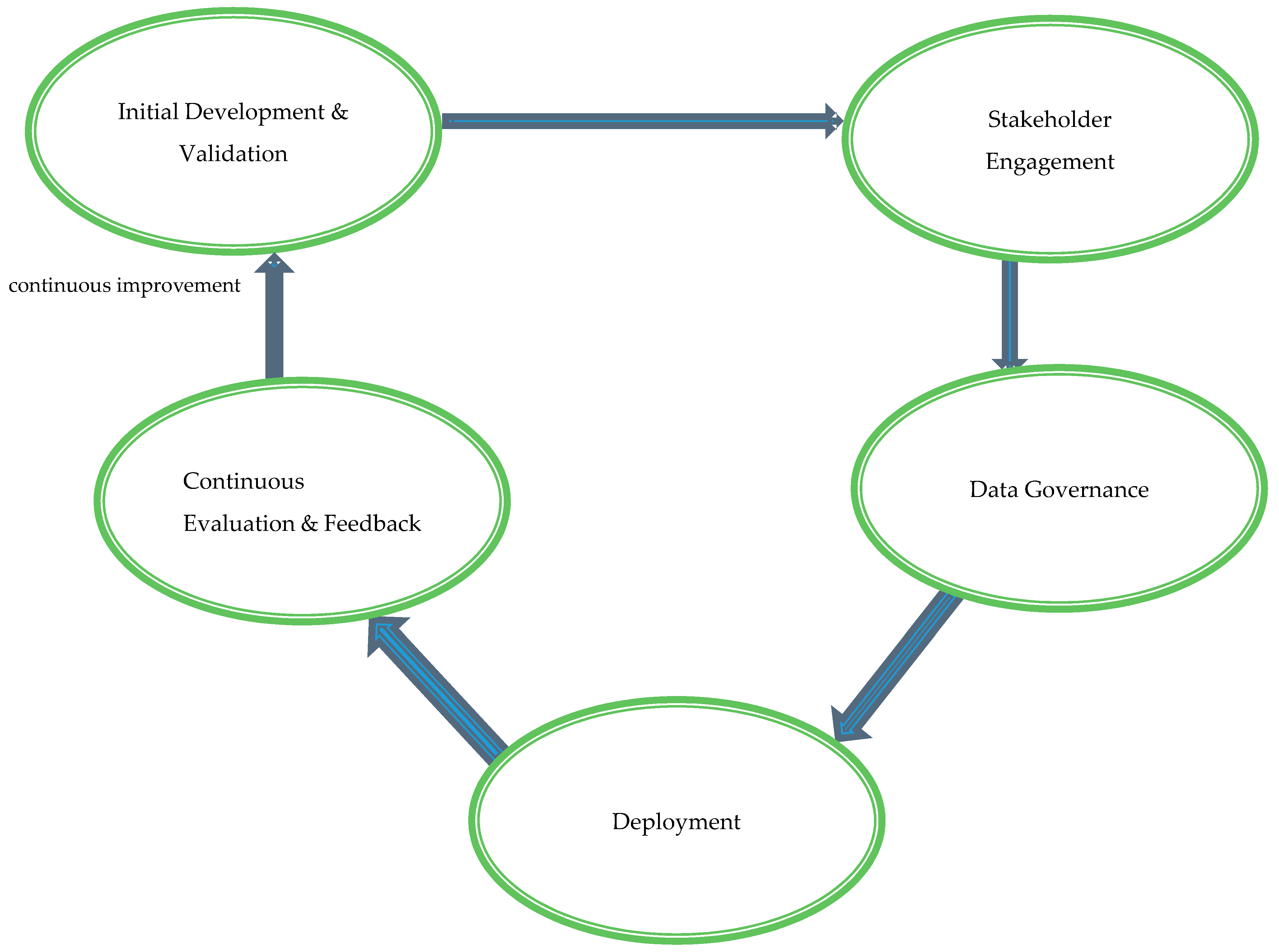

3.1. Proposed Model: AI Healthcare Integration Framework (AI-HIF)

3.2. Theoretical Foundations: Anchoring AI-HIF in Established Models

- Initial Development and Validation: This foundational stage covers the rigorous development and validation of AI tools so that they meet clinical standards and can adapt to diverse healthcare settings. Multidisciplinary teams of clinicians, data scientists, ethicists, and policy experts guide the design and functionality of AI systems. Iterative testing with diverse and representative datasets improves the generalizability and reliability of AI tools across populations and clinical scenarios. Compliance with international regulatory standards, such as those set by the FDA, EMA, and WHO, supports global applicability and acceptance.

- Stakeholder Engagement: Successful AI integration necessitates active collaboration among all relevant stakeholders, including healthcare providers, patients, policymakers, and AI developers. Regular consultations and interdisciplinary meetings gather input and feedback, ensuring that AI tools align with clinical needs and patient expectations. Participatory design processes incorporate end-user perspectives, enhancing the usability and relevance of AI systems. Transparent communication builds trust and addresses concerns, fostering higher acceptance and sustained utilization of AI technologies within clinical workflows.

- Data Governance: Robust data governance is critical to maintaining the integrity, privacy, security, and ethical use of data in AI applications. Standardized protocols for data collection, storage, and processing ensure consistency and interoperability across different healthcare systems. Adherence to global data protection regulations, such as GDPR and HIPAA, safeguards patient information from breaches and misuse. Bias mitigation strategies, including the use of diverse and representative datasets during AI model training, promote equity in healthcare delivery and prevent the perpetuation of existing disparities.

- Deployment: Implementing AI tools in clinical workflows requires minimizing disruption while maximizing improvements to healthcare services. Pilot programs in selected clinical settings, such as radiology departments or intensive care units, assess the feasibility and impact of AI systems before large-scale deployment. Comprehensive training programs build healthcare professionals’ digital literacy and proficiency with AI tools. Developing and optimizing the necessary technological infrastructure, including hardware, software, and network capabilities, supports the integration of AI technologies into existing healthcare environments.

- Continuous Evaluation and Feedback: Continuous evaluation and feedback mechanisms sustain the efficacy, ethical alignment, and adaptability of AI tools after deployment. Monitoring performance and impact through key performance indicators (KPIs) and health outcomes identifies areas for improvement. Feedback loops involving end-users provide insights that drive iterative refinements and updates, keeping AI systems relevant and effective as healthcare evolves. This dynamic process addresses emerging challenges and upholds the ethical integrity and long-term success of AI integration within clinical practice.
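As an illustration of the continuous evaluation stage, the following minimal sketch tracks one KPI after deployment and flags periods where it falls below a pre-deployment baseline. It is not part of the framework as published: the choice of sensitivity as the KPI, the names `WindowReport` and `monitor`, the quarterly windows, and the tolerance value are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Each record is (ground_truth, model_prediction), both coded 0 or 1.
Pair = Tuple[int, int]


@dataclass
class WindowReport:
    """KPI summary for one monitoring window (hypothetical structure)."""
    window: str
    sensitivity: Optional[float]
    specificity: Optional[float]
    flagged: bool


def sensitivity_specificity(pairs: List[Pair]) -> Tuple[Optional[float], Optional[float]]:
    """Compute sensitivity and specificity from (truth, prediction) pairs."""
    tp = fn = tn = fp = 0
    for truth, pred in pairs:
        if truth == 1:
            if pred == 1:
                tp += 1
            else:
                fn += 1
        else:
            if pred == 1:
                fp += 1
            else:
                tn += 1
    # A rate is undefined (None) when its denominator is zero.
    sens = tp / (tp + fn) if (tp + fn) else None
    spec = tn / (tn + fp) if (tn + fp) else None
    return sens, spec


def monitor(windows: Dict[str, List[Pair]],
            baseline_sensitivity: float,
            tolerance: float = 0.05) -> List[WindowReport]:
    """Flag windows whose sensitivity drops more than `tolerance`
    below the baseline (both values are assumed, not prescribed)."""
    reports = []
    for name, pairs in windows.items():
        sens, spec = sensitivity_specificity(pairs)
        flagged = sens is not None and sens < baseline_sensitivity - tolerance
        reports.append(WindowReport(name, sens, spec, flagged))
    return reports


if __name__ == "__main__":
    # Illustrative toy data, not results from any study.
    example = {
        "2025-Q1": [(1, 1), (1, 1), (1, 1), (1, 0), (0, 0), (0, 0)],
        "2025-Q2": [(1, 1), (1, 0), (1, 0), (1, 0), (0, 0), (0, 1)],
    }
    for report in monitor(example, baseline_sensitivity=0.8, tolerance=0.1):
        print(report)
```

In practice a flagged window would feed the feedback loop described above, for example by triggering a review with end-users before any model update. The appropriate KPI and threshold depend on the clinical context.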

3.3. Global Applicability and Scalability

3.4. Integration of Theoretical Foundations

3.5. Recommendations and Implications

3.6. Limitations

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Category | Key Findings | Implementation Challenges | Proposed Strategies |
|---|---|---|---|
| Clinical Efficacy | AI systems can achieve diagnostic accuracies comparable to or better than human clinicians. | Limited generalizability from single-center, homogeneous studies. | Conduct studies across multiple centers with diverse populations. Use pragmatic trials to test AI in real-world clinical settings. |
| Algorithmic Bias and Equity | AI can underdiagnose underserved groups, worsening healthcare disparities. | Biases in training data lead to unfair outcomes. | Use diverse and representative datasets when training AI models. Implement fairness measures like equalized odds and demographic parity. |
| Integration Complexities | AI tools face data interoperability issues and do not fit well into existing workflows. | Technical barriers like incompatible data systems. | Implement standardized data processes (MLOps) for better integration. Develop AI tools that fit into current workflows. |
| Ethical and Societal Implications | AI decision-making lacks transparency, complicating accountability. | Blurred responsibility for AI-driven decisions. | Enhance the explainability of AI systems. Establish clear oversight and governance policies. |
| Operational Efficiency | AI can both reduce and increase operational workload, depending on the context. | Resistance from healthcare staff. | Start with pilot programs and gradual implementation. Invest in necessary infrastructure and staff training. |
| Methodological Rigor | Many AI studies do not follow reporting standards, affecting their reliability. | Poor documentation of AI processes and handling of data. | Follow established reporting guidelines like CONSORT-AI. Provide detailed information on AI limitations and negative outcomes. |
| Scalability of AI Solutions | Scaling AI is crucial but hindered by technical, organizational, and ethical issues. | Data interoperability and algorithm biases. | Use robust data and model management practices. Build interdisciplinary teams to tackle technical and organizational barriers. |
| Policy and Governance | Lack of effective policy frameworks slows responsible AI deployment. | Need for guidelines on bias, transparency, and accountability. | Develop comprehensive policies in collaboration with all stakeholders. Regularly review and update AI systems and policies. |
| Interdisciplinary Collaboration | Successful AI integration needs collaboration across medicine, computer science, ethics, and policy. | Bridging AI research with clinical practice. | Create interdisciplinary teams for AI development and implementation. Involve clinicians in the AI design process. |
| Patient-Centered Outcomes | AI should be evaluated based on patient quality of life, treatment adherence, and long-term health outcomes. | Technical metrics do not always reflect patient care improvements. | Focus AI evaluations on outcomes that matter to patients. Conduct long-term studies to assess real-world impact. |
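
The table names equalized odds and demographic parity as fairness measures. As a hedged illustration, the sketch below computes one common form of each for binary predictions across patient groups. The function names and toy data are hypothetical. Summarizing equalized odds as the larger of the true-positive-rate gap and the false-positive-rate gap is one convention among several, not a definition taken from the review.

```python
from collections import defaultdict
from typing import Dict, Hashable, Optional, Sequence


def group_rates(y_true: Sequence[int],
                y_pred: Sequence[int],
                groups: Sequence[Hashable]) -> Dict[Hashable, Dict[str, Optional[float]]]:
    """Per-group selection rate, true-positive rate, and false-positive rate."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        # "t"/"f" records whether the prediction was correct,
        # "p"/"n" records whether the prediction was positive.
        key = ("t" if truth == pred else "f") + ("p" if pred == 1 else "n")
        counts[group][key] += 1

    rates = {}
    for group, c in counts.items():
        total = sum(c.values())
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        rates[group] = {
            "selection_rate": (c["tp"] + c["fp"]) / total,
            "tpr": c["tp"] / positives if positives else None,
            "fpr": c["fp"] / negatives if negatives else None,
        }
    return rates


def _gap(rates: Dict[Hashable, Dict[str, Optional[float]]], key: str) -> float:
    """Largest between-group difference for one rate, skipping undefined values."""
    values = [r[key] for r in rates.values() if r[key] is not None]
    return max(values) - min(values) if values else 0.0


def demographic_parity_difference(rates) -> float:
    """Gap in the share of patients flagged positive across groups."""
    return _gap(rates, "selection_rate")


def equalized_odds_difference(rates) -> float:
    """Larger of the TPR gap and the FPR gap across groups (assumed convention)."""
    return max(_gap(rates, "tpr"), _gap(rates, "fpr"))


if __name__ == "__main__":
    # Illustrative toy data: the model misses one true case in group "B".
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 0, 0, 1, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    rates = group_rates(y_true, y_pred, groups)
    print(demographic_parity_difference(rates))
    print(equalized_odds_difference(rates))
```

A value of zero for either measure means the groups are treated identically on that criterion. Larger values correspond to the kind of underdiagnosis disparity listed in the table, though acceptable thresholds are a clinical and policy judgment rather than a property of the code.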

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

El Arab, R.A.; Abu-Mahfouz, M.S.; Abuadas, F.H.; Alzghoul, H.; Almari, M.; Ghannam, A.; Seweid, M.M. Bridging the Gap: From AI Success in Clinical Trials to Real-World Healthcare Implementation—A Narrative Review. Healthcare 2025, 13, 701. https://doi.org/10.3390/healthcare13070701
