Predicting High Urinary Tract Infection Rates in Skilled Nursing Facilities: A Machine Learning Approach

Abstract

1. Introduction

- A machine learning model incorporating SNF characteristics will outperform traditional logistic regression in predicting facilities at high risk for HAIs.

- Specific facility-level characteristics, such as geographic location, number of staffed beds, and average length of stay, are significant predictors of urinary tract infections in skilled nursing facilities.

1.1. Background

1.2. Gap in the Literature

1.3. Aim of the Study

2. Materials and Methods

2.1. Study Design and Data Collection

2.2. Factor Variables

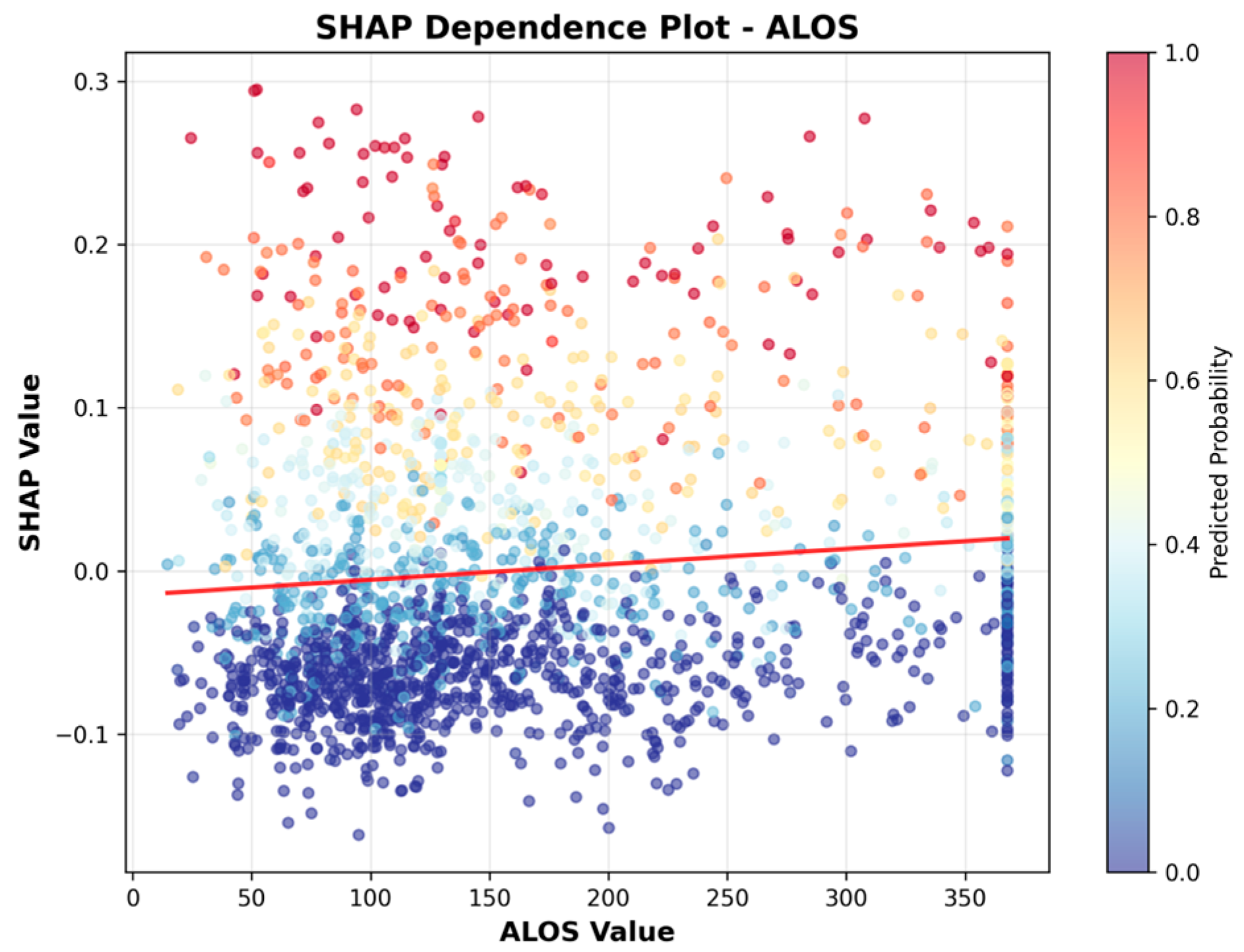

- alos: Average length of stay in days (continuous);

- num_staffed_beds: Number of staffed beds (continuous);

- nurse_hrs: Total nurse staffing hours per resident per day (continuous);

- pt_hrs: Physical therapist (PT) staffing hours per resident per day (continuous);

- ownership: Facility ownership type (categorical; values: ‘Proprietary-Partnership’, ‘Proprietary-Other’, ‘Proprietary-Individual’, ‘Proprietary-Corporation’, ‘Voluntary Nonprofit-Other’, ‘Voluntary Nonprofit-Church’, ‘Governmental-Hospital District’, ‘Governmental-County’, ‘Governmental-State’, ‘Governmental-Other’, ‘Governmental-Federal’, ‘Governmental-City’, ‘Governmental-City-County’);

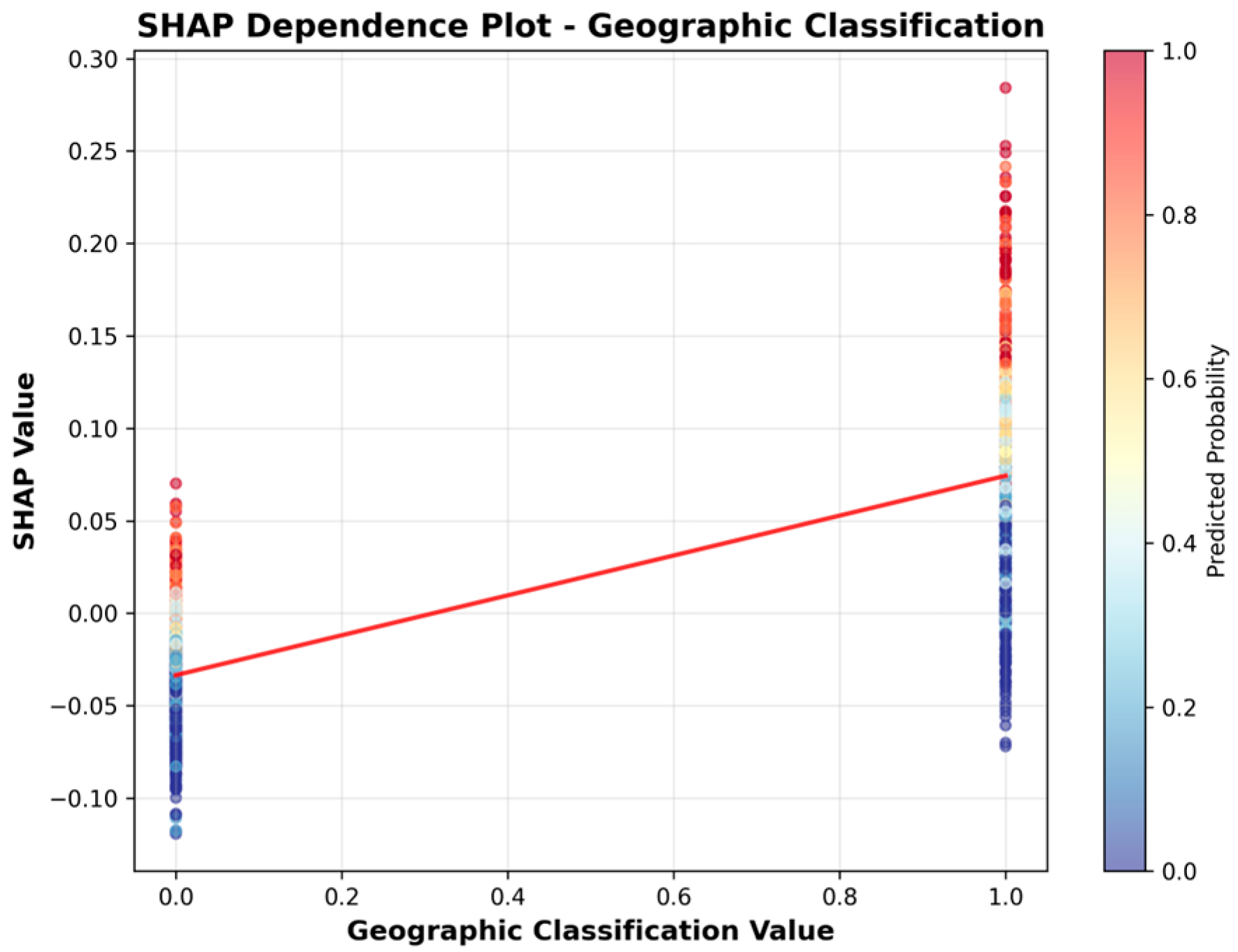

- geographic_classification: Rural or urban classification (binary: rural = 1, urban = 0);

- accreditation: Facility accreditation by an accrediting agency, if any (binary: accredited = 1, not accredited = 0);

- aco_affiliations: Accountable Care Organization (ACO) affiliation (binary: affiliated = 1, not affiliated = 0);

- star_rating: CMS SNF Overall Star Rating (categorical: 1 to 5);

- year: Year of the SNF-year observation (ordinal categorical: 2019, 2020, 2021, 2022, 2023, 2024).
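
The tables later in the article report `for_profit` and `government_owned` rather than the raw `ownership` categories, which suggests the ownership labels were collapsed into two binary flags. The sketch below shows one way such a mapping could work. The function name `encode_ownership` and the prefix-based rule are assumptions made for illustration, not the authors' documented procedure.

```python
# Hypothetical helper: collapse an ownership label into two binary flags.
# The prefix rule (Proprietary -> for-profit, Governmental -> government-owned)
# is an assumption for illustration, not the study's documented encoding.

def encode_ownership(ownership: str) -> dict:
    """Return for_profit / government_owned flags for one ownership label."""
    label = ownership.strip().lower()
    return {
        "for_profit": int(label.startswith("proprietary")),
        "government_owned": int(label.startswith("governmental")),
    }


examples = [
    "Proprietary-Corporation",
    "Voluntary Nonprofit-Church",
    "Governmental-County",
]
for name in examples:
    print(name, encode_ownership(name))
```

Under this rule, voluntary nonprofit facilities become the reference group with both flags equal to 0.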

2.3. Data Collection and Preprocessing

2.3.1. Categorical Encoding

2.3.2. Missingness Analysis and Imputation

2.4. Outlier Detection and Handling

2.5. Multicollinearity and Target Encoding

2.6. Data Analysis

3. Results

3.1. Sample Descriptives

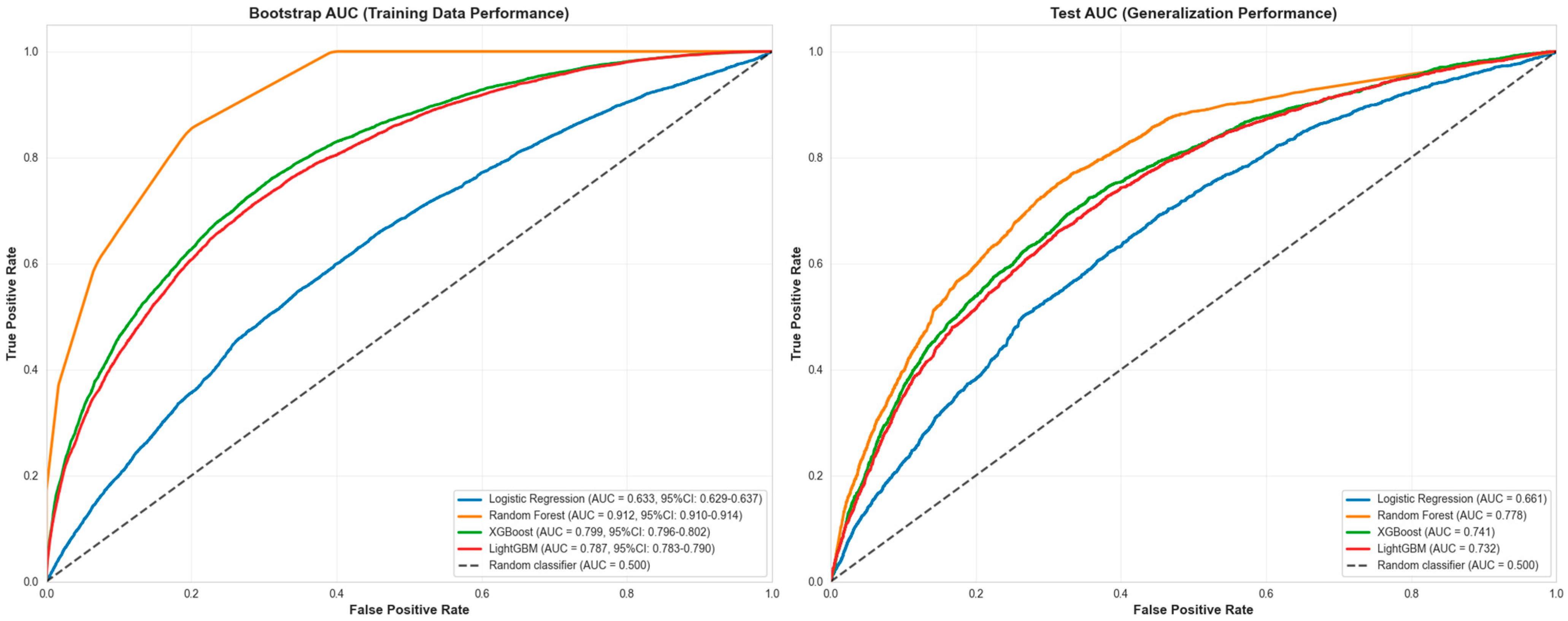

3.2. Machine Learning Results

4. Sensitivity Analyses

4.1. Geographic Classification Sensitivity

4.2. Grouped Facility Split Sensitivity Analysis

5. Discussion

5.1. Comparison to Previous Research

5.2. SNF Facility Characteristics

5.3. Study Results and Hypotheses

5.4. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACO | Accountable Care Organization |
| ALOS | Average Length of Stay |
| AUC-ROC | Area Under the Receiver Operating Characteristic Curve |
| CMS | Centers for Medicare & Medicaid Services |
| HAI | Healthcare-Associated Infection |
| IQR | Interquartile Range |
| ML | Machine Learning |
| ROC-AUC | Receiver Operating Characteristic–Area Under the Curve |
| ROC | Receiver Operating Characteristic |
| SHAP | Shapley Additive Explanations |
| SNF | Skilled Nursing Facility |
| UTI | Urinary Tract Infection |
| VIF | Variance Inflation Factor |


| Variable (% missing) | 2019 | 2020 | 2021 | 2022 | 2023 | 2024 | Imputation Strategy |
|---|---|---|---|---|---|---|---|
| accreditation | 99.69 | 99.69 | 99.68 | 99.68 | 99.68 | 99.69 | Dropped |
| aco_affiliations | 95.47 | 95.48 | 95.47 | 95.49 | 95.49 | 95.47 | Dropped |
| alos | 3.65 | 3.64 | 3.64 | 3.64 | 3.68 | 3.75 | Median |
| for_profit | 2.87 | 2.85 | 2.84 | 2.84 | 2.86 | 2.94 | Mode |
| geographic_classification | 0 | 0 | 0 | 0 | 0 | 0 | Not imputed |
| government_owned | 2.87 | 2.85 | 2.84 | 2.84 | 2.86 | 2.94 | Mode |
| num_staffed_beds | 3.51 | 3.5 | 3.51 | 3.5 | 3.55 | 3.62 | Median |
| nurse_hrs | 100 | 100 | 100 | 100 | 100 | 0.78 | Dropped |
| pt_hrs | 100 | 100 | 100 | 100 | 100 | 0.78 | Dropped |
| star_rating | 0.69 | 0.7 | 0.72 | 0.72 | 0.76 | 0.85 | Mode |
| uti_rate | 0 | 0 | 0 | 0 | 0 | 0 | Not imputed |
| year | 0 | 0 | 0 | 0 | 0 | 0 | Not imputed |
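
The imputation strategies listed in the table (drop, median, mode) can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions: the `impute` function, the `STRATEGY` mapping, and the toy records are hypothetical, and the study itself may have used library routines and fitted the fill values differently.

```python
from statistics import median, mode

# Strategy per variable, following the "Imputation Strategy" column of the table.
# Only a subset of variables is shown; the dictionary itself is illustrative.
STRATEGY = {
    "alos": "median",
    "num_staffed_beds": "median",
    "star_rating": "mode",
    "for_profit": "mode",
    "accreditation": "drop",
}


def impute(rows, strategy):
    """Return new rows with 'drop' columns removed and missing values filled."""
    fill = {}
    for col, how in strategy.items():
        observed = [r[col] for r in rows if r.get(col) is not None]
        if how == "median":
            fill[col] = median(observed)
        elif how == "mode":
            fill[col] = mode(observed)
    cleaned = []
    for r in rows:
        out = {}
        for col, value in r.items():
            if strategy.get(col) == "drop":
                continue  # variable excluded entirely
            out[col] = fill[col] if value is None and col in fill else value
        cleaned.append(out)
    return cleaned


# Toy records (made up for illustration, not study data); None marks a missing value.
rows = [
    {"alos": 100.0, "num_staffed_beds": 80, "star_rating": 3, "for_profit": 1, "accreditation": None},
    {"alos": None, "num_staffed_beds": 120, "star_rating": None, "for_profit": 1, "accreditation": None},
    {"alos": 140.0, "num_staffed_beds": None, "star_rating": 3, "for_profit": None, "accreditation": 1},
]
cleaned = impute(rows, STRATEGY)
print(cleaned[1])
```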

| Feature | VIF |
|---|---|
| For Profit | 1.48 |
| Government Owned | 1.44 |
| Geographic Classification | 1.13 |
| Num Staffed Beds | 1.1 |
| ALOS | 1.07 |
| Star Rating | 1.06 |
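
For readers unfamiliar with the VIF, the value for predictor j equals 1 / (1 - R_j²), where R_j² comes from regressing predictor j on the remaining predictors. Equivalently, it is the j-th diagonal entry of the inverse of the predictors' correlation matrix. The sketch below uses that second form in plain Python. The function names and toy columns are assumptions for illustration; the study most likely relied on a statistics library instead.

```python
from math import sqrt


def correlation_matrix(columns):
    """Pearson correlation matrix for a list of equally long numeric columns."""
    n = len(columns[0])
    means = [sum(c) / n for c in columns]
    centered = [[x - m for x in c] for c, m in zip(columns, means)]
    norms = [sqrt(sum(x * x for x in c)) for c in centered]
    return [
        [sum(a * b for a, b in zip(ci, cj)) / (ni * nj) for cj, nj in zip(centered, norms)]
        for ci, ni in zip(centered, norms)
    ]


def invert(matrix):
    """Gauss-Jordan inversion with partial pivoting (fine for small matrices)."""
    n = len(matrix)
    aug = [row[:] + [float(i == j) for j in range(n)] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vif(columns):
    """VIF of each column: diagonal of the inverse correlation matrix."""
    inv = invert(correlation_matrix(columns))
    return [inv[j][j] for j in range(len(columns))]


# Toy predictors (made up): their correlation is 0.8, so each VIF is 1 / (1 - 0.64).
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [2.0, 1.0, 4.0, 3.0, 5.0]
vif_values = vif([x1, x2])
print([round(v, 3) for v in vif_values])
```

Values close to 1, as in the table, indicate that each predictor is only weakly explained by the others.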

| Statistic | num_staffed_beds | alos | uti_rate * | alos_imputed | num_staffed_beds_imputed |
|---|---|---|---|---|---|
| count | 91,471 | 91,345 | 94,877 | 94,877 | 94,877 |
| mean | 112.62 | 160.12 | 2.02 | 157.85 | 112 |
| std | 47.72 | 97.6 | 2.48 | 93.37 | 45.9 |
| min | 8 | 1 | 0 | 1 | 8 |
| 25% | 78 | 88.51 | 0.06 | 89.71 | 80 |
| 50% | 107 | 129.53 | 1.25 | 129.53 | 107 |
| 75% | 136 | 205.96 | 2.89 | 200.92 | 134 |
| max | 223 | 382.14 | 32.35 | 367.74 | 215 |

| Variable | Category | n | Percentage (%) |
|---|---|---|---|
| year | 2019 | 13,851 | 16.51 |
| | 2020 | 13,917 | 16.59 |
| | 2021 | 13,967 | 16.65 |
| | 2022 | 14,021 | 16.71 |
| | 2023 | 14,068 | 16.77 |
| | 2024 | 14,071 | 16.77 |
| geographic_classification | 0 | 65,503 | 69.04 |
| | 1 | 29,374 | 30.96 |
| for_profit | 0 | 26,726 | 28.17 |
| | 1 | 68,151 | 71.83 |
| government_owned | 0 | 85,225 | 89.83 |
| | 1 | 9,652 | 10.17 |
| star_rating | 1 | 23,411 | 24.68 |
| | 2 | 20,403 | 21.50 |
| | 3 | 19,002 | 20.03 |
| | 4 | 16,502 | 17.39 |
| | 5 | 15,559 | 16.40 |

| Year | n * | Mean |
|---|---|---|
| 2019 | 13,851 | 2.61 |
| 2020 | 13,917 | 2.47 |
| 2021 | 13,967 | 2.35 |
| 2022 | 14,021 | 2.29 |
| 2023 | 14,068 | 2.11 |
| 2024 | 14,071 | 1.87 |

| Model | Accuracy | ROC-AUC * | F1-Score | AUC-PR |
|---|---|---|---|---|
| Random Forest | 0.794 | 0.914 | 0.467 | 0.438 |
| XGBoost | 0.814 | 0.8 | 0.253 | 0.392 |
| LightGBM | 0.814 | 0.789 | 0.238 | 0.383 |
| Logistic Regression | 0.81 | 0.634 | 0.07 | 0.298 |
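
The table shows that accuracy and F1-score can diverge sharply (logistic regression reaches 0.81 accuracy with an F1-score of 0.07). One plausible reading, offered here as an assumption rather than the authors' stated explanation, is that the positive class is a minority, so a model that rarely predicts it still scores well on accuracy. The plain-Python sketch below defines the three metrics and reproduces that pattern on made-up labels.

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred):
    """Harmonic mean of precision and recall for the positive class (label 1)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)


def roc_auc(y_true, scores):
    """Probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy data (made up): 2 positives among 10 observations.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
scores = [0.6, 0.3, 0.4, 0.2, 0.1, 0.1, 0.2, 0.3, 0.1, 0.2]
all_negative = [0] * len(y_true)  # a model that never flags a positive

print(accuracy(y_true, all_negative))  # 0.8
print(f1_score(y_true, all_negative))  # 0.0
print(roc_auc(y_true, scores))         # 0.90625
```

Because ROC-AUC is computed from the ranking of scores rather than from thresholded labels, it is unaffected by the choice of decision threshold.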

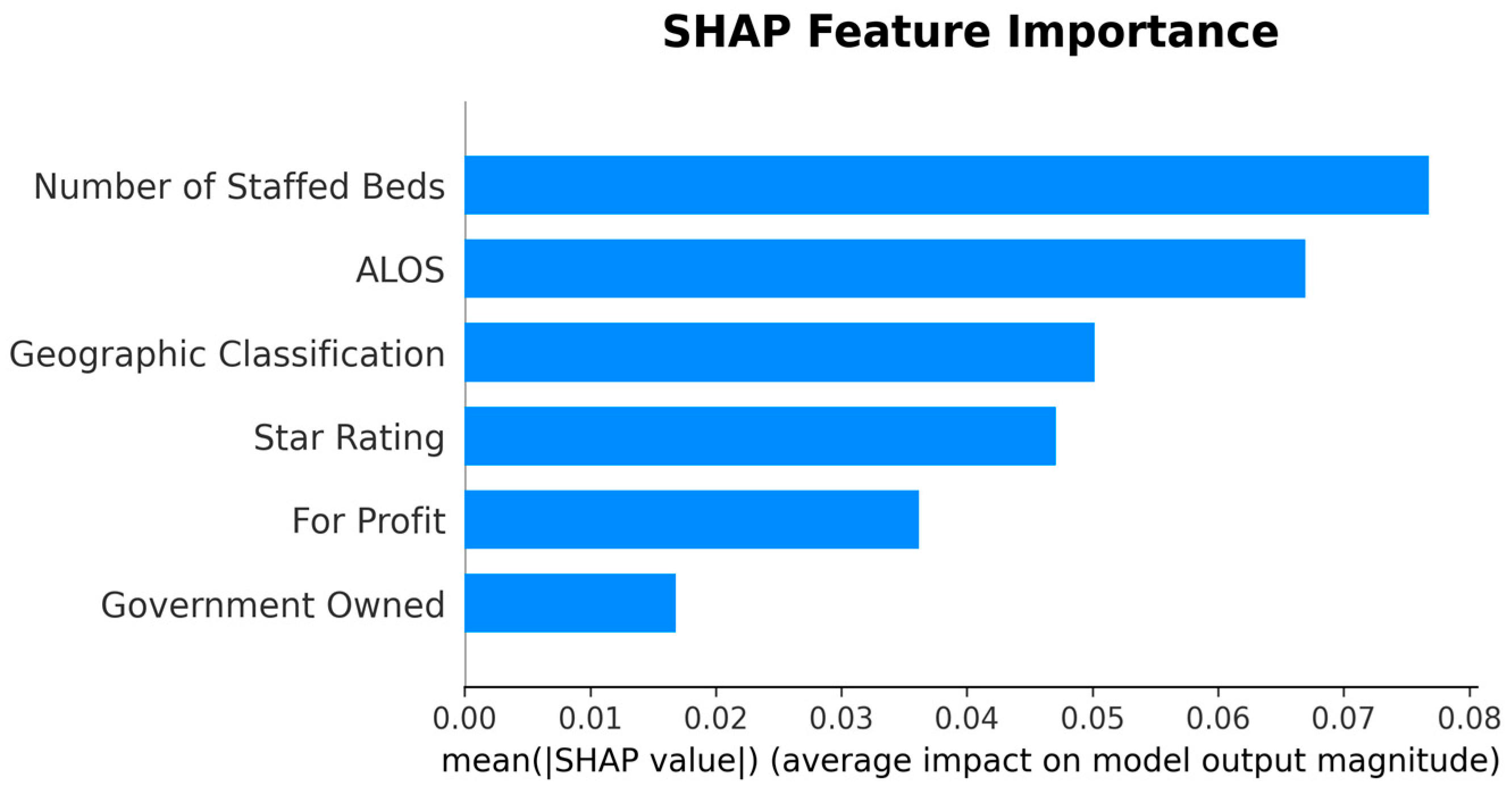

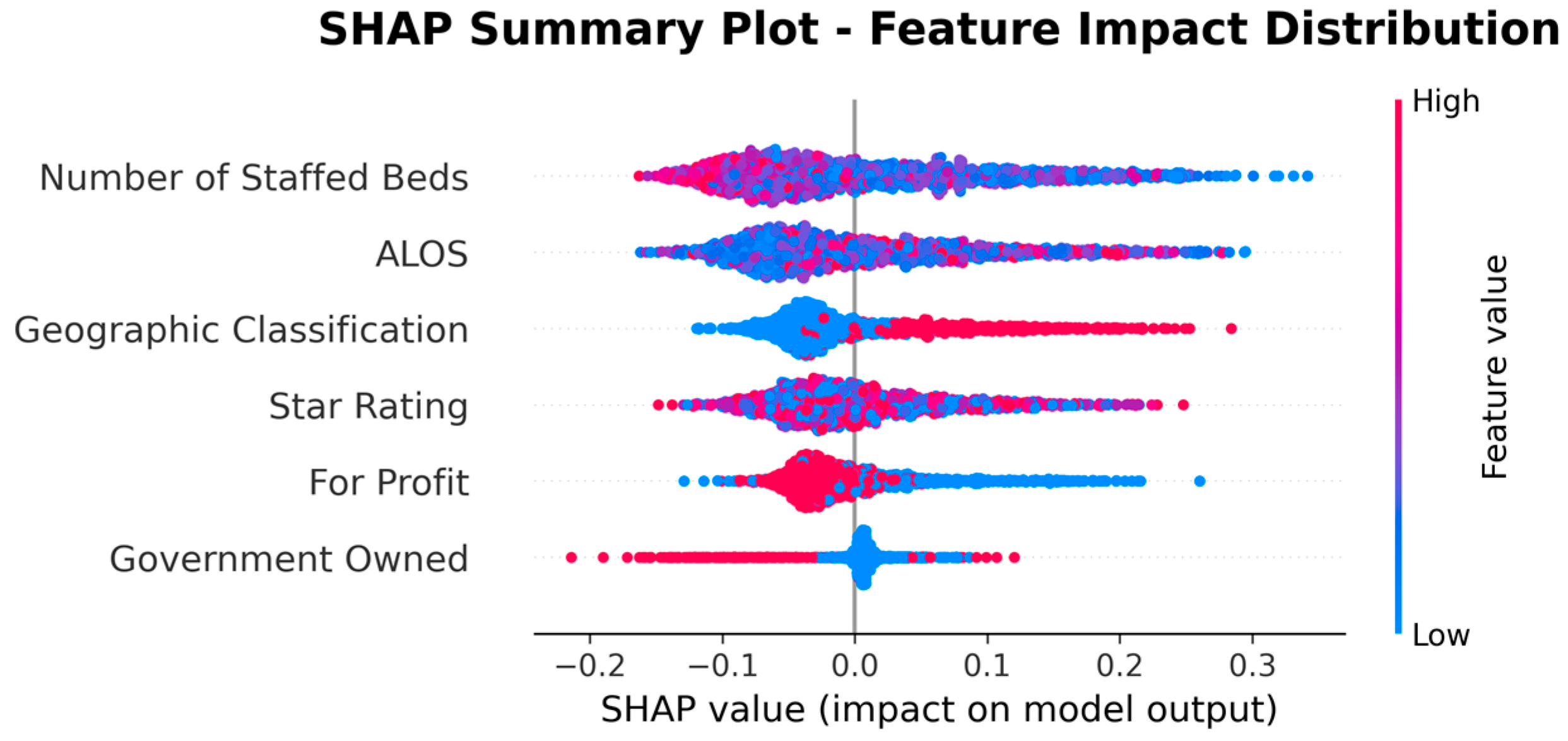

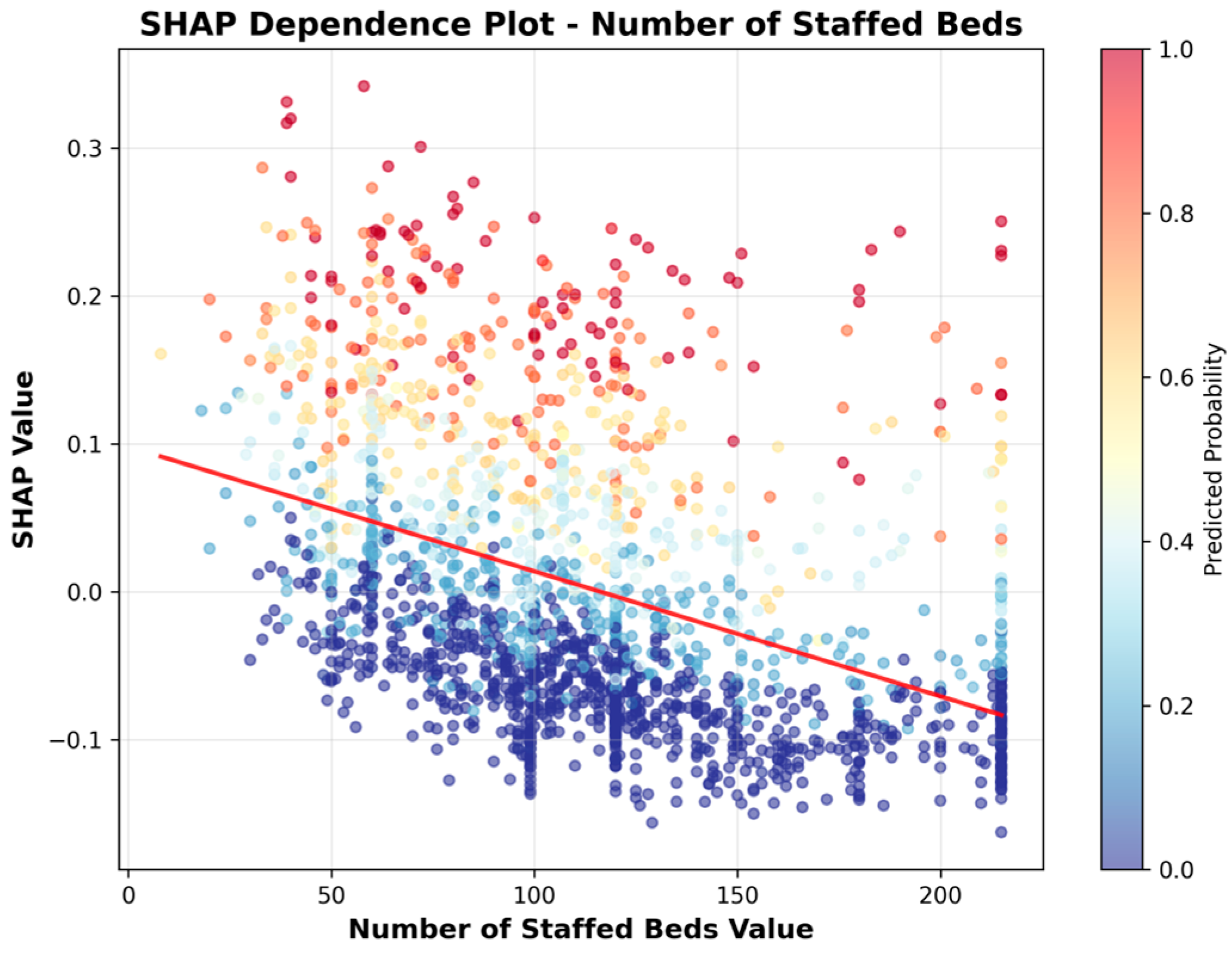

| Rank | Feature | Mean \|SHAP\| |
|---|---|---|
| 1 | Number of Staffed Beds | 0.0768 |
| 2 | ALOS | 0.0669 |
| 3 | Geographic Classification | 0.0502 |
| 4 | Star Rating | 0.0471 |
| 8 | For Profit | 0.0362 |
| 9 | Government Owned | 0.0168 |
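
A ranking of this kind is conventionally produced by averaging the absolute SHAP value of each feature over all observations and sorting in descending order. The sketch below shows only that aggregation step. The SHAP matrix is made up for illustration, and `mean_abs_shap` is a hypothetical helper; computing the SHAP values themselves would require an explainer fitted to the trained model.

```python
def mean_abs_shap(shap_values, feature_names):
    """Rank features by the mean absolute SHAP value across observations."""
    n = len(shap_values)
    totals = [0.0] * len(feature_names)
    for row in shap_values:
        for j, value in enumerate(row):
            totals[j] += abs(value)
    means = [t / n for t in totals]
    return sorted(zip(feature_names, means), key=lambda kv: kv[1], reverse=True)


# Made-up SHAP matrix: one row per observation, one column per feature.
feature_names = ["num_staffed_beds", "alos", "star_rating"]
shap_values = [
    [0.10, -0.05, 0.01],
    [-0.06, 0.07, -0.03],
    [0.08, -0.09, 0.02],
]
ranked = mean_abs_shap(shap_values, feature_names)
for rank, (name, value) in enumerate(ranked, start=1):
    print(rank, name, round(value, 4))
```

Taking the absolute value first matters: positive and negative contributions would otherwise cancel, and a feature with large effects in both directions would appear unimportant.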

| Analysis * | ROC-AUC (95% CI) | F1-Score (95% CI) | Test Sample Size |
|---|---|---|---|
| Main Analysis | 0.912 (0.910–0.914) | 0.662 (0.656–0.668) | 14,071 |
| Rural | 0.920 (0.918–0.921) | 0.637 (0.631–0.644) | 9,746 |
| Urban | 0.886 (0.882–0.891) | 0.694 (0.686–0.703) | 4,325 |
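
The table reports 95% confidence intervals without stating here how they were obtained. A common choice for test-set metrics is the percentile bootstrap, sketched below in plain Python. Treat this as an assumption about one reasonable method, not a description of the authors' procedure; `bootstrap_ci`, the number of resamples, and the toy labels are all illustrative.

```python
import random


def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def bootstrap_ci(y_true, y_pred, metric, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for a metric computed on paired labels."""
    rng = random.Random(seed)  # fixed seed so the interval is reproducible
    n = len(y_true)
    stats = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample with replacement
        stats.append(metric([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[min(n_boot - 1, int((1 - alpha / 2) * n_boot))]
    return lower, upper


# Toy labels (made up): 100 observations, 80 of them predicted correctly.
y_true = [1] * 20 + [0] * 80
y_pred = [1] * 10 + [0] * 10 + [0] * 70 + [1] * 10

lo, hi = bootstrap_ci(y_true, y_pred, accuracy)
print(accuracy(y_true, y_pred), (lo, hi))
```

Any metric with the signature `metric(y_true, y_pred)` can be passed in, so the same routine would serve for F1-score, or for ROC-AUC if predicted scores are supplied in place of labels.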

| Split Method | ROC-AUC (95% CI) | F1-Score (95% CI) | Train Facilities | Test Facilities | Test Samples |
|---|---|---|---|---|---|
| Temporal Split (Original) | 0.912 (0.910–0.914) | 0.662 (0.656–0.668) | 14,127 | 14,071 | 14,071 |
| Grouped Facility Split | 0.872 (0.869–0.874) | 0.576 (0.569–0.582) | 9,915 | 4,250 | 28,373 |
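
The difference between the two split methods can be made concrete with a small sketch. It assumes the temporal split trains on earlier years and tests on 2024 (the temporal test sample size matches the 2024 row count), and that the grouped split holds out roughly 30% of facilities (consistent with 4,250 of 14,165 facilities in the table). The function names, the `facility_id` field, and the toy records are hypothetical.

```python
import random


def temporal_split(records, test_year):
    """Train on every year before test_year, test on test_year itself."""
    train = [r for r in records if r["year"] < test_year]
    test = [r for r in records if r["year"] == test_year]
    return train, test


def grouped_facility_split(records, test_fraction=0.3, seed=0):
    """Assign whole facilities to train or test so no facility appears in both."""
    facility_ids = sorted({r["facility_id"] for r in records})
    random.Random(seed).shuffle(facility_ids)
    n_test = round(len(facility_ids) * test_fraction)
    test_ids = set(facility_ids[:n_test])
    train = [r for r in records if r["facility_id"] not in test_ids]
    test = [r for r in records if r["facility_id"] in test_ids]
    return train, test


def facilities(rows):
    """Set of facility identifiers present in a list of records."""
    return {r["facility_id"] for r in rows}


# Toy panel (made up): 10 facilities observed in each year from 2019 to 2024.
records = [{"facility_id": f, "year": y} for f in range(10) for y in range(2019, 2025)]

t_train, t_test = temporal_split(records, test_year=2024)
g_train, g_test = grouped_facility_split(records)

shared_temporal = facilities(t_train) & facilities(t_test)
shared_grouped = facilities(g_train) & facilities(g_test)
print(len(shared_temporal), len(shared_grouped))  # 10 0
```

In the temporal split every test facility has also been seen during training, so a model can benefit from facility-specific patterns that persist across years. The grouped split removes that overlap, which is one possible reason the grouped results in the table are lower.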

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dolezel, D.; Wang, T.; Gobert, D. Predicting High Urinary Tract Infection Rates in Skilled Nursing Facilities: A Machine Learning Approach. Healthcare 2025, 13, 2632. https://doi.org/10.3390/healthcare13202632