Exploring the Role of Artificial Intelligence in Smart Healthcare: A Capability and Function-Oriented Review

Abstract

1. Introduction

1.1. Scope

1.2. Purpose

- Clarifying the capability spectrum from narrow to Superintelligent AI.

- Outlining the functional evolution from reactive machines to theoretical self-aware systems.

- Mapping current technologies to these categories to evaluate readiness, risk, and research opportunities.

1.3. Contributions

- Dual Perspective Framework: Introduces a classification of AI systems in smart healthcare along two axes: capability (Narrow AI, General AI, and Superintelligence) and functionality (Reactive Machines, Limited Memory, Theory of Mind (ToM), and Self-Aware AI).

- Technology to Function Mapping: Provides a clear mapping of existing AI applications such as diagnostic imaging, predictive modeling, and AI mental health tools onto the defined capability and functionality axes.

- Contemporary Literature Synthesis (Post-2021): Consolidates and critiques recent research (2021–2025), including state-of-the-art techniques like federated learning, multimodal analysis, and AI-powered patient monitoring systems.

- Future Outlook and Ethical Insights: Highlights the ethical, legal, and operational challenges that arise as healthcare transitions toward more intelligent and autonomous AI systems, especially those approaching AGI or Superintelligent AI.

- Guidance for Stakeholders: Offers practical insights for healthcare practitioners, technologists, and policymakers to evaluate AI readiness, align it with clinical goals, and anticipate regulatory needs.

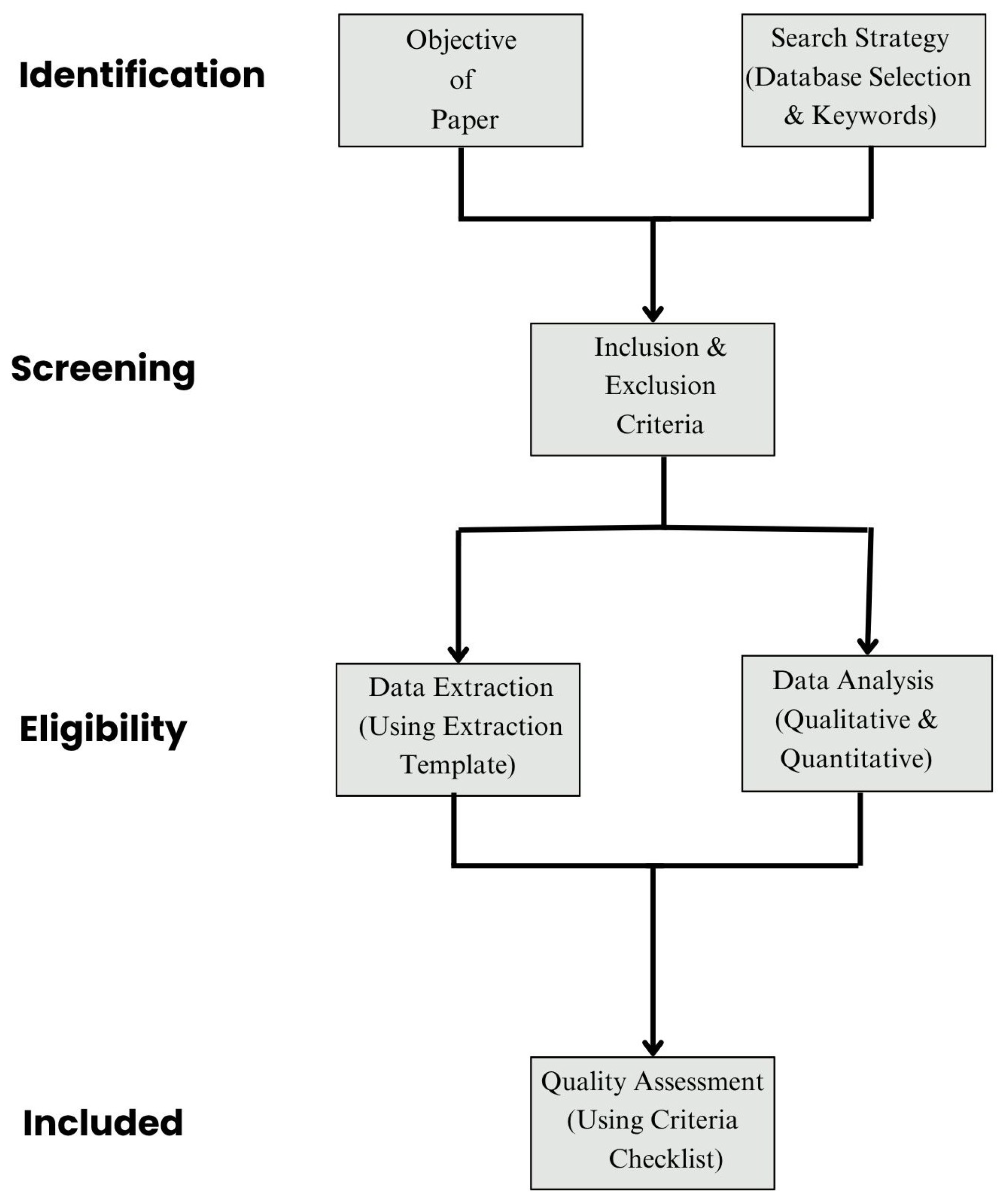

2. Methods

2.1. Search Strategy

- (“Artificial Intelligence” OR “AI” OR “machine learning” OR “deep learning” OR “generative AI”)

- AND (“smart healthcare” OR “clinical decision support” OR “digital health” OR “medical AI”)

- AND (“capabilities” OR “functionalities” OR “narrow AI” OR “AGI” OR “superintelligence” OR “Theory of Mind” OR “self-aware AI”)
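The three term groups above combine into a single Boolean query: terms are joined with OR inside a group, and the groups are joined with AND. The following is a minimal sketch of that assembly; the names `CONCEPT_GROUPS` and `build_query` are hypothetical, and the exact syntax accepted by any particular database is not specified in the source.

```python
# Hypothetical helper: names and structure are illustrative, not from the review.
CONCEPT_GROUPS = [
    ["Artificial Intelligence", "AI", "machine learning", "deep learning", "generative AI"],
    ["smart healthcare", "clinical decision support", "digital health", "medical AI"],
    ["capabilities", "functionalities", "narrow AI", "AGI", "superintelligence",
     "Theory of Mind", "self-aware AI"],
]


def build_query(groups):
    """OR the quoted terms inside each group, then AND the groups together."""
    clauses = []
    for terms in groups:
        quoted = " OR ".join(f'"{term}"' for term in terms)
        clauses.append(f"({quoted})")
    return " AND ".join(clauses)


query = build_query(CONCEPT_GROUPS)
print(query)
```

Keeping the groups as data makes it easy to add or drop a synonym without retyping the whole string.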

2.2. Eligibility Criteria

Inclusion criteria:

- Peer-reviewed journal or conference papers published in English between 2021 and 2025.

- Studies focused on AI applications in healthcare using clearly defined AI systems or frameworks.

- Articles discussing AI classification, capability levels (e.g., Narrow AI and AGI), or system functionalities (e.g., Limited Memory and ToM).

- Papers describing real-world or simulated deployment in clinical settings or smart healthcare infrastructure.

Exclusion criteria:

- Non-peer-reviewed literature (e.g., preprints and whitepapers).

- Editorials, opinion pieces, or theoretical articles without application relevance.

- Studies outside the healthcare domain or focused solely on mathematical formulations of AI.

- Redundant studies that offer no unique contribution to either the capability-based or the functionality-based classification.

2.3. Study Selection Process

2.4. Data Extraction and Mapping Framework

2.5. Quality Assessment Criteria

- Clear description of AI system and model architecture;

- Defined clinical objective or healthcare application;

- Description of data types and sources;

- Explanation of capability or functionality alignment;

- Evaluation of model performance or deployment outcome;

- Evidence of clinical relevance or simulation;

- Addressing of ethical or interpretability considerations;

- Reproducibility elements (e.g., code availability and data links).
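The eight criteria above can be applied as a simple checklist. The sketch below assumes one point per criterion met with equal weighting; the source does not state how the criteria were scored, so the scoring rule, the key names, and the example assessment are all hypothetical.

```python
# Assumption: equal-weight, one-point-per-criterion scoring (not stated in the review).
CRITERIA = [
    "system_description",       # AI system and model architecture described
    "clinical_objective",       # defined clinical objective or application
    "data_description",         # data types and sources described
    "framework_alignment",      # capability or functionality alignment explained
    "performance_evaluation",   # model performance or deployment outcome evaluated
    "clinical_relevance",       # evidence of clinical relevance or simulation
    "ethics_interpretability",  # ethical or interpretability considerations addressed
    "reproducibility",          # code availability, data links
]


def quality_score(assessment):
    """Return (criteria met, total criteria); missing keys count as not met."""
    met = sum(1 for name in CRITERIA if assessment.get(name, False))
    return met, len(CRITERIA)


# Hypothetical assessment of one study.
example = {name: True for name in CRITERIA}
example["ethics_interpretability"] = False
example["reproducibility"] = False
print(quality_score(example))
```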

2.6. Data Synthesis Strategy

- Categorization by AI capability: Narrow, AGI, or Superintelligent.

- Categorization by functionality: Reactive, Limited Memory, ToM, or Self-Aware.

- Use case alignment (e.g., mental health, diagnostics, imaging, robotic systems).

- Mapping technologies to the dual framework.

- Thematic clustering of ethical and deployment challenges.
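The categorization steps above amount to tagging each study with one value on the capability axis and one on the functionality axis, then counting studies per pair. The following is a minimal sketch of that data structure; the class names, field names, and the three example records are hypothetical and do not represent studies from the review.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Capability(Enum):
    NARROW = "Narrow AI"
    GENERAL = "AGI"
    SUPER = "Superintelligent AI"


class Functionality(Enum):
    REACTIVE = "Reactive"
    LIMITED_MEMORY = "Limited Memory"
    THEORY_OF_MIND = "ToM"
    SELF_AWARE = "Self-Aware"


@dataclass(frozen=True)
class StudyRecord:
    title: str
    capability: Capability
    functionality: Functionality
    use_case: str


def tally(records):
    """Count studies per (capability, functionality) cell of the dual framework."""
    return Counter((r.capability, r.functionality) for r in records)


# Hypothetical records for illustration only.
records = [
    StudyRecord("imaging classifier", Capability.NARROW, Functionality.LIMITED_MEMORY, "imaging"),
    StudyRecord("ICU threshold alarm", Capability.NARROW, Functionality.REACTIVE, "monitoring"),
    StudyRecord("readmission model", Capability.NARROW, Functionality.LIMITED_MEMORY, "diagnostics"),
]
counts = tally(records)
print(counts[(Capability.NARROW, Functionality.LIMITED_MEMORY)])
```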

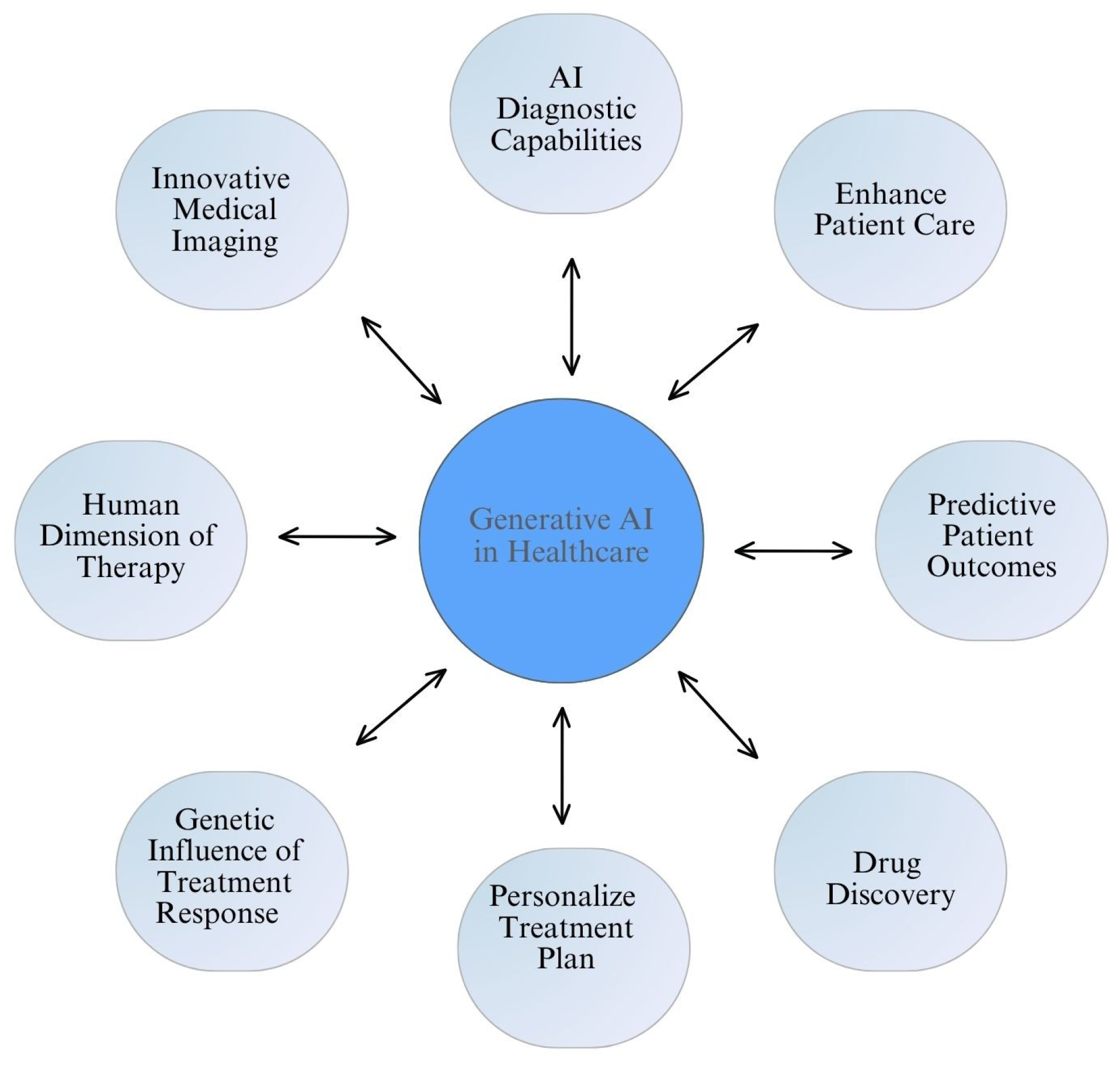

3. AI in Smart Healthcare: Based on Capabilities

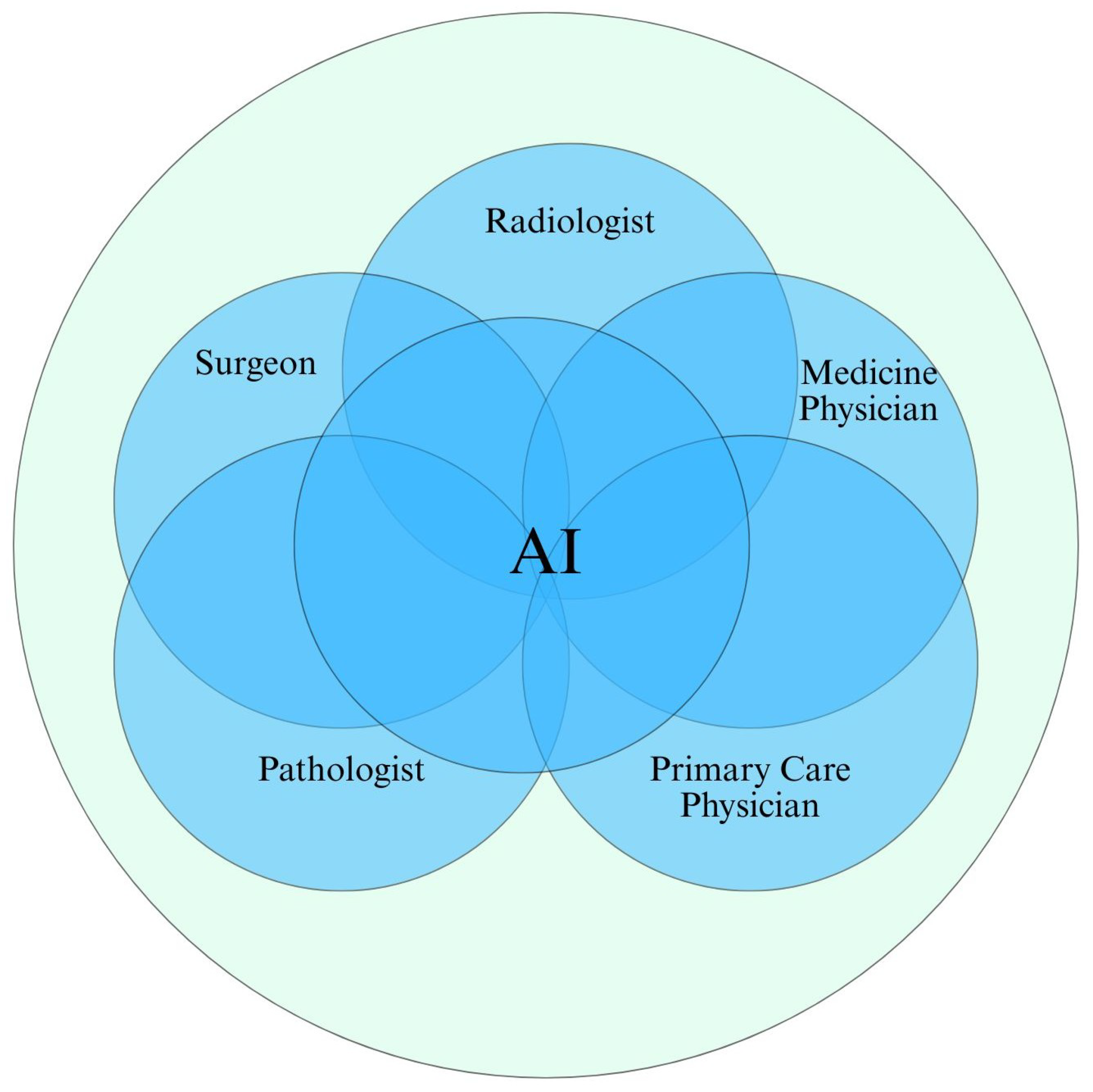

3.1. Narrow AI: The Present Foundation of Smart Healthcare

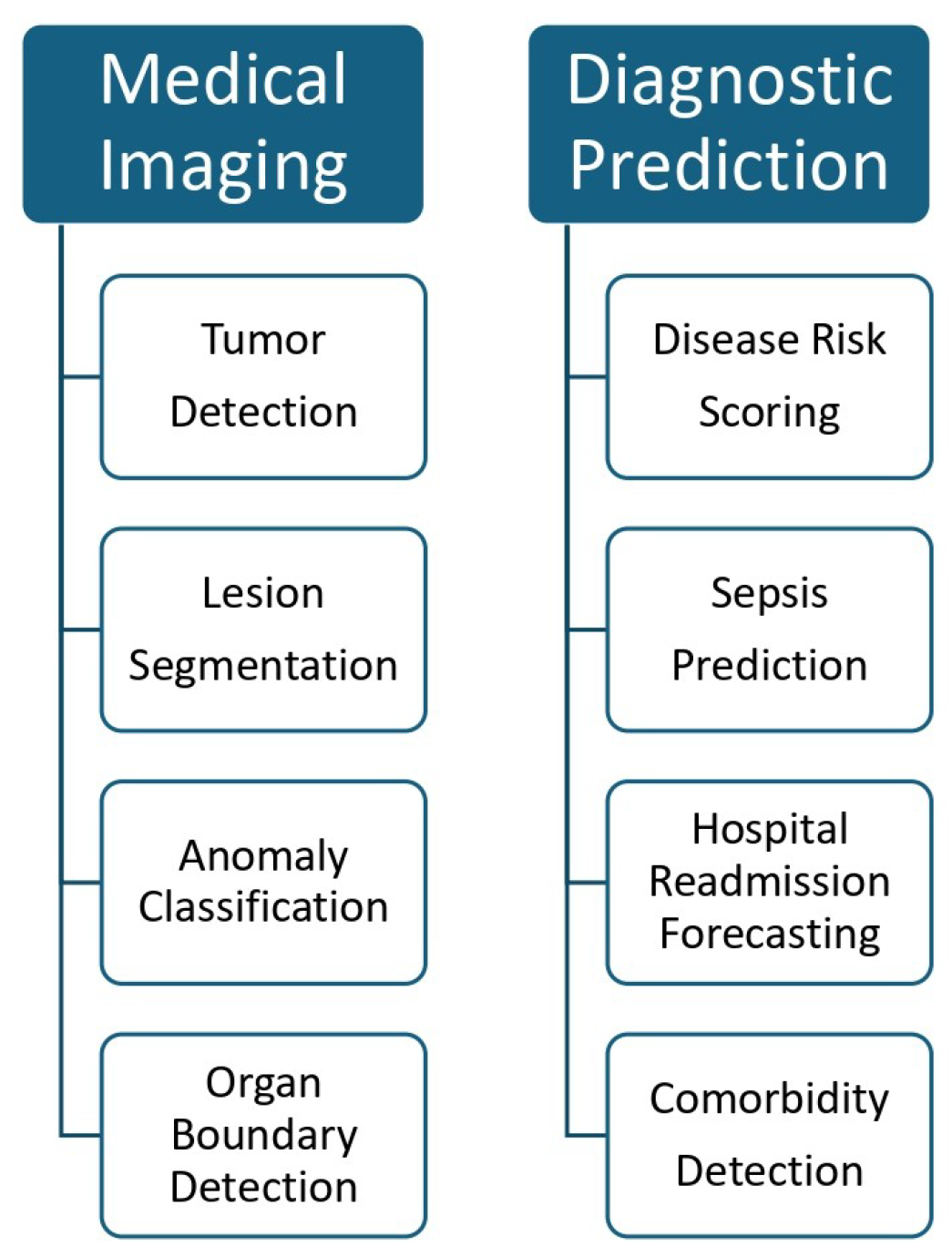

3.1.1. Applications in Medical Imaging and Diagnostics

3.1.2. Clinical Decision Support Systems (CDSS)

3.1.3. Virtual Health Assistants and Chatbots

3.1.4. Wearable Devices and Remote Monitoring

3.1.5. Administrative and Workflow Optimization

3.2. General AI: Toward Contextual and Adaptive Intelligence

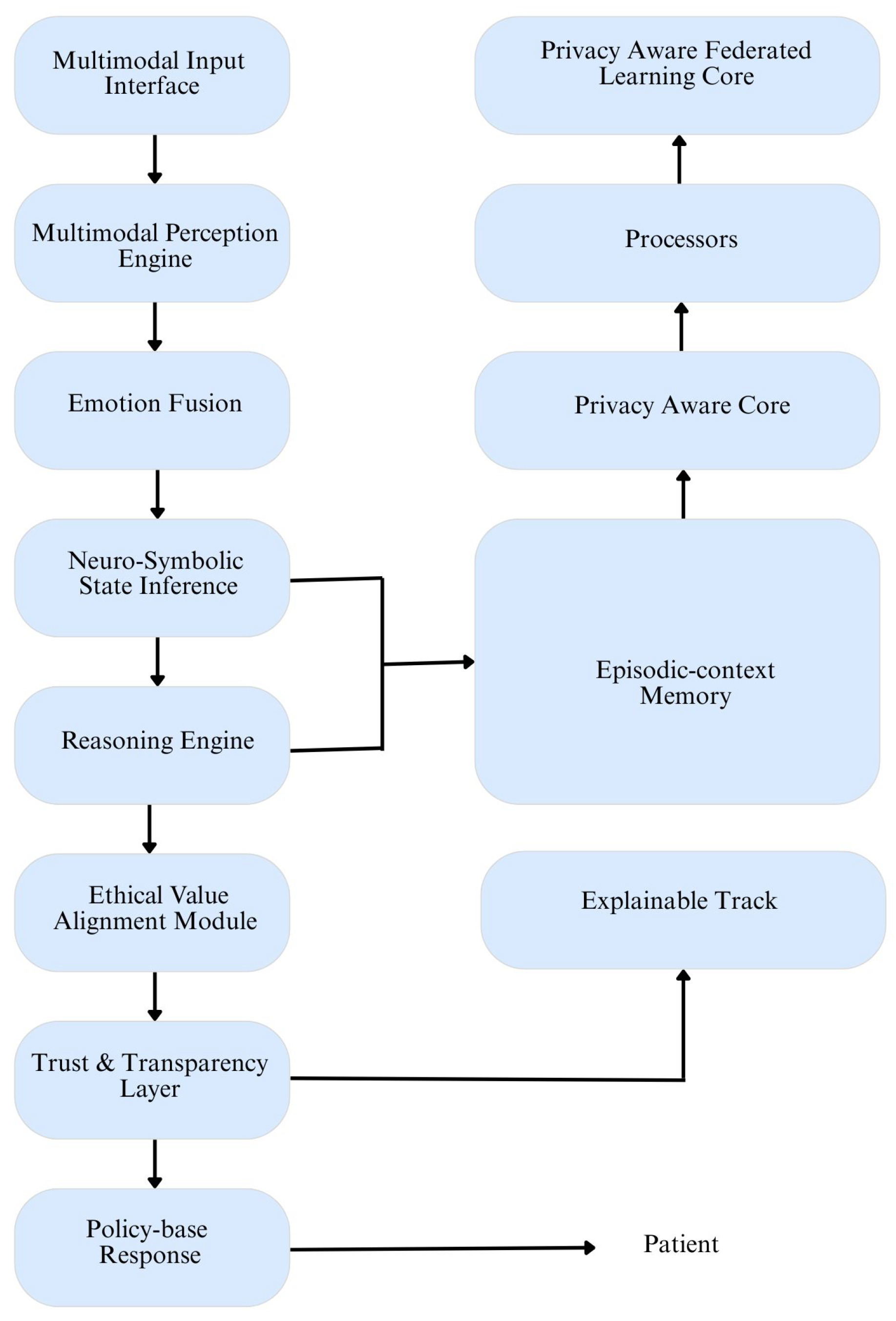

3.2.1. Multimodal Patient Understanding

3.2.2. Cognitive Flexibility in Mental Health Applications

3.2.3. Adaptive Learning in Clinical Settings

3.3. Superintelligent AI: Theoretical Cognitive Supremacy

3.3.1. Autonomous Knowledge Discovery

- Read and synthesize thousands of new research papers daily.

- Design novel clinical trials.

- Model the effects of drugs at the molecular level.

- Devise treatment plans personalized to the genetic and epigenetic profile of each individual.

3.3.2. Global Health System Management

3.3.3. Integration of Ethical, Emotional, and Social Intelligence

4. AI in Smart Healthcare: Based on Functionalities

- Reactive Machines.

- Limited Memory.

- Theory of Mind.

- Self-Aware Systems.

4.1. Reactive Machines

4.1.1. Structure and Operation

4.1.2. Applications in Smart Healthcare

- ICU Alarm Systems: These systems detect abnormal parameters in patient vitals, such as heart rate or oxygen saturation, and trigger alerts. They follow pre-set thresholds and act instantaneously without learning from past cases [76].

- Early Expert Systems: Tools like MYCIN (for infectious diseases) and Internist-I (for internal medicine) are classic examples of reactive systems in medicine. These systems used if–then logic to provide diagnostic suggestions and therapeutic options [77].

- Medical Device Automation: Many medical machines like infusion pumps, ventilators, and defibrillators rely on reactive logic to function safely in real time without adapting from previous data [78].
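The defining property of the reactive systems listed above is that each input is judged against pre-set rules and no state is carried between inputs. The sketch below illustrates this with a fixed-threshold vitals check; the function name and the numeric limits are illustrative assumptions, not clinical guidance and not values taken from the source.

```python
# Illustrative limits only; not clinical guidance and not taken from the review.
THRESHOLDS = {
    "heart_rate": (40, 130),  # beats per minute
    "spo2": (90, 100),        # oxygen saturation, percent
}


def check_vitals(reading):
    """Return the names of vitals outside their fixed range.

    The function keeps no state between calls, so identical readings
    always produce identical alerts, regardless of patient history.
    """
    alerts = []
    for name, (low, high) in THRESHOLDS.items():
        value = reading.get(name)
        if value is not None and not (low <= value <= high):
            alerts.append(name)
    return alerts


print(check_vitals({"heart_rate": 150, "spo2": 95}))
```

Because the rule set is static, behavior is easy to audit, which is one reason this style persists in safety-critical devices.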

4.1.3. Value in Healthcare

4.2. Limited Memory Systems

4.2.1. Architecture

4.2.2. Applications in Smart Healthcare

- Medical Imaging: AI models based on deep CNNs are widely used for detecting tumors, lesions, and organ anomalies in radiographic images. Ref. [82] showed how hybrid CNN-based systems accurately predicted breast cancer metastasis from mammograms and metadata.

- Risk Stratification: ML models trained on electronic health records (EHRs) can predict hospital readmission, mortality, or sepsis development. These models consider past diagnoses, medications, and lab results to generate risk scores [53].

- Wearable Monitoring and Remote Sensing: Devices like Fitbit, Apple Watch, or specialized ECG patches use AI to monitor physiological signals such as heart rate, sleep cycles, or respiratory rate. These tools analyze patterns over time and alert users or providers about concerning trends [83].

- Digital Mental Health Tools: Chatbots such as Woebot and Wysa employ session-based memory to deliver tailored psychological interventions. They remember user inputs during a session to provide context-aware dialogue and offer real-time cognitive behavioral therapy [34].

4.2.3. Functional Characteristics

- Utilizes stored data for prediction.

- Requires retraining for model updates.

- Supports short-term memory within fixed boundaries.

- Does not generalize across tasks.
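The characteristic "short-term memory within fixed boundaries" can be illustrated with a bounded window of recent readings. The sketch below is an assumption-laden toy, not a description of any product named above: the class name `TrendMonitor`, the window size, and the `max_rise` rule are all hypothetical.

```python
from collections import deque


class TrendMonitor:
    """Keeps only the last `window` readings: a bounded short-term memory."""

    def __init__(self, window=5, max_rise=15.0):
        self.readings = deque(maxlen=window)  # older readings are dropped automatically
        self.max_rise = max_rise

    def update(self, value):
        """Store the reading; return True if it exceeds the window mean by more than max_rise."""
        alert = False
        if len(self.readings) == self.readings.maxlen:
            baseline = sum(self.readings) / len(self.readings)
            alert = value - baseline > self.max_rise
        self.readings.append(value)
        return alert


monitor = TrendMonitor(window=3, max_rise=10.0)
results = [monitor.update(v) for v in [70, 72, 71, 73, 90]]
print(results)
```

Unlike a purely reactive threshold, the alert here depends on recent history, yet nothing outside the window is remembered and the rule itself never changes without an explicit update.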

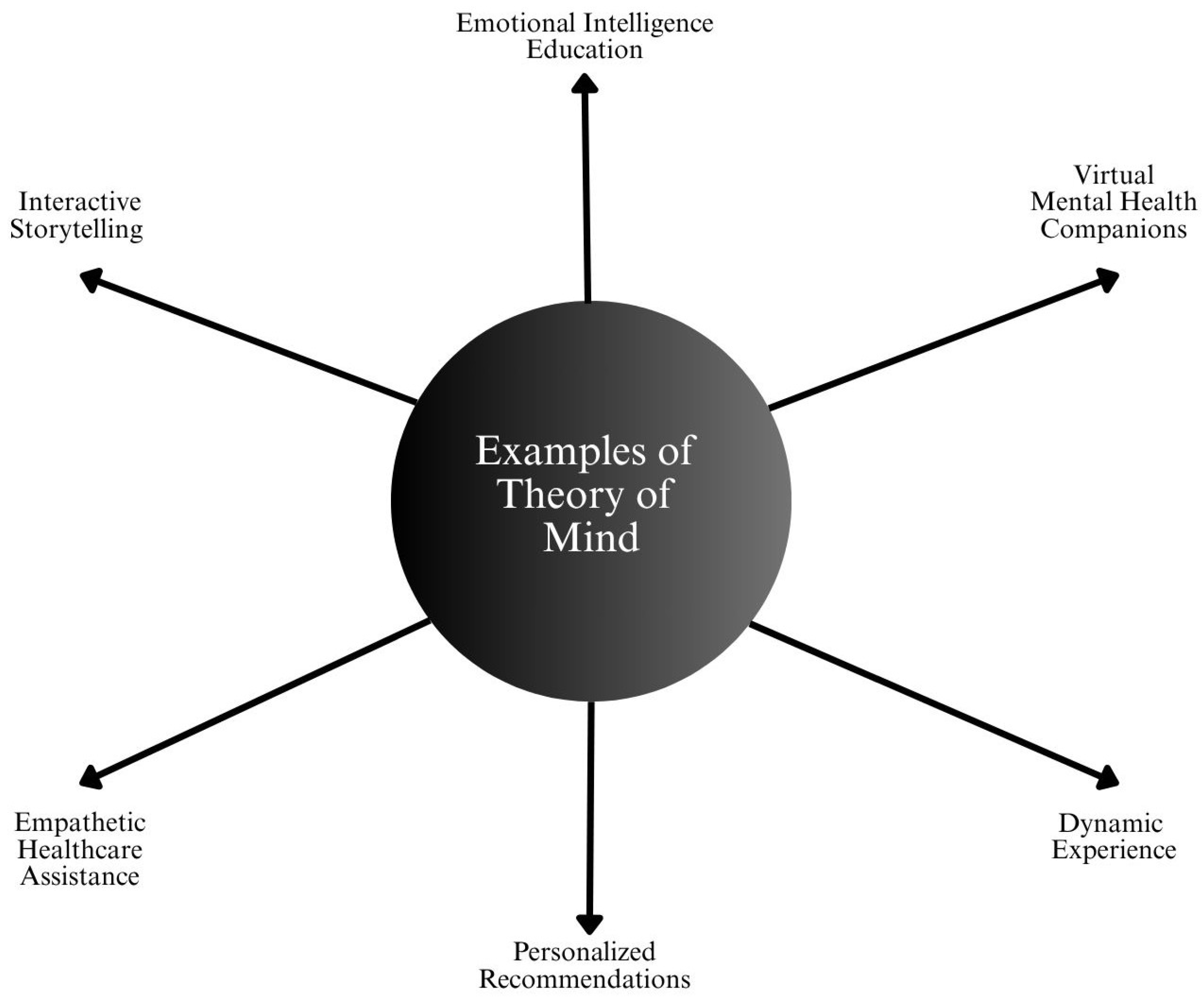

4.3. Theory of Mind Systems

4.3.1. Operational Features

- Infers user intent beyond text or data.

- Understands affective states and behavioral context.

- Adjusts responses based on the perceived emotional or cognitive status of the user.

4.3.2. Healthcare Applications

- Empathy-Aware Mental Health Tools: AI chatbots enhanced with emotion recognition capabilities can detect user tone, sentiment, or emotional distress. The EmpatheticDialogues dataset and systems trained on it are being explored for empathetic response generation [92].

- Conversational AI in Counseling: Advanced NLP systems are being adapted for therapy bots that can adjust interaction styles based on patient emotional feedback. Ref. [34] reported that users prefer bots that demonstrate empathy, mirroring basic Theory of Mind behavior.

- Pediatric and Geriatric Care Assistants: In environments where patients may be non-verbal or cognitively impaired, AI systems using facial expression and speech pattern recognition can infer emotional or physical discomfort [92].

- Clinical Communication Support: Systems are being designed to assist doctors in delivering complex or sensitive information, with AI suggesting language modifications based on the patient’s comprehension level and psychological state [93].

4.3.3. Multimodal Fusion for ToM

- Text (conversation).

- Audio (tone, pitch, emotion).

- Visual (facial expression, body language).

- Contextual data (history, environment).
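One simple way to combine the four streams above is late fusion: each modality produces its own score, and the scores are merged by a weighted average. The sketch below assumes this approach purely for illustration; the source does not specify a fusion method, and the function name, weights, and the notion of a single "distress score" per modality are hypothetical.

```python
def fuse_scores(scores, weights):
    """Weighted average of the per-modality scores that are present.

    Modalities that are missing or None are skipped, and the remaining
    weights are renormalized so the result stays on the same scale.
    """
    total = 0.0
    weight_sum = 0.0
    for modality, weight in weights.items():
        score = scores.get(modality)
        if score is None:
            continue
        total += weight * score
        weight_sum += weight
    if weight_sum == 0:
        raise ValueError("no modality scores available")
    return total / weight_sum


# Hypothetical weights for text, audio, visual, and contextual signals.
WEIGHTS = {"text": 0.4, "audio": 0.3, "visual": 0.2, "context": 0.1}

full = fuse_scores({"text": 0.8, "audio": 0.6, "visual": 0.4, "context": 0.2}, WEIGHTS)
partial = fuse_scores({"text": 0.8, "audio": 0.6}, WEIGHTS)
print(round(full, 3), round(partial, 3))
```

Renormalizing over available modalities matters in practice, since a camera or microphone stream is often absent.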

4.4. Self-Aware Systems

4.4.1. Emerging Concepts in Healthcare Systems

- Explainable AI (XAI): Systems that provide rationales for their decisions, particularly in medical imaging or diagnosis. Saliency maps in CNNs highlight which part of an X-ray image influenced the model’s decision, an early form of self-reflective behavior [60].

- Uncertainty Estimation: AI models that can indicate when they are not confident in a prediction simulate a rudimentary form of introspection [95].

- Adaptive Clinical Learning Systems: Systems that monitor their own performance across populations and suggest retraining or flag anomalous data points embody limited aspects of metacognition [96].
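The uncertainty estimation idea above can be sketched with predictive entropy: when the class probabilities are close to uniform, the model defers to a human instead of committing to a label. This is one common heuristic, assumed here for illustration; the function names, the 0.5-bit cutoff, and the labels are hypothetical and not taken from the cited work.

```python
import math


def predictive_entropy(probs):
    """Shannon entropy (in bits) of a class-probability vector."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def decide(probs, labels, max_entropy=0.5):
    """Return the most probable label, or defer when entropy exceeds the cutoff."""
    if predictive_entropy(probs) > max_entropy:
        return "defer_to_clinician"
    best = max(range(len(probs)), key=lambda i: probs[i])
    return labels[best]


LABELS = ["benign", "malignant"]
print(decide([0.97, 0.03], LABELS))
print(decide([0.55, 0.45], LABELS))
```

Entropy only reflects how spread out the predicted probabilities are; a poorly calibrated model can still be confidently wrong, so this check complements rather than replaces validation.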

4.4.2. Application in Risk Management

4.4.3. Therapeutic Identity in Mental Health AI

5. Synthesis: Capabilities vs. Functionalities

- Narrow AI + Limited Memory.

- AGI + Theory of Mind.

- Superintelligent AI + Self-Awareness.

5.1. Narrow AI + Limited Memory: The Operational Backbone of Today’s Smart Healthcare

5.1.1. Current Use in Smart Healthcare

- Clinical Decision Support Systems (CDSS): Tools that provide physicians with evidence-based suggestions for diagnosis and treatment based on structured data from EHRs [98].

- Medical Imaging: CNN-based models trained to detect abnormalities such as tumors, fractures, or nodules from CT, MRI, and X-ray images [99].

- Predictive Analytics: Algorithms that forecast risks of readmission, sepsis, or treatment complications using past patient data [100].

- Mental Health Chatbots: Tools like Wysa and Woebot use session-based memory and NLP to offer CBT and mood tracking [101].

5.1.2. Value Proposition

- High accuracy within specialized domains.

- Trustworthy through auditability and static behavior.

- Relatively low risk in deployment due to limited autonomy.

5.2. General AI + Theory of Mind: The Emerging Horizon of Adaptive, Empathetic Intelligence

5.2.1. Current Use in Smart Healthcare

- Large Language Models (LLMs): Systems like Med-PaLM and GatorTron exhibit early-stage general reasoning capabilities across diverse clinical queries [102].

- Multimodal AI Models: Research is underway to integrate imaging, EHR data, genomic profiles, and behavioral metrics into unified decision-making tools [103].

- Affective Computing: Emotion-aware chatbots and assistive robots that respond to user tone and sentiment are early steps toward ToM in AI [104].

- Contextual Care Tools: Systems designed to adapt communication style depending on whether the user is a clinician, caregiver, or patient [53].

5.2.2. Functionality and Potential

- Handle unstructured and multimodal data.

- Understand the mental state and intent of the user.

- Adjust behavior based on empathy, cultural awareness, and situational context.

5.2.3. Representative Clinical Use Cases for AGI in Smart Healthcare

5.3. Superintelligent AI + Self-Awareness: A Theoretical Apex of Cognitive and Ethical Complexity

5.3.1. Current Use in Smart Healthcare

- Explainable AI (XAI): Systems that rationalize their own decisions (e.g., saliency maps and attention mechanisms) [109].

- Uncertainty Quantification: AI models that indicate the degree of confidence in their predictions, enabling human oversight [95].

- Self-Monitoring Agents: Systems capable of logging their performance, flagging anomalies, and recommending updates [110].

5.3.2. Conceptual Role in Smart Healthcare

- Independently conduct medical research and discover treatments.

- Run entire healthcare ecosystems autonomously.

- Resolve ethical dilemmas by weighing societal impact, cultural norms, and individual patient values.

- Provide lifelong, personalized care surpassing human limitations in cognition and availability.

5.4. Comparative Framework: Bridging Capabilities and Functionalities

Key Insights from the Synthesis

- Most Deployed Systems Reside in the Narrow AI + Limited Memory Quadrant: These systems dominate because they are practical, validated, and easier to regulate, making them ideal for tasks like diagnostics and workflow automation [105].

- Emerging Research Aligns with the AGI + Theory of Mind Paradigm: There is growing momentum toward creating emotionally intelligent and context-aware systems. While these models show promise, they require significant advancement in natural language understanding, multimodal processing, and interoperability [113].

- Superintelligent + Self-Aware Systems Serve as a Theoretical Boundary: This quadrant is valuable for philosophical, ethical, and governance considerations, guiding the development of safeguards and frameworks even before such systems exist [114].

6. Challenges and Considerations in AI-Driven Smart Healthcare

6.1. Bias and Fairness

6.1.1. Sources of Bias

- Training Data Bias: When training datasets are skewed toward specific populations (e.g., white, male, and urban patients), AI models may underperform for marginalized communities. Dermatology AI trained on light-skinned images may fail to detect skin cancer in patients with darker skin tones [115].

- Labeling Bias: If clinical labels are assigned inconsistently by different practitioners, especially in subjective diagnoses (e.g., mental health and pain levels), AI systems may learn incorrect or misleading associations [116].

- Deployment Bias: Once deployed, AI tools may exacerbate disparities if they are more accessible to high-income or tech-savvy populations, leaving others underserved [117].
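Training data bias of the kind described above often shows up as a gap in sensitivity between patient groups. The sketch below computes the true-positive rate per group on a tiny synthetic dataset; the function name, group labels, and data are hypothetical and are not results from any cited study.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute the true-positive rate per group.

    records: iterable of (group, y_true, y_pred), where 1 means disease present.
    Groups with no positive cases are omitted from the result.
    """
    positives = defaultdict(int)
    detected = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] += 1
            if y_pred == 1:
                detected[group] += 1
    return {group: detected[group] / positives[group] for group in positives}


# Synthetic data for illustration only.
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 1),
]
rates = sensitivity_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

Reporting the per-group rates alongside the overall figure makes an underperforming subgroup visible even when aggregate accuracy looks acceptable.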

6.1.2. Impact on Healthcare Equity

- Misdiagnosis or missed diagnosis in minority populations.

- Allocation of resources skewed toward majority groups.

- Worsening of health disparities despite the promise of AI to reduce them.

6.2. Interpretability and Trust

6.2.1. The “Black Box” Problem

- AI recommendations contradict clinical judgment.

- There are legal or ethical consequences for incorrect predictions.

- The user cannot justify an AI-driven diagnosis or treatment to the patient.

6.2.2. Clinical Implications

- Delayed adoption of effective tools.

- Overreliance on AI without appropriate oversight.

- Resistance from clinicians due to lack of confidence.

6.3. Regulatory Complexity and Oversight

6.3.1. Capability-Specific Regulation

- Narrow AI systems (e.g., imaging classifiers) can be regulated similarly to traditional medical devices through validation, accuracy thresholds, and risk assessments [129].

- AGI models (e.g., foundation models for diagnosis) require broader guidelines, especially for ethical alignment, training data provenance, and cross-context generalizability [130].

- Autonomous AI systems, as envisioned in superintelligence or advanced self-awareness, challenge current regulatory paradigms entirely and call for international coordination and ethical governance [131].

6.3.2. Current Regulatory Bodies and Guidelines

- The FDA (U.S.) has begun to regulate AI/ML-based Software as a Medical Device (SaMD), requiring manufacturers to provide evidence of performance, safety, and effectiveness [132].

- The European Union’s AI Act classifies healthcare AI as “high-risk”, mandating transparency, human oversight, and post-market monitoring [133].

- Global efforts, such as the WHO’s guidance on AI ethics in healthcare, are emerging to set universal standards [134].

6.4. Data Security and Privacy

6.4.1. Risks Involved

- Data breaches can lead to the exposure of personal health information (PHI), with legal and ethical consequences [135].

- Re-identification attacks may occur when anonymized datasets are matched with external data sources [136].

- Unauthorized model inference could allow third parties to extract sensitive information from AI systems, especially generative models [137].
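Re-identification risk, as described above, rises when a combination of seemingly harmless attributes is unique to one person. A basic screen is a k-anonymity check: flag any record whose quasi-identifier combination is shared by fewer than k records. The sketch below assumes this check for illustration; the function name, field names, and rows are hypothetical synthetic data.

```python
from collections import Counter


def risky_records(rows, quasi_identifiers, k=2):
    """Return rows whose quasi-identifier combination appears in fewer than k rows."""

    def key(row):
        return tuple(row[q] for q in quasi_identifiers)

    sizes = Counter(key(row) for row in rows)
    return [row for row in rows if sizes[key(row)] < k]


# Synthetic rows for illustration only.
rows = [
    {"zip3": "021", "birth_year": 1980, "sex": "F"},
    {"zip3": "021", "birth_year": 1980, "sex": "F"},
    {"zip3": "945", "birth_year": 1952, "sex": "M"},
]
flagged = risky_records(rows, ["zip3", "birth_year", "sex"], k=2)
print(flagged)
```

Passing this check does not guarantee safety against linkage with external data; it only removes the most obvious unique combinations.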

6.4.2. Increasing Risk with Advancing AI

- Advanced models may memorize training data, especially if not properly regularized.

- Cloud-based AI platforms introduce vulnerabilities in data storage and access.

- Cross-institutional models, such as federated learning, while designed for privacy, still pose metadata leakage risks.
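The federated learning point above can be made concrete with the aggregation step of federated averaging (FedAvg), in which a coordinator combines model weights from each site in proportion to local sample counts. This is a minimal sketch of that standard step, not the method of any system cited in the review; the function name and the two-hospital example are hypothetical.

```python
def federated_average(client_updates):
    """Sample-weighted mean of client model weights (FedAvg aggregation step).

    client_updates: list of (weights, n_samples), where weights are
    equal-length lists of floats. Raw patient data never appears here,
    but each site's sample count does.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no samples reported")
    size = len(client_updates[0][0])
    averaged = [0.0] * size
    for weights, n in client_updates:
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


# Hypothetical updates from two hospitals.
updates = [([1.0, 2.0], 100), ([3.0, 6.0], 300)]
global_weights = federated_average(updates)
print(global_weights)
```

Note that the coordinator still receives per-site sample counts and weight vectors, which is one route by which metadata can leak even though records stay local.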

6.4.3. Regional Feasibility of AGI Development: The Case of Korea

- Establishing AI-specific ethical data governance frameworks.

- Encouraging privacy-preserving data sharing across medical institutions.

- Aligning domestic laws with global AI policy efforts to enable international collaboration.

6.5. Cross-Disciplinary Insights into Explainability, Fairness, and Robustness

- Applying financial XAI techniques to enhance clinical interpretability and shared decision-making.

- Adapting legal fairness audits for demographic bias tracking in clinical trials and AI validation datasets.

- Utilizing robustness tools from autonomous systems to manage uncertainty and atypical patient cases.

6.6. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Liu, Z.; Si, L.; Shi, S.; Li, J.; Zhu, J.; Lee, W.H.; Lo, S.L.; Yan, X.; Chen, B.; Fu, F.; et al. Classification of three anesthesia stages based on near-infrared spectroscopy signals. IEEE J. Biomed. Health Inform. 2024, 28, 5270–5279.

- Ma, N.; Fang, X.; Zhang, Y.; Xing, B.; Duan, L.; Lu, J.; Han, B.; Ma, D. Enhancing the sensitivity of spin-exchange relaxation-free magnetometers using phase-modulated pump light with external Gaussian noise. Opt. Express 2024, 32, 33378–33390.

- Long, T.; Song, X.; Han, B.; Suo, Y.; Jia, L. In Situ Magnetic Field Compensation Method for Optically Pumped Magnetometers Under Three-Axis Nonorthogonality. IEEE Trans. Instrum. Meas. 2023, 73, 9502112.

- He, W.; Zhu, J.; Feng, Y.; Liang, F.; You, K.; Chai, H.; Sui, Z.; Hao, H.; Li, G.; Zhao, J.; et al. Neuromorphic-enabled video-activated cell sorting. Nat. Commun. 2024, 15, 10792.

- Liu, B.; Du, H.; Zhang, J.; Jiang, J.; Zhang, X.; He, F.; Niu, B. Developing a new sepsis screening tool based on lymphocyte count, international normalized ratio and procalcitonin (LIP score). Sci. Rep. 2022, 12, 20002.

- Ding, Z.; Zhang, L.; Zhang, Y.; Yang, J.; Luo, Y.; Ge, M.; Yao, W.; Hei, Z.; Chen, C. A Supervised Explainable Machine Learning Model for Perioperative Neurocognitive Disorder in Liver-Transplantation Patients and External Validation on the Medical Information Mart for Intensive Care IV Database: Retrospective Study. J. Med. Internet Res. 2025, 27, e55046.

- Li, J.; Li, J.; Wang, C.; Verbeek, F.J.; Schultz, T.; Liu, H. Outlier detection using iterative adaptive mini-minimum spanning tree generation with applications on medical data. Front. Physiol. 2023, 14, 1233341.

- Li, H.; Wang, Z.; Guan, Z.; Miao, J.; Li, W.; Yu, P.; Jimenez, C.M. UCFNNet: Ulcerative colitis evaluation based on fine-grained lesion learner and noise suppression gating. Comput. Methods Programs Biomed. 2024, 247, 108080.

- Liu, J.; Wang, X.; Ye, X.; Chen, D. Improved health outcomes of nasopharyngeal carcinoma patients 3 years after treatment by the AI-assisted home enteral nutrition management. Front. Nutr. 2025, 11, 1481073.

- Bajwa, J.; Munir, U.; Nori, A.; Williams, B. Artificial Intelligence in Healthcare: Transforming the Practice of Medicine. Future Healthc. J. 2021, 8, e188–e194.

- Olawade, D.B.; David-Olawade, A.C.; Wada, O.Z.; Asaolu, A.J.; Adereni, T.; Ling, J. Artificial Intelligence in Healthcare Delivery: Prospects and Pitfalls. J. Med. Surg. Public Health 2024, 3, 100108.

- Gao, X.; He, P.; Zhou, Y.; Qin, X. Artificial Intelligence Applications in Smart Healthcare: A Survey. Future Internet 2024, 16, 308.

- Li, C.; Wang, H.; Wen, Y.; Yin, R.; Zeng, X.; Li, K. GenoM7GNet: An Efficient N7-Methylguanosine Site Prediction Approach Based on a Nucleotide Language Model. IEEE/ACM Trans. Comput. Biol. Bioinform. 2024, 21, 2258–2268.

- Wang, Y.; Shen, Y.; Li, J.; Wang, T.; Peng, J.; Shang, X. Enhanced RNA secondary structure prediction through integrative deep learning and structural context analysis. Nucleic Acids Res. 2025, 53, gkaf533.

- Liang, J.; Chen, L.; Li, Y.; Chen, Y.; Yuan, L.; Qiu, Y.; Ma, S.; Fan, F.; Cheng, Y. Unraveling the prefrontal cortex-basolateral amygdala pathway’s role on schizophrenia’s cognitive impairments: A multimodal study in patients and mouse models. Schizophr. Bull. 2024, 50, 913–923.

- Luo, F.; Liu, L.; Guo, M.; Liang, J.; Chen, L.; Shi, X.; Liu, H.; Cheng, Y.; Du, Y. Deciphering and targeting the ESR2–miR-10a-5p–BDNF Axis in the Prefrontal cortex: Advancing Postpartum Depression understanding and therapeutics. Research 2024, 7, 0537.

- Lou, Y.; Cheng, M.; Cao, Q.; Li, K.; Qin, H.; Bao, M.; Zhang, Y.; Lin, S.; Zhang, Y. Simultaneous quantification of mirabegron and vibegron in human plasma by HPLC-MS/MS and its application in the clinical determination in patients with tumors associated with overactive bladder. J. Pharm. Biomed. Anal. 2024, 240, 115937.

- Tian, J.; Zhou, Y.; Yin, L.; AlQahtani, S.A.; Tang, M.; Lu, S.; Wang, R.; Zheng, W. Control Structures and Algorithms for Force Feedback Bilateral Teleoperation Systems: A Comprehensive Review. Comput. Model. Eng. Sci. (CMES) 2025, 142, 973.

- Hu, F.; Yang, H.; Qiu, L.; Wei, S.; Hu, H.; Zhou, H. Spatial structure and organization of the medical device industry urban network in China: Evidence from specialized, refined, distinctive, and innovative firms. Front. Public Health 2025, 13, 1518327.

- Awasthi, R.; Ramachandran, S.P.; Mishra, S.; Mahapatra, D.; Arshad, H.; Atreja, A.; Mathur, P. Artificial Intelligence in Healthcare: 2024 Year in Review. medRxiv 2025, preprint.

- De Micco, F.; Di Palma, G.; Ferorelli, D.; De Benedictis, A.; Tomassini, L.; Tambone, V.; Scendoni, R. Artificial Intelligence in Healthcare: Transforming Patient Safety with Intelligent Systems—A Systematic Review. Front. Med. 2025, 11, 1522554.

- Abbas, Z.; Rehman, M.U.; Tayara, H.; Chong, K.T. ORI-Explorer: A unified cell-specific tool for origin of replication sites prediction by feature fusion. Bioinformatics 2023, 39, btad664.

- Rajpurkar, P.; Irvin, J.; Ball, R.L.; Zhu, K.; Yang, B.; Mehta, H.; Lungren, M.P. Deep Learning for Chest Radiograph Diagnosis: A Retrospective Comparison of the CheXNeXt Algorithm to Practicing Radiologists. PLoS Med. 2018, 15, e1002686.

- Martiniussen, M.A.; Larsen, M.; Hovda, T.; Kristiansen, M.U.; Dahl, F.A.; Eikvil, L.; Hofvind, S. Performance of Two Deep Learning-based AI Models for Breast Cancer Detection and Localization on Screening Mammograms from BreastScreen Norway. Radiol. Artif. Intell. 2025, 7, e240039.

- Zaidi, S.A.J.; Ghafoor, A.; Kim, J.; Abbas, Z.; Lee, S.W. HeartEnsembleNet: An innovative hybrid ensemble learning approach for cardiovascular risk prediction. Healthcare 2025, 13, 507.

- Aggarwal, R.; Sounderajah, V.; Martin, G.; Ting, D.S.; Karthikesalingam, A.; King, D.; Darzi, A. Diagnostic Accuracy of Deep Learning in Medical Imaging: A Systematic Review and Meta-analysis. NPJ Digit. Med. 2021, 4, 65.

- Park, J.; Lim, S. Federated AI Pilots in Korean Hospitals: Opportunities and System Integration Barriers. J. Digit. Health Syst. 2022, 4, 120–132.

- Buess, L.; Keicher, M.; Navab, N.; Maier, A.; Arasteh, S.T. From Large Language Models to Multimodal AI: A Scoping Review on the Potential of Generative AI in Medicine. arXiv 2025, arXiv:2502.09242.

- Johnson, K.B.; Wei, W.Q.; Weeraratne, D.; Frisse, M.E.; Misulis, K.; Rhee, K.; Snowdon, J.L. Precision Medicine, AI, and the Future of Personalized Health Care. Clin. Transl. Sci. 2021, 14, 86–93.

- Ghebrehiwet, I.; Zaki, N.; Damseh, R.; Mohamad, M.S. Revolutionizing Personalized Medicine with Generative AI: A Systematic Review. Artif. Intell. Rev. 2024, 57, 128.

- Li, Y.H.; Li, Y.L.; Wei, M.Y.; Li, G.Y. Innovation and Challenges of Artificial Intelligence Technology in Personalized Healthcare. Sci. Rep. 2024, 14, 18994.

- Cuzzolin, F.; Morelli, A.; Cirstea, B.; Sahakian, B.J. Knowing Me, Knowing You: Theory of Mind in AI. Psychol. Med. 2020, 50, 1057–1061.

- Costa, A.I.L.D.; Barros, L.; Diogo, P. Emotional Labor in Pediatric Palliative Care: A Scoping Review. Nurs. Rep. 2025, 15, 118.

- Adams, C.; Lee, J.; Thomas, R. Conversational AI in Mental Health: From Symptom Checkers to Therapeutic Agents. J. Digit. Health 2022, 8, 211–222.

- Teo, Z.L.; Jin, L.; Liu, N.; Li, S.; Miao, D.; Zhang, X.; Ting, D.S.W. Federated Machine Learning in Healthcare: A Systematic Review on Clinical Applications and Technical Architecture. Cell Rep. Med. 2024, 5, 101419.

- Abbas, S.R.; Abbas, Z.; Zahir, A.; Lee, S.W. Federated Learning in Smart Healthcare: A Comprehensive Review on Privacy, Security, and Predictive Analytics with IoT Integration. Healthcare 2024, 12, 2587.

- Adusumilli, S.; Damancharla, H.; Metta, A. Enhancing Data Privacy in Healthcare Systems Using Blockchain Technology. Trans. Latest Trends Artif. Intell. 2023, 4.

- Holzinger, A.; Biemann, C.; Pattichis, C.S.; Kell, D.B. What do we need to build explainable AI systems for the medical domain? arXiv 2017, arXiv:1712.09923.

- Goktas, P.; Grzybowski, A. Shaping the future of healthcare: Ethical clinical challenges and pathways to trustworthy AI. J. Clin. Med. 2025, 14, 1605.

- Babu, M.V.S.; Banana, K.R.I.S.H.N.A. A Study on Narrow Artificial Intelligence—An Overview. Int. J. Eng. Sci. Adv. Technol. 2024, 24, 210–219.

- Walker, L. Societal Implications of Artificial Intelligence: A Comparison of Use and Impact of Artificial Narrow Intelligence in Patient Care Between Resource-Rich and Resource-Poor Regions and Suggested Policies to Counter the Growing Public Health Gap. Ph.D. Thesis, Technische Universität Wien, Vienna, Austria, 2024.

- Kuusi, O.; Heinonen, S. Scenarios from Artificial Narrow Intelligence to Artificial General Intelligence—Reviewing the Results of the International Work/Technology 2050 Study. World Futur. Rev. 2022, 14, 65–79.

- Schlegel, D.; Uenal, Y. A Perceived Risk Perspective on Narrow Artificial Intelligence. In Proceedings of the Pacific Asia Conference on Information Systems (PACIS), Dubai, United Arab Emirates, 20–24 June 2021; p. 44.

- Kim, H.; Yoon, J. The Impact of Korea’s Personal Information Protection Act (PIPA) on Health Data Sharing: Legal and Ethical Considerations. J. Korean Med. Law 2022, 29, 25–38.

- Ahn, S.; Park, J. Current Status and Prospects of Artificial Intelligence Utilization in Korea’s Medical Field. Healthc. Inform. Res. 2023, 29, 101–113.

- Baidar Bakht, A.; Javed, S.; Gilani, S.Q.; Karki, H.; Muneeb, M.; Werghi, N. Deepbls: Deep feature-based broad learning system for tissue phenotyping in colorectal cancer wsis. J. Digit. Imaging 2023, 36, 1653–1662.

- Rehman, M.U.; Akhtar, S.; Zakwan, M.; Mahmood, M.H. Novel architecture with selected feature vector for effective classification of mitotic and non-mitotic cells in breast cancer histology images. Biomed. Signal Process. Control 2022, 71, 103212.

- Bakht, A.B.; Javed, S.; AlMarzouqi, H.; Khandoker, A.; Werghi, N. Colorectal cancer tissue classification using semi-supervised hypergraph convolutional network. In Proceedings of the 2021 IEEE 18th International Symposium on Biomedical Imaging (ISBI), Nice, France, 13–16 April 2021; pp. 1306–1309.

- Bakht, A.B.; Javed, S.; Dina, R.; Almarzouqi, H.; Khandoker, A.; Werghi, N. Thyroid nodule cell classification in cytology images using transfer learning approach. In Proceedings of the 12th International Conference on Soft Computing and Pattern Recognition (SoCPaR 2020), Online, India, 15–18 December 2020; Springer: Cham, Switzerland, 2021; pp. 539–549.

- Sunarti, S.; Rahman, F.F.; Naufal, M.; Risky, M.; Febriyanto, K.; Masnina, R. Artificial Intelligence in Healthcare: Opportunities and Risk for Future. Gac. Sanit. 2021, 35, S67–S70.

- Kumar, R. Artificial Intelligence: The Future of Healthcare. Medicon Med. Sci. 2024, 6, 1–6.

- Knevel, R.; Liao, K.P. From Real-World Electronic Health Record Data to Real-World Results Using Artificial Intelligence. Ann. Rheum. Dis. 2023, 82, 306–311.

- Lee, E.E.; Torous, J.; Choudhury, M.D.; Depp, C.A.; Graham, S.A.; Kim, H.C.; Jeste, D.V. Artificial Intelligence for Mental Health Care: Clinical Applications, Barriers, Facilitators, and Artificial Wisdom. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 2021, 6, 856–864.

- Denecke, K.; Abd-Alrazaq, A.; Househ, M. Artificial Intelligence for Chatbots in Mental Health: Opportunities and Challenges. In Multiple Perspectives on Artificial Intelligence in Healthcare: Opportunities and Challenges; Springer: Berlin/Heidelberg, Germany, 2021; pp. 115–128.

- Junaid, S.B.; Imam, A.A.; Abdulkarim, M.; Surakat, Y.A.; Balogun, A.O.; Kumar, G.; Hashim, A.S. Recent Advances in Artificial Intelligence and Wearable Sensors in Healthcare Delivery. Appl. Sci. 2022, 12, 10271.

- Xie, Y.; Lu, L.; Gao, F.; He, S.J.; Zhao, H.J.; Fang, Y.; Dong, Z. Integration of Artificial Intelligence, Blockchain, and Wearable Technology for Chronic Disease Management: A New Paradigm in Smart Healthcare. Curr. Med. Sci. 2021, 41, 1123–1133.

- Tyagi, N.; Bhushan, B. Natural Language Processing (NLP) Based Innovations for Smart Healthcare Applications in Healthcare 4.0. In Enabling Technologies for Effective Planning and Management in Sustainable Smart Cities; Springer: Berlin/Heidelberg, Germany, 2023; pp. 123–150.

- Zhou, B.; Yang, G.; Shi, Z.; Ma, S. Natural Language Processing for Smart Healthcare. IEEE Rev. Biomed. Eng. 2022, 17, 4–18.

- Badawy, M. Integrating Artificial Intelligence and Big Data into Smart Healthcare Systems: A Comprehensive Review of Current Practices and Future Directions. In Artificial Intelligence Evolution; Springer: Berlin/Heidelberg, Germany, 2023; pp. 133–153.

- Karine, K.; Marlin, B. Using LLMs to Improve RL Policies in Personalized Health Adaptive Interventions. In Proceedings of the Second Workshop on Patient-Oriented Language Processing (CL4Health), Albuquerque, NM, USA, 3–4 May 2025; pp. 137–147.

- Kumar, M.V.; Ramesh, G.P. Smart IoT Based Health Care Environment for an Effective Information Sharing Using Resource Constraint LLM Models. J. Smart Internet Things (JSIoT) 2024, 2024, 133–147. [Google Scholar] [CrossRef]

- Zhou, Z.; Asghar, M.A.; Nazir, D.; Siddique, K.; Shorfuzzaman, M.; Mehmood, R.M. An AI-Empowered Affect Recognition Model for Healthcare and Emotional Well-Being Using Physiological Signals. Clust. Comput. 2023, 26, 1253–1266. [Google Scholar] [CrossRef] [PubMed]

- Ali, H. Reinforcement Learning in Healthcare: Optimizing Treatment Strategies, Dynamic Resource Allocation, and Adaptive Clinical Decision-Making. Int. J. Comput. Appl. Technol. Res. 2022, 11, 88–104. [Google Scholar]

- Neumann, W.J.; Gilron, R.; Little, S.; Tinkhauser, G. Adaptive Deep Brain Stimulation: From Experimental Evidence Toward Practical Implementation. Mov. Disord. 2023, 38, 937–948. [Google Scholar] [CrossRef]

- Elango, S.; Manjunath, L.; Prasad, D.; Sheela, T.; Ramachandran, G.; Selvaraju, S. Super Artificial Intelligence Medical Healthcare Services and Smart Wearable System Based on IoT for Remote Health Monitoring. In Proceedings of the 2023 5th International Conference on Smart Systems and Inventive Technology (ICSSIT), Tirunelveli, India, 23–25 January 2023; pp. 1180–1186. [Google Scholar] [CrossRef]

- Johnsen, M. Super AI. 2025. Available online: https://www.maria-johnsen.com/super-ai/ (accessed on 9 June 2025).

- Li, J.; Carayon, P. Health Care 4.0: A Vision for Smart and Connected Health Care. IISE Trans. Healthc. Syst. Eng. 2021, 11, 171–180. [Google Scholar] [CrossRef]

- Bostrom, N. Superintelligence: Paths, Dangers, Strategies; Oxford University Press: Oxford, UK, 2014. [Google Scholar]

- Peters, T. Cybertheology and the Ethical Dimensions of Artificial Superintelligence: A Theological Inquiry into Existential Risks. Khazanah Theol. 2024, 6, 1–12. [Google Scholar] [CrossRef]

- Morley, J.; Machado, C.C.; Burr, C.; Cowls, J.; Joshi, I.; Taddeo, M.; Floridi, L. The Ethics of AI in Health Care: A Mapping Review. Soc. Sci. Med. 2020, 260, 113172. [Google Scholar] [CrossRef]

- Søvik, A.O. What Overarching Ethical Principle Should a Superintelligent AI Follow? AI Soc. 2022, 37, 1505–1518. [Google Scholar] [CrossRef]

- Bickley, S.J.; Torgler, B. Cognitive Architectures for Artificial Intelligence Ethics. AI Soc. 2023, 38, 501–519. [Google Scholar] [CrossRef]

- Sukhobokov, A.; Belousov, E.; Gromozdov, D.; Zenger, A.; Popov, I. A Universal Knowledge Model and Cognitive Architectures for Prototyping AGI. Cogn. Syst. Res. 2024, 88, 101279. [Google Scholar] [CrossRef]

- Fiorotti, R.; Rocha, H.R.; Coutinho, C.R.; Rueda-Medina, A.C.; Nardoto, A.F.; Fardin, J.F. A Novel Strategy for Simultaneous Active/Reactive Power Design and Management Using Artificial Intelligence Techniques. Energy Convers. Manag. 2023, 294, 117565. [Google Scholar] [CrossRef]

- Manchana, R. AI-Powered Observability: A Journey from Reactive to Proactive, Predictive, and Automated. Int. J. Sci. Res. (IJSR) 2024, 13, 1745–1755. [Google Scholar] [CrossRef]

- González-Nóvoa, J.A.; Busto, L.; Rodríguez-Andina, J.J.; Fariña, J.; Segura, M.; Gómez, V.; Veiga, C. Using Explainable Machine Learning to Improve Intensive Care Unit Alarm Systems. Sensors 2021, 21, 7125. [Google Scholar] [CrossRef]

- Lourdusamy, R.; Gnanaprakasam, J. Expert Systems in AI. In Data Science with Semantic Technologies: Deployment and Exploration; Springer: Berlin/Heidelberg, Germany, 2023; p. 217. [Google Scholar]

- Zinchenko, V.; Chetverikov, S.; Akhmad, E.; Arzamasov, K.; Vladzymyrskyy, A.; Andreychenko, A.; Morozov, S. Changes in Software as a Medical Device Based on Artificial Intelligence Technologies. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1969–1977. [Google Scholar] [CrossRef]

- Mohammad, G.B.; Potluri, S.; Kumar, A.; P, D.; Tiwari, R.; Shrivastava, R.; Dekeba, K. An Artificial Intelligence-Based Reactive Health Care System for Emotion Detections. Comput. Intell. Neurosci. 2022, 2022, 8787023. [Google Scholar] [CrossRef]

- Wang, L.; Zhang, Y.; Wang, D.; Tong, X.; Liu, T.; Zhang, S.; Clarke, M. Artificial Intelligence for COVID-19: A Systematic Review. Front. Med. 2021, 8, 704256. [Google Scholar] [CrossRef]

- Panesar, A.; Panesar, H. Artificial Intelligence and Machine Learning in Global Healthcare. In Handbook of Global Health; Springer: Berlin/Heidelberg, Germany, 2021; pp. 1775–1813. [Google Scholar]

- Zhou, J.; Park, J.H.; Choi, Y. Hybrid Deep Learning Models for Breast Cancer Lymph Node Metastasis Prediction Using Mammograms and Clinical Metadata. Sci. Rep. 2022, 12, 3445. [Google Scholar]

- Shajari, S.; Kuruvinashetti, K.; Komeili, A.; Sundararaj, U. The Emergence of AI-Based Wearable Sensors for Digital Health Technology: A Review. Sensors 2023, 23, 9498. [Google Scholar] [CrossRef]

- Abbas, Z.; Rehman, M.U.; Tayara, H.; Lee, S.W.; Chong, K.T. m5C-Seq: Machine learning-enhanced profiling of RNA 5-methylcytosine modifications. Comput. Biol. Med. 2024, 182, 109087. [Google Scholar] [CrossRef] [PubMed]

- Staszak, M.; Staszak, K.; Wieszczycka, K.; Bajek, A.; Roszkowski, K.; Tylkowski, B. Machine Learning in Drug Design: Use of Artificial Intelligence to Explore the Chemical Structure–Biological Activity Relationship. Wiley Interdiscip. Rev. Comput. Mol. Sci. 2022, 12, e1568. [Google Scholar] [CrossRef]

- Kilari, S.D. Use Artificial Intelligence into Facility Design and Layout Planning Work in Manufacturing Facility. Eur. J. Artif. Intell. Mach. Learn. 2025, 4, 27–30. [Google Scholar] [CrossRef]

- Scaife, A. Making the Right Decision in Facility Management and Facility Operations With the Best Analysis: A Systematic Review of Artificial Intelligence in Facility Management. Ph.D. Thesis, University of Maryland University College, College Park, MD, USA, 2024. [Google Scholar]

- Zhou, Y.; Wang, F.; Tang, J.; Nussinov, R.; Cheng, F. Artificial intelligence in COVID-19 drug repurposing. Lancet Digit. Health 2020, 2, e667–e676. [Google Scholar] [CrossRef]

- Nguyen, T.; Le, H.; Quinn, T.P.; Nguyen, T.; Le, T.D.; Venkatesh, S. GraphDTA: Predicting drug–target binding affinity with graph neural networks. Bioinformatics 2021, 37, 1140–1147. [Google Scholar] [CrossRef]

- Rządeczka, M.; Sterna, A.; Stolińska, J.; Kaczyńska, P.; Moskalewicz, M. The Efficacy of Conversational AI in Rectifying the Theory-of-Mind and Autonomy Biases: Comparative Analysis. JMIR Ment. Health 2025, 12, e64396. [Google Scholar] [CrossRef]

- Garcia-Lopez, A. Theory of Mind in Artificial Intelligence Applications. In The Theory of Mind Under Scrutiny: Psychopathology, Neuroscience, Philosophy of Mind and Artificial Intelligence; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 723–750. [Google Scholar]

- Seitz, L. Artificial empathy in healthcare chatbots: Does it feel authentic? Comput. Hum. Behav. Artif. Humans 2024, 2, 100067. [Google Scholar] [CrossRef]

- Mannhardt, N.; Bondi-Kelly, E.; Lam, B.; Mozannar, H.; O’Connell, C.; Asiedu, M.; Sontag, D. Impact of large language model assistance on patients reading clinical notes: A mixed-methods study. arXiv 2024, arXiv:2401.09637. [Google Scholar]

- Lv, C.; Gu, Y.; Guo, Z.; Xu, Z.; Wu, Y.; Zhang, F.; Zheng, X. Towards Biologically Plausible Computing: A Comprehensive Comparison. arXiv 2024, arXiv:2406.16062. [Google Scholar]

- Ghoshal, B.; Tucker, A. Estimating uncertainty and interpretability in deep learning for coronavirus (COVID-19) detection. arXiv 2020, arXiv:2003.10769. [Google Scholar]

- Sendak, M.P.; Ratliff, W.; Sarro, D.; Alderton, E.; Futoma, J.; Gao, M.; O’Brien, C. Real-world integration of a sepsis deep learning technology into routine clinical care: Implementation study. JMIR Med. Inform. 2020, 8, e15182. [Google Scholar] [CrossRef] [PubMed]

- Ghandeharioun, A.; Shen, J.H.; Jaques, N.; Ferguson, C.; Jones, N.; Lapedriza, A.; Picard, R. Approximating interactive human evaluation with self-play for open-domain dialog systems. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2019; Volume 32. [Google Scholar]

- Mebrahtu, T.F.; Skyrme, S.; Randell, R.; Keenan, A.M.; Bloor, K.; Yang, H.; Thompson, C. Effects of computerised clinical decision support systems (CDSS) on nursing and allied health professional performance and patient outcomes: A systematic review of experimental and observational studies. BMJ Open 2021, 11, e053886. [Google Scholar] [CrossRef] [PubMed]

- Xiao, C.; Sun, J. Introduction to Deep Learning for Healthcare; Springer Nature: Cham, Switzerland, 2021. [Google Scholar]

- Al-Quraishi, T.; Al-Quraishi, N.; AlNabulsi, H.; Al-Qarishey, H.; Ali, A.H. Big data predictive analytics for personalized medicine: Perspectives and challenges. Appl. Data Sci. Anal. 2024, 2024, 32–38. [Google Scholar] [CrossRef]

- Sarkar, S.; Gaur, M.; Chen, L.K.; Garg, M.; Srivastava, B. A review of the explainability and safety of conversational agents for mental health to identify avenues for improvement. Front. Artif. Intell. 2023, 6, 1229805. [Google Scholar] [CrossRef]

- Yang, X.; Chen, A.; PourNejatian, N.; Shin, H.C.; Smith, K.E.; Parisien, C.; Wu, Y. GatorTron: A large clinical language model to unlock patient information from unstructured electronic health records. arXiv 2022, arXiv:2203.03540. [Google Scholar]

- AlSaad, R.; Abd-Alrazaq, A.; Boughorbel, S.; Ahmed, A.; Renault, M.A.; Damseh, R.; Sheikh, J. Multimodal large language models in health care: Applications, challenges, and future outlook. J. Med. Internet Res. 2024, 26, e59505. [Google Scholar] [CrossRef]

- Kossack, P.; Unger, H. Emotion-aware Chatbots: Understanding, Reacting and Adapting to Human Emotions in Text Conversations. In Proceedings of the International Conference on Autonomous Systems, Palermo, Italy, 17–19 October 2023; pp. 158–175. [Google Scholar]

- Bjerring, J.C.; Busch, J. Artificial intelligence and patient-centered decision-making. Philos. Technol. 2021, 34, 349–371. [Google Scholar] [CrossRef]

- Weiner, E.B.; Dankwa-Mullan, I.; Nelson, W.A.; Hassanpour, S. Ethical challenges and evolving strategies in the integration of artificial intelligence into clinical practice. PLoS Digit. Health 2025, 4, e0000810. [Google Scholar] [CrossRef]

- Zhavoronkov, A.; Ivanenkov, Y.A.; Aliper, A.; Veselov, M.S.; Aladinskiy, V.A.; Aladinskaya, A.V.; Terentiev, V.A.; Polykovskiy, D.A.; Kuznetsov, M.D.; Asadulaev, A.; et al. Deep learning enables rapid identification of potent DDR1 kinase inhibitors. Nat. Biotechnol. 2019, 37, 1038–1040. [Google Scholar] [CrossRef]

- Rajkomar, A.; Dean, J.; Kohane, I. Machine learning in medicine. N. Engl. J. Med. 2019, 380, 1347–1358. [Google Scholar] [CrossRef]

- Gonçalves, T.; Rio-Torto, I.; Teixeira, L.F.; Cardoso, J.S. A survey on attention mechanisms for medical applications: Are we moving toward better algorithms? IEEE Access 2022, 10, 98909–98935. [Google Scholar] [CrossRef]

- Herrera, F.; Calderón, R. Opacity as a Feature, Not a Flaw: The LoBOX Governance Ethic for Role-Sensitive Explainability and Institutional Trust in AI. arXiv 2025, arXiv:2505.20304. [Google Scholar]

- Aithal, P.S. Super-intelligent machines—analysis of developmental challenges and predicted negative consequences. Int. J. Appl. Eng. Manag. Lett. (IJAEML) 2023, 7, 109–141. [Google Scholar] [CrossRef]

- Talati, D.V. Quantum AI and the Future of Super Intelligent Computing. J. Artif. Intell. Gen. Sci. (JAIGS) 2025, 8, 44–51. [Google Scholar] [CrossRef]

- Singhal, K.; Azizi, S.; Tu, T.; Mahdavi, S.S.; Wei, J.; Chung, H.W.; Scales, N.; Tanwani, A.; Cole-Lewis, H.; Pfohl, S.; et al. Large language models encode clinical knowledge. Nature 2023, 620, 172–180. [Google Scholar] [CrossRef]

- Floridi, L.; Cowls, J. A unified framework of five principles for AI in society. In Machine Learning and the City: Applications in Architecture and Urban Design; UCL Press: London, UK, 2022; pp. 535–545. [Google Scholar]

- Finlayson, S.G.; Subbaswamy, A.; Singh, K.; Bowers, J.; Kupke, A.; Zittrain, J.; Saria, S. The clinician and dataset shift in artificial intelligence. N. Engl. J. Med. 2021, 385, 283–286. [Google Scholar] [CrossRef]

- Timmons, A.C.; Duong, J.B.; Fiallo, N.S.; Lee, T.; Vo, H.P.Q.; Ahle, M.W.; Comer, J.S.; Brewer, L.C.; Frazier, S.L.; Chaspari, T. A call to action on assessing and mitigating bias in artificial intelligence applications for mental health. Perspect. Psychol. Sci. 2023, 18, 1062–1096. [Google Scholar] [CrossRef]

- Sikstrom, L.; Maslej, M.M.; Hui, K.; Findlay, Z.; Buchman, D.Z.; Hill, S.L. Conceptualising fairness: Three pillars for medical algorithms and health equity. BMJ Health Care Inform. 2022, 29, e100459. [Google Scholar] [CrossRef]

- Grote, T.; Keeling, G. Enabling fairness in healthcare through machine learning. Ethics Inf. Technol. 2022, 24, 39. [Google Scholar] [CrossRef]

- Li, H.; Moon, J.T.; Shankar, V.; Newsome, J.; Gichoya, J.; Bercu, Z. Health inequities, bias, and artificial intelligence. Tech. Vasc. Interv. Radiol. 2024, 27, 100990. [Google Scholar] [CrossRef]

- Wadden, J.J. Defining the undefinable: The black box problem in healthcare artificial intelligence. J. Med. Ethics 2022, 48, 764–768. [Google Scholar] [CrossRef] [PubMed]

- Felder, R.M. Coming to terms with the black box problem: How to justify AI systems in health care. Hastings Cent. Rep. 2021, 51, 38–45. [Google Scholar] [CrossRef] [PubMed]

- Ennab, M.; Mcheick, H. Enhancing interpretability and accuracy of AI models in healthcare: A comprehensive review on challenges and future directions. Front. Robot. AI 2024, 11, 1444763. [Google Scholar] [CrossRef]

- Band, S.S.; Yarahmadi, A.; Hsu, C.C.; Biyari, M.; Sookhak, M.; Ameri, R.; Liang, H.W. Application of explainable artificial intelligence in medical health: A systematic review of interpretability methods. Inform. Med. Unlocked 2023, 40, 101286. [Google Scholar] [CrossRef]

- Rajkomar, A.; Hardt, M.; Howell, M.D.; Corrado, G.; Chin, M.H. Ensuring Fairness in Machine Learning to Advance Health Equity. Ann. Intern. Med. 2018, 169, 866–872. [Google Scholar] [CrossRef]

- Gerke, S.; Minssen, T.; Cohen, G. Ethical and legal challenges of artificial intelligence-driven healthcare. In Artificial Intelligence in Healthcare; Academic Press: Cambridge, MA, USA, 2020; pp. 295–336. [Google Scholar]

- Kaissis, G.; Ziller, A.; Passerat-Palmbach, J.; Ryffel, T.; Usynin, D.; Trask, A.; Braren, R. End-to-end privacy preserving deep learning on multi-institutional medical imaging. Nat. Mach. Intell. 2021, 3, 473–484. [Google Scholar] [CrossRef]

- Amann, J.; Blasimme, A.; Vayena, E.; Frey, D.; Madai, V.I. Explainability for artificial intelligence in healthcare: A multidisciplinary perspective. BMC Med. Inform. Decis. Mak. 2020, 20, 310. [Google Scholar] [CrossRef] [PubMed]

- Wang, F.; Casalino, L.P.; Khullar, D. Deep Learning in Medicine—Promise, Progress, and Challenges. JAMA Intern. Med. 2023, 183, 345–352. [Google Scholar] [CrossRef]

- Wellnhofer, E. Real-world and regulatory perspectives of artificial intelligence in cardiovascular imaging. Front. Cardiovasc. Med. 2022, 9, 890809. [Google Scholar] [CrossRef]

- Khuat, T.T.; Kedziora, D.J.; Gabrys, B. The roles and modes of human interactions with automated machine learning systems. arXiv 2022, arXiv:2205.04139. [Google Scholar]

- Youvan, D.C. Illuminating Intelligence: Bridging Humanity and Artificial Consciousness. 2024; Preprint. [Google Scholar] [CrossRef]

- Muehlematter, U.J.; Daniore, P.; Vokinger, K.N. Approval of artificial intelligence and machine learning-based medical devices in the USA and Europe (2015–20): A comparative analysis. Lancet Digit. Health 2021, 3, e195–e203. [Google Scholar] [CrossRef]

- Huergo Lora, A.J. Classification of AI Systems as High-Risk (Chapter III, Section 1). In The EU Regulation on Artificial Intelligence: A Commentary; Wolters Kluwer Italia: Milano, Italy, 2025. [Google Scholar]

- Corrêa, N.K.; Galvão, C.; Santos, J.W.; Del Pino, C.; Pinto, E.P.; Barbosa, C.; de Oliveira, N. Worldwide AI ethics: A review of 200 guidelines and recommendations for AI governance. Patterns 2023, 4, 100798. [Google Scholar] [CrossRef]

- Abbasi, N.; Smith, D.A. Cybersecurity in Healthcare: Securing Patient Health Information (PHI), HIPPA Compliance Framework and the Responsibilities of Healthcare Providers. J. Knowl. Learn. Sci. Technol. 2024, 3, 278–287. [Google Scholar] [CrossRef]

- Zuo, Z.; Watson, M.; Budgen, D.; Hall, R.; Kennelly, C.; Al Moubayed, N. Data anonymization for pervasive health care: Systematic literature mapping study. JMIR Med. Inform. 2021, 9, e29871. [Google Scholar] [CrossRef] [PubMed]

- Feretzakis, G.; Papaspyridis, K.; Gkoulalas-Divanis, A.; Verykios, V.S. Privacy-Preserving Techniques in Generative AI and Large Language Models: A Narrative Review. Information 2024, 15, 697. [Google Scholar] [CrossRef]

- Carmi, L.; Zohar, M.; Riva, G.M. The European General Data Protection Regulation (GDPR) in mHealth: Theoretical and practical aspects for practitioners’ use. Med. Sci. Law 2023, 63, 61–68. [Google Scholar] [CrossRef]

- Muthalakshmi, M.; Jeyapal, K.; Vinoth, M.; PS, D.; Murugan, N.S.; Sheela, K.S. Federated learning for secure and privacy-preserving medical image analysis in decentralized healthcare systems. In Proceedings of the 2024 5th International Conference on Electronics and Sustainable Communication Systems (ICESC), Coimbatore, India, 7–9 August 2024; pp. 1442–1447. [Google Scholar]

- Choi, S.; Kang, M. Challenges in Reusing Medical Data for AI Research under the Korean Medical Service Act. Healthc. Inform. Res. 2023, 29, 101–110. [Google Scholar]

- Voigt, P.; von dem Bussche, A. The EU General Data Protection Regulation (GDPR): A Practical Guide; Springer International Publishing: Berlin/Heidelberg, Germany, 2017. [Google Scholar]

- Park, E.; Tanaka, H. Cross-border Data Flow in Asia: Challenges and Policy Trends. Asian J. Law Technol. 2024, 12, 44–59. [Google Scholar]

- Lee, J.; Kim, Y. The MyData Initiative in Korea: Implications for Healthcare Data Portability. Health Policy Technol. 2021, 10, 100569. [Google Scholar]

- Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115. [Google Scholar] [CrossRef]

- Zhang, Z.; Wu, C.; Qu, S.; Chen, X. An explainable artificial intelligence approach for financial distress prediction. Inf. Process. Manag. 2022, 59, 102988. [Google Scholar] [CrossRef]

- Chen, A.; Rossi, R.A.; Park, N.; Trivedi, R.; Wang, Y.; Yu, T.; Ahmed, N.K. Fairness-aware graph neural networks: A survey. ACM Trans. Knowl. Discov. Data 2024, 18, 1–23. [Google Scholar] [CrossRef]

- Raji, I.D.; Dobbe, R. Concrete problems in AI safety, revisited. arXiv 2023, arXiv:2401.10899. [Google Scholar]

- Brunke, L.; Greeff, M.; Hall, A.W.; Yuan, Z.; Zhou, S.; Panerati, J.; Schoellig, A.P. Safe learning in robotics: From learning-based control to safe reinforcement learning. Annu. Rev. Control. Robot. Auton. Syst. 2022, 5, 411–444. [Google Scholar] [CrossRef]

| Study (Year) | Scope | Focus Area | Framework Type | Advantages | Limitations |
|---|---|---|---|---|---|
| [10] | General AI in Healthcare | Deep learning | Application-based | Broad overview of DL in diagnostics | No functional or capability-based categorization |
| [38] | XAI in Healthcare | Interpretability | Technical taxonomy | Introduced interpretability challenges in clinical AI | No classification of AI types or deployment stages |
| [23] | AI for Radiology | Diagnostic imaging | Narrow AI case study | Strong benchmarking of imaging models | Specific to radiology; lacks generalizability |
| [31] | Personalized Medicine | Predictive modeling | Limited memory-based | Personalized care pathway insights | Focused on narrow, reactive AI only |
| [34] | Conversational AI | Mental health bots | Emotional modeling | Emphasized empathy-aware dialogue systems | Does not generalize to other AI functionalities |
| [39] | Trust in Medical AI | Regulatory | Human-centered AI design | Excellent coverage of XAI + uncertainty estimation | Missing systematic tech-to-function mapping |
| This Work (2025) | Smart Healthcare Systems | AI Capabilities and Functionalities | Dual Framework (Capability + Functionality) | Holistic synthesis, new classification, tech-function alignment | Real-world deployment data are limited |

| Selection Stage | Number of Records |
|---|---|
| Initial search hits | 800 |
| Duplicates removed | 278 |
| Title/abstract screened | 522 |
| Full-text articles reviewed | 148 |
| Final studies included that mainly focused on this topic | 42 |
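
The counts in the selection table can be checked with a few lines of arithmetic. The sketch below is illustrative only: the variable names are assumptions, and the per-stage exclusion counts are derived by subtraction rather than reported in the table.

```python
# Counts copied from the selection table; variable names are illustrative.
initial_hits = 800
duplicates_removed = 278
screened = 522
full_text_reviewed = 148
included = 42


def excluded_between(before: int, after: int) -> int:
    """Return how many records dropped out between two consecutive stages."""
    if after > before:
        raise ValueError("a later stage cannot hold more records than an earlier one")
    return before - after


# Records left after deduplication should equal the number screened.
after_dedup = initial_hits - duplicates_removed
assert after_dedup == screened

# Derived values (not stated in the table): exclusions at each later stage.
excluded_at_screening = excluded_between(screened, full_text_reviewed)
excluded_at_full_text = excluded_between(full_text_reviewed, included)

print(f"Excluded at title/abstract screening: {excluded_at_screening}")
print(f"Excluded at full-text review: {excluded_at_full_text}")
```

Running the check confirms that the deduplicated total matches the number of records screened.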

| Variable | Description |
|---|---|
| Study Information | Authors, year, country, journal |
| AI Capability Type | Narrow AI, General AI (AGI), Superintelligent AI |
| AI Functional Type | Reactive, Limited Memory, Theory of Mind, Self-Aware |
| Clinical Use Case | Diagnosis, triage, prognosis, robotic surgery, mental health, etc. |
| AI Technique | CNN, RNN, LLM, transformer, federated learning, etc. |
| Data Type Used | Imaging, EHR, genomic data, audio/textual data |
| Deployment Setting | Simulated lab, hospital-based, telemedicine, wearable device |
| Outcome Focus | Accuracy, interpretability, empathy, adaptability, autonomy |
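
One way to make the extraction variables concrete is a typed record, sketched below. The class and field names are assumptions introduced for illustration, and the example values are placeholders rather than data from any reviewed study.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Capability(Enum):
    NARROW = "Narrow AI"
    GENERAL = "General AI (AGI)"
    SUPERINTELLIGENT = "Superintelligent AI"


class FunctionalType(Enum):
    REACTIVE = "Reactive"
    LIMITED_MEMORY = "Limited Memory"
    THEORY_OF_MIND = "Theory of Mind"
    SELF_AWARE = "Self-Aware"


@dataclass
class ExtractionRecord:
    """One row of the data extraction sheet (hypothetical schema)."""
    authors: str
    year: int
    country: str
    journal: str
    capability: Capability
    functional_type: FunctionalType
    clinical_use_case: str
    ai_technique: str
    data_type: str
    deployment_setting: str
    outcome_focus: List[str] = field(default_factory=list)


# Placeholder values only; this is not a real study from the review.
example = ExtractionRecord(
    authors="Placeholder, A.",
    year=2023,
    country="Unknown",
    journal="Placeholder Journal",
    capability=Capability.NARROW,
    functional_type=FunctionalType.LIMITED_MEMORY,
    clinical_use_case="Diagnosis",
    ai_technique="CNN",
    data_type="Imaging",
    deployment_setting="Hospital-based",
    outcome_focus=["Accuracy", "Interpretability"],
)
print(example.capability.value, "/", example.functional_type.value)
```

Restricting the two classification axes to enumerations keeps every record inside the categories listed in the table.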

| Capability Level | Core Trait | Current Use | Cognitive Scope | Clinical Role | Representative Systems |
|---|---|---|---|---|---|
| Narrow AI | Task-specific learning | Diagnostic imaging, chatbots, EHR prediction models | Limited to trained tasks | Assistive tools | DeepMind, Zebra, Aidoc, Wysa |
| General AI (AGI) | Cross-domain reasoning | Multimodal modeling, adaptive LLMs | Context-aware, human-like | Augmented clinician | Med-PaLM, GatorTron |
| Superintelligent AI | Surpasses human cognition | Theoretical | Beyond human capacity | Autonomous healthcare leader | Not yet realized |

| Functional Type | Core Behavior | Healthcare Applications | Memory or Learning |
|---|---|---|---|
| Reactive Machines | Respond to present inputs only | ICU alerts, rule-based diagnostics, infusion control | No memory |
| Limited Memory Systems | Learn from historical data, no continuous learning | Imaging analysis, EHR-based risk prediction, wearable monitoring | Short-term memory |
| Theory of Mind | Understand user emotions and intentions | Empathy-aware chatbots, geriatric AI, adaptive clinical communication | Emotion/context modeling |
| Self-Aware AI | Model internal state and confidence | XAI, uncertainty-aware systems, adaptive therapeutic agents | Meta-cognition (early features) |

| Perspective | Current Use in Smart Healthcare | Functional Description |
|---|---|---|
| Narrow AI + Limited Memory | Clinical decision support, imaging, diagnostics, mental health bots | Uses historical data to make task-specific decisions; no real-time learning or cross-domain flexibility |
| AGI + Theory of Mind | Early-stage LLMs, emotion-aware chatbots, adaptive clinical assistants | Attempts human-like reasoning and emotion modeling using multimodal, contextual data; not yet fully realized |
| Superintelligent AI + Self-Awareness | Theoretical; explored in XAI and ethical AI research | Hypothetical systems with full autonomy, self-reflection, and ethical cognition; no clinical deployment |
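
The pairing of capability and functionality in the table above can be read as a simple lookup. The following sketch assumes three shorthand maturity labels ("deployed", "emerging", "theoretical") that summarize the table's wording; they are not terms used by the review itself, and the function name is hypothetical.

```python
from typing import Dict, Tuple

# Keys mirror the "Perspective" column; values are shorthand maturity labels
# chosen for this sketch (an assumption, not the review's terminology).
DUAL_FRAMEWORK: Dict[Tuple[str, str], str] = {
    ("Narrow AI", "Limited Memory"): "deployed",
    ("AGI", "Theory of Mind"): "emerging",
    ("Superintelligent AI", "Self-Awareness"): "theoretical",
}


def maturity(capability: str, functional_type: str) -> str:
    """Look up the maturity label for a capability/functionality pair."""
    return DUAL_FRAMEWORK.get(
        (capability, functional_type), "not covered by the table"
    )


print(maturity("Narrow AI", "Limited Memory"))
print(maturity("AGI", "Theory of Mind"))
print(maturity("Narrow AI", "Self-Awareness"))
```

Pairs that the table does not list, such as Narrow AI with Self-Awareness, fall through to an explicit "not covered" result instead of being guessed.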

| Challenge | Description | Impact | Mitigation Strategy | Reference |
|---|---|---|---|---|
| Bias and Fairness | AI systems can reflect or amplify biases present in training data, affecting fairness across demographics. | Undermines trust and may lead to healthcare disparities. | Use diverse training data; implement fairness audits. | [124] |
| Interpretability | AI models, especially DL, often lack transparency, making it difficult for clinicians to trust outputs. | Limits clinical adoption and medicolegal accountability. | Incorporate explainable AI (XAI) models and visualizations. | [38] |
| Regulation | AI deployment requires compliance with evolving legal and ethical frameworks. | Regulatory uncertainty slows innovation and deployment. | Develop adaptive, region-specific AI policies. | [125] |
| Data Security | Storing and sharing sensitive patient data raises concerns around privacy, encryption, and misuse. | Breaches may lead to legal liability and patient harm. | Employ federated learning and differential privacy. | [126] |
| Clinical Integration | Embedding AI into existing clinical workflows without disrupting care delivery is technically and culturally complex. | Causes resistance among staff and workflow inefficiency. | Co-design solutions with clinicians for smooth adoption. | [127] |
| Infrastructure and Cost | High development, deployment, and maintenance costs limit access in resource-constrained healthcare settings. | Restricts scalability and global AI implementation. | Invest in cloud infrastructure and public-private partnerships. | [128] |
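
As a rough illustration of the "fairness audits" mitigation listed above, the sketch below computes a demographic parity gap, one of several possible audit metrics. The choice of metric, the function names, and the synthetic predictions and group labels are all assumptions made for this example; none of them come from the reviewed studies.

```python
from collections import defaultdict
from typing import Dict, List


def positive_rate_by_group(predictions: List[int], groups: List[str]) -> Dict[str, float]:
    """Share of positive (1) predictions within each demographic group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(rates: Dict[str, float]) -> float:
    """Largest difference in positive rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Synthetic example data (not from any real model or patient cohort).
preds = [1, 0, 1, 1, 0, 0, 1, 0]
grps = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = positive_rate_by_group(preds, grps)
gap = parity_gap(rates)
print(rates, gap)
```

A gap near zero suggests the model flags the groups at similar rates. A large gap would prompt closer review, although what counts as acceptable depends on the clinical context.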

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Abbas, S.R.; Seol, H.; Abbas, Z.; Lee, S.W. Exploring the Role of Artificial Intelligence in Smart Healthcare: A Capability and Function-Oriented Review. Healthcare 2025, 13, 1642. https://doi.org/10.3390/healthcare13141642

Abbas SR, Seol H, Abbas Z, Lee SW. Exploring the Role of Artificial Intelligence in Smart Healthcare: A Capability and Function-Oriented Review. Healthcare. 2025; 13(14):1642. https://doi.org/10.3390/healthcare13141642

Chicago/Turabian Style: Abbas, Syed Raza, Huiseung Seol, Zeeshan Abbas, and Seung Won Lee. 2025. "Exploring the Role of Artificial Intelligence in Smart Healthcare: A Capability and Function-Oriented Review" Healthcare 13, no. 14: 1642. https://doi.org/10.3390/healthcare13141642

APA Style: Abbas, S. R., Seol, H., Abbas, Z., & Lee, S. W. (2025). Exploring the Role of Artificial Intelligence in Smart Healthcare: A Capability and Function-Oriented Review. Healthcare, 13(14), 1642. https://doi.org/10.3390/healthcare13141642