Deep Learning Applications in Dental Image-Based Diagnostics: A Systematic Review

Abstract

1. Introduction

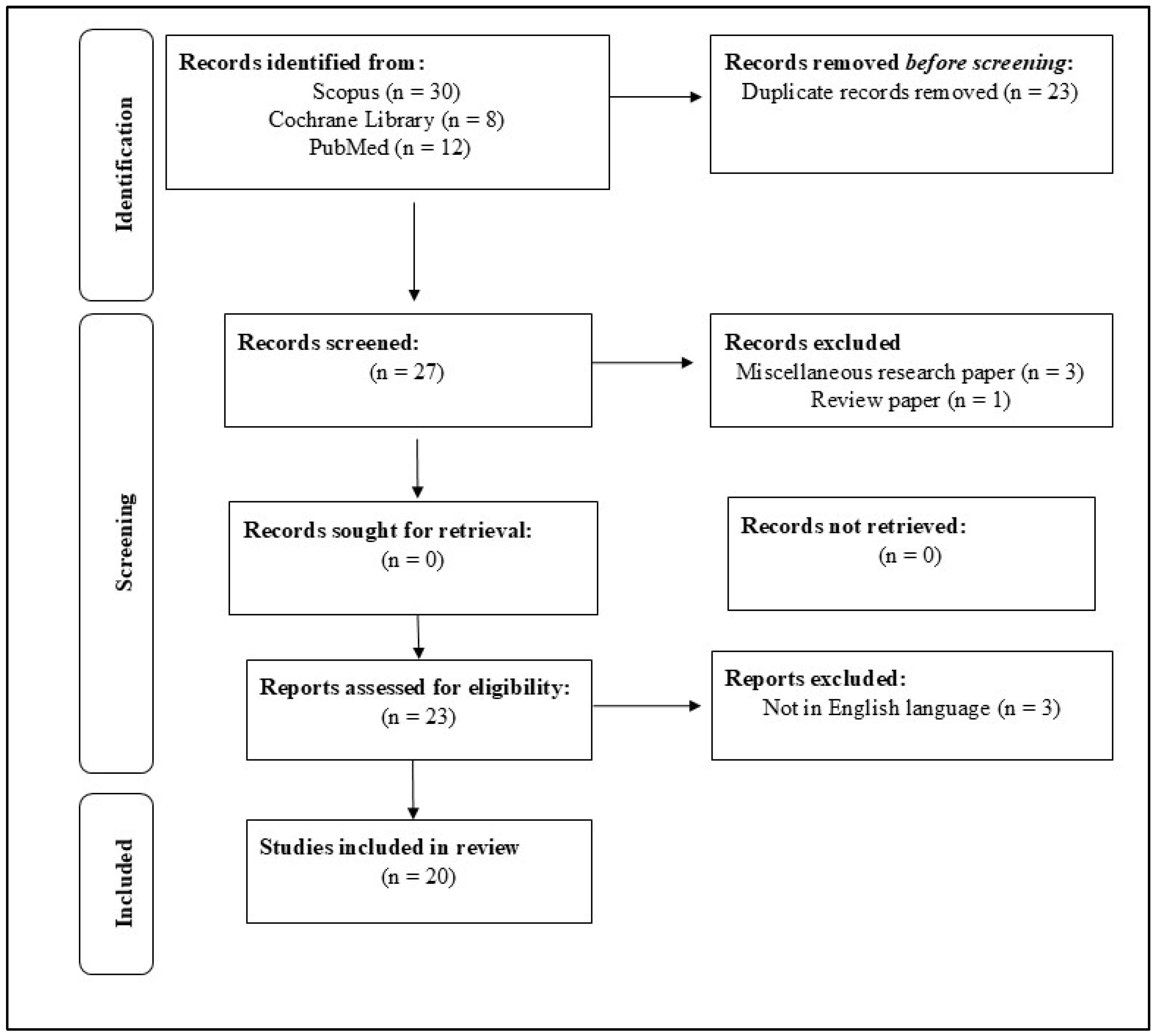

2. Materials and Methods

2.1. Methodological Framework

2.2. Search Strategy

2.3. Inclusion and Exclusion Criteria

2.3.1. Criteria for Inclusion

- Studies matching the specified search keywords;

- Articles published between 2014 and 2023;

- English-language studies; and

- Original research articles.

2.3.2. Criteria for Exclusion

- Studies not focusing on AI-based diagnostics in pediatric dentistry;

- Articles without full-text access; and

- Ongoing research projects.
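The inclusion and exclusion criteria above amount to a filter over candidate records. As a minimal sketch only, and not the screening tool used in this review, the code below encodes each criterion as a field of a hypothetical `Record` type. The field names, and the reduction of keyword matching and topical relevance to boolean flags set by a human screener, are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Record:
    """Hypothetical bibliographic record; every field name is an illustrative assumption."""
    title: str
    year: int
    language: str = "English"
    matches_keywords: bool = True      # hit on the specified search keywords
    is_original_research: bool = True  # not a review, letter, or editorial
    has_full_text: bool = True
    is_ongoing: bool = False           # ongoing project without final results
    on_topic: bool = True              # screener judged the topic in scope


def meets_inclusion(r: Record) -> bool:
    """Inclusion: keyword match, published 2014-2023, English, original research."""
    return (
        r.matches_keywords
        and 2014 <= r.year <= 2023
        and r.language.lower() == "english"
        and r.is_original_research
    )


def meets_exclusion(r: Record) -> bool:
    """Exclusion: off-topic, no full-text access, or an ongoing project."""
    return not r.on_topic or not r.has_full_text or r.is_ongoing


def screen(records: List[Record]) -> List[Record]:
    """Keep records that meet every inclusion criterion and no exclusion criterion."""
    return [r for r in records if meets_inclusion(r) and not meets_exclusion(r)]


candidates = [
    Record("A", 2019),
    Record("B", 2012),                       # outside the 2014-2023 window
    Record("C", 2020, has_full_text=False),  # no full-text access
    Record("D", 2021, language="German"),    # not in English
]
print([r.title for r in screen(candidates)])  # ['A']
```

Keeping inclusion and exclusion as separate predicates mirrors the two lists above and makes it easy to log which rule removed a given record.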

2.4. Quality Assessment

3. Results

| Study (Author, Year) | Study Design | AI Model | Dataset Type and Size (N) | Preprocessing Techniques | Validation Method | Application | Experimental Method | Outcomes |
|---|---|---|---|---|---|---|---|---|
| Bouchahma et al. [4] (2019) | Clinical study | CNN | Panoramic X-rays, N = 700 | Grayscale conversion, normalization | Not reported | Operative dentistry and Endodontics | Deep Learning → Supervised Learning → CNN | Accuracy: 87%; RCT detection: 88%; Fluoride: 98% |
| Arisu et al. [6] (2018) | Clinical study | ANN | Intraoral images, N = 2400 | Not reported | Internal testing only | Restorative dentistry | Deep Learning → Supervised Learning → ANN | Composite curing prediction; No metric reported |
| Takahashi et al. [7] (2020) | Experimental study | CNN | Panoramic X-rays, N = 1498 | Image enhancement, segmentation | Train/test split | Prosthodontics | Deep Learning → Supervised Learning → CNN | Qualitative classification; No metric reported |
| Li et al. [8] (2020) | Clinical study | Automated photo analysis | Facial and intraoral photos, N = 1050 | Landmark alignment | Manual comparison | Esthetic dentistry | Image Analysis → Automated Integration → Not specified | Subjective cosmetic application; No metric reported |
| Kuwada et al. [10] (2020) | Clinical study | DetectNet, AlexNet, and VGG-16 | Panoramic X-rays, N = 550 | Grayscale conversion, normalization | Train/validation/test split | Orthodontics | Deep Learning → Supervised Learning → CNN (DetectNet, etc.) | Precision, recall, F1-score reported |
| Yamaguchi et al. [11] (2019) | Clinical study | CNN | Cephalometric radiographs, N = 1146 | Image resizing, grayscale conversion | Holdout validation | Restorative dentistry | Deep Learning → Supervised Learning → CNN | Prediction of crown debonding; No metric reported |
| Patcas et al. [12] (2019) | Cohort study | CNN | Orthodontic photos, N = 1200 | Image cropping, standardization | Internal dataset | Orthodontics | Deep Learning → Supervised Learning → CNN | Subjective age/attractiveness estimation; No metric reported |
| Li et al. [13] (2015) | Experimental study | GA and BPNN | Orthodontic measurements, N = 1000 | Feature normalization | Internal validation | Esthetic dentistry | Deep Learning → Supervised Learning → GA + BPNN | Objective tooth color matching; No metric reported |
| Kositbowornchai et al. [17] (2016) | Clinical study | LVQ neural network | Panoramic X-rays, N = 600 | Image normalization | Holdout validation | Restorative dentistry | Deep Learning → Supervised Learning → LVQ-NN | Caries detection; No metric reported |
| Patcas et al. [18] (2019) | Clinical study | CNN | Orthodontic photographs, N = 1200 | Image cropping, standardization | Internal dataset; no k-fold | Orthodontics | Deep Learning → Supervised Learning → CNN | Assessment of cleft patient profiles and frontal aesthetics; No metric reported |
| Vranckx et al. [19] (2020) | Clinical study | CNN and ResNet-101 | Dental radiographs, N = 3000+ | Rescaling, augmentation | Cross-validation not specified | Operative dentistry | Deep Learning → Supervised Learning → CNN (ResNet-101) | Molar segmentation; No metric reported |
| Lee et al. [20] (2020) | Clinical study | ML (e.g., decision trees, SVM) | TMJ radiographs, N = 2100 | Grayscale conversion, filtering | Train/test split | Oral and Maxillofacial Surgery | ML → Supervised Learning → SVM, Decision Trees | TMJOA classification; Accuracy: 95% |
| Cui et al. [21] (2020) | Cohort study | CDS ML model | Dataset from 5 hospitals, N ≈ 4000 cases | Data normalization, cleaning | Separate test set | Oral and Maxillofacial Surgery | ML → Supervised Learning → CDS model | Accuracy: 99.16% |
| Sornam and Prabhakaran [16] (2019) | Clinical study | BPNN with LB-ABC | Dental records, N = 750 | Data normalization | 10-fold cross-validation | Restorative dentistry | Deep Learning → Supervised Learning → Hybrid BPNN | Accuracy: 99.16% |
| Setzer et al. [15] (2020) | Clinical study | DL for CBCT | CBCT scans, N = 1000 | Noise removal, segmentation | External validation | Endodontics | Deep Learning → Supervised Learning → CNN | Lesion detection; No metric reported |
| Cantu et al. [14] (2020) | Clinical study | CNN | CBCT images, N = 800 | Voxel normalization, filtering | Stratified train/test split | Operative dentistry and Oral Radiology | Deep Learning → Supervised Learning → CNN | Performance exceeds clinicians; No metric reported |
| Aliaga et al. [22] (2020) | Experimental study | Automatic segmentation | Facial scans, N = 350 | Mesh alignment, surface smoothing | Manual comparison | Operative dentistry and Oral and Maxillofacial Surgery | Image Analysis → Automated → Not specified | Osteoporosis detection; No metric reported |
| Kim et al. [23] (2018) | Case–control study | ML classifiers (SVM, RF, etc.) | Dental images, N = 2500 | Feature scaling | Train/test split | Oral and Maxillofacial Surgery and Oral Medicine | ML → Supervised Learning → SVM, RF | BRONJ prediction; Accuracy: 91.8% |
| de Dumast et al. [24] (2018) | Case–control study | CNN and shape variation | Radiographs, N = 1820 | Histogram equalization | Test set validation | Oral and Maxillofacial Surgery | Deep Learning → Supervised Learning → CNN | Morphological bone classification; Accuracy: 86.5% |
| Sorkhabi and Khajeh [25] (2019) | Clinical study | 3D CNN | Intraoral scans, N = 1200 | 3D normalization | 6-month clinical validation | Implant dentistry | Deep Learning → Supervised Learning → 3D CNN | Alveolar bone density classification; Accuracy: 91.2% |
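The Validation Method column mixes single train/test or holdout splits with the 10-fold cross-validation reported by Sornam and Prabhakaran [16] and the stratified split reported by Cantu et al. [14]. To illustrate what stratification means, the sketch below assigns sample indices to k folds so that each class is spread evenly across folds. It is a generic, standard-library-only illustration, not a reconstruction of any included study's pipeline; `stratified_k_fold` is a hypothetical helper name, and scikit-learn's `StratifiedKFold` offers a maintained equivalent.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def stratified_k_fold(
    labels: Sequence[Hashable], k: int = 10, seed: int = 0
) -> List[Tuple[List[int], List[int]]]:
    """Split sample indices into k (train, test) pairs, preserving class proportions per fold."""
    if k < 2:
        raise ValueError("k must be at least 2")
    rng = random.Random(seed)

    # Group sample indices by class label.
    by_class: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    # Shuffle each class, then deal its indices round-robin across the k folds.
    folds: List[List[int]] = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)

    # Each fold serves once as the test set; all remaining indices form the training set.
    all_indices = set(range(len(labels)))
    return [(sorted(all_indices - set(fold)), sorted(fold)) for fold in folds]


# Usage: a hypothetical imbalanced set of 80 negative (0) and 20 positive (1) images.
labels = [0] * 80 + [1] * 20
splits = stratified_k_fold(labels, k=10)
print(len(splits), [sum(labels[i] for i in test) for _, test in splits])
```

Here every test fold receives 8 negatives and 2 positives, so the 4:1 class ratio is preserved in each evaluation. With a single random holdout split on a small, imbalanced dataset, the positive class can be under-represented in the test set by chance.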

- Blinding: In the context of AI model development, blinding is relevant when human annotators are involved in labeling training datasets, as knowledge of patient identity or outcome can introduce bias into the ground truth, potentially compromising model validity. Among the included studies, only Bouchahma et al. [4] explicitly reported the use of blinding during the annotation of training data, underscoring a general lack of attention to this potential bias across the reviewed literature.
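One way to operationalize annotator blinding is to give annotators only the image and a pseudonymous study code, withholding identity and outcome fields. The sketch below assumes a hypothetical record layout (`patient_id`, `patient_name`, `diagnosis`, `treatment_outcome`, `image_path`) and uses a keyed HMAC-SHA256 digest as the pseudonym. It is an illustration under those assumptions; none of the included studies, including Bouchahma et al. [4], is claimed to have used this procedure.

```python
import hashlib
import hmac
from typing import Dict, List

# Hypothetical field names assumed to reveal patient identity or clinical outcome.
HIDDEN_FIELDS = {"patient_name", "patient_id", "diagnosis", "treatment_outcome"}


def pseudonym(patient_id: str, secret_key: bytes) -> str:
    """Derive a stable study code from a patient ID with a keyed HMAC-SHA256 digest."""
    digest = hmac.new(secret_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:12]


def blind_for_annotation(records: List[Dict[str, str]], secret_key: bytes) -> List[Dict[str, str]]:
    """Return copies of the records without identity/outcome fields, plus a study code."""
    blinded = []
    for rec in records:
        view = {key: value for key, value in rec.items() if key not in HIDDEN_FIELDS}
        view["study_code"] = pseudonym(rec["patient_id"], secret_key)
        blinded.append(view)
    return blinded


# Usage with a single hypothetical record.
key = b"store-this-key-apart-from-the-annotation-set"
records = [
    {"patient_id": "P001", "patient_name": "Jane Doe", "diagnosis": "caries", "image_path": "img_001.png"}
]
out = blind_for_annotation(records, key)
print(sorted(out[0]))  # ['image_path', 'study_code']
```

The key should be stored separately from the annotation set; anyone holding both could otherwise recompute the codes from known patient IDs and re-identify cases.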

4. Discussion

5. Limitations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Shapiro, S.C. Artificial intelligence (AI). In Encyclopedia of Computer Science, 2nd ed.; Wiley: Hoboken, NJ, USA, 2003; pp. 89–93.

2. Ramesh, A.N.; Kambhampati, C.; Monson, J.R.; Drew, P.J. Artificial intelligence in medicine. Ann. R. Coll. Surg. Engl. 2004, 86, 334–338.

3. A Primer: Artificial Intelligence Versus Neural Networks. The Scientist Magazine®. Available online: https://www.the-scientist.com/magazine-issue/artificial-intelligence-versus-neural-networks-65802 (accessed on 15 December 2024).

4. Bouchahma, M.; Ben Hammouda, S.; Kouki, S.; Alshemaili, M.; Samara, K. An automatic dental decay treatment prediction using a deep convolutional neural network on X-ray images. In Proceedings of the 2019 IEEE/ACS 16th International Conference on Computer Systems and Applications (AICCSA), Abu Dhabi, United Arab Emirates, 1–4 October 2019.

5. Ekert, T.; Krois, J.; Meinhold, L.; Elhennawy, K.; Emara, R.; Golla, T.; Schwendicke, F. Deep Learning for the Radiographic Detection of Apical Lesions. J. Endod. 2019, 45, 917–922.e5.

6. Deniz Arısu, H.; Eligüzeloglu Dalkilic, E.; Alkan, F.; Erol, S.; Uctasli, M.B.; Cebi, A. Use of artificial neural network in determination of shade, light curing unit, and composite parameters’ effect on bottom/top Vickers hardness ratio of composites. Biomed. Res. Int. 2018, 2018, 4856707.

7. Takahashi, T.; Nozaki, K.; Gonda, T.; Ikebe, K. A system for designing removable partial dentures using artificial intelligence. Part 1. Classification of partially edentulous arches using a convolutional neural network. J. Prosthodont. Res. 2021, 65, 115–118.

8. Li, M.; Xu, X.; Punithakumar, K.; Le, L.H.; Kaipatur, N.; Shi, B. Automated integration of facial and intra-oral images of anterior teeth. Comput. Biol. Med. 2020, 122, 103794.

9. Poplin, R.; Varadarajan, A.V.; Blumer, K.; Liu, Y.; McConnell, M.V.; Corrado, G.S.; Peng, L.; Webster, D.R. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat. Biomed. Eng. 2018, 2, 158–164.

10. Kuwada, C.; Ariji, Y.; Fukuda, M.; Kise, Y.; Fujita, H.; Katsumata, A.; Ariji, E. Deep learning systems for detecting and classifying the presence of impacted supernumerary teeth in the maxillary incisor region on panoramic radiographs. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2020, 130, 464–469.

11. Yamaguchi, S.; Lee, C.; Karaer, O.; Ban, S.; Mine, A.; Imazato, S. Predicting the Debonding of CAD/CAM Composite Resin Crowns with AI. J. Dent. Res. 2019, 98, 1234–1238.

12. Patcas, R.; Bernini, D.A.J.; Volokitin, A.; Agustsson, E.; Rothe, R.; Timofte, R. Applying artificial intelligence to assess the impact of orthognathic treatment on facial attractiveness and estimated age. Int. J. Oral Maxillofac. Surg. 2019, 48, 77–83.

13. Li, H.; Lai, L.; Chen, L.; Lu, C.; Cai, Q. The prediction in computer color matching of dentistry based on GA+BP neural network. Comput. Math. Methods Med. 2015, 2015, 816719.

14. Cantu, A.G.; Gehrung, S.; Krois, J.; Chaurasia, A.; Rossi, J.G.; Gaudin, R.; Elhennawy, K.; Schwendicke, F. Detecting caries lesions of different radiographic extension on bitewings using deep learning. J. Dent. 2020, 100, 103425.

15. Setzer, F.C.; Shi, K.J.; Zhang, Z.; Yan, H.; Yoon, H.; Mupparapu, M.; Li, J. Artificial intelligence for the computer-aided detection of periapical lesions in cone-beam computed tomographic images. J. Endod. 2020, 46, 987–993.

16. Sornam, M.; Prabhakaran, M. Logit-based artificial bee colony optimization (LB-ABC) approach for dental caries classification using a back propagation neural network. Integr. Intell. Comput. Commun. Secur. 2019, 771, 79–91.

17. Kositbowornchai, S.; Siriteptawee, S.; Plermkamon, S.; Bureerat, S.; Chetchotsak, D. An artificial neural network for detection of simulated dental caries. Int. J. Comput. Assist. Radiol. Surg. 2016, 1, 91–96.

18. Patcas, R.; Timofte, R.; Volokitin, A.; Agustsson, E.; Eliades, T.; Eichenberger, M.; Bornstein, M.M. Facial attractiveness of cleft patients: A direct comparison between artificial-intelligence-based scoring and conventional rater groups. Eur. J. Orthod. 2019, 41, 428–433.

19. Vranckx, M.; Van Gerven, A.; Willems, H.; Vandemeulebroucke, A.; Ferreira Leite, A.; Politis, C.; Jacobs, R. Artificial Intelligence (AI)-Driven Molar Angulation Measurements to Predict Third Molar Eruption on Panoramic Radiographs. Int. J. Environ. Res. Public Health 2020, 17, 3716.

20. Lee, K.S.; Kwak, H.J.; Oh, J.M.; Jha, N.; Kim, Y.J.; Kim, W.; Baik, U.B.; Ryu, J.J. Automated detection of TMJ osteoarthritis based on artificial intelligence. J. Dent. Res. 2020, 99, 1363–1367.

21. Cui, Q.; Chen, Q.; Liu, P.; Liu, D.; Wen, Z. Clinical decision support model for tooth extraction therapy derived from electronic dental records. J. Prosthet. Dent. 2021, 126, 83–90.

22. Aliaga, I.; Vera, V.; Vera, M.; García, E.; Pedrera, M.; Pajares, G. Automatic computation of mandibular indices in dental panoramic radiographs for early osteoporosis detection. Artif. Intell. Med. 2020, 103, 101816.

23. Kim, D.W.; Kim, H.; Nam, W.; Kim, H.J.; Cha, I.H. Machine learning to predict the occurrence of bisphosphonate-related osteonecrosis of the jaw associated with dental extraction: A preliminary report. Bone 2018, 116, 207–214.

24. de Dumast, P.; Mirabel, C.; Cevidanes, L.; Ruellas, A.; Yatabe, M.; Ioshida, M.; Ribera, N.T.; Michoud, L.; Gomes, L.; Huang, C.; et al. A web-based system for neural network based classification in temporomandibular joint osteoarthritis. Comput. Med. Imaging Graph. 2018, 67, 45–54.

25. Sorkhabi, M.M.; Saadat Khajeh, M. Classification of alveolar bone density using 3-D deep convolutional neural network in the cone-beam CT images: A 6-month clinical study. Measurement 2019, 148, 106945.

26. Caliskan, S.; Tuloglu, N.; Celik, O.; Ozdemir, C.; Kizilaslan, S.; Bayrak, S. A pilot study of a deep learning approach to submerged primary tooth classification and detection. Int. J. Comput. Dent. 2021, 24, 1–9.

27. Kılıc, M.C.; Bayrakdar, I.S.; Celik, O.; Bilgir, E.; Orhan, K.; Aydın, O.B.; Kaplan, F.A.; Sağlam, H.; Aslan, A.F.; Yılmaz, A.B. Artificial intelligence system for automatic deciduous tooth detection and numbering in panoramic radiographs. Dentomaxillofac. Radiol. 2021, 50, 20200172.

28. Zheng, L.; Wang, H.; Mei, L.; Chen, Q.; Zhang, Y.; Zhang, H. Artificial intelligence in digital cariology: A new tool for the diagnosis of deep caries and pulpitis using convolutional neural networks. Ann. Transl. Med. 2021, 9, 763.

29. Bulatova, G.; Kusnoto, B.; Grace, V.; Tsay, T.P.; Avenetti, D.M.; Sanchez, F.J.C. Assessment of automatic cephalometric landmark identification using artificial intelligence. Orthod. Craniofac. Res. 2021, 24 (Suppl. S2), 37–42.

30. Zhao, T.; Zhou, J.; Yan, J.; Cao, L.; Cao, Y.; Hua, F.; He, H. Automated adenoid hypertrophy assessment with lateral cephalometry in children based on artificial intelligence. Diagnostics 2021, 11, 1386.

31. Seo, H.; Hwang, J.; Jeong, T.; Shin, J. Comparison of deep learning models for cervical vertebral maturation stage classification on lateral cephalometric radiographs. J. Clin. Med. 2021, 10, 3591.

32. Kim, E.G.; Oh, I.S.; So, J.E.; Kang, J.; Le, V.N.T.; Tak, M.K.; Lee, D.-W. Estimating cervical vertebral maturation with a lateral cephalogram using the convolutional neural network. J. Clin. Med. 2021, 10, 5400.

33. Karhade, D.S.; Roach, J.; Shrestha, P.; Simancas-Pallares, M.A.; Ginnis, J.; Burk, Z.J.S.; Ribeiro, A.A.; Cho, H.; Wu, D.; Divaris, K. An automated machine learning classifier for early childhood caries. Pediatr. Dent. 2021, 43, 191–197.

34. Rudin, C. Stop Explaining Black Box Machine Learning Models for High Stakes Decisions and Use Interpretable Models Instead. Nat. Mach. Intell. 2019, 1, 206–215.

35. Rajpurkar, P.; Irvin, J.; Ball, R.L.; Zhu, K.; Yang, B.; Mehta, H.; Duan, T.; Ding, D.; Bagul, A.; Langlotz, C.P.; et al. Deep learning for chest radiograph diagnosis: A retrospective comparison of the CheXNeXt algorithm to practicing radiologists. PLoS Med. 2018, 15, e1002686.

36. U.S. Food and Drug Administration. Available online: https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-software-medical-device (accessed on 15 November 2024).

37. Aboy, M.; Minssen, T.; Vayena, E. Navigating the EU AI Act: Implications for regulated digital medical products. NPJ Digit. Med. 2024, 7, 237.

| Study (Author, Year) | Randomization | Blinding | Withdrawal/Dropout Mentioned | Multiple Variables Measurement | Estimation of Sample Size | Clear Inclusion/Exclusion Criteria | Reliability of Examiner | Prespecified Outcomes | Study Quality/Bias Risk |
|---|---|---|---|---|---|---|---|---|---|
| Bouchahma et al. [4] (2019) | Not Conducted | Implemented | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Low |
| Arisu et al. [6] (2018) | Not Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Not Specified | Moderate |
| Takahashi et al. [7] (2020) | Not Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Low |
| Li et al. [8] (2020) | Not Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Low |
| Kuwada et al. [10] (2020) | Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Low |
| Yamaguchi et al. [11] (2019) | Not Conducted | Not Applied | Unclear | Measured Repeatedly | Estimated | Clearly Defined | Tested | Not Specified | Moderate |
| Patcas et al. [12] (2019) | Not Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Low |
| Li et al. [13] (2015) | Unclear | Unclear | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Not Tested | Predetermined | Moderate |
| Kositbowornchai et al. [17] (2016) | Not Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Low |
| Patcas et al. [18] (2019) | Not Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Low |
| Vranckx et al. [19] (2020) | Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Moderate |
| Lee et al. [20] (2020) | Not Conducted | Not Applied | Mentioned | Not Measured Repeatedly | Estimated | Not Clearly Defined | Tested | Not Specified | Moderate |
| Cui et al. [21] (2020) | Not Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Not Clearly Defined | Tested | Not Specified | Low |
| Sornam and Prabhakaran [16] (2019) | Not Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Low |
| Setzer et al. [15] (2020) | Not Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Low |
| Cantu et al. [14] (2020) | Not Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Low |
| Aliaga et al. [22] (2020) | Not Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Low |
| Kim et al. [23] (2018) | Not Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Low |
| de Dumast et al. [24] (2018) | Not Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Low |
| Sorkhabi and Khajeh [25] (2019) | Not Conducted | Not Applied | Mentioned | Measured Repeatedly | Estimated | Clearly Defined | Tested | Predetermined | Low |
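The ratings in the quality assessment table above can be tallied directly. The sketch below transcribes the Study Quality/Bias Risk and Blinding columns into a dictionary and counts them with `collections.Counter`; it introduces no new data and serves only as a reproducible check on the descriptive summary, including the statement that only Bouchahma et al. [4] reported blinding.

```python
from collections import Counter

# (Study Quality/Bias Risk, Blinding), transcribed from the quality assessment table.
ASSESSMENTS = {
    "Bouchahma et al. [4]": ("Low", "Implemented"),
    "Arisu et al. [6]": ("Moderate", "Not Applied"),
    "Takahashi et al. [7]": ("Low", "Not Applied"),
    "Li et al. [8]": ("Low", "Not Applied"),
    "Kuwada et al. [10]": ("Low", "Not Applied"),
    "Yamaguchi et al. [11]": ("Moderate", "Not Applied"),
    "Patcas et al. [12]": ("Low", "Not Applied"),
    "Li et al. [13]": ("Moderate", "Unclear"),
    "Kositbowornchai et al. [17]": ("Low", "Not Applied"),
    "Patcas et al. [18]": ("Low", "Not Applied"),
    "Vranckx et al. [19]": ("Moderate", "Not Applied"),
    "Lee et al. [20]": ("Moderate", "Not Applied"),
    "Cui et al. [21]": ("Low", "Not Applied"),
    "Sornam and Prabhakaran [16]": ("Low", "Not Applied"),
    "Setzer et al. [15]": ("Low", "Not Applied"),
    "Cantu et al. [14]": ("Low", "Not Applied"),
    "Aliaga et al. [22]": ("Low", "Not Applied"),
    "Kim et al. [23]": ("Low", "Not Applied"),
    "de Dumast et al. [24]": ("Low", "Not Applied"),
    "Sorkhabi and Khajeh [25]": ("Low", "Not Applied"),
}
RISK, BLINDING = 0, 1  # column positions within each tuple


def tally(column: int) -> Counter:
    """Count how often each rating appears in the chosen column."""
    return Counter(ratings[column] for ratings in ASSESSMENTS.values())


n = len(ASSESSMENTS)
for level, count in tally(RISK).most_common():
    print(f"{level}: {count}/{n} ({100 * count / n:.0f}%)")
# Low: 15/20 (75%)
# Moderate: 5/20 (25%)
```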

| Design of Study | No. of Studies | Percentage (%) | Risk of Bias |
|---|---|---|---|
| Randomized | 2 | 76 | Moderate |
| Cross-sectional | 9 | 80 | Low |
| Diagnostic test accuracy | 9 | 88 | Low |
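Nine studies fall under the diagnostic test accuracy design, yet many entries in the results table report accuracy alone or no metric at all. The sketch below shows how the standard 2×2 diagnostic metrics follow from confusion-matrix counts. The `ConfusionCounts` type and `diagnostic_metrics` helper are hypothetical names, and the counts in the usage lines are invented for illustration, not drawn from any included study.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ConfusionCounts:
    tp: int  # diseased cases the model flags as positive
    fp: int  # healthy cases the model flags as positive
    fn: int  # diseased cases the model misses
    tn: int  # healthy cases the model correctly clears


def _ratio(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def diagnostic_metrics(c: ConfusionCounts) -> Dict[str, float]:
    """Compute the common diagnostic accuracy metrics from 2x2 confusion counts."""
    sensitivity = _ratio(c.tp, c.tp + c.fn)  # also called recall
    specificity = _ratio(c.tn, c.tn + c.fp)
    precision = _ratio(c.tp, c.tp + c.fp)    # positive predictive value
    accuracy = _ratio(c.tp + c.tn, c.tp + c.fp + c.fn + c.tn)
    f1 = _ratio(2 * precision * sensitivity, precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "accuracy": accuracy,
        "f1": f1,
    }


# Hypothetical counts for 200 radiographs (100 with lesions, 100 without).
metrics = diagnostic_metrics(ConfusionCounts(tp=80, fp=10, fn=20, tn=90))
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")

# A model that labels every case negative on a rare-disease set (10 of 1000 positive).
naive = diagnostic_metrics(ConfusionCounts(tp=0, fp=0, fn=10, tn=990))
print(naive["accuracy"], naive["sensitivity"])  # 0.99 0.0
```

The second example shows why accuracy alone is a weak summary: under strong class imbalance a model can score 99% accuracy while detecting no diseased cases, which sensitivity and F1 expose immediately.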

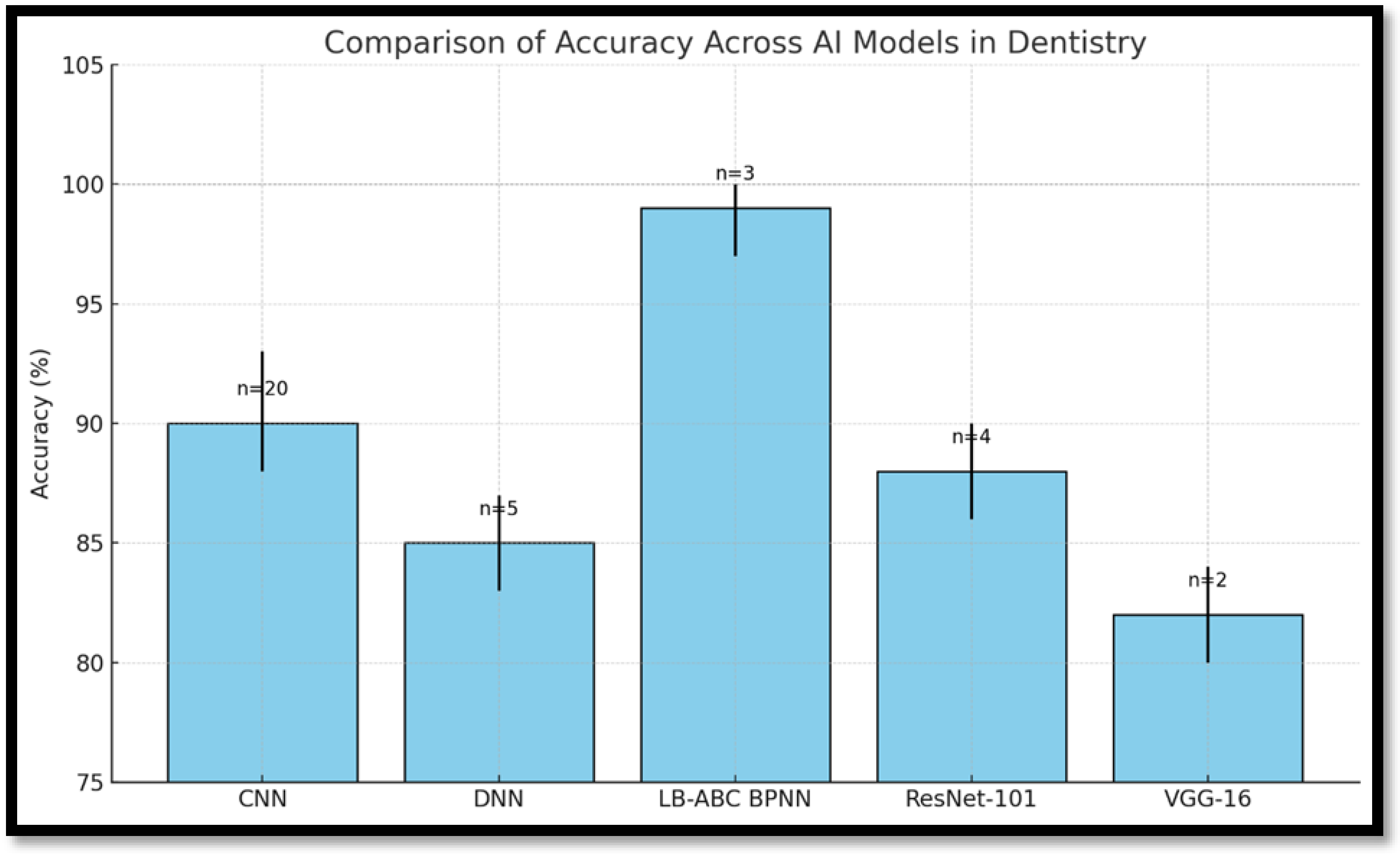

| AI Model | Diagnostic Accuracy | Interpretability | Deployment Feasibility | Suitable Applications |
|---|---|---|---|---|
| CNN | High (85–93%) | Low | Requires GPU resources | Image-based diagnostics (caries, lesions) |
| Hybrid BPNN (LB-ABC) | Very High (~99%) | Moderate | Moderate complexity | Oral cancer, caries classification |
| DNN | High to Very High | Low | Moderate to High | Treatment planning, structured data tasks |
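To make the CNN row concrete, the sketch below implements the operation at the core of a convolutional layer: a small kernel slid over a grayscale image, followed by a ReLU activation. The 2×2 edge kernel is hand-picked for illustration; in an actual CNN the kernel weights are learned from labeled radiographs and stacked across many layers, which is one reason the table rates CNN interpretability as low. This is a pure-Python teaching sketch, not a deployable model; practical systems run such layers in deep learning frameworks on GPUs, in line with the Deployment Feasibility column.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image with stride 1 and no padding.

    Like most deep learning libraries, this computes cross-correlation
    (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    out: Matrix = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def relu(x: Matrix) -> Matrix:
    """Zero out negative responses, as a CNN activation layer would."""
    return [[max(0.0, v) for v in row] for row in x]


# A 4x4 grayscale patch: dark left half, bright right half (a vertical edge).
image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
# Hand-picked kernel that responds to dark-to-bright transitions from left to right.
kernel = [[-1.0, 1.0], [-1.0, 1.0]]

feature_map = relu(conv2d_valid(image, kernel))
print(feature_map)  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map is non-zero only in the column where the edge lies; a trained CNN learns many such kernels and combines their responses to localize structures such as caries or periapical lesions.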

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Khattak, O.; Hashem, A.S.; Alqarni, M.S.; Almufarrij, R.A.S.; Siddiqui, A.Y.; Anis, R.; Ahmad, S.; Fareed, M.A.; Alothmani, O.S.; Alkhershawy, L.H.S.; Alabidin, W.W.Z.; Issrani, R.; Agarwal, A. Deep Learning Applications in Dental Image-Based Diagnostics: A Systematic Review. Healthcare 2025, 13, 1466. https://doi.org/10.3390/healthcare13121466