The Impact of AI Scribes on Streamlining Clinical Documentation: A Systematic Review

Abstract

1. Introduction

2. Methods

2.1. Overview

2.2. Eligibility Criteria

2.3. Information Sources and Search Strategy

2.4. Selection and Data Collection Process

2.5. Synthesis Methods and Reporting Quality Assessment

3. Results

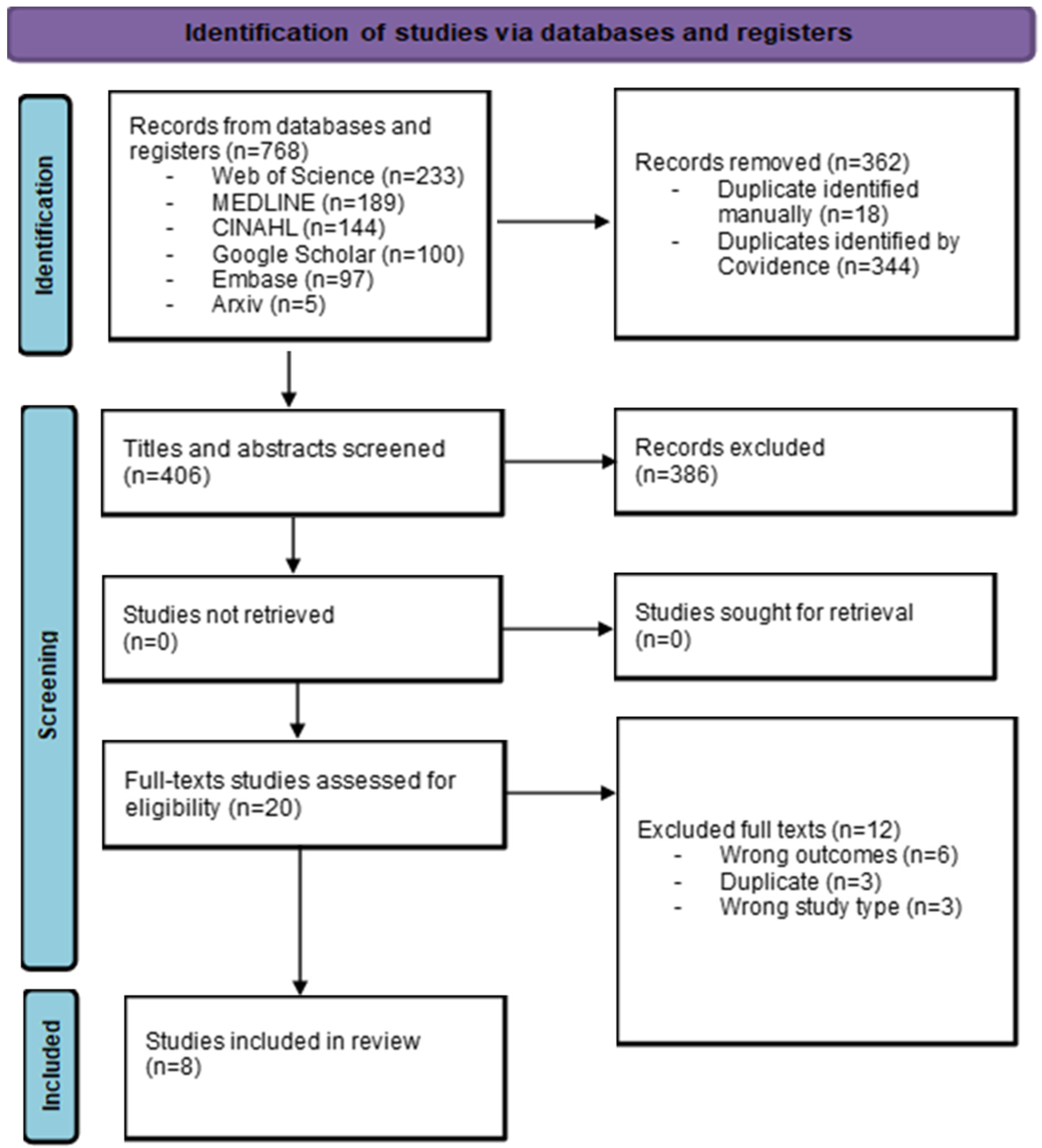

3.1. Study Selection

3.2. Study Characteristics

3.3. Methods and Approaches to Evaluating AI Scribes’ Impacts

3.4. Settings of Included Studies

3.5. Global Characteristics of AI Scribes Used in Interventions

3.6. Features of AI Scribe Systems

3.7. Overview of the Impacts of AI Scribe Systems

3.8. Impacts of AI Scribes on Clinician Outcomes

3.9. Impacts of AI Scribes on Healthcare System Efficiency

3.10. Impacts of AI Scribes on Documentation Outcomes

3.11. Impacts of AI Scribes on Patient Outcomes

3.12. Factors for Successful Adoption and Implementation of AI Scribes in Clinical Settings

3.13. Patient Perspectives and Ethical Considerations

3.14. Quality Assessment of Included Studies

4. Discussion

4.1. Summary of Results

4.2. Strengths

4.3. Limitations

4.3.1. Limitations of This Review

4.3.2. Open Issues and Research Gaps Identified in the Included Literature

4.4. Future Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Appendix A

Medline (Ovid)

Date of the search: 1 November 2024

Database limit: no database search limit has been applied.

| # | Search strategy | Results |
|---|---|---|
| 1 | ((Ambient OR Clinical OR Medical OR automated OR virtual OR Digital OR AI OR “Artificial Intelligence” OR E) adj1 scrib*).ti,ab,kf | 189 |

Embase (Embase.com)

Date of the search: 1 November 2024

Database limit: results have been limited to Embase & prepublications only.

| # | Search strategy | Results |
|---|---|---|
| 1 | ((Ambient OR Clinical OR Medical OR automated OR virtual OR Digital OR AI OR “Artificial Intelligence” OR E) NEAR/1 scrib*):ti,ab,kw | 254 |
| 2 | #1 AND [embase]/lim NOT ([embase]/lim AND [medline]/lim) | 97 |
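Line 2 of the Embase strategy keeps only the records that are indexed in Embase but not also in MEDLINE, so records already reachable through the Ovid search are not retrieved twice. The set logic can be sketched in Python as follows; the record identifiers, variable names, and the `embase_only` helper are hypothetical and serve only to illustrate the filter, not to reproduce data from the review.

```python
# Hypothetical record IDs, for illustration only; not data from the review.
hits = {"r1", "r2", "r3", "r4", "r5"}   # records matched by line 1
embase_lim = {"r1", "r2", "r3", "r4"}   # records carrying the Embase limit
medline_lim = {"r3", "r4", "r5"}        # records carrying the MEDLINE limit


def embase_only(hits, embase_lim, medline_lim):
    """Mirror '#1 AND [embase]/lim NOT ([embase]/lim AND [medline]/lim)'."""
    in_both = embase_lim & medline_lim      # indexed in both sources
    return (hits & embase_lim) - in_both    # Embase hits minus the overlap


unique_to_embase = embase_only(hits, embase_lim, medline_lim)
print(sorted(unique_to_embase))
```

In this toy example only `r1` and `r2` survive, because `r3` and `r4` carry both limits and `r5` is not an Embase record.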

CINAHL (EBSCO)

Date of the search: 1 November 2024

Database limit: no database search limit has been applied.

| # | Search strategy | Results |
|---|---|---|
| 1 | TI ((Ambient OR Clinical OR Medical OR automated OR virtual OR Digital OR AI OR “Artificial Intelligence” OR E) N1 scrib*) OR AB ((Ambient OR Clinical OR Medical OR automated OR virtual OR Digital OR AI OR “Artificial Intelligence” OR E) N1 scrib*) | 144 |

Web of Science

Date of the search: 1 November 2024

Database limit: no database search limit has been applied.

| # | Search strategy | Results |
|---|---|---|
| 1 | TS = ((Ambient OR Clinical OR Medical OR automated OR virtual OR Digital OR AI OR “Artificial Intelligence” OR E) NEAR/1 scrib*) | 321 |
| 2 | TS = (health* OR care OR Medic* OR clinical) | 11,720,971 |
| 3 | #1 AND #2 | 233 |
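The four bibliographic databases share one concept (a qualifying term within one word of `scrib*`) and differ only in the proximity operator and the field syntax. As a rough sketch, the four query strings can be generated from a single term list in Python; the names `TERMS`, `proximity_clause`, and `build_queries` are illustrative assumptions, and straight quotes are used around the phrase because that is what search interfaces usually expect.

```python
# Shared qualifying terms, copied from the search strategies in this appendix.
TERMS = ["Ambient", "Clinical", "Medical", "automated", "virtual",
         "Digital", "AI", '"Artificial Intelligence"', "E"]


def proximity_clause(operator: str) -> str:
    """Return '((term OR term ...) <operator> scrib*)'."""
    return f"(({' OR '.join(TERMS)}) {operator} scrib*)"


def build_queries() -> dict:
    """Assemble one query string per database, following the syntax shown above."""
    n1 = proximity_clause("N1")
    return {
        "Medline (Ovid)": proximity_clause("adj1") + ".ti,ab,kf",
        "Embase": proximity_clause("NEAR/1") + ":ti,ab,kw",
        "CINAHL (EBSCO)": f"TI {n1} OR AB {n1}",
        "Web of Science": "TS = " + proximity_clause("NEAR/1"),
    }


queries = build_queries()
for database, query in queries.items():
    print(f"{database}: {query}")
```

Keeping the term list in one place makes it easier to check that every database was searched with the same vocabulary.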

arXiv (https://arxiv.org/)

Date of the search: 1 November 2024

Database limit: search in all fields.

| # | Search strategy | Results |
|---|---|---|
| 1 | “Ambient scribe” OR “Clinical scribe” OR “Medical scribe” OR “automated scribe” OR “virtual scribe” OR “Digital scribe” OR “AI scribe” OR “E scribe” | 5 |

Google Scholar (https://harzing.com/resources/publish-or-perish)

Date of the search: 1 November 2024

Database limit: only the first 100 results were considered; the citations and patents options were removed.

| # | Search strategy | Results |
|---|---|---|
| 1 | “Ambient scribe” OR “Clinical scribe” OR “Medical scribe” OR “automated scribe” OR “virtual scribe” OR “Digital scribe” OR “AI scribe” OR “E scribe” | 100 |
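For orientation, the per-database counts in this appendix can be added up. The short Python tally below assumes that the last numbered line of each strategy is the set that was exported for screening, which the appendix does not state explicitly; the total is therefore a count before deduplication and is not necessarily the figure reported in the flow diagram.

```python
# Result counts copied from the last numbered line of each search strategy above.
# Assumption: that last line is the set exported for screening.
final_hits = {
    "Medline (Ovid)": 189,
    "Embase": 97,
    "CINAHL (EBSCO)": 144,
    "Web of Science": 233,
    "arXiv": 5,
    "Google Scholar": 100,
}

total_before_dedup = sum(final_hits.values())
print(total_before_dedup)  # 768
```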

Appendix B

| Studies | Criteria from the Mixed Methods Appraisal Tool | ||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| S1 | S2 | 1.1 | 1.2 | 1.3 | 1.4 | 1.5 | 2.1 | 2.2 | 2.3 | 2.4 | 2.5 | 3.1 | 3.2 | 3.3 | 3.4 | 3.5 | 4.1 | 4.2 | 4.3 | 4.4 | 4.5 | 5.1 | 5.2 | 5.3 | 5.4 | 5.5 | |

| Haberle [31] | Yes | Yes | 1 | 0 | 0 | 1 | 1 | ||||||||||||||||||||

| Hudelson [32] | Yes | Yes | 1 | 1 | 0 | 1 | 1 | 1 | 1 | 1 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | ||||||||||

| Islam [33] | Yes | Yes | 1 | 1 | 1 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | |||||||||||||||

| Kernberg [34] | Yes | Yes | 0 | 1 | 1 | 1 | 1 | ||||||||||||||||||||

| Nguyen [35] | Yes | Yes | 1 | 1 | 0 | 1 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | ||||||||||

| Sezgin [36] | Yes | No | 1 | 1 | 1 | 1 | 1 | ||||||||||||||||||||

| vanBuchem [37] | Yes | Yes | 0 | 0 | 1 | 1 | 1 | ||||||||||||||||||||

| Wang [38] | Yes | Yes | 0 | 1 | 1 | 1 | 1 | ||||||||||||||||||||

References

1. Johnson, K.B.; Neuss, M.J.; Detmer, D.E. Electronic health records and clinician burnout: A story of three eras. J. Am. Med. Inform. Assoc. 2021, 28, 967–973.
2. Gardner, R.L.; Cooper, E.; Haskell, J.; Harris, D.A.; Poplau, S.; Kroth, P.J.; Linzer, M. Physician stress and burnout: The impact of health information technology. J. Am. Med. Inform. Assoc. 2019, 26, 106–114.
3. Canadian Medical Association. Administrative burden: Facts. 2024. Available online: https://www.cma.ca/our-focus/administrative-burden/facts (accessed on 10 December 2024).
4. Rao, S.K.; Kimball, A.B.; Lehrhoff, S.R.; Hidrue, M.K.; Colton, D.G.; Ferris, T.G.; Torchiana, D.F. The impact of administrative burden on academic physicians: Results of a hospital-wide physician survey. Acad. Med. 2017, 92, 237–243.
5. Gesner, E.J.; Gazarian, P.K.; Dykes, P.C. The burden and burnout in documenting patient care: An integrative literature review. Stud. Health Technol. Inform. 2019, 264, 1194–1198.
6. Tran, B.D.; Rosenbaum, K.; Zheng, K. An interview study with medical scribes on how their work may alleviate clinician burnout through delegated health IT tasks. J. Am. Med. Inform. Assoc. 2021, 28, 907–914.
7. Corby, S.; Ash, J.S.; Mohan, V.; Becton, J.; Solberg, N.; Bergstrom, R.; Orwoll, B.; Hoekstra, C.; Gold, J.A. A qualitative study of provider burnout: Do medical scribes hinder or help? JAMIA Open 2021, 4, ooab047.
8. Sattler, A.; Rydel, T.; Nguyen, C.; Lin, S. One year of family physicians’ observations on working with medical scribes. J. Am. Board Fam. Med. 2018, 31, 49–56.
9. Walker, K.J.; Dunlop, W.; Liew, D.; Staples, M.P.; Johnson, M.; Ben-Meir, M.; Rodda, H.G.; Turner, I.; Phillips, D. An economic evaluation of the costs of training a medical scribe to work in Emergency Medicine. Emerg. Med. J. 2016, 33, 865–869.
10. Ghatnekar, S.; Faletsky, A.; Nambudiri, V.E. Digital scribes in dermatology: Implications for practice. J. Am. Acad. Dermatol. 2022, 86, 968–969.
11. Quiroz, J.C.; Laranjo, L.; Kocaballi, A.B.; Berkovsky, S.; Rezazadegan, D.; Coiera, E. Challenges of developing a digital scribe to reduce clinical documentation burden. NPJ Digit. Med. 2019, 2, 114.
12. Coiera, E.; Kocaballi, B.; Halamka, J.; Laranjo, L. The digital scribe. NPJ Digit. Med. 2018, 1, 58.
13. Nawab, K. Artificial intelligence scribe: A new era in medical documentation. Artif. Intell. Health 2024, 1, 12–15.
14. Solano, L.A.; Smith, R. Can AI Hear Me? Nine Facts about the Artificial Intelligence scribe. JNHMA 2024, 2, 9–15.
15. Morandín-Ahuerma, F. What is artificial intelligence? Int. J. Res. Publ. Rev. 2022, 3, 1947–1951.
16. Rathore, F.A.; Rathore, M.A. The emerging role of artificial intelligence in healthcare. J. Pak. Med. Assoc. 2023, 73, 1368–1369.
17. Mishra, V.; Ugemuge, S.; Tiwade, Y. Artificial intelligence changing the future of healthcare diagnostics. J. Cell. Biotechnol. 2023, 9, 161–168.
18. van Buchem, M.M.; Boosman, H.; Bauer, M.P.; Kant, I.M.J.; Cammel, S.A.; Steyerberg, E.W. The digital scribe in clinical practice: A scoping review and research agenda. NPJ Digit. Med. 2021, 4, 57.
19. Tierney, A.A.; Gayre, G.; Hoberman, B.; Mattern, B.; Ballesca, M.; Kipnis, P.; Liu, V.; Lee, K. Ambient artificial intelligence scribes to alleviate the burden of clinical documentation. NEJM Catal. Innov. Care Deliv. 2024, 5, CAT.23.0404.
20. Finley, G.; Edwards, E.; Robinson, A.; Brenndoerfer, M.; Sadoughi, N.; Fone, J.; Axtmann, N.; Miller, M.; Suendermann-Oeft, D. An automated medical scribe for documenting clinical encounters. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Demonstrations, New Orleans, LA, USA, 2–4 June 2018; pp. 11–15.
21. Crampton, N.H. Ambient virtual scribes: Mutuo Health’s AutoScribe as a case study of artificial intelligence-based technology. Healthc. Manag. Forum 2020, 33, 34–38.
22. Shah, S.J.; Devon-Sand, A.; Ma, S.P.; Jeong, Y.; Crowell, T.; Smith, M.; Liang, A.S.; Delahaie, C.; Hsia, C.; Shanafelt, T.; et al. Ambient artificial intelligence scribes: Physician burnout and perspectives on usability and documentation burden. J. Am. Med. Inform. Assoc. 2025, 32, 375–380.
23. Biswas, A.; Talukdar, W. Intelligent clinical documentation: Harnessing generative AI for patient-centric clinical note generation. Int. J. Inf. Technol. 2024, 12, 1483.
24. Lynn, L.A. Artificial intelligence systems for complex decision-making in acute care medicine: A review. Patient Saf. Surg. 2019, 13, 6.
25. Higgins, J.P.T.; Thomas, J.; Chandler, J.; Cumpston, M.; Li, T.; Page, M.J.; Welch, V.A. (Eds.) Cochrane Handbook for Systematic Reviews of Interventions Version 6.5 (updated August 2024); Cochrane: London, UK, 2024; Available online: www.training.cochrane.org/handbook (accessed on 12 December 2024).
26. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. PLoS Med. 2021, 18, e1003583.
27. Covidence. Covidence Systematic Review Software; Veritas Health Innovation: Melbourne, Australia; Available online: https://www.covidence.org/ (accessed on 12 December 2024).
28. Hong, Q.N.; Pluye, P.; Fàbregues, S.; Bartlett, G.; Boardman, F.; Cargo, M.; Dagenais, P.; Gagnon, M.P.; Griffiths, F.; Nicolau, B.; et al. Improving the content validity of the mixed methods appraisal tool: A modified e-Delphi study. J. Clin. Epidemiol. 2019, 111, 49–59.
29. Hong, Q.N.; Fàbregues, S.; Bartlett, G.; Boardman, F.; Cargo, M.; Dagenais, P.; Gagnon, M.P.; Griffiths, F.; Nicolau, B.; O’Cathain, A.; et al. The mixed methods appraisal tool (MMAT) version 2018 for information professionals and researchers. EFI 2018, 34, 285–291.
30. Hong, Q.N.; Pluye, P.; Fàbregues, S.; Bartlett, G.; Boardman, F.; Cargo, M.; Dagenais, P.; Gagnon, M.P.; Griffiths, F.; Nicolau, B.; et al. Mixed methods appraisal tool (MMAT) version 2018, user guide. Mix. Methods Apprais. Tool 2018, 1148552, 1–7. Available online: https://content.iospress.com/articles/education-for-information/efi180221 (accessed on 11 February 2025).
31. Haberle, T.; Cleveland, C.; Snow, G.L.; Barber, C.; Stookey, N.; Thornock, C.; Younger, L.; Mullahkhel, B.; Ize-Ludlow, D. The impact of nuance DAX ambient listening AI documentation: A cohort study. J. Am. Med. Inform. Assoc. 2024, 31, 975–979.
32. Hudelson, C.; Gunderson, M.A.; Pestka, D.; Christiaansen, T.; Stotka, B.; Kissock, L.; Markowitz, R.; Badlani, S.; Melton, G.B. Selection and Implementation of Virtual Scribe Solutions to Reduce Documentation Burden: A Mixed Methods Pilot. AMIA Summits Transl. Sci. Proc. 2024, 2024, 230–238.
33. Islam, M.N.; Mim, S.T.; Tasfia, T.; Hossain, M.M. Enhancing patient treatment through automation: The development of an efficient scribe and prescribe system. Inform. Med. Unlocked 2024, 45, 101456.
34. Kernberg, A.; Gold, J.A.; Mohan, V. Using ChatGPT-4 to Create Structured Medical Notes From Audio Recordings of Physician-Patient Encounters: Comparative Study. J. Med. Internet Res. 2024, 26, e54419.
35. Nguyen, O.T.; Turner, K.; Charles, D.; Sprow, O.; Perkins, R.; Hong, Y.R.; Islam, J.Y.; Khanna, N.; Alishahi Tabriz, A.; Hallanger-Johnson, J.; et al. Implementing digital scribes to reduce electronic health record documentation burden among cancer care clinicians: A mixed-methods pilot study. JCO Clin. Cancer Inform. 2023, 7, e2200166.
36. Sezgin, E.; Sirrianni, J.W.; Kranz, K. Evaluation of a Digital Scribe: Conversation Summarization for Emergency Department Consultation Calls. Appl. Clin. Inform. 2024, 15, 600–611.
37. van Buchem, M.M.; Kant, I.M.; King, L.; Kazmaier, J.; Steyerberg, E.W.; Bauer, M.P. Impact of a digital scribe system on clinical documentation time and quality: Usability study. JMIR AI 2024, 3, e60020.
38. Wang, J.; Lavender, M.; Hoque, E.; Brophy, P.; Kautz, H. A patient-centred digital scribe for automatic medical documentation. JAMIA Open 2021, 4, ooab003.
39. PRISMA Statement. PRISMA 2020 Flow Diagram. Published 2021. Available online: https://www.prisma-statement.org/prisma-2020-flow-diagram (accessed on 11 February 2025).
40. Tran, B.D.; Chen, Y.; Liu, S.; Zheng, K. How does medical scribes’ work inform development of speech-based clinical documentation technologies? A systematic review. J. Am. Med. Inform. Assoc. 2020, 27, 808–817.
41. Benko, S.; Idarraga, A.; Bohl, D.D.; Hamid, K.S. Virtual scribe services decrease documentation burden without affecting patient satisfaction: A randomized controlled trial. Foot Ankle Orthop. 2019, 4, S105.
42. Li, B.; Crampton, N.; Yeates, T.; Xia, Y.; Tian, X.; Truong, K. Automating clinical documentation with digital scribes: Understanding the impact on physicians. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–12.
43. Mojeski, J.A.; Singh, J.; McCabe, J.; Colegio, O.R.; Rothman, I. An institutional scribe program increases productivity in a dermatology clinic. Health Policy Technol. 2020, 9, 174–176.
44. Corby, S.; Gold, J.A.; Mohan, V.; Solberg, N.; Becton, J.; Bergstrom, R.; Orwoll, B.; Hoekstra, C.; Ash, J.S. A sociotechnical multiple perspectives approach to the use of medical scribes: A deeper dive into the scribe-provider interaction. In AMIA Annual Symposium Proceedings; American Medical Informatics Association: Bethesda, MD, USA, 2019; pp. 333–342.
45. Reuben, D.B.; Miller, N.; Glazier, E.; Koretz, B.K. Frontline account: Physician partners: An antidote to the electronic health record. J. Gen. Intern. Med. 2016, 31, 961–963.
46. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

| PICO(S) Elements [26] | Elements in this Review |
|---|---|
| Participants | All healthcare providers engaged in clinical documentation |
| Intervention | AI tools designed to streamline clinical documentation, including: |
| Comparison | Usual administrative practices with no additional AI support. |
| Outcomes | |
| Setting | All clinical settings |
| Specifications for the studies | |
| Language and publication year | No restrictions. |

| Studies | Country | Year | Aim of Study | Study Design | Study Setting and Participants | Outcomes Assessed |

|---|---|---|---|---|---|---|

| Haberle et al. [31] | USA | 2024 | To assess the impact of an ambient listening and digital scribing solution, Nuance Dragon Ambient eXperience (DAX), on caregiver engagement, time spent on Electronic Health Records (EHR), including after-hours use, productivity, panel size for value-based care providers, documentation timeliness, and Current Procedural Terminology (CPT) submissions. | Peer-matched controlled cohort study | Outpatient clinics within an integrated healthcare system. A total of 99 providers from 12 specialties participated. Seventy-six matched control group providers were included in the analysis. | Primary: provider engagement, productivity, panel size, documentation, and coding timeliness. Secondary: patient safety, likelihood to recommend, and number of patients opting out. |

| Hudelson et al. [32] | USA | 2024 | (1) To identify and test virtual scribe solutions, both live and asynchronous, tailored to the healthcare system’s needs; (2) to evaluate and implement these technologies to reduce clinicians’ documentation burden, a major contributor to physician burnout. | Mixed methods pilot study | An integrated academic healthcare system. Sixteen clinicians from diverse specialties. | Primary: clinicians’ documentation burden, clinicians’ overall experience. Secondary: none. |

| Islam et al. [33] | Bangladesh | 2024 | (1) To develop an automated scribe and intelligent prescribing system for health professionals by identifying user requirements; (2) to design a system that generates medical notes and prescriptions efficiently from voice commands, enhancing the usability of digital scribe solutions; and (3) to evaluate the system’s performance to ensure it meets clinicians’ needs and improves documentation processes. | AI system development process with a post-test questionnaire | A medical college hospital; 17 diabetes patients and six doctors participated. | Primary: similarity between AI-generated scribes and prescriptions and those generated manually; the system’s usability. Secondary: none. |

| Kernberg et al. [34] | USA | 2024 | To evaluate the accuracy and quality of Subjective, Objective, Assessment, and Plan (SOAP) notes generated by ChatGPT-4 using established History and Physical Examination transcripts as the gold standard, identifying errors and assessing performance across categories. | Comparative Study | Fourteen simulated patient-provider encounters with professional standardized patients, representing a wide range of ambulatory specialties, and two clinical experts. | Primary: performance of an AI model (ChatGPT-4), e.g., variations in errors, accuracy, and quality of the generated notes, using established “History and Physical Examination” transcripts as the gold standard. Secondary: none. |

| Nguyen et al. [35] | USA | 2023 | To pilot a digital scribe in live clinic settings at a National Cancer Institute–designated Comprehensive Cancer Center, assess its impact on clinician well-being and documentation burden, and identify facilitators and barriers to effective implementation. | Mixed-methods longitudinal pilot study | Clinic settings at a National Cancer Institute–designated Comprehensive Cancer Center, evaluated by 21 “clinician champions”. | Primary: Impact on clinician well-being and documentation burden, implementation facilitators and barriers for effective AI scribe use, feasibility, and usability. Secondary: Clinicians reported some patients expressed unease at having their visits recorded on a smartphone. |

| Sezgin et al. [36] | USA | 2024 | To present a proof-of-concept digital scribe system for summarizing Emergency Department consultation calls to support clinical documentation and report its performance. | Usability Study. Quantitative descriptive. | Nationwide Children’s Hospital Physician Consult and Transfer Center. One hundred phone call recordings from 100 unique callers (physicians) for ED referrals were used. | Primary: Performance (e.g., accuracy rates in medical records, ability to comprehend and replicate the structure and flow of clinical dialogue) of four pre-trained large language models (T5-small, T5-base, PEGASUS-PubMed, and BART-Large-CNN) to support clinical documentation. Secondary: None. |

| van Buchem et al. [37] | Netherlands | 2023 | To assess the impact of a Dutch digital scribe system on clinical documentation efficiency and quality. | Usability Study | Leiden University Medical Center. Twenty-two medical students with experience in clinical practice and documentation. | Primary: Clinical documentation efficiency (i.e., summarization time) and quality (e.g., accuracy, usefulness). Secondary: None. |

| Wang et al. [38] | USA | 2021 | To develop a digital scribe for automatic medical documentation using patient-centered communication elements. | Simulation of patient encounters. Quantitative descriptive. | Across multiple departments within a university medical center with two medical students. | Primary: efficiency, training required, documentation speed, patient-centered communication, and reliability. Secondary: None. |

| Studies | Type of AI Scribe (Name) | Key Global Characteristics | How the System Works | |||||

|---|---|---|---|---|---|---|---|---|

| CD | RT | AN | ADE | AS | AA | |||

| Haberle et al. [31] | Mobile App (Nuance DAX) | An AI-powered, voice-enabled solution that automatically documents clinical encounters using ambient listening and conversational AI to generate comprehensive documentation from patient-provider conversations. | X | X | X | X | X | X |

| Hudelson et al. [32] | Mobile App (Not disclosed) | Two virtual scribe solutions were compared: (1) Live Virtual Scribe (All Human-Driven) and (2) Asynchronous Virtual Scribe (Hybrid AI/Human), which uses audio recordings, machine learning, and NLP to pre-populate notes, then reviewed and finalized by a human scribe within 4 h, working asynchronously. | X | X | X | X | ||

| Islam et al. [33] | Software (Not disclosed) | The system converts patient voice descriptions into text to generate medical notes and uses extracted medical terms for this purpose. It also creates e-prescriptions from doctors’ voice commands. Using NLP and machine learning, it records medical information and generates prescriptions based on voice input from healthcare professionals. | X | X | X | X | X | X |

| Kernberg et al. [34] | Chatbot (Not disclosed) | ChatGPT-4 generates notes in the Subjective, Objective, Assessment, and Plan (SOAP) format, a standard clinical documentation model that organizes interview data into structured headers and provides a clear framework for healthcare professionals to record and share patient information. | X | X | X | |||

| Nguyen et al. [35] | Mobile App (Dragon Ambient eXperience) | DS smartphone app: The digital scribe’s AI components structured the recorded information into a visit note, and the vendor’s staff performed initial editing before the notes were released to the clinician. | X | X | ||||

| Sezgin et al. [36] | Pre-trained large language models (Not disclosed) | The system converts audio recordings into text: AWS Transcribe transcribes the audio, and an annotator reviews and corrects the transcript. Transcription documents are organized as text input for the model, with nurse summary notes used as reference. Four pre-trained language models (T5-small, T5-base, PEGASUS-PubMed, and BART-Large-CNN) are employed to summarize clinical conversations based on their strengths in the healthcare domain. | X | |||||

| van Buchem et al. [37] | Software (Autoscriber) | A web-based tool that transcribes and summarizes medical conversations in Dutch, English, and German. It uses a transformer-based speech-to-text model fine-tuned to clinical data, along with large language models like GPT-3.5 and GPT-4, and a custom prompt structure for summarization. The tool also features self-learning functionality, which was not evaluated in this study. | X | X | ||||

| Wang et al. [38] | Mobile App/Software (Not disclosed) | It uses automatic speech recognition and natural language processing to transcribe and summarize conversations between healthcare providers and patients into written text. | X | X | X | X | X | |

| Categories of Outcomes | Impacts of AI Scribes |
|---|---|
| (1) Clinician outcomes (e.g., experience with the tool, stress, burnout, documentation burden, etc.) | Provider engagement: |
| (2) Healthcare system efficiency (e.g., wait times, patient throughput, costs, etc.) | Productivity (the volume and intensity of clinical services provided by healthcare providers): |
| (3) Documentation outcomes (e.g., accuracy, relevance, deficiency rates, etc.) | Documentation time and EHR usage: |
| (4) Patient outcomes (e.g., safety or quality of care, experience with technology, etc.) | Patient safety: |

| Categories of Factors | Items for Successful Adoption and Implementation of AI Scribes in Clinical Settings |
|---|---|
| (1) Training and support needs | |
| (2) Organizational preparation | |
| (3) Technical considerations and improvements | |
| (4) Evaluation and workflow integration | |
| (5) Ethical considerations | |
| (6) Further research and future directions | |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sasseville, M.; Yousefi, F.; Ouellet, S.; Naye, F.; Stefan, T.; Carnovale, V.; Bergeron, F.; Ling, L.; Gheorghiu, B.; Hagens, S.; Gareau-Lajoie, S.; LeBlanc, A. The Impact of AI Scribes on Streamlining Clinical Documentation: A Systematic Review. Healthcare 2025, 13, 1447. https://doi.org/10.3390/healthcare13121447