Abstract

Previous studies have explored the use of smart glasses in telemedicine, but none has investigated their use in teleradiography. The purpose of this study was to implement a six-month pilot program for Western Australian X-ray operators (XROs) to use smart glasses to obtain assisted reality support in their radiography practice from their supervising radiographers, and to evaluate its effectiveness in terms of XROs’ competence improvement and equipment usability. This experimental study employed a pretest–posttest design: the XROs’ competence (including their X-ray image quality) and the usability of the smart glasses were evaluated by XROs in two remote centers and their supervising radiographers from two sites before and after the program, using four questionnaire sets and an X-ray image quality review. A paired t-test was used to compare the mean values of the pre- and post-intervention pairs of 11-point scale questionnaire and image quality review items to determine any improvement in the XROs’ radiography competence. Content analysis was used to analyze open questions about the equipment usability. Our findings, based on 13 participants (11 XROs and 2 supervising radiographers) and 2053 X-ray images, show that the assisted reality support helped to improve the XROs’ radiography competence (specifically X-ray image quality), with mean post-intervention competence values of 6.16–7.39 (out of 10) and statistically significant improvements (p < 0.001–0.05), and that the equipment was considered effective for this purpose but not easy to use.

Keywords: competence; Google Glass; medical imaging; nurse; radiography; radiology; rural health; telemedicine; videoconferencing

1. Introduction

Australian X-ray operators (XROs) are healthcare workers, often nurses, approved by their jurisdiction’s regulator, such as the Radiological Council of Western Australia (WA), to undertake a limited range of basic radiographic examinations, such as chest and extremity X-rays, in rural/remote areas where a radiographer is not available. This unavailability of radiographers can be attributed to reasons such as the difficulty of recruiting full-time radiographers and a radiographer’s position being deemed redundant because of a small rural population [1]. The XRO model enables patients in rural/remote regions to receive a basic radiography service in their local areas and, hence, avoid long-distance travel and unnecessary financial, emotional and social burdens. However, from the XROs’ perspective, the radiography duty is an extra role beyond their primary profession which requires a transfer of skills. This extended role, together with geographical isolation, creates various challenges for the XROs, including a lack of professional support and continuing professional development (CPD) opportunities, a communication barrier and responsibility overload. These potentially affect their wellbeing, service quality and patient safety [1].

Currently, the major employer of WA XROs, WA Country Health Service (WACHS), arranges for radiographers from larger clinical (expert) centers to provide telephone support to its XROs practicing in smaller rural/remote centers, both to address these issues and as a means of CPD for the XROs. Although telephone support is common in traditional telehealth practice [2], our previous qualitative study on WA XROs’ competence and on barriers and facilitators to their radiography practice, published in 2020, identified that videoconferencing (VC) support appears to be a better approach [1]. This kind of VC support for XROs proved effective for improving the quality of X-ray images produced by XROs in another Australian state (Queensland) in 2017 [3]. However, the major issues with a traditional VC platform, such as the one used in the Queensland XROs study [3], are the limited field of view of the fixed camera, which restricts the experts’ (supervising radiographers’) understanding of the clinical situations faced by their XROs, and the need for hand control to operate the VC equipment [2,4].

Recently, the use of smart glasses in healthcare has become popular, although their first use was reported in 2013 when Google Glass became available [2,4,5]. Smart glasses are a head-mounted computing device with wireless connectivity, a camera, a video display and a headset attached to a frame that the user wears like traditional eyeglasses. Unlike a traditional camera, smart glasses allow the wearer to share their first-person view (without any blind spots) with remote experts through the head-mounted camera and integrated VC software. The remote experts can provide real-time audio and/or visual guidance on managing various situations (via the same VC platform), which the wearer receives through the headset and video display. Control can be completely hands-free through voice and/or motion recognition [2,4]. Examples of recent application areas in healthcare include basic life support [6]; medical [7] and nursing student training [8,9,10]; emergency medical service delivery [11,12]; and telehealth and telemedicine practice for metropolitan, rural and remote areas involving general and specialist physicians such as neuroradiologists, neurosurgeons and pediatric ophthalmologists, nurses, and emergency medical technicians [5,13,14,15,16]. These uses indicate that smart glasses could be a better technological solution than the traditional VC support reported in the aforementioned Queensland XROs’ CPD study [3] for XROs in WA rural/remote areas to seek professional support from their supervising radiographers in the larger clinical centers when undertaking challenging radiography examinations, and to improve their radiographic skills, including X-ray image quality, over time as a CPD channel.

Nevertheless, a systematic review of 21 studies on the use of smart glasses in telemedicine, published in 2023, revealed several barriers to the successful implementation of smart glasses in telemedicine, such as ergonomics, human factors, technical limitations, and organizational, security and privacy issues; it also found that no study had yet investigated the use of smart glasses in teleradiography [2]. The purpose of this study was to implement a pilot program for WA XROs to use smart glasses to obtain remote support in their radiography practice from their supervising radiographers, and to evaluate the effectiveness of this assisted reality support in terms of the XROs’ competence improvement and the usability of the assisted reality equipment. It was hypothesized that the assisted reality support would help to improve the WA XROs’ radiography competence and X-ray image quality, and that the equipment would be considered easy to use and effective.

2. Materials and Methods

This was an experimental study with methods similar to those of Rawle et al. [3]. A pretest–posttest design was employed, with the XROs’ competence (including their X-ray image quality) and the usability of the smart glasses evaluated by XROs and supervising radiographers before and after the pilot program. Two WACHS remote clinical centers with relatively large numbers of radiography cases performed by their XROs per year and their (two) corresponding supervising sites (expert centers) were selected for piloting the assisted reality support for six months (1 October 2023–30 March 2024). These centers were chosen because (1) WACHS was the major employer of WA XROs; (2) approximately 1000 cases were performed by the two XROs’ centers per year in total; and (3) all four centers were far (about 1400–2500 km) away from the WA capital, Perth [1]. The required sample size was calculated using Equation (1) [17].

where n is the sample size required; Zα is 1.96 for a two-tailed test with a significance level of 0.05; Z1−β is 0.8416 for a power of 80%; estimated σ is 1.2; and estimated effect size is 20% based on the similar study by Rawle et al. [3].
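Equation (1) itself does not appear in this copy of the text. The parameter values listed above are consistent with the standard per-arm formula for comparing two means, n = 2σ²(Zα + Z1−β)² / Δ², so the sketch below assumes that form rather than quoting the source. Reading the 20% effect size as Δ = 0.2 scale points is likewise an assumption, and the function and variable names are illustrative only.

```python
def sample_size_per_arm(z_alpha: float, z_beta: float, sigma: float, delta: float) -> float:
    """Unrounded per-arm sample size for comparing two means.

    Assumed form (not quoted from the source):
        n = 2 * sigma^2 * (z_alpha + z_beta)^2 / delta^2
    """
    if delta <= 0:
        raise ValueError("delta must be positive")
    return 2 * sigma**2 * (z_alpha + z_beta) ** 2 / delta**2


# Values stated in the text; delta = 0.2 is an assumed reading of "20%".
n_raw = sample_size_per_arm(z_alpha=1.96, z_beta=0.8416, sigma=1.2, delta=0.2)
n_per_arm = round(n_raw)      # nearest integer, matching the reported per-arm figure
n_total = 2 * n_per_arm       # two arms: pre-intervention and intervention

print(f"unrounded n = {n_raw:.3f}, per arm = {n_per_arm}, total = {n_total}")
```

Under these assumptions the unrounded value is just above 565, which rounds to the 565 images per arm and 1130 images in total reported in the text. Rounding up, as is often done for sample sizes, would give 566 per arm.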

According to the calculation, 565 X-ray images were required for image quality review per arm, i.e., 1130 images in total. The smart glasses used in our pilot program were the RealWear Navigator 500 (RealWear Inc., Vancouver, WA, USA) [10,13,18,19]. The study was conducted in accordance with the Declaration of Helsinki, and approved by the WACHS Human Research Ethics Committee (HREC) and Research Governance Unit (project reference number RGS0000005633; dates of approval 27 October 2022 and 2 December 2022), and the Curtin University HREC (approval number HRE2022-0610; date of approval 28 October 2022). Written informed consent was obtained from all participants, including XROs, radiographers and patients involved in the study, except for the retrospective review of patients’ X-ray images taken before the intervention, for which a waiver of consent was approved by the WACHS HREC and Research Governance Unit, and the Curtin University HREC [3].

2.1. Participant Selection

All XROs, and their patients and supervising radiographers, of the four centers were invited to participate in this pilot program. The following were the participants’ inclusion and exclusion criteria [3].

2.1.1. Inclusion Criteria

XROs

- Approved by the Radiological Council of WA as XROs;

- Employed by WACHS.

Supervising Radiographers

- Registered with Australian Health Practitioner Regulation Agency as diagnostic radiographers;

- Appointed by the Radiological Council of WA as supervising approved radiographers;

- Employed by WACHS.

Patients

- Pediatric and adult patients with X-ray examinations performed by the XROs between 1 April 2023 and 30 September 2023 (pre-intervention period for the retrospective X-ray image quality review), and between 1 October 2023 and 30 March 2024 (intervention period).

2.1.2. Exclusion Criteria

XROs, Supervising Radiographers and Patients

- Refusal to consent to participation/unable to obtain consent.

2.2. Assisted Reality Support Program

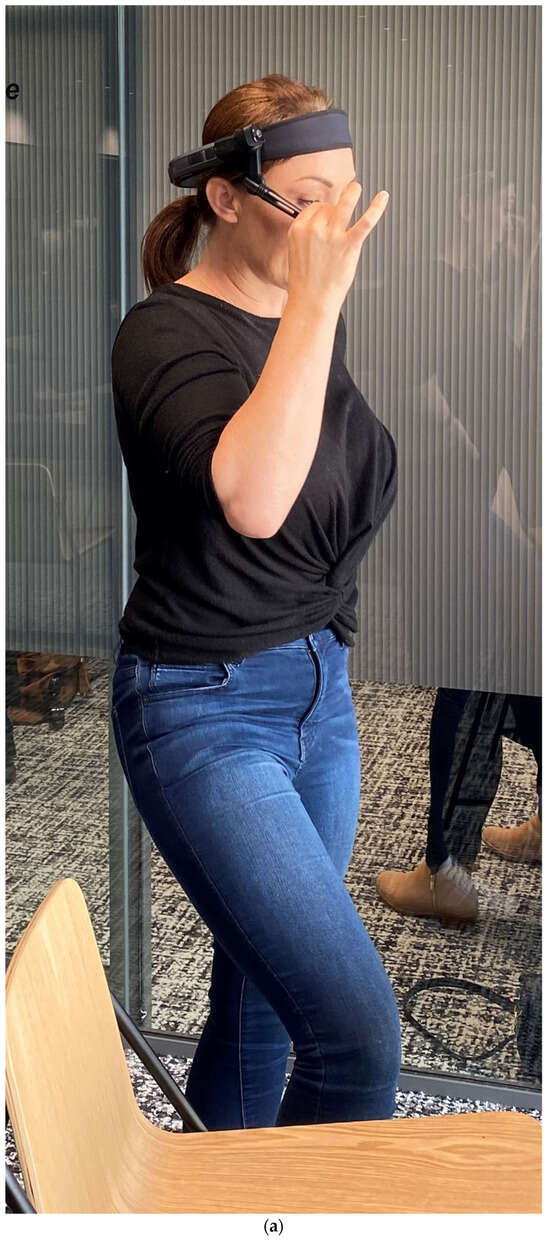

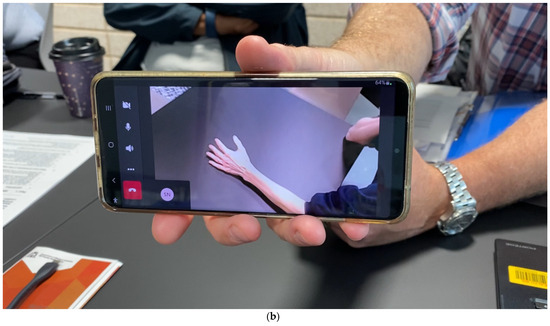

A train-the-trainer model was adopted: the assisted reality equipment vendor trained our research team, and our team subsequently trained the XROs and their supervising radiographers to use the equipment appropriately before the assisted reality support was implemented in clinical practice [10,20]. Each training session took place at either the participants’ centers or our university and lasted 1–2 h. Figure 1 shows one of our equipment training sessions, in which an XRO wore the RealWear Navigator 500 smart glasses to perform a simulated hand X-ray examination under the guidance of her supervising radiographer. During that training session, both a smartphone (Galaxy S22, Samsung Group, Yeongtong-gu, Suwon, South Korea) and an Apple iPad 10.2” (9th generation) with 64-gigabyte data storage and WiFi plus cellular connectivity (Cupertino, CA, USA) were used by the supervising radiographer to provide the guidance through Microsoft Teams (version 4.20.1, Microsoft Corporation, Redmond, WA, USA), which was the only VC platform approved by WACHS for clinical use. However, for the assisted reality support intervention, only the Apple iPads were used by the supervising radiographers to provide the guidance through Microsoft Teams [21]. After the training, all XROs and their supervising radiographers were given one-page quick-reference guides for requesting and providing the assisted reality support, respectively [19].

Figure 1. Equipment training session for (a) X-ray operators to complete a simulated hand X-ray examination with assisted reality support from (b) their supervising radiographer at our university.

Each supervising radiographer’s site was given one iPad (Apple Inc., Cupertino, CA, USA) and every XRO’s center was provided one smart glasses device (RealWear Inc., Vancouver, WA, USA). Both iPad and smart glasses were connected to their centers’ network through WiFi with a fourth-generation broadband cellular network (4G) as a backup internet connection [2]. The XROs only used the assisted reality support when they encountered challenging cases for performing radiographic examinations and/or image quality reviews during the intervention, aligning with the existing WACHS protocol for the telephone support [1].

2.3. Evaluation of Assisted Reality Support Program

Four sets of questionnaires (pre- and post-intervention questionnaires for the XROs and supervising radiographers) were developed based on Andersson et al.’s [22,23] validated radiographers’ competence scale (designed for radiographers with dual registrations as radiographers and nurses [22,23,24]) with additional open questions about the usability of the assisted reality equipment (based on Yoon et al.’s [10] study on using assisted reality to provide remote support for nursing student training). Andersson et al.’s [22,23] and Yoon et al.’s [10] questionnaires were selected for developing our evaluation questionnaires because they were designed for nurses and, hence, matched the profile of our XROs, who were predominantly nurses. The questionnaires were piloted with three XROs and two radiographers not directly involved in our study, resulting in several revisions to improve their reliability and validity [25]. The developed questionnaires were delivered to the XROs and supervising radiographers to assess the XROs’ radiography competence before and after the intervention using an 11-point scale (0 = no competence; 1 = extremely low competence; 2 = very low competence; 3 = low competence; 4 = just below minimally acceptable competence; 5 = minimally acceptable competence; 6 = just above minimally acceptable competence; 7 = competence; 8 = high competence; 9 = very high competence; 10 = outstanding competence) [22,23,24]. Rawle et al.’s [3] 11-point image quality grading scale (0 = no attempt to meet quality requirement; 1 = extremely poor quality; 2 = very poor quality; 3 = poor quality; 4 = just below minimally acceptable diagnostic quality; 5 = meeting minimally acceptable diagnostic quality; 6 = just above minimally acceptable diagnostic quality; 7 = good quality; 8 = very good quality; 9 = excellent quality; 10 = outstanding quality) was used to assess the quality of X-ray images acquired by the XROs prior to and during the intervention through a picture archiving and communication system (PACS) [25,26]. Only one observer (a current WACHS-approved radiographer and a former area chief medical imaging technologist (MIT)) was involved in this image quality review process to avoid inter-observer variability [3]. The image quality grading scale was piloted with two radiographers not involved in this study to improve its reliability and validity before administration [25].
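As an illustration of how the image quality grading scale described above can be applied when reading mean scores, the following minimal sketch stores the scale as a lookup table. The labels are those of the scale as listed in this section; the helper name `label_for` and the choice to floor a non-integer mean to the highest level it has reached are assumptions made for this sketch, not part of the study's method.

```python
import math

# Labels of the 11-point image quality grading scale as listed in this section.
IMAGE_QUALITY_SCALE = {
    0: "no attempt to meet quality requirement",
    1: "extremely poor quality",
    2: "very poor quality",
    3: "poor quality",
    4: "just below minimally acceptable diagnostic quality",
    5: "meeting minimally acceptable diagnostic quality",
    6: "just above minimally acceptable diagnostic quality",
    7: "good quality",
    8: "very good quality",
    9: "excellent quality",
    10: "outstanding quality",
}


def label_for(score: float) -> str:
    """Return the label of the highest scale level the score has reached.

    Flooring a non-integer mean is an assumption of this sketch.
    """
    if not 0 <= score <= 10:
        raise ValueError("score must lie between 0 and 10")
    return IMAGE_QUALITY_SCALE[math.floor(score)]


# The lowest and highest mean post-intervention values reported in the abstract.
for mean_score in (6.16, 7.39):
    print(mean_score, "->", label_for(mean_score))
```

With this reading, a mean of 6.16 falls in the "just above minimally acceptable diagnostic quality" band and a mean of 7.39 in the "good quality" band.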

2.4. Data Analysis

SPSS Statistics 29 (International Business Machines Corporation, Armonk, NY, USA) was used for statistical analysis. For multiple choice items (demographics questions of the questionnaires and X-ray image information questions of the image quality assessment forms), frequencies and percentages were used for data analysis. For the 11-point scale items of the four questionnaires and two image quality assessment forms, the mean and standard deviation were calculated, and a paired t-test was used to compare the mean values of the pre- and post-intervention pairs of the 11-point scale items in order to identify any improvement in the XROs’ radiography competence (including image quality) and to enable comparison with the findings of the similar study [3,27,28]. A p-value less than 0.05 represented statistical significance [3,26,29,30,31]. Content analysis with quasi-statistics as an accounting system was used to analyze the open questions about the usability of the assisted reality equipment [25,32].
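The paired t-test named above can be illustrated with a short sketch. The study's analysis was run in SPSS; the Python function below is only a hand-rolled equivalent of the test statistic for readers who want to see the arithmetic, and the ratings in the example are hypothetical values, not data from the study.

```python
import math
import statistics


def paired_t(pre: list[float], post: list[float]) -> tuple[float, int]:
    """Return the paired t statistic and its degrees of freedom."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equally sized samples with at least two pairs")
    # Work on the within-pair differences (post minus pre).
    diffs = [after - before for before, after in zip(pre, post)]
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd_diff == 0:
        raise ValueError("t is undefined when all differences are identical")
    t = mean_diff / (sd_diff / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical 11-point ratings for five respondents; NOT data from the study.
pre_scores = [5, 6, 5, 7, 6]
post_scores = [6, 7, 6, 7, 7]
t_stat, dof = paired_t(pre_scores, post_scores)
print(f"t = {t_stat:.2f}, df = {dof}")
```

The two-sided p-value then follows from the t distribution with the returned degrees of freedom. A statistics package reports it directly, for example SPSS as used here, or `scipy.stats.ttest_rel` in Python.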

3. Results

All XROs (total: 11; site 1: 6; site 2: 5) and supervising radiographers (total: 2; 1 per site) of the four selected centers were recruited and completed the assisted reality support program training and pre-intervention questionnaires afterwards, yielding 100% response rates. One XRO withdrew shortly after the training due to her prescription glasses being incompatible with the smart glasses. During the intervention, the numbers of XROs of the two centers fluctuated, consistent with the usual WACHS staffing arrangement. At the end of the intervention, six XROs (3 per site) were rostered to perform radiography duty. Half of the rostered XROs and all supervising radiographers returned the post-intervention questionnaires, resulting in 50% and 100% response rates, respectively. Table 1 shows their demographics.

Table 1. Summary of Demographic Information of X-ray Operators (n = 11) and Supervising Radiographers (n = 2).

Table 2 and Table 3 show the participants’ perceptions of XRO radiographic competences, with no statistically significant difference before and after the assisted reality support program (p = 0.099–1.000). However, notable decreases in mean values were observed after the intervention for the “participating in quality improvement for patient safety and care” competence under the patient care category (Table 2) and the “adapting examination based on patient’s needs” competence under the technical processes category (Table 3). Moreover, the levels of these two competences and the other five (more than half) technical process-related competences (“adapting examination based on patient’s needs”, “reducing radiation doses for patients and staff”, “producing accurate and correct images”, “evaluating image quality against referral and clinical question” and “optimizing image quality”) of XROs were perceived as minimally acceptable (level 5) or just above this (level 6) overall after the program.

Table 2. Participants’ perceptions of X-ray operator radiographic competences (related to patient care) before and after assisted reality support program.

Table 3. Participants’ perceptions of X-ray operator radiographic competences (related to technical processes) before and after assisted reality support program.

Table 4 demonstrates the participants’ perceptions of assisted reality support and equipment usability before and after the intervention. The technical performances of the equipment were well perceived after the program. These included the audio and video quality and data transmission speed enabling the supervising radiographers to obtain adequate ideas about the XROs’ situations. However, 60% of participants preferred the telephone (smartphone) support to the assisted reality support as a result of 100% of participants indicating issues of ill-fitting headband, voice control, login and non-intuitive design of smart glasses after the intervention.

Table 4. Participants’ perceptions of assisted reality support and equipment usability before and after intervention.

Table 5 illustrates the types, projections, patient ages, image receptor (computed radiography cassette) sizes and exposure factors (kV and milliampere-seconds) of the X-ray examinations performed by the two centers’ XROs within six months before (the numbers of examinations and images were 487 and 982, respectively) and during the six-month intervention period (the numbers of examinations and images were 495 and 1071, respectively). Their frequencies and proportions before and during the program were comparable. Table 6 shows the quality of X-ray images before and during the intervention. Statistically significant improvements of X-ray image quality are noted for all criteria with about half (inclusion of required anatomy, side marker and image quality regarding artifact) determined good quality and the others meeting just above minimally acceptable diagnostic quality requirements during the program (p < 0.001–0.05). In contrast, about half of the areas (beam collimation, image quality regarding exposure and overall diagnostic value for pathology identification) only met the minimally acceptable diagnostic quality requirements before the intervention.

Table 5. Information of X-ray images acquired within six months before (pre-intervention: n = 982) and during assisted reality support program (intervention: n = 1071).

Table 6. Quality of X-ray images acquired within six months before and during assisted reality support program.

4. Discussion

Over the last few years, numerous studies have investigated the use of smart glasses (with [33,34,35,36,37,38,39,40,41,42,43,44,45,46] and without augmented reality [5,6,7,8,9,10,11,12,13,14,15,16]) in healthcare. Given that our study participants lived in rural/remote areas with an expected digital divide issue [47,48,49], the more advanced use of smart glasses, i.e., augmented reality, which superimposes virtual objects on video of the real world, was not used for our XROs to obtain remote support in their radiography practice from their supervising radiographers [33,34,35,36,37,38,39,40,41,42,43,44,45,46]. Among the recent studies on the use of smart glasses without any augmented reality [5,6,7,8,9,10,12,13,14,15,16], only four evaluated their use with real patients, with sample sizes of 8 [15], 37 [16], 103 [5] and 622 [14]. The other studies [6,7,8,9,10,12,13] involved only one to six simulated clinical scenarios. In contrast, our study covered 497 patients during the intervention period, which could be considered a strength. Also, according to the 2023 systematic review of 21 studies on the use of smart glasses in telemedicine [2], our study is the first to investigate the use of smart glasses in teleradiography.

Our study findings presented in Table 6 demonstrate that the use of smart glasses helped our study’s XROs to significantly improve their radiography competence and X-ray image quality, with all aspects rated at least just above minimally acceptable diagnostic quality (six) during the program (p < 0.001–0.05). The average increase in the mean image quality scores was 0.59. Our findings match those of the Queensland study, which used a traditional VC platform for teleradiography and an 11-point scale to evaluate the quality of 326 pre-intervention and 234 intervention X-ray images acquired by its XROs; that study found statistically significant improvements for all image quality criteria, with all but one rated at least six and an average increase in mean values of 0.6. Its “appropriate collimation” criterion only had a mean rating of 5.9 during the intervention [3].

Nonetheless, Table 2 and Table 3 reveal that, according to the participants’ perceptions, our assisted reality support program did not help the XROs to improve their radiography competence. More concerningly, decreases in mean values are noted in many aspects of their radiography competence after the intervention, although no competence item showed a statistically significant difference. However, questionnaire-based competence assessment might not be reliable. For example, Graves et al. [50] used questionnaires to ask 140 medical students to indicate their self-perceived competence before and after their objective structured clinical examination (OSCE). They found that the students’ self-perceived competence decreased after the OSCE and that the students’ competence ratings were only weakly correlated with the corresponding OSCE results. Hence, our findings shown in Table 2 and Table 3 should be interpreted with caution.

As per the aforementioned systematic review on the use of smart glasses in telemedicine [2], four major challenges affect the usability of smart glasses in the clinical environment: ergonomics and human factors, technical limitations, organizational factors, and security and privacy issues. Their details are as follows.

- Ergonomics and Human Factors

  - Prescription glasses incompatible with smart glasses;

  - Smart glasses camera range and gaze direction misaligned;

  - Voice control issue;

  - Smart glasses as distraction.

- Technical Limitations

  - Network stability and bandwidth issues;

  - Low battery capacity;

  - Small video display size;

  - Background noise not removed;

  - Ambient lighting affecting video quality of display and camera;

  - Program (including video streaming) interface not user friendly.

- Organizational Factors

  - Extra workload;

  - Expensive equipment;

  - Extensive equipment training required.

- Security and Privacy Issues

  - Data breach;

  - Patient privacy violation.

Our study’s results show that the above issues were also reported by our participants (Table 4), except for most of the items under the categories of technical limitations (network stability and bandwidth issues, low battery capacity, small video display size and background noise not removed) and security and privacy issues (patient privacy violation and data breach). For our intervention, a 4G internet connection and extra batteries were arranged for the XROs as backup. Moreover, our participants indicated that the smart glasses video quality was sufficient. Although the RealWear smart glasses were able to remove the background noise, one of our supervising radiographers expressed his need to hear the patients during the assisted reality support. No patient privacy violation or data breach occurred in our intervention because written informed consent was obtained from each patient after explaining to them that the video was only viewed (but not recorded) by the supervising radiographers, consistent with the standard-of-care procedure. Moreover, two-factor authentication (2FA) was required to use Microsoft Teams on the smart glasses and iPads. However, these measures for addressing the patient privacy and data security requirements, together with the voice control issues and the non-intuitive software interface design of the smart glasses, significantly increased the workload of the XROs, who considered this a distraction (interfering with the radiographic examination process). This is because extra time and effort were required to obtain consent, complete 2FA and use the smart glasses via voice control with an unfamiliar software interface [2,51]. These factors could explain why one XRO and both supervising radiographers preferred smartphones to the current assisted reality equipment.

The design of smart glasses evidently needs to become more intuitive to promote their use in healthcare. Recent research has already started exploring this. For example, Zhang et al. [11] conducted a study in 2022 to determine the implications of smart glasses design for their wider adoption in emergency medical services. However, the ill-fitting headband and voice control issues can be readily addressed to a certain extent by using other head mounts for smart glasses, such as a cap [52], and a wireless keyboard [53], even though voice control is one of the benefits of (reasons for) using smart glasses in a healthcare environment. Moreover, when funding is available, a smart glasses device can be assigned to each XRO. In this way, they can connect their assigned devices to Microsoft Teams at the beginning of their shifts to avoid the 2FA login issue occurring during radiographic examinations [51]. Also, smartphones with high mobility can be given to supervising radiographers so that they can provide the assisted reality support anywhere and anytime [54]. In addition, it would be interesting to pair the use of smart glasses with smartphone apps specifically tailored to various fields of medicine to understand their potential combined application in the future [55,56].

This study had three major limitations. First, only three XROs and two supervising radiographers completed the post-intervention questionnaires. However, 982 patients’ X-ray examination data were used for evaluating the XROs’ competence development, which was more than in the similar study on using the traditional VC platform for XROs’ CPD in Queensland [3]. Also, many studies on using smart glasses in healthcare employed only one to six simulated clinical scenarios instead of real patients for the evaluation [6,7,8,9,10,12,13]. Moreover, our number of non-patient participants exceeded that of some similar smart glasses studies, which had as few as two participants. As a result, our smart glasses usability evaluation findings matched those reported in the systematic review on the use of smart glasses in telemedicine [2]. Second, although our six-month intervention period was in line with that of the Queensland XROs’ study, and Table 5 shows that the frequencies and proportions of examination types, projections and patient ages before and during our program were comparable, a longer intervention period would have allowed the aforementioned remedies, such as providing other smart glasses head mount options and wireless keyboards to XROs and smartphones to supervising radiographers, to be implemented and their effectiveness evaluated. Third, as per the human research ethics requirements, patients who were unable to provide consent during the intervention were excluded from our study, although the same applied to Rawle et al.’s study [3].

5. Conclusions

Our study’s findings show that the assisted reality support helped to significantly improve the WA XROs’ radiography competence (specifically X-ray image quality) and that the equipment was considered effective for this purpose. Nonetheless, our participants indicated that the equipment was not easy to use due to the ill-fitting headband, voice control and login issues of the smart glasses, and the lower mobility of iPads. Remedies including the use of other head mounts, wireless keyboards and smartphones, and completion of 2FA at the beginning of shifts rather than during radiographic examinations, are recommended to potentially address these issues. Further research is needed to evaluate the effectiveness of these recommendations. Also, future studies should be conducted to improve the design of smart glasses so that they become more intuitive and user friendly, promoting their use in healthcare.

Author Contributions

Conceptualization, C.K.C.N., M.B. and S.N.; methodology, C.K.C.N., M.B. and S.N.; software, C.K.C.N., M.B. and S.N.; validation, C.K.C.N., M.B. and S.N.; formal analysis, C.K.C.N., M.B. and S.N.; investigation, C.K.C.N., M.B. and S.N.; resources, C.K.C.N. and M.B.; data curation, C.K.C.N., M.B. and S.N.; writing—original draft preparation, C.K.C.N., M.B. and S.N.; writing—review and editing, C.K.C.N., M.B. and S.N.; visualization, C.K.C.N.; supervision, C.K.C.N.; project administration, C.K.C.N. and M.B.; funding acquisition, C.K.C.N. and M.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Royal Perth Hospital Imaging Research Grant 2022, grant number SGA0270422 and WACHS Pitch Your Pilot Program 2022, grant number WACHS202311745. The APC was funded by WACHS Pitch Your Pilot Program 2022, grant number WACHS202311745.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki, and approved by the WACHS HREC and Research Governance Unit (project reference number: RGS0000005633 and dates of approvals: 27 October 2022 and 2 December 2022), and Curtin University HREC (approval number: HRE2022-0610 and date of approval: 28 October 2022).

Informed Consent Statement

Written informed consent was obtained from all participants involved in the study except for the retrospective review of patients’ X-ray images taken before the intervention with a waiver of consent approved by the WACHS HREC and Research Governance Unit, and Curtin University HREC.

Data Availability Statement

The data are not publicly available due to ethical restrictions.

Acknowledgments

The authors would like to thank the WACHS Area Chief MIT, Teena Anderson, for her assistance with the participant recruitment, and the XROs, radiographers and patients who participated in this study for their contributions of time and effort.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Chen, F.C.Y.; Ng, C.K.C.; Sun, Z. X-ray operators’ self-perceived competence, barriers and facilitators in general radiography practice in Western Australia. Radiography 2020, 26, e207–e213.

- Zhang, Z.; Bai, E.; Joy, K.; Ghelaa, P.N.; Adelgais, K.; Ozkaynak, M. Smart glasses for supporting distributed care work: Systematic review. JMIR Med. Inform. 2023, 11, e44161.

- Rawle, M.; Oliver, T.; Pighills, A.; Lindsay, D. Improving education and supervision of Queensland X-ray operators through video conference technology: A teleradiography pilot project. J. Med. Radiat. Sci. 2017, 64, 244–250.

- Romare, C.; Skär, L. Smart glasses for caring situations in complex care environments: Scoping review. JMIR mHealth uHealth 2020, 8, e16055.

- Munusamy, T.; Karuppiah, R.; Bahuri, N.F.A.; Sockalingam, S.; Cham, C.Y.; Waran, V. Telemedicine via smart glasses in critical care of the neurosurgical patient-COVID-19 pandemic preparedness and response in neurosurgery. World Neurosurg. 2021, 145, e53–e60.

- Aranda-García, S.; Otero-Agra, M.; Fernández-Méndez, F.; Herrera-Pedroviejo, E.; Darné, M.; Barcala-Furelos, R.; Rodríguez-Núñez, A. Augmented reality training in basic life support with the help of smart glasses. A pilot study. Resusc. Plus 2023, 14, 100391.

- Willis, C.; Dawe, J.; Leng, C. Creating a smart classroom in intensive care using assisted reality technology. J. Intensiv. Care Soc. 2024, 25, 89–94.

- Lee, Y.; Kim, S.K.; Yoon, H.; Choi, J.; Kim, H.; Go, Y. Integration of extended reality and a high-fidelity simulator in team-based simulations for emergency scenarios. Electronics 2021, 10, 2170.

- Kim, S.K.; Lee, Y.; Yoon, H.; Choi, J. Adaptation of extended reality smart glasses for core nursing skill training among undergraduate nursing students: Usability and feasibility study. J. Med. Internet Res. 2021, 23, e24313.

- Yoon, H.; Kim, S.K.; Lee, Y.; Choi, J. Google glass-supported cooperative training for health professionals: A case study based on using remote desktop virtual support. J. Multidiscip. Healthc. 2021, 14, 1451–1462.

- Zhang, Z.; Ramiya Ramesh Babu, N.A.; Adelgais, K.; Ozkaynak, M. Designing and implementing smart glass technology for emergency medical services: A sociotechnical perspective. JAMIA Open 2022, 5, ooac113.

- Aranda-García, S.; Santos-Folgar, M.; Fernández-Méndez, F.; Barcala-Furelos, R.; Pardo Ríos, M.; Hernández Sánchez, E.; Varela-Varela, L.; San Román-Mata, S.; Rodríguez-Núñez, A. “Dispatcher, can you help me? A woman is giving birth”. A pilot study of remote video assistance with smart glasses. Sensors 2022, 23, 409.

- Lee, Y.; Kim, S.K.; Yoon, H.; Choi, J.; Go, Y.; Park, G.W. Smart glasses and telehealth services by professionals in isolated areas in Korea: Acceptability and concerns. Technol. Health Care 2023, 31, 855–865.

- Diaka, J.; Van Damme, W.; Sere, F.; Benova, L.; van de Put, W.; Serneels, S. Leveraging smart glasses for telemedicine to improve primary healthcare services and referrals in a remote rural district, Kingandu, DRC, 2019–2020. Glob. Health Action 2021, 14, 2004729.

- Martínez-Galdámez, M.; Fernández, J.G.; Arteaga, M.S.; Pérez-Sánchez, L.; Arenillas, J.F.; Rodríguez-Arias, C.; Čulo, B.; Rotim, A.; Rotim, K.; Kalousek, V. Smart glasses evaluation during the COVID-19 pandemic: First-use on neurointerventional procedures. Clin. Neurol. Neurosurg. 2021, 205, 106655.

- Ho, T.C.; Kolin, T.; Stewart, C.; Reid, M.W.; Lee, T.C.; Nallasamy, S. Evaluation of high-definition video smart glasses for real-time telemedicine strabismus consultations. J. AAPOS 2021, 25, e1–e74.

- Kadam, P.; Bhalerao, S. Sample size calculation. Int. J. Ayurveda Res. 2010, 1, 55–57.

- Sobieraj, S.; Eimler, S.; Rinkenauer, G. Can smart glasses change how people evaluate healthcare professionals? A mixed-method approach to using smart glasses in hospitals. Int. J. Hum.-Comput. Stud. 2023, 178, 103081.

- RealWear Navigator® 500. Available online: https://www.realwear.com/devices/navigator-500 (accessed on 18 April 2024).

- Nexø, M.A.; Kingod, N.R.; Eshøj, S.H.; Kjærulff, E.M.; Nørgaard, O.; Andersen, T.H. The impact of train-the-trainer programs on the continued professional development of nurses: A systematic review. BMC Med. Educ. 2024, 24, 30.

- Morimoto, T.; Kobayashi, T.; Hirata, H.; Otani, K.; Sugimoto, M.; Tsukamoto, M.; Yoshihara, T.; Ueno, M.; Mawatari, M. XR (extended reality: Virtual reality, augmented reality, mixed reality) technology in spine medicine: Status quo and quo vadis. J. Clin. Med. 2022, 11, 470.

- Andersson, B.T.; Christensson, L.; Jakobsson, U.; Fridlund, B.; Broström, A. Radiographers’ self-assessed level and use of competencies—A national survey. Insights Imaging 2012, 3, 635–645.

- Andersson, B.T.; Christensson, L.; Fridlund, B.; Broström, A. Development and psychometric evaluation of the radiographers’ competence scale. Open J. Nurs. 2012, 2, 85–96.

- Vanckavičienė, A.; Macijauskienė, J.; Blaževičienė, A.; Basevičius, A.; Andersson, B.T. Assessment of radiographers’ competences from the perspectives of radiographers and radiologists: A cross-sectional survey in Lithuania. BMC Med. Educ. 2017, 17, 25.

- Ng, C.K.C.; White, P.; McKay, J.C. Development of a web database portfolio system with PACS connectivity for undergraduate health education and continuing professional development. Comput. Methods Programs Biomed. 2009, 94, 26–38.

- Floyd, D.M.; Trepp, E.R.; Ipaki, M.; Ng, C.K.C. Study of radiologic technologists’ perceptions of picture archiving and communication system (PACS) competence and educational issues in Western Australia. J. Digit. Imaging 2015, 28, 315–322.

- Tsagris, M.; Pandis, N. Normality test: Is it really necessary? Am. J. Orthod. Dentofacial Orthop. 2021, 159, 548–549.

- Rochon, J.; Gondan, M.; Kieser, M. To test or not to test: Preliminary assessment of normality when comparing two independent samples. BMC Med. Res. Methodol. 2012, 12, 81.

- Dewland, T.A.; Hancock, L.N.; Sargeant, S.; Bailey, R.K.; Sarginson, R.A.; Ng, C.K.C. Study of lone working magnetic resonance technologists in Western Australia. Int. J. Occup. Med. Environ. Health 2013, 26, 837–845.

- Christie, S.; Ng, C.K.C.; Sá Dos Reis, C. Australasian radiographers’ choices of immobilisation strategies for paediatric radiological examinations. Radiography 2020, 26, 27–34.

- MacKay, M.; Hancy, C.; Crowe, A.; D’Rozario, R.; Ng, C.K.C. Attitudes of medical imaging technologists on use of gonad shielding in general radiography. Radiographer 2012, 59, 35–39.

- Ng, C.K.C. A review of the impact of the COVID-19 pandemic on pre-registration medical radiation science education. Radiography 2022, 28, 222–231.

- Yoo, I.; Kong, H.J.; Joo, H.; Choi, Y.; Kim, S.W.; Lee, K.E.; Hong, J. User experience of augmented reality glasses-based tele-exercise in elderly women. Healthc. Inform. Res. 2023, 29, 161–167.

- Baashar, Y.; Alkawsi, G.; Wan Ahmad, W.N.; Alomari, M.A.; Alhussian, H.; Tiong, S.K. Towards wearable augmented reality in healthcare: A comparative survey and analysis of head-mounted displays. Int. J. Environ. Res. Public Health 2023, 20, 3940.

- Zhang, Z.; Joy, K.; Harris, R.; Ozkaynak, M.; Adelgais, K.; Munjal, K. Applications and user perceptions of smart glasses in emergency medical services: Semistructured interview study. JMIR Hum. Factors 2022, 9, e30883.

- Cerdán de Las Heras, J.; Tulppo, M.; Kiviniemi, A.M.; Hilberg, O.; Løkke, A.; Ekholm, S.; Catalán-Matamoros, D.; Bendstrup, E. Augmented reality glasses as a new tele-rehabilitation tool for home use: Patients’ perception and expectations. Disabil. Rehabil. Assist. Technol. 2022, 17, 480–486.

- Lu, L.; Zhang, J.; Xie, Y.; Gao, F.; Xu, S.; Wu, X.; Ye, Z. Wearable health devices in health care: Narrative systematic review. JMIR mHealth uHealth 2020, 8, e18907.

- Zuidhof, N.; Ben Allouch, S.; Peters, O.; Verbeek, P. Defining smart glasses: A rapid review of state-of-the-art perspectives and future challenges from a social sciences’ perspective. Augment. Hum. Res. 2021, 6, 15.

- Follmann, A.; Ruhl, A.; Gösch, M.; Felzen, M.; Rossaint, R.; Czaplik, M. Augmented reality for guideline presentation in medicine: Randomized crossover simulation trial for technically assisted decision-making. JMIR mHealth uHealth 2021, 9, e17472.

- Frederick, J.; van Gelderen, S. Revolutionizing simulation education with smart glass technology. Clin. Simul. Nurs. 2021, 52, 43–49.

- Lareyre, F.; Chaudhuri, A.; Adam, C.; Carrier, M.; Mialhe, C.; Raffort, J. Applications of head-mounted displays and smart glasses in vascular surgery. Ann. Vasc. Surg. 2021, 75, 497–512.

- Yoon, H. Opportunities and challenges of smartglass-assisted interactive telementoring. Appl. Syst. Innov. 2021, 4, 56.

- Klinker, K.; Wiesche, M.; Krcmar, H. Digital transformation in health care: Augmented reality for hands-free service innovation. Inf. Syst. Front. 2020, 22, 1419–1431.

- Özdemir-Güngör, D.; Göken, M.; Basoglu, N.; Shaygan, A.; Dabić, M.; Daim, T.U. An acceptance model for the adoption of smart glasses technology by healthcare professionals. In International Business and Emerging Economy Firms. Palgrave Studies of Internationalization in Emerging Markets; Larimo, J., Marinov, M., Marinova, S., Leposky, T., Eds.; Palgrave Macmillan: Cham, Switzerland, 2020; pp. 163–194.

- Follmann, A.; Ohligs, M.; Hochhausen, N.; Beckers, S.K.; Rossaint, R.; Czaplik, M. Technical support by smart glasses during a mass casualty incident: A randomized controlled simulation trial on technically assisted triage and telemedical app use in disaster medicine. J. Med. Internet Res. 2019, 21, e11939.

- Alismail, A.; Thomas, J.; Daher, N.S.; Cohen, A.; Almutairi, W.; Terry, M.H.; Huang, C.; Tan, L.D. Augmented reality glasses improve adherence to evidence-based intubation practice. Adv. Med. Educ. Pract. 2019, 10, 279–286.

- Vassilakopoulou, P.; Hustad, E. Bridging digital divides: A literature review and research agenda for information systems research. Inf. Syst. Front. 2023, 25, 955–969.

- Lythreatis, S.; Singh, S.K.; El-Kassar, A. The digital divide: A review and future research agenda. Technol. Forecast. Soc. Chang. 2022, 175, 121359.

- Sanders, C.K.; Scanlon, E. The digital divide is a human rights issue: Advancing social inclusion through social work advocacy. J. Hum. Rights Soc. Work. 2021, 6, 130–143.

- Graves, L.; Lalla, L.; Young, M. Evaluation of perceived and actual competency in a family medicine objective structured clinical examination. Can. Fam. Physician 2017, 63, e238–e243.

- Suleski, T.; Ahmed, M.; Yang, W.; Wang, E. A review of multi-factor authentication in the Internet of Healthcare Things. Digit. Health 2023, 9, 20552076231177144.

- Accessories. Available online: https://shop.realwear.com/collections/accessories/head-mounting-options/ (accessed on 26 April 2024).

- Folding Bluetooth Keyboard and Touchpad. Available online: https://theavrlab.com.au/intelligent-wearable-products/folding-bluetooth-keyboard-and-touchpad/ (accessed on 26 April 2024).

- Wang, X.; Shi, J.; Lee, K.M. The digital divide and seeking health information on smartphones in Asia: Survey study of ten countries. J. Med. Internet Res. 2022, 24, e24086.

- Sánchez-Rodríguez, M.T.; Pinzón-Bernal, M.Y.; Jiménez-Antona, C.; Laguarta-Val, S.; Sánchez-Herrera-Baeza, P.; Fernández-González, P.; Cano-de-la-Cuerda, R. Designing an informative app for neurorehabilitation: A feasibility and satisfaction study by physiotherapists. Healthcare 2023, 11, 2549.

- Pascadopoli, M.; Zampetti, P.; Nardi, M.G.; Pellegrini, M.; Scribante, A. Smartphone applications in dentistry: A scoping review. Dent. J. 2023, 11, 243.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).