The Use of Artificial Intelligence for Skin Disease Diagnosis in Primary Care Settings: A Systematic Review

Abstract

1. Introduction

2. Materials and Methods

2.1. Search Criteria

2.2. Inclusion and Exclusion Criteria

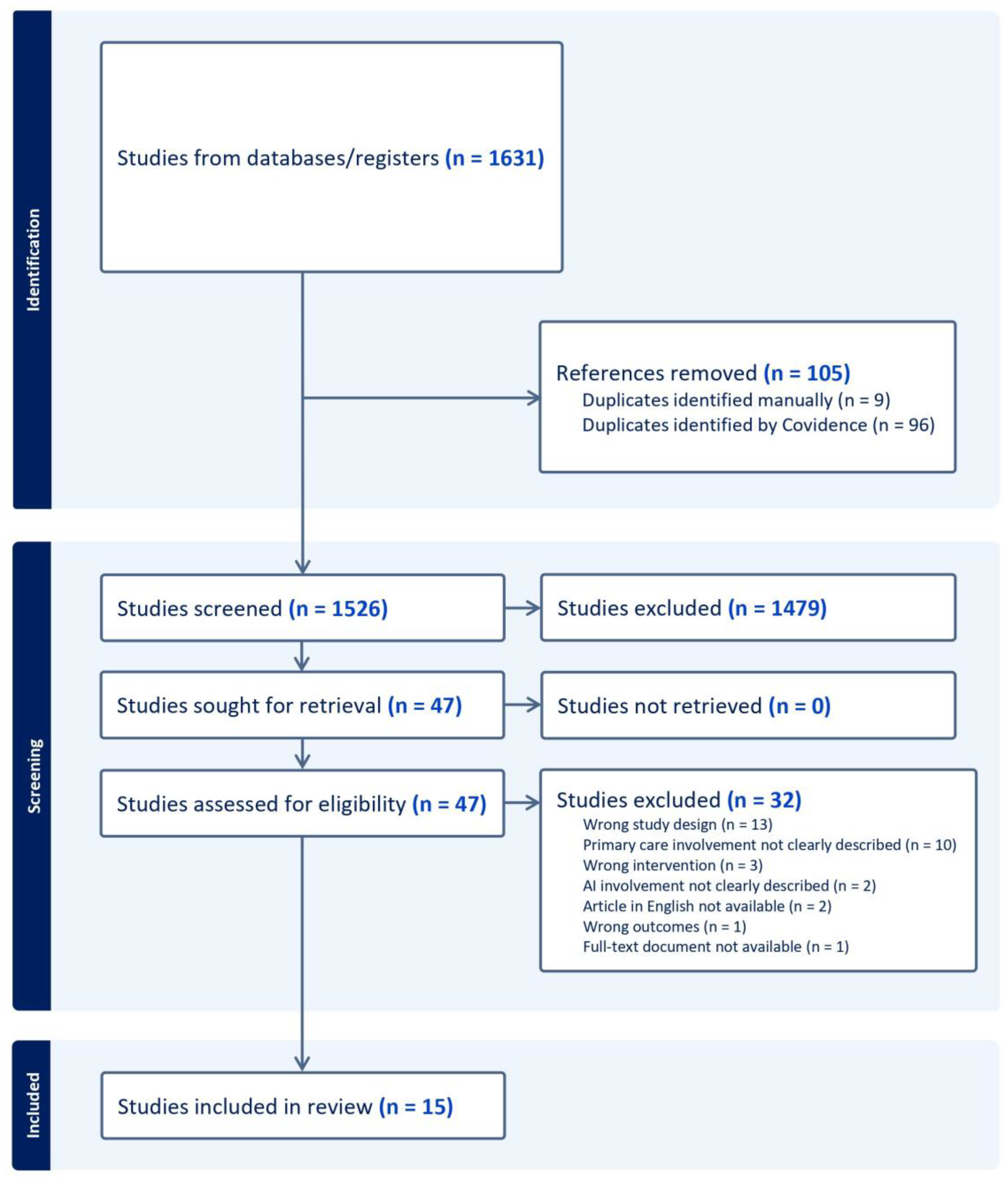

2.3. Study Selection

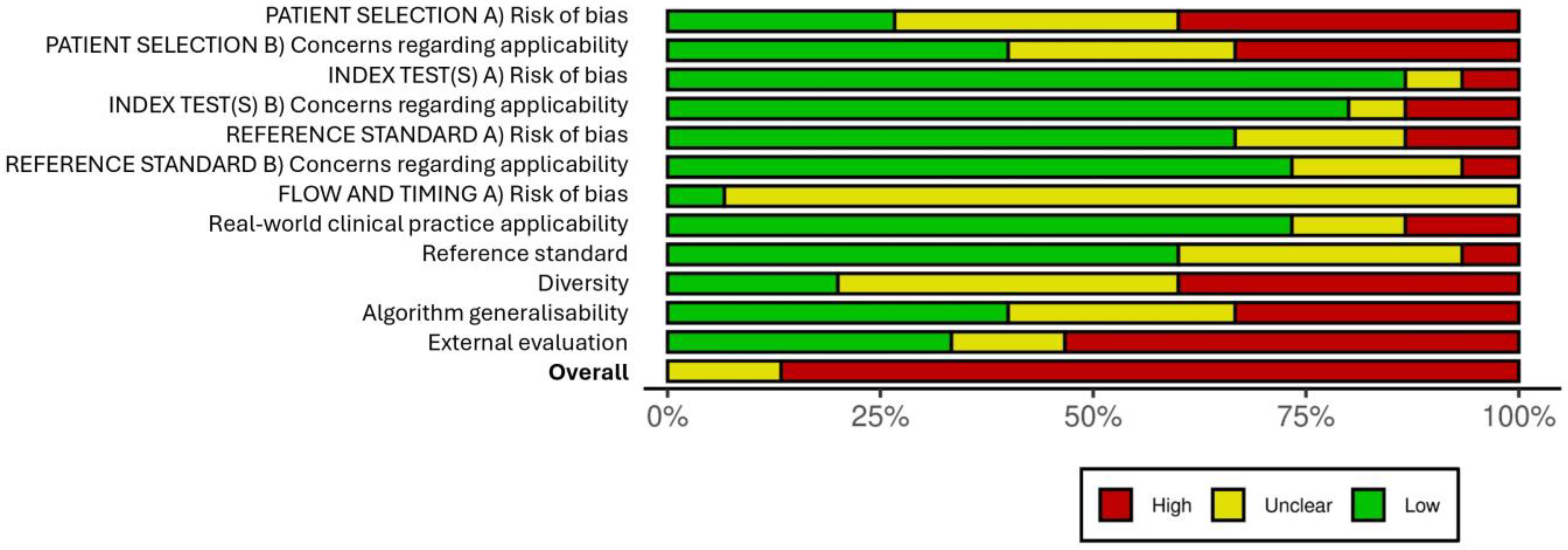

2.4. Risk-of-Bias Assessment

3. Results

3.1. Search Outcomes

3.2. Study Characteristics

3.3. Primary Outcome

Diagnostic Accuracy of AI Algorithms in Skin Diseases

3.4. Secondary Outcomes

3.4.1. AI/ML Algorithm Design

3.4.2. Appropriateness of Datasets Used to Develop the AI Algorithm

3.4.3. Usefulness of AI in the Management of Skin Diseases

3.4.4. Types of Skin Diseases in which AI Is Used

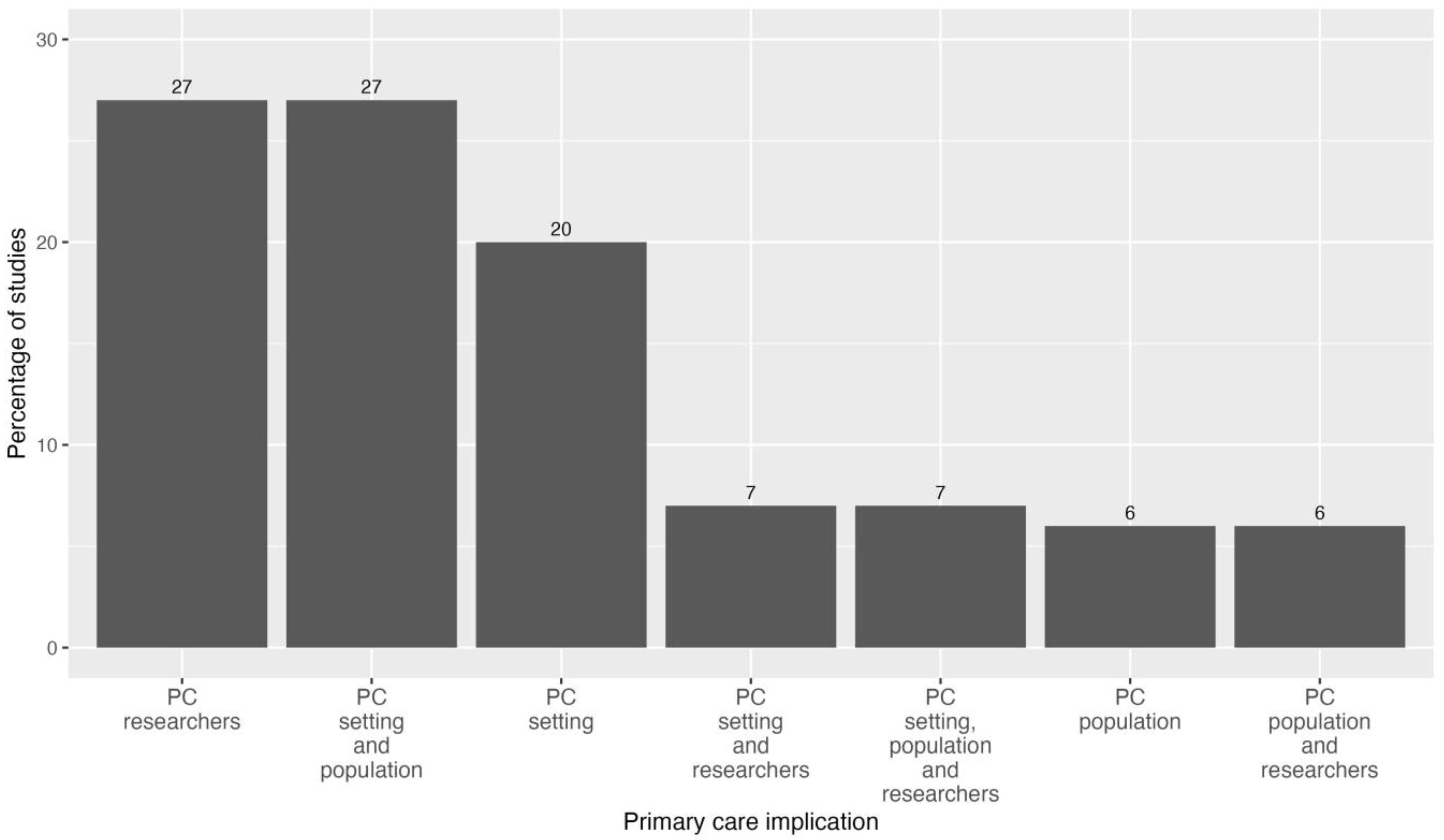

3.4.5. Primary Care Implication of the Studies

4. Discussion

Strengths and Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Search Strategies for Bibliographic Databases

References

1. Lim, H.W.; Collins, S.A.; Resneck, J.S., Jr.; Bolognia, J.L.; Hodge, J.A.; Rohrer, T.A.; Van Beek, M.J.; Margolis, D.J.; Sober, A.J.; Weinstock, M.A.; et al. The burden of skin disease in the United States. J. Am. Acad. Dermatol. 2017, 76, 958–972.e2.
2. Schofield, J.K.; Fleming, D.; Grindlay, D.; Williams, H. Skin conditions are the commonest new reason people present to general practitioners in England and Wales. Br. J. Dermatol. 2011, 165, 1044–1050.
3. Lowell, B.A.; Catherine, W.; Kirsner, R.S.; Haven, N.; Haven, W. Dermatology in primary care: Prevalence and patient disposition. J. Am. Acad. Dermatol. 2001, 45, 24–27.
4. Federman, D.G.; Kirsner, R.S. The Abilities of Primary Care Physicians in Dermatology: Implications for Quality of Care. Am. J. Manag. Care 1997, 3, 1487–1492.
5. Tran, H.; Chen, K.; Lim, A.C.; Jabbour, J.; Shumack, S. Assessing diagnostic skill in dermatology: A comparison between general practitioners and dermatologists. Australas. J. Dermatol. 2005, 46, 230–234.
6. Porta, N.; Juan, J.S.; Grasa, M.P.; Simal, E.; Ara, M.; Querol, I. Diagnostic Agreement between Primary Care Physicians and Dermatologists in the Health Area of a Referral Hospital. Actas Dermo-Sifiliogr. 2008, 99, 207–212.
7. Alcántara, S.; Márquez, A.; Corrales, A.; Neila, J.; Polo, J.; Camacho, F. Estudio de las consultas por motivos dermatológicos en atención primaria y especializada. Piel 2014, 29, 4–8.
8. Jones, O.T.; Matin, R.N.; van der Schaar, M.; Bhayankaram, K.P.; I Ranmuthu, C.K.; Islam, M.S.; Behiyat, D.; Boscott, R.; Calanzani, N.; Emery, J.; et al. Artificial intelligence and machine learning algorithms for early detection of skin cancer in community and primary care settings: A systematic review. Lancet Digit. Health 2022, 4, e466–e476.
9. Du-Harpur, X.; Watt, F.; Luscombe, N.; Lynch, M.D. What is AI? Applications of artificial intelligence to dermatology. Br. J. Dermatol. 2020, 183, 423–430.
10. Jain, A.; Way, D.; Gupta, V.; Gao, Y.; de Oliveira Marinho, G.; Hartford, J.; Sayres, R.; Kanada, K.; Eng, C.; Nagpal, K.; et al. Development and Assessment of an Artificial Intelligence-Based Tool for Skin Condition Diagnosis by Primary Care Physicians and Nurse Practitioners in Teledermatology Practices. JAMA Netw. Open 2021, 4, e217249.
11. Hekler, A.; Utikal, J.S.; Enk, A.H.; Hauschild, A.; Weichenthal, M.; Maron, R.C.; Berking, C.; Haferkamp, S.; Klode, J.; Schadendorf, D.; et al. Superior skin cancer classification by the combination of human and artificial intelligence. Eur. J. Cancer 2019, 120, 114–121.
12. Galmarini, C.M.; Lucius, M. Artificial intelligence: A disruptive tool for a smarter medicine. Eur. Rev. Med. Pharmacol. Sci. 2020, 24, 7462–7474.
13. Martorell, A.; Martin-Gorgojo, A.; Ríos-Viñuela, E.; Rueda-Carnero, J.M.; Alfageme, F.; Taberner, R. Inteligencia artificial en dermatología: Amenaza u oportunidad? Actas Dermo-Sifiliogr. 2022, 113, 30–46.
14. Patel, S.; Wang, J.V.; Motaparthi, K.; Lee, J.B. Artificial intelligence in dermatology for the clinician. Clin. Dermatol. 2021, 39, 667–672.
15. Lallas, A.; Zalaudek, I.; Argenziano, G.; Longo, C.; Moscarella, E.; Di Lernia, V.; Al Jalbout, S.; Apalla, Z. Dermoscopy in General Dermatology. Dermatol. Clin. 2013, 31, 679–694.
16. Marghoob, A.A.; Usatine, R.P.; Jaimes, N. Dermoscopy for the family physician. Am. Fam. Physician 2013, 88, 441–450.
17. Menzies, S.; Emery, J.; Staples, M.; Davies, S.; McAvoy, B.; Fletcher, J.; Shahid, K.; Reid, G.; Avramidis, M.; Ward, A.; et al. Impact of dermoscopy and short-term sequential digital dermoscopy imaging for the management of pigmented lesions in primary care: A sequential intervention trial. Br. J. Dermatol. 2009, 161, 1270–1277.
18. Tschandl, P.; Rinner, C.; Apalla, Z.; Argenziano, G.; Codella, N.; Halpern, A.; Janda, M.; Lallas, A.; Longo, C.; Malvehy, J.; et al. Human–computer collaboration for skin cancer recognition. Nat. Med. 2020, 26, 1229–1234.
19. Haenssle, H.A.; Fink, C.; Schneiderbauer, R.; Toberer, F.; Buhl, T.; Blum, A.; Kalloo, A.; Hassen, A.B.H.; Thomas, L.; Enk, A.; et al. Man against machine: Diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann. Oncol. 2018, 29, 1836–1842.
20. Shrivastava, V.K.; Londhe, N.D.; Sonawane, R.S.; Suri, J.S. A novel and robust Bayesian approach for segmentation of psoriasis lesions and its risk stratification. Comput. Methods Programs Biomed. 2017, 150, 9–22.
21. Han, S.; Park, I.; Chang, S.; Na, J. Augmented intelligence dermatology: Deep neural networks empower medical professionals in diagnosing skin cancer and predicting treatment options for general skin disorders. J. Investig. Dermatol. 2019, 139, S171.
22. Liu, Y.; Jain, A.; Eng, C.; Way, D.H.; Lee, K.; Bui, P.; Kanada, K.; de Oliveira Marinho, G.; Gallegos, J.; Gabriele, S.; et al. A deep learning system for differential diagnosis of skin diseases. Nat. Med. 2020, 26, 900–908.
23. Wu, H.; Yin, H.; Chen, H.; Sun, M.; Liu, X.; Yu, Y.; Tang, Y.; Long, H.; Zhang, B.; Zhang, J.; et al. A deep learning, image based approach for automated diagnosis for inflammatory skin diseases. Ann. Transl. Med. 2020, 8, 581.
24. Mathur, J.; Chouhan, V.; Pangti, R.; Kumar, S.; Gupta, S. A convolutional neural network architecture for the recognition of cutaneous manifestations of COVID-19. Dermatol. Ther. 2021, 34, e14902.
25. Thomsen, K.; Christensen, A.L.; Iversen, L.; Lomholt, H.B.; Winther, O. Deep Learning for Diagnostic Binary Classification of Multiple-Lesion Skin Diseases. Front. Med. 2020, 7, 574329.
26. Parlamento Europeo Consejo de la Unión Europea. REGLAMENTO (UE) 2017/745 DEL PARLAMENTO EUROPEO Y DEL CONSEJO de 5 de abril de 2017 sobre los productos sanitarios. D La Unión Eur. 2017, 2013, 175. Available online: https://www.boe.es/buscar/doc.php?id=DOUE-L-2017-80916 (accessed on 30 May 2023).
27. EU European Union. Directiva 93/42/CEE del consejo del parlamento europeo, relativa a los productos sanitarios. Dir 93/42/CEE. 1993, Volume 120, p. 66. Available online: https://eur-lex.europa.eu/legal-content/ES/TXT/HTML/?uri=CELEX:31993L0042 (accessed on 30 May 2023).
28. Daneshjou, R.; Barata, C.; Betz-Stablein, B.; Celebi, M.E.; Codella, N.; Combalia, M.; Guitera, P.; Gutman, D.; Halpern, A.; Helba, B.; et al. Checklist for Evaluation of Image-Based Artificial Intelligence Reports in Dermatology: CLEAR Derm Consensus Guidelines from the International Skin Imaging Collaboration Artificial Intelligence Working Group. JAMA Dermatol. 2022, 158, 90–96.
29. Vasey, B.; Nagendran, M.; Campbell, B.; Clifton, D.A.; Collins, G.S.; Denaxas, S.; Denniston, A.K.; Faes, L.; Geerts, B.; Ibrahim, M.; et al. Reporting guideline for the early stage clinical evaluation of decision support systems driven by artificial intelligence: DECIDE-AI. BMJ 2022, 377, e070904.
30. Gerke, S.; Babic, B.; Evgeniou, T.; Cohen, I.G. The need for a system view to regulate artificial intelligence/machine learning-based software as medical device. NPJ Digit. Med. 2020, 3, 53.
31. Joshi, G.; Jain, A.; Araveeti, S.R.; Adhikari, S.; Garg, H.; Bhandari, M. FDA-Approved Artificial Intelligence and Machine Learning (AI/ML)-Enabled Medical Devices: An Updated Landscape. Electronics 2024, 13, 498.
32. Tschandl, P.; Codella, N.; Akay, B.N.; Argenziano, G.; Braun, R.P.; Cabo, H.; Gutman, D.; Halpern, A.; Helba, B.; Hofmann-Wellenhof, R.; et al. Comparison of the accuracy of human readers versus machine-learning algorithms for pigmented skin lesion classification: An open, web-based, international, diagnostic study. Lancet Oncol. 2019, 20, 938–947.
33. Kamioka, H. Preferred reporting items for systematic review and meta-analysis protocols (prisma-p) 2015 statement. Jpn. Pharmacol. Ther. 2019, 47, 1177–1185.
34. Sarker, I.H. Deep Learning: A Comprehensive Overview on Techniques, Taxonomy, Applications and Research Directions. SN Comput. Sci. 2021, 2, 420.
35. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.
36. Covidence—Literature Review Management. Available online: https://get.covidence.org/literature-review?campaignid=18238395256&adgroupid=138114520982&gclid=EAIaIQobChMI7Yrg5PWd_wIVQs7VCh247gJrEAAYASAAEgIt4fD_BwE (accessed on 30 May 2023).
37. Whiting, P.F.; Rutjes, A.W.; Westwood, M.E.; Mallett, S.; Deeks, J.J.; Reitsma, J.B.; Leeflang, M.M.; Sterne, J.A.; Bossuyt, P.M.; QUADAS-2 Group. QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Ann. Intern. Med. 2011, 155, 529–536.
38. Jayakumar, S.; Sounderajah, V.; Normahani, P.; Harling, L.; Markar, S.R.; Ashrafian, H.; Darzi, A. Quality assessment standards in artificial intelligence diagnostic accuracy systematic reviews: A meta-research study. NPJ Digit. Med. 2022, 5, 11.
39. Phillips, M.; Greenhalgh, J.; Marsden, H.; Palamaras, I. Detection of Malignant Melanoma Using Artificial Intelligence: An Observational Study of Diagnostic Accuracy. Dermatol. Pract. Concept. 2019, 10, e2020011.
40. Anderson, J.; Tejani, I.; Jarmain, T.; Kellett, L.; Moy, R. Superiority of Artificial Intelligence in the Diagnostic Performance of Malignant Melanoma Compared to Dermatologists and Primary Care Providers. TechRxiv. Preprint. 2022.
41. Dulmage, B.; Tegtmeyer, K.; Zhang, M.Z.; Colavincenzo, M.; Xu, S. A Point-of-Care, Real-Time Artificial Intelligence System to Support Clinician Diagnosis of a Wide Range of Skin Diseases. J. Invest. Dermatol. 2021, 141, 1230–1235.
42. Giavina-Bianchi, M.; Cordioli, E.; Dos Santos, A.P. Accuracy of Deep Neural Network in Triaging Common Skin Diseases of Primary Care Attention. Front. Med. 2021, 8, 670300.
43. Giavina-Bianchi, M.; de Sousa, R.M.; Paciello, V.Z.A.; Vitor, W.G.; Okita, A.L.; Prôa, R.; Severino, G.L.D.S.; Schinaid, A.A.; Espírito Santo, R.; Machado, B.S. Implementation of artificial intelligence algorithms for melanoma screening in a primary care setting. PLoS ONE 2021, 16, e0257006.
44. Lucius, M.; De All, J.; De All, J.A.; Belvisi, M.; Radizza, L.; Lanfranconi, M.; Lorenzatti, V.; Galmarini, C.M. Deep Neural Frameworks Improve the Accuracy of General Practitioners in the Classification of Pigmented Skin Lesions. Diagnostics 2020, 10, 969.
45. Muñoz-López, C.; Ramírez-Cornejo, C.; Marchetti, M.A.; Han, S.S.; Del Barrio-Díaz, P.; Jaque, A.; Uribe, P.; Majerson, D.; Curi, M.; Del Puerto, C.; et al. Performance of a deep neural network in teledermatology: A single-centre prospective diagnostic study. J. Eur. Acad. Dermatol. Venereol. 2021, 35, 546–553.
46. Pangti, R.; Mathur, J.; Chouhan, V.; Kumar, S.; Rajput, L.; Shah, S.; Gupta, A.; Dixit, A.; Dholakia, D.; Gupta, S.; et al. A machine learning-based, decision support, mobile phone application for diagnosis of common dermatological diseases. J. Eur. Acad. Dermatol. Venereol. 2021, 35, 536–545.
47. Sangers, T.; Reeder, S.; van der Vet, S.; Jhingoer, S.; Mooyaart, A.; Siegel, D.M.; Nijsten, T.; Wakkee, M. Validation of a Market-Approved Artificial Intelligence Mobile Health App for Skin Cancer Screening: A Prospective Multicenter Diagnostic Accuracy Study. Dermatology 2022, 238, 649–656.
48. Soenksen, L.R.; Kassis, T.; Conover, S.T.; Marti-Fuster, B.; Birkenfeld, J.S.; Tucker-Schwartz, J.; Naseem, A.; Stavert, R.R.; Kim, C.C.; Senna, M.M.; et al. Using deep learning for dermatologist-level detection of suspicious pigmented skin lesions from wide-field images. Sci. Transl. Med. 2021, 13, eabb3652.
49. Yu, Z.; Kaizhi, S.; Jianwen, H.; Guanyu, Y.; Yonggang, W. A deep learning-based approach toward differentiating scalp psoriasis and seborrheic dermatitis from dermoscopic images. Front. Med. 2022, 9, 965423.
50. Anderson, J.M.; Tejani, I.; Jarmain, T.; Kellett, L.; Moy, R. Superiority of artificial intelligence compared to dermatologists and primary care providers in the diagnosis of malignant melanoma. J. Investig. Dermatol. 2022, 142, S108.
51. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
52. Navarro, C.L.A.; Damen, J.A.; Takada, T.; Nijman, S.W.; Dhiman, P.; Ma, J.; Collins, G.S.; Bajpai, R.; Riley, R.D.; Moons, K.G.; et al. Risk of bias in studies on prediction models developed using supervised machine learning techniques: Systematic review. BMJ 2021, 375, n2281.
53. Brinker, T.J.; Hekler, A.; Utikal, J.S.; Grabe, N.; Schadendorf, D.; Klode, J.; Berking, C.; Steeb, T.; Enk, A.H.; von Kalle, C. Skin Cancer classification using convolutional neural networks: Systematic review. J. Med. Internet Res. 2018, 20, e11936.
54. Dick, V.; Sinz, C.; Mittlböck, M.; Kittler, H.; Tschandl, P. Accuracy of Computer-Aided Diagnosis of Melanoma: A Meta-analysis. JAMA Dermatol. 2019, 155, 1291–1299.

| Authors | Country | Study Design | Publication Date | Gold Standard |
|---|---|---|---|---|
| Anderson, J. et al. [40] | United States | Diagnostic test accuracy study | 2022 | Histopathology |
| Dulmage, B. et al. [41] | United States | Diagnostic test accuracy study | 2020 | Dermatologists’ consensus |
| Giavina-Bianchi, M. et al. [42] | Brazil | Diagnostic test accuracy study | 2021 | Dermatologists’ consensus |
| Giavina-Bianchi, M. et al. [43] | Brazil | Diagnostic test accuracy study | 2021 | Dermatologists’ consensus |
| Jain, A. et al. [10] | United States | Diagnostic test accuracy study | 2021 | Dermatologists’ consensus; Histopathology |
| Liu, Y. et al. [22] | United States | Diagnostic test accuracy study | 2020 | Dermatologists’ consensus |
| Lucius, M. et al. [44] | Argentina | Diagnostic test accuracy study | 2020 | Not described |
| Muñoz-López, C. et al. [45] | Chile | Diagnostic test accuracy study | 2020 | In-person clinic visit; Dermatologists’ consensus |
| Pangti, R. et al. [46] | India | Diagnostic test accuracy study | 2020 | Dermatologists’ consensus |
| Phillips, M. et al. [39] | Europe; Australia | Diagnostic test accuracy study, meta-analysis | 2019 | Histopathology |
| Sangers, T. et al. [47] | Europe; Australia; New Zealand | Diagnostic test accuracy study | 2022 | Dermatologists’ consensus; Histopathology |
| Soenksen, L.R. et al. [48] | Europe | Diagnostic test accuracy study | 2021 | Dermatologists’ consensus |
| Thomsen, K. et al. [25] | Europe | Image-based retrospective study | 2020 | Dermatologists’ consensus; Histopathology |
| Tschandl, P. et al. [32] | Europe; Australia | Diagnostic test accuracy study | 2019 | Histopathology; Dermatologists’ monitoring |
| Yu, Z. et al. [49] | East Asia | Diagnostic test accuracy study | 2022 | Histopathology; Dermatologists’ consensus |

| Authors | Participants (n) | Sex (%) | Age Range | Ethnicity | Skin Type |
|---|---|---|---|---|---|
| Anderson, J. et al. | 100 * | Not disclosed | Not disclosed | Not disclosed | Not disclosed |
| Dulmage, B. et al. | 222 * | Not disclosed | Not disclosed | Not disclosed | All skin types |
| Giavina-Bianchi, M. et al. | 6945 | Not disclosed | Not disclosed | Not disclosed | Not disclosed |
| Giavina-Bianchi, M. et al. | Not disclosed | Not disclosed | >18 | Not disclosed | Not disclosed |
| Jain, A. et al. | 1016 | 64.2% female; 35.8% male | 18–65 | Mixed ethnicity | All skin types |
| Liu, Y. et al. | 16,114 | 63.1% female | 18–65 | Mixed ethnicity | All skin types |
| Lucius, M. et al. | 233 (separated into three experiments: 163 + 35 + 35) | Not disclosed | Not disclosed | Caucasian | All skin types |
| Muñoz-López, C. et al. | 281 | 63% female; 37% male | 18–65 | Mixed ethnicity | Fitzpatrick I–II; Fitzpatrick III–IV |
| Pangti, R. et al. | 5014 | Not disclosed | Not disclosed | South Asian | Not disclosed |
| Phillips, M. et al. | Not disclosed | Not disclosed | Not disclosed | Not disclosed | Not disclosed |
| Sangers, T. et al. | 372 | 50.8% female; 49.2% male | 58–78 | Not disclosed | Fitzpatrick I–II; Fitzpatrick III–IV |
| Soenksen, L.R. et al. | 133 | Not disclosed | Not disclosed | Not disclosed | All skin types |
| Thomsen, K. et al. | 2342 | Not disclosed | Not disclosed | Not disclosed | Fitzpatrick II–III |
| Tschandl, P. et al. | 1511 * | Not disclosed | Not disclosed | Not disclosed | Not disclosed |
| Yu, Z. et al. | 617 | 45.4% female; 54.6% male | 18–65 | Asian/Middle Eastern | Not disclosed |

| Authors | Categories | SEN | SPE | Accuracy | AUC | PPV | NPV |
|---|---|---|---|---|---|---|---|
| Anderson, J. et al. | | 0.80 | 0.95 | 0.92 | 0.80 | 0.95 | |
| Dulmage, B. et al. | Top 1 | 0.68 | | | | | |
| | Top 3 | 0.80 | | | | | |
| Giavina-Bianchi, M. et al. | | 0.91 | 0.98 | 0.90 | | | |
| Giavina-Bianchi, M. et al. | Dermoscopy model | 0.90 | 0.89 | 0.89 | 0.96 | 0.64 | 0.98 |
| Jain, A. et al. | Clinical model | 0.91 | 0.84 | 0.85 | 0.94 | 0.57 | 0.98 |
| Liu, Y. et al. | Top 1 | 0.58 | 0.71 | | | | |
| | Top 3 | 0.83 | 0.93 | | | | |
| Lucius, M. et al. | Low image resolution | 0.76 | | | | | |
| | High image resolution | 0.78 | | | | | |
| | Low-resolution images + clinical data | 0.79 | | | | | |
| | High-resolution images + clinical data | 0.80 | | | | | |
| Muñoz-López, C. et al. | Top 1 | 0.41 | | | | | |
| | Top 3 | 0.64 | | | | | |
| Pangti, R. et al. | Ext Top 1 | 0.99 | 0.75 | 0.9 | 0.61 | 0.99 | |
| | Ext Top 3 | 0.89 | | | | | |
| | Int Top 1 | 0.77 | 0.95 | | | | |
| Phillips, M. et al. | | 0.85 | 0.85 | 0.93 | | | |
| Sangers, T. et al. | | 0.87 | 0.70 | 0.76 | 0.61 | 0.91 | |
| Soenksen, L.R. et al. | | 0.89 | 0.90 | 0.85 | 0.97 | | |
| Thomsen, K. et al. | Psoriasis vs. eczema | 0.82 | 0.74 | 0.78 | 0.86 | 0.77 | 0.79 |
| | Acne vs. rosacea | 0.85 | 0.90 | 0.89 | 0.90 | 0.69 | 0.96 |
| | Cutaneous T-cell lymphoma vs. eczema | 0.74 | 0.84 | 0.81 | 0.88 | 0.63 | 0.90 |
| Tschandl, P. et al. | MetaOptima | 0.89 | 0.96 | | | | |
| | DAISYLab | 0.86 | 0.97 | | | | |
| | Medical Image Analysis | 0.85 | 0.96 | | | | |
| Yu, Z. et al. | | 0.96 | 0.88 | 0.92 | | | |
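
The metric columns in the table above (sensitivity, specificity, accuracy, and the positive and negative predictive values) all derive from a 2 × 2 confusion matrix of index-test results against the gold standard. As a minimal sketch, the following Python snippet computes them from raw counts. The counts are hypothetical, chosen only for illustration, and do not come from any included study; the names `ConfusionMatrix` and `diagnostic_metrics` are likewise assumptions of this sketch. AUC is left out because it requires classifier scores across thresholds rather than a single confusion matrix.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Counts of index-test results against the gold standard."""
    tp: int  # diseased, test positive
    fp: int  # not diseased, test positive
    fn: int  # diseased, test negative
    tn: int  # not diseased, test negative


def diagnostic_metrics(cm: ConfusionMatrix) -> dict[str, float]:
    """Return the standard diagnostic accuracy metrics for one 2 x 2 table."""
    total = cm.tp + cm.fp + cm.fn + cm.tn
    return {
        "sensitivity": cm.tp / (cm.tp + cm.fn),  # SEN: true-positive rate
        "specificity": cm.tn / (cm.tn + cm.fp),  # SPE: true-negative rate
        "accuracy": (cm.tp + cm.tn) / total,
        "ppv": cm.tp / (cm.tp + cm.fp),  # positive predictive value
        "npv": cm.tn / (cm.tn + cm.fn),  # negative predictive value
    }


# Hypothetical counts for 100 lesions (20 malignant, 80 benign).
example = ConfusionMatrix(tp=16, fp=4, fn=4, tn=76)
metrics = diagnostic_metrics(example)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Because PPV and NPV depend on disease prevalence in the evaluated sample, they are not directly comparable between studies with different case mixes, whereas sensitivity and specificity are less affected.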

| Study | Relative Difference in Diagnostic Agreement | Unassisted | Assisted |
|---|---|---|---|
| Dulmage, B. et al. | Agreement (%) | 36 | 68 |
| Jain, A. et al. | Top 1 PCP agreement (%) | 48 | 58 |
| | Top 3 PCP agreement (%) | 58 | 68 |
| | Top 1 NP agreement (%) | 46 | 58 |
| | Top 3 NP agreement (%) | 54 | 66 |
| Lucius, M. et al. | Agreement (%) | 17.29 | 42.42 |
| Yu, Z. et al. | AUC GP 1 | 0.537 | 0.778 |
| | AUC GP 2 | 0.575 | 0.788 |
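
The table above lists unassisted and AI-assisted values but does not state how a relative difference would be derived from them. As a minimal sketch, assuming the usual definition (assisted minus unassisted, divided by unassisted), the following Python snippet computes the absolute and relative change for two rows of the table. The function name `agreement_change` and the chosen definition are assumptions of this sketch, not something the source specifies.

```python
def agreement_change(unassisted: float, assisted: float) -> tuple[float, float]:
    """Return (absolute change, relative change) between two agreement values.

    The relative change is expressed as a fraction of the unassisted value;
    this definition is an assumption, since the source does not give one.
    """
    absolute = assisted - unassisted
    relative = absolute / unassisted
    return absolute, relative


# Unassisted and assisted agreement (%) taken from the table above.
rows = {
    "Dulmage, B. et al.": (36.0, 68.0),
    "Lucius, M. et al.": (17.29, 42.42),
}

for study, (unassisted, assisted) in rows.items():
    abs_change, rel_change = agreement_change(unassisted, assisted)
    print(f"{study}: {abs_change:+.2f} points, {rel_change:+.1%} relative")
```

Reporting both figures is useful because a large relative gain can correspond to a modest absolute gain when the unassisted baseline is low.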

| Authors | Model | Training Set (n Images) | Test Set (n Images) | Internal Validation | External Validation |
|---|---|---|---|---|---|
| Anderson, J. et al. | | 100 | | | |
| Dulmage, B. et al. | | 69,195 | 3862 | 3869 | 222 |
| Giavina-Bianchi, M. et al. | | 140,446 | 24,000 | 6975 | |
| Giavina-Bianchi, M. et al. | Dermoscopy model | 21,074 | 2633 | 2635 | |
| | Clinical model | 2466 | 308 | 309 | |
| Jain, A. et al. | | 64,837 | 14,883 | | |
| Liu, Y. et al. | | 64,837 | 14,883 | | |
| Lucius, M. et al. | | 8313 | 1702 | | |
| Muñoz-López, C. et al. | | 220,680 | 3501 | 17,125 | 340 |
| Pangti, R. et al. | | 12,350 | 3068 | 5014 | |
| Phillips, M. et al. | | 7102 | | | |
| Sangers, T. et al. | | 785 | | | |
| Soenksen, L.R. et al. | | 20,388 | 6796 | 6796 | |
| Thomsen, K. et al. | | 13,232 | 1657 | 1654 | |
| Tschandl, P. et al. | | 10,015 | 1195 | | |
| Yu, Z. et al. | | 1088 | 136 | 134 | |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Escalé-Besa, A.; Vidal-Alaball, J.; Miró Catalina, Q.; Gracia, V.H.G.; Marin-Gomez, F.X.; Fuster-Casanovas, A. The Use of Artificial Intelligence for Skin Disease Diagnosis in Primary Care Settings: A Systematic Review. Healthcare 2024, 12, 1192. https://doi.org/10.3390/healthcare12121192
