Effects of Visual Communication Design Accessibility (VCDA) Guidelines for Low Vision on Public and Open Government Health Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Visual Communication Design Accessibility (VCDA) Guidelines for Low Vision

- (a) Text size and thickness support more than three options.
- (b) Designated background colors contrasted with white characters.
- (c) Batch layout considering text-to-speech (TTS).
- (d) Output alternate text or provide a TTS-listening button.
- (e) The characters in the image are recognized by optical character recognition (OCR) systems.
- (f) Provide a text-only button if the body text of the information, such as media, is supported.
- (g) If a video is included, notify the user about its playability as alternative text.
- (h) State the current page position in a consistent position of the entire information.
- (i) Always provide the same menu (e.g., Home or Top) to return to the first place.
- (j) The input area of all buttons is defined as 1.5 times the size of the button.
- (k) Provide a text-oriented black-and-white screen based on the user experience.
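Guideline (b) asks for background colors that contrast with white characters but does not state a numeric criterion here. One way to check a candidate background is the WCAG 2.x contrast-ratio formula. The sketch below is illustrative only: the function names and the 4.5:1 threshold (the WCAG AA value for body text) are assumptions, not part of the VCDA guidelines.

```python
from typing import Tuple

RGB = Tuple[int, int, int]

WHITE: RGB = (255, 255, 255)
# Assumption: WCAG AA threshold for normal-size text; the VCDA text gives no number.
MIN_RATIO = 4.5


def _linearize(channel_8bit: int) -> float:
    """Convert one 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: RGB) -> float:
    """Relative luminance of an sRGB color, from 0.0 (black) to 1.0 (white)."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(color_a: RGB, color_b: RGB) -> float:
    """WCAG contrast ratio between two colors, from 1.0 to 21.0."""
    l_a, l_b = relative_luminance(color_a), relative_luminance(color_b)
    lighter, darker = max(l_a, l_b), min(l_a, l_b)
    return (lighter + 0.05) / (darker + 0.05)


def background_ok_for_white_text(background: RGB, min_ratio: float = MIN_RATIO) -> bool:
    """True if white characters on this background meet the assumed threshold."""
    return contrast_ratio(WHITE, background) >= min_ratio
```

For example, `background_ok_for_white_text((0, 0, 128))` accepts a navy background, while a yellow background such as `(255, 255, 0)` is rejected because its luminance is too close to that of white.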

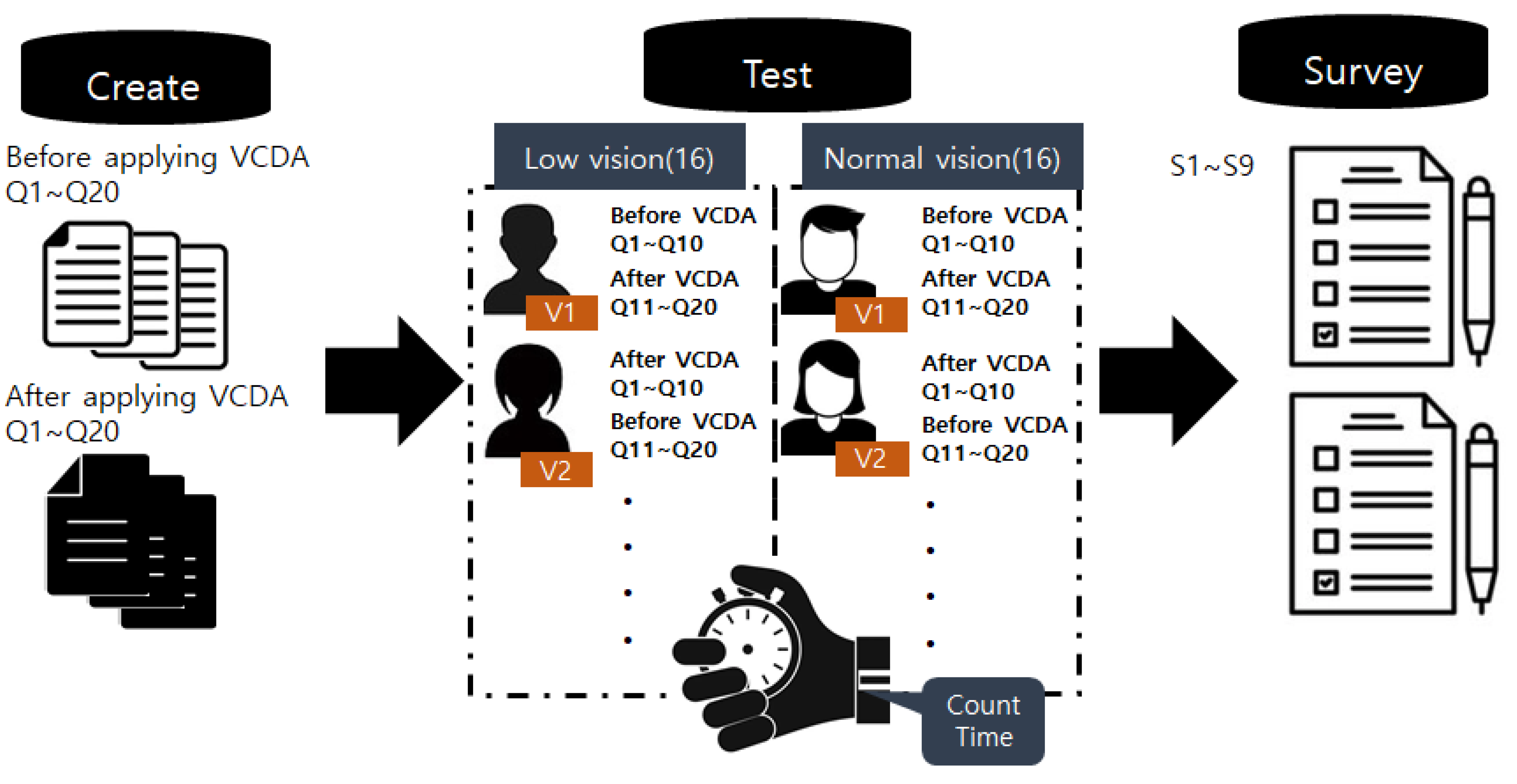

2.2. Experimental Design

2.2.1. Participants

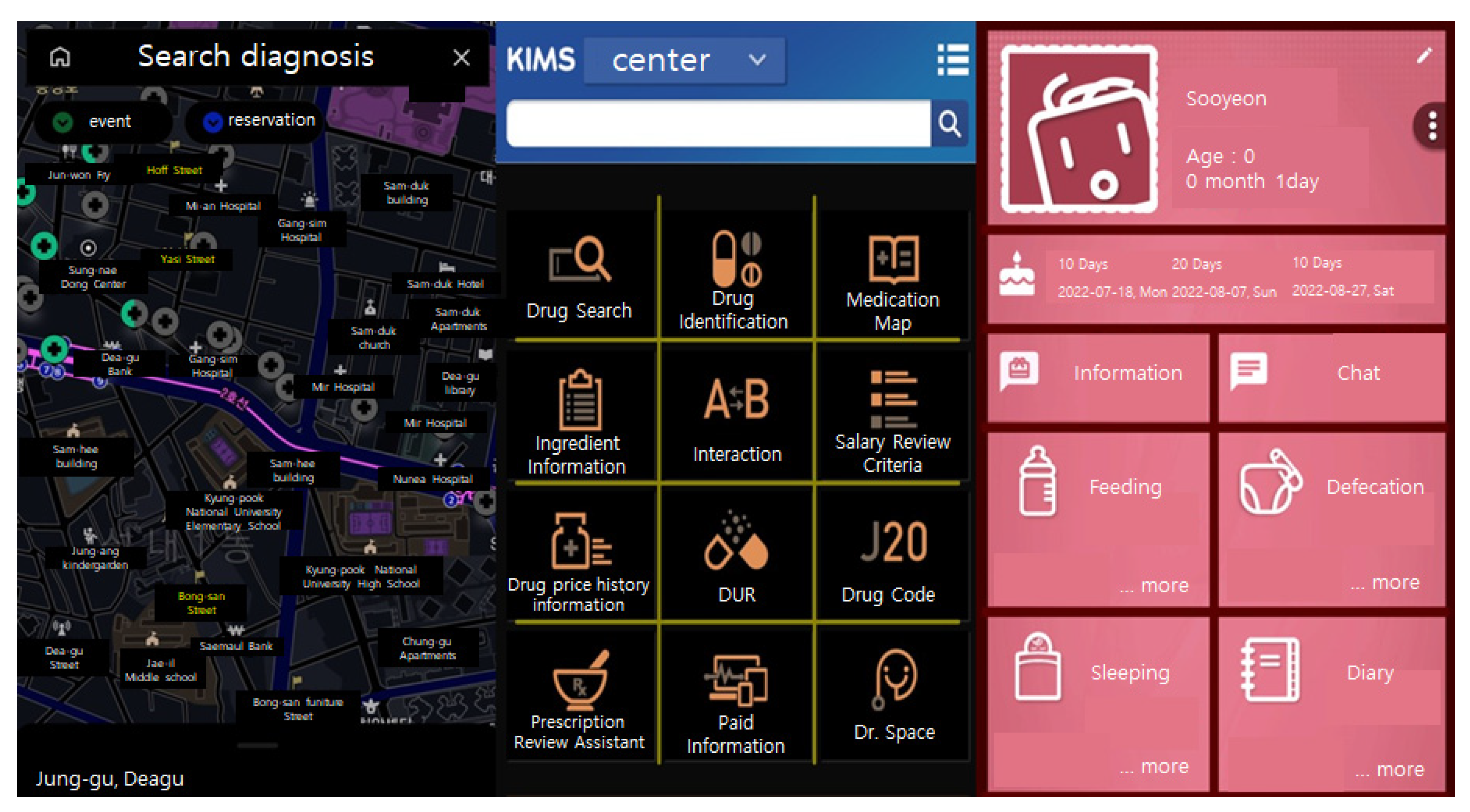

2.2.2. VCDA Test App

2.2.3. Procedure for Data Collection

2.2.4. Tools Used in This Study

Tools to Test Information Recognition Speed

Satisfaction Survey

2.2.5. Statistical Analysis

3. Results

3.1. Participant Demographics

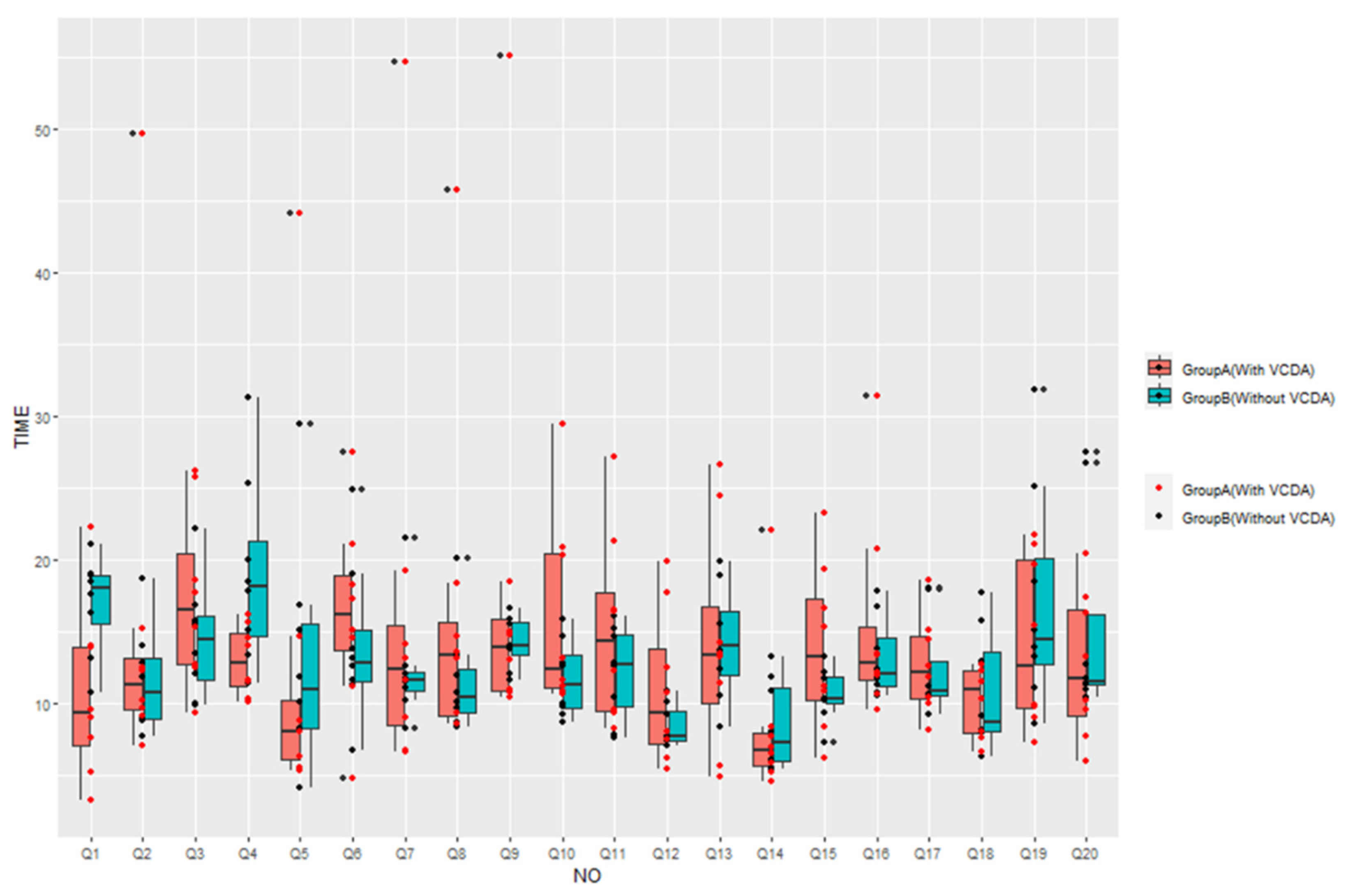

3.2. Comparison of Visual Information Recognition Speed between Groups

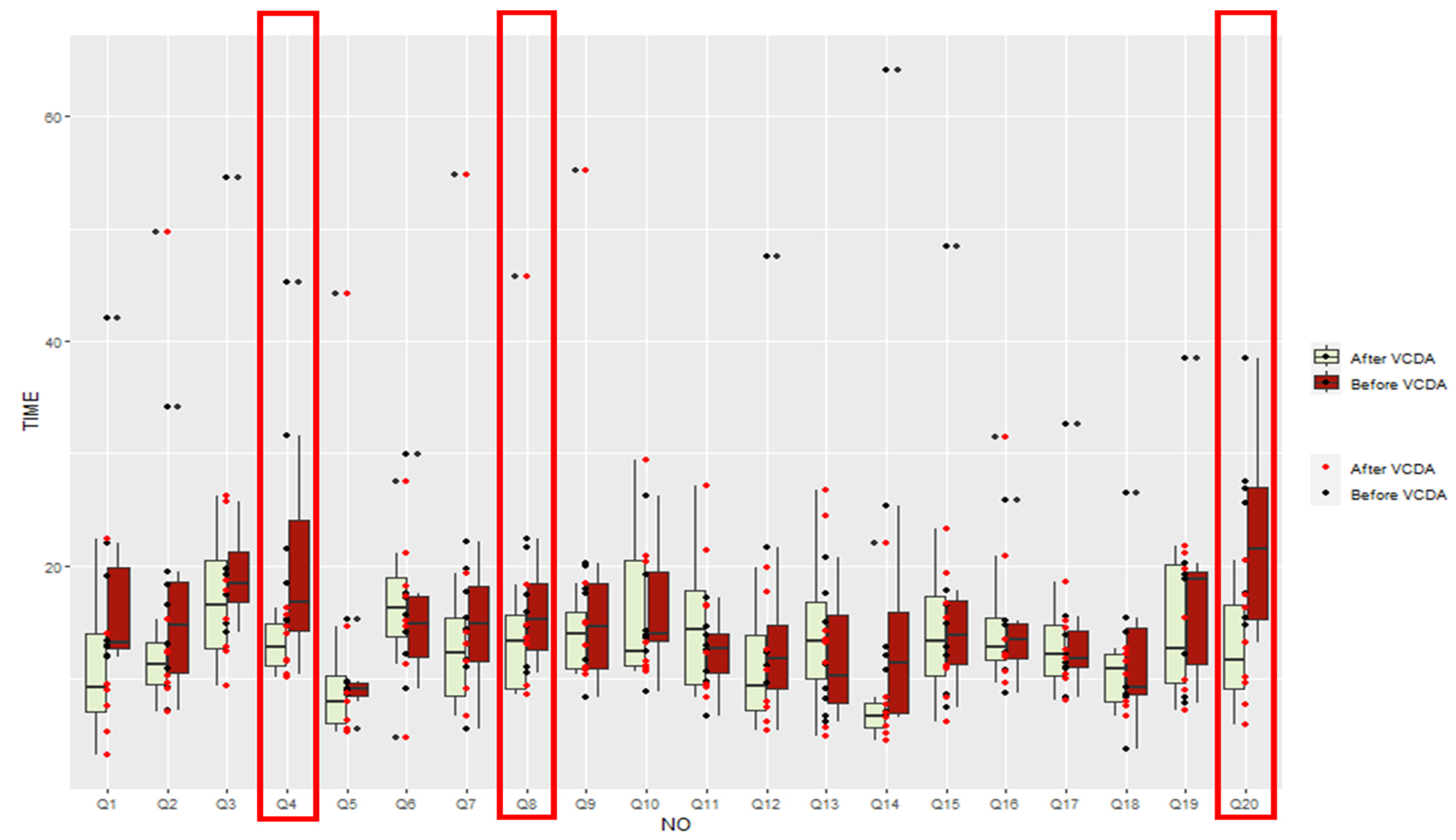

3.3. Improvement Level of Visual Recognition Speed after VCDA by Items

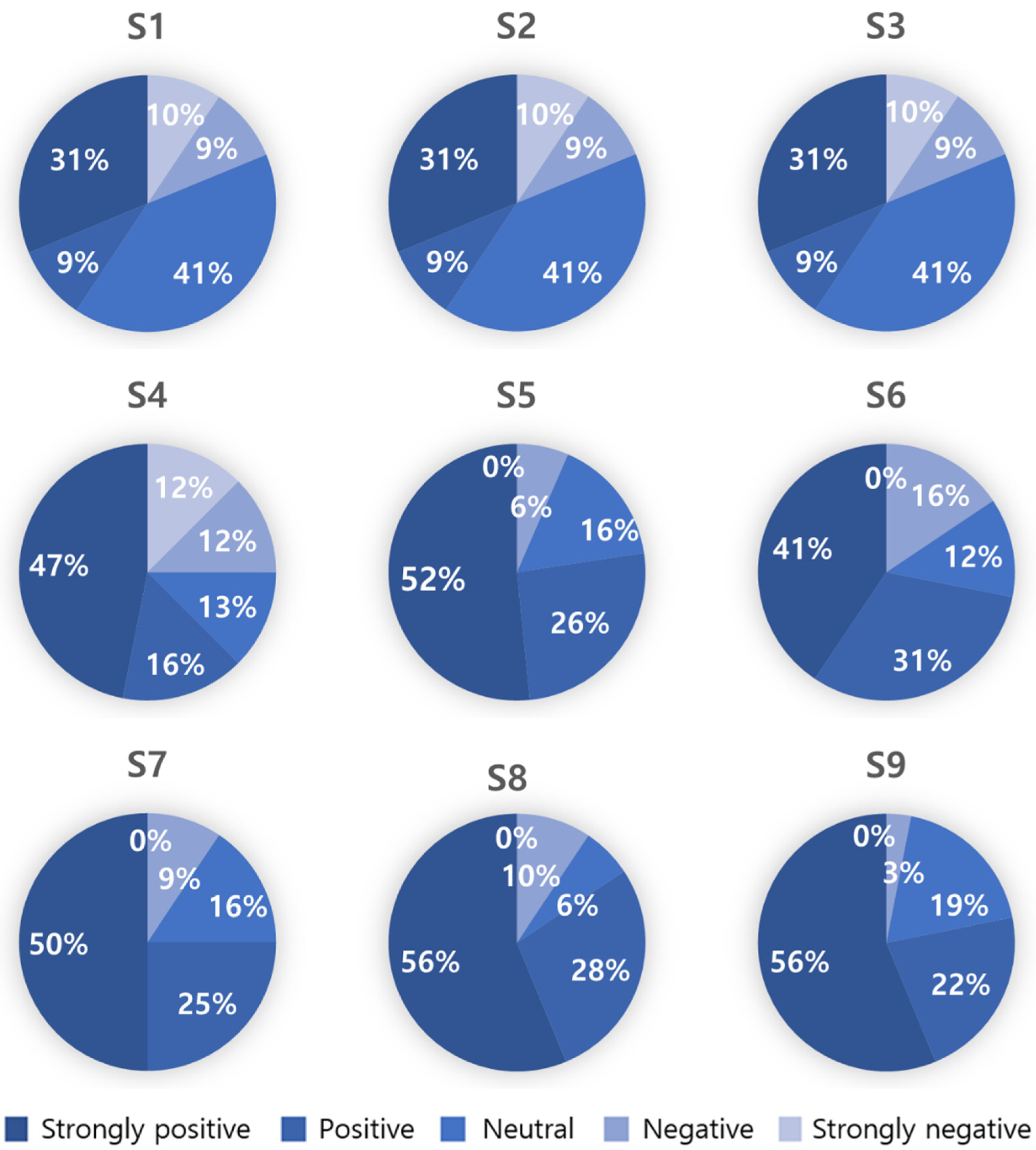

3.4. Satisfaction Survey Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Category | Guideline |
|---|---|
| Increased data exposure | Support for zoom in/out for the main content. |
| | Support for alternative color schemes. |
| Data accessibility | Intuitive navigation. |
| | The menu intuitively responds to user intentions. |
| | Support for input methods other than touch. |
| Information acquisition | Use of highly legible fonts. |
| | Highlighting main images that users can access. |
| | Highlighting action-triggering media. |
| Data search | Always placing text input boxes in the same location. |
| | Showing search results immediately for the search text box. |
| | Speech recognition for text input. |

| Category | VCDA guideline |
|---|---|
| Readability of text | (a) Text size and thickness support more than three options. |
| | (b) Designated background colors that are contrasted with white characters. |
| | (c) Batch layout considering text-to-speech (TTS). |
| Accessibility to Media (Image/Video) | (d) Output alternate text or provide a TTS-listening button. |
| | (e) The characters in the image are recognized by optical character recognition (OCR) systems. |
| | (f) Provide a text-only button if the body text of information, such as media, is supported. |
| | (g) If a movie is included, notify its playability as alternative text. |
| Simple, intuitive navigation | (h) State the current page position in a consistent position for the entire information. |
| | (i) Always provide the same menu (e.g., Home or Top) to return to the first place. |
| | (j) The input area of all buttons is defined as 1.5 times the size of the button. |
| | (k) Provide a text-oriented black-and-white screen based on user experience. |
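Guideline (j) sets the input area of a button to 1.5 times the button size. A minimal sketch of one possible reading follows, assuming that "size" means width and height are each scaled by 1.5 and that the enlarged area stays centered on the visible button. The `Rect` type and `expanded_hit_area` function are hypothetical names introduced for illustration, not part of the VCDA test app.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in screen coordinates (origin at top-left)."""
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        """True if the point (px, py) lies inside or on the rectangle."""
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def expanded_hit_area(button: Rect, scale: float = 1.5) -> Rect:
    """Return a touch area `scale` times the button's width and height,
    centered on the button (assumed interpretation of guideline (j))."""
    new_width = button.width * scale
    new_height = button.height * scale
    return Rect(
        x=button.x - (new_width - button.width) / 2,
        y=button.y - (new_height - button.height) / 2,
        width=new_width,
        height=new_height,
    )


# Usage: a tap slightly outside the drawn button still counts as a press.
button = Rect(x=100, y=100, width=40, height=20)
hit_area = expanded_hit_area(button)
tap_registers = hit_area.contains(92, 97)
```

If "1.5 times the size" is instead read as 1.5 times the area, each dimension would be scaled by the square root of 1.5; the text here does not settle which reading was intended.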

| No. | Question |
|---|---|
| Q1 | List the names of the two menus to the right of “First Aid Tips.” |
| Q2 | List the names of the two pharmacies at 507 m and 640 m. |
| Q3 | Name the pharmacy in Gaenari Park and in Uzukatsu Restaurant. |
| Q4 | List the three departments on the left side of “Plastic Surgery” right below “Hospitals You Frequently Visit.” |
| Q5 | Among the menus at the top, name the menu to the right of “Find Pharmacy.” |
| Q6 | There are three buttons in the popup window. Please explain the location of the “Resend Verification Code” button among them. |
| Q7 | Please tell us the names of all three menus under “Present Baby” menu when checking insurance premiums. |
| Q8 | What input is being asked for under the picture of the baby? (Read the text) |
| Q9 | How many times has Mr. Lim donated blood? Tell us the date when the next blood donation is possible. |
| Q10 | As of 9 July 2022, please tell us all the remaining blood types on “10.0 days” and “10.7 days” of the blood holding status. |
| Q11 | Please tell me the names of both menus on the “Prognosis Management” menu, arranged side by side. |
| Q12 | This page is a screen introducing the service of the app. What is the name of this app? |
| Q13 | Please tell me how many subway lines pass through Gyeongbuk Elementary School and Kangsim Hospital. |
| Q14 | How many points does Mr. Lim have? |
| Q15 | Please tell me the schedule for the two days after Constitution Day. |
| Q16 | Please tell me the names of the three menus on the top row of the “Encyclopedia of Dementia.” |
| Q17 | Please tell me the four menus on the far right (vertical bar) in order. |
| Q18 | Is it necessary to agree to the “optional agreement” in the Terms of Use to sign up for membership? |
| Q19 | Are you currently taking any medications? What should you press on the screen to add medications? |
| Q20 | On the app screen, how many days ago was “Sooyeon” born? Also, does the app have a menu where you can write a diary entry? |

| Characteristics | Group A * | Group B * |
|---|---|---|
| Gender | | |
| Male | 7 | 6 |
| Female | 9 | 10 |
| Age (years) | | |
| 20–29 | 11 | 3 |
| 30–39 | 4 | 7 |
| 40–49 | | 3 |
| 50–59 | | 1 |
| 60–69 | 1 | 2 |
| Vision | | |
| <6/18 | 16 | |
| >6/18 | | 16 |

| | Before | After | Z ¹ | p-Value |
|---|---|---|---|---|
| Group A * | 160.76 | 140.64 | −1.96 | 0.05 |
| Group B * | 130.92 | 129.21 | −0.70 | 0.48 |
| Z ² | −1.47 | −0.42 | | |
| p-value | 0.14 | 0.67 | | |
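The Before and After values in the table above can be turned into a relative change per group. The short sketch below assumes these values are group-level recognition times, so a lower value means faster recognition; the function name is illustrative and the calculation is not a substitute for the reported Z tests.

```python
def relative_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is lower than `before`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100.0


# Values copied from the table (units as reported in the study).
group_a = relative_reduction(before=160.76, after=140.64)
group_b = relative_reduction(before=130.92, after=129.21)

print(f"Group A: {group_a:.1f}% reduction")
print(f"Group B: {group_b:.1f}% reduction")
```

Under that assumption, Group A shows a reduction of roughly 12.5% and Group B of roughly 1.3%, which matches the pattern in the p-values: the within-group change is at the 0.05 level for Group A only.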

| | Significantly Improved ¹ | Practically Improved | Maintained |
|---|---|---|---|
| Group A * | Q4, Q8, Q20 (3) | Q1, Q2, Q3, Q5, Q6, Q7, Q9, Q10, Q11, Q12, Q13, Q14, Q15, Q18, Q19 (15) | Q16, Q17 (2) |
| Group B * | Q10 (1) | Q1, Q2, Q3, Q4, Q5, Q6, Q7, Q8, Q9, Q11, Q12, Q13, Q14, Q15, Q16, Q17, Q19, Q20 (18) | Q18 (1) |

| Question | Mean | Standard Deviation |
|---|---|---|
| [S1] Do you think the VCDA proposed in this study is functionally valuable? | 4.25 | 1.05 |
| [S2] Do you think the VCDA helped you recognize information compared to a normal screen? | 3.97 | 1.00 |
| [S3] From an aesthetic perspective (personal design taste), do you think a normal screen is more aesthetically satisfying than the VCDA? | 3.44 | 1.29 |
| [S4] From this point of view, do you think developing a VCDA to “improve information awareness” is worthwhile compared to a normal screen designed with the “aesthetic satisfaction” of the information? | 3.72 | 1.49 |
| [S5] Do you think applying the VCDA on all public data apps by default would improve visual information access for low vision? | 4.23 | 0.96 |
| [S6] Do you think it is reasonable to mandate the provision of the VCDA option to provide accessibility (e.g., in-app settings) in current public data apps with the normal screen developed for normal-vision people? | 3.97 | 1.09 |
| [S7] Do you think that investment in the study should be made if the VCDA does not affect information access for normal-vision people but only helps people with low vision? | 4.16 | 1.02 |
| [S8] Do you think the research that decreases the information recognition speed of public government data should continue in the future? | 4.31 | 0.97 |
| [S9] Do you think further research is needed to increase accessibility for all social communities, not just for those with low vision? | 4.31 | 0.90 |
| Total | 4.08 | 0.81 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lim, J.; Kim, W.; Kim, I.; Lee, E. Effects of Visual Communication Design Accessibility (VCDA) Guidelines for Low Vision on Public and Open Government Health Data. Healthcare 2023, 11, 1047. https://doi.org/10.3390/healthcare11071047
