The Relationship between Internet Patient Satisfaction Ratings and COVID-19 Outcomes

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Sources

2.2. Statistical Analysis

- First year of the pandemic: 11 March 2020–11 March 2021;

- Initial pandemic: 11 March 2020–11 June 2020;

- Later pandemic: 11 June 2020–11 March 2021.

- Summer/Fall pandemic: 11 June 2020–11 November 2020;

- Holiday rise: 11 November 2020–11 January 2021;

- Holiday drop: 11 January 2021–11 March 2021.
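
The period boundaries above can be turned into day counts with a few lines of date arithmetic. The sketch below assumes an inclusive convention in which 11 March 2020 is day 1; that convention is an assumption, chosen because it reproduces the "Modeled Number of Days" row reported in the results tables. The names `PANDEMIC_START`, `BOUNDARIES`, and `day_number` are illustrative only.

```python
from datetime import date

# Period boundaries as listed above.
PANDEMIC_START = date(2020, 3, 11)
BOUNDARIES = {
    "11 June 2020": date(2020, 6, 11),
    "11 November 2020": date(2020, 11, 11),
    "11 January 2021": date(2021, 1, 11),
    "11 March 2021": date(2021, 3, 11),
}


def day_number(d, start=PANDEMIC_START):
    """Inclusive day count: the start date itself is day 1 (assumed convention)."""
    return (d - start).days + 1


day_numbers = {label: day_number(d) for label, d in BOUNDARIES.items()}

for label, n in day_numbers.items():
    print(f"{label}: day {n}")
```

Under this convention the four boundaries fall on days 93, 246, 307, and 366.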

- $y_{it}$ = COVID-19 outcome accounting for localities, time, covariates, and error;

- $\alpha$ = y-intercept;

- $k$ = number of covariates;

- $i$ = number of localities;

- $t$ = observations across time;

- $\beta_k$ = coefficient for each covariate;

- $x_{ik}$ = time-invariant covariates across localities;

- $u_{it}$ = random error varying across localities and time.
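
The model equation itself does not appear in this text. The symbol list above is consistent with a pooled linear model of the following form; this is a reconstruction from the definitions, not a quotation. The upper limit $K$ (total number of covariates) is notation introduced here, since the list uses $k$ both as the covariate index and as the covariate count.

$$
y_{it} = \alpha + \sum_{k=1}^{K} \beta_k x_{ik} + u_{it}
$$

Because the covariates $x_{ik}$ carry no time index, all variation over time in $y_{it}$ would be absorbed by the error term $u_{it}$ under this form.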

- Population infection rate → star rating, age ≥ 65, poverty;

- Population death rate → star rating, age ≥ 65, poverty, no health insurance;

- Infected death rate → star rating, age ≥ 65, poverty, no health insurance.

3. Results

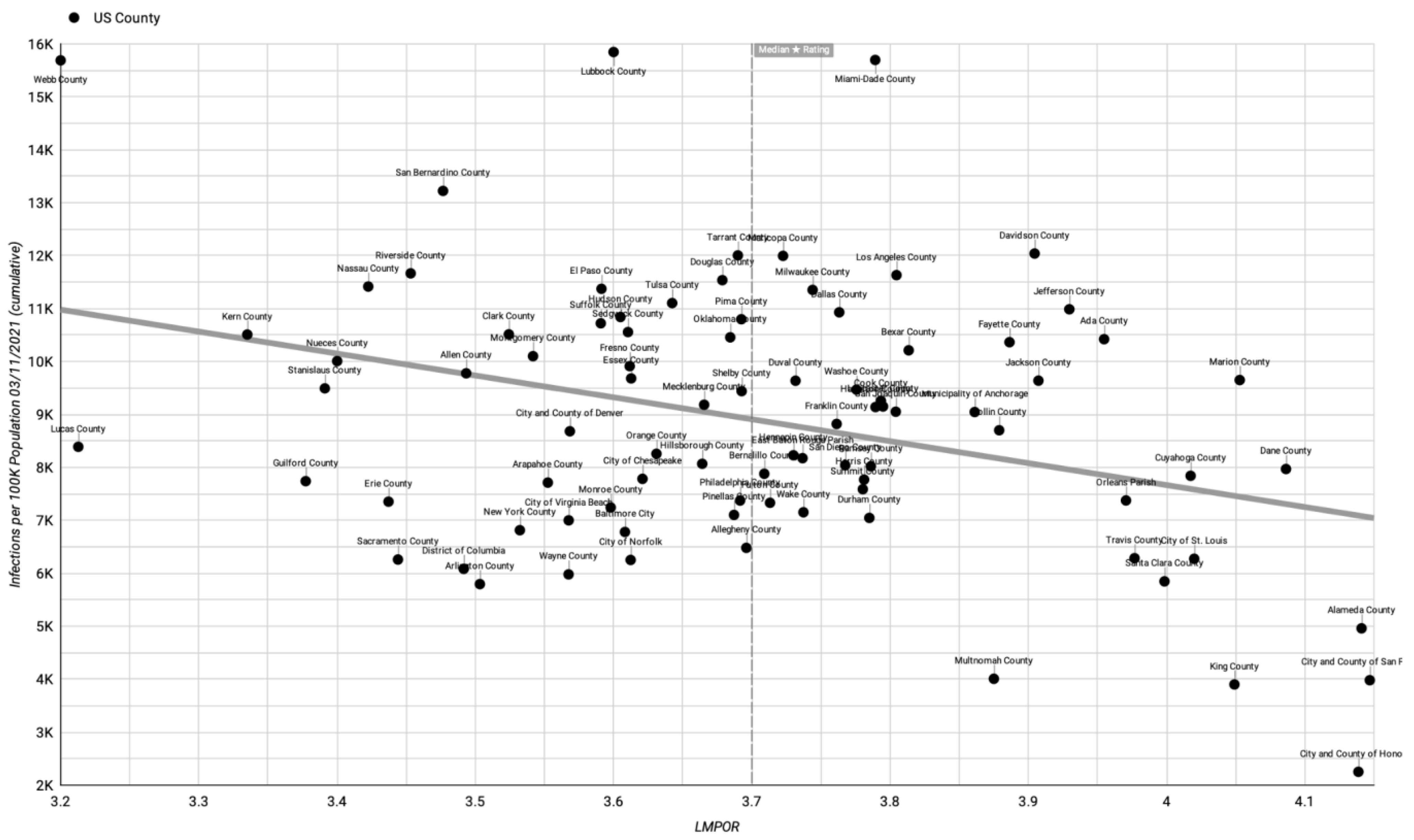

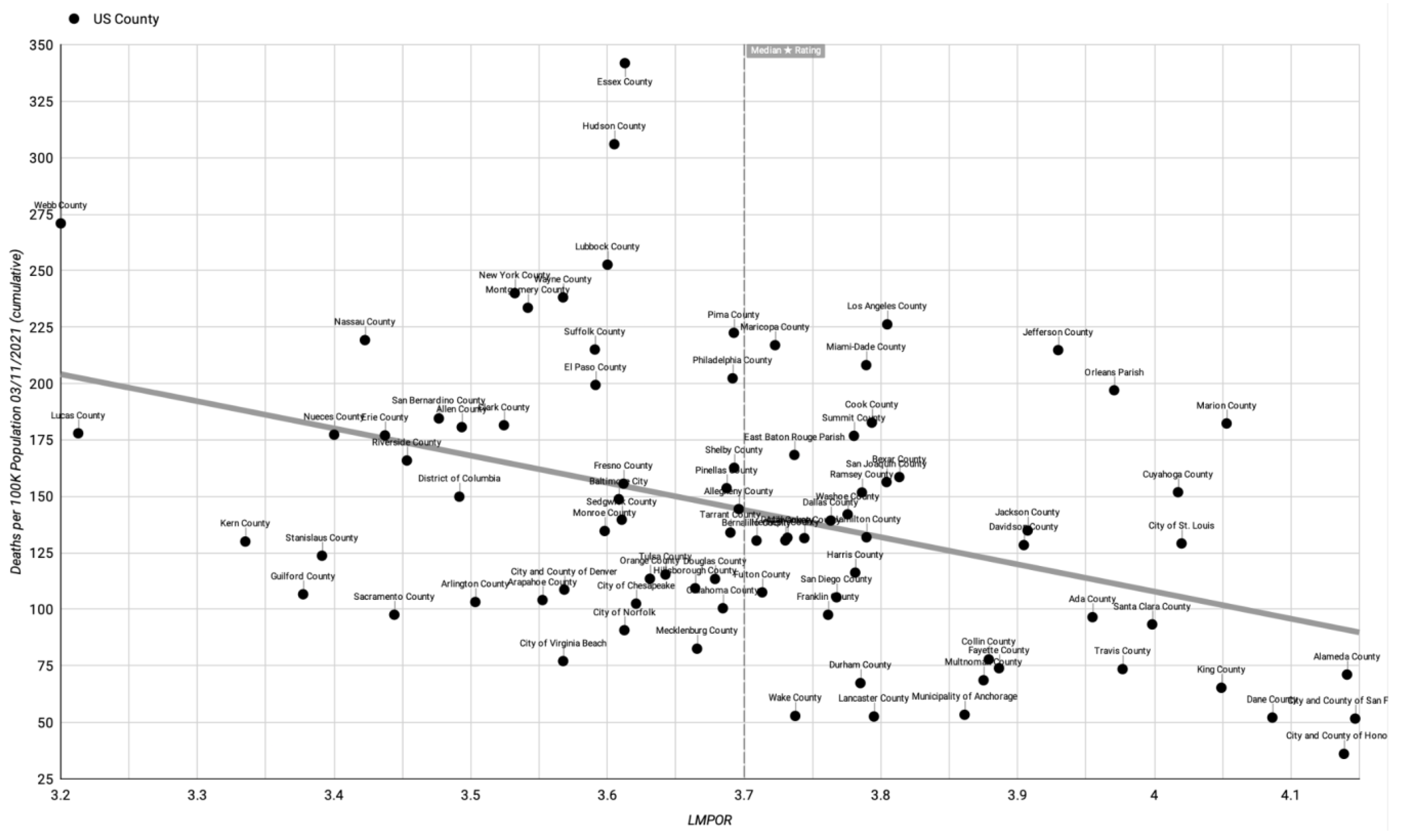

3.1. Descriptive Data

3.2. Main Results

4. Discussion

4.1. Implications

4.2. Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Variable | Min. | Median | Average | Max. | Standard Deviation |
|---|---|---|---|---|---|
| County Population | 226,941 | 836,062 | 1,229,100 | 10,081,570 | 1,343,643 |
| Poverty | 5.60% | 14.70% | 14.54% | 27.50% | 4.29% |
| Age ≥ 65 | 9.20% | 13.40% | 13.63% | 24.30% | 2.36% |
| No Health Insurance | 3.20% | 8.50% | 9.23% | 27.70% | 4.38% |
| Stars | 3.20 | 3.70 | 3.70 | 4.15 | 0.21 |

| Measure | 11 March 2020 | 11 June 2020 | 11 November 2020 | 11 January 2021 | 11 March 2021 |
|---|---|---|---|---|---|
| Modeled Number of Days | 1 | 93 | 246 | 307 | 366 |
| Modeled Number of Counties | 89 | 89 | 89 | 89 | 89 |
| Modeled Population | 109,389,862 | 109,389,862 | 109,389,862 | 109,389,862 | 109,389,862 |
| US Population | 328,239,523 | 328,239,523 | 328,239,523 | 328,239,523 | 328,239,523 |
| Model Pop./US Pop. | 33.326% | 33.326% | 33.326% | 33.326% | 33.326% |
| Model Deaths Total | 32 | 35,961 | 77,656 | 114,369 | 169,656 |
| US Deaths Total | 40 | 113,073 | 238,816 | 369,388 | 523,420 |
| Model Deaths/US Deaths | 80.0% | 31.8% | 32.5% | 31.0% | 32.4% |
| Model Infections Total | 623 | 698,299 | 3,609,295 | 7,868,688 | 10,128,763 |
| US Infections Total | 1,339 | 2,010,456 | 10,286,991 | 22,265,944 | 28,731,120 |
| Model Infections/US Infections | 46.5% | 34.7% | 35.1% | 35.3% | 35.3% |
| Model Deaths/Model Population | 0.0% | 0.0% | 0.1% | 0.1% | 0.2% |
| US Deaths/US Population | 0.0% | 0.0% | 0.1% | 0.1% | 0.2% |
| Model Rate/US Rate (deaths per population) | 240.1% | 95.4% | 97.6% | 92.9% | 97.3% |
| Model Infections/Model Population | 0.0% | 0.6% | 3.3% | 7.2% | 9.3% |
| US Infections/US Population | 0.0% | 0.6% | 3.1% | 6.8% | 8.8% |
| Model Rate/US Rate (infections per population) | 139.6% | 104.2% | 105.3% | 106.0% | 105.8% |
| Model Deaths/Model Infected | 5.1% | 5.1% | 2.2% | 1.5% | 1.7% |
| US Deaths/US Infected | 3.0% | 5.6% | 2.3% | 1.7% | 1.8% |
| Model Rate/US Rate (deaths per infected) | 171.9% | 91.6% | 92.7% | 87.6% | 91.9% |
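
The three "Model Rate/US Rate" rows in the table above can be recomputed from the cumulative totals in the same table. The sketch below does this for the 11 March 2021 column; the dictionary and function names are illustrative, and the only inputs are the totals printed in the table.

```python
# Cumulative totals on 11 March 2021, copied from the table above.
MODEL = {"population": 109_389_862, "deaths": 169_656, "infections": 10_128_763}
US = {"population": 328_239_523, "deaths": 523_420, "infections": 28_731_120}


def rate(totals, numerator, denominator):
    """Simple ratio of two totals, e.g. deaths per population."""
    return totals[numerator] / totals[denominator]


def model_to_us_ratio(numerator, denominator):
    """Model rate divided by the US rate, expressed as a percentage."""
    return 100 * rate(MODEL, numerator, denominator) / rate(US, numerator, denominator)


ratios = {
    "deaths per population": model_to_us_ratio("deaths", "population"),
    "infections per population": model_to_us_ratio("infections", "population"),
    "deaths per infected": model_to_us_ratio("deaths", "infections"),
}

for name, value in ratios.items():
    print(f"Model rate / US rate ({name}): {value:.1f}%")
```

A ratio near 100% means the modeled counties experienced roughly the same rate as the United States as a whole for that outcome.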

| Outcome | Model Avg. Incidence **: Actual | Model Avg. Incidence **: +0.3★ | Model Avg. Incidence **: +0.3★ 95% CI (lower) | Model Avg. Incidence **: +0.3★ 95% CI (upper) | +0.3★ ARR: Est. | +0.3★ ARR: 95% CI (upper) | +0.3★ ARR: 95% CI (lower) | +0.3★ RRR: Est. | +0.3★ RRR: 95% CI (upper) | +0.3★ RRR: 95% CI (lower) |
|---|---|---|---|---|---|---|---|---|---|---|
| Pandemic Year (11 March 2020–11 March 2021) | | | | | | | | | | |
| Infections/Population | 3.04% | 2.72% | 2.46% | 2.97% | 0.33% | 0.58% | 0.07% | 10.73% | 19.17% | 2.29% |
| Deaths/Population | 0.06% | 0.04% | 0.01% | 0.08% | 0.01% | 0.05% | −0.02% | 25.14% | 84.07% | −33.79% |
| Deaths/Infections | 2.61% | 2.17% | 1.93% | 2.40% | 0.44% | 0.67% | 0.20% | 16.79% | 25.78% | 7.79% |
| Early Pandemic (11 March 2020–11 June 2020) | | | | | | | | | | |
| Infections/Population | 0.29% | 0.20% | 0.05% | 0.36% | 0.08% | 0.23% | −0.07% | 28.56% | 81.16% | −24.04% |
| Deaths/Population | 0.01% | 0.01% | −0.02% | 0.04% | 0.01% | 0.04% | −0.03% | 40.58% | 263.76% | −182.61% |
| Deaths/Infections | 3.46% | 3.16% | 2.62% | 3.71% | 0.30% | 0.84% | −0.25% | 8.56% | 24.32% | −7.20% |
| Later Pandemic (11 June 2020–11 March 2021) | | | | | | | | | | |
| Infections/Population | 3.99% | 3.58% | 3.24% | 3.91% | 0.41% | 0.75% | 0.07% | 10.32% | 18.81% | 1.83% |
| Deaths/Population | 0.07% | 0.06% | 0.01% | 0.10% | 0.02% | 0.06% | −0.03% | 24.07% | 85.00% | −36.85% |
| Deaths/Infections | 2.32% | 1.84% | 1.58% | 2.09% | 0.48% | 0.74% | 0.23% | 20.89% | 31.80% | 9.98% |
| Summer/Fall Pandemic (11 June 2020–11 November 2020) | | | | | | | | | | |
| Infections/Population | 1.90% | 1.69% | 1.37% | 2.00% | 0.21% | 0.52% | −0.10% | 11.06% | 27.62% | −5.50% |
| Deaths/Population | 0.05% | 0.04% | −0.01% | 0.09% | 0.02% | 0.06% | −0.03% | 29.24% | 125.76% | −67.28% |
| Deaths/Infections | 2.84% | 2.19% | 1.82% | 2.56% | 0.65% | 1.02% | 0.28% | 22.99% | 36.03% | 9.95% |
| Holiday Rise (11 November 2020–11 January 2021) | | | | | | | | | | |
| Infections/Population | 5.06% | 4.69% | 3.88% | 5.49% | 0.37% | 1.17% | −0.43% | 7.31% | 23.19% | −8.57% |
| Deaths/Population | 0.08% | 0.06% | −0.04% | 0.16% | 0.02% | 0.12% | −0.08% | 23.05% | 145.02% | −98.91% |
| Deaths/Infections | 1.73% | 1.37% | 0.91% | 1.83% | 0.37% | 0.83% | −0.10% | 21.10% | 47.71% | −5.50% |
| Holiday Drop (11 January 2021–11 March 2021) | | | | | | | | | | |
| Infections/Population | 8.26% | 7.29% | 6.29% | 8.28% | 0.97% | 1.97% | −0.03% | 11.76% | 23.86% | −0.33% |
| Deaths/Population | 0.13% | 0.10% | −0.02% | 0.23% | 0.02% | 0.15% | −0.10% | 19.32% | 119.12% | −80.47% |
| Deaths/Infections | 1.56% | 1.38% | 0.93% | 1.83% | 0.18% | 0.63% | −0.27% | 11.27% | 40.08% | −17.53% |
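
The ARR and RRR columns above are not defined in this text. Assuming they carry their usual meanings (absolute and relative risk reduction), they follow from the actual and +0.3★ modeled incidence as a difference and a ratio. The sketch below applies those assumed definitions to the Pandemic Year Deaths/Infections row; the function names are illustrative.

```python
def absolute_risk_reduction(actual, modeled):
    """ARR (assumed definition): actual incidence minus modeled incidence."""
    return actual - modeled


def relative_risk_reduction(actual, modeled):
    """RRR (assumed definition): ARR as a fraction of actual incidence."""
    return (actual - modeled) / actual


# Pandemic Year, Deaths/Infections row, in percent (rounded values from the table).
actual_pct = 2.61
modeled_pct = 2.17

arr_pct = absolute_risk_reduction(actual_pct, modeled_pct)
rrr_pct = 100 * relative_risk_reduction(actual_pct, modeled_pct)

print(f"ARR: {arr_pct:.2f} percentage points")
print(f"RRR: {rrr_pct:.2f}%")
```

The ARR reproduces the tabulated 0.44%. The RRR computed from these rounded inputs is close to, but not exactly, the tabulated 16.79%, which is the kind of gap expected if the published figures were calculated from unrounded values.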

| Pandemic Year (11 March 2020–11 March 2021) | Actual | +0.3★ Modeled Outcomes: Estimate | +0.3★ Modeled Outcomes: 95% CI (lower) | +0.3★ Modeled Outcomes: 95% CI (upper) | Difference (Actual–Modeled): Estimate | Difference (Actual–Modeled): 95% CI (lower) | Difference (Actual–Modeled): 95% CI (upper) |
|---|---|---|---|---|---|---|---|
| Infections of US Population | 28,729,781 | 25,646,572 | 23,221,551 | 28,071,593 | 3,083,209 | 658,188 | 5,508,230 |
| Deaths of US Population | 523,380 | 391,807 | 83,364 | 700,249 | 131,573 | −176,869 | 440,016 |
| Deaths of US Infected | 523,380 | 435,518 | 388,440 | 482,596 | 87,862 | 40,784 | 134,940 |
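
The modeled national counts above appear numerically consistent with applying the Pandemic Year RRR estimates from the preceding ARR/RRR table to the actual US totals. That reading is an inference from the published numbers, not a method stated in this text. The sketch below checks it; all names are illustrative, and the RRR values are the rounded percentages from the table.

```python
# Actual US totals for the pandemic year, copied from the table above.
US_ACTUAL = {
    "infections_of_population": 28_729_781,
    "deaths_of_population": 523_380,
    "deaths_of_infected": 523_380,
}

# Pandemic Year RRR estimates (rounded) from the ARR/RRR table.
RRR = {
    "infections_of_population": 0.1073,
    "deaths_of_population": 0.2514,
    "deaths_of_infected": 0.1679,
}

# Modeled estimates as reported in the table above.
REPORTED_MODELED = {
    "infections_of_population": 25_646_572,
    "deaths_of_population": 391_807,
    "deaths_of_infected": 435_518,
}


def modeled_count(actual, rrr):
    """Scale an actual count down by a relative risk reduction (assumed relationship)."""
    return actual * (1 - rrr)


relative_errors = {}
for outcome, actual in US_ACTUAL.items():
    estimate = modeled_count(actual, RRR[outcome])
    reported = REPORTED_MODELED[outcome]
    relative_errors[outcome] = abs(estimate - reported) / reported
    print(f"{outcome}: recomputed {estimate:,.0f} vs reported {reported:,}")
```

The recomputed and reported counts agree to within a small fraction of a percent, with the residual attributable to the RRR values being rounded to two decimal places.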

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Stanley, J.; Hensley, M.; King, R.; Baum, N. The Relationship between Internet Patient Satisfaction Ratings and COVID-19 Outcomes. Healthcare 2023, 11, 1411. https://doi.org/10.3390/healthcare11101411
