Fusion-Extracted Features by Deep Networks for Improved COVID-19 Classification with Chest X-ray Radiography

Abstract

1. Introduction

2. Related Work on COVID-19 Classification Using Deep Learning Methods

2.1. Single Deep Learning Method

2.2. Fusion-Based Models

3. Materials and Methods

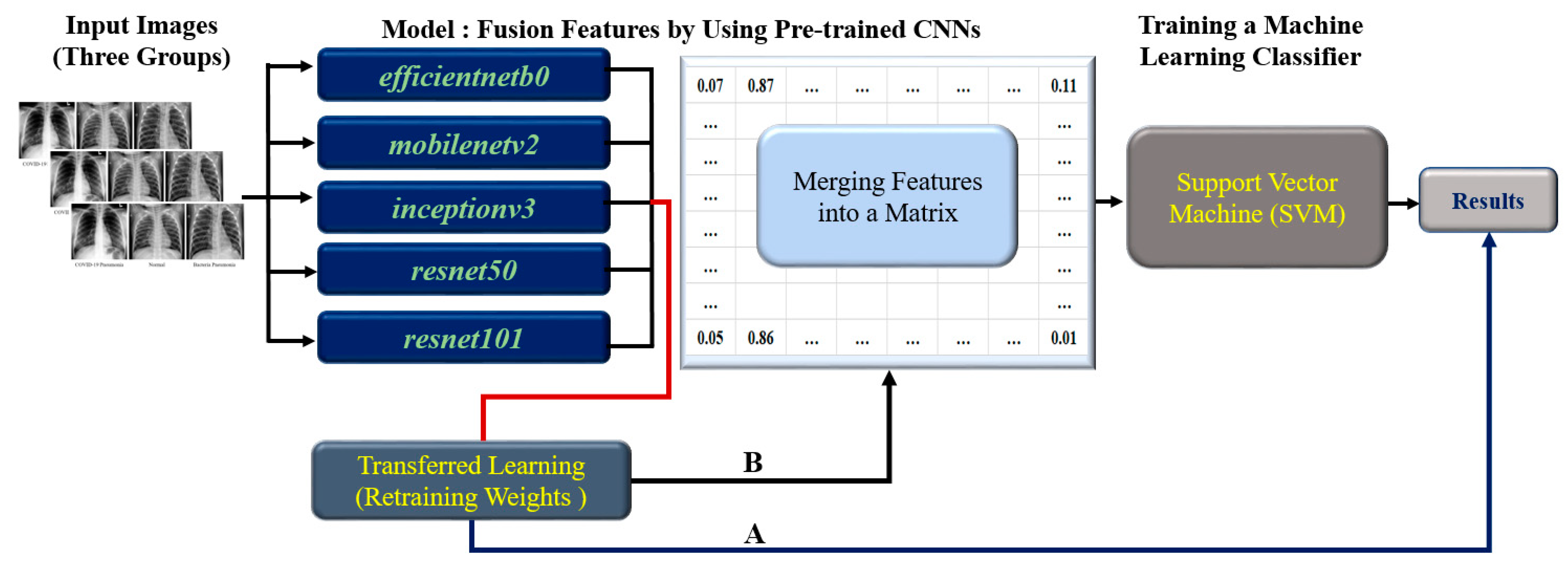

3.1. The Flow of Research

- Image preprocessing: Prepare chest X-ray images for input, including image resizing and normalization.

- Transferred Learning: Train each of the five individual CNN models (EfficientNetb0, MobileNetv2, Inceptionv3, ResNet50, and ResNet101) using transferred learning on the preprocessed chest X-ray images.

- Feature Extraction: Extract features from each CNN model’s output layer.

- Feature Fusion for training model using 80% of the dataset: A. Split the dataset into training (80%) and testing (20%) sets. B. Combine the features extracted from each CNN model using the training set. C. Create a feature matrix with dimensions 15 × 4208 for the training set (15 fused features for each of the 4208 training images), which combines the features of all five individual CNN models (each contributing a 3 × 4208 feature matrix). D. Train the SVM classifier using the fused feature matrix from the training set.

- Training and testing the SVM classifier: Use the fused training feature matrix as input for training the SVM classifier with an RBF kernel. For testing the SVM classifier, the fused feature matrix has size 15 × 1052.

- Evaluate classifier performance: A. Apply the trained SVM classifier to the test dataset to predict the class labels (COVID-19, Normal, or Bacterial). B. Calculate performance metrics such as accuracy, Kappa value, recall rate, precision, and ROC area.
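The fusion and classification steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the per-model features are synthetic softmax-like scores produced by a hypothetical `fake_cnn_scores` helper, the noise level and random seed are arbitrary, and scikit-learn's `SVC` stands in for whichever SVM implementation the study used. The text writes the fused matrix as features × samples (15 × 4208), whereas scikit-learn expects samples × features, so the arrays below are the transposes (4208 × 15 and 1052 × 15).

```python
import numpy as np
from sklearn.svm import SVC

rng = np.random.default_rng(0)  # arbitrary seed, for reproducibility only
N_TRAIN, N_TEST, N_CLASSES = 4208, 1052, 3
MODELS = ["EfficientNetb0", "MobileNetv2", "Inceptionv3", "ResNet50", "ResNet101"]


def fake_cnn_scores(labels, noise, rng):
    """Hypothetical stand-in for one CNN's 3-class output scores (synthetic data)."""
    logits = np.eye(N_CLASSES)[labels] + noise * rng.standard_normal((labels.size, N_CLASSES))
    exp = np.exp(logits - logits.max(axis=1, keepdims=True))
    return exp / exp.sum(axis=1, keepdims=True)  # each row sums to 1


# Synthetic labels: 0 = COVID-19, 1 = Normal, 2 = Bacterial.
y_train = rng.integers(0, N_CLASSES, N_TRAIN)
y_test = rng.integers(0, N_CLASSES, N_TEST)

# One (n_samples, 3) block of output-layer features per CNN.
train_blocks = [fake_cnn_scores(y_train, 0.8, rng) for _ in MODELS]
test_blocks = [fake_cnn_scores(y_test, 0.8, rng) for _ in MODELS]

# Feature fusion: concatenate the five blocks column-wise (5 models x 3 features = 15).
X_train = np.hstack(train_blocks)  # shape (4208, 15)
X_test = np.hstack(test_blocks)    # shape (1052, 15)

# Train an RBF-kernel SVM on the fused training features, then score the test set.
svm = SVC(kernel="rbf")
svm.fit(X_train, y_train)
fused_accuracy = svm.score(X_test, y_test)

# Baseline for comparison: the first synthetic model used alone (argmax of its scores).
single_accuracy = float(np.mean(test_blocks[0].argmax(axis=1) == y_test))

print(f"single model: {single_accuracy:.3f}, fused SVM: {fused_accuracy:.3f}")
```

Because the five synthetic models make independent errors, the fused classifier scores higher than any single one in this toy setting; real CNNs trained on the same images have correlated errors, so the gain on real data may be smaller.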

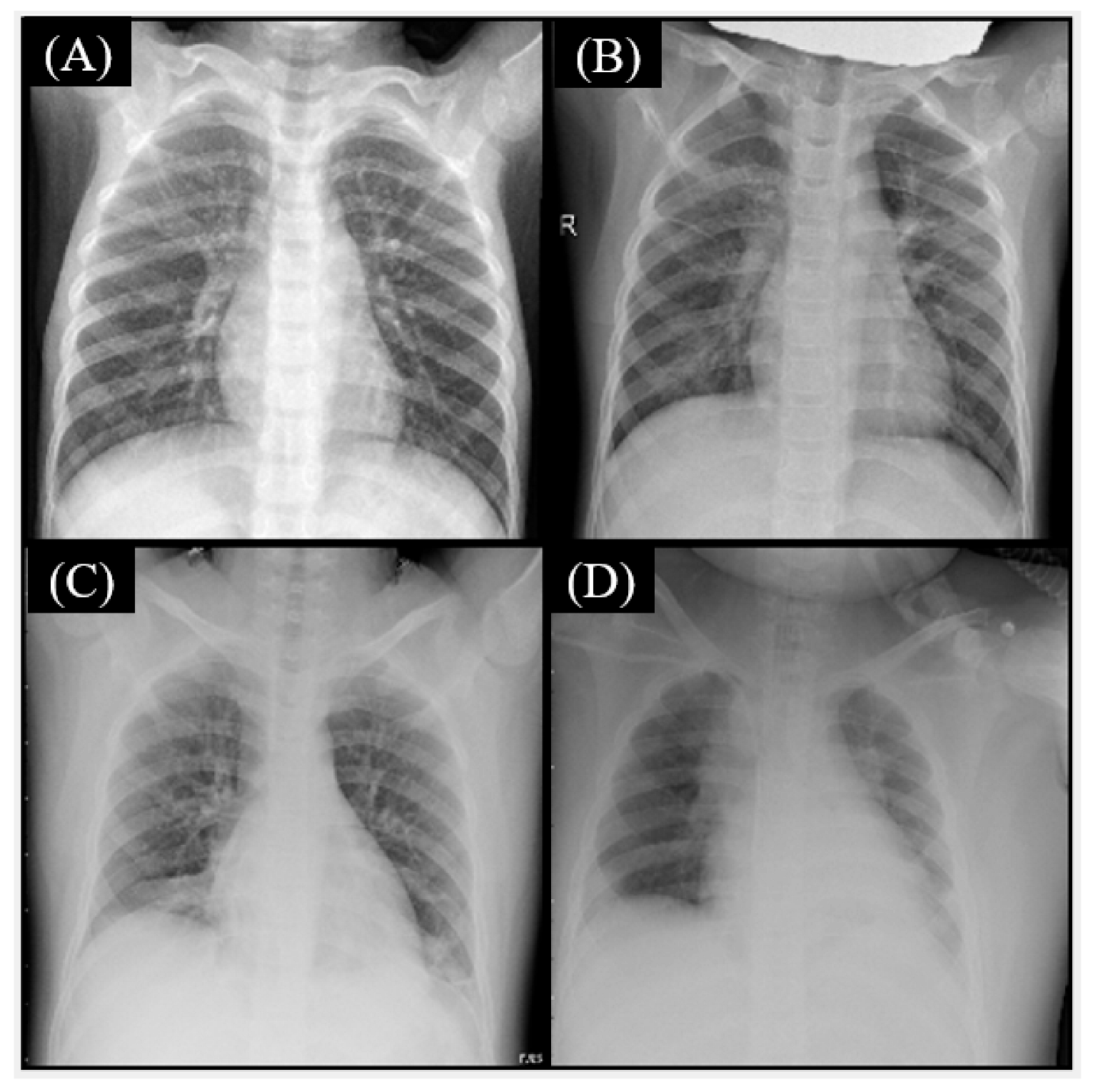

3.2. The Datasets

3.3. Image Processing

- The first step is to load the image into memory and convert it to an appropriate format for analysis. This includes resizing the image to a 300 × 300 matrix and normalizing the pixel values to the range [0, 1].

- Gray chest X-ray images can sometimes lack contrast or sharpness, making it difficult for a CNN to identify features. The input chest X-ray images were therefore converted to three-channel RGB (pseudo-color) images. This step was performed to enhance the contrast and sharpness of the images, enabling the CNN models to better identify relevant features.
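The two preprocessing steps above can be illustrated with a short NumPy sketch. Several details are assumptions rather than the authors' procedure: the hypothetical `preprocess` helper uses nearest-neighbour resizing, min-max scaling to [0, 1], and plain channel replication to obtain three channels (a colormap-based pseudo-color mapping would be an alternative). The input is a random array standing in for a radiograph.

```python
import numpy as np


def preprocess(gray, size=300):
    """Resize a 2-D grayscale image to size x size, scale it to [0, 1],
    and replicate it into three channels (hypothetical helper)."""
    gray = np.asarray(gray, dtype=np.float32)
    # Nearest-neighbour resize: pick one source row/column per target pixel.
    rows = (np.arange(size) * gray.shape[0] / size).astype(int)
    cols = (np.arange(size) * gray.shape[1] / size).astype(int)
    resized = gray[np.ix_(rows, cols)]
    # Min-max normalization; a constant image maps to all zeros.
    lo, hi = resized.min(), resized.max()
    scaled = (resized - lo) / (hi - lo) if hi > lo else np.zeros_like(resized)
    # Stack the same plane three times to get an (H, W, 3) array.
    return np.repeat(scaled[..., None], 3, axis=2)


# Synthetic 8-bit "X-ray" with an arbitrary, non-square size.
xray = np.random.default_rng(0).integers(0, 256, size=(1024, 896))
img = preprocess(xray)
print(img.shape, img.dtype)
```

In practice an image library's interpolation (bilinear or bicubic) would usually replace the nearest-neighbour indexing shown here.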

3.4. Transferred Learning for Convolutional Neural Network

- Thirty epochs: The number of epochs determines how many times the entire training dataset passes through the training process. The choice of 30 epochs might have been based on previous experiments or research, indicating that this number of epochs provides a good balance between training time and model performance (i.e., avoiding underfitting or overfitting).

- Batch size of five: The batch size is the number of samples used for each weight update during training. A smaller batch size, such as five, can result in faster convergence of the model and potentially better generalization to new data, as it introduces some noise during training. However, it might require more computation time per epoch than larger batch sizes, because more weight updates are performed. The choice of a batch size of five could be based on prior experience, computational constraints, or the specific dataset used in this study.

- Learning rate of 0.001: The learning rate determines the step size taken during optimization. A learning rate of 0.001 is common in many deep learning applications, as it often balances convergence speed and stability. This learning rate might have been chosen based on prior research or empirical results, suggesting that it works well for this study’s specific problem and model architecture.
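To make the interaction of these three settings concrete, the following sketch counts the weight updates they imply. The `TrainConfig` class and its method names are hypothetical, and the sample count of 4208 assumes the CNNs are trained on the same 80% training split used for feature fusion.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters listed in the text (class name is illustrative)."""
    epochs: int = 30
    batch_size: int = 5
    learning_rate: float = 0.001

    def updates_per_epoch(self, n_samples: int) -> int:
        # One weight update per mini-batch; the last batch may be partial.
        return math.ceil(n_samples / self.batch_size)

    def total_updates(self, n_samples: int) -> int:
        return self.epochs * self.updates_per_epoch(n_samples)


cfg = TrainConfig()
n_train = 4208  # assumption: size of the 80% training split

print(cfg.updates_per_epoch(n_train))  # 842
print(cfg.total_updates(n_train))      # 25260

# For comparison, a batch size of 32 would need far fewer updates per epoch.
print(TrainConfig(batch_size=32).updates_per_epoch(n_train))  # 132
```

The comparison illustrates the trade-off described above: a batch size of five performs roughly six times as many updates per epoch as a batch size of 32.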

3.5. Performance Index for Classification

4. Results

5. Discussion

- Complementary information: Different CNN architectures have varied strengths in recognizing specific features or patterns in the data. By merging the features extracted by multiple CNNs, Fusion CNN can access a richer and more comprehensive set of features, which aids in achieving better classification results.

- Ensemble effect: Combining the outputs of multiple models can help reduce the risk of overfitting the training data and improve the generalization to unseen data. Fusion CNN effectively works as an ensemble method, where the combined predictions of several models lead to a more accurate and robust final prediction.

- Error correction: If a single CNN model makes a mistake in classification, the other models’ correct predictions can compensate for the error when the features are merged in Fusion CNN. This error correction mechanism can lead to improved performance.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Subhalakshmi, R.T.; Appavu Alias Balamurugan, S.; Sasikala, S. Deep learning-based fusion model for COVID-19 diagnosis and classification using computed tomography images. Concurrent Eng. Res. Appl. 2022, 30, 116–127.

- Bhattacharjya, U.; Sarma, K.K.; Medhi, J.P.; Choudhury, B.K.; Barman, G. Automated diagnosis of COVID-19 using radiological modalities and artificial intelligence functionalities: A retrospective study based on chest HRCT database. Biomed. Signal Process. Control 2023, 80, 104297.

- Bahabri, I.; Abdulaal, A.; Alanazi, T.; Alenazy, S.; Alrumih, Y.; Alqahtani, R.; Al Johani, S.; Bosaeed, M.; Al-Dorzi, H.M. Characteristics, Management, and Outcomes of Community-Acquired Pneumonia due to Respiratory Syncytial Virus: A Retrospective Study. Pulm. Med. 2023, 2023, 4310418.

- Khan, A.I.; Shah, J.L.; Bhat, M.M. CoroNet: A deep neural network for detection and diagnosis of COVID-19 from chest x-ray images. Comput. Methods Programs Biomed. 2020, 196, 105581.

- Rahman, T.; Khandakar, A.; Qiblawey, Y.; Tahir, A.; Kiranyaz, S.; Kashem, S.B.A.; Islam, M.T.; Al Maadeed, S.; Zughaier, S.M.; Khan, M.S.; et al. Exploring the effect of image enhancement techniques on COVID-19 detection using chest X-ray images. Comput. Biol. Med. 2021, 132, 104319.

- Wang, L.; Lin, Z.Q.; Wong, A. COVID-Net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest X-ray images. Sci. Rep. 2020, 10, 19549.

- Ozsoz, M.; Ibrahim, A.U.; Serte, S.; Al-Turjman, F.; Yakoi, P.S. Viral and bacterial pneumonia detection using artificial intelligence in the era of COVID-19. Res. Sq. 2021.

- Nour, M.; Cömert, Z.; Polat, K. A novel medical diagnosis model for COVID-19 infection detection based on deep features and Bayesian optimization. Appl. Soft Comput. 2020, 97, 106580.

- Wang, D.; Mo, J.; Zhou, G.; Xu, L.; Liu, Y. An efficient mixture of deep and machine learning models for COVID-19 diagnosis in chest X-ray images. PLoS ONE 2020, 15, e0242535.

- Hamza, A.; Khan, M.A.; Wang, S.-H.; Alhaisoni, M.; Alharbi, M.; Hussein, H.S.; Alshazly, H.; Kim, Y.J.; Cha, J. COVID-19 classification using chest X-ray images based on fusion-assisted deep Bayesian optimization and Grad-CAM visualization. Front. Public Health 2022, 10, 1046296.

- Narasimha Reddy, S.; Kotte, K.; Santosh, N.C.; Sridharan, A.; Uthayakumar, J. Multi-modal fusion of deep transfer learning-based COVID-19 diagnosis and classification using chest X-ray images. Multimed. Tools Appl. 2023, 82, 12653–12677.

- Malik, H.; Anees, T.; Din, M.; Naeem, A. CDC_Net: Multi-classification convolutional neural network model for detection of COVID-19, pneumothorax, pneumonia, lung cancer, and tuberculosis using chest X-rays. Multimed. Tools Appl. 2022, 1–26.

- Dey, N.; Zhang, Y.D.; Rajinikanth, V.; Pugalenthi, R.; Raja, N.S.M. Customized VGG19 architecture for pneumonia detection in chest X-rays. Pattern Recognit. Lett. 2021, 143, 67–74.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Khan, M.A.; Azhar, M.; Ibrar, K.; Alqahtani, A.; Alsubai, S.; Binbusayyis, A.; Kim, Y.J.; Chang, B. COVID-19 Classification from Chest X-ray Images: A Framework of Deep Explainable Artificial Intelligence. Comput. Intell. Neurosci. 2022, 2022, 4254631.

- Karar, M.E.; Hemdan, E.E.; Shouman, M.A. Cascaded deep learning classifiers for computer-aided diagnosis of COVID-19 and pneumonia diseases in X-ray scans. Complex Intell. Syst. 2021, 7, 235–247.

- Constantinou, M.; Exarchos, T.; Vrahatis, A.G.; Vlamos, P. COVID-19 Classification on Chest X-ray Images Using Deep Learning Methods. Int. J. Environ. Res. Public Health 2023, 20, 2035.

- Chouat, I.; Echtioui, A.; Khemakhem, R.; Zouch, W.; Ghorbel, M.; Hamida, A.B. COVID-19 detection in CT and CXR images using deep learning models. Biogerontology 2022, 23, 65–84.

- Attallah, O. RADIC: A tool for diagnosing COVID-19 from chest CT and X-ray scans using deep learning and quad-radiomics. Chemom. Intell. Lab. Syst. 2023, 233, 104750.

- Attallah, O.; Samir, A. A wavelet-based deep learning pipeline for efficient COVID-19 diagnosis via CT slices. Appl. Soft Comput. 2022, 128, 109401.

- Attallah, O. A computer-aided diagnostic framework for coronavirus diagnosis using texture-based radiomics images. Digit. Health 2022, 8, 20552076221092543.

- Attallah, O. Deep learning-based CAD system for COVID-19 diagnosis via spectral-temporal images. In Proceedings of the 12th International Conference on Information Communication and Management (ICICM '22), London, UK, 13–15 July 2022; pp. 25–33.

- Shankar, K.; Perumal, E. A novel hand-crafted with deep learning features based fusion model for COVID-19 diagnosis and classification using chest X-ray images. Complex Intell. Syst. 2021, 7, 1277–1293.

- Ragab, D.A.; Attallah, O. FUSI-CAD: Coronavirus (COVID-19) diagnosis based on the fusion of CNNs and handcrafted features. PeerJ Comput. Sci. 2020, 6, e306.

- Kermany, D.S.; Goldbaum, M.; Cai, W.; Lewis, M.A.; Xia, H.; Zhang, K. Identifying Medical Diagnoses and Treatable Diseases by Image-Based Deep Learning. Cell 2018, 172, 1122–1131.e9.

| CNN | Recall Rate (COVID-19) | Recall Rate (Normal) | Recall Rate (Bacterial) | Precision (COVID-19) | Precision (Normal) | Precision (Bacterial) | Accuracy | Kappa |
|---|---|---|---|---|---|---|---|---|
| EfficientNetb0 | 0.995 | 0.983 | 0.988 | 0.990 | 0.988 | 0.983 | 0.988 | 0.983 |
| MobileNetv2 | 0.987 | 0.919 | 0.964 | 0.980 | 0.975 | 0.918 | 0.956 | 0.934 |
| Inceptionv3 | 0.984 | 0.964 | 0.973 | 0.990 | 0.970 | 0.961 | 0.973 | 0.960 |
| ResNet50 | 0.881 | 0.905 | 0.972 | 0.980 | 0.978 | 0.832 | 0.920 | 0.880 |
| ResNet101 | 0.984 | 0.966 | 0.976 | 0.988 | 0.978 | 0.959 | 0.975 | 0.962 |
| Fusion CNN | 0.997 | 0.994 | 0.992 | 0.998 | 0.991 | 0.994 | 0.994 | 0.991 |

| Model | Class | COVID-19 | Normal | Bacterial |
|---|---|---|---|---|
| Fusion CNN | COVID-19 | 1653 | 3 | 2 |
| Fusion CNN | Normal | 4 | 1784 | 14 |
| Fusion CNN | Bacterial | 1 | 9 | 1790 |
| EfficientNetb0 | COVID-19 | 1649 | 4 | 5 |
| EfficientNetb0 | Normal | 5 | 1771 | 26 |
| EfficientNetb0 | Bacterial | 4 | 17 | 1779 |
| MobileNetv2 | COVID-19 | 1636 | 3 | 19 |
| MobileNetv2 | Normal | 10 | 1656 | 136 |
| MobileNetv2 | Bacterial | 25 | 40 | 1735 |
| Inceptionv3 | COVID-19 | 1632 | 15 | 11 |
| Inceptionv3 | Normal | 5 | 1737 | 60 |
| Inceptionv3 | Bacterial | 11 | 38 | 1751 |
| ResNet50 | COVID-19 | 1460 | 7 | 191 |
| ResNet50 | Normal | 8 | 1631 | 163 |
| ResNet50 | Bacterial | 21 | 29 | 1750 |
| ResNet101 | COVID-19 | 1631 | 7 | 20 |
| ResNet101 | Normal | 7 | 1740 | 55 |
| ResNet101 | Bacterial | 12 | 32 | 1756 |

| Group | Recall Rate | False Positive Rate | Precision | ROC Area |
|---|---|---|---|---|
| COVID-19 | 0.997 | 0.003 | 0.997 | 0.998 |
| Normal | 0.994 | 0.007 | 0.991 | 0.994 |
| Bacterial | 0.990 | 0.009 | 0.993 | 0.995 |

| Model | Type | Feature Size |
|---|---|---|
| Fusion CNN | Transferred Learning + Classifier Base | 15 × 4208 |
| EfficientNetb0 | Transferred Learning | 3 × 4208 |
| MobileNetv2 | Transferred Learning | 3 × 4208 |
| Inceptionv3 | Transferred Learning | 3 × 4208 |
| ResNet50 | Transferred Learning | 3 × 4208 |
| ResNet101 | Transferred Learning | 3 × 4208 |

| Author | Year | Method | Dataset | Accuracy | Limitation |
|---|---|---|---|---|---|
| Khan et al. [4] | 2020 | CoroNet (Deep Neural Network) | Chest X-ray images | 0.896 | Limited dataset |
| Karar et al. [21] | 2021 | Cascaded Deep Learning Classifiers | Chest X-ray images | 0.999 | Complexity of the model |
| Constantinou et al. [22] | 2023 | Pre-trained CNNs with Transfer Learning | Chest X-ray images | 0.960 | Limited generalization capability |
| Chouat et al. [23] | 2022 | CT and CXR images with Deep Learning Models | CT and CXR images | 0.905 | Limited to specific CNN models |
| Attallah [24] | 2023 | RADIC (Deep Learning and Quad-Radiomics) | CT and X-ray images | 0.994 | Complexity and computational cost |
| Attallah & Samir [25] | 2022 | Wavelet-based Deep Learning Pipeline | CT images | 0.997 | Limited to CT slices |
| Attallah [26] | 2022 | Texture-based Radiomics Images for COVID-19 Diagnosis | CT images | 0.997 | Limited to texture-based features |
| Shankar & Perumal [27] | 2021 | FM-HCF-DLF Model (Hand-crafted and Deep Learning Features Fusion) | Chest X-ray images | 0.941 | May not work well on larger datasets |
| Ragab & Attallah [29] | 2020 | FUSI-CAD (Fusion of CNNs and Hand-crafted Features) | Chest X-ray images | 0.990 | Complexity and computational cost |
| The Presented Method | 2023 | Fusion CNN Method | Chest X-ray images | 0.974 | Without Combined CT Images |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, K.-H.; Lu, N.-H.; Okamoto, T.; Huang, Y.-H.; Liu, K.-Y.; Matsushima, A.; Chang, C.-C.; Chen, T.-B. Fusion-Extracted Features by Deep Networks for Improved COVID-19 Classification with Chest X-ray Radiography. Healthcare 2023, 11, 1367. https://doi.org/10.3390/healthcare11101367