Health Professionals’ Experience Using an Azure Voice-Bot to Examine Cognitive Impairment (WAY2AGE)

Abstract

1. Introduction

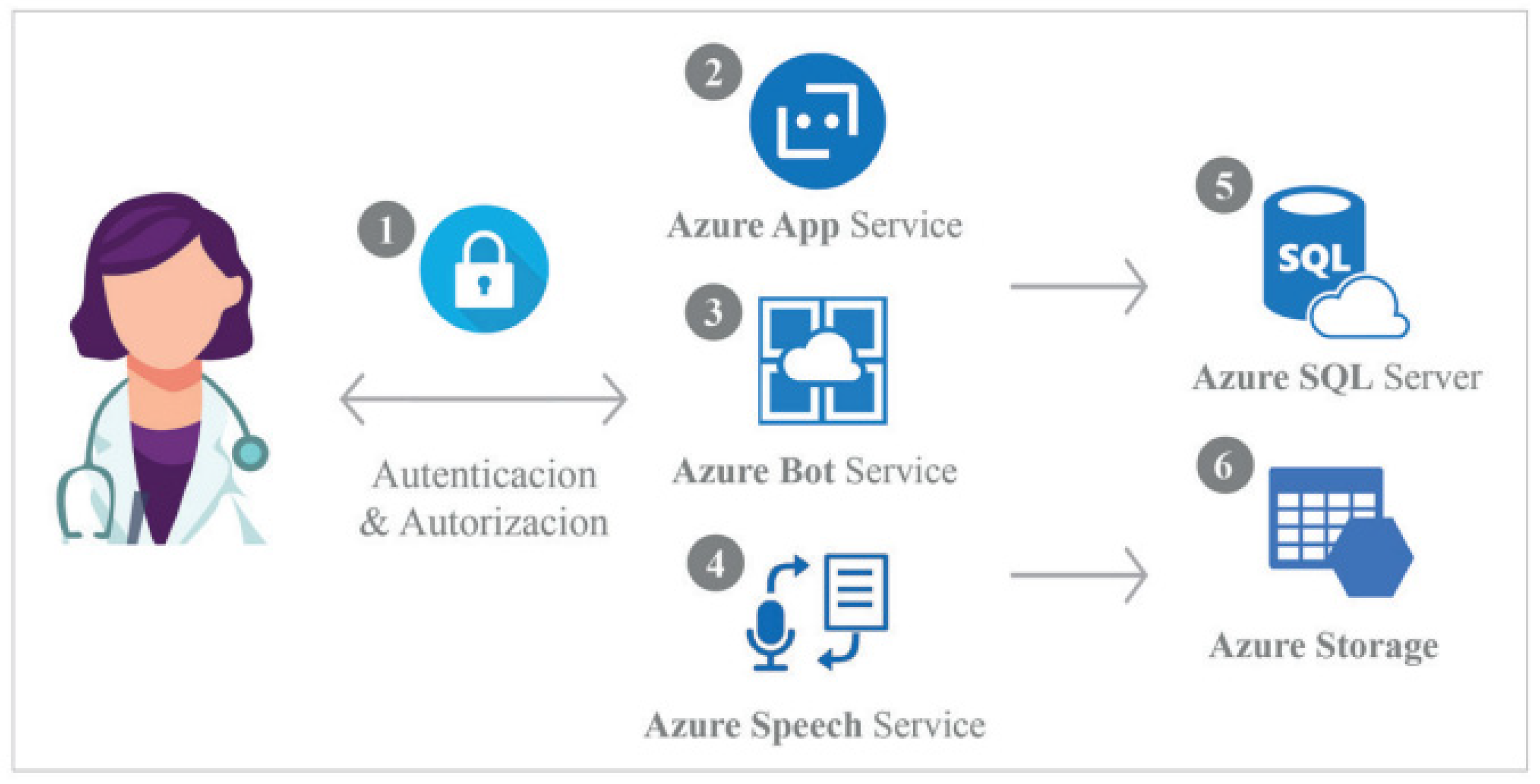

2. WAY2AGE Proposal

- The user (a healthcare professional) opens the WAY2AGE application and signs in. Credentials are stored in a database for security reasons, and the application enforces role-based authorization.

- Once healthcare professionals are logged in, they can create new sessions or consult results and recordings.

- Healthcare professionals access the Bot Service page where the Bot Service interacts with older adults under assessment via text and voice.

- The Speech Service interprets the older adult’s words, transcribes them into text, and records the session as an MP3 file.

- The text results are stored in the database together with the session code, the date, and each answer.

- MP3 files are uploaded to the storage space linked to the database record.
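The stored record described in the steps above (session code, date, each answer, and a linked MP3 file) could be modeled roughly as follows. This is a minimal sketch, not the WAY2AGE schema: the class `AssessmentSession` and all of its member names are hypothetical, and the recording is represented only as a location string.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical data model (illustrative names, not taken from WAY2AGE).
public sealed class AssessmentSession
{
    private readonly List<string> _answers = new List<string>();

    public string Code { get; }                       // session code stored with the results
    public DateTime Date { get; }                     // date of the assessment
    public IReadOnlyList<string> Answers => _answers; // one transcribed answer per question
    public string RecordingUrl { get; private set; } = string.Empty; // link to the uploaded MP3
    public bool HasRecording => RecordingUrl.Length > 0;

    public AssessmentSession(string code, DateTime date)
    {
        if (string.IsNullOrWhiteSpace(code))
            throw new ArgumentException("A session code is required.", nameof(code));
        Code = code;
        Date = date;
    }

    // Stores the text produced by the speech-to-text step for one question.
    public void AddAnswer(string transcribedText) =>
        _answers.Add(transcribedText ?? string.Empty);

    // Links the uploaded MP3 file to this database record.
    public void AttachRecording(string mp3Url)
    {
        if (string.IsNullOrWhiteSpace(mp3Url))
            throw new ArgumentException("A recording location is required.", nameof(mp3Url));
        RecordingUrl = mp3Url;
    }
}
```

Keeping the answers and the recording link on the same object mirrors the description that the MP3 file is linked to the database record.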

3. WAY2AGE Question Definitions

- [InputHints.ExpectingInput]

- …(welcomeText,"es-ES-ElviraNeural","es-ES"),InputHints.ExpectingInput), cancellationToken);

- … Prompt = MessageFactory.Text("¿Cómo se siente hoy?", null, InputHints.ExpectingInput)

- }, cancellationToken);}

- if (!resultado.Result && promptContext.Recognized.Succeeded && !promptContext.Recognized.Value.ToLower().Contains("ripete la domanda")) {

- promptContext.Options.Prompt.Speak = "<speak version=\"1.0\"></speak>";

- AuxText = AuxText + promptContext.Recognized.Value;

- promptContext.Recognized.Value = AuxText;

- private static async Task<DialogTurnResult> Step5Async(WaterfallStepContext stepContext, CancellationToken cancellationToken) {

- stepContext.Values["Step4"] = (string)stepContext.Result;

- return await stepContext.PromptAsync(nameof(TextPrompt),

- new PromptOptions

- {

- Prompt = MessageFactory.Text(" ¿En qué lugar estamos y cuántos años tiene? ", null, InputHints.ExpectingInput)

- }, cancellationToken);

- }

- StartTime = DateTime.Now;

- stepTimer = true;

- if (stepTimer) {

- var minute = DateTime.Now - StartTime;

- if (minute > TimeSpan.FromMinutes(1)) {

- stepTimer = false;

- promptContext.Recognized.Value = AuxText;

- return Task.FromResult(true);

- }
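The timer fragments above appear to implement a one-minute question: recognized utterances are appended to `AuxText` until more than one minute has passed since `StartTime`, at which point the accumulated text is accepted as the answer. A minimal, framework-free sketch of that logic is shown below. It does not use the Bot Framework types; `TimedAnswerCollector` and its members are hypothetical names, and the injectable clock and the space inserted between utterances are choices made here for illustration rather than details from the article.

```csharp
using System;

// Hypothetical helper (not from the article): collects partial answers for a
// timed question and reports when the time limit has been exceeded.
public sealed class TimedAnswerCollector
{
    private readonly Func<DateTime> _now;  // injectable clock so the logic can be tested
    private readonly TimeSpan _limit;
    private DateTime _startTime;
    private bool _stepTimer;
    private string _auxText = string.Empty;

    public TimedAnswerCollector(Func<DateTime> now, TimeSpan limit)
    {
        _now = now ?? throw new ArgumentNullException(nameof(now));
        _limit = limit;
    }

    // Accumulated text of all utterances received so far.
    public string Text => _auxText;

    // Mirrors "StartTime = DateTime.Now; stepTimer = true;" in the fragments.
    public void Start()
    {
        _startTime = _now();
        _stepTimer = true;
        _auxText = string.Empty;
    }

    // Appends one recognized utterance. Returns true once the limit has been
    // exceeded, meaning the accumulated text should be taken as the final answer.
    public bool Add(string recognized)
    {
        _auxText = _auxText.Length == 0 ? recognized : _auxText + " " + recognized;
        if (_stepTimer && _now() - _startTime > _limit)
        {
            _stepTimer = false;
            return true;
        }
        return false;
    }
}
```

In a prompt validator, a `true` result would correspond to the branch that copies `AuxText` into `promptContext.Recognized.Value` and returns `Task.FromResult(true)`; a `false` result would keep the prompt open for further speech.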

4. Health Professionals’ Experience

- ¿Cómo ha sido la experiencia? (How was your experience?) Answer: 1 (Very bad experience), 2 (Bad experience), 3 (Neutral), 4 (Good experience) and 5 (Very good experience).

- ¿Es WAY2AGE fácil de usar? (Is WAY2AGE easy to use?) Answer: 1 (Very complicated), 2 (Complicated), 3 (Neutral), 4 (Easy) and 5 (Very easy).

- ¿Cree que WAY2AGE facilita la evaluación cognitiva? (Do you think WAY2AGE facilitates cognitive assessment?) Answer: 1 (Strongly disagree), 2 (Disagree), 3 (Neutral), 4 (Agree) and 5 (Strongly agree).

- ¿Utilizaría esta herramienta en su trabajo? (Would you use this tool in your work?) Answer: 1 (Strongly disagree), 2 (Disagree), 3 (Neutral), 4 (Agree) and 5 (Strongly agree).
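Responses on the five-point scales above can be summarized per question with a mean and a frequency count. The sketch below is one way to do that; `LikertSummary` and its methods are hypothetical names, and nothing here reproduces the analysis or the data reported in the article.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper for summarizing answers on a 1..5 scale.
public static class LikertSummary
{
    // Rejects empty input and values outside the 1..5 range of the questionnaire.
    private static void Validate(IReadOnlyList<int> responses)
    {
        if (responses == null || responses.Count == 0)
            throw new ArgumentException("At least one response is required.", nameof(responses));
        if (responses.Any(r => r < 1 || r > 5))
            throw new ArgumentOutOfRangeException(nameof(responses), "Responses must be between 1 and 5.");
    }

    // Arithmetic mean of the responses to one question.
    public static double Mean(IReadOnlyList<int> responses)
    {
        Validate(responses);
        return responses.Average();
    }

    // counts[0] holds the number of 1s, ..., counts[4] the number of 5s.
    public static int[] Counts(IReadOnlyList<int> responses)
    {
        Validate(responses);
        var counts = new int[5];
        foreach (var r in responses) counts[r - 1]++;
        return counts;
    }
}
```

Reporting the counts alongside the mean keeps the distribution visible, which matters for ordinal scales where a mean alone can hide disagreement.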

5. Future Lines of Research: Second Phase

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Measure | Action Required |
|---|---|
| Orientation for time | Year, season, month, date and day |
| Orientation for place | State, country, city, building and floor |
| Registration | Repetition of three words |
| Attention/Calculation | Subtraction of a number from a given digit |
| Recall | To recall the three words in the repetition phase |
| Naming | To name two common objects |
| Repetition | Repetition of a sentence |
| Three-stage verbal command | To follow instructions with a piece of paper |
| Written command | Performing an action by understanding a written sentence |
| Writing | To write a spontaneous sentence |
| Construction | To draw interlocking pentagons |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Moret-Tatay, C.; Radawski, H.M.; Guariglia, C. Health Professionals’ Experience Using an Azure Voice-Bot to Examine Cognitive Impairment (WAY2AGE). Healthcare 2022, 10, 783. https://doi.org/10.3390/healthcare10050783
