COVID-19 Vaccination-Related Sentiments Analysis: A Case Study Using Worldwide Twitter Dataset

Abstract

1. Introduction

- Q1: What are people’s sentiments toward COVID-19 vaccination on the social media platform Twitter?

- Q2: How effective is the proposed approach for tweets’ sentiment classification?

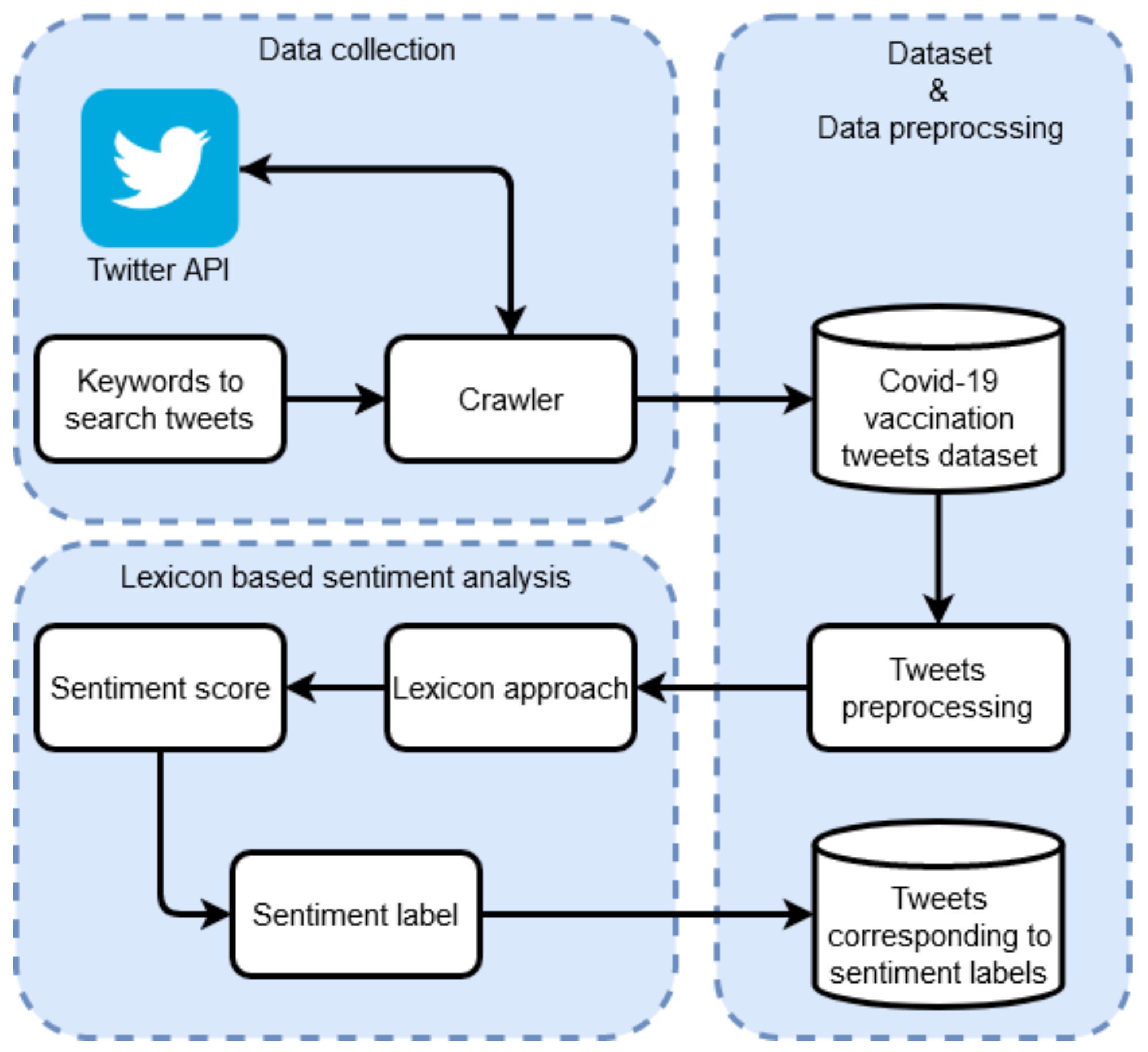

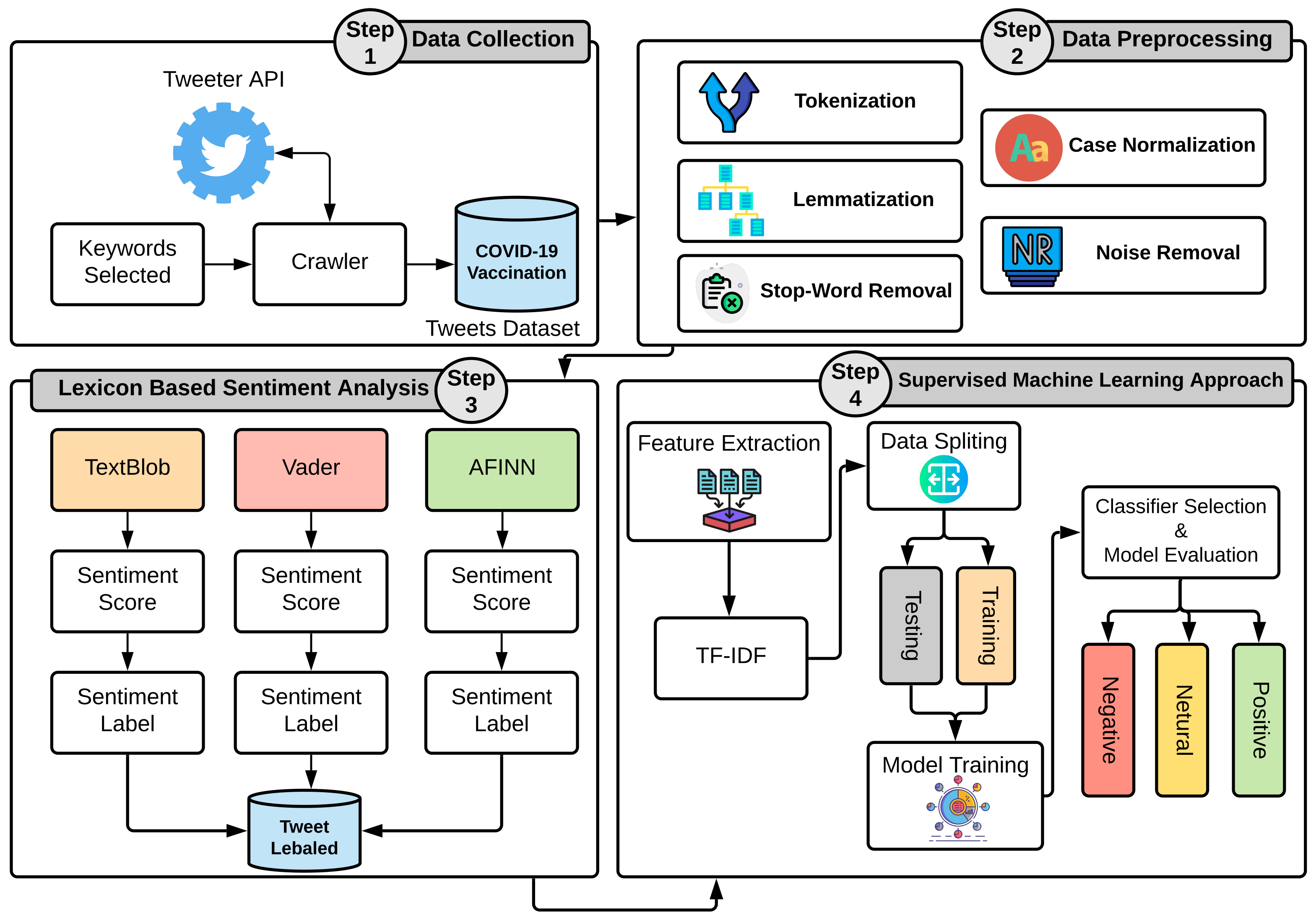

- This study proposes a methodology to perform a systematic analysis of people’s perceptions and perspectives towards COVID-19 global vaccination. For this purpose, a worldwide dataset has been created by collecting the tweets about people’s sentiments regarding COVID-19 vaccination.

- TextBlob, VADER, and AFINN lexicon-based approaches were used to classify the polarity of each tweet as positive, negative, or neutral. Several supervised machine learning models were then applied to the datasets annotated by these approaches to determine the most accurate model.

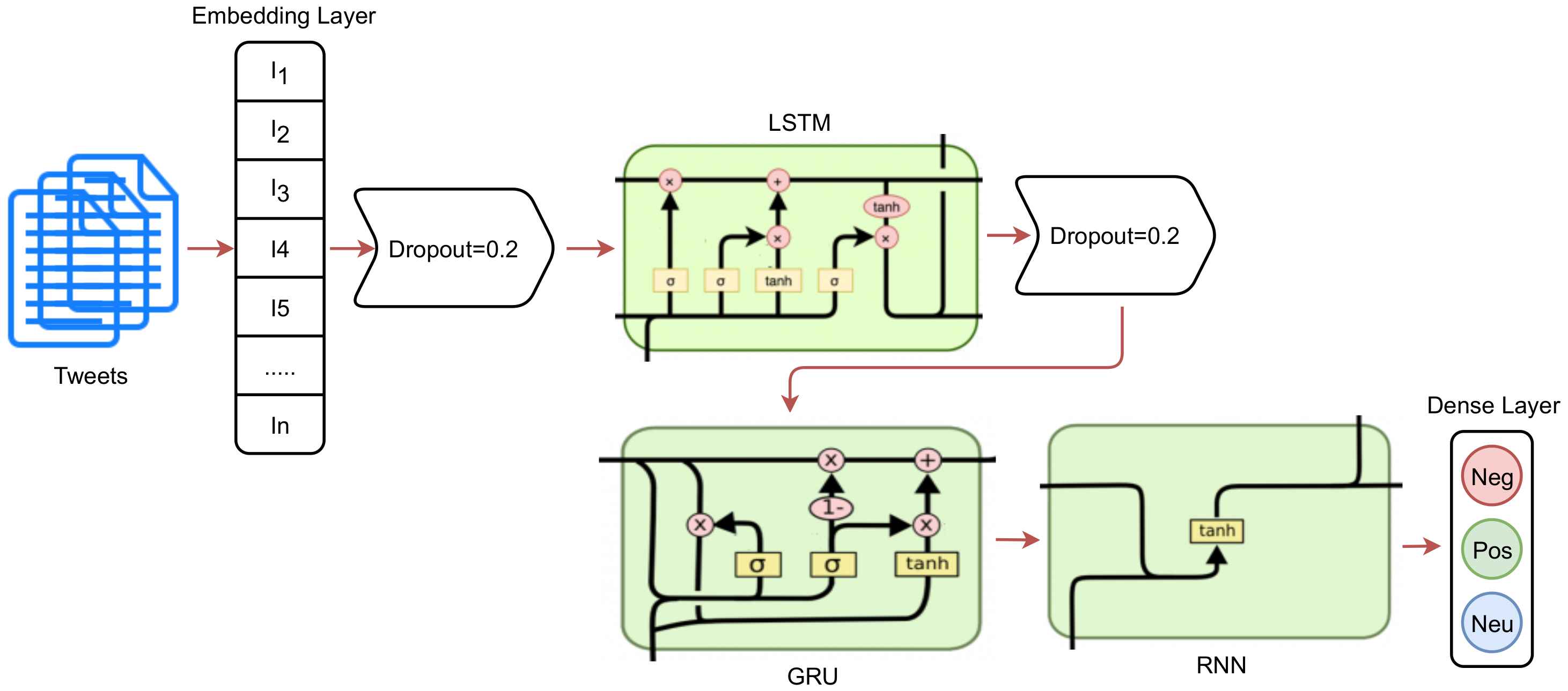

- To obtain higher accuracy for sentiment classification, an ensemble model, LSTM-GRNN, is proposed that combines long short-term memory (LSTM), gated recurrent unit (GRU), and recurrent neural network (RNN) layers. Experimental results are validated by comparing the performance with state-of-the-art approaches.

2. Related Work

3. Materials and Methods

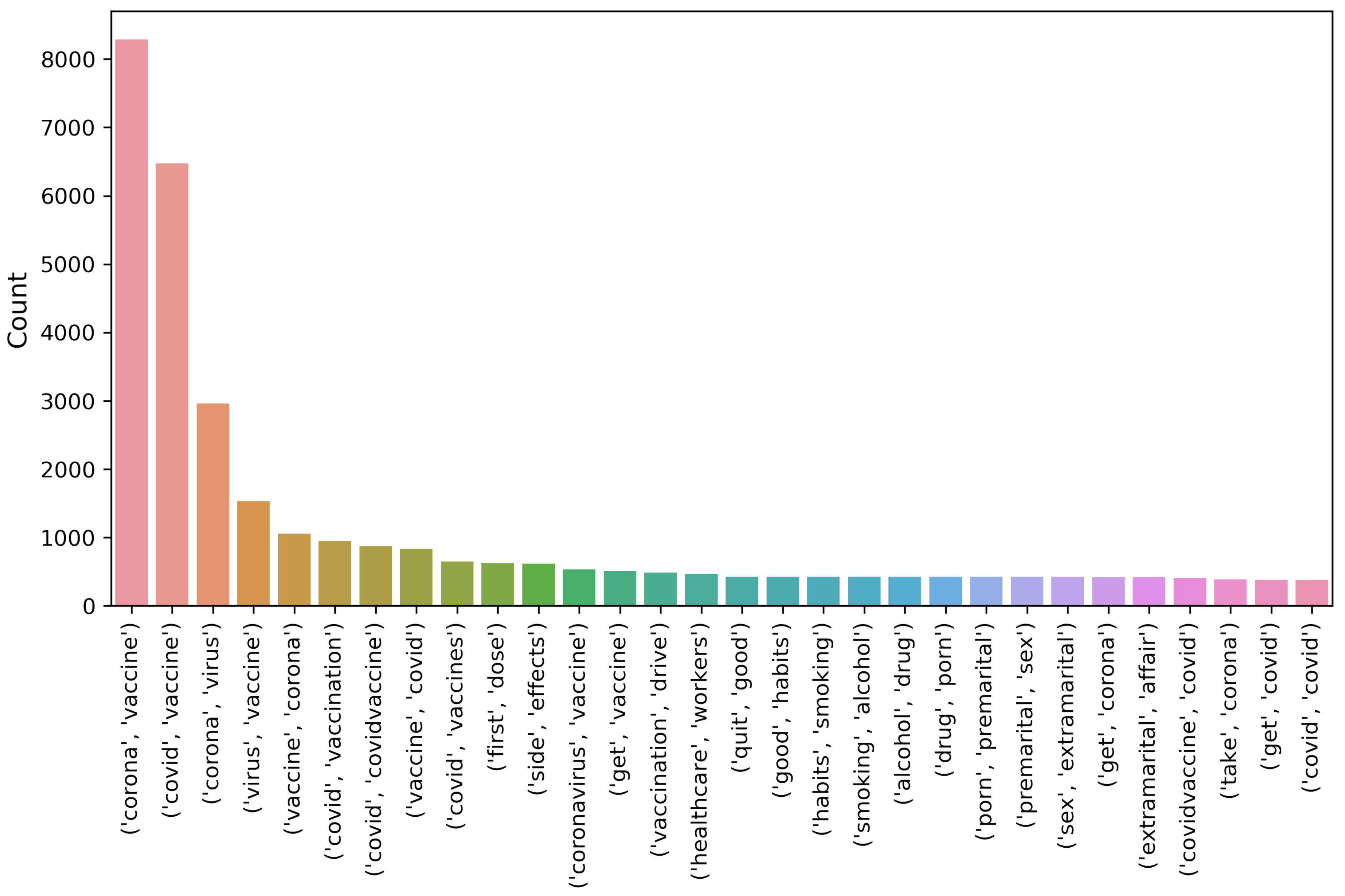

3.1. Dataset Description

3.2. Data Preprocessing

3.2.1. Removal of Username, Hashtags, and Hyperlinks

3.2.2. Removal of Numbers, Punctuation and Stop Words

3.2.3. Case Conversion, Stemming, and Lemmatization
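The preprocessing steps named in Sections 3.2.1 to 3.2.3 can be sketched as below. This is a minimal illustration, not the authors' pipeline: the regular expressions, the tiny `STOP_WORDS` set, and the function name `clean_tweet` are assumptions made for the example. Stemming and lemmatization are omitted because they need a third-party library such as NLTK.

```python
import re

# Hypothetical, deliberately small stop-word list; a real pipeline would use a
# full list (for example the one shipped with NLTK).
STOP_WORDS = {"a", "an", "and", "are", "as", "during", "is", "of",
              "should", "the", "they", "to"}

URL_RE = re.compile(r"https?://\S+")   # hyperlinks
MENTION_RE = re.compile(r"@\w+")       # usernames
HASHTAG_RE = re.compile(r"#\w+")       # hashtags
NUMBER_RE = re.compile(r"\d+")         # numbers
PUNCT_RE = re.compile(r"[^\w\s]")      # punctuation, including Unicode marks


def clean_tweet(text):
    """Remove links, usernames, hashtags, numbers, punctuation and stop words."""
    for pattern in (URL_RE, MENTION_RE, HASHTAG_RE, NUMBER_RE, PUNCT_RE):
        text = pattern.sub(" ", text)
    # Case conversion, then stop-word filtering on whitespace tokens.
    tokens = [tok for tok in text.lower().split() if tok not in STOP_WORDS]
    return " ".join(tokens)


raw = ("As expected @WHO celebrates return of #USA to the organization "
       "during the surge of the covid #pandemic #COVID19 https://t.co/TbVcBF3Nxr")
print(clean_tweet(raw))
```

Hashtags are removed before numbers so that a tag such as `#COVID19` is dropped as a whole rather than leaving a fragment behind.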

3.3. Lexicon-Based Methods

3.3.1. TextBlob

Algorithm 1. TextBlob algorithm for sentiment analysis.

Input: Worldwide COVID-19 vaccination tweets

Result: Polarity Score > 0 ⟶ Positive; Polarity Score = 0 ⟶ Neutral; Polarity Score < 0 ⟶ Negative

- for each tweet in tweets:
  - compute Polarity Score = TextBlob(tweet)
  - if Polarity Score > 0 then Tweet Sentiment = Positive
  - else if Polarity Score = 0 then Tweet Sentiment = Neutral
  - else Tweet Sentiment = Negative
- end loop
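The labelling rule of Algorithm 1 can be sketched in Python as follows. The function names are hypothetical; `TextBlob(text).sentiment.polarity` is the TextBlob attribute that returns a polarity score in [-1, 1], and the `textblob` package is imported lazily so that the thresholding function can be used without it.

```python
def label_from_polarity(score):
    """Map a polarity score to a sentiment label using the sign of the score."""
    if score > 0:
        return "positive"
    if score == 0:
        return "neutral"
    return "negative"


def textblob_label(tweet):
    """Label one tweet with TextBlob (requires the third-party textblob package)."""
    from textblob import TextBlob  # imported here so the rule above has no dependency
    return label_from_polarity(TextBlob(tweet).sentiment.polarity)
```

Keeping the threshold logic separate from the library call makes it easy to reuse the same rule for any lexicon whose neutral point is zero.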

3.3.2. Valence Aware Dictionary for Sentiment Reasoning

Algorithm 2. VADER algorithm for sentiment analysis.

Input: Worldwide COVID-19 vaccination tweets

Result: Compound Score ≥ 0.05 ⟶ Positive; −0.05 < Compound Score < 0.05 ⟶ Neutral; Compound Score ≤ −0.05 ⟶ Negative

- for each tweet in tweets:
  - compute Compound Score = VADER(tweet)
  - if Compound Score ≥ 0.05 then Tweet Sentiment = Positive
  - else if −0.05 < Compound Score < 0.05 then Tweet Sentiment = Neutral
  - else if Compound Score ≤ −0.05 then Tweet Sentiment = Negative
- end loop
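A short sketch of the VADER rule in Algorithm 2 is given below. The function names are illustrative. `SentimentIntensityAnalyzer.polarity_scores` from the `vaderSentiment` package returns a dictionary whose `"compound"` entry is the normalized score; the package is imported lazily so the threshold function runs on its own.

```python
def label_from_compound(compound):
    """Apply the +/-0.05 compound-score thresholds."""
    if compound >= 0.05:
        return "positive"
    if compound <= -0.05:
        return "negative"
    return "neutral"


def vader_label(tweet, analyzer=None):
    """Label one tweet with VADER (requires the third-party vaderSentiment package)."""
    if analyzer is None:
        from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer
        analyzer = SentimentIntensityAnalyzer()
    return label_from_compound(analyzer.polarity_scores(tweet)["compound"])
```

When labelling many tweets, creating one analyzer and passing it in avoids reloading the lexicon on every call.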

3.3.3. AFINN
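AFINN assigns each listed word an integer valence and scores a text by summing the valences of the words it contains; the sign of the sum then gives the label, as in Algorithm 3. The sketch below illustrates that idea with a toy lexicon. The words and valences in `TOY_VALENCES` are made up for the example and are not taken from the AFINN word list.

```python
# Hypothetical mini-lexicon; illustrative values only, not real AFINN entries.
TOY_VALENCES = {"good": 3, "great": 3, "safe": 1, "bad": -3, "fear": -2}


def lexicon_score(text, valences=TOY_VALENCES):
    """Sum the valence of every known token; unknown tokens contribute 0."""
    return sum(valences.get(token, 0) for token in text.lower().split())


def label_from_score(score):
    """Positive sum -> positive, zero -> neutral, negative sum -> negative."""
    if score > 0:
        return "positive"
    if score == 0:
        return "neutral"
    return "negative"


for tweet in ("vaccine is safe and good", "vaccine dose today", "bad fear"):
    print(tweet, "->", label_from_score(lexicon_score(tweet)))
```

With the `afinn` Python package, `Afinn().score(text)` plays the role of `lexicon_score` over the full word list.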

3.4. Machine Learning Approaches Used for Experiments

3.4.1. Term Frequency-Inverse Document Frequency Features

Algorithm 3. AFINN algorithm for sentiment analysis.

Input: Worldwide COVID-19 vaccination tweets

Result: Polarity Score > 0 ⟶ Positive; Polarity Score = 0 ⟶ Neutral; Polarity Score < 0 ⟶ Negative

- for each tweet in tweets:
  - compute Polarity Score = AFINN(tweet)
  - if Polarity Score > 0 then Tweet Sentiment = Positive
  - else if Polarity Score = 0 then Tweet Sentiment = Neutral
  - else Tweet Sentiment = Negative
- end loop
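For the TF-IDF features named in Section 3.4.1, the sketch below computes the textbook weighting, tf(t, d) · log(N / df(t)), on tokenized tweets. This is an assumption about the variant: libraries such as scikit-learn apply smoothing and normalization by default, so their numbers differ. The function name and the two toy documents are illustrative.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: weight} dict per document.

    docs is a list of non-empty token lists. tf is the relative frequency of a
    term in a document; idf is log(N / df) with no smoothing.
    """
    n_docs = len(docs)
    # Document frequency: number of documents containing each term.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        weights.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


docs = [["vaccine", "safe"], ["vaccine", "dose"]]
weights = tf_idf(docs)
print(weights[0])
```

A term that occurs in every document, such as "vaccine" here, receives weight 0, which is why TF-IDF emphasizes words that distinguish one tweet from another.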

3.4.2. Decision Tree

3.4.3. Random Forest

3.4.4. Logistic Regression

3.5. Deep Learning Models for Sentiment Analysis

3.6. Architecture of Proposed LSTM-GRNN
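The layer listing given for LSTM-GRNN in the architecture table (Embedding, Dropout, LSTM, Dropout, GRU, SimpleRNN, Dense with softmax) could be assembled in Keras roughly as follows. This is a sketch under assumptions: the table does not state `return_sequences`, which is set to `True` here on the LSTM and GRU layers because stacked recurrent layers in Keras need sequence output from the layer before them. The names `LSTM_GRNN_LAYERS` and `build_lstm_grnn` are hypothetical, and TensorFlow is imported lazily.

```python
# (layer class name, positional args, keyword args), in the order of the table.
LSTM_GRNN_LAYERS = [
    ("Embedding", (5000, 200), {}),
    ("Dropout", (0.2,), {}),
    ("LSTM", (100,), {"return_sequences": True}),   # assumption, see lead-in
    ("Dropout", (0.2,), {}),
    ("GRU", (100,), {"return_sequences": True}),    # assumption, see lead-in
    ("SimpleRNN", (32,), {}),
    ("Dense", (3,), {"activation": "softmax"}),     # three sentiment classes
]


def build_lstm_grnn():
    """Build and compile the stacked model (requires TensorFlow)."""
    from tensorflow import keras
    model = keras.Sequential([
        getattr(keras.layers, name)(*args, **kwargs)
        for name, args, kwargs in LSTM_GRNN_LAYERS
    ])
    # Loss and optimizer as listed in the table; metrics is an added convenience.
    model.compile(loss="categorical_crossentropy", optimizer="adam",
                  metrics=["accuracy"])
    return model
```

Training would then call `model.fit` on padded integer sequences with one-hot labels; the table lists 100 epochs, while tokenization and batch size are not specified there.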

3.7. Lexicon-Based Approach for Sentiment Analysis

3.8. Proposed Methodology for Sentiment Analysis

4. Results and Discussions

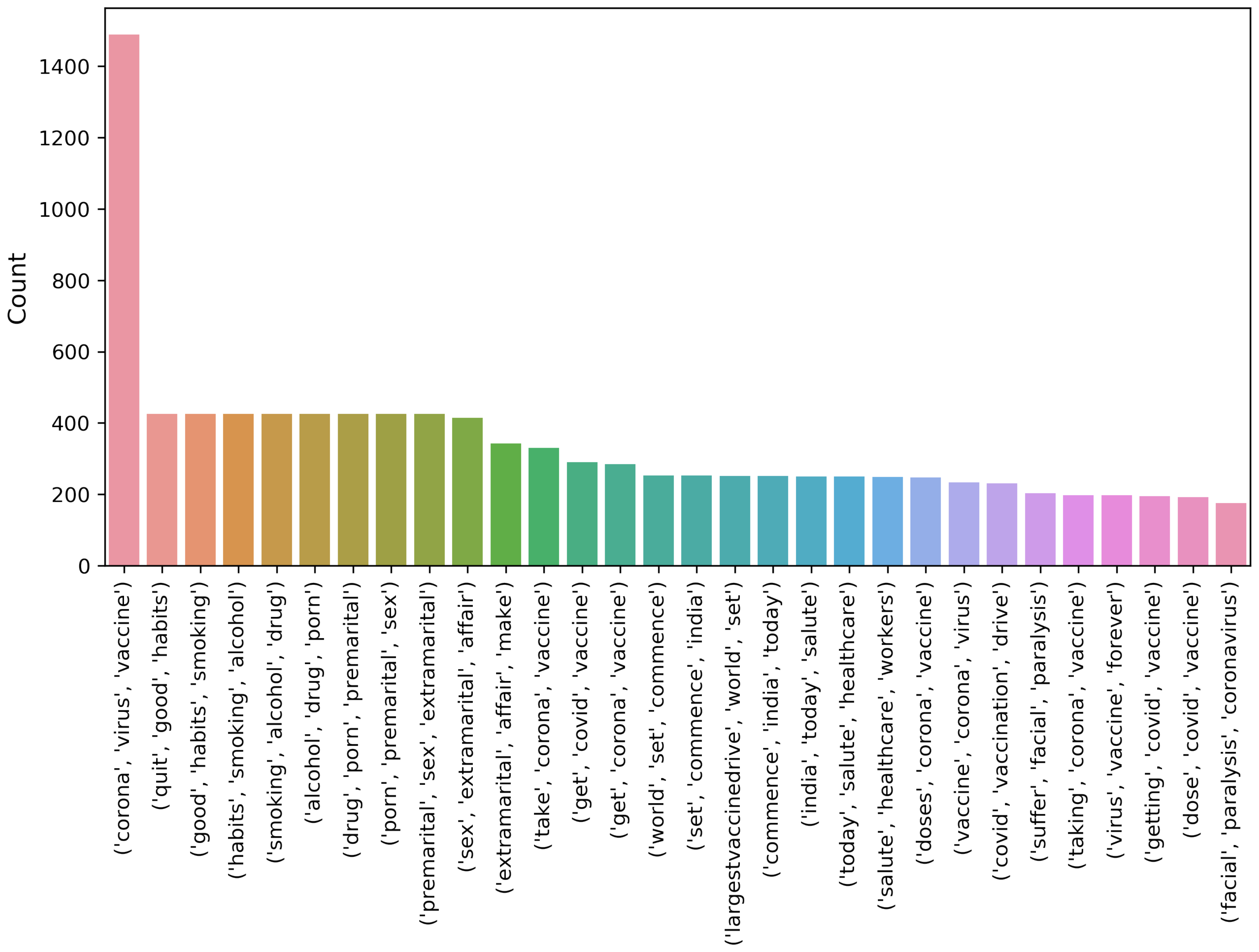

4.1. POS Tags of Dataset

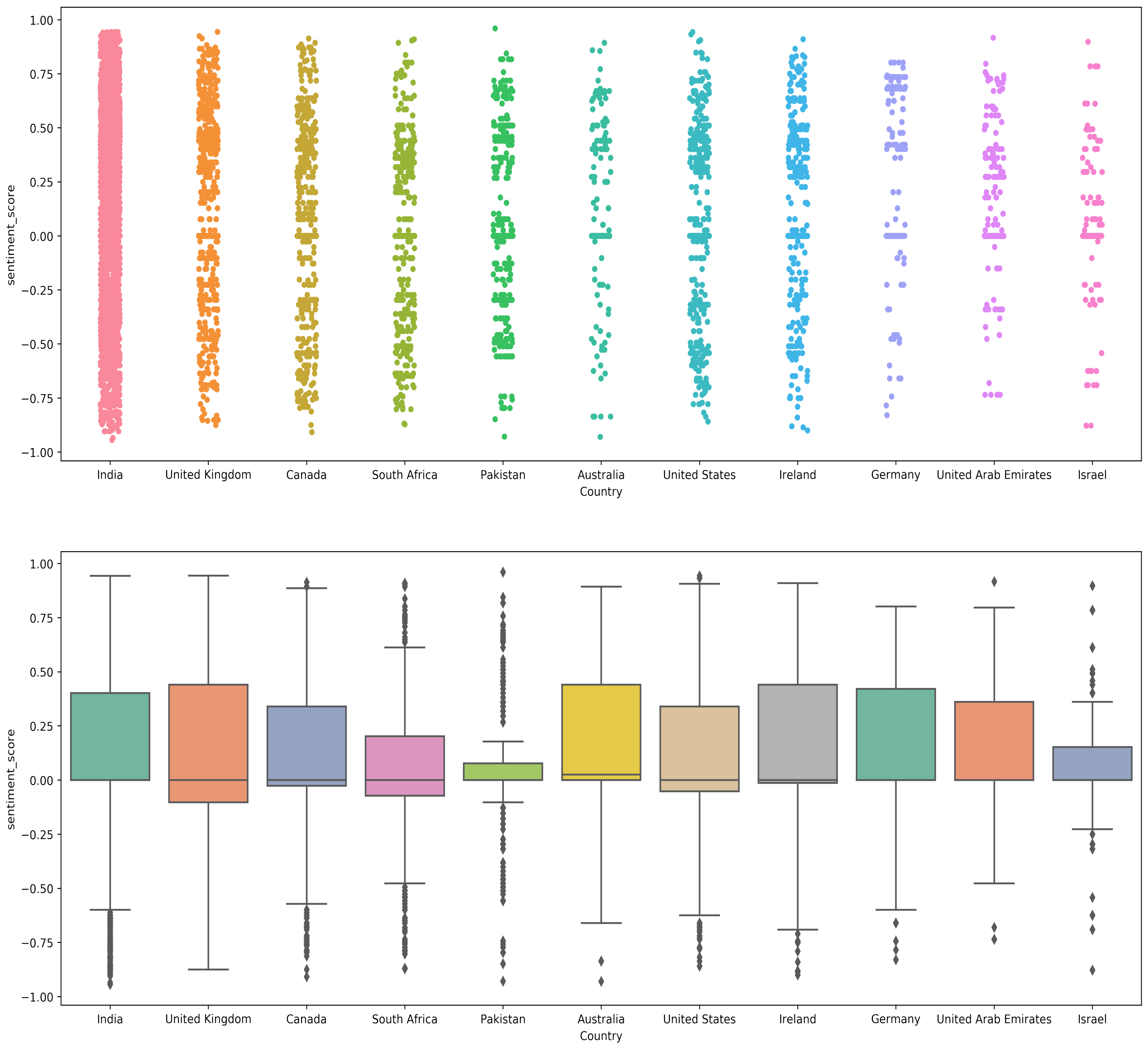

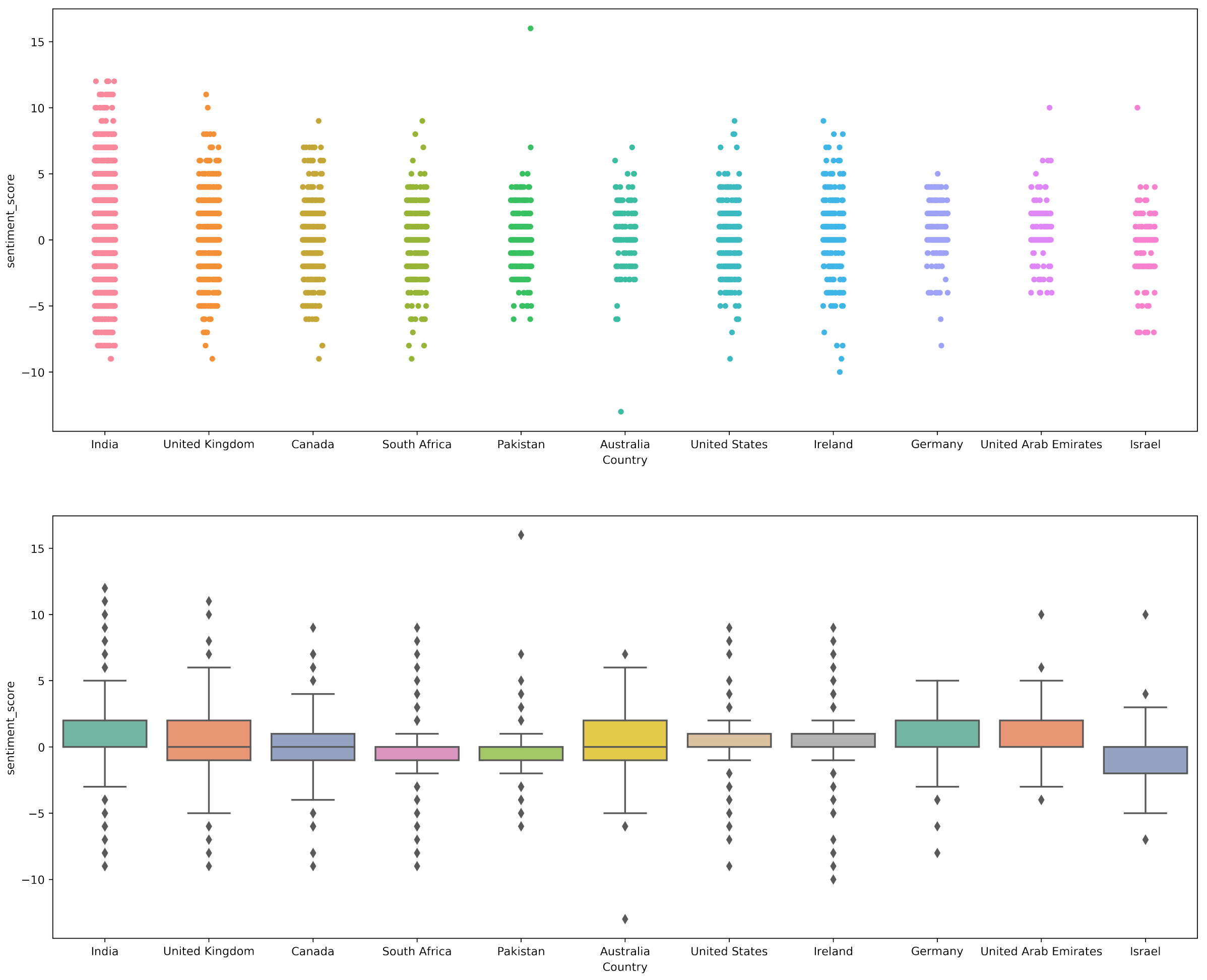

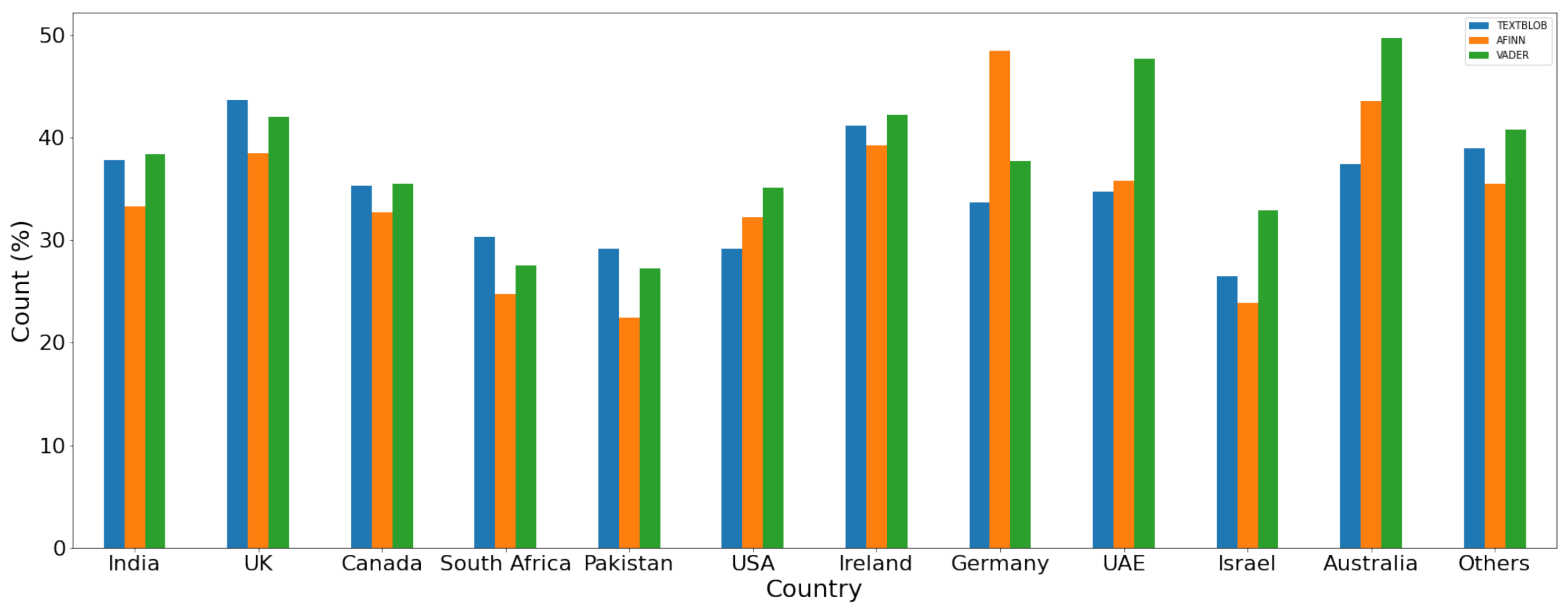

4.2. Sentiment Analysis Using TextBlob

4.3. Sentiment Analysis Using VADER

4.4. Sentiment Analysis Using AFINN

4.5. Sentiment Analysis Using Machine Learning Models
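The four metrics reported for the models (accuracy, precision, recall, and F1 score) can be computed from predicted and true labels as sketched below. Macro averaging over the three classes is an assumption for illustration; the averaging scheme used for the reported scores is not stated here. The function name and the toy labels are hypothetical.

```python
def macro_scores(y_true, y_pred, labels=("positive", "negative", "neutral")):
    """Accuracy plus macro-averaged precision, recall and F1 over the labels."""
    pairs = list(zip(y_true, y_pred))
    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    n = len(labels)
    return {
        "accuracy": sum(t == p for t, p in pairs) / len(pairs),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


y_true = ["positive", "positive", "negative", "neutral"]
y_pred = ["positive", "negative", "negative", "neutral"]
scores = macro_scores(y_true, y_pred)
print(scores)
```

In this toy case one positive tweet is misread as negative, which lowers recall for the positive class and precision for the negative class while leaving the neutral class untouched.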

4.6. Experimental Results of Deep Learning Models

4.7. Comparison with State-of-the-Art Studies

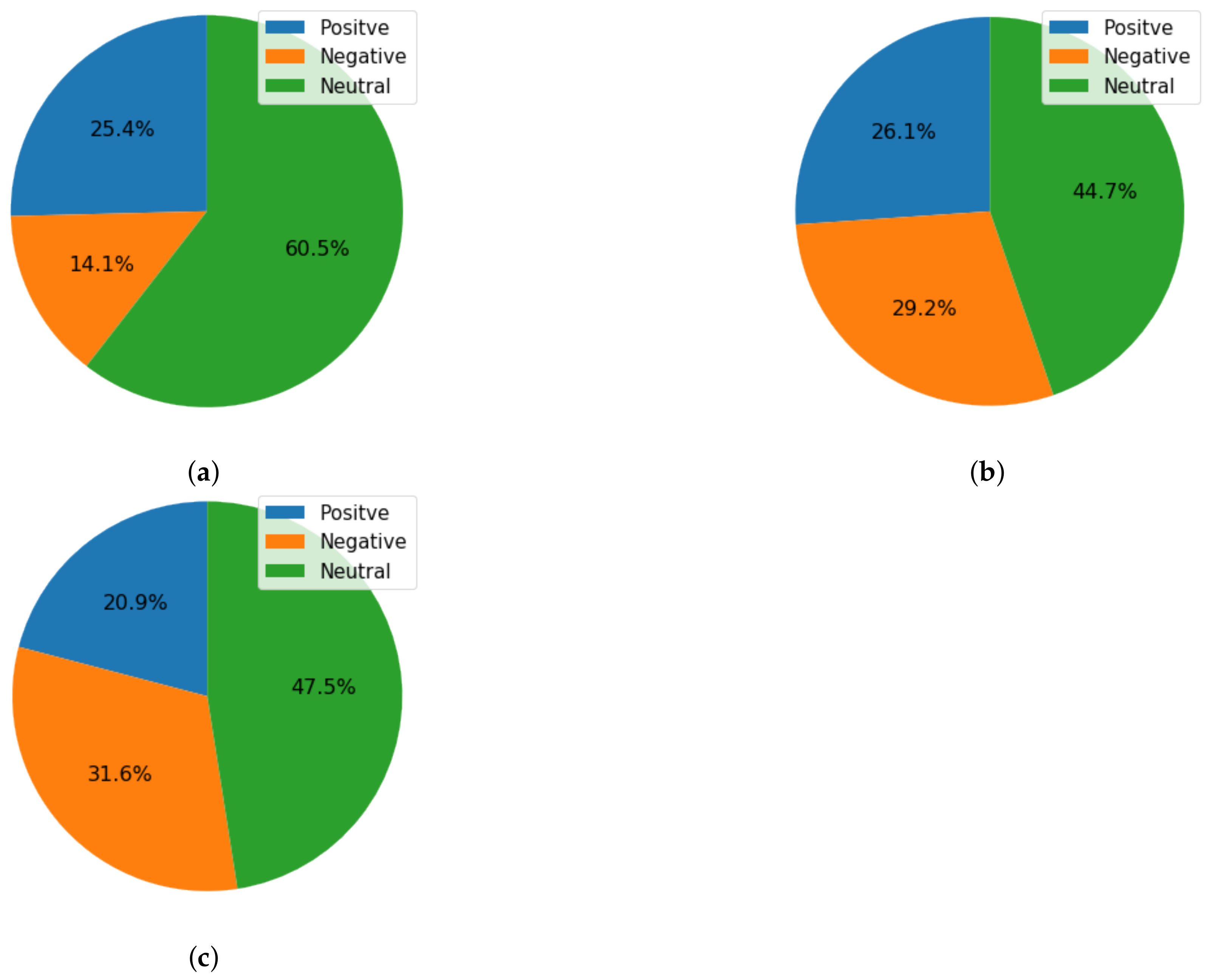

4.8. Time-Based Sentiment Analysis
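The year-over-year shift can be read directly from the time-based sentiment ratios reported for 2021 and 2022. The short script below computes the change in percentage points for each lexicon; the numbers are copied from the paper's time-based table, while the variable and function names are illustrative.

```python
# (positive, negative, neutral) shares in percent, per lexicon and year.
RATIOS = {
    "TextBlob": {2021: (38.33, 12.86, 48.81), 2022: (25.40, 14.10, 60.50)},
    "VADER": {2021: (39.95, 22.31, 37.74), 2022: (26.10, 29.20, 44.70)},
    "AFINN": {2021: (35.01, 23.78, 41.22), 2022: (20.90, 31.60, 47.50)},
}


def change(lexicon):
    """Percentage-point change from 2021 to 2022 as (positive, negative, neutral)."""
    before, after = RATIOS[lexicon][2021], RATIOS[lexicon][2022]
    return tuple(round(b - a, 2) for a, b in zip(before, after))


for name in RATIOS:
    pos, neg, neu = change(name)
    print(f"{name}: positive {pos:+.2f}, negative {neg:+.2f}, neutral {neu:+.2f}")
```

Under all three lexicons the negative share rises (by 1.24 to 7.82 points), while the positive share falls and the neutral share grows.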

5. Conclusions

5.1. Findings of Research

- The share of positive sentiments is higher than the share of negative sentiments in tweets related to COVID-19 vaccination.

- The shares of positive, negative, and neutral sentiments vary across countries and lexicons, yet, on average, neutral sentiments outnumber both negative and positive sentiments.

- Time-based analysis of tweets related to COVID-19 vaccination indicates a negative trend: the ratio of negative sentiments increased slightly over time.

- Tree-based machine learning models proved to perform better than other models. Ensemble models can be a good choice for obtaining higher levels of classification accuracy when dealing with tweets’ textual data.

- Regarding the performance of lexicon-based approaches, the use of TextBlob for annotation leads to higher levels of performance.

5.2. Limitations and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lone, S.A.; Ahmad, A. COVID-19 pandemic – An African perspective. Emerg. Microbes Infect. 2020, 9, 1300–1308.

- Balkhair, A.A. COVID-19 pandemic: A new chapter in the history of infectious diseases. Oman Med. J. 2020, 35, e123.

- Dai, X.; Xiong, Y.; Li, N.; Jian, C. Vaccine types. In Vaccines-the History and Future; IntechOpen: London, UK, 2019.

- Jones, I.; Roy, P. Sputnik V COVID-19 vaccine candidate appears safe and effective. Lancet 2021, 20, 642–643.

- Chagla, Z. The BNT162b2 (BioNTech/Pfizer) vaccine had 95% efficacy against COVID-19 ≥ 7 days after the 2nd dose. Ann. Intern. Med. 2021, 174, JC15.

- Mahase, E. Covid-19: Pfizer reports 100% vaccine efficacy in children aged 12 to 15. BMJ 2021, 373, n881.

- Hung, I.F.; Poland, G.A. Single-dose Oxford–AstraZeneca COVID-19 vaccine followed by a 12-week booster. Lancet 2021, 397, 854–855.

- Livingston, E.H.; Malani, P.N.; Creech, C.B. The Johnson & Johnson Vaccine for COVID-19. JAMA 2021, 325, 1575.

- Mukandavire, Z.; Nyabadza, F.; Malunguza, N.J.; Cuadros, D.F.; Shiri, T.; Musuka, G. Quantifying early COVID-19 outbreak transmission in South Africa and exploring vaccine efficacy scenarios. PLoS ONE 2020, 15, e0236003.

- Statement for Healthcare Professionals: How COVID-19 Vaccines Are Regulated for Safety and Effectiveness. Available online: https://www.who.int/news/item/11-06-2021-statement-for-healthcare-professionals-how-covid-19-vaccines-are-regulated-for-safety-and-effectiveness (accessed on 31 January 2022).

- Smith, L.E.; Amlôt, R.; Weinman, J.; Yiend, J.; Rubin, G.J. A systematic review of factors affecting vaccine uptake in young children. Vaccine 2017, 35, 6059–6069.

- World Health Organization. Behavioural Considerations for Acceptance and Uptake of COVID-19 Vaccines: WHO Technical Advisory Group on Behavioural Insights and Sciences for Health; Meeting Report, 15 October 2020; World Health Organization: Geneva, Switzerland, 2020.

- Allagui, I.; Breslow, H. Social media for public relations: Lessons from four effective cases. Public Relat. Rev. 2016, 42, 20–30.

- Valentini, C. Is using social media “good” for the public relations profession? A critical reflection. Public Relat. Rev. 2015, 41, 170–177.

- World Health Organization. Guidance on Developing a National Deployment and Vaccination Plan for COVID-19 Vaccines: Interim Guidance, 16 November 2020; Technical Report; World Health Organization: Geneva, Switzerland, 2020.

- Driss, O.B.; Mellouli, S.; Trabelsi, Z. From citizens to government policy-makers: Social media data analysis. Gov. Inf. Q. 2019, 36, 560–570.

- Yigitcanlar, T.; Kankanamge, N.; Preston, A.; Gill, P.S.; Rezayee, M.; Ostadnia, M.; Xia, B.; Ioppolo, G. How can social media analytics assist authorities in pandemic-related policy decisions? Insights from Australian states and territories. Health Inf. Sci. Syst. 2020, 8, 1–21.

- Liao, Q.; Yuan, J.; Dong, M.; Yang, L.; Fielding, R.; Lam, W.W.T. Public engagement and government responsiveness in the communications about COVID-19 during the early epidemic stage in China: Infodemiology study on social media data. J. Med. Internet Res. 2020, 22, e18796.

- Singh, P.; Dwivedi, Y.K.; Kahlon, K.S.; Sawhney, R.S.; Alalwan, A.A.; Rana, N.P. Smart monitoring and controlling of government policies using social media and cloud computing. Inf. Syst. Front. 2020, 22, 315–337.

- Keith Norambuena, B.; Lettura, E.F.; Villegas, C.M. Sentiment analysis and opinion mining applied to scientific paper reviews. Intell. Data Anal. 2019, 23, 191–214.

- Pang, B.; Lee, L.; Vaithyanathan, S. Thumbs up? Sentiment classification using machine learning techniques. arXiv 2002, arXiv:cs/0205070v1.

- Taboada, M.; Brooke, J.; Tofiloski, M.; Voll, K.; Stede, M. Lexicon-based methods for sentiment analysis. Comput. Linguist. 2011, 37, 267–307.

- Liu, B. Opinion mining and sentiment analysis. In Web Data Mining; Springer: Berlin/Heidelberg, Germany, 2011; pp. 459–526.

- Garg, Y.; Chatterjee, N. Sentiment analysis of twitter feeds. In Proceedings of the International Conference on Big Data Analytics, New Delhi, India, 20–23 December 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 33–52.

- Altrabsheh, N.; Cocea, M.; Fallahkhair, S. Learning sentiment from students’ feedback for real-time interventions in classrooms. In Proceedings of the International Conference on Adaptive and Intelligent Systems, Bournemouth, UK, 8–10 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 40–49.

- El Alaoui, I.; Gahi, Y.; Messoussi, R.; Chaabi, Y.; Todoskoff, A.; Kobi, A. A novel adaptable approach for sentiment analysis on big social data. J. Big Data 2018, 5, 12.

- Sánchez-Rada, J.F.; Iglesias, C.A. CRANK: A Hybrid Model for User and Content Sentiment Classification Using Social Context and Community Detection. Appl. Sci. 2020, 10, 1662.

- NLTK Library. Available online: https://www.nltk.org/ (accessed on 5 February 2021).

- Loria, S. TextBlob Documentation. Release 0.15 2018, 2, 269.

- Vijayarani, S.; Janani, R. Text mining: Open source tokenization tools-an analysis. Adv. Comput. Intell. Int. J. ACII 2016, 3, 37–47.

- Laksono, R.A.; Sungkono, K.R.; Sarno, R.; Wahyuni, C.S. Sentiment analysis of restaurant customer reviews on TripAdvisor using Naïve Bayes. In Proceedings of the 2019 12th International Conference on Information & Communication Technology and System (ICTS), Surabaya, Indonesia, 18 July 2019; pp. 49–54.

- Sohangir, S.; Petty, N.; Wang, D. Financial sentiment lexicon analysis. In Proceedings of the 2018 IEEE 12th International Conference on Semantic Computing (ICSC), Laguna Hills, CA, USA, 31 January–2 February 2018; pp. 286–289.

- Amin, A.; Hossain, I.; Akther, A.; Alam, K.M. Bengali vader: A sentiment analysis approach using modified vader. In Proceedings of the 2019 International Conference on Electrical, Computer and Communication Engineering (ECCE), Cox’s Bazar, Bangladesh, 7–9 February 2019; pp. 1–6.

- Kirlic, A.; Orhan, Z. Measuring human and Vader performance on sentiment analysis. Invent. J. Res. Technol. Eng. Manag. 2017, 1, 42–46.

- Nielsen, F.Å. Afinn Project. 2017. Available online: https://www2.imm.dtu.dk/pubdb/edoc/imm6975.pdf (accessed on 31 January 2022).

- AFINN Sentiment Lexicon. Available online: http://corpustext.com/reference/sentiment_afinn.html (accessed on 15 February 2021).

- Nielsen, F.Å. A new ANEW: Evaluation of a word list for sentiment analysis in microblogs. arXiv 2011, arXiv:1103.2903.

- Yu, B. An evaluation of text classification methods for literary study. Lit. Linguist. Comput. 2008, 23, 327–343.

- Rustam, F.; Ashraf, I.; Mehmood, A.; Ullah, S.; Choi, G.S. Tweets classification on the base of sentiments for US airline companies. Entropy 2019, 21, 1078.

- Robertson, S. Understanding inverse document frequency: On theoretical arguments for IDF. J. Doc. 2004, 60, 503–520.

- Zhang, W.; Yoshida, T.; Tang, X. A comparative study of TF* IDF, LSI and multi-words for text classification. Expert Syst. Appl. 2011, 38, 2758–2765.

- Brijain, M.; Patel, R.; Kushik, M.; Rana, K. A Survey on Decision Tree Algorithm for Classification. 2014. Available online: http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.673.2797 (accessed on 31 January 2022).

- Biau, G.; Scornet, E. A random forest guided tour. Test 2016, 25, 197–227.

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

- Liaw, A.; Wiener, M. Classification and regression by randomForest. R News 2002, 2, 18–22.

- Schapire, R.E. A brief introduction to boosting. Ijcai Citeseer 1999, 99, 1401–1406.

- Freund, Y.; Schapire, R.E. A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 1997, 55, 119–139.

- Rustam, F.; Mehmood, A.; Ahmad, M.; Ullah, S.; Khan, D.M.; Choi, G.S. Classification of shopify app user reviews using novel multi text features. IEEE Access 2020, 8, 30234–30244.

- Sebastiani, F. Machine learning in automated text categorization. ACM Comput. Surv. CSUR 2002, 34, 1–47.

- Talpada, H.; Halgamuge, M.N.; Vinh, N.T.Q. An analysis on use of deep learning and lexical-semantic based sentiment analysis method on twitter data to understand the demographic trend of telemedicine. In Proceedings of the 2019 11th International Conference on Knowledge and Systems Engineering (KSE), Da Nang, Vietnam, 24–26 October 2019; pp. 1–9.

- Saad, E.; Din, S.; Jamil, R.; Rustam, F.; Mehmood, A.; Ashraf, I.; Choi, G.S. Determining the Efficiency of Drugs under Special Conditions from Users’ Reviews on Healthcare Web Forums. IEEE Access 2021, 9, 85721–85737.

- Nousi, C.; Tjortjis, C. A Methodology for Stock Movement Prediction Using Sentiment Analysis on Twitter and StockTwits Data. In Proceedings of the 2021 6th South-East Europe Design Automation, Computer Engineering, Computer Networks and Social Media Conference (SEEDA-CECNSM), Preveza, Greece, 24–26 September 2021; pp. 1–7.

- Rustam, F.; Khalid, M.; Aslam, W.; Rupapara, V.; Mehmood, A.; Choi, G.S. A performance comparison of supervised machine learning models for Covid-19 tweets sentiment analysis. PLoS ONE 2021, 16, e0245909.

- Jamil, R.; Ashraf, I.; Rustam, F.; Saad, E.; Mehmood, A.; Choi, G.S. Detecting sarcasm in multi-domain datasets using convolutional neural networks and long short-term memory network model. PeerJ Comput. Sci. 2021, 7, e645.

- Rupapara, V.; Rustam, F.; Amaar, A.; Washington, P.B.; Lee, E.; Ashraf, I. Deepfake tweets classification using stacked Bi-LSTM and words embedding. PeerJ Comput. Sci. 2021, 7, e745.

| User Name | Location | Tweets |
|---|---|---|
| lunini | Washington DC | As expected WHO celebrates return of #USA to the organization during the surge of the covid #pandemic #COVID19… https://t.co/TbVcBF3Nxr (accessed on: 20 May 2021) |
| danschoenmn | St. Paul Park | We’re learning there was no federal plan to get the vaccine to our citizens. NONE! Imagine knowing something is har… https://t.co/XU9ADtpNlV (accessed on: 20 May 2021) |
| RichardILevine | Hawaii, USA | @drdavidsamadi And THAT is how you end a pandemic. And credit will go to the #vaccine. where did we see this cleri… https://t.co/X6OnOhbiMs (accessed on: 20 May 2021) |
| FrancicoCabral | Lisboa | There’s only one way forward: every person on earth will either get the virus or the vaccine. #COVID19 #vaccine |

| Tweets before Removal | Tweets after Removal |
|---|---|
| Many thyroid and autoimmune patients are wondering whether they should get the COVID vaccine. Thyroid Expert Mary S… https://t.co/8OHcyR5kQ7 (accessed on: 20 May 2021) | Many thyroid and autoimmune patients are wondering whether they should get the COVID vaccine. Thyroid Expert Mary S… |
| As expected @WHO celebrates return of #USA to the organization during the surge of the covid #pandemic #COVID19… https://t.co/TbVcBF3Nxr (accessed on: 20 May 2021) | As expected celebrates return of to the organization during the surge of the covid |

| Tweets before Removal | Tweets after Removal |
|---|---|
| Many thyroid and autoimmune patients are wondering whether they should get the COVID vaccine. Thyroid Expert Mary S… | Many thyroid autoimmune patients wondering whether get COVID vaccine. Thyroid Expert Mary |
| As expected, celebrates return of to the organization during the surge of the covid | expected celebrates return organization surge covid |

| Tweets before Removal | Tweets after Removal |
|---|---|
| Many thyroid autoimmune patients wondering whether get COVID vaccine. Thyroid Expert Mary | many thyroid autoimmune patient wonder whether get covid vaccine thyroid expert mary |
| expected celebrates return organization surge covid | expect celebrate return organization surge covid |

| Before Preprocessing | After Preprocessing |
|---|---|
| Many thyroid and autoimmune patients are wondering whether they should get the COVID vaccine. Thyroid Expert Mary S… https://t.co/8OHcyR5kQ7 (accessed on: 20 May 2021) | many thyroid autoimmune patient wonder whether get covid vaccine thyroid expert mary |
| As expected @WHO celebrates return of #USA to the organization during the surge of the covid #pandemic #COVID19… https://t.co/TbVcBF3Nxr (accessed on: 20 May 2021) | expect celebrate return organization surge covid |

| Sentiment | Score |
|---|---|
| Negative | Polarity score < 0 |
| Neutral | Polarity score = 0 |
| Positive | Polarity score > 0 |

| Sentiment | Score |
|---|---|
| Negative | Compound score ≤ −0.05 |
| Neutral | −0.05 < compound score < 0.05 |
| Positive | Compound score ≥ 0.05 |

| Sentiment | Score |
|---|---|
| Negative | Polarity score < 0 |
| Neutral | Polarity score = 0 |
| Positive | Polarity score > 0 |

| Model | Layers |
|---|---|
| LSTM | Embedding (5000, 200); Dropout (0.2); LSTM (100); Dropout (0.2); Dense (3, activation = ‘softmax’) |
| CNN | Embedding (5000, 200); Dropout (0.2); Conv1D (128, 4, activation = ‘relu’); MaxPooling1D (pool_size = 4); Flatten (); Dense (32); Dense (2, activation = ‘softmax’) |
| RNN | Embedding (5000, 200); Dropout (0.2); SimpleRNN (32); Dense (3, activation = ‘softmax’) |
| GRU | Embedding (5000, 200); Dropout (0.2); GRU (100); Dropout (0.2); Dense (3, activation = ‘softmax’) |
| CNN-LSTM | Embedding (5000, 200); Dropout (0.2); Conv1D (128, 4, activation = ‘relu’); MaxPooling1D (pool_size = 4); LSTM (128); Dense (32); Dense (3, activation = ‘softmax’) |
| LSTM-GRNN | Embedding (5000, 200); Dropout (0.2); LSTM (100); Dropout (0.2); GRU (100); SimpleRNN (32); Dense (3, activation = ‘softmax’) |
| All models | loss = ‘categorical_crossentropy’, optimizer = ‘adam’, epochs = 100 |

| NN | Count | JJ | Count | Entity Name | Entity Type | Count |
|---|---|---|---|---|---|---|
| Vaccine | 30,209 | Corona | 4483 | India | GPE | 3033 |
| Virus | 3540 | Good | 1262 | Today | DATE | 1787 |
| India | 2686 | Dose | 1080 | First | ORDINAL | 1557 |
| World | 1879 | Many | 1052 | China | GPE | 635 |
| Health | 1791 | Great | 894 | Million | CARDINAL | 503 |
| Pfizer | 1587 | Free | 789 | Pakistan | GPE | 473 |
| Country | 1525 | Safe | 739 | Pfizer | ORG | 428 |
| Worker | 1405 | Pandemic | 665 | Healthcare | ORG | 413 |
| News | 1403 | Medical | 608 | Norway | GPE | 404 |
| Government | 991 | Premarital | 425 | Chinese | NORP | 288 |

| Country | Positive (%) | Negative (%) | Neutral (%) |
|---|---|---|---|
| All Countries | 38.33 | 12.86 | 48.81 |
| India | 37.74 | 10.66 | 51.60 |
| United Kingdom | 43.62 | 13.72 | 42.66 |
| Canada | 35.31 | 14.36 | 50.33 |
| South Africa | 30.31 | 11.32 | 58.36 |
| Pakistan | 29.18 | 14.23 | 56.58 |
| United States | 29.18 | 14.23 | 56.58 |
| Ireland | 41.14 | 13.90 | 44.96 |
| Germany | 33.63 | 9.87 | 56.50 |
| UAE | 34.72 | 8.81 | 56.48 |
| Israel | 26.45 | 17.42 | 56.13 |
| Australia | 37.41 | 16.33 | 46.253 |
| Other Countries | 38.92 | 13.25 | 47.83 |

| Country | Positive (%) | Negative (%) | Neutral (%) |
|---|---|---|---|
| All Countries | 39.95 | 22.31 | 37.74 |
| India | 38.39 | 20.85 | 40.75 |
| United Kingdom | 41.97 | 27.30 | 30.73 |
| Canada | 35.48 | 24.42 | 40.10 |
| South Africa | 27.53 | 25.61 | 46.86 |
| Pakistan | 27.22 | 22.42 | 50.36 |
| United States | 35.09 | 25.15 | 39.76 |
| Ireland | 42.23 | 24.52 | 33.24 |
| Germany | 37.67 | 12.11 | 50.22 |
| UAE | 47.67 | 12.95 | 39.39 |
| Israel | 32.90 | 15.48 | 51.61 |
| Australia | 49.66 | 20.41 | 29.93 |
| Other Countries | 40.77 | 22.30 | 36.93 |

| Country | Positive (%) | Negative (%) | Neutral (%) |
|---|---|---|---|
| Total | 35.01 | 23.78 | 41.22 |
| India | 33.31 | 20.87 | 45.83 |
| United Kingdom | 38.41 | 27.98 | 33.61 |
| Canada | 32.67 | 26.40 | 40.92 |
| South Africa | 24.74 | 26.65 | 48.61 |
| Pakistan | 22.42 | 27.05 | 50.53 |
| United States | 32.25 | 24.14 | 43.61 |
| Ireland | 39.24 | 23.16 | 37.60 |
| Germany | 48.43 | 13.90 | 37.67 |
| UAE | 35.75 | 13.47 | 50.78 |
| Israel | 23.87 | 41.29 | 34.84 |
| Australia | 43.54 | 27.89 | 28.57 |
| Other Countries | 35.50 | 24.10 | 40.40 |

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| DT | 92 | 93 | 87 | 90 |
| RF | 93 | 96 | 92 | 94 |
| LR | 93 | 94 | 87 | 89 |

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| DT | 83 | 86 | 81 | 82 |
| RF | 90 | 92 | 89 | 90 |
| LR | 90 | 91 | 88 | 89 |

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| DT | 84 | 87 | 81 | 83 |
| RF | 90 | 92 | 89 | 90 |
| LR | 89 | 90 | 88 | 89 |

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| LSTM | 92 | 90 | 90 | 90 |
| GRU | 93 | 93 | 93 | 92 |
| RNN | 92 | 92 | 92 | 92 |
| CNN | 87 | 87 | 87 | 87 |
| CNN-LSTM | 88 | 88 | 88 | 88 |
| LSTM-GRNN | 95 | 95 | 95 | 95 |

| Ref. | Year | Model | Accuracy (%) |
|---|---|---|---|
| [39] | 2019 | LR-SGDC | 90 |
| [53] | 2021 | ET + FU | 91 |
| [54] | 2021 | CNN-LSTM | 88 |
| [55] | 2021 | Stacked Bi-LSTM | 93 |
| This study | 2021 | LSTM-GRNN | 95 |

| Lexicon | Year | Positive (%) | Negative (%) | Neutral (%) |
|---|---|---|---|---|
| TextBlob | 2021 | 38.33 | 12.86 | 48.81 |
| TextBlob | 2022 | 25.40 | 14.10 | 60.50 |
| VADER | 2021 | 39.95 | 22.31 | 37.74 |
| VADER | 2022 | 26.10 | 29.20 | 44.70 |
| AFINN | 2021 | 35.01 | 23.78 | 41.22 |
| AFINN | 2022 | 20.90 | 31.60 | 47.50 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Reshi, A.A.; Rustam, F.; Aljedaani, W.; Shafi, S.; Alhossan, A.; Alrabiah, Z.; Ahmad, A.; Alsuwailem, H.; Almangour, T.A.; Alshammari, M.A.; et al. COVID-19 Vaccination-Related Sentiments Analysis: A Case Study Using Worldwide Twitter Dataset. Healthcare 2022, 10, 411. https://doi.org/10.3390/healthcare10030411

APA Style: Reshi, A. A., Rustam, F., Aljedaani, W., Shafi, S., Alhossan, A., Alrabiah, Z., Ahmad, A., Alsuwailem, H., Almangour, T. A., Alshammari, M. A., Lee, E., & Ashraf, I. (2022). COVID-19 Vaccination-Related Sentiments Analysis: A Case Study Using Worldwide Twitter Dataset. Healthcare, 10(3), 411. https://doi.org/10.3390/healthcare10030411