Comparison of Subjective Facial Emotion Recognition and “Facial Emotion Recognition Based on Multi-Task Cascaded Convolutional Network Face Detection” between Patients with Schizophrenia and Healthy Participants

Abstract

1. Introduction

2. Materials and Methods

2.1. Research Design

2.2. Participants

2.3. Data Collection

2.4. Analysis Methods

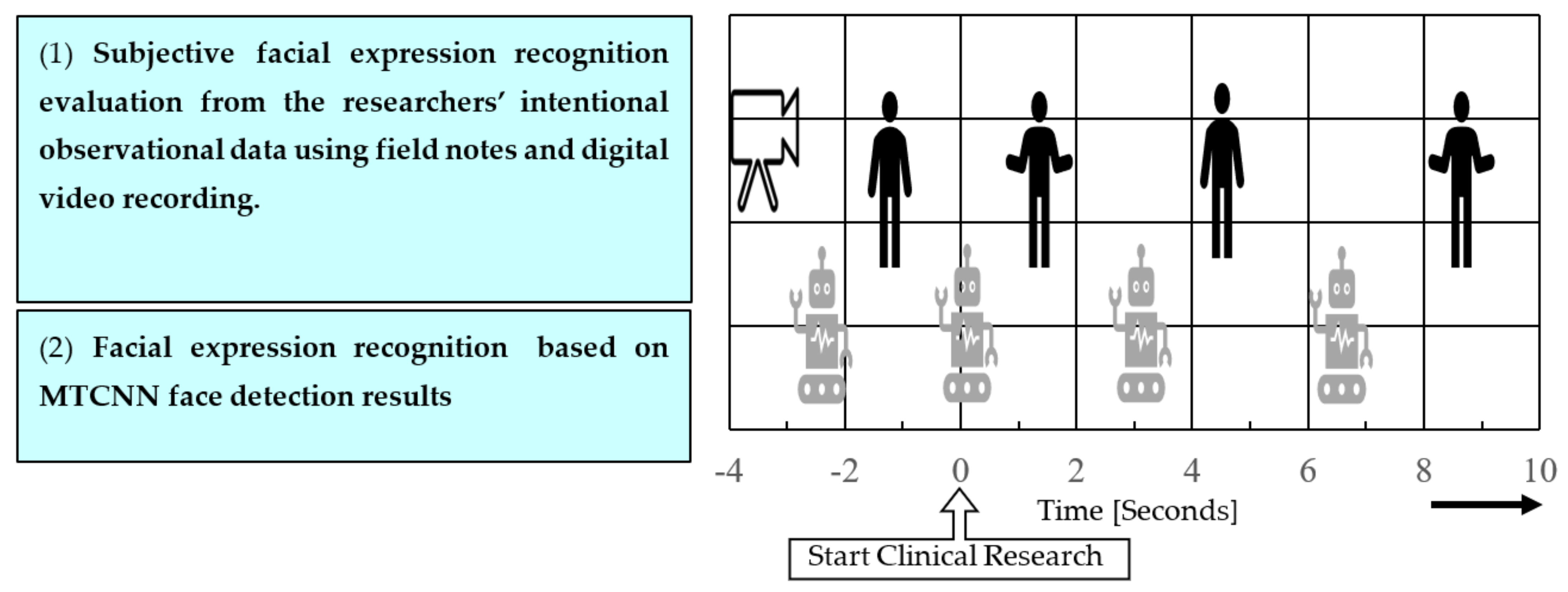

2.4.1. Subjective FER by Medical Experts

2.4.2. Analysis by the FER Based on MTCNN Face Detection Algorithm

2.4.3. Establishing a Region of Interest

2.4.4. Preliminary Experiment to Confirm Discriminant Accuracy of the FER Based on MTCNN Face Detection

2.4.5. Statistical Processing Method

2.5. Ethical Considerations

3. Results

3.1. Reliability and Validity of the FER Based on MTCNN Face Detection

3.2. Percentage of Agreement between the Subjective FER and FER Based on MTCNN Face Detection

3.3. Reliability of the FER

4. Discussion

Limitations of the Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Healthy Participants | Age (Years) | FER Based on MTCNN Face Detection | Examiner 1 | Examiner 2 | Examiner 3 | Examiner 4 | Examiner 5 | Examiner 6 | Coefficient of Identity (%) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 40 | Happy | Neutral | Happy | Neutral | Happy | Happy | Happy | 71.43 |
| 2 | 50 | Happy | Neutral | Happy | Neutral | Happy | Happy | Happy | 71.43 |
| 3 | 60 | Happy | Happy | Happy | Happy | Happy | Surprised | Surprised | 71.43 |
| 4 | 60 | Happy | Happy | Happy | Happy | Happy | Happy | Neutral | 85.71 |
| 5 | 60 | Happy | Neutral | Neutral | Neutral | Happy | Happy | Happy | 42.86 |
| 6 | 60 | Fear | Neutral | Happy | Neutral | Happy | Happy | Happy | 42.86 |
| 7 | 60 | Happy | Neutral | Happy | Neutral | Happy | Happy | Happy | 71.43 |
| 8 | 70 | Happy | Neutral | Happy | Happy | Happy | Happy | Happy | 85.71 |
| 9 | 70 | Happy | Happy | Happy | Happy | Happy | Happy | Neutral | 85.71 |
| 10 | 70 | Happy | Happy | Happy | Surprised | Happy | Happy | Surprised | 71.43 |
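
The Coefficient of Identity column in the table above can be approximately reproduced with a short calculation. The sketch below assumes (the definition is not stated in the table) that the coefficient is the share of the seven ratings, the algorithm's plus those of the six examiners, that fall on the most frequent label. The function name `modal_agreement` is hypothetical. Under this assumption the values for participants 1 and 4 are reproduced, but the values listed for participants 5 and 6 are not, so the authors' exact definition may differ.

```python
from collections import Counter


def modal_agreement(labels):
    """Percentage of ratings that fall on the most frequent label.

    This definition is an assumption made for illustration only.
    """
    counts = Counter(labels)
    top_count = counts.most_common(1)[0][1]
    return round(100 * top_count / len(labels), 2)


# Ratings copied from the table: algorithm first, then Examiners 1 to 6.
participant_1 = ["Happy", "Neutral", "Happy", "Neutral", "Happy", "Happy", "Happy"]
participant_4 = ["Happy", "Happy", "Happy", "Happy", "Happy", "Happy", "Neutral"]

print(modal_agreement(participant_1))  # 71.43 (5 of 7 ratings are "Happy")
print(modal_agreement(participant_4))  # 85.71 (6 of 7 ratings are "Happy")
```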

| Participants | Examiner A (%) | Examiner B (%) | Examiner C (%) |
|---|---|---|---|
| (a) Patient group | 40.63 | 34.38 | 25.00 |
| (b) Healthy participant group | 42.50 | 52.50 | 17.50 |

| Participants | Not Matched | Matched |
|---|---|---|
| (a) Patient group (n = 31) | 28 | 3 |
| Adjusted residual | 0.7 | −0.7 |
| (b) Healthy participant group (n = 40) | 34 | 6 |
| Adjusted residual | −0.7 | 0.7 |
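
The adjusted residuals in the table above can be checked from the four counts alone. The following is a minimal sketch using the standard adjusted standardized residual for a contingency table, (observed − expected) / sqrt(expected × (1 − row share) × (1 − column share)); the function name `adjusted_residuals` is hypothetical, and whether the authors used exactly this computation is an assumption.

```python
import math


def adjusted_residuals(table):
    """Adjusted standardized residuals for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    result = []
    for i, row in enumerate(table):
        out_row = []
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            variance = expected * (1 - row_totals[i] / n) * (1 - col_totals[j] / n)
            out_row.append((observed - expected) / math.sqrt(variance))
        result.append(out_row)
    return result


# Counts from the table: rows are patient / healthy,
# columns are not matched / matched.
counts = [[28, 3], [34, 6]]
residuals = adjusted_residuals(counts)
print([[round(value, 1) for value in row] for row in residuals])
# [[0.7, -0.7], [-0.7, 0.7]]
```

All four residuals are well inside ±1.96, so no cell departs from its expected count at the 5% level.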

| Participants | Method | ICC Measure | ICC | 95% CI Lower Limit | 95% CI Upper Limit | F-Test with True Value of 0: Value | Degree of Freedom 1 | Degree of Freedom 2 | p-Value |
|---|---|---|---|---|---|---|---|---|---|
| (a) Patient group | FER based on MTCNN face detection | Average Measured Value | 0.41 | −0.02 | 0.69 | 1.70 | 30.00 | 90.00 | 0.03 |
| (b) Healthy participant group | FER based on MTCNN face detection | Average Measured Value | 0.24 | −0.24 | 0.56 | 1.31 | 39.00 | 117.00 | 0.13 |
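
The ICC and F-test columns in the table above are linked by simple arithmetic. The sketch below assumes a two-way model with an average-measures, consistency-type ICC, for which ICC = 1 − 1/F with F = MS_rows / MS_error, and assumes four raters per participant (inferred from the degrees of freedom, not stated in the table). Both function names are hypothetical.

```python
def icc_average_consistency(f_value):
    """Average-measures consistency ICC implied by F = MS_rows / MS_error."""
    return 1 - 1 / f_value


def f_test_dfs(n_subjects, n_raters):
    """Degrees of freedom of the F test in a two-way ICC model."""
    df1 = n_subjects - 1
    return df1, df1 * (n_raters - 1)


# F values and group sizes taken from the table; 4 raters is an assumption.
print(round(icc_average_consistency(1.70), 2))  # 0.41 (patient group)
print(round(icc_average_consistency(1.31), 2))  # 0.24 (healthy participant group)
print(f_test_dfs(31, 4))  # (30, 90)
print(f_test_dfs(40, 4))  # (39, 117)
```

Under these assumptions the reported ICC values and degrees of freedom are mutually consistent for both groups.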

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Akiyama, T.; Matsumoto, K.; Osaka, K.; Tanioka, R.; Betriana, F.; Zhao, Y.; Kai, Y.; Miyagawa, M.; Yasuhara, Y.; Ito, H.; Soriano, G.; Tanioka, T. Comparison of Subjective Facial Emotion Recognition and “Facial Emotion Recognition Based on Multi-Task Cascaded Convolutional Network Face Detection” between Patients with Schizophrenia and Healthy Participants. Healthcare 2022, 10, 2363. https://doi.org/10.3390/healthcare10122363
