Reliability Generalization Meta-Analysis of Internet Gaming Disorder Scale

Abstract

1. Introduction

1.1. Literature Review

1.2. Goal Setting

2. Materials and Methods

2.1. Information Sources

2.2. Eligibility Criteria

2.3. Search Strategy

2.3.1. First Iteration

2.3.2. Second Iteration

2.3.3. Third Iteration

2.4. Selection of Studies

2.5. Data Extraction

2.6. Analysis

2.6.1. Description and Evaluation of α Coefficients

2.6.2. Modeling

2.6.3. Sources of Heterogeneity

2.6.4. Outliers and Robust Estimation

3. Results

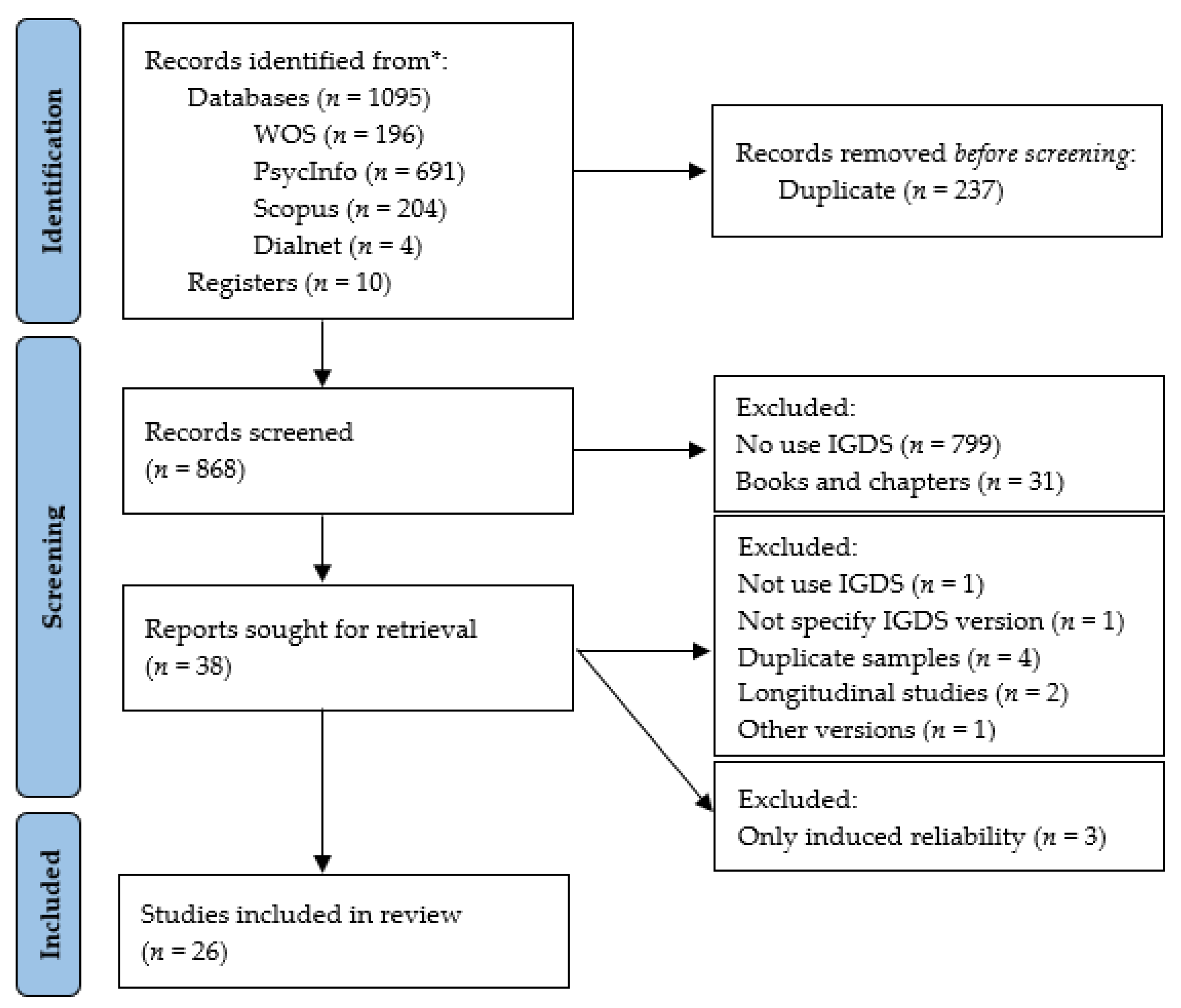

3.1. Results of the Study Selection Process

3.2. Description and Evaluation of the Level

3.3. Random-Effects Model

3.3.1. Mean Reliability

3.3.2. Robust Estimate

3.4. Varying Coefficients Model

3.4.1. Heterogeneity Estimation

3.4.2. Exploratory Analysis

4. Discussion

4.1. Limitations

4.2. Practical Implications and Future Research

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

| TITLE | Yes | No | NA |
|---|---|---|---|
| 1. Title | X | | |
| ABSTRACT | | | |
| 2. Abstract | X | | |
| INTRODUCTION | | | |
| 3. Background | X | | |
| 4. Objectives | X | | |
| METHOD | | | |
| 5. Selection criteria | X | | |
| 6. Search strategies | X | | |
| 7. Data extraction | X | | |
| 8. Reported reliability | X | | |
| 9. Estimating the reliability induction and other sources of bias | X | | |
| 10. Data extraction of inducing studies | X | | |
| 11. Reliability of data extraction | X | | |
| 12. Transformation method | X | | |
| 13. Statistical model | X | | |
| 14. Weighting method | X | | |
| 15. Heterogeneity assessment | X | | |
| 16. Moderator analyses | X | | |
| 17. Additional analyses | X | | |
| 18. Software | X | | |
| RESULTS | | | |
| 19. Results of the study selection process | X | | |
| 20. Mean reliability and heterogeneity | X | | |
| 21. Moderator analyses | X | | |
| 22. Sensitivity analyses | X | | |
| 23. Comparison of inducing and reporting studies | X | | |
| 24. Data set | X | | |
| DISCUSSION | | | |
| 25. Summary of results | X | | |
| 26. Limitations | X | | |
| 27. Implications for practice | X | | |
| 28. Implications for future research | X | | |
| FUNDING | | | |
| 29. Funding | X | | |
| PROTOCOL | | | |
| 30. Protocol | X | | |

Appendix C

| No. | Study | Version | Reported Reliability: Available | Reported Reliability: Not Avail. | Study Validity | Method | Reliability | Retest |
|---|---|---|---|---|---|---|---|---|
| 1 | Lemmens et al. (2015) [9] | IGDS9D | X | | Empirical | α | 0.83 | |
| 2 | Sioni et al. (2017) [59] | IGDS9D | X | | Induced | α | 0.85 | |
| 3 | Wartberg et al. (2017) [61] | IGDS9D | X | | Induced | α | 0.82 | |
| 4 | Baiumy et al. (2018) [20] | IGDS9D | X | | Induced | α | 0.61 | |
| 5 | Buiza-Aguado et al. (2018) [62] | IGDS9D | X | | Induced | ω | 0.81 | |
| 6 | Koning et al. (2018) [71] | IGDS9D | X | | Induced | α | 0.74 | |
| 7 | Van Den Eijnden et al. (2018) [73] | IGDS9D | X | | Induced | α | 0.73 | |
| 8 | Brooks and Clark (2019) [63] | IGDS9D | X | | Induced | α | 0.82 | |
| 9 | Dedeaux (2019) [69] | IGDS9D | X | | Induced | α | 0.84 | |
| 10 | Stockdale et al. (2019) [60] | IGDS9D | X | | Induced | α | 0.81 | |
| 11 | Grajewski et al. (2020) [64] | IGDS9D | X | | Induced | α | 0.69 | |
| 12 | Lei et al. (2020) [18] | IGDS9D | X | | Empirical | α | 0.81 | 0.83 |
| 13 | Wartberg et al. (2020) [24] | IGDS9D | X | | Induced | α | 0.66 | |
| 14 | Zendle (2020) [74] | IGDS9D | X | | Induced | α | 0.79 | |
| 15 | Booth et al. (2021) [68] | IGDS9D | X | | Induced | α | 0.74 | |
| 16 | Liu et al. (2021) [65] | IGDS9D | X | | Induced | α | 0.83 | |
| 17 | Oka et al. (2021) [15] | IGDS9D | X | | Induced | α | 0.84 | |
| 18 | Paschke et al. (2021) [21] | IGDS9D | X | | Empirical | α | 0.78 | |
| 19 | Paschke et al. (2021) [21] | IGDS9D | X | | Empirical | α | 0.72 | |
| 20 | Paschke et al. (2021) [21] | IGDS9D | X | | Empirical | α | 0.56 | |
| 1 | Lemmens et al. (2015) [9] | IGDS9P | X | | Empirical | α | 0.95 | |
| 2 | Evren et al. (2017) [19] | IGDS9P | X | | Empirical | α | 0.93 | 0.756 |
| 3 | Mills et al. (2018) [58] | IGDS9P | X | | Induced | α | 0.85 | |
| 4 | Lei et al. (2020) [18] | IGDS9P | X | | Empirical | α | 0.89 | 0.84 |
| 1 | Lemmens et al. (2015) [9] | IGDS27D | X | | Empirical | α | 0.93 | |
| 2 | Reyes et al. (2019) [72] | IGDS27D | X | | Induced | α | 0.9 | |
| 3 | Ait Daoud (2020) [57] | IGDS27D | X | | Induced | α | 0.93 | |
| 4 | Zemestani et al. (2021) [67] | IGDS27D | X | | Induced | α | 0.81 | |
| 1 | Lemmens et al. (2015) [9] | IGDS27P | X | | Empirical | α | 0.94 | |
| 2 | Evren et al. (2017) [19] | IGDS27P | X | | Empirical | α | 0.97 | 0.759 |
| 3 | Allen and Anderson (2018) [22] | IGDS27P | X | | Induced | α | 0.96 | |
| 4 | Gibbons and Bouldin (2019) [70] | IGDS27P | X | | Induced | α | 0.96 | |
| 5 | Mills and Allen (2020) [66] | IGDS27P | X | | Induced | α | 0.96 | |

| No. | Study | Version | Language | English Language | Application Mode | Sample N | Sample | Gamer Condition |
|---|---|---|---|---|---|---|---|---|
| 1 | Lemmens et al. (2015) [9] | IGDS9D | Dutch | N | Self-report | 989 | Gen. Comm | Gamers |
| 2 | Sioni et al. (2017) [59] | IGDS9D | English | Y | Self-report | 394 | Gen. Comm | Gamers |
| 3 | Wartberg et al. (2017) [61] | IGDS9D | German | N | Interview | 1020 | Adolescents | Mixed |
| 4 | Baiumy et al. (2018) [20] | IGDS9D | Arabic | N | Self-report | 204 | Young | Gamers |
| 5 | Buiza-Aguado et al. (2018) [62] | IGDS9D | Spanish | N | Self-report | 708 | Adolescents | Mixed |
| 6 | Koning et al. (2018) [71] | IGDS9D | Dutch | N | Self-report | 354 | Adolescents | NR |
| 7 | Van Den Eijnden et al. (2018) [73] | IGDS9D | Dutch | N | Self-report | 538 | Adolescents | NR |
| 8 | Brooks and Clark (2019) [63] | IGDS9D | English | Y | Self-report | 257 | Gen. Comm | Mixed |
| 9 | Dedeaux (2019) [69] | IGDS9D | English | Y | Self-report | 310 | Gen. Comm | NR |
| 10 | Stockdale et al. (2019) [60] | IGDS9D | English | N | Survey | 855 | Adults | Mixed |
| 11 | Grajewski et al. (2020) [64] | IGDS9D | Polish | N | Self-report | 1306 | Gen. Comm | Gamers |
| 12 | Lei et al. (2020) [18] | IGDS9D | Chinese | N | Self-report | 351 | Gen. Comm | NR |
| 13 | Wartberg et al. (2020) [24] | IGDS9D | German | N | Interview | 1001 | Adolescents | Mixed |
| 14 | Zendle (2020) [74] | IGDS9D | English | Y | Self-report | 1081 | Gen. Comm | Mixed |
| 15 | Booth et al. (2021) [68] | IGDS9D | English | Y | Self-report | 2078 | Adults | Mixed |
| 16 | Liu et al. (2021) [65] | IGDS9D | Chinese | N | NR | 1121 | Adolescents | Mixed |
| 17 | Oka et al. (2021) [15] | IGDS9D | Japanese | N | Self-report | 3938 | Gen. Comm | Mixed |
| 18 | Paschke et al. (2021) [21] | IGDS9D | German | N | Interview | 762 | Adolescents | Gamers |
| 19 | Paschke et al. (2021) [21] | IGDS9D | German | N | Interview | 777 | Adolescents | Gamers |
| 20 | Paschke et al. (2021) [21] | IGDS9D | German | N | Interview | 784 | Adolescents | Gamers |
| 1 | Lemmens et al. (2015) [9] | IGDS9P | Dutch | N | Self-report | 923 | Gen. Comm | Gamers |
| 2 | Evren et al. (2017) [19] | IGDS9P | Turkish | N | Self-report | 457 | Young | Mixed |
| 3 | Mills et al. (2018) [58] | IGDS9P | English | Y | Self-report | 1029 | Gen. Comm | Gamers |
| 4 | Lei et al. (2020) [18] | IGDS9P | Chinese | N | Self-report | 378 | Gen. Comm | NR |
| 1 | Lemmens et al. (2015) [9] | IGDS27D | Dutch | N | Self-report | 989 | Gen. Comm | Gamers |
| 2 | Reyes et al. (2019) [72] | IGDS27D | NR | NR | Self-report | 1026 | Gen. Comm | Gamers |
| 3 | Ait Daoud (2020) [57] | IGDS27D | English | Y | Self-report | 423 | Gen. Comm | Gamers |
| 4 | Zemestani et al. (2021) [67] | IGDS27D | Persian | N | Self-report | 481 | Gen. Comm | Mixed |
| 1 | Lemmens et al. (2015) [9] | IGDS27P | Dutch | N | Self-report | 923 | Gen. Comm | Gamers |
| 2 | Evren et al. (2017) [19] | IGDS27P | Turkish | N | Self-report | 457 | Young | Mixed |
| 3 | Allen and Anderson (2018) [22] | IGDS27P | English | Y | Self-report | 315 | Young | Gamers |
| 4 | Gibbons and Bouldin (2019) [70] | IGDS27P | English | Y | Self-report | 272 | Young | NR |
| 5 | Mills and Allen (2020) [66] | IGDS27P | English | Y | Self-report | 487 | Gen. Comm | Gamers |
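As an illustration of how the coefficients tabulated in Appendix C could be synthesized, the following is a minimal Python sketch, not the authors' analysis (the reference list points to R packages such as metafor and vcmeta). It makes three assumptions that the tables do not state: each α is transformed as ln(1 − α) with Bonett's approximate sampling variance 2k/[(k − 1)(n − 2)]; the number of items k is 9 for the IGDS9 forms, inferred from the scale name; and the between-study variance is estimated with the DerSimonian-Laird method. The function names `bonett_ci` and `pool_random_effects` are hypothetical.

```python
import math


def bonett_ci(alpha, n, k, z=1.959964):
    """Approximate 95% CI for coefficient alpha on the ln(1 - alpha) scale.

    Assumes Bonett's variance approximation 2k / ((k - 1)(n - 2)).
    """
    se = math.sqrt(2.0 * k / ((k - 1) * (n - 2)))
    centre = math.log(1.0 - alpha)
    # A larger ln(1 - alpha) means a smaller alpha, so the bounds swap.
    lower = 1.0 - math.exp(centre + z * se)
    upper = 1.0 - math.exp(centre - z * se)
    return lower, upper


def pool_random_effects(alphas, ns, k):
    """Random-effects mean alpha (DerSimonian-Laird, assumed estimator)."""
    y = [math.log(1.0 - a) for a in alphas]             # transformed alphas
    v = [2.0 * k / ((k - 1) * (n - 2)) for n in ns]     # sampling variances
    w = [1.0 / vi for vi in v]                          # fixed-effect weights
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, y)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, y))  # Cochran's Q
    df = len(y) - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)                       # between-study variance
    w_re = [1.0 / (vi + tau2) for vi in v]              # random-effects weights
    mean = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    return {"alpha": 1.0 - math.exp(mean), "tau2": tau2, "Q": q, "I2": i2}


if __name__ == "__main__":
    # IGDS9P rows of Appendix C: alpha and sample N; k = 9 is an assumption.
    alphas = [0.95, 0.93, 0.85, 0.89]
    ns = [923, 457, 1029, 378]
    for a, n in zip(alphas, ns):
        lo, hi = bonett_ci(a, n, k=9)
        print(f"alpha={a:.2f} N={n}: CI [{lo:.3f}, {hi:.3f}]")
    print(pool_random_effects(alphas, ns, k=9))
```

Because pooling happens on the transformed scale, the back-transformed mean always lies between the smallest and largest observed α. Intervals from this approximation may differ slightly from those reported in the article, whose exact interval method is not shown here.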

References

1. American Psychiatric Association [APA]. Diagnostic and Statistical Manual of Mental Disorders, 5th ed.; American Psychiatric Association: Arlington, VA, USA, 2013.

2. Montag, C.; Schivinski, B.; Sariyska, R.; Kannen, C.; Demetrovics, Z.; Pontes, H.M. Psychopathological symptoms and gaming motives in disordered gaming: A psychometric comparison between the WHO and APA diagnostic frameworks. J. Clin. Med. 2019, 8, 1691.

3. Carbonell, X. The diagnosis of video game addiction in the DSM-5 and the ICD-11: Challenges and opportunities for clinicians. Psychol. Pap. 2020, 41, 211–218.

4. Beranuy, M.; Machimbarrena, J.; Vega-Osés, M.A.; Carbonell, X.; Griffiths, M.D.; Pontes, H.M.; González-Cabrera, J. Spanish validation of the internet gaming disorder scale–short form (IGDS9-SF): Prevalence and relationship with online gambling and quality of life. Int. J. Environ. Res. Public Health 2020, 17, 1562.

5. World Health Organization. 11th Revision of the International Classification of Diseases (ICD-11). World Health Organization (WHO). 2018. Available online: https://icd.who.int (accessed on 11 July 2021).

6. Stevens, M.W.; Dorstyn, D.; Delfabbro, P.H.; King, D.L. Global prevalence of gaming disorder: A systematic review and meta-analysis. Aust. N. Z. J. Psychiatry 2021, 55, 553–568.

7. García-García, E.; Martínez-Gimeno, M.-L.; Benítez-Andrades, J.A.; Miranda-Gómez, J.; Zapata-Cornejo, E.d.D.; Escobar-Aguilar, G. Substance use and addictive behavior in Spanish adolescents in secondary school. Healthcare 2021, 9, 186.

8. Wang, R.; Yang, S.; Yan, Y.; Tian, Y.; Wang, P. Internet gaming disorder in early adolescents: Gender and depression differences in a latent growth model. Healthcare 2021, 9, 1188.

9. Lemmens, J.S.; Valkenburg, P.M.; Gentile, D.A. The internet gaming disorder scale. Psychol. Assess. 2015, 27, 567–582.

10. Paulus, F.; Ohmann, S.; Von Gontard, A.; Popow, C. Internet gaming disorder in children and adolescents: A systematic review. Dev. Med. Child Neurol. 2018, 60, 645–659.

11. Wang, H.; Cheng, C. New perspectives on the prevalence and associated factors of gaming disorder in Hong Kong community adults: A generational approach. Comput. Hum. Behav. 2021, 114, 106574.

12. Fam, J.Y. Prevalence of internet gaming disorder in adolescents: A meta-analysis across three decades. Scand. J. Psychol. 2018, 59, 524–531.

13. Warman, P. 2018 Global Games Market Report. 2018. Available online: https://cdn2.hubspot.net/hubfs/700740/Reports/Newzoo_2018_Global_Games_Market_Report_Light.pdf (accessed on 10 February 2022).

14. Cabeza-Ramírez, L.J.; Muñoz-Fernández, G.A.; Santos-Roldán, L. Video game streaming in young people and teenagers: Uptake, user groups, dangers, and opportunities. Healthcare 2021, 9, 192.

15. Oka, T.; Hamamura, T.; Miyake, Y.; Kobayashi, N.; Honjo, M.; Kawato, M.; Kubo, T.; Chiba, T. Prevalence and risk factors of internet gaming disorder and problematic internet use before and during the COVID-19 pandemic: A large online survey of Japanese adults. J. Psychiatr. Res. 2021, 142, 218–225.

16. Pontes, H.M.; Macur, M.; Griffiths, M.D. Internet gaming disorder among Slovenian primary schoolchildren: Findings from a nationally representative sample of adolescents. J. Behav. Addict. 2016, 5, 304–310.

17. Chamarro, A.; Carbonell, X.; Manresa, J.; Munoz-Miralles, R.; Ortega-González, R.; Lopez-Morron, M.; Batalla-Martinez, C.; Toran-Montserrat, P. El cuestionario de experiencias relacionadas con los videojuegos (CERV): Un instrumento para detectar el uso problemático de videojuegos en adolescentes españoles. Adicciones 2014, 26, 303–311.

18. Lei, W.; Liu, K.; Zeng, Z.; Liang, X.; Huang, C.; Gong, K.; He, W.; Xiang, B.; Zhang, J.; Zheng, X.; et al. The psychometric properties of the Chinese version Internet gaming disorder scale. Addict. Behav. 2020, 113, 106670.

19. Evren, C.; Dalbudak, E.; Topçu, M.; Kutlu, N.; Evren, B. The psychometric properties of the Turkish version of the Internet Gaming Disorder Scale. Dusunen. Adam. J. Psychiatry Neurol. Sci. 2017, 4, 316–324.

20. Baiumy, S.; Elella, E.A.; Hewedi, D.; Elkholy, H. Internet gaming disorder scale. Middle East Curr. Psychiatry 2018, 25, 13–15.

21. Paschke, K.; Sack, P.-M.; Thomasius, R. Validity and psychometric properties of the internet gaming disorder scale in three large independent samples of children and adolescents. Int. J. Environ. Res. Public Health 2021, 18, 1095.

22. Allen, J.J.; Anderson, C.A. Satisfaction and frustration of basic psychological needs in the real world and in video games predict internet gaming disorder scores and well-being. Comput. Hum. Behav. 2018, 84, 220–229.

23. Bernaldo-de-Quirós, M.; Labrador-Méndez, M.; Sánchez-Iglesias, I.; Labrador, F.J. Instrumentos de medida del trastorno de juego en internet en adolescentes y jóvenes según criterios DSM-5: Una revisión sistemática. Adicciones 2020, 32, 291–302.

24. Wartberg, L.; Kriston, L.; Thomasius, R. Internet gaming disorder and problematic social media use in a representative sample of German adolescents: Prevalence estimates, comorbid depressive symptoms and related psychosocial aspects. Comput. Hum. Behav. 2020, 103, 31–36.

25. Badenes-Ribera, L.; Rubio-Aparicio, M.; Sánchez-Meca, J. Meta-análisis de generalización de la fiabilidad. Inf. Psicológica 2020, 119, 17–32.

26. Santos, G. Validez y Confiabilidad del Cuestionario de Calidad de Vida SF-36 en Mujeres con LUPUS, Puebla. Bachelor’s Thesis, Universidad Autónoma de Puebla, Puebla, Mexico, 2017.

27. Merino-Soto, C.; Angulo-Ramos, M. Validity induction: Comments on the study of compliance questionnaire for rheumatology. Rev. Colomb. De Reumatol. 2020, 28, 312–313.

28. Merino-Soto, C.; Angulo-Ramos, M. Metric studies of the compliance questionnaire on rheumatology (CQR): A case of validity induction? Reumatol. Clínica 2021.

29. Reidl-Martínez, L. Confiabilidad en la medición. Investigación en Educación Médica 2013, 2, 107–111.

30. Sánchez-Meca, J.; López-Pina, J.A.; López López, J.A. Generalización de la fiabilidad: Un enfoque metaanalítico aplicado a la fiabilidad. Fisioterapia 2009, 31, 262–270.

31. Yoon, S.; Yang, Y.; Ro, E.; Ahn, W.Y.; Kim, J.; Shin, S.H.; Chey, J.; Choi, K.H. Reliability, and convergent and discriminant validity of gaming disorder scales: A meta-analysis. Front. Psychol. 2021, 12, 764209.

32. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

33. Rubio-Aparicio, M.; Badenes-Ribera, L.; Sánchez-Meca, J.; Fabris, M.A.; Longobardi, C. A reliability generalization meta-analysis of self-report measures of muscle dysmorphia. Clin. Psychol. Sci. Pract. 2020, 27, e12303.

34. Bonett, D.G.; Wright, T.A. Cronbach’s alpha reliability: Interval estimation, hypothesis testing, and sample size planning. J. Organ. Behav. 2015, 36, 3–15.

35. Savalei, V. A comparison of several approaches for controlling measurement error in small samples. Psychol. Methods 2019, 24, 352–370.

36. Hedges, L.V. A random effects model for effect sizes. Psychol. Bull. 1983, 93, 388–395.

37. Bonett, D.G. Varying coefficient meta-analytic methods for alpha reliability. Psychol. Methods 2010, 15, 368–385.

38. Huang, J.L.; Bowling, N.A.; Liu, M.; Li, Y. Detecting insufficient effort responding with an infrequency scale: Evaluating validity and participant reactions. J. Bus. Psychol. 2014, 30, 299–311.

39. Huang, J.L.; Liu, M.; Bowling, N.A. Insufficient effort responding: Examining an insidious confound in survey data. J. Appl. Psychol. 2015, 100, 828–845.

40. Schmidt, F.L.; Oh, I.-S.; Hayes, T.L. Fixed- versus random-effects models in meta-analysis: Model properties and an empirical comparison of differences in results. Br. J. Math. Stat. Psychol. 2009, 62, 97–128.

41. Veroniki, A.A.; Jackson, D.; Bender, R.; Kuss, O.; Langan, D.; Higgins, J.P.; Knapp, G.; Salanti, G. Methods to calculate uncertainty in the estimated overall effect size from a random-effects meta-analysis. Res. Synth. Methods 2019, 10, 23–43.

42. Raudenbush, S.W. Analyzing effect sizes: Random-effects models. In The Handbook of Research Synthesis and Meta-Analysis, 2nd ed.; Cooper, H., Hedges, L.V., Valentine, J.C., Eds.; Russell Sage Foundation: New York, NY, USA, 2009; pp. 295–316.

43. Knapp, G.; Hartung, J. Improved tests for a random effects meta-regression with a single covariate. Stat. Med. 2003, 22, 2693–2710.

44. Cochran, W.G. The combination of estimates from different experiments. Biometrics 1954, 10, 101–129.

45. IntHout, J.; Ioannidis, J.P.; Borm, G.F. The Hartung-Knapp-Sidik-Jonkman method for random effects meta-analysis is straightforward and considerably outperforms the standard DerSimonian-Laird method. BMC Med. Res. Methodol. 2014, 14, 25.

46. Fletcher, J. What is heterogeneity and is it important? BMJ 2007, 334, 94–96.

47. Higgins, J.P.T.; Thomas, J.; Chandler, J.; Cumpston, M.; Li, T.; Page, M.J.; Welch, V.A. Cochrane Handbook for Systematic Reviews of Interventions Version 6.3 (Updated February 2022); Cochrane 2022. Available online: www.training.cochrane.org/handbook (accessed on 11 March 2022).

48. Crippa, A.; Khudyakov, P.; Wang, M.; Orsini, N.; Spiegelman, D. A new measure of between-studies heterogeneity in meta-analysis. Stat. Med. 2016, 35, 3661–3675.

49. von Hippel, P.T. The heterogeneity statistic I2 can be biased in small meta-analyses. BMC Med. Res. Methodol. 2015, 15, 35.

50. Deeks, J.; Higgins, J.; Altman, D. Analyzing data and undertaking meta-analyses. In Cochrane Handbook for Systematic Reviews of Interventions Version 5.0.0; Higgins, J., Green, S., Eds.; Wiley: Chichester, UK, 2008.

51. Higgins, J.P.T.; Thompson, S.G.; Deeks, J.J.; Altman, D.G. Measuring inconsistency in meta-analyses. BMJ 2003, 327, 557–560.

52. Sánchez-Meca, J.; López-López, J.A.; López-Pina, J.A. Some recommended statistical analytic practices when reliability generalization studies are conducted. Br. J. Math. Stat. Psychol. 2013, 66, 402–425.

53. Romano, J.L.; Kromrey, J.D.; Hibbard, S.T. A Monte Carlo study of eight confidence interval methods for coefficient alpha. Educ. Psychol. Meas. 2010, 70, 376–393.

54. Bonett, D.G. Sample size requirements for testing and estimating coefficient alpha. J. Educ. Behav. Stat. 2002, 27, 335–340.

55. Whitehead, A.; Whitehead, J. A general parametric approach to the meta-analysis of randomized clinical trials. Stat. Med. 1991, 10, 1665–1677.

56. Shuster, J.J. Empirical vs. natural weighting in random effects meta-analysis. Stat. Med. 2010, 29, 1259–1265.

57. Ait Daoud, I. The Effect of Stress Susceptibility on the Relationship Between Attachment and Internet Gaming. Master’s Thesis, University of Minnesota, Minnesota, MN, USA, 2020.

58. Mills, D.J.; Milyavskaya, M.; Heath, N.L.; Derevensky, J.L. Gaming motivation and problematic video gaming: The role of needs frustration. Eur. J. Soc. Psychol. 2018, 48, 551–559.

59. Sioni, S.R.; Burleson, M.H.; Bekerian, D.A. Internet gaming disorder: Social phobia and identifying with your virtual self. Comput. Hum. Behav. 2017, 71, 11–15.

60. Stockdale, L.; Coyne, S.M. Parenting paused: Pathological video game use and parenting outcomes. Addict. Behav. Rep. 2019, 11, 100244.

61. Wartberg, L.; Kriston, L.; Kammerl, R. Associations of social support, friends only known through the internet, and health-related quality of life with internet gaming disorder in adolescence. Cyberpsychol. Behav. Soc. Netw. 2017, 20, 436–441.

62. Buiza-Aguado, C.; Alonso-Canovas, A.; Conde-Mateos, C.; Buiza-Navarrete, J.J.; Gentile, D. Problematic video gaming in a young Spanish population: Association with psychosocial health. Cyberpsychol. Behav. Soc. Netw. 2018, 21, 388–394.

63. Brooks, G.A.; Clark, L. Associations between loot box use, problematic gaming and gambling, and gambling-related cognitions. Addict. Behav. 2019, 96, 26–34.

64. Grajewski, P.; Dragan, M. Adverse childhood experiences, dissociation, and anxious attachment style as risk factors of gaming disorder. Addict. Behav. Rep. 2020, 11, 100269.

65. Liu, Y.; Gong, R.; Yu, Y.; Xu, C.; Yu, X.; Chang, R.; Wang, H.; Wang, S.; Wang, Q.; Cai, Y. Longitudinal predictors for incidence of internet gaming disorder among adolescents: The roles of time spent on gaming and depressive symptoms. J. Adolesc. 2021, 92, 1–9.

66. Mills, D.J.; Allen, J.J. Self-determination theory, internet gaming disorder, and the mediating role of self-control. Comput. Hum. Behav. 2020, 105, 106209.

67. Zemestani, M.; Niakan, F.; Shafeizadeh, K.; Griffiths, M.D. The relationship between psychobiological dimensions of personality and internet gaming disorder: The role of positive and negative affects. Curr. Psychol. 2021, 1–10.

68. Booth, M.A. Domestic Bliss, or Technological Diss? Problematic Media Use, Attachment, and Relationship Outcomes. Master’s Thesis, Brigham Young University, Provo, UT, USA, 2020.

69. Dedeaux, J.A. Internet Overuse and Its Impact on Deviant Behavior and Attitudes. Ph.D. Thesis, Wichita State University, Wichita, KS, USA, 2019.

70. Gibbons, J.A.; Bouldin, B. Videogame play and events are related to unhealthy emotion regulation in the form of low fading affect bias in autobiographical memory. Conscious. Cogn. 2019, 74, 102778.

71. Koning, I.M.; Peeters, M.; Finkenauer, C.; Van Den Eijnden, R.J.J.M. Bidirectional effects of Internet-specific parenting practices and compulsive social media and Internet game use. J. Behav. Addict. 2018, 7, 624–632.

72. Reyes, M.E.S.; Davis, R.D.; Lim, R.A.N.N.; Lim, K.R.S.; Paulino, R.F.; Carandang, A.M.D.; Azarraga, M.G.S. Five-factor model traits as predictors of pathological gaming among selected Filipino gamers. Psychol. Stud. 2019, 64, 213–220.

73. Van den Eijnden, R.; Koning, I.; Doornwaard, S.; van Gurp, F.; ter Bogt, T. The impact of heavy and disordered use of games and social media on adolescents’ psychological, social, and school functioning. J. Behav. Addict. 2018, 7, 697–706.

74. Zendle, D. Beyond loot boxes: A variety of gambling-like practices in video games are linked to both problem gambling and disordered gaming. PeerJ 2020, 8, e9466.

75. Bonett, D.G. Meta-analytic interval estimation for bivariate correlations. Psychol. Methods 2008, 13, 173–181.

76. Krizan, Z. Synthesizer 1.0: A varying-coefficient meta-analytic tool. Behav. Res. Methods 2010, 42, 863–870.

77. Mikolajewicz, N.; Komarova, S.V. Meta-analytic methodology for basic research: A practical guide. Front. Physiol. 2019, 10, 203.

78. Schulz, A.; Schürmann, C.; Skipka, G.; Bender, R. Performing meta-analyses with very few studies. In Meta-Research: Methods and Protocols, Methods in Molecular Biology; Evangelou, E., Veroniki, A.A., Eds.; Springer Science + Business Media: New York, NY, USA, 2021; Volume 2345, pp. 91–102.

79. Noma, H. boutliers: Outlier Detection and Influence Diagnostics for Meta-Analysis. R Package Version 1.1-1. 2020. Available online: https://CRAN.R-project.org/package=boutliers (accessed on 9 March 2022).

80. Viechtbauer, W. Conducting meta-analyses in R with the metafor package. J. Stat. Softw. 2010, 36, 1–48.

81. Bonett, D.G. vcmeta: Varying Coefficient Meta-Analysis. R Package Version 1.0.0. 2021. Available online: https://CRAN.R-project.org/package=vcmeta (accessed on 9 March 2022).

82. Harrer, M.; Cuijpers, P.; Furukawa, T.; Ebert, D.D. dmetar: Companion R Package for The Guide ‘Doing Meta-Analysis in R’. R Package Version 0.0.9000. 2019. Available online: http://dmetar.protectlab.org/ (accessed on 9 March 2022).

83. R Core Team. R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. 2021. Available online: https://www.R-project.org/ (accessed on 9 March 2022).

84. Sánchez-Meca, J.; López-Pina, J.A. The meta-analytic approach of reliability generalization. Rev. Española De Salud Pública 2008, 5, 37–64.

85. Nunnally, J.C. Psychometric Theory, 2nd ed.; McGraw-Hill: New York, NY, USA, 1987.

86. Zimmerman, D.W.; Zumbo, B.D.; Lalonde, C. Coefficient alpha as an estimate of test reliability under violation of two assumptions. Educ. Psychol. Meas. 1993, 53, 33–49.

87. Langan, D. Assessing heterogeneity in random-effects meta-analysis. In Meta-Research: Methods and Protocols, Methods in Molecular Biology; Evangelou, E., Veroniki, A.A., Eds.; Springer Science + Business Media: New York, NY, USA, 2021; Volume 2345, pp. 67–89.

88. Pinsoneault, T.B. Detecting random, partially random, and nonrandom Minnesota Multiphasic Personality Inventory-2 protocols. Psychol. Assess. 2007, 19, 159–164.

89. Evans, R.G.; Dinning, W.D. Response consistency among high F scale scorers on the MMPI. J. Clin. Psychol. 1983, 39, 246–248.

90. Huang, J.L.; Curran, P.G.; Keeney, J.; Poposki, E.M.; DeShon, R.P. Detecting and deterring insufficient effort responding to surveys. J. Bus. Psychol. 2012, 27, 99–114.

91. Meade, A.W.; Craig, S.B. Identifying careless responses in survey data. Psychol. Methods 2012, 17, 437–455.

92. Merino-Soto, C.; Martí-Vilar, M.; Serrano-Pastor, L. Careless responses and construct validity of Wong-Law emotional intelligence scale. PsyCh J. 2021, 10, 944–946.

93. Clark, M.E.; Gironda, R.J.; Young, R.W. Detection of back random responding: Effectiveness of MMPI-2 and personality assessment inventory validity indices. Psychol. Assess. 2003, 15, 223.

94. Godinho, A.; Kushnir, V.; Cunningham, J.A. Unfaithful findings: Identifying careless responding in addictions research. Addiction 2016, 111, 955–956.

95. Arias, V.B.; Garrido, L.E.; Jenaro, C.; Martínez-Molina, A.; Arias, B. A little garbage in, lots of garbage out: Assessing the impact of careless responding in personality survey data. Behav. Res. Methods 2020, 52, 2489–2505.

96. Merino-Soto, C.; Calderón-De la Cruz, G. Validez de estudios peruanos sobre estrés y burnout. Rev. Peru. De Med. Exp. Y Salud Publica 2018, 35, 353–354.

97. Flora, D.B. Your coefficient alpha is probably wrong, but which coefficient omega is right? A tutorial on using R to obtain better reliability estimates. Adv. Methods Pract. Psychol. Sci. 2020, 3, 484–501.

98. McDonald, R.P. Test Theory: A Unified Treatment; Erlbaum: Mahwah, NJ, USA; New York, NY, USA, 1999.

99. McNeish, D. Thanks coefficient alpha, we’ll take it from here. Psychol. Methods 2018, 23, 412–433.

100. Riley, R.D.; Higgins, J.P.T.; Deeks, J.J. Interpretation of random effects meta-analyses. BMJ 2011, 342, 964–967.

101. Brannick, M.T.; Potter, S.M.; Benitez, B.; Morris, S.B. Bias and precision of alternate estimators in meta-analysis: Benefits of blending Schmidt-Hunter and Hedges approaches. Organ. Res. Methods 2019, 22, 490–514.

102. Gronau, Q.F.; Heck, D.W.; Berkhout, S.W.; Haaf, J.M.; Wagenmakers, E.-J. A primer on Bayesian model-averaged meta-analysis. Adv. Methods Pract. Psychol. Sci. 2021, 4, 25152459211031256.

103. King, D.L.; Chamberlain, S.R.; Carragher, N.; Billieux, J.; Stein, D.; Mueller, K.; Potenza, M.N.; Rumpf, H.J.; Saunders, J.; Starcevic, V.; et al. Screening and assessment tools for gaming disorder: A comprehensive systematic review. Clin. Psychol. Rev. 2020, 77, 101831.

104. Sánchez-Meca, J.; Marín-Martínez, F.; López-López, J.A.; Núñez-Núñez, R.M.; Rubio-Aparicio, M.; López-García, J.J.; López-Pina, J.A.; Blázquez-Rincón, D.M.; López-Ibáñez, C.; López-Nicolás, R. Improving the reporting quality of reliability generalization meta-analyses: The REGEMA checklist. Res. Synth. Methods 2021, 12, 516–536.

| Study | N | α | se | 95% CI α: LL | 95% CI α: UL | Levels: >0.70 | Levels: >0.80 | Impact on Variability: VRATIO | Impact on Variability: Qvratio | Impact on Variability: TAURATIO | Impact on Variability: Qtauratio |
|---|---|---|---|---|---|---|---|---|---|---|---|
| IGDS9D | 18,828 | | | | | | | | | | |
| Study 1 | 989 | 0.830 | 0.00 | 0.814 | 0.845 | Y | Y | 10.07 * | 0.87 | 10.02 * | 0.82 |
| Study 2 | 394 | 0.850 | 0.00 | 0.826 | 0.871 | Y | Y | 10.04 * | 0.88 | 0.99 * | 0.83 |
| Study 3 | 1020 | 0.820 | 0.01 | 0.803 | 0.836 | Y | Y | 10.08 * | 0.89 | 10.03 * | 0.84 |
| Study 4 | 204 | 0.610 | 0.00 | 0.523 | 0.684 | N | N | 0.92 | 0.92 | 0.88 | 0.88 |
| Study 5 | 708 | 0.810 | 0.04 | 0.788 | 0.830 | Y | NC | 1.09 * | 0.88 | 1.04 * | 0.83 |
| Study 6 | 354 | 0.740 | 0.01 | 0.697 | 0.778 | NC | N | 1.10 * | 0.88 | 1.05 * | 0.83 |
| Study 7 | 538 | 0.730 | 0.02 | 0.694 | 0.763 | NC | N | 1.09 * | 0.89 | 1.04 * | 0.84 |
| Study 8 | 257 | 0.820 | 0.01 | 0.784 | 0.851 | Y | NC | 1.08 * | 0.88 | 1.03 * | 0.83 |
| Study 9 | 310 | 0.840 | 0.01 | 0.811 | 0.865 | Y | Y | 1.06 * | 0.89 | 1.00 * | 0.84 |
| Study 10 | 855 | 0.810 | 0.01 | 0.790 | 0.828 | Y | NC | 1.09 * | 0.89 | 1.04 * | 0.83 |
| Study 11 | 1306 | 0.690 | 0.01 | 0.664 | 0.714 | NC | N | 1.04 * | 0.89 | 0.99 * | 0.84 |
| Study 12 | 351 | 0.810 | 0.01 | 0.778 | 0.838 | Y | NC | 1.09 * | 0.90 | 1.04 * | 0.84 |
| Study 13 | 1001 | 0.660 | 0.01 | 0.627 | 0.691 | N | N | 0.98 * | 0.90 | 0.93 * | 0.84 |
| Study 14 | 1081 | 0.790 | 0.01 | 0.771 | 0.808 | Y | NC | 1.11 * | 0.86 | 1.05 * | 0.81 |
| Study 15 | 2078 | 0.740 | 0.01 | 0.723 | 0.756 | N | N | 1.10 * | 0.88 | 1.05 * | 0.83 |
| Study 16 | 1121 | 0.830 | 0.00 | 0.815 | 0.844 | Y | Y | 1.07 * | 0.86 | 1.02 * | 0.81 |
| Study 17 | 3938 | 0.840 | 0.00 | 0.832 | 0.847 | Y | Y | 1.06 * | 0.88 | 1.00 * | 0.82 |
| Study 18 | 762 | 0.780 | 0.00 | 0.756 | 0.803 | Y | N | 1.11 * | 0.87 | 1.06 * | 0.81 |
| Study 19 | 777 | 0.720 | 0.01 | 0.689 | 0.748 | NC | N | 1.09 * | 0.88 | 1.03 * | 0.83 |
| Study 20 | 784 | 0.560 | 0.01 | 0.512 | 0.604 | N | N | 0.68 | 0.90 | 0.63 | 0.85 |
| IGDS9P | 2787 | | | | | | | | | | |
| Study 1 | 923 | 0.95 | 0.002 | 0.945 | 0.955 | Y | Y | 1.09 * | 0.26 | 0.81 | 0.26 |
| Study 2 | 457 | 0.93 | 0.005 | 0.920 | 0.939 | Y | Y | 1.73 * | 0.21 | 0.45 | 0.21 |
| Study 9 | 1029 | 0.85 | 0.007 | 0.836 | 0.863 * | Y | Y | 0.61 * | 0.25 | 1.30 | 0.25 |
| Study 18 | 378 | 0.89 | 0.009 | 0.872 | 0.906 | Y | Y | 1.87 * | 0.21 | 1.42 | 0.21 |
| IGDS27D | 2919 | | | | | | | | | | |
| Study 1 | 989 | 0.93 | 0.003 | 0.9234 | 0.936 | Y | Y | 1.64 ** | 0.27 | 1.23 * | 0.17 |
| Study 14 | 1026 | 0.90 | 0.004 | 0.8908 | 0.908 | Y | Y | 2.02 * | 0.17 | 1.52 * | 0.11 |
| Study 16 | 423 | 0.93 | 0.004 | 0.9198 | 0.939 | Y | Y | 1.64 * | 0.18 | 1.23 * | 0.10 |
| Study 26 | 481 | 0.81 | 0.012 | 0.7842 | 0.833 * | Y | NC | 0.12 | 0.22 | 0.09 | 0.15 |
| IGDS27P | 2454 | | | | | | | | | | |
| Study 1 | 923 | 0.95 | 0.001 | 0.95 | 0.96 | Y | Y | 0.31 | 0.64 | 0.20 | 0.49 |
| Study 2 | 457 | 0.94 | 0.002 | 0.93 | 0.94 | Y | Y | 1.03 * | 0.64 | 0.80 * | 0.49 |
| Study 5 | 315 | 0.97 | 0.002 | 0.96 | 0.97 | Y | Y | 1.63 * | 0.78 | 1.33 * | 0.60 |
| Study 13 | 272 | 0.96 | 0.003 | 0.95 | 0.96 | Y | Y | 1.62 * | 0.78 | 1.32 * | 0.60 |

| Study 19 | 487 | 0.96 | 0.003 | 0.95 | 0.96 | Y | Y | 1.64 * | 0.64 | 1.33 * | 0.49 |
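
The confidence limits in the table above can be approximated from each study's α and N. The following is a minimal sketch, not the authors' procedure: it assumes a Bonett-type ln(1 − α) transformation and a 9-item scale for the IGDS9 rows, neither of which is stated in the table itself. The function name `bonett_alpha_ci` and its parameters are hypothetical.

```python
import math
from statistics import NormalDist


def bonett_alpha_ci(alpha, n, n_items, level=0.95):
    """Approximate CI for Cronbach's alpha on the ln(1 - alpha) scale.

    Assumption: the sampling variance of ln(1 - alpha) is taken as
    2J / ((J - 1)(n - 2)), with J items and n respondents.
    """
    z = NormalDist().inv_cdf(0.5 + level / 2)  # two-sided critical value
    t = math.log(1.0 - alpha)                  # transformed coefficient
    se = math.sqrt(2.0 * n_items / ((n_items - 1) * (n - 2)))
    # Back-transform; the larger transformed bound maps to the lower alpha.
    lower = 1.0 - math.exp(t + z * se)
    upper = 1.0 - math.exp(t - z * se)
    return lower, upper


# IGDS9D, Study 1: alpha = 0.830, N = 989, assumed 9 items
lo, hi = bonett_alpha_ci(alpha=0.830, n=989, n_items=9)
print(f"{lo:.3f}, {hi:.3f}")
```

Under these assumptions the interval for IGDS9D Study 1 comes out at roughly 0.813 to 0.845, close to the tabulated 0.814 to 0.845. The small difference in the lower limit suggests the tabulated values may come from a slightly different interval method.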

| | K | α+ | 95% CI | | Heterogeneity | | | | | |

|---|---|---|---|---|---|---|---|---|---|---|

| | | | Confidence LL, UL | Prediction LL, UL | Q (df) | τ (τ²) | I² (95% CI) | Rb (95% CI) | CVb (95% CI) | CVw |

| Random coefficients model | ||||||||||

| IGDS9D | 20 | 0.775 | 0.74, 0.80 | 0.56, 0.88 | 726.40 ** (19) | 0.307 (0.094) | 97.43 (95.5, 98.8) | 96.1 (96.2, 96.4) | 0.206 (0.20, 0.20) | 0.864 |

| IGDS9P | 4 | 0.912 | 0.81, 0.95 | 0.51, 0.98 | 279.53 ** (3) | 0.488 (0.233) | 98.55 (95.4, 99.8) | 98.4 (98.3, 98.4) | 0.198 (0.19, 0.20) | 0.408 |

| IGDS27D | 4 | 0.908 | 0.79, 0.95 | 0.48, 0.98 | 174.98 ** (3) | 0.465 (0.217) | 98.63 (95.7, 99.9) | 98.5 (98.3, 98.6) | 0.201 (0.19, 0.20) | 0.383 |

| IGDS27P | 5 | 0.958 | 0.943, 0.969 | 0.913, 0.980 | 79.41 ** (4) | 0.244 (0.05) | 92.99 (80.6, 99.1) | 92.2 (93.1, 93.5) | 0.07 (0.07, 0.07) | 0.473 |

| Varying coefficients model | ||||||||||

| | K | α′+ | Confidence LL, UL | | | | | | | |

| IGDS9D | 20 | 0.764 | 0.755, 0.775 | - | - | - | - | - | - | - |

| IGDS9P | 4 | 0.905 | 0.889, 0.991 | - | - | - | - | - | - | - |

| IGDS27D | 4 | 0.892 | 0.885, 0.899 | - | - | - | - | - | - | - |

| IGDS27P | 5 | 0.958 | 0.955, 0.960 | - | - | - | - | - | - | - |

| IGDS9D | Kmeans 1 (ng = 3) | Kmeans 2 (ng = 2) |

|---|---|---|

| Study 1 | 3 | 1 |

| Study 2 | 3 | 1 |

| Study 3 | 3 | 1 |

| Study 4 | 1 | 2 |

| Study 5 | 3 | 1 |

| Study 6 | 2 | 2 |

| Study 7 | 2 | 2 |

| Study 8 | 3 | 1 |

| Study 9 | 3 | 1 |

| Study 10 | 3 | 1 |

| Study 11 | 2 | 2 |

| Study 12 | 3 | 1 |

| Study 13 | 2 | 2 |

| Study 14 | 3 | 1 |

| Study 15 | 2 | 2 |

| Study 16 | 3 | 1 |

| Study 17 | 3 | 1 |

| Study 18 | 3 | 1 |

| Study 19 | 2 | 2 |

| Study 20 | 1 | 2 |

| Wc SSC | 91.3% | 71.8% |

| IGDS9P | Kmeans 1 (ng = 2) | |

| Study 1 | 1 | - |

| Study 2 | 2 | - |

| Study 3 | 1 | - |

| Study 4 | 1 | - |

| Wc SSC | 83.1% | - |

| IGDS27D | Kmeans 1 (ng = 2) | |

| Study 1 | 2 | - |

| Study 2 | 2 | - |

| Study 3 | 2 | - |

| Study 4 | 1 | - |

| Wc SSC | 93.8% | - |

| IGDS27P | Kmeans 1 (ng = 2) | |

| Study 1 | 1 | - |

| Study 2 | 2 | - |

| Study 3 | 1 | - |

| Study 4 | 1 | - |

| Wc SSC | 37.5% | - |

| | 2 Clusters | | 3 Clusters | |

|---|---|---|---|---|

| | χ² (df) | Cramér's V | χ² (df) | Cramér's V |

| Language | 16.38 NS (19) | 0.373 | 4.97 NS (19) | 0.343 |

| English language | 2.78 NS (19) | 0.187 | 8.59 NS (19) | 0.296 |

| Application mode | 5.87 NS (19) | 0.308 | 9.28 NS (19) | 0.341 |

| Sample | 7.69 NS (19) | 0.334 | 6.26 NS (19) | 0.268 |

| Gamer condition | 4.97 NS (19) | 0.254 | 8.06 NS (19) | 0.291 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gisbert-Pérez, J.; Martí-Vilar, M.; Merino-Soto, C.; Vallejos-Flores, M. Reliability Generalization Meta-Analysis of Internet Gaming Disorder Scale. Healthcare 2022, 10, 1992. https://doi.org/10.3390/healthcare10101992
