Basic Empathy Scale: A Systematic Review and Reliability Generalization Meta-Analysis

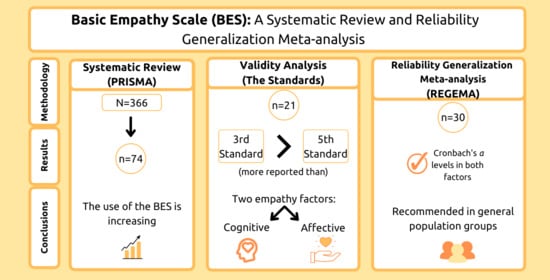

Abstract

1. Introduction

1.1. The Development of the BES

1.2. Description of the Scale

1.3. Proposals for the Study of the Scale Properties

1.4. The Current Study

2. Materials and Methods

2.1. Systematic Review

2.1.1. Search Strategy and Information Sources

2.1.2. Eligibility Criteria and Selection Process

2.1.3. Data Collection Process

2.2. Description of the Validity Study

2.3. Meta-Analysis

2.3.1. Article Eligibility

2.3.2. Description and Assessment of Cronbach’s α Coefficients

2.3.3. Reliability Generalization

2.4. Corroboration of the Meta-Analytical Report

3. Results

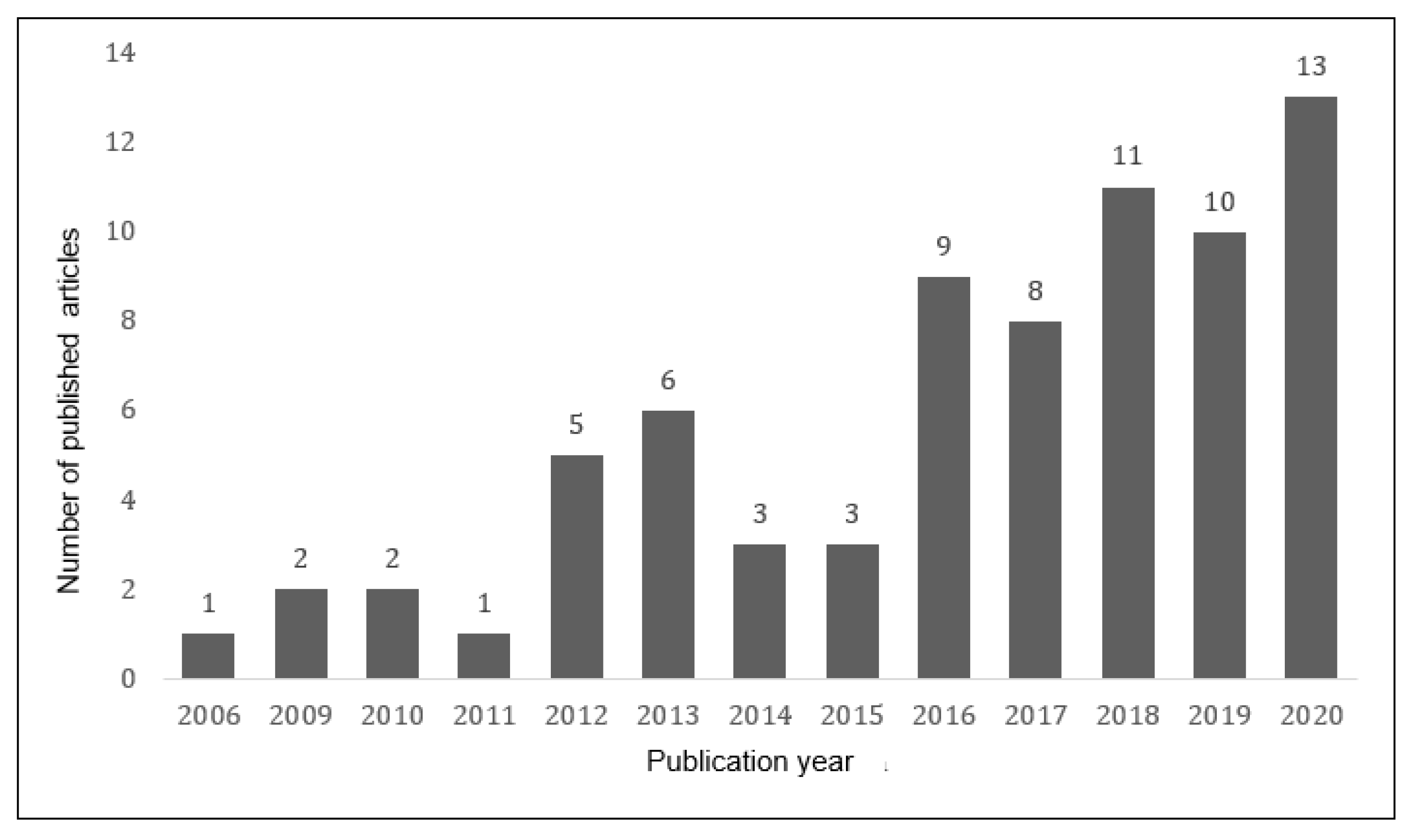

3.1. Systematic Review

3.1.1. Study Selection and Study Characteristics

3.1.2. Results of Syntheses

3.2. Validity Analysis

3.2.1. Evidence Based on Test Content

3.2.2. Evidence Based on Response Processes

3.2.3. Evidence Based on Internal Structure

3.2.4. Evidence Based on Relations to Other Variables

3.2.5. Evidence Based on the Consequences of Testing

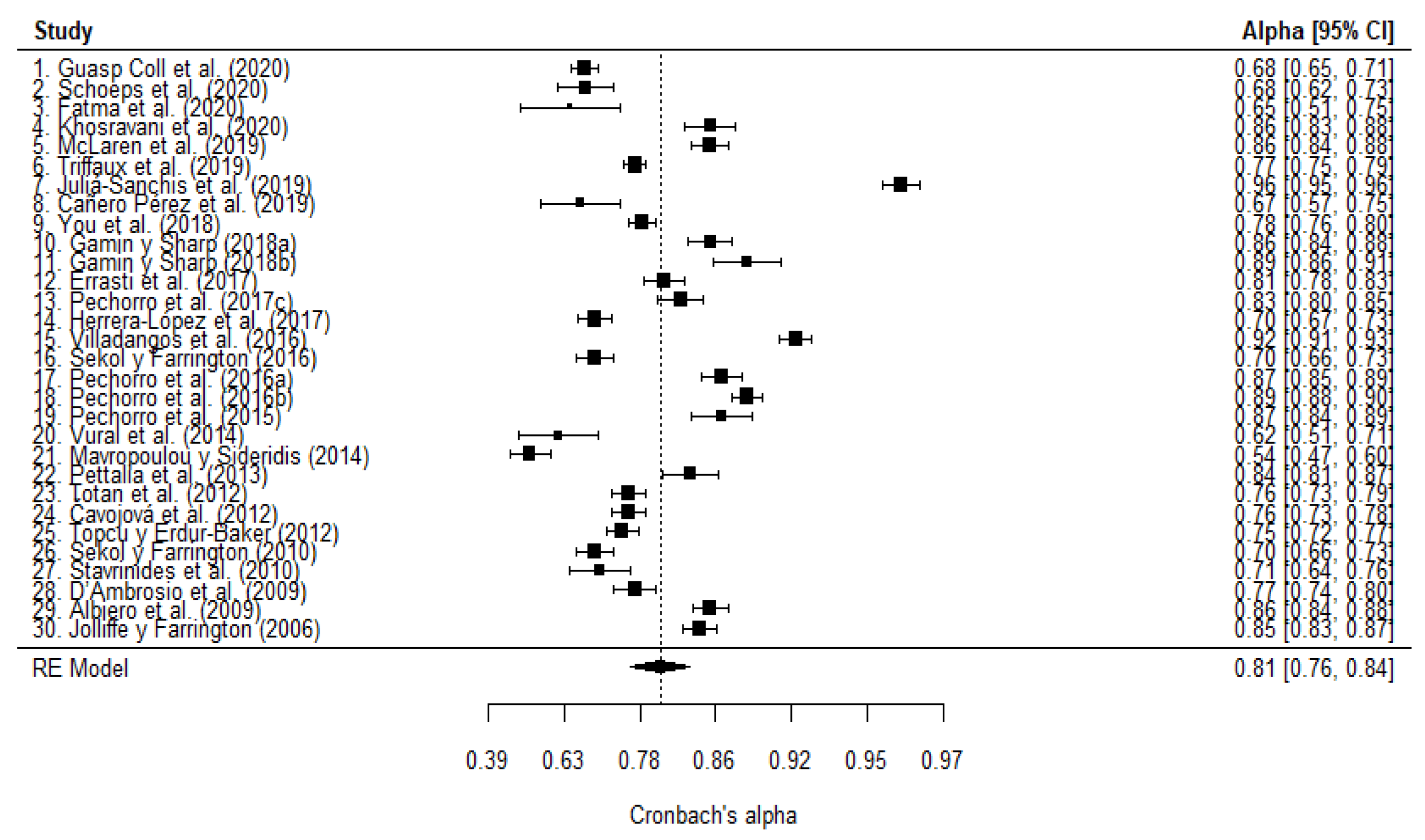

3.3. Meta-Analysis

3.3.1. Reliability Report

3.3.2. Reliability Levels Description

3.3.3. Reliability Generalization and General Heterogeneity Assessment

3.3.4. Heterogeneity Assessment: Moderator Analysis

3.3.5. Robust Estimation

4. Discussion

4.1. Limitations and Future Research

4.2. Practical Implications

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Study | Test Content | Response Processes | Internal Structure: Factor Analysis | Internal Structure: Reliability | Internal Structure: Test-Retest | Internal Structure: Invariance | Internal Structure: Equivalence with Other Versions | Relations to Other Variables | Consequences of Testing |
|---|---|---|---|---|---|---|---|---|---|
| Zych et al. (2020) | Translation reviewed by experts. | No. | CFA. | Cronbach’s alpha. | No. | No. | Original version compared with the reduced version within the same study. | Convergent evidence: social and emotional competencies and moral disengagement. | No. |
| McLaren et al. (2019) | No. | No. | CFA. | Cronbach’s alpha. | No. | Group: gender. Level: intercepts. | Original version compared with the reduced version within the same study. | Convergent evidence: psychopathy, callous-unemotional traits, and behavioral and emotional disorders. | No. |
| Ventura-León et al. (2019) | No. | No. | 1st CFA; 2nd SEM. | McDonald’s omega. | No. | Group: gender. Level: residual. | No. | No. | No. |
| Merino-Soto et al. (2019) | No. | No. | 1st CFA; 2nd SEM. | Cronbach’s alpha and McDonald’s omega. | No. | Group: gender and educational level. Level: residual. | No. | No. | No. |
| You et al. (2018) | Translation reviewed by experts. | No. | CFA. | Cronbach’s alpha. | No. | Group: gender. Level: scalar. | No. | Convergent evidence: bullying behavior and school attachment. | No. |
| Pechorro et al. (2017b) | Translation reviewed by experts. | No. | CFA. | Cronbach’s alpha and McDonald’s omega. | No. | No. | Original version compared with the reduced version within the same study. | Convergent evidence: psychopathy, callous-unemotional traits, aggression, and behavioral disorders. | No. |
| Herrera-López et al. (2017) | The test was piloted with 60 participants before administration, but this was not justified as a source of validity evidence. | The test was piloted with 60 participants before administration, but this was not justified as a source of validity evidence. | 1st CFA; 2nd SEM. | Cronbach’s alpha and McDonald’s omega. | No. | Group: gender. Level: residual. | No. | Convergent evidence: social and normative adjustment. | No. |
| Bensalah et al. (2016) | Reviewed by participants who had previously received the instrument. | No. | CFA. | Cronbach’s alpha. | 1 month. | No. | Original version compared with the reduced version within the same study. | Discriminant evidence: social desirability. | No. |
| Anastácio et al. (2016) | Translation reviewed by experts. | No. | CFA. | Cronbach’s alpha. | No. | Group: gender and age. Level: scalar. | No. | Convergent evidence: interpersonal conflict and social skills. | No. |
| Villadangos et al. (2016) | No. | No. | CFA. | Cronbach’s alpha. | No. | No. | No. | Convergent evidence: kindness. Discriminant evidence: narcissism and psychoticism. | No. |
| Heynen et al. (2016) | No. | No. | CFA. | Cronbach’s alpha. | No. | No. | Original version compared with the reduced version within the same study. | Convergent evidence: callous-unemotional traits. | No. |
| Merino-Soto and Grimaldo-Muchotrigo (2015) | No. | No. | CFA. | Cronbach’s alpha and McDonald’s omega. | No. | No. | No. | No. | No. |
| Pechorro et al. (2015) | Translation reviewed by experts. | No. | CFA. | Cronbach’s alpha. | No. | No. | Original version compared with the reduced version within the same study. | Convergent evidence: social anxiety. Discriminant evidence: psychopathy, callous-unemotional traits, and aggression. | No. |
| Sánchez-Pérez et al. (2014) | No. | No. | CFA. | Cronbach’s alpha. | No. | No. | Original version compared with the reduced version within the same study. | Convergent evidence: family environment, family dissatisfaction, parenting, social skills, and aggression. | Empathy reported by children and empathy reported by their parents should be treated as complementary, not equivalent, measures (clinical implications). |
| Carré et al. (2013) | Translation reviewed by experts. | No. | CFA. | Cronbach’s alpha. | 7 weeks. | No. | Original version compared with the reduced version within the same study. | Convergent evidence: empathy, alexithymia, and emotional state. Discriminant evidence: social desirability. | No. |
| Salas-Wright et al. (2013) | Translation reviewed by experts. | Cognitive interviews conducted with participants. | 1st CFA; 2nd MGFA. | Cronbach’s alpha. | No. | Group: level of development. Level: metric. | Results compared with those of other validations. | Convergent evidence: crime, violence, and antisocial behavior. | No. |
| Geng et al. (2012) | Translation reviewed by experts. | A pilot group was questioned item by item, although the authors did not state that this was intended as validity evidence. | 1st EFA; 2nd CFA. | Cronbach’s alpha. | 4 weeks. | No. | Results compared with those of other validations; original version compared with the reduced version within the same study. | Convergent evidence: strengths and difficulties. | No. |
| Čavojová et al. (2012) | Translation reviewed by experts. | No. | 1st CFA; 2nd SEM. | Cronbach’s alpha. | No. | Group: gender. Level: metric. | No. | Convergent evidence: empathy. Discriminant evidence: theory of mind. | No. |
| D’Ambrosio et al. (2009) | No. | No. | 1st CFA; 2nd SEM. | Cronbach’s alpha. | 3 weeks. | No. | Results compared with those of other validations. | Convergent evidence: empathy and alexithymia. Discriminant evidence: social desirability. | No. |
| Albiero et al. (2009) | Translation reviewed by experts. | No. | CFA. | Cronbach’s alpha. | No. | No. | Results compared with those of other validations. | Convergent evidence: emotional empathy, sympathy, and prosocial behavior. Discriminant evidence: social desirability. | No. |
| Jolliffe and Farrington (2006a) | Explanation of how the items were created. | No. | 1st EFA; 2nd CFA. | Cronbach’s alpha. | No. | No. | Original version compared with the reduced version within the same study. | Convergent evidence: sympathy, alexithymia, intelligence, impulsivity, personality, parental supervision, and behavioral response to witnessing bullying. Discriminant evidence: social desirability. | No. |

Appendix B

| Study | Cognitive Empathy α | Cognitive 95% CI Lower Limit | Cognitive 95% CI Upper Limit | Cognitive Level (0.70) | Cognitive Level (0.80) | Affective Empathy α | Affective 95% CI Lower Limit | Affective 95% CI Upper Limit | Affective Level (0.70) | Affective Level (0.80) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. Guasp Coll et al. (2020) | 0.68 | 0.65 | 0.71 | I | N | 0.68 | 0.65 | 0.71 | I | N |
| 2. Schoeps et al. (2020) | 0.76 | 0.71 | 0.80 | Y | I | 0.68 | 0.62 | 0.73 | I | N |
| 3. Fatma et al. (2020) | 0.782 | 0.70 | 0.84 | Y | I | 0.649 | 0.51 | 0.75 | I | N |
| 4. Khosravani et al. (2020) | 0.88 | 0.86 | 0.90 | Y | Y | 0.86 | 0.83 | 0.88 | Y | Y |
| 5. McLaren et al. (2019) | 0.78 | 0.75 | 0.81 | Y | I | 0.86 | 0.84 | 0.88 | Y | Y |
| 6. Triffaux et al. (2019) | 0.66 | 0.63 | 0.68 | N | N | 0.77 | 0.75 | 0.79 | Y | N |
| 7. Juliá-Sanchis et al. (2019) | 0.96 | 0.95 | 0.96 | Y | Y | 0.96 | 0.95 | 0.96 | Y | Y |
| 8. Cañero Pérez et al. (2019) | 0.77 | 0.70 | 0.82 | Y | I | 0.67 | 0.57 | 0.75 | I | N |
| 9. You et al. (2018) | 0.83 | 0.81 | 0.85 | Y | Y | 0.78 | 0.76 | 0.80 | Y | I |
| 10. Gambin and Sharp (2018a) | 0.79 | 0.76 | 0.82 | Y | I | 0.86 | 0.84 | 0.88 | Y | Y |
| 11. Gambin and Sharp (2018b) | 0.82 | 0.78 | 0.86 | Y | I | 0.89 | 0.86 | 0.91 | Y | Y |
| 12. Errasti et al. (2017) | 0.78 | 0.75 | 0.81 | Y | I | 0.81 | 0.78 | 0.83 | Y | I |
| 13. Pechorro et al. (2017c) | 0.91 | 0.90 | 0.92 | Y | Y | 0.83 | 0.80 | 0.85 | Y | Y |
| 14. Herrera-López et al. (2017) | 0.67 | 0.63 | 0.70 | I | N | 0.70 | 0.67 | 0.73 | I | N |
| 15. Villadangos et al. (2016) | 0.96 | 0.96 | 0.96 | Y | Y | 0.92 | 0.91 | 0.93 | Y | Y |
| 16. Sekol and Farrington (2016) | 0.68 | 0.64 | 0.72 | I | N | 0.70 | 0.66 | 0.73 | I | N |
| 17. Pechorro et al. (2016a) | 0.90 | 0.88 | 0.91 | Y | Y | 0.87 | 0.85 | 0.89 | Y | Y |
| 18. Pechorro et al. (2016b) | 0.93 | 0.92 | 0.94 | Y | Y | 0.89 | 0.88 | 0.90 | Y | Y |
| 19. Pechorro et al. (2015) | 0.90 | 0.88 | 0.92 | Y | Y | 0.87 | 0.84 | 0.89 | Y | Y |
| 20. Vural et al. (2014) | 0.78 | 0.71 | 0.83 | Y | I | 0.62 | 0.51 | 0.71 | I | N |
| 21. Mavropoulou and Sideridis (2014) | 0.66 | 0.61 | 0.70 | I | N | 0.54 | 0.47 | 0.60 | N | N |
| 22. Pettalia et al. (2013) | 0.70 | 0.64 | 0.75 | I | N | 0.84 | 0.81 | 0.87 | Y | Y |
| 23. Totan et al. (2012) | 0.80 | 0.78 | 0.82 | Y | I | 0.76 | 0.73 | 0.79 | Y | N |
| 24. Čavojová et al. (2012) | 0.70 | 0.67 | 0.73 | I | N | 0.76 | 0.73 | 0.78 | Y | N |
| 25. Topcu and Erdur-Baker (2012) | 0.81 | 0.79 | 0.83 | Y | I | 0.75 | 0.72 | 0.77 | Y | N |
| 26. Sekol and Farrington (2010) | 0.68 | 0.64 | 0.72 | I | N | 0.70 | 0.66 | 0.73 | I | N |
| 27. Stavrinides et al. (2010) | 0.80 | 0.75 | 0.84 | Y | I | 0.71 | 0.64 | 0.76 | I | N |
| 28. D’Ambrosio et al. (2009) | 0.66 | 0.61 | 0.70 | I | N | 0.77 | 0.74 | 0.80 | Y | I |
| 29. Albiero et al. (2009) | 0.74 | 0.71 | 0.77 | Y | N | 0.86 | 0.84 | 0.88 | Y | Y |
| 30. Jolliffe and Farrington (2006) | 0.79 | 0.77 | 0.81 | Y | I | 0.85 | 0.83 | 0.87 | Y | Y |
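The 95% limits above appear reproducible with a normal-theory interval computed on the ln(1 − α) scale, where the variance of ln(1 − α̂) is approximated by 2k/[(k − 1)(n − 2)]; this is the log-transformation approach associated with Bonett, although the exact procedure is not restated in this appendix. The minimal sketch below assumes k = 9 cognitive and k = 11 affective items (the 20-item BES) and takes n from Appendix C; studies that used shortened or revised forms would need their own k. The Y/I/N codes are read here as an inference from the table, not a stated rule: Y if the lower limit (rounded to two decimals) is at or above the threshold, N if the upper limit is below it, and I (indeterminate) when the interval contains the threshold. The names `alpha_ci` and `level_code` are hypothetical.

```python
import math
from statistics import NormalDist


def alpha_ci(alpha, n, k, level=0.95):
    """Approximate CI for Cronbach's alpha on the ln(1 - alpha) scale.

    Assumes Var[ln(1 - alpha)] ~= 2k / ((k - 1)(n - 2)); k = items, n = sample size.
    """
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    se = math.sqrt(2 * k / ((k - 1) * (n - 2)))
    t = math.log(1 - alpha)
    # Adding z*se to ln(1 - alpha) lowers alpha, so the bounds swap on back-transformation.
    lower = 1 - math.exp(t + z * se)
    upper = 1 - math.exp(t - z * se)
    return lower, upper


def level_code(lower, upper, threshold, decimals=2):
    """Hypothetical reading of the Y/I/N codes: compare rounded limits with a threshold."""
    lo, hi = round(lower, decimals), round(upper, decimals)
    if lo >= threshold:
        return "Y"  # whole interval at or above the threshold
    if hi < threshold:
        return "N"  # whole interval below the threshold
    return "I"  # interval contains the threshold: indeterminate


# Spot check against Schoeps et al. (2020): cognitive subscale, n = 250, k = 9 (assumed).
lo, hi = alpha_ci(0.76, n=250, k=9)
print(round(lo, 2), round(hi, 2), level_code(lo, hi, 0.70), level_code(lo, hi, 0.80))
```

Under these assumptions the spot check prints `0.71 0.8 Y I`, in line with row 2. Rounding before classifying matters: for Fatma et al. (2020) the unrounded cognitive lower limit is just under 0.70, yet the table codes it Y, which is what the rounded comparison gives.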

Appendix C

| Study | Number of Subjects | Sex | Type | Generational Group | Language of the Instrument | Cognitive Empathy Cronbach’s α | Affective Empathy Cronbach’s α |
|---|---|---|---|---|---|---|---|
| 1. Guasp Coll et al. (2020) | 991 | Mixed | General | Adolescents | Spanish | 0.68 | 0.68 |
| 2. Schoeps et al. (2020) | 250 | Mixed | General | Young | Spanish | 0.76 | 0.68 |
| 3. Fatma et al. (2020) | 80 | Mixed | General | Adults | Turkish | 0.782 | 0.649 |
| 4. Khosravani et al. (2020) | 300 | Mixed | Special | Adults | Persian | 0.88 | 0.86 |
| 5. McLaren et al. (2019) | 555 | Mixed | Special | Adults | English | 0.78 | 0.86 |
| 6. Triffaux et al. (2019) | 1602 | Mixed | General | Young | French | 0.66 | 0.77 |
| 7. Juliá-Sanchis et al. (2019) | 544 | Mixed | General | Adults | Spanish | 0.96 | 0.96 |
| 8. Cañero Pérez et al. (2019) | 122 | Mixed | General | Young | Spanish | 0.77 | 0.67 |
| 9. You et al. (2018) | 993 | Mixed | General | Adolescents | Korean | 0.83 | 0.78 |
| 10. Gambin and Sharp (2018a) | 403 | Mixed | Special | Adolescents | English | 0.79 | 0.86 |
| 11. Gambin and Sharp (2018b) | 177 | Mixed | Special | Adolescents | English | 0.82 | 0.89 |
| 12. Errasti et al. (2017) | 503 | Mixed | General | Adolescents | Spanish | 0.78 | 0.81 |
| 13. Pechorro et al. (2017c) | 377 | Women | Special | Adolescents | Portuguese | 0.91 | 0.83 |
| 14. Herrera-López et al. (2017) | 747 | Mixed | General | Adolescents | Spanish | 0.67 | 0.70 |
| 15. Villadangos et al. (2016) | 752 | Mixed | General | Young | Spanish | 0.96 | 0.92 |
| 16. Sekol and Farrington (2016) | 601 | Mixed | Special | Young | English | 0.68 | 0.70 |
| 17. Pechorro et al. (2016a) | 438 | Mixed | Special | Young | Portuguese | 0.90 | 0.87 |
| 18. Pechorro et al. (2016b) | 782 | Mixed | Special | Young | Portuguese | 0.93 | 0.89 |
| 19. Pechorro et al. (2015) | 221 | Men | Special | Young | Portuguese | 0.90 | 0.87 |
| 20. Vural et al. (2014) | 124 | Mixed | General | Adults | Turkish | 0.78 | 0.62 |
| 21. Mavropoulou and Sideridis (2014) | 475 | Mixed | General | Adolescents | English | 0.66 | 0.54 |
| 22. Pettalia et al. (2013) | 260 | Mixed | General | Adolescents | English | 0.70 | 0.84 |
| 23. Totan et al. (2012) | 698 | Mixed | General | Young | Turkish | 0.80 | 0.76 |
| 24. Čavojová et al. (2012) | 747 | Mixed | General | Adolescents | Slovakian | 0.70 | 0.76 |
| 25. Topcu and Erdur-Baker (2012) | 795 | Mixed | General | Adolescents | Turkish | 0.81 | 0.75 |
| 26. Sekol and Farrington (2010) | 601 | Mixed | Special | Young | English | 0.68 | 0.70 |
| 27. Stavrinides et al. (2010) | 205 | Mixed | General | Adolescents | Greek | 0.80 | 0.71 |
| 28. D’Ambrosio et al. (2009) | 446 | Mixed | General | Adolescents | French | 0.66 | 0.77 |
| 29. Albiero et al. (2009) | 655 | Mixed | General | Adolescents | Italian | 0.74 | 0.86 |
| 30. Jolliffe and Farrington (2006) | 720 | Mixed | General | Adolescents | English | 0.79 | 0.85 |
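Sections 2.3.3 and 3.3.3 describe a reliability generalization analysis, but the pooling model itself is not restated next to these data. As a minimal sketch only, the cognitive-empathy coefficients above can be pooled with a DerSimonian–Laird random-effects model on the ln(1 − α) scale, assuming Var[ln(1 − α̂)] ≈ 2k/[(k − 1)(n − 2)] with k = 9 items for every study, and reporting Cochran's Q, τ² and I². This is a textbook estimator swapped in for illustration, not necessarily the authors' model: the reference list also cites the Hartung–Knapp adjustment, which would widen the interval, and the equal-k assumption may not hold for adapted or shortened versions. The names `COGNITIVE` and `pool_alpha_dl` are hypothetical.

```python
import math
from statistics import NormalDist

# (n, cognitive alpha) for the 30 rows of Appendix C, in table order.
COGNITIVE = [
    (991, 0.68), (250, 0.76), (80, 0.782), (300, 0.88), (555, 0.78),
    (1602, 0.66), (544, 0.96), (122, 0.77), (993, 0.83), (403, 0.79),
    (177, 0.82), (503, 0.78), (377, 0.91), (747, 0.67), (752, 0.96),
    (601, 0.68), (438, 0.90), (782, 0.93), (221, 0.90), (124, 0.78),
    (475, 0.66), (260, 0.70), (698, 0.80), (747, 0.70), (795, 0.81),
    (601, 0.68), (205, 0.80), (446, 0.66), (655, 0.74), (720, 0.79),
]


def pool_alpha_dl(studies, k, level=0.95):
    """DerSimonian-Laird random-effects pooling of alpha on the ln(1 - alpha) scale.

    studies: (n, alpha) pairs; k: items per subscale (assumed equal across studies).
    """
    if len(studies) < 2:
        raise ValueError("need at least two studies")
    t = [math.log(1 - a) for _, a in studies]
    v = [2 * k / ((k - 1) * (n - 2)) for n, _ in studies]  # sampling variances
    w = [1 / vi for vi in v]
    sw = sum(w)
    t_fixed = sum(wi * ti for wi, ti in zip(w, t)) / sw
    q = sum(wi * (ti - t_fixed) ** 2 for wi, ti in zip(w, t))  # Cochran's Q
    df = len(t) - 1
    c = sw - sum(wi * wi for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)  # DL moment estimator of between-study variance
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    w_re = [1 / (vi + tau2) for vi in v]  # random-effects weights
    t_re = sum(wi * ti for wi, ti in zip(w_re, t)) / sum(w_re)
    se_re = math.sqrt(1 / sum(w_re))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return {
        "alpha": 1 - math.exp(t_re),
        # bounds swap on back-transformation, as for a single-study interval
        "ci": (1 - math.exp(t_re + z * se_re), 1 - math.exp(t_re - z * se_re)),
        "tau2": tau2,
        "Q": q,
        "I2": i2,
    }


r = pool_alpha_dl(COGNITIVE, k=9)
print(f"alpha = {r['alpha']:.2f}, 95% CI = ({r['ci'][0]:.2f}, {r['ci'][1]:.2f}), "
      f"tau^2 = {r['tau2']:.3f}, Q = {r['Q']:.1f}, I^2 = {r['I2']:.1f}%")
```

Pooling on the transformed scale keeps the back-transformed estimate and its limits inside (0, 1). The affective column can be pooled the same way with k = 11.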

Appendix D

| Item | Yes | No | Page | NA |
|---|---|---|---|---|
| TITLE | | | | |
| 1. Title | X | | 1 | |
| ABSTRACT | | | | |
| 2. Abstract | X | | 1 | |
| INTRODUCTION | | | | |
| 3. Background | X | | 2 | |
| 4. Objectives | X | | 4 | |
| METHOD | | | | |
| 5. Selection criteria | X | | 5 | |
| 6. Search strategies | X | | 5 | |
| 7. Data extraction | X | | 6 | |
| 8. Reported reliability | X | | 6 | |
| 9. Estimating the reliability induction and other sources of bias | X | | 6 | |
| 10. Data extraction of inducing studies | X | | 6 | |
| 11. Reliability of data extraction | X | | 6 | |
| 12. Transformation method | X | | 7 | |
| 13. Statistical model | X | | 7 | |
| 14. Weighting method | X | | 7 | |
| 15. Heterogeneity assessment | X | | 7 | |
| 16. Moderator analyses | X | | 8 | |
| 17. Additional analyses | X | | 8 | |
| 18. Software | X | | 8 | |
| RESULTS | | | | |
| 19. Results of the study selection process | X | | 13 | |
| 20. Mean reliability and heterogeneity | X | | 14 | |
| 21. Moderator analyses | X | | 16 | |
| 22. Sensitivity analyses | X | | 14 | |
| 23. Comparison of inducing and reporting studies | X | | 13 | |
| 24. Data set | X | | 25 | |
| DISCUSSION | | | | |
| 25. Summary of results | X | | 19 | |
| 26. Limitations | X | | 19 | |
| 27. Implications for practice | X | | 20 | |
| 28. Implications for future research | X | | 19 | |
| FUNDING | | | | |
| 29. Funding | X | | 21 | |
| PROTOCOL | | | | |
| 30. Protocol | X | | | |

References

- Cuff, B.M.P.; Brown, S.J.; Taylor, L.; Howat, D.J. Empathy: A Review of the Concept. Emot. Rev. 2016, 8, 144–153.

- Eklund, J.; Meranius, M.S. Toward a consensus on the nature of empathy: A review of reviews. Patient Educ. Couns. 2021, 104, 300–307.

- Jolliffe, D.; Farrington, D.P. Development and validation of the Basic Empathy Scale. J. Adolesc. 2006, 29, 589–611.

- Davis, M.H. A multidimensional approach to individual differences in empathy. Cat. Sel. Doc. Psychol. 1980, 10, 1–17. Available online: https://www.researchgate.net/publication/34891073_A_Multidimensional_Approach_to_Individual_Differences_in_Empathy (accessed on 15 November 2021).

- Hogan, R. Development of an empathy scale. J. Consult. Clin. Psychol. 1969, 33, 307.

- Mehrabian, A.; Epstein, N. A measure of emotional empathy. J. Personal. 1972, 40, 525–543.

- Chismar, D. Empathy and sympathy: The important difference. J. Value Inq. 1988, 22, 257–266.

- Eisenberg, N. Empathy and Sympathy: A Brief Review of the Concepts and Empirical Literature. Anthrozoös 1988, 2, 15–17.

- Pernice, R.E.; Ommundsen, R.; van der Veer, K.; Larsen, K. On the use of student samples for scale construction. Psychol. Rep. 2008, 102, 459–464.

- Hanel, P.H.P.; Vione, K.C. Do Student Samples Provide an Accurate Estimate of the General Public? PLoS ONE 2016, 11, e0168354.

- Cohen, D.; Strayer, J. Empathy in Conduct-Disordered and Comparison youth. Dev. Psychol. 1996, 32, 988.

- Jolliffe, D.; Farrington, D.P. Empathy and offending: A systematic review and meta-analysis. Aggress. Violent Behav. 2004, 9, 441–476.

- Jolliffe, D.; Farrington, D.P. Examining the relationship between low empathy and bullying. Aggress. Behav. 2006, 32, 540–550.

- Jolliffe, D.; Farrington, D.P. Empathy Versus Offending, Aggression and Bullying; Taylor and Francis: Milton, MA, USA, 2021.

- Heynen, E.J.E.; Van Der Helm, G.H.P.; Stams, G.J.J.M.; Korebrits, A.M. Measuring Empathy in a German Youth Prison: A Validation of the German Version of the Basic Empathy Scale (BES) in a Sample of Incarcerated Juvenile Offenders. J. Forensic Psychol. Pract. 2016, 16, 336.

- Pechorro, P.; Ayala-Nunes, L.; Kahn, R.; Nunes, C. The Reactive–Proactive Aggression Questionnaire: Measurement Invariance and Reliability Among a School Sample of Portuguese Youths. Child Psychiatry Hum. Dev. 2018, 49, 523.

- Pechorro, P.; Da Silva, D.R.; Rijo, D.; Gonçalves, R.A.; Andershed, H. Psychometric Properties and Measurement Invariance of the Youth Psychopathic Traits Inventory-Short Version among Portuguese Youth. J. Psychopathol. Behav. Assess. 2017, 39, 486.

- Sánchez-Pérez, N.; Fuentes, L.J.; Jolliffe, D.; González-Salinas, C. Assessing children’s empathy through a Spanish adaptation of the Basic Empathy Scale: Parent’s and child’s report forms. Front. Psychol. 2014, 5, 1438.

- Pechorro, P.; Kahn, R.E.; Abrunhosa Gonçalves, R.; Ray, J.V. Psychometric properties of Basic Empathy Scale among female juvenile delinquents and school youths. Int. J. Law Psychiatry 2017, 55, 29–36.

- Pechorro, P.; Gonçalves, R.A.; Andershed, H.; Delisi, M. Female Psychopathic Traits in Forensic and School Context: Comparing the Antisocial Process Screening Device Self-Report and the Youth Psychopathic Traits Inventory-Short. J. Psychopathol. Behav. Assess. 2017, 39, 642.

- Villadangos, M.; Errasti, J.; Amigo, I.; Jolliffe, D.; García-Cueto, E. Characteristics of Empathy in young people measured by the Spanish validation of the Basic Empathy Scale. Psicothema 2016, 28, 323–329.

- Oliva Delgado, A.; Antolín Suárez, L.; Pertegal Vega, M.A.; Ríos Bermúdez, M.; Parra Jiménez, A.; Hernando Gómez, A.; Reina Flores, M.D.C. Instrumentos Para la Evaluación de la Salud Mental y el Desarrollo Positivo Adolescente y los Activos que lo Promueven. Consejería de Salud (Sevilla, España). 2011. Available online: https://idus.us.es/bitstream/handle/11441/32153/desarrolloPositivo_instrumentos.pdf?sequence=1 (accessed on 15 November 2021).

- Merino-Soto, C.; Grimaldo-Muchotrigo, M. Validación estructural de la escala básica de empatía (Basic Empathy Scale) modificada en adolescentes: Un estudio preliminar. Rev. Colomb. Psicol. 2015, 24, 261–270.

- Merino-Soto, C.; López-Fernández, V.; Grimaldo-Muchotrigo, M. Invarianza de medición y estructural de la escala básica de empatía breve (BES-B) en niños y adolescentes peruanos. Rev. Colomb. Psicol. 2019, 28, 15–32.

- Bensalah, L.; Stefaniak, N.; Carre, A.; Besche-Richard, C. The Basic Empathy Scale adapted to French middle childhood: Structure and development of empathy. Behav. Res. Methods 2015, 48, 1410–1420.

- Carré, A.; Stefaniak, N.; D’Ambrosio, F.; Bensalah, L.; Besche-Richard, C. The Basic Empathy Scale in Adults (BES-A): Factor Structure of a Revised Form. Psychol. Assess. 2013, 25, 679–691.

- Ma, J.; Wang, X.; Qiu, Q.; Zhan, H.; Wu, W. Changes in Empathy in Patients With Chronic Low Back Pain: A Structural–Functional Magnetic Resonance Imaging Study. Front. Hum. Neurosci. 2020, 14, 326.

- Decety, J.; Svetlova, M. Putting together phylogenetic and ontogenetic perspectives on empathy. Dev. Cogn. Neurosci. 2012, 2, 1–24.

- Hemmerdinger, J.M.; Stoddart, S.D.R.; Lilford, R.J. A systematic review of tests of empathy in medicine. BMC Med. Educ. 2007, 7, 24.

- Yu, J.; Kirk, M. Evaluation of empathy measurement tools in nursing: Systematic review. J. Adv. Nurs. 2009, 65, 1790–1806.

- American Educational Research Association; American Psychological Association; National Council on Measurement in Education. Standards for Educational and Psychological Testing; American Educational Research Association: Washington, DC, USA, 2014.

- Hawkins, M.; Elsworth, G.R.; Hoban, E.; Osborne, R.H. Questionnaire validation practice within a theoretical framework: A systematic descriptive literature review of health literacy assessments. BMJ Open 2020, 10, e035974.

- Badenes-Ribera, L.; Rubio-Aparicio, M.; Sánchez-Meca, J. Reliability generalization and meta-analysis. Inf. Psicol. 2020, 119, 17–32.

- Vacha-Haase, T. Reliability Generalization: Exploring Variance in Measurement Error Affecting Score Reliability Across Studies. Educ. Psychol. Meas. 1998, 58, 6–20.

- Sánchez-Meca, J.; Marín-Martínez, F.; López-López, J.A.; Núñez-Núñez, R.M.; Rubio-Aparicio, M.; López-García, J.J.; López-Pina, J.A.; Blázquez-Rincón, D.M.; López-Ibáñez, C.; López-Nicolás, R. Improving the reporting quality of reliability generalization meta-analyses: The REGEMA checklist. Res. Synth. Methods 2021, 12, 516–536.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Rubio-Aparicio, M.; Badenes-Ribera, L.; Sánchez-Meca, J.; Fabris, M.A.; Longobardi, C. A reliability generalization meta-analysis of self-report measures of muscle dysmorphia. Clin. Psychol. Sci. Pract. 2020, 27, e12303.

- The Jamovi Project. Jamovi (Version 1.6). 2019. Available online: https://www.jamovi.org (accessed on 15 November 2021).

- Viechtbauer, W. Conducting Meta-Analyses in R with the metafor Package. J. Stat. Softw. 2010, 36, 1–48.

- Bonett, D.G.; Wright, T.A. Cronbach’s alpha reliability: Interval estimation, hypothesis testing, and sample size planning. J. Organ. Behav. 2015, 36, 3–15.

- Savalei, V. A Comparison of Several Approaches for Controlling Measurement Error in Small Samples. Psychol. Methods 2019, 24, 352–370.

- Taber, K.S. The Use of Cronbach’s Alpha When Developing and Reporting Research Instruments in Science Education. Res. Sci. Educ. 2017, 48, 1273–1296.

- Egger, M.; Smith, G.D.; Schneider, M.; Minder, C. Bias in meta-analysis detected by a simple, graphical test. BMJ 1997, 315, 629–634.

- Begg, C.B.; Mazumdar, M. Operating Characteristics of a Rank Correlation Test for Publication Bias. Biometrics 1994, 50, 1088–1101.

- Hayashino, Y.; Noguchi, Y.; Fukui, T. Systematic Evaluation and Comparison of Statistical Tests for Publication Bias. J. Epidemiol. 2005, 15, 235–243.

- Egger, M. Systematic Reviews in Health Care: Meta-Analysis in Context, 2nd ed.; BMJ Publishing Group: London, UK, 2001.

- Sterne, J.A.; Egger, M.; Smith, G.D. Systematic Reviews in Health Care: Investigating and Dealing with Publication and Other Biases in Meta-Analysis. BMJ 2001, 323, 101–105.

- Bonett, D.G. Varying Coefficient Meta-Analytic Methods for Alpha Reliability. Psychol. Methods 2010, 15, 368–385.

- Veroniki, A.A.; Jackson, D.; Bender, R.; Kuss, O.; Langan, D.; Higgins, J.P.; Knapp, G.; Salanti, G. Methods to calculate uncertainty in the estimated overall effect size from a random-effects meta-analysis. Res. Synth. Methods 2019, 10, 23–43.

- Hartung, J.; Knapp, G. On tests of the overall treatment effect in the meta-analysis with normally distributed responses. Stat. Med. 2001, 20, 1771–1782.

- IntHout, J.; Ioannidis, J.P.; Borm, G.F. The Hartung-Knapp-Sidik-Jonkman method for random effects meta-analysis is straightforward and considerably outperforms the standard DerSimonian-Laird method. BMC Med. Res. Methodol. 2014, 14, 25.

- Higgins, J.P.T.; Thompson, S.G.; Deeks, J.J.; Altman, D.G. Measuring inconsistency in meta-analyses. BMJ 2003, 327, 557–560.

- Huedo-Medina, T.B.; Sánchez-Meca, J.; Marín-Martínez, F.; Botella, J. Assessing Heterogeneity in Meta-Analysis: Q statistic or I² index? Psychol. Methods 2006, 11, 193–206.

- Higgins, J.P.T.; Thompson, S.G. Quantifying heterogeneity in a meta-analysis. Stat. Med. 2002, 21, 1539–1558.

- Harrer, M.; Cuijpers, P.; Furukawa, T.; Ebert, D.D. dmetar: Companion R Package for the Guide ‘Doing Meta-Analysis in R’. R package version 0.0.9000. 2019. Available online: http://dmetar.protectlab.org/ (accessed on 15 November 2021).

- Guasp Coll, M.; Navarro-Mateu, D.; Giménez-Espert, M.D.C.; Prado-Gascó, V.J. Emotional Intelligence, Empathy, Self-Esteem, and Life Satisfaction in Spanish Adolescents: Regression vs. QCA Models. Front. Psychol. 2020, 11, 1629.

- Fatma, A.Y.; Polat, S.; Kashimi, T. Relationship Between the Problem-Solving Skills and Empathy Skills of Operating Room Nurses. J. Nurs. Res. 2020, 28, e75.

- Khosravani, V.; Samimi Ardestani, S.M.; Alvani, A.; Amirinezhad, A. Alexithymia, empathy, negative affect and physical symptoms in patients with asthma. Clin. Psychol. Psychother. 2020, 27, 736–748.

- Parlangeli, O.; Marchigiani, E.; Bracci, M.; Duguid, A.M.; Palmitesta, P.; Marti, P. Offensive acts and helping behavior on the internet: An analysis of the relationships between moral disengagement, empathy and use of social media in a sample of Italian students. Work 2019, 63, 469–477.

- Triffaux, J.; Tisseron, S.; Nasello, J.A. Decline of empathy among medical students: Dehumanization or useful coping process? Encéphale 2019, 45, 3–8.

- Liu, P.; Wang, X. Evaluation of Reliability and Validity of Chinese Version Borderline Personality Features Scale for Children. Med. Sci. Monit. 2019, 25, 3476–3784.

- Gambin, M.; Gambin, T.; Sharp, C. Social cognition, psychopathological symptoms, and family functioning in a sample of inpatient adolescents using variable-centered and person-centered approaches. J. Adolesc. 2015, 45, 31–43.

- Gambin, M.; Sharp, C. The Differential Relations Between Empathy and Internalizing and Externalizing Symptoms in Inpatient Adolescents. Child Psychiatry Hum. Dev. 2016, 47, 966–974.

- Gambin, M.; Sharp, C. The relations between empathy, guilt, shame and depression in inpatient adolescents. J. Affect. Disord. 2018, 241, 381–387.

- Gambin, M.; Sharp, C. Relations between empathy and anxiety dimensions in inpatient adolescents. Anxiety Stress Coping 2018, 31, 447–458.

- Pechorro, P.; Ray, J.V.; Salas-Wright, C.P.; Maroco, J.; Gonçalves, R.A. Adaptation of the Basic Empathy Scale among a Portuguese sample of incarcerated juvenile offenders. Psychol. Crime Law 2015, 21, 699–714.

- Pechorro, P.; Ribeiro da Silva, D.; Andershed, H.; Rijo, D.; Abrunhosa Gonçalves, R. The Youth Psychopathic Traits Inventory: Measurement Invariance and Psychometric Properties among Portuguese Youths. Int. J. Environ. Res. Public Health 2016, 13, 852.

- Pechorro, P.; Hidalgo, V.; Nunes, C.; Jiménez, L. Confirmatory Factor Analysis of the Antisocial Process Screening Device. Int. J. Offender Ther. Comp. Criminol. 2016, 60, 1856–1872.

- Pechorro, P.; Ray, J.V.; Raine, A.; Maroco, J.; Gonçalves, R.A. The Reactive–Proactive Aggression Questionnaire: Validation Among a Portuguese Sample of Incarcerated Juvenile Delinquents. J. Interpers. Violence 2017, 32, 1995–2017.

- Pechorro, P.; Simões, M.R.; Alberto, I.; Ray, J.V. Triarchic Model of Psychopathy: A Brief Measure Among Detained Female Youths. Deviant Behav. 2018, 39, 1497–1506.

- Stavrinides, P.; Georgiou, S.; Theofanous, V. Bullying and empathy: A short-term longitudinal investigation. Educ. Psychol. 2010, 30, 793–802.

- Pérez-Fuentes, M.D.C.; Gázquez Linares, J.J.; Molero Jurado, M.D.M.; Simón Márquez, M.D.M.; Martos Martínez, Á. The mediating role of cognitive and affective empathy in the relationship of mindfulness with engagement in nursing. BMC Public Health 2020, 20, 1–10.

- Ventura-León, J.; Caycho-Rodríguez, T.; Dominguez-Lara, S. Invarianza Factorial Según Sexo de la Basic Empathy Scale Abreviada en Adolescentes Peruanos. Psykhe 2019, 28, 1–11.

- Herrera-López, M.; Gómez-Ortiz, O.; Ortega-Ruiz, R.; Jolliffe, D.; Romera, E.M. Suitability of a three-dimensional model to measure empathy and its relationship with social and normative adjustment in Spanish adolescents: A cross-sectional study. BMJ Open 2017, 7, e015347.

- Salas-Wright, C.P.; Olate, R.; Vaughn, M.G. Assessing Empathy in Salvadoran High-Risk and Gang-Involved Adolescents and Young Adults. Int. J. Offender Ther. Comp. Criminol. 2013, 57, 1393–1416.

- Geng, Y.; Xia, D.; Qin, B. The basic empathy scale: A Chinese validation of a measure of empathy in adolescents. Child Psychiatry Hum. Dev. 2012, 43, 499–510.

- D’Ambrosio, F.; Olivier, M.; Didon, D.; Besche, C. The basic empathy scale: A French validation of a measure of empathy in youth. Pers. Individ. Differ. 2009, 46, 160–165.

- Cavojová, V.; Sirota, M.; Belovicová, Z. Slovak Validation of the Basic Empathy Scale in Pre-Adolescents. Studia Psychol. 2012, 54. Available online: http://www.studiapsychologica.com/uploads/CAVOJOVA_SP_3_vol.54_2012_pp.195-208.pdf (accessed on 18 December 2021).

- McLaren, V.; Vanwoerden, S.; Sharp, C. The Basic Empathy Scale: Factor Structure and Validity in a Sample of Inpatient Adolescents. Psychol. Assess. 2019, 31, 1208–1219.

- You, S.; Lee, J.; Lee, Y. Validation of Basic Empathy Scale: Exploring a Korean Version. Curr. Psychol. 2018, 37, 726–730.

- Anastácio, S.; Vagos, P.; Nobre-Lima, L.; Rijo, D.; Jolliffe, D. The Portuguese version of the Basic Empathy Scale (BES): Dimensionality and measurement invariance in a community adolescent sample. Eur. J. Dev. Psychol. 2016, 13, 614–623.

- Zych, I.; Farrington, D.P.; Nasaescu, E.; Jolliffe, D.; Twardowska-Staszek, E. Psychometric properties of the Basic Empathy Scale in Polish children and adolescents. Curr. Psychol. 2020.

- Albiero, P.; Matricardi, G.; Speltri, D.; Toso, D. The assessment of empathy in adolescence: A contribution to the Italian validation of the “Basic Empathy Scale”. J. Adolesc. 2008, 32, 393–408.

- Errasti, J.; Amigo, I.; Villadangos, M. Emotional Uses of Facebook and Twitter: Its Relation With Empathy, Narcissism, and Self-Esteem in Adolescence. Psychol. Rep. 2017, 120, 997–1018.

- Delgado Rodríguez, M.; Llorca Díaz, J. Estudios longitudinales. Rev. Esp. Salud Pública 2004, 78, 141–148. Available online: https://scielo.isciii.es/scielo.php?script=sci_arttext&pid=S1135-57272004000200002 (accessed on 18 December 2021).

- Pederson, L.L.; Vingilis, E.; Wickens, C.M.; Koval, J.; Mann, R.E. Use of secondary data analyses in research: Pros and Cons. J. Addict. Med. Ther. Sci. 2020, 6, 58–60.

- Teddlie, C.; Tashakkori, A. Major Issues and Controversies in the Use of Mixed Methods in the Social and Behavioral Sciences. In Handbook of Mixed Methods in Social and Behavioral Research; Tashakkori, A., Teddlie, C., Eds.; Sage Publications: Thousand Oaks, CA, USA, 2003; pp. 3–50.

- Tashakkori, A.; Teddlie, C. Foundations of Mixed Methods Research: Integrating Quantitative and Qualitative Approaches in the Social and Behavioral Sciences, 1st ed.; Sage Publications: Thousand Oaks, CA, USA, 2008.

- Teddlie, C.; Tashakkori, A. Common “Core” Characteristics of Mixed Methods Research. Am. Behav. Sci. 2012, 56, 774–788.

- Venkatesh, V.; Brown, S.A.; Bala, H. Bridging the Qualitative-Quantitative Divide: Guidelines for Conducting Mixed Methods Research in Information Systems. MIS Q. 2013, 37, 21–54.

- Hambleton, R.K.; Li, S. Translation and Adaptation Issues and Methods for Educational and Psychological Tests. In Comprehensive Handbook of Multicultural School Psychology; Frisby, C.L., Reynolds, C.R., Eds.; John Wiley & Sons: Hoboken, NJ, USA, 2005; pp. 881–903.

- Flora, D.B. Your Coefficient Alpha Is Probably Wrong, but Which Coefficient Omega Is Right? A Tutorial on Using R to Obtain Better Reliability Estimates. Adv. Methods Pract. Psychol. Sci. 2020, 3, 484–501.

- Hayes, A.F.; Coutts, J.J. Use Omega Rather than Cronbach’s Alpha for Estimating Reliability. But…. Commun. Methods Meas. 2020, 14, 1–24.

- Asparouhov, T.; Muthén, B. Exploratory Structural Equation Modeling. Struct. Equ. Model. A Multidiscip. J. 2009, 16, 397–438.

- Marsh, H.W.; Morin, A.J.S.; Parker, P.D.; Kaur, G. Exploratory Structural Equation Modeling: An Integration of the Best Features of Exploratory and Confirmatory Factor Analysis. Annu. Rev. Clin. Psychol. 2014, 10, 85–110.

- World Health Organization. What Is the Evidence on the Methods, Frameworks and Indicators Used to Evaluate Health Literacy Policies, Programmes and Interventions at the Regional, National and Organizational Levels? World Health Organization, Regional Office for Europe: Geneva, Switzerland, 2019. Available online: https://apps.who.int/iris/bitstream/handle/10665/326901/9789289054324-eng.pdf (accessed on 15 November 2021).

- Taylor, C.F.; Field, D.; Sansone, S.-A.; Aerts, J.; Apweiler, R.; Ashburner, M.; Ball, C.A.; Binz, P.-A.; Bogue, M.; Booth, T.; et al. Promoting coherent minimum reporting guidelines for biological and biomedical investigations: The MIBBI project. Nat. Biotechnol. 2008, 26, 889–896.

- Enhancing the QUAlity and Transparency of Health Research [Internet]. 2021. Available online: https://www.equator-network.org/ (accessed on 4 August 2021).

- Appelbaum, M.; Cooper, H.; Kline, R.B.; Mayo-Wilson, E.; Nezu, A.M.; Rao, S.M. Journal article reporting standards for quantitative research in psychology: The APA Publications and Communications Board task force report. Am. Psychol. 2018, 73, 947.

- Bland, J.M.; Altman, D.G. Statistics notes: Cronbach’s alpha. BMJ 1997, 314, 572.

- Eser, M.T.; Aksu, G. Beck Depression Inventory-II: A Study for Meta Analytical Reliability Generalization. Pegem J. Educ. Instr. 2021, 11, 88–101.

- Lenz, A.S.; Ho, C.; Rocha, L.; Aras, Y. Reliability Generalization of Scores on the Post-Traumatic Growth Inventory. Meas. Eval. Couns. Dev. 2021, 54, 106–119.

- McDonald, K.; Graves, R.; Yin, S.; Weese, T.; Sinnott-Armstrong, W. Valence framing effects on moral judgments: A meta-analysis. Cognition 2021, 212, 104703.

- Molina Arias, M. Aspectos metodológicos del metaanálisis (1). Pediatr. Aten. Primaria 2018, 20, 297–302. Available online: http://scielo.isciii.es/scielo.php?script=sci_arttext&pid=S1139-76322018000300020 (accessed on 18 December 2021).

- López-Ibáñez, C.; Sánchez-Meca, J. The Reproducibility in Reliability Generalization Meta-Analysis. In Proceedings of the Research Synthesis & Big Data, Virtual Conference, Online. 21 May 2021; ZPID (Leibniz Institute for Psychology): Trier, Germany, 2021.

- Wan, T.; Jun, H.; Hui, Z.; Hua, H. Kappa coefficient: A popular measure of rater agreement. Shanghai Arch. Psychiatry 2015, 27, 62.

- Sachs, M.E.; Habibi, A.; Damasio, A.; Kaplan, J.T. Dynamic intersubject neural synchronization reflects affective responses to sad music. NeuroImage 2020, 218, 116512.

- Sachs, M.E.; Damasio, A.; Habibi, A. Unique personality profiles predict when and why sad music is enjoyed. Psychol. Music 2020, 49, 1145–1164.

- Sachs, M.E.; Habibi, A.; Damasio, A.; Kaplan, J.T. Decoding the neural signatures of emotions expressed through sound. Neuroimage 2018, 174, 1–10.

- Sachs, M.E.; Damasio, A.; Habibi, A. The pleasures of sad music: A systematic review. Front. Hum. Neurosci. 2015, 9, 404.

- Man, K.; Melo, G.; Damasio, A.; Kaplan, J. Seeing objects improves our hearing of the sounds they make. Neurosci. Conscious. 2020, 2020, niaa014.

- Picard, R.W. Emotion research by the people, for the people. Emot. Rev. 2010, 2, 250–254.

- Chiang, S.; Picard, R.W.; Chiong, W.; Moss, R.; Worrell, G.A.; Rao, V.R.; Goldenholz, D.M. Guidelines for Conducting Ethical Artificial Intelligence Research in Neurology: A Systematic Approach for Clinicians and Researchers. Neurology 2021, 97, 632–640.

- Picard, R.W.; Boyer, E.W. Smartwatch biomarkers and the path to clinical use. Med 2021, 2, 797–799.

- Pedrelli, P.; Fedor, S.; Ghandeharioun, A.; Howe, E.; Ionescu, D.F.; Bhathena, D.; Fisher, L.B.; Cusin, C.; Nyer, M.; Yeung, A.; et al. Monitoring changes in depression severity using wearable and mobile sensors. Front. Psychiatry 2020, 11, 1413.

- Ortony, A. Are all “basic emotions” emotions? A problem for the (basic) emotions construct. Perspect. Psychol. Sci. 2021.

- Bloore, R.A.; Jose, P.E.; Roseman, I.J. General emotion regulation measure (GERM): Individual differences in motives of trying to experience and trying to avoid experiencing positive and negative emotions. Pers. Individ. Differ. 2020, 166, 110174.

- Schmidt, F.L.; Oh, I.-S.; Hayes, T.L. Fixed- versus random-effects models in meta-analysis: Model properties and an empirical comparison of differences in results. Br. J. Math. Stat. Psychol. 2009, 62, 97–128.

| Reported | Test Content | Response Processes | Internal Structure: Factor Analysis | Internal Structure: Reliability | Internal Structure: Test-Retest | Internal Structure: Invariance | Internal Structure: Equivalence between Versions | Relations to Other Variables | Consequences of Testing |
|---|---|---|---|---|---|---|---|---|---|
| Yes | 12 (57.14%) | 1 (4.76%) | 21 (100%) | 21 (100%) | 4 (19.05%) | 8 (38.1%) | 13 (61.9%) | 18 (85.71%) | 1 (4.76%) |
| No | 8 (38.1%) | 18 (85.71%) | 0 | 0 | 17 (80.95%) | 13 (61.9%) | 8 (38.1%) | 3 (14.29%) | 20 (95.24%) |
| Ambiguous | 1 (4.76%) | 2 (9.52%) | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
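Each percentage in the validity table is a count divided by the 21 studies analyzed (the total implied by the "21 (100%)" cells). The following Python sketch is illustrative and not part of the original analysis: it recomputes the percentages from counts transcribed by hand and checks that every column sums to 21. The names `counts` and `percentages` are hypothetical.

```python
# Counts of studies coded Yes / No / Ambiguous for each source of validity
# evidence, transcribed by hand from the table above (illustrative only).
N_STUDIES = 21

counts = {
    "Test content": (12, 8, 1),
    "Response processes": (1, 18, 2),
    "Factor analysis": (21, 0, 0),
    "Reliability": (21, 0, 0),
    "Test-retest": (4, 17, 0),
    "Invariance": (8, 13, 0),
    "Equivalence between versions": (13, 8, 0),
    "Relations to other variables": (18, 3, 0),
    "Consequences of testing": (1, 20, 0),
}


def percentages(yes_no_ambiguous, n=N_STUDIES):
    """Express each count as a percentage of n, rounded to two decimals."""
    return tuple(round(100 * c / n, 2) for c in yes_no_ambiguous)


for evidence, row in counts.items():
    # Every study must fall into exactly one of the three categories.
    assert sum(row) == N_STUDIES, f"{evidence} does not sum to {N_STUDIES}"
    print(f"{evidence:30s} {percentages(row)}")
```

For example, `percentages((12, 8, 1))` gives `(57.14, 38.1, 4.76)`, the values reported for test content.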

| Number of Items (BES Version) | Induced: Omitted | Induced: Vague | Induced: Precise | Reported: Unusable | Reported: Usable | Reported: NR |
|---|---|---|---|---|---|---|
| 20 (original) | 7 | 2 | 2 | 9 | 32 | 26 |
| 20 (adaptation to “victim”) | - | - | - | - | 1 | - |
| 40 | - | - | - | 1 | - | 1 |
| 19 (item 4 is removed) | - | - | - | - | 1 | - |
| 18 (items 1 and 6 are removed) | - | - | - | - | 1 | - |
| 18 (items 4 and 15 are removed) | - | - | - | - | 1 | 1 |
| 16 (items 2, 3, 4, and 15 are removed) | - | - | - | - | 1 | 1 |
| 16 (items 4, 5, 15, and 19 are removed) | - | - | - | - | 1 | - |
| 12 (items 1, 4, 6, 7, 13, 15, 19, and 20 are removed) | - | - | - | - | 1 | - |
| 12 (Polish version) | - | - | - | - | 1 | 1 |
| 9 (Spanish version) | - | - | - | 1 | 9 | 2 |
| 7 (El Salvador version) | - | - | - | 1 | - | 1 |
| 18 (items 4 and 7 are removed); 17 (adaptation to parents, in third person; items 4, 6, and 7 are removed) | - | - | - | - | 1 | - |
| 20 and 7 (original and reduced version) | - | - | - | - | 1 | 1 |
| TOTAL | 7 | 2 | 2 | 12 | 51 | 34 |
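As a consistency check on the reliability-induction table, the TOTAL row should equal the column sums. The sketch below assumes that each "-" cell means zero and that the counts were transcribed correctly; the row labels are abbreviated and the variable names are illustrative, not taken from the study.

```python
# Cells per BES version, transcribed by hand from the table above.
# Assumption: "-" cells are stored as 0.
# Column order: induced (omitted, vague, precise), reported (unusable, usable, NR).
rows = {
    "20 (original)": (7, 2, 2, 9, 32, 26),
    "20 (victim adaptation)": (0, 0, 0, 0, 1, 0),
    "40": (0, 0, 0, 1, 0, 1),
    "19": (0, 0, 0, 0, 1, 0),
    "18 (items 1, 6 removed)": (0, 0, 0, 0, 1, 0),
    "18 (items 4, 15 removed)": (0, 0, 0, 0, 1, 1),
    "16 (items 2, 3, 4, 15 removed)": (0, 0, 0, 0, 1, 1),
    "16 (items 4, 5, 15, 19 removed)": (0, 0, 0, 0, 1, 0),
    "12 (eight items removed)": (0, 0, 0, 0, 1, 0),
    "12 (Polish)": (0, 0, 0, 0, 1, 1),
    "9 (Spanish)": (0, 0, 0, 1, 9, 2),
    "7 (El Salvador)": (0, 0, 0, 1, 0, 1),
    "18 and 17 (parents)": (0, 0, 0, 0, 1, 0),
    "20 and 7": (0, 0, 0, 0, 1, 1),
}
REPORTED_TOTAL = (7, 2, 2, 12, 51, 34)

# zip(*...) transposes rows into columns so each column can be summed.
column_totals = tuple(sum(col) for col in zip(*rows.values()))
assert column_totals == REPORTED_TOTAL, column_totals
print(column_totals)  # (7, 2, 2, 12, 51, 34)
```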

| Dimension | Position Relative to the Global Mean | Number of Articles (% of the Total) | Mean | Median | Standard Deviation | Minimum | Maximum |
|---|---|---|---|---|---|---|---|
| Cognitive empathy | Below | 13 (43.3) | 0.704 | 0.680 | 0.0454 | 0.660 | 0.780 |
| Cognitive empathy | Shared | 10 (33.3) | 0.797 | 0.795 | 0.0189 | 0.770 | 0.830 |
| Cognitive empathy | Above | 7 (23.3) | 0.920 | 0.910 | 0.0311 | 0.880 | 0.960 |
| Affective empathy | Below | 16 (53.3) | 0.703 | 0.700 | 0.0643 | 0.540 | 0.780 |
| Affective empathy | Shared | 3 (10) | 0.827 | 0.830 | 0.0153 | 0.810 | 0.840 |
| Affective empathy | Above | 11 (36.7) | 0.881 | 0.870 | 0.0330 | 0.850 | 0.960 |

| Variable | F (df) | QE (df) | R² | I² |
|---|---|---|---|---|
| Cognitive empathy | | | | |
| Type | 1.94 (1, 28) | 2742.25 * (28) | 3.07% | 98.77% |
| Generation | 2.13 (2, 27) | 2436.63 * (27) | 7.57% | 98.7% |
| Affective empathy | | | | |
| Type | 2.76 (1, 28) | 1742.55 * (28) | 5.51% | 98.4% |
| Generation | 0.55 (2, 27) | 1452.61 * (27) | 0.0% | 98.51% |
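The degrees of freedom in the moderator table follow from the number of moderator categories. Assuming k = 30 coefficients per dimension (consistent with the 30 articles in the descriptive table and with QE having 28 df for a two-category moderator) and a model with an intercept plus dummy codes, a moderator with g categories yields F(g − 1, k − g) and a QE statistic with k − g df. R² is illustrated here with the common pseudo-R² definition, the proportional reduction in τ² relative to a model without moderators, truncated at zero (as in R's metafor package); that the authors used exactly this definition is an assumption. The truncation is how a moderator can show R² = 0.0% when adding it does not reduce the τ² estimate. The τ² values in the usage lines are hypothetical.

```python
def moderator_dfs(k, levels):
    """Return ((df1, df2), df_QE) for a categorical moderator.

    Assumes a mixed-effects meta-regression with an intercept plus
    (levels - 1) dummy codes fitted to k coefficients, i.e. p = levels
    parameters: the omnibus F test has df (levels - 1, k - p) and the
    residual heterogeneity statistic QE has k - p df.
    """
    p = levels
    return (levels - 1, k - p), k - p


def pseudo_r2(tau2_no_moderator, tau2_with_moderator):
    """Percentage of between-study variance (tau^2) accounted for by the
    moderator, truncated at zero."""
    if tau2_no_moderator <= 0:
        return 0.0
    reduction = (tau2_no_moderator - tau2_with_moderator) / tau2_no_moderator
    return max(0.0, 100.0 * reduction)


print(moderator_dfs(30, 2))  # ((1, 28), 28), as for "Type"
print(moderator_dfs(30, 3))  # ((2, 27), 27), as for "Generation"

# Hypothetical tau^2 values, not taken from the study:
print(round(pseudo_r2(0.020, 0.019), 2))  # 5.0
print(pseudo_r2(0.020, 0.021))  # 0.0: a larger residual tau^2 is truncated
```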

| Statistic | Cognitive Empathy | Affective Empathy |
|---|---|---|
| Sample | | |
| N | 14 | 13 |
| N removed | 16 | 17 |
| Robust estimation | | |
| M (robust) | 0.79 | 0.80 |
| SE | 0.03 | 0.06 |
| 95% CI | (0.77, 0.80) | (0.77, 0.82) |
| Z | 45.22 * | 24.33 * |
| Δ (α – rob α) | −0.2 (−2.4%) | −0.1 (−1.2%) |
| Heterogeneity | | |
| Q (df) | 41.82 * (13) | 148.13 * (12) |
| τ² | 0.009 | 0.05 |
| τ | 0.093 | 0.22 |
| I² | 65.47% | 93.47% |
| H² | 2.9 | 12.32 |
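Two of the heterogeneity statistics in the robust-estimation table are linked by definition: τ is the square root of τ², and, under the definitions used by R's metafor package (I² = τ²/(τ² + s²), with s² the typical within-study variance), H² = 1/(1 − I²). The sketch below applies these two relations to the cognitive empathy column only; it is illustrative, and it assumes that the software used by the authors follows these definitions.

```python
import math


def tau_from_tau2(tau2: float) -> float:
    """Between-study standard deviation from the between-study variance."""
    return math.sqrt(tau2)


def h2_from_i2(i2_percent: float) -> float:
    """H^2 = 1 / (1 - I^2), with I^2 given as a percentage.

    Assumes metafor-style definitions: I^2 = tau^2 / (tau^2 + s^2) and
    H^2 = (tau^2 + s^2) / s^2, where s^2 is the typical within-study variance.
    """
    return 1.0 / (1.0 - i2_percent / 100.0)


# Cognitive empathy column of the robust-estimation table.
print(round(tau_from_tau2(0.009), 3))  # 0.095; 0.093 is reported because tau^2 is rounded
print(round(h2_from_i2(65.47), 1))     # 2.9, matching the reported H^2
```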

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cabedo-Peris, J.; Martí-Vilar, M.; Merino-Soto, C.; Ortiz-Morán, M. Basic Empathy Scale: A Systematic Review and Reliability Generalization Meta-Analysis. Healthcare 2022, 10, 29. https://doi.org/10.3390/healthcare10010029

Cabedo-Peris J, Martí-Vilar M, Merino-Soto C, Ortiz-Morán M. Basic Empathy Scale: A Systematic Review and Reliability Generalization Meta-Analysis. Healthcare. 2022; 10(1):29. https://doi.org/10.3390/healthcare10010029

Chicago/Turabian Style: Cabedo-Peris, Javier, Manuel Martí-Vilar, César Merino-Soto, and Mafalda Ortiz-Morán. 2022. "Basic Empathy Scale: A Systematic Review and Reliability Generalization Meta-Analysis" Healthcare 10, no. 1: 29. https://doi.org/10.3390/healthcare10010029

APA Style: Cabedo-Peris, J., Martí-Vilar, M., Merino-Soto, C., & Ortiz-Morán, M. (2022). Basic Empathy Scale: A Systematic Review and Reliability Generalization Meta-Analysis. Healthcare, 10(1), 29. https://doi.org/10.3390/healthcare10010029