4.2. Lemmas Related to Cluster Costs

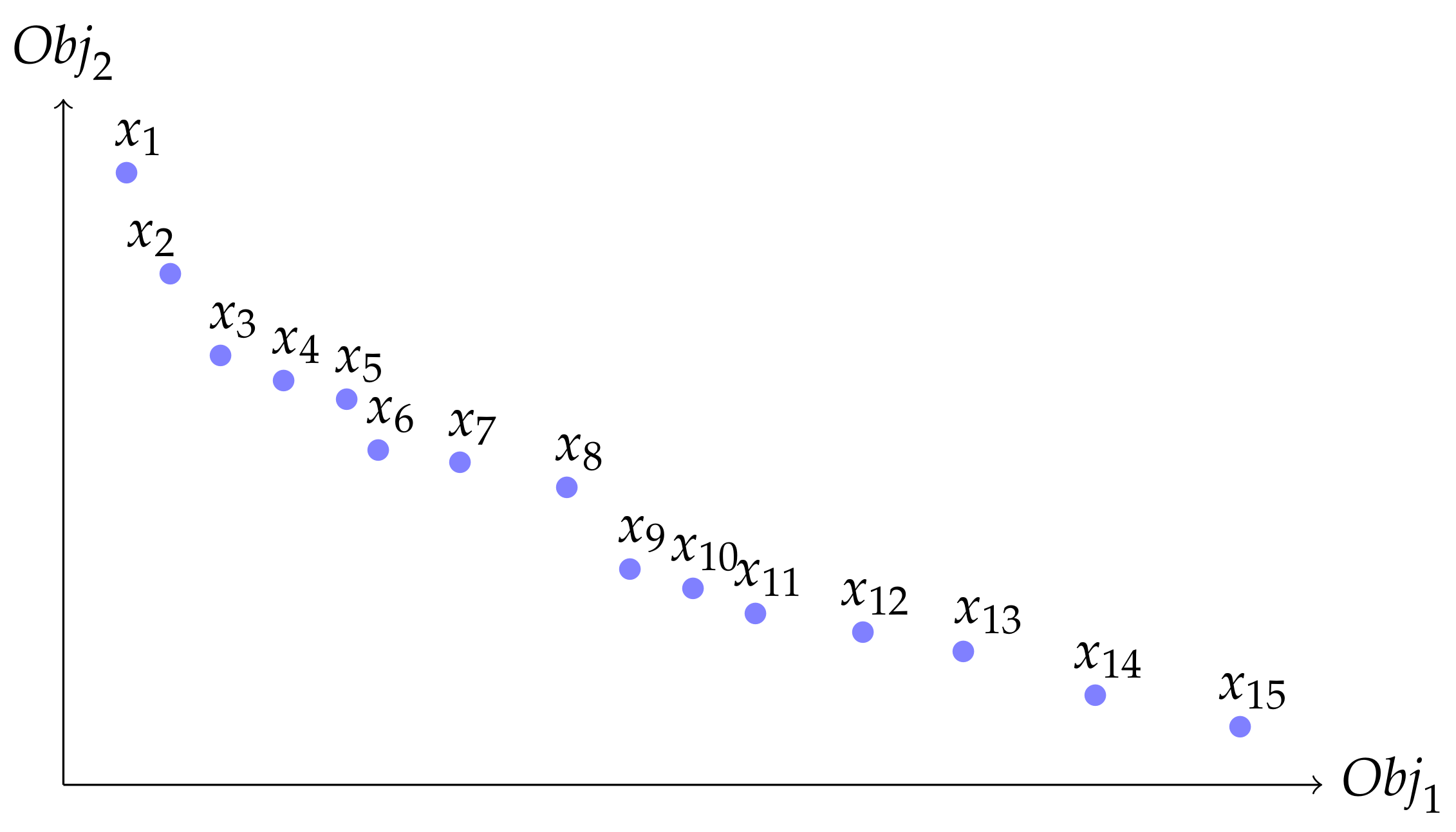

This section provides the relations needed to compute or compare cluster costs. Firstly, one notes that the computation of cluster costs is easy in a 2D PF in the continuous clustering case.

Lemma 3. Let , such that . Let i (respectively, ) be the minimal (respectively, maximal) index of points of P with the indexation of Proposition 1. Then, can be computed with .

To prove Lemma 3, we use Lemmas 4 and 5.

Lemma 4. Let , such that . Let i (respectively, ) be the minimal (respectively, maximal) index of points of P with the indexation of Proposition 1. We denote with the midpoint of . Then, using a Minkowski or Chebyshev distance d, we have for all : .

Proof of Lemma 4: We denote with , with the equality being trivial as points are on a line and d is a distance. Let . We calculate the distances using a new system of coordinates, translating the original coordinates such that O, is a new origin (which is compatible with the definition of Pareto optimality). and have coordinates and in the new coordinate system, with and if a Minkowski distance is used, otherwise it is for the Chebyshev distance. We use to denote the coordinates of x. implies that and , i.e., and , which implies , using Minkowski or Chebyshev distances. □

Lemma 5. Let such that . Let i (respectively, ) be the minimal (respectively, maximal) index of points of P with the indexation of Proposition 1. We denote with the midpoint of . Then, using a Minkowski or Chebyshev distance d, we have for all : .

Proof of Lemma 5: As previously noted, let . Let . We have to prove that or . If we suppose that , this implies that . Then, having implies with Lemma 2. □

Proof of Lemma 3: We first note that , using the particular point . Using Lemma 4, , and thus with . Reciprocally, for all , using Lemma 5, and thus . This implies that . □
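As a minimal illustration of Lemmas 3 to 5, the sketch below assumes that the continuous cluster cost is the radius of the smallest ball enclosing the cluster, that d is the Euclidean distance, and that points are stored 0-indexed in the order of Proposition 1 (taken here to mean first coordinate increasing, second coordinate decreasing). Under these assumptions, the cost reduces to half the distance between the two extreme points, with their midpoint as center. The function names are illustrative and are not taken from the paper.

```python
import math

def midpoint(p, q):
    """Midpoint of the segment [p, q] in the plane."""
    return ((p[0] + q[0]) / 2.0, (p[1] + q[1]) / 2.0)

def continuous_cluster_cost(points, i, j):
    """Cost of the cluster points[i..j] (inclusive, 0-indexed).

    Assumption: the cost is the radius of the smallest enclosing ball,
    taken equal to d(x_i, x_j) / 2 for points sorted along the front.
    """
    return 0.5 * math.dist(points[i], points[j])

# Hypothetical 2D Pareto front: x increases while y decreases.
front = [(0, 10), (2, 7), (3, 5), (6, 4), (9, 1)]
center = midpoint(front[0], front[4])
radius = continuous_cluster_cost(front, 0, 4)
# Every point of the cluster lies within `radius` of `center`.
assert all(math.dist(p, center) <= radius + 1e-12 for p in front)
```

The cost only depends on the two extreme indices, which is why a single distance evaluation suffices once the points are sorted.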

Lemma 6. Let such that . Let i (respectively, ) be the minimal (respectively, maximal) index of points of P.

Proof. Let . We denote , such that . Applying Lemma 2 to , for all , we have . Then

Lastly, we notice that extreme points are not optimal centers. Indeed, with Proposition 2, i.e., i, is not optimal in the last minimization, dominated by . Similarly, is dominated by . □

Lemma 7. Let . Let . We have .

Proof. Using the order of Proposition 1, let i (respectively, ) be the minimal index of points of P (respectively, ) and let j (respectively, ) be the maximal index of points of P (respectively, ). is trivial using Lemmas 2 and 3. To prove , we use , and Lemmas 2 and 6. □

Lemma 8. Let . Let , such that . Let i (respectively, ) be the minimal (respectively, maximal) index of points of P. For all , such that , we have

Proof. Let such that . With Lemma 7, we have . is trivial using Lemma 3, so that we have to prove .

□

4.3. Optimality of Non-Nested Clustering

In this section, we prove that the non-nested clustering property, the extension of interval clustering from 1D to a 2D PF, allows the computation of optimal solutions, which will be a key element for a DP algorithm. For (partial) p-center problems, i.e., K-M-max--BC2DPF, optimal solutions may exist without fulfilling the non-nested property, whereas for K-M-+--BC2DPF problems, the non-nested property is a necessary condition for obtaining an optimal solution.

Lemma 9. Let ; let . There is an optimal solution of 1-M-⊕--BC2DPF on the shape with .

Proof. Let represent an optimal solution of 1-M-⊕--BC2DPF, let be the optimal cost, and with and . Let i (respectively, ) be the minimal (respectively, maximal) index of using the order of Proposition 1. , so Lemma 8 applies and . , thus defines an optimal solution of 1-M-⊕--BC2DPF. □

Proposition 2. Let be a 2D PF, re-indexed with Proposition 1. There are optimal solutions of K-M-⊕--BC2DPF using only clusters on the shape .

Proof. We prove the result by induction on . For , Lemma 9 gives the initialization.

Let us suppose that and the Induction Hypothesis (IH) that Proposition 2 is true for K-M-⊕--BC2DPF. Let be an optimal solution of K-M-⊕--BC2DPF; let be the optimal cost. Let be the subset of the non-selected points, , and be the K subsets defining the costs, so that is a partition of E and . Let be the maximal index, such that , which is, necessarily, . We reindex the clusters , such that . Let i be the minimal index such that .

We consider the subsets , and for all . It is clear that is a partition of , and is a partition of E. For all , , so that (Lemma 7).

is a partition of E, and . is an optimal solution of K--⊕--BC2DPF. is an optimal solution of --⊕--BC2DPF, applied to points . Letting be the optimal cost of --⊕--BC2DPF, we have . Applying IH for of --⊕--BC2DPF to points , we have an optimal solution of --⊕--BC2DPF among on the shape . , and thus . is an optimal solution of K-M-⊕--BC2DPF in E using only clusters . Hence, the result is proven by induction. □

Proposition 3. There is an optimal solution of K-M-⊕--BC2DPF, removing exactly M points in the partial clustering.

Proof. Starting with an optimal solution of K-M-+--BC2DPF, let be the optimal cost, and let be the subset of the non-selected points, , and , the K subsets defining the costs, so that is a partition of E. Removing random points in , we have clusters such that, for all , , and thus (Lemma 7). This implies , and thus the clusters and outliers define and provide the optimal solution of K-M-⊕--BC2DPF with exactly M outliers. □

Reciprocally, one may investigate whether the conditions of optimality in Propositions 2 and 3 are necessary. They are not necessary in general. For instance, with , and the discrete function , i.e., , the selection of each pair of points defines an optimal solution, with the same cost as the selection of the three points, which does not fulfill the property of Proposition 3. Having an optimal solution with the two extreme points also does not fulfill the property of Proposition 2. The optimality conditions are necessary in the case of sum-clustering, using the continuous measure of the enclosing disk.

Proposition 4. Let an optimal solution of K-M-+--BC2DPF be defined with as the subset of outliers, with , and as the K subsets defining the optimal cost. We therefore have

, in other words, exactly M points are not selected in π.

For each , defining and , we have .

Proof. Starting with an optimal solution of K-M-+--BC2DPF, let be the optimal cost, and let be the subset of the non-selected points, , and be the K subsets defining the costs, so that is a partition of E. We prove and ad absurdum.

If , one may remove one extreme point of the cluster , defining . With Lemmas 2 and 3, we have , and . This is in contradiction with the optimality of , , defining a strictly better solution for K-M-+--BC2DPF. is thus proven ad absurdum.

If is not fulfilled by a cluster , there is with . If , we have a better solution than the optimal one with and . If with , we have nested clusters and . We suppose that (otherwise, the reasoning is symmetrical). We define a better solution than the optimal one with and . is thus proven ad absurdum. □

4.4. Computation of Cluster Costs

Using Proposition 2, only cluster costs are computed. This section allows the efficient computation of such cluster costs. Once points are sorted using Proposition 1, cluster costs can be computed in using Lemma 3. This makes a time complexity in to compute all the cluster costs for .

Equation (19) ensures that cluster costs can be computed in for all . Actually, Algorithm 1 and Proposition 5 allow for computations in once points are sorted following Proposition 1, with a dichotomic and logarithmic search.

Lemma 10. Letting with . decreases before reaching a minimum , , and then increases for .

Proof: We define with and .

Let . Proposition 2, applied to i and any with and , ensures that g is decreasing. Similarly, Proposition 2, applied to and any , ensures that h is increasing.

Let . and , so that . A is a non-empty and bounded subset of , so that A has a maximum. We note that . and , so that and .

Let . and , using the monotonicity of and . and as . Hence, . This proves that is decreasing in .

and have to be coherent with the fact that .

Let . , so using the monotonicity of and . This proves that is increasing in .

Lastly, the minimum of f can be reached in l or in , depending on the sign of . If , there are two minima . Otherwise, there is a unique minimum , , which decreases before increasing.

| Algorithm 1: Computation of |

| input: indexes , a distance d |

| output: the cost |

| |

| define , , , , |

| while |

| |

| if then and |

| else and |

| end while |

| return |
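The dichotomic search of Algorithm 1 can be sketched as follows. This is a reconstruction under stated assumptions, not the authors' exact pseudocode: the discrete cost of the cluster of points indexed from i to i' is taken to be the minimum over centers l of max(d(x_i, x_l), d(x_l, x_i')), the first term is assumed non-decreasing in l and the second non-increasing (the situation described in Lemma 10), and d defaults to the Euclidean distance. Indices are 0-based and the names `lo`, `hi`, `v_lo`, `v_hi` are illustrative.

```python
import math

def discrete_cluster_cost(points, i, j, dist=math.dist):
    """Discrete 1-center cost of points[i..j] (inclusive, 0-indexed).

    Assumes points are sorted along the 2D Pareto front, so that
    g(l) = d(x_i, x_l) grows with l and h(l) = d(x_l, x_j) shrinks.
    """
    lo, hi = i, j
    # f(i) = f(j) = d(x_i, x_j): both extreme points give this value.
    v_lo = v_hi = dist(points[i], points[j])
    while hi - lo >= 2:
        mid = (lo + hi) // 2
        g = dist(points[i], points[mid])
        h = dist(points[mid], points[j])
        if g < h:
            # f(mid) = h: still in the decreasing phase, search right.
            lo, v_lo = mid, h
        else:
            # f(mid) = g: in the increasing phase, search left.
            hi, v_hi = mid, g
    # The minimum is reached at one of the two remaining indices.
    return min(v_lo, v_hi)

# Hypothetical front; the best discrete center of the whole front is (3, 5).
front = [(0, 10), (2, 7), (3, 5), (6, 4), (9, 1)]
cost = discrete_cluster_cost(front, 0, 4)
```

Each iteration evaluates two distances and halves the search interval, which matches the logarithmic number of iterations stated in Proposition 5.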

Proposition 5. Let be N points of , such that for all , . The computing cost for any cluster has a complexity in time, using additional memory space.

Proof. Let . Let us prove the correctness and complexity of Algorithm 1. Algorithm 1 is a dichotomic and logarithmic search; it iterates times, with each iteration running in time. The correctness and complexity of Algorithm 1 are a consequence of Lemma 10 and the following loop invariant: there exists a minimum of , with , also having and . By construction in Algorithm 1, we have , and thus . This implies that , and thus , using Lemma 10. Similarly, we always obtain , and thus , , so that with Lemma 10. At the convergence of the dichotomic search, and is or ; therefore, the optimal value is . □

Remark 1. Algorithm 1 improves the previously proposed binary search algorithm [10]. Although it has the same logarithmic complexity, it requires half as many calls to the distance function. Indeed, in the previous version, the dichotomic algorithm computes and at each iteration to determine whether is in the increasing or decreasing phase of . In Algorithm 1, the computations performed at each iteration are equivalent to the evaluation of only , computing and .

Proposition 5 can compute for all in . Now, we prove that the costs of all can be computed in time instead of using -independent computations. Two schemes are proposed, computing the lines of the cost matrix in time: Algorithm 2 computes for all for a given , and Algorithm 3 computes for all for a given .

Lemma 11. Let , with . Let such that .

(i) If , then there is , such that , with .

(ii) If , then there is , such that , with .

Proof. We prove ; we suppose that and we prove that, for all , so that either c is an argmin of the minimization, or there is a minimum superior to c. is similarly proven. Let . , which implies and, with Lemma 10, is decreasing in , i.e., for all . We thus have , and, with Lemma 2, . Thus, . With Lemma 2, . implies that , and then . Thus , and . □

Proposition 6. Let be N points of , such that for all , . Algorithm 2 computes for all for a given in time using memory space.

Proof. The validity of Algorithm 2 is based on Lemmas 10 and 11: once a discrete center c is known for a , we can find a center of with , and Lemma 10 gives the stopping criterion to prove a discrete center. Let us prove the time complexity; the space complexity is obviously within memory space. In Algorithm 2, each computation is in time; we have to count the number of calls to this function. In each loop in , one computation is used for the initialization; the total number of calls for this initialization is . Then, denoting, with , the center found for , we note that the number of loops is . Lastly, there are fewer than calls to ; Algorithm 2 runs in time. □

| Algorithm 2: Computing for all for a given |

| Input: indexed with Proposition 1, , , N points of , |

| Output: for all , |

| |

| define vector v with for all |

| define , |

| for to N |

| |

| while |

| |

| |

| end while |

| |

| end for |

| return vector v |
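The idea behind Algorithm 2 can be sketched as a single forward sweep of the center index. This is a reconstruction under assumptions rather than the authors' pseudocode: the discrete cost is taken to be the minimum over centers l of max(d(x_i, x_l), d(x_l, x_i')), the cost as a function of l decreases and then increases (Lemma 10), and a center for the next cluster can be found at or after the current one (Lemma 11). Indices are 0-based, d defaults to the Euclidean distance, and the function name is illustrative.

```python
import math

def costs_from_start(points, i, dist=math.dist):
    """Costs of the clusters points[i..j] for every j >= i.

    Returns a list `costs` where costs[k] is the discrete 1-center cost
    of points[i..i+k]. Assumes points are sorted along the Pareto front.
    """
    n = len(points)
    costs = [0.0]  # the cluster reduced to points[i] has cost 0
    c = i          # current discrete center, never moves backwards
    for j in range(i + 1, n):
        def f(l):
            # Cost of choosing points[l] as center of points[i..j].
            return max(dist(points[i], points[l]), dist(points[l], points[j]))
        best = f(c)
        # Advance the center while the cost does not increase.
        while c < j:
            candidate = f(c + 1)
            if candidate > best:
                break  # increasing phase reached: c is a center
            c, best = c + 1, candidate
        costs.append(best)
    return costs

# Hypothetical front, costs of all clusters starting at the first point.
front = [(0, 10), (2, 7), (3, 5), (6, 4), (9, 1)]
row = costs_from_start(front, 0)
```

Because the center index only moves forward over the whole sweep, the total number of distance evaluations grows linearly with the number of points, which is the behavior claimed in Proposition 6.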

Proposition 7. Let be N points of , such that for all , . Algorithm 3 computes for all for a given in time, using memory space.

| Algorithm 3: Computing for all for a given |

| Input: indexed with Proposition 1, , , N points of , |

| Output: for all , |

| |

| define vector v with for all |

| define , |

| for to 1 with increment |

| |

| while |

| |

| |

| end while |

| |

| end for |

| return vector v |

Proof. The proof is analogous to that of Proposition 6 for Algorithm 2. □