4.1. Computational Aspects

The DisjointTuckerALS algorithm requires more computational time (runtime) than the TuckerALS algorithm. This is because the time required for the calculation grows with the number of loading matrices that are computed as disjoint, which consumes more memory and processor resources.

The computational experiments were carried out on a computer with the following hardware characteristics: (i) OS: Windows 10, 64-bit; (ii) RAM: 8 GB; and (iii) processor: Intel Core i7-4510U at 2.00–2.60 GHz. Regarding the software, the following tools and programming languages were used: (i) integrated development environment (IDE): Microsoft Visual Studio Express; (ii) programming language: C#.NET; and (iii) statistical software: R.

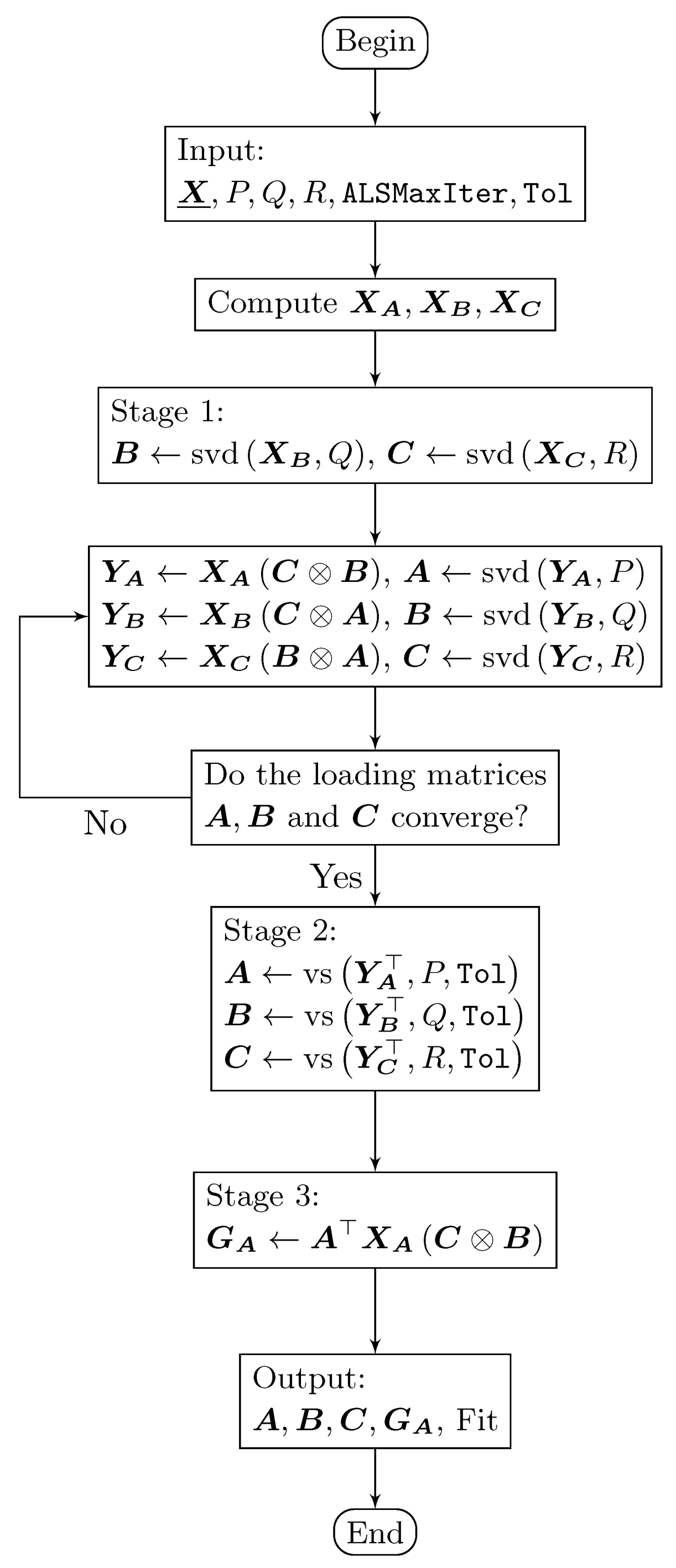

The DisjointPCA, TuckerALS, and DisjointTuckerALS algorithms presented in this paper, which were used for all of the numerical applications, were implemented in C#.NET, mainly for the graphical user interface (GUI) for data entry, control of calculations, and delivery of results. Data entry and presentation of results were carried out with Excel sheets. Communication between C#.NET and Excel was established through a COM+ connector. Some parts of the code were implemented in the R programming language, namely random number generation and the SVD. Communication between C#.NET and R was established with the R.NET connector, which can be installed in Visual Studio as a NuGet package, whereas the SVD was performed with the R package irlba. This package quickly computes a partial SVD, that is, only the first (largest) singular values and their singular vectors; the number of singular values to be computed must be specified. Because irlba neither uses nor computes the remaining singular values, it accelerates the calculations for large matrices, such as the matrices formed by the frontal, horizontal, and vertical slices of a three-way table.
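The partial SVD described above can be illustrated with a short sketch. It is written in Python with NumPy rather than in the authors' C#/R setup, and it is not irlba: it computes a full thin SVD with `numpy.linalg.svd` and keeps only the first `k` singular triplets. This returns the same leading singular values and vectors that a partial solver would, but without its speed advantage. The helper `unfold_frontal`, the table sizes, and `k = 3` are hypothetical choices for illustration.

```python
import numpy as np

def unfold_frontal(X):
    """Place the K frontal slices X[:, :, k] (each I x J) side by side: I x JK."""
    I, J, K = X.shape
    return np.concatenate([X[:, :, k] for k in range(K)], axis=1)

def partial_svd(M, k):
    """Return the first k singular triplets of M (largest singular values first).

    A full thin SVD is computed and then truncated; irlba instead computes only
    these k triplets iteratively, which is what makes it fast on large matrices."""
    U, s, Vt = np.linalg.svd(M, full_matrices=False)
    return U[:, :k], s[:k], Vt[:k, :]

rng = np.random.default_rng(1)
X = rng.standard_normal((20, 6, 4))      # hypothetical I x J x K table
M = unfold_frontal(X)                    # 20 x 24 matrix of frontal slices
U, s, Vt = partial_svd(M, k=3)

# Eckart-Young: the squared error of the rank-k approximation equals the sum
# of the squared singular values that were discarded.
full_s = np.linalg.svd(M, compute_uv=False)
err = np.linalg.norm(M - U @ np.diag(s) @ Vt) ** 2
print(U.shape, s.shape, Vt.shape)                 # (20, 3) (3,) (3, 24)
print(np.isclose(err, np.sum(full_s[3:] ** 2)))   # True
```

The truncation is only a stand-in for exposition. For genuinely large matrices, an iterative solver that never forms the full decomposition is what yields the speed-up the text attributes to irlba.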

4.2. Generator of Disjoint Structure Tensors

We design and implement a simulation algorithm that randomly builds a three-way table with a disjoint latent structure in its three modes. The DisjointTuckerALS algorithm should then be able to detect this structure, since it uses a Tucker model with disjoint orthogonal components.

Let $\underline{\mathbf{X}}$ be a three-way table with $I$ individuals, $J$ variables, and $K$ times or locations. Assume that: (i) the first mode, which is related to the loading matrix $\mathbf{A}$, has $P$ latent individuals ($P \le I$); (ii) the second mode, which is related to the loading matrix $\mathbf{B}$, has $Q$ latent variables ($Q \le J$); and (iii) the third mode, which is related to the loading matrix $\mathbf{C}$, has $R$ latent locations ($R \le K$). Suppose that $x_1, \ldots, x_I$ are the $I$ original individuals and that $y_1, \ldots, y_P$ are the $P$ latent individuals. We consider the linear combination given by

$$y_p = a_{1p} x_1 + a_{2p} x_2 + \cdots + a_{Ip} x_I, \qquad p = 1, \ldots, P. \tag{11}$$

If the $m$ consecutive original individuals $x_{s+1}, \ldots, x_{s+m}$ are required to be represented by the latent individual $y_p$, then the scalars $a_{(s+1)p}, \ldots, a_{(s+m)p}$ are defined as independent random variables with a discrete uniform distribution whose support is the set of integers from 70 to 100 (inclusive). The other scalars in the same linear combination are defined as independent random variables with a discrete uniform distribution whose support is the set of integers from 1 to 30 (inclusive). This procedure is performed for each $p$ from 1 to $P$, so that each original individual has a strong presence in a unique latent individual. The Gram–Schmidt orthonormalization process is then applied to the $I \times P$ matrix that contains the scalars of all the linear combinations. Hence, a disjoint dimensional reduction of the loading matrix $\mathbf{A}$ is achieved.
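The construction of $\mathbf{A}$ from (11) can be sketched as follows. The sketch assumes NumPy and hypothetical group sizes 5, 7, and 8 (so $I = 20$ and $P = 3$). It draws the block weights from $\{70, \ldots, 100\}$ and the remaining weights from $\{1, \ldots, 30\}$, then orthonormalizes the columns with the modified variant of Gram–Schmidt, which is numerically more stable than the classical one and has the same result in exact arithmetic. The function names are illustrative and are not taken from the authors' code.

```python
import numpy as np

def disjoint_weights(block_sizes, rng):
    """Weight matrix (original elements x latent components) following Eq. (11):
    the m consecutive members of block p get weights from {70, ..., 100} in
    column p; every other entry gets a weight from {1, ..., 30}."""
    n, p = sum(block_sizes), len(block_sizes)
    W = rng.integers(1, 31, size=(n, p)).astype(float)   # upper bound is exclusive
    start = 0
    for col, m in enumerate(block_sizes):
        W[start:start + m, col] = rng.integers(70, 101, size=m)
        start += m
    return W

def gram_schmidt(W):
    """Modified Gram-Schmidt on the columns of W; returns orthonormal columns."""
    Q = np.zeros_like(W)
    for k in range(W.shape[1]):
        v = W[:, k].copy()
        for j in range(k):
            v -= (Q[:, j] @ v) * Q[:, j]   # remove the component along column j
        Q[:, k] = v / np.linalg.norm(v)
    return Q

rng = np.random.default_rng(0)
sizes = [5, 7, 8]                          # hypothetical I_1, I_2, I_3
A = gram_schmidt(disjoint_weights(sizes, rng))
print(np.allclose(A.T @ A, np.eye(3)))     # True: orthonormal columns
# Each original individual still loads most heavily on its own latent individual.
print(np.argmax(np.abs(A), axis=1))
```

Orthonormalization leaves the off-block entries small but nonzero. The large block weights keep each row's dominant loading in its own column, which is the simple structure the generator is meant to plant.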

Similarly, for the loading matrix $\mathbf{B}$, consider that $u_1, \ldots, u_J$ are the $J$ original variables and that $v_1, \ldots, v_Q$ are the $Q$ latent variables. We consider the linear combination stated as

$$v_q = b_{1q} u_1 + b_{2q} u_2 + \cdots + b_{Jq} u_J, \qquad q = 1, \ldots, Q. \tag{12}$$

If the $m$ consecutive original variables are required to be represented by the latent variable $v_q$, then the scalars $b_{jq}$ are defined as independent random variables with a discrete uniform distribution, exactly as for the matrix $\mathbf{A}$. As before, this procedure is performed for each $q$ from 1 to $Q$, and the Gram–Schmidt orthonormalization process is applied to the $J \times Q$ matrix of scalars, as in the case of $\mathbf{A}$. A disjoint dimensional reduction of $\mathbf{B}$ is thus achieved.

Analogously, for $\mathbf{C}$, let $z_1, \ldots, z_K$ be the $K$ original times or locations and let $w_1, \ldots, w_R$ be the $R$ latent times or locations. We consider the linear combination expressed by

$$w_r = c_{1r} z_1 + c_{2r} z_2 + \cdots + c_{Kr} z_K, \qquad r = 1, \ldots, R. \tag{13}$$

If the $m$ consecutive original locations are required to be represented by the latent location $w_r$, then the scalars $c_{kr}$ are defined in the same way as the scalars of $\mathbf{A}$ and $\mathbf{B}$, and the procedure is applied for each $r$ from 1 to $R$. Once again, the Gram–Schmidt orthonormalization process is applied to the $K \times R$ matrix that contains the scalars of all the linear combinations. Thus, a disjoint dimensional reduction of the loading matrix $\mathbf{C}$ is achieved.

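The core $\underline{\mathbf{G}}$ must be of order $P \times Q \times R$. Without loss of generality, suppose that the entries of this three-way table are independent random variables with a continuous uniform distribution on a fixed interval. To complete the construction of $\underline{\mathbf{X}}$, the following matrix equation is defined:

$$\mathbf{X}_A = \mathbf{A}\,\mathbf{G}_A\,(\mathbf{C} \otimes \mathbf{B})^{\top}. \tag{14}$$

The matrix $\mathbf{X}_A$ of order $I \times JK$ stated in (14) contains the frontal slices of $\underline{\mathbf{X}}$, whereas the matrix $\mathbf{G}_A$ of order $P \times QR$ contains the frontal slices of $\underline{\mathbf{G}}$.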
Equations (11)–(13) are used to build the random loading matrices. The algorithm that builds the random three-way table $\underline{\mathbf{X}}$ of order $I \times J \times K$ must implement an application $f$ expressed as

$$f(I, J, K, P, Q, R) = \underline{\mathbf{X}} \in \mathbb{T}, \tag{15}$$

which is subject to $P \le I$, $Q \le J$, and $R \le K$, where $\mathbb{T}$ is the set of all three-way tables with entries in the real numbers. Additionally, it must satisfy

$$\sum_{p=1}^{P} I_p = I, \qquad \sum_{q=1}^{Q} J_q = J, \qquad \sum_{r=1}^{R} K_r = K, \tag{16}$$

where $I_p$ is the number of original individuals in the $p$-th latent individual, $J_q$ is the number of original variables in the $q$-th latent variable, and $K_r$ is the number of original locations in the $r$-th latent location. The application $f$ defined in (15) must randomly provide a three-way table $\underline{\mathbf{X}}$ of order $I \times J \times K$ with a simple structure, which the DisjointTuckerALS algorithm is expected to detect.
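The construction in (14) can be sketched with NumPy. This is an illustration, not the authors' C#/R generator. The sketch builds the three loading matrices from hypothetical group sizes and draws the core from a continuous uniform distribution on $[0, 1]$; that interval is an assumption. It then forms $\mathbf{X}_A$ with a Kronecker product and folds it back into an $I \times J \times K$ array. For brevity, `np.linalg.qr` stands in for Gram–Schmidt: it spans the same columns, possibly with flipped signs. The final check compares the result with the elementwise Tucker model $x_{ijk} = \sum_{p,q,r} a_{ip} b_{jq} c_{kr} g_{pqr}$.

```python
import numpy as np

def orthonormal_disjoint(block_sizes, rng):
    """Loading matrix as in Eqs. (11)-(13), orthonormalized with QR
    (same column space as Gram-Schmidt; columns may differ in sign)."""
    n, p = sum(block_sizes), len(block_sizes)
    W = rng.integers(1, 31, size=(n, p)).astype(float)
    start = 0
    for col, m in enumerate(block_sizes):
        W[start:start + m, col] = rng.integers(70, 101, size=m)
        start += m
    Q, _ = np.linalg.qr(W)
    return Q

def build_table(I_sizes, J_sizes, K_sizes, rng):
    A = orthonormal_disjoint(I_sizes, rng)     # I x P
    B = orthonormal_disjoint(J_sizes, rng)     # J x Q
    C = orthonormal_disjoint(K_sizes, rng)     # K x R
    P, Q, R = A.shape[1], B.shape[1], C.shape[1]
    G = rng.uniform(0.0, 1.0, size=(P, Q, R))  # core; the interval [0, 1] is assumed
    # G_A = [G_1 | ... | G_R]: frontal slices of the core side by side (P x QR)
    G_A = G.transpose(0, 2, 1).reshape(P, R * Q)
    X_A = A @ G_A @ np.kron(C, B).T            # Eq. (14), I x JK
    I, J, K = A.shape[0], B.shape[0], C.shape[0]
    # X_A = [X_1 | ... | X_K]: column k*J + j holds x_ijk
    X = X_A.reshape(I, K, J).transpose(0, 2, 1)
    return X, A, B, C, G

rng = np.random.default_rng(0)
# Hypothetical group sizes: I = 20 (P = 3), J = 7 (Q = 2), K = 8 (R = 5).
X, A, B, C, G = build_table([5, 7, 8], [3, 4], [2, 2, 2, 1, 1], rng)
print(X.shape)                                                        # (20, 7, 8)
print(np.allclose(X, np.einsum("ip,jq,kr,pqr->ijk", A, B, C, G)))    # True
```

Working with $\mathbf{X}_A$ and $\mathbf{G}_A$ keeps the computation a plain matrix product. The reshape and transpose only encode the convention that frontal slices are placed side by side.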

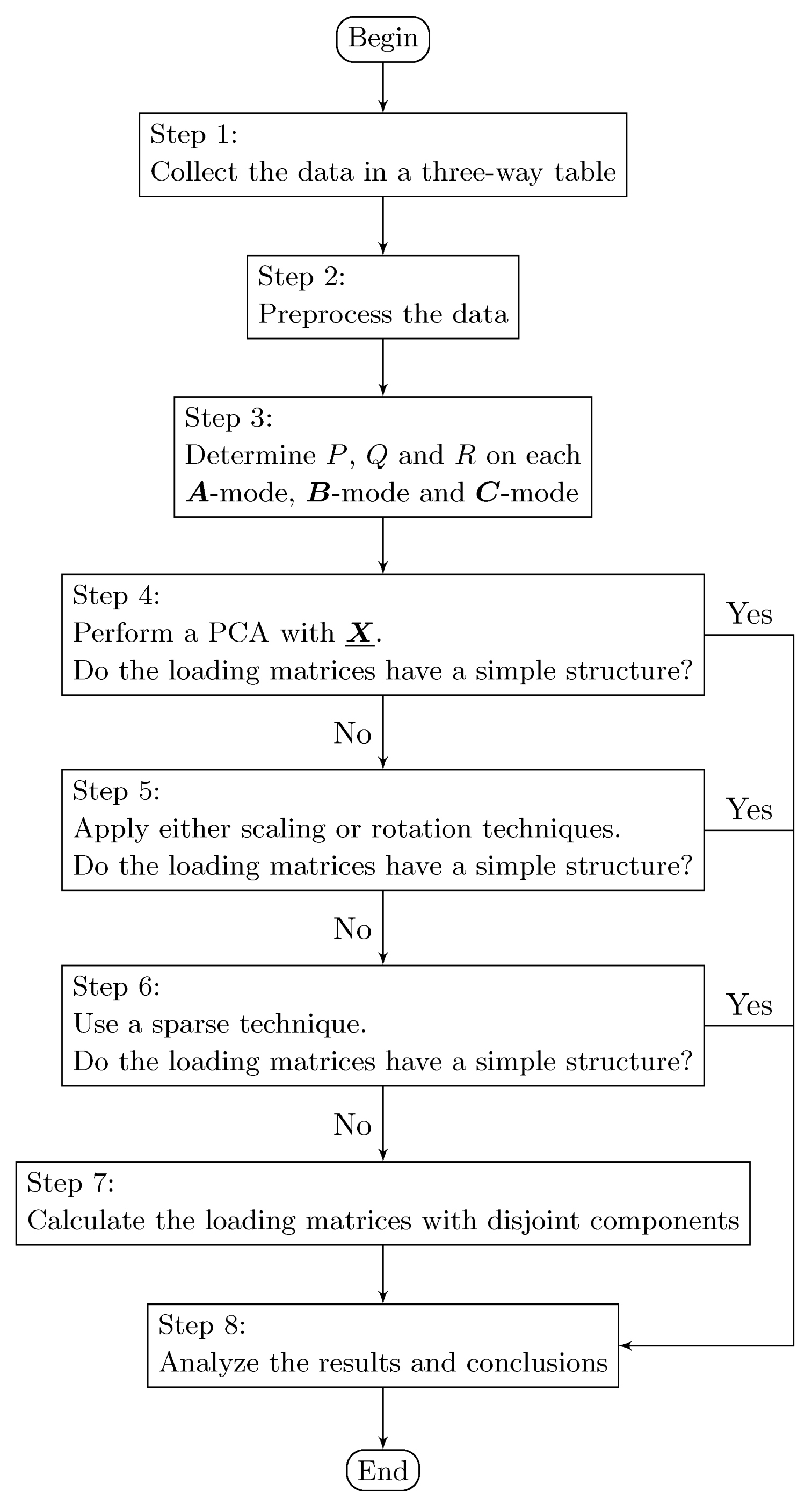

4.3. Applying the DisjointTuckerALS Algorithm to Simulated Data

Next, we show how the DisjointTuckerALS algorithm works by using the application $f$ to generate a three-way table $\underline{\mathbf{X}}$ of order … . According to the definition of $f$ given in (15), the values of $P$, $Q$, and $R$ are … and 5, respectively. Furthermore, the constraints stated in (16) are satisfied. The other parameter settings are given by … and … . The TuckerALS and DisjointTuckerALS algorithms were executed using the data $\underline{\mathbf{X}}$ and the numbers of components $P$, $Q$, and $R$, obtaining fits of … and …, respectively; see Table 14. As expected, there is a loss of fit, amounting to 1.94%. However, there is a gain in interpretation, because a simple structure is obtained in the three loading matrices.
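How fit is measured is not restated here. A common choice for Tucker models, assumed in this sketch, is the percentage of the total sum of squares explained by the model. With orthonormal $\mathbf{A}$, $\mathbf{B}$, and $\mathbf{C}$ and the least-squares core $\mathbf{G}_A = \mathbf{A}^{\top}\mathbf{X}_A(\mathbf{C} \otimes \mathbf{B})$, this percentage equals $100\,\|\mathbf{G}_A\|^2 / \|\mathbf{X}_A\|^2$. A loss of fit such as the 1.94% above is then the difference between two such percentages. The code checks this identity on random data; the sizes and loading matrices are hypothetical and are not outputs of TuckerALS or DisjointTuckerALS.

```python
import numpy as np

def fit_percent(X_A, A, B, C):
    """Percentage of the sum of squares of X_A (I x JK) explained by a Tucker
    model with orthonormal A, B, C and the least-squares core, computed two ways."""
    W = np.kron(C, B)                          # JK x QR, orthonormal columns
    G_A = A.T @ X_A @ W                        # least-squares core, P x QR
    X_hat = A @ G_A @ W.T
    resid = np.linalg.norm(X_A - X_hat) ** 2 / np.linalg.norm(X_A) ** 2
    via_core = np.linalg.norm(G_A) ** 2 / np.linalg.norm(X_A) ** 2
    return 100 * (1 - resid), 100 * via_core

rng = np.random.default_rng(2)
I, J, K, P, Q, R = 20, 6, 5, 3, 2, 2           # hypothetical sizes
X_A = rng.standard_normal((I, J * K))
A = np.linalg.qr(rng.standard_normal((I, P)))[0]
B = np.linalg.qr(rng.standard_normal((J, Q)))[0]
C = np.linalg.qr(rng.standard_normal((K, R)))[0]
fit_resid, fit_core = fit_percent(X_A, A, B, C)
print(np.isclose(fit_resid, fit_core))         # True
```

The identity holds because $\mathbf{X}_A \mapsto \mathbf{A}\mathbf{A}^{\top}\mathbf{X}_A(\mathbf{C}\otimes\mathbf{B})(\mathbf{C}\otimes\mathbf{B})^{\top}$ is an orthogonal projection. A disjoint solution restricts the admissible loading matrices, so its fit cannot exceed the fit of the unconstrained optimum.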

Table 15 reports the loading matrix $\mathbf{A}$. Note that the DisjointTuckerALS algorithm identifies the disjoint structure in the first mode: the first five original individuals are represented by the first disjoint orthogonal component, the next seven by the second, and the last eight by the third.
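Reading Table 15 amounts to finding, for each original individual, the single component on which it has a nonzero loading. The sketch below does this for a hypothetical disjoint loading matrix with the same group sizes (5, 7, and 8). The entries are random placeholders, not the values of Table 15, and the function name `memberships` is illustrative.

```python
import numpy as np

def memberships(A, tol=1e-12):
    """For a disjoint loading matrix (exactly one nonzero per row), return the
    component of each original element and the size of each group."""
    nonzero = np.abs(A) > tol
    if not np.all(nonzero.sum(axis=1) == 1):
        raise ValueError("loading matrix is not disjoint")
    group = np.argmax(nonzero, axis=1)        # column holding each row's nonzero
    counts = np.bincount(group, minlength=A.shape[1])
    return group, counts

rng = np.random.default_rng(3)
sizes = [5, 7, 8]
A = np.zeros((sum(sizes), len(sizes)))
start = 0
for col, m in enumerate(sizes):
    v = rng.uniform(0.5, 1.0, size=m)
    A[start:start + m, col] = v / np.linalg.norm(v)   # unit-norm column
    start += m
group, counts = memberships(A)
print(counts)   # [5 7 8]
```

Because the supports of the columns do not overlap, unit-norm columns are automatically orthogonal. This is why disjoint components can be read off directly, without rotation.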

Table 16 shows the loading matrix $\mathbf{B}$. The DisjointTuckerALS algorithm also identifies a disjoint structure in the second mode: it recognizes how the latent variables group the original variables.

Table 17 presents the loading matrix $\mathbf{C}$. Once again, the DisjointTuckerALS algorithm identifies the disjoint structure in the third mode.

The DisjointTuckerALS algorithm also recognizes how the latent locations group the original locations, so the three loading matrices are easily interpreted. In contrast, the three loading matrices obtained with the TuckerALS algorithm are not easy to interpret. Note that the disjoint approach can be complemented with rotations and sparse techniques for a better analysis.

4.4. Applying the DisjointTuckerALS Algorithm to Real Data

Next, the DisjointTuckerALS algorithm is executed with a three-way table $\underline{\mathbf{X}}$ of order … containing real data taken from [22], in which … Japanese university students evaluate … Chopin’s preludes using … bipolar scales. The data preprocessing and the number of components in each mode were chosen as in [22].

Table 18 reports the model fit in four different scenarios with …, …, and … . The full data set can be downloaded from http://three-mode.leidenuniv.nl.

For a comparative analysis, we choose the loading matrix related to Chopin’s preludes.

Table 19 reports this loading matrix as obtained with the TuckerALS algorithm. In [22], this matrix is not interpreted directly; instead, the authors apply rotations to it.

Table 20 provides the final (rotated) loading matrix used for interpretation in [22]. The first component is named “fast+minor, slow+major” and the second component is named “fast+major, slow+minor”.

Table 21 presents the loading matrix obtained with the DisjointTuckerALS algorithm. With the loading matrix of Table 21, the same conclusions are reached as with the rotated loading matrix of Table 20.