Abstract

One of the main drawbacks of the traditional methods for computing components in the three-way Tucker model is the complex structure of the final loading matrices, which prevents an easy interpretation of the obtained results. In this paper, we propose a heuristic algorithm for computing disjoint orthogonal components, facilitating the analysis of three-way data and the interpretation of results. In the computational experiments carried out, we observe that our novel algorithm ameliorates this drawback, generating final loading matrices with a simple structure that are therefore easier to interpret. Illustrations with real data are provided to show potential applications of the algorithm.

1. Introduction

Multivariate methods offer a simplified and descriptive look at a set of multidimensional data, where individuals and variables are numerous. These data are often organized in a matrix or two-way table, for whose analysis there is a wide theoretical background. In this context, three-way (or three-mode) tables, or third-order tensors, are obtained when a new way, such as time or location, is introduced into the two-way table; see more details in [1].

Tensor decomposition emerged during the 20th century [2] and, as mentioned in [3], Tucker [4] was responsible for its use within a multivariate context in the sixties, whereas, later on, in the seventies, Harshman [5] and Carroll and Chang [6] continued the use of tensor decomposition in multivariate methods.

The analysis of three-way tables attempts to identify patterns in the space of individuals, of variables, and of times (or situations in general), searching for robust and easy-to-interpret models in order to discover how individuals and variables are related to entities in the third mode [7].

The three-way Tucker model (or Tucker3, hereafter referred to as Tucker) is a tensor decomposition that generalizes principal component analysis (PCA) [8,9] to three-way tables. This multivariate method represents the original data in lower-dimensional spaces, enabling pattern recognition. Furthermore, it makes it possible to quantify the interactions between entities in the three modes.

Similar to what occurs with PCA, each mode of a three-way table can be represented in a space of lower dimension than the original space [10]. These spaces are characterized by the principal axes (components), which maximize the total variance and are linear combinations of the original entities [11]. Within the space of each mode, these axes are interpreted in accordance with the values of their components (loadings). When many loadings are non-negligible, interpretation can be difficult, leading to an inaccurate characterization of these new axes. Thus, it is desirable that each principal component or axis has few entities that contribute to the variability of the component in a relevant manner.

There are several theoretical approaches that yield tensor decompositions whose components have some loadings equal to zero. This is useful for facilitating the analysis and interpretation of three-way tables. One example is the sparse parallelizable tensor decomposition used in works on scattered components [12]. The sparse hierarchical Tucker model focuses on factoring high-order tensors [13]. In addition, the tensor truncated power method searches for a sparse decomposition by selecting variables [14]. An algorithm for the sparse Tucker decomposition that imposes an orthogonality condition on the loading matrices and sparsity conditions on the core was proposed in [15].

Unlike sparse methods, disjoint methods in two-way tables search for a decomposition with a loading matrix in which each row (original variable) has a non-zero entry in a single column (latent variable). Furthermore, each column of the loading matrix has at least one row with a non-zero entry. Hence, it is possible to obtain loading matrices of simple structure that facilitate the interpretation. A method for calculating disjoint orthogonal components in a two-way table was presented in [16]. Recently, an algorithm based on particle swarm optimization, which consists of a disjoint PCA with constraints for the case of two-way tables, was proposed in [17]. To the best of our knowledge, there are no methods for computing disjoint orthogonal components in three-way tables.

The objective of this work is to propose a heuristic algorithm that extends the existing methods for two-way tables to three-way tables. This proposal computes disjoint orthogonal components in the loading matrices of a Tucker model. We also introduce a procedure that suggests routes in which the proposed algorithm can be used. We call this new algorithm DisjointTuckerALS, because it is based on the alternating least squares (ALS) method.

The remainder of the paper is organized as follows. Section 2 defines what a disjoint orthogonal matrix is and presents the mathematical optimization problem that must be solved in order to calculate disjoint orthogonal components in the Tucker model. In Section 3, we introduce the DisjointTuckerALS algorithm. Section 4 presents the numerical applications of this work: computational experiments that evaluate the performance of our algorithm, as well as illustrations with real data that show its potential applications. Finally, Section 5 provides the conclusions of this study, together with some final remarks, limitations, a wide spectrum of additional applications that are different from those presented in the illustration with real data, and ideas for future research.

2. The Tucker Model and the Disjoint Approach

In this section, we present the structure of three-way tables and define the Tucker model, as well as a disjoint approach for this model.

2.1. Three-Way Tables

A three-way table represents a data set with three modes, such as individuals, variables, and situations, and is a three-dimensional array or third-order tensor. Such tensors have three variation modes: A-mode (with $I$ individuals); B-mode (with $J$ variables); and C-mode (with $K$ situations).

Let $\underline{\mathbf{X}}$ be a three-way table of order $I \times J \times K$. The generic element $x_{ijk}$ stores the measure of individual $i$ in variable $j$ and situation $k$. The tensor $\underline{\mathbf{X}}$ can be converted into a two-way table using a process of matricization. In this work, we use three types of supermatrices: A-mode, which yields a matrix $\mathbf{X}_A$ of order $I \times JK$; B-mode, which yields a matrix $\mathbf{X}_B$ of order $J \times IK$; and C-mode, which yields a matrix $\mathbf{X}_C$ of order $K \times IJ$. These supermatrices are defined as in [3], where $\mathbf{X}_A$, $\mathbf{X}_B$, and $\mathbf{X}_C$ are known as frontal, horizontal, and vertical slices matrices, respectively.
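The matricization step can be illustrated with a short NumPy sketch. The function name `unfold` and the column ordering are assumptions made here for illustration (frontal slices placed side by side for the A-mode, and analogous orderings for the other two modes); the ordering actually used in [3] should be checked before relying on it.

```python
import numpy as np

def unfold(X):
    """Return A-, B- and C-mode supermatrices of an I x J x K array.

    Assumed column ordering (hypothetical, for illustration only):
      X_A[i, k*J + j] = X_B[j, i*K + k] = X_C[k, j*I + i] = X[i, j, k]
    """
    I, J, K = X.shape
    X_A = X.transpose(0, 2, 1).reshape(I, K * J)  # frontal slices side by side
    X_B = X.transpose(1, 0, 2).reshape(J, I * K)
    X_C = X.transpose(2, 1, 0).reshape(K, J * I)
    return X_A, X_B, X_C

# Toy three-way table with I = 2 individuals, J = 3 variables, K = 4 situations
X = np.arange(24, dtype=float).reshape(2, 3, 4)
X_A, X_B, X_C = unfold(X)
print(X_A.shape, X_B.shape, X_C.shape)
```

With this ordering, columns `k*J` to `(k+1)*J - 1` of `X_A` hold the frontal slice `X[:, :, k]`.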

2.2. The Tucker Model

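Tucker is a multilinear model that approximates the three-way table $\underline{\mathbf{X}}$ using a dimensional reduction on its three modes. The Tucker tensor decomposition of $\underline{\mathbf{X}}$ is given by

$$x_{ijk} = \sum_{p=1}^{P} \sum_{q=1}^{Q} \sum_{r=1}^{R} a_{ip}\, b_{jq}\, c_{kr}\, g_{pqr} + e_{ijk}, \tag{1}$$

where $i = 1, \dots, I$, $j = 1, \dots, J$, and $k = 1, \dots, K$, with $a_{ip}$, $b_{jq}$, and $c_{kr}$ being the corresponding elements of the matrices $\mathbf{A}$ of order $I \times P$, $\mathbf{B}$ of order $J \times Q$, and $\mathbf{C}$ of order $K \times R$, which are called component or loading matrices. In addition, $g_{pqr}$ defined in (1) is the $(p,q,r)$-th element of the tensor $\underline{\mathbf{G}}$ of order $P \times Q \times R$, which is called the core and is considered a reduced version of the tensor $\underline{\mathbf{X}}$. The integers $P$, $Q$, and $R$ represent the number of components required on each mode, respectively. Thus, for instance, the matrix $\mathbf{A}$ contains $P$ columns that represent the new referential system of the individuals. Note that $\underline{\mathbf{E}}$, of order $I \times J \times K$ and with elements $e_{ijk}$, is a tensor of model errors.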

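The Tucker model can be represented by matrix equations that are based on all modes [3], which are stated as

$$\mathbf{X}_A = \mathbf{A}\,\mathbf{G}_A\,(\mathbf{C} \otimes \mathbf{B})^\top + \mathbf{E}_A, \tag{2}$$

$$\mathbf{X}_B = \mathbf{B}\,\mathbf{G}_B\,(\mathbf{A} \otimes \mathbf{C})^\top + \mathbf{E}_B, \tag{3}$$

$$\mathbf{X}_C = \mathbf{C}\,\mathbf{G}_C\,(\mathbf{B} \otimes \mathbf{A})^\top + \mathbf{E}_C, \tag{4}$$

where ⊗ is the Kronecker product. Furthermore, $\mathbf{G}_A$ of order $P \times QR$, $\mathbf{G}_B$ of order $Q \times PR$, and $\mathbf{G}_C$ of order $R \times PQ$, defined in (2), (3), and (4), are the frontal, horizontal, and vertical slices matrices from the core $\underline{\mathbf{G}}$, respectively. Observe that $\mathbf{E}_A$, $\mathbf{E}_B$, and $\mathbf{E}_C$ are the corresponding error matrices.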
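As a numerical sanity check, the element-wise Tucker model and its three matrix forms can be compared on random factors. This is a minimal sketch under an assumed matricization ordering (A-mode columns indexed by `k*J + j`, B-mode by `i*K + k`, C-mode by `j*I + i`) and with the Kronecker factors written as `(C ⊗ B)`, `(A ⊗ C)`, and `(B ⊗ A)` transposed; all variable names are illustrative, and the error term is set to zero.

```python
import numpy as np

rng = np.random.default_rng(0)
I, J, K, P, Q, R = 5, 4, 3, 2, 2, 2
A = rng.standard_normal((I, P))
B = rng.standard_normal((J, Q))
C = rng.standard_normal((K, R))
G = rng.standard_normal((P, Q, R))

# Element-wise model without error: x_ijk = sum_pqr a_ip * b_jq * c_kr * g_pqr
X = np.einsum("ip,jq,kr,pqr->ijk", A, B, C, G)

# Supermatrices of the data and of the core (same assumed ordering for both)
X_A = X.transpose(0, 2, 1).reshape(I, K * J)
X_B = X.transpose(1, 0, 2).reshape(J, I * K)
X_C = X.transpose(2, 1, 0).reshape(K, J * I)
G_A = G.transpose(0, 2, 1).reshape(P, R * Q)
G_B = G.transpose(1, 0, 2).reshape(Q, P * R)
G_C = G.transpose(2, 1, 0).reshape(R, Q * P)

# Matrix forms of the same model, one per mode
ok_A = np.allclose(X_A, A @ G_A @ np.kron(C, B).T)
ok_B = np.allclose(X_B, B @ G_B @ np.kron(A, C).T)
ok_C = np.allclose(X_C, C @ G_C @ np.kron(B, A).T)
print(ok_A, ok_B, ok_C)
```

The order of the Kronecker factors has to match the column ordering chosen for the supermatrices; a different unfolding convention would swap them.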

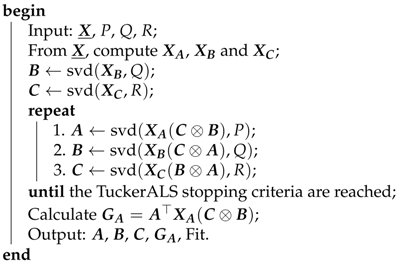

The orthogonal components in the Tucker model are computed with an algorithm based on the ALS method and the singular value decomposition (SVD), called the TuckerALS algorithm [10]. The ALS method splits the computation of the three loading matrices into steps: two of the matrices are fixed while the third is computed. This is done iteratively until the matrices do not differ significantly (for example, ; ; ), or until a maximum number of iterations (for example, 100) is attained; these conditions are called the TuckerALS stopping criteria.

The goodness-of-fit indicates how well the solution obtained by the algorithm approximates the original tensor. This goodness-of-fit is computed by the expression defined as

Algorithm 1 summarizes the TuckerALS method. The loading matrices , , , and the supermatrix are the output of the TuckerALS algorithm. The DisjointTuckerALS algorithm that we propose in this paper uses an adapted version of Algorithm 1. The notation means that is a matrix whose columns are the first Q left singular vectors of .

Algorithm 1: TuckerALS
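The alternating scheme described above can be sketched in NumPy as follows. This is an illustrative sketch and not a transcription of Algorithm 1: the helper names (`unfold`, `left_sv`, `tucker_als`), the initialization from the unfolded data, the stopping rule based on the change in fit, and the fit measure (percentage of the total sum of squares captured by the core, which is valid for orthonormal loadings) are all assumptions.

```python
import numpy as np

def unfold(X):
    # Assumed ordering: X_A[i, k*J+j], X_B[j, i*K+k], X_C[k, j*I+i]
    I, J, K = X.shape
    return (X.transpose(0, 2, 1).reshape(I, K * J),
            X.transpose(1, 0, 2).reshape(J, I * K),
            X.transpose(2, 1, 0).reshape(K, J * I))

def left_sv(M, n):
    """First n left singular vectors of M."""
    U, _, _ = np.linalg.svd(M, full_matrices=False)
    return U[:, :n]

def tucker_als(X, P, Q, R, max_iter=100, tol=1e-8):
    X_A, X_B, X_C = unfold(X)
    # Assumed start: leading singular vectors of the unfolded data
    B, C = left_sv(X_B, Q), left_sv(X_C, R)
    total, fit_old = np.sum(X ** 2), -np.inf
    for _ in range(max_iter):
        # Fix two loading matrices and update the third one
        A = left_sv(X_A @ np.kron(C, B), P)
        B = left_sv(X_B @ np.kron(A, C), Q)
        C = left_sv(X_C @ np.kron(B, A), R)
        G_A = A.T @ X_A @ np.kron(C, B)           # core, A-mode unfolding
        fit = 100.0 * np.sum(G_A ** 2) / total    # assumed fit measure (%)
        if abs(fit - fit_old) < tol:
            break
        fit_old = fit
    return A, B, C, G_A, fit

# Usage on a noise-free table with an exact (2, 2, 2) Tucker structure
rng = np.random.default_rng(1)
X = np.einsum("ip,jq,kr,pqr->ijk",
              rng.standard_normal((6, 2)), rng.standard_normal((5, 2)),
              rng.standard_normal((4, 2)), rng.standard_normal((2, 2, 2)))
A, B, C, G_A, fit = tucker_als(X, 2, 2, 2)
print(fit)
```

On such noise-free data the fit should be 100 up to rounding error; on real data it is lower and depends on the chosen numbers of components.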

2.3. Disjoint Approach for the Tucker Model

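Let $\mathbf{B}$ be a matrix of order $J \times Q$ with entries $b_{jq}$. Then, we say that $\mathbf{B}$ is disjoint if and only if:

- For all $j$, there exists a unique $q$ such that $b_{jq} \neq 0$.

- For all $q$, there exists $j$ such that $b_{jq} \neq 0$.

If $\mathbf{B}$ also satisfies $\mathbf{B}^\top \mathbf{B} = \mathbf{I}_Q$, where $\mathbf{I}_Q$ is the identity matrix of order $Q$, we say that $\mathbf{B}$ is a disjoint orthogonal matrix. The following is an example of a disjoint orthogonal matrix: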
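The two disjointness conditions and the orthogonality requirement can be checked numerically. The sketch below uses hypothetical helper names and a hypothetical $5 \times 2$ matrix chosen only for illustration; it is not the example matrix of the paper.

```python
import numpy as np

def is_disjoint(B, atol=1e-12):
    """Each row has exactly one non-zero entry; no column is entirely zero."""
    nonzero = np.abs(B) > atol
    one_per_row = np.all(nonzero.sum(axis=1) == 1)
    no_empty_column = np.all(nonzero.sum(axis=0) >= 1)
    return bool(one_per_row and no_empty_column)

def is_disjoint_orthogonal(B, atol=1e-10):
    """Disjoint and with orthonormal columns (B^T B = I)."""
    Q = B.shape[1]
    return is_disjoint(B) and bool(np.allclose(B.T @ B, np.eye(Q), atol=atol))

s2, s3 = 1 / np.sqrt(2), 1 / np.sqrt(3)
# Hypothetical matrix: rows 1 and 3 load on column 1, rows 2, 4, 5 on column 2
B = np.array([[s2, 0.0],
              [0.0, s3],
              [-s2, 0.0],
              [0.0, s3],
              [0.0, s3]])

# Disjoint but not orthogonal: the first column has squared norm 2
D = np.array([[1.0, 0.0],
              [1.0, 0.0],
              [0.0, 1.0]])

print(is_disjoint_orthogonal(B), is_disjoint(D), is_disjoint_orthogonal(D))
```

Because the columns of a disjoint matrix have non-overlapping supports, they are automatically mutually orthogonal; the condition $\mathbf{B}^\top \mathbf{B} = \mathbf{I}_Q$ then only adds that each column has unit norm.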

The mathematical optimization problem to be solved with the DisjointTuckerALS algorithm, when the three loading matrices $\mathbf{A}$, $\mathbf{B}$, and $\mathbf{C}$ are required to be disjoint orthogonal, is stated as

$$\min_{\mathbf{A},\,\mathbf{B},\,\mathbf{C},\,\mathbf{G}_A} \; \left\| \mathbf{X}_A - \mathbf{A}\,\mathbf{G}_A\,(\mathbf{C} \otimes \mathbf{B})^\top \right\|_F^2, \tag{6}$$

subject to:

$$\mathbf{A}^\top \mathbf{A} = \mathbf{I}_P, \tag{7}$$

$$\mathbf{B}^\top \mathbf{B} = \mathbf{I}_Q, \tag{8}$$

$$\mathbf{C}^\top \mathbf{C} = \mathbf{I}_R, \tag{9}$$

where $\|\cdot\|_F$ is the Frobenius norm, and $\mathbf{I}_P$, $\mathbf{I}_Q$, and $\mathbf{I}_R$ are identity matrices of order $P$, $Q$, and $R$, respectively. Note that the number of decision variables of this model is $IP + JQ + KR + PQR$. The constraints given in (7)–(9) are needed for the columns of each loading matrix to form an orthonormal set. In the previous mathematical problem, the objective function defined in (6) is minimized but, in practice, the fit is calculated according to (5).

In order to obtain a simple structure in a loading matrix for three-way tables, there are some known techniques: scaling, rotation, and sparse techniques. We propose a disjoint technique through the design and implementation of the DisjointTuckerALS algorithm. This requires a reduction of the three modes, and up to three disjoint orthogonal loading matrices can be obtained. Several methods for obtaining disjoint orthogonal components for two-way tables have been derived; see [16,18,19]. If $\mathbf{A}$, $\mathbf{B}$, and $\mathbf{C}$ are disjoint matrices, the mathematical model defined in (6) can be solved using the TuckerALS method stated in Algorithm 1, and the orthogonal components may then be obtained for the Tucker model.

2.4. Illustrative Example

We show the benefit of using the DisjointTuckerALS algorithm through a computational experiment for a three-way table that was taken from [7] and adapted by [20]. This small data set is provided in Table 1, Table 2, Table 3 and Table 4, which show three-way tables with individuals, variables, and situations, where the behavioral levels are measured.

Table 1.

Matrix of situation 1: “applying for an exam” for behavioral level data.

Table 2.

Matrix of situation 2: “giving a speech” for behavioral level data.

Table 3.

Matrix of situation 3: “family picnic” for behavioral level data.

Table 4.

Matrix of situation 4: “meeting a new date” for behavioral level data.

The four matrices of order that are presented in Table 1, Table 2, Table 3 and Table 4 correspond to the different scenarios or situations in which the levels of behavior are evaluated. Components are chosen and the TuckerALS algorithm is executed to obtain orthogonal components, with a model fit of , as in [7], and . When the DisjointTuckerALS algorithm is executed, the model fit is .

The loading matrices $\mathbf{A}$, $\mathbf{B}$, and $\mathbf{C}$ obtained with both the TuckerALS and DisjointTuckerALS algorithms are reported in Table 5, Table 6, Table 7, Table 8, Table 9 and Table 10. Disjoint orthogonal components were calculated for the loading matrices $\mathbf{A}$, $\mathbf{B}$, and $\mathbf{C}$, and are reported in Table 6, Table 8, and Table 10, respectively. The first component of the loading matrix $\mathbf{A}$ represents femininity and the second component masculinity; see Table 5 and Table 6. These tables allow us to affirm that the DisjointTuckerALS algorithm can identify the disjoint structure that lies in the individuals. For the loading matrix $\mathbf{B}$, the first component represents the emotional state and the second component is awareness; see Table 7 and Table 8. From these tables, note that the DisjointTuckerALS algorithm is able to identify the disjoint structure in the variables. For the loading matrix $\mathbf{C}$, the algorithm is able to group the four situations into two clusters; see Table 9 and Table 10. From Table 10, note that the first component is related to social situations and the second component to performance situations.

Table 5.

Loading matrix $\mathbf{A}$ with the TuckerALS algorithm for behavioral level data.

Table 6.

Loading matrix $\mathbf{A}$ with the DisjointTuckerALS algorithm for behavioral level data.

Table 7.

Loading matrix $\mathbf{B}$ with the TuckerALS algorithm for behavioral level data.

Table 8.

Loading matrix $\mathbf{B}$ with the DisjointTuckerALS algorithm for behavioral level data.

Table 9.

Loading matrix $\mathbf{C}$ with the TuckerALS algorithm for behavioral level data.

Table 10.

Loading matrix $\mathbf{C}$ with the DisjointTuckerALS algorithm for behavioral level data.

Table 11 and Table 12 show the core that was obtained with both of the algorithms. When observing both cores, it can be interpreted that women in social situations are mainly emotional and less aware. Conversely, men in the same situations are less emotional and more aware. In addition, in performance situations, women are mostly aware, while men are more aware than emotional in the same situations. The TuckerALS and DisjointTuckerALS algorithms yield different cores, but they are interpreted in the same manner.

Table 11.

Core $\underline{\mathbf{G}}$ with the TuckerALS algorithm for behavioral level data.

Table 12.

Core $\underline{\mathbf{G}}$ with the DisjointTuckerALS algorithm for behavioral level data.

When a three-way table is analyzed while using the Tucker model, it is important to point out that the loading matrices and are not always easily interpreted [7]. In many situations, these matrices need to be rotated (where any rotation is compensated in core ) in order to identify a simple structure that allows for interpretation. However, rotating the matrices does not guarantee that a simple structure is achieved. Therefore, the use of a sparse technique would be an alternative option. Nevertheless, it is worth mentioning that we have a loss of fit when using a sparse technique, which does not happen with rotations.

It is possible to rotate only the matrix or, alternatively, the matrices and can be simultaneously rotated in order to obtain a simple structure, thus improving the interpretation. However, in some cases, the data analyst can opt to rotate the three loading matrices at the same time. Similarly, in the DisjointTuckerALS algorithm, disjoint orthogonal components can be chosen in a single matrix, for example ; in two matrices, for example and ; or even in the three loading matrices.

The DisjointTuckerALS algorithm was executed with the same three-way table, considering all possible combinations of disjoint orthogonal components in the loading matrices $\mathbf{A}$, $\mathbf{B}$, and $\mathbf{C}$. Table 13 reports the results of comparing the different settings. From Table 13, and using the expression defined in (5), note that we lose fit when disjoint orthogonal components are required in the three loading matrices. There is thus a loss of fit when using the disjoint technique, although interpretable loading matrices are achieved. There is also a tradeoff between interpretation and speed, because the DisjointTuckerALS algorithm takes longer than the TuckerALS algorithm. For details regarding the computational time (runtime) of the algorithms presented in Table 13, see Section 4.1.

Table 13.

Comparison of fit and runtime for behavioral level data.

2.5. The DisjointPCA Algorithm

The mathematical optimization model that yields a disjoint orthogonal loading matrix in a two-way table is stated as

$$\min \; \left\| \mathbf{X} - \mathbf{Y}\mathbf{B}^\top \right\|_F^2, \tag{10}$$

subject to $\mathbf{B}^\top \mathbf{B} = \mathbf{I}_Q$, with $\mathbf{B}$ being a disjoint matrix, where $\mathbf{X}$ is the data matrix of order $I \times J$, with $I$ individuals and $J$ variables, $\mathbf{Y}$ is the scoring matrix of order $I \times Q$, and $\mathbf{B}$ is the loading matrix of order $J \times Q$. Note that $Q$ is the number of components required in the variable mode. Here, we use a greedy algorithm, known as the DisjointPCA algorithm proposed by [16], in order to find a solution to the minimization problem defined in (10). The DisjointPCA algorithm plays a fundamental role in the operation of the DisjointTuckerALS algorithm.

The notation means that the DisjointPCA algorithm with a tolerance Tol is applied to the data matrix , and then the disjoint orthogonal loading matrix , with Q components, is obtained as a result. Recall that the DisjointPCA algorithm was proposed by Vichi and Saporta [16], which is why we use the acronym “vs” in the above notation. Note that Tol is a tolerance parameter that represents the maximum difference allowed in the model fit between two consecutive iterations of the DisjointPCA algorithm. The above notation is used in order to explain how the DisjointTuckerALS algorithm works; see [16,21] for more details on the DisjointPCA algorithm.
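To make the idea of a greedy search for a disjoint orthogonal loading matrix concrete, the following NumPy sketch may help. It is a simplified stand-in written for illustration and is not the DisjointPCA algorithm of [16]: it assumes that, for a fixed assignment of variables to components, each column of the loading matrix is the first principal axis of its block of variables, and it then moves one variable at a time whenever the explained sum of squares increases. All function names, the random initial partition, and the stopping rule are hypothetical.

```python
import numpy as np

def loadings_from_labels(X, labels, Q):
    """Disjoint orthogonal loading matrix for a given partition of variables."""
    B = np.zeros((X.shape[1], Q))
    for q in range(Q):
        cols = np.flatnonzero(labels == q)
        # First right singular vector of the block: unit norm, block-supported
        _, _, Vt = np.linalg.svd(X[:, cols], full_matrices=False)
        B[cols, q] = Vt[0]
    return B

def explained(X, B):
    # For orthonormal B, minimizing ||X - X B B^T||_F^2 maximizes ||X B||_F^2
    return np.sum((X @ B) ** 2)

def disjoint_pca_sketch(X, Q, tol=1e-6, max_sweeps=50, seed=0):
    rng = np.random.default_rng(seed)
    J = X.shape[1]
    labels = rng.permutation(np.arange(J) % Q)  # every component is non-empty
    best = explained(X, loadings_from_labels(X, labels, Q))
    for _ in range(max_sweeps):
        previous = best
        for j in range(J):
            if np.sum(labels == labels[j]) == 1:
                continue  # moving j would leave its component empty
            for q in range(Q):
                if q == labels[j]:
                    continue
                trial = labels.copy()
                trial[j] = q
                value = explained(X, loadings_from_labels(X, trial, Q))
                if value > best:  # greedy: keep any improving move
                    best, labels = value, trial
        if best - previous < tol:  # improvement over a full sweep below Tol
            break
    return loadings_from_labels(X, labels, Q), labels

# Usage: five variables driven by two latent variables (blocks of 3 and 2)
rng = np.random.default_rng(0)
z = rng.standard_normal((50, 2))
X = np.hstack([z[:, [0]] + 0.1 * rng.standard_normal((50, 3)),
               z[:, [1]] + 0.1 * rng.standard_normal((50, 2))])
B, labels = disjoint_pca_sketch(X, Q=2)
print(labels)
print(np.round(B, 3))
```

Like any greedy search, this sketch can stop at a local optimum, so several random starts (different `seed` values) would normally be compared.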

3. The DisjointTuckerALS Algorithm

In this section, we derive the DisjointTuckerALS algorithm in order to compute from one to three disjoint orthogonal loading matrices for the Tucker model. Next, we explain how the DisjointTuckerALS algorithm works.

3.1. The Stages of the Algorithm

The DisjointTuckerALS algorithm has three stages and its input parameters are:

- $\underline{\mathbf{X}}$: Three-way table of data;

- $P$, $Q$, $R$: Number of components in the A-mode, B-mode, and C-mode, respectively;

- ALSMaxIter: Maximum number of iterations of the ALS algorithm; and

- Tol: Maximum distance allowed in the fit of the model for two consecutive iterations of the DisjointPCA algorithm.

Stage 1

[Initial computation of loading matrices with an adapted TuckerALS algorithm]

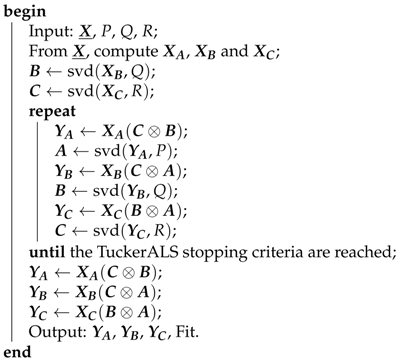

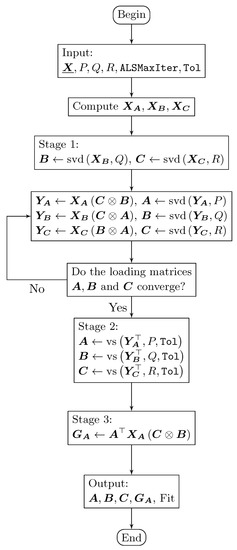

In this first stage, an initial calculation of the loading matrices is made. To do this, an adapted TuckerALS algorithm is executed, as defined in Algorithm 2. The output in Algorithm 2 are the matrices , , and of order , , and , respectively.

Algorithm 2: Adapted TuckerALS

Stage 2

[Computation of disjoint orthogonal loading matrices with the DisjointPCA algorithm]

This second stage is where the disjoint orthogonal loading matrices are computed. In order to obtain P, Q, and R disjoint orthogonal components in the loading matrices , , and , the DisjointPCA algorithm is applied to the matrices , and , respectively. If is required to be disjoint orthogonal, then we have that: . If is required to be disjoint orthogonal, then we have that: . If is required to be disjoint orthogonal, then we have that: .

Stage 3

[Computation of non-disjoint orthogonal loading matrices and of the core]

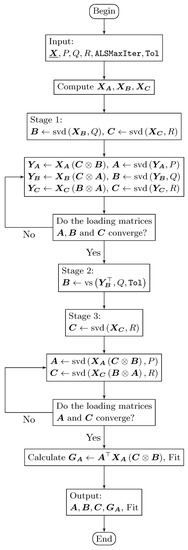

This final stage is where the non-disjoint orthogonal loading matrices are computed. For instance, if it is required that only the matrix has disjoint orthogonal components (see Figure 1), then an ALS algorithm must be applied in order to compute loading matrices and (with the matrix being fixed). The same occurs in the TuckerALS algorithm, initializing or . In addition, if it is required that the matrices and have disjoint orthogonal components (see Figure 2), then the loading matrix is calculated while using the following two steps (the matrices and are fixed), as in the TuckerALS algorithm: (1) ; and (2) . If , and are required to be disjoint orthogonal (see Figure 3), then no calculation is necessary in the loading matrices. Therefore, the DisjointTuckerALS algorithm must compute the core while using two steps. Thus, by using the frontal slices equation from , we have that: (1) ; and (2) . The DisjointTuckerALS algorithm finishes by providing the matrices , and , the core , and the fit of the model.
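The case in which two loading matrices are kept fixed can be sketched as follows. This is an illustrative reading of the two-step computation, not the authors' exact formulas: it assumes that the fixed disjoint orthogonal matrices are those of the first two modes (called `A` and `B` here), that the remaining matrix `C` is taken as the first R left singular vectors of the C-mode supermatrix projected onto the fixed loadings, and that the core and fit are computed as in the sketch conventions used for the A-mode unfolding. The function name and the toy matrices are hypothetical.

```python
import numpy as np

def stage3_given_A_B(X, A, B, R):
    """Compute C, the core (A-mode unfolding) and the fit, with A and B fixed."""
    I, J, K = X.shape
    X_A = X.transpose(0, 2, 1).reshape(I, K * J)  # assumed column order k*J + j
    X_C = X.transpose(2, 1, 0).reshape(K, J * I)  # assumed column order j*I + i
    # Step 1: project the C-mode supermatrix onto the fixed loadings
    Y = X_C @ np.kron(B, A)
    # Step 2: C holds the first R left singular vectors of Y
    U, _, _ = np.linalg.svd(Y, full_matrices=False)
    C = U[:, :R]
    # Core from the A-mode equation, using the orthonormality of A, B, and C
    G_A = A.T @ X_A @ np.kron(C, B)
    fit = 100.0 * np.sum(G_A ** 2) / np.sum(X ** 2)  # assumed fit measure (%)
    return C, G_A, fit

rng = np.random.default_rng(0)
X = rng.standard_normal((4, 4, 3))
s = 1 / np.sqrt(2)
# Hypothetical disjoint orthogonal loading matrices for the two fixed modes
A = np.array([[s, 0.0], [s, 0.0], [0.0, s], [0.0, s]])
B = np.array([[s, 0.0], [-s, 0.0], [0.0, s], [0.0, s]])
C, G_A, fit = stage3_given_A_B(X, A, B, R=2)
print(C.shape, G_A.shape, fit)
```

When all three loading matrices are fixed, only the core line (`G_A = A.T @ X_A @ np.kron(C, B)`) and the fit remain to be evaluated.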

Figure 1.

Flowchart of the DisjointTuckerALS algorithm that computes a single disjoint orthogonal matrix (in this case, the matrix ).

Figure 2.

Flowchart of the DisjointTuckerALS algorithm that computes two disjoint orthogonal matrices (in this case the matrices and ).

Figure 3.

Flowchart of the DisjointTuckerALS algorithm that computes three disjoint orthogonal matrices: , , and .

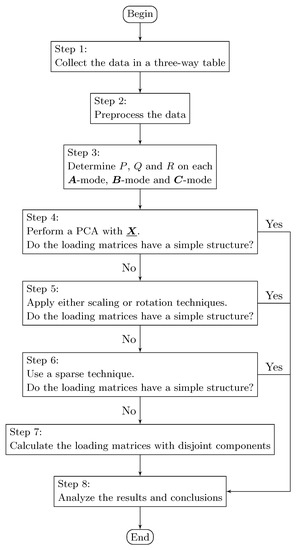

3.2. Using the DisjointTuckerALS Algorithm

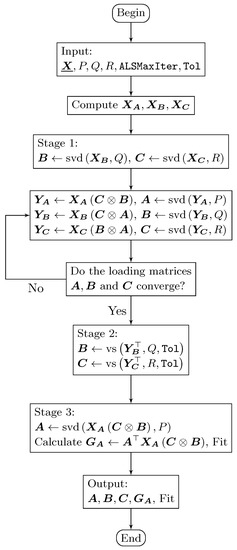

We summarize our proposal in Algorithm 3 in order to explain the use of the DisjointTuckerALS algorithm when performing a component analysis for three-way tables with the Tucker model.

Whether to compute the disjoint orthogonal components in Step 7 simultaneously in the three loading matrices is up to the analyst. However, this is not recommended, due to the significant loss of fit that we have observed in the different computational experiments that were carried out. When more disjoint orthogonal loading matrices are computed, the model fit decreases and more processing time is required. More than one technique can be used by combining Step 5, Step 6, and Step 7. Subsequently, the results can be compared in Step 8; see Figure 4.

Algorithm 3: Procedure for using DisjointTuckerALS

Figure 4.

Flowchart for using the DisjointTuckerALS algorithm.

4. Numerical Results

In this section, we carry out computational experiments in order to evaluate the performance of our algorithm. The first experiment corresponds to data that are simulated to generate a three-way table with a disjoint structure according to the Tucker model. The second one is an experiment using real data that correspond to a three-way table taken from [22]. In this section, we also provide details of some computational aspects, such as runtimes of the algorithm, characteristics of the hardware, and software used, among others.

4.1. Computational Aspects

The DisjointTuckerALS algorithm requires more computational time (runtime) than the TuckerALS algorithm. This is because, as the number of loading matrices calculated as disjoint increases, the time required for their calculation also increases, consuming more memory and processor resources.

The computational experiments were carried out on a computer with the following hardware characteristics: (i) OS: 64-bit Windows 10; (ii) RAM: 8 GB; and (iii) processor: Intel Core i7-4510U 2-2.60 GHz. Regarding the software, the following tools and programming languages were used: (i) development tool (IDE): Microsoft Visual Studio Express; (ii) programming language: C#.NET; and (iii) statistical software: R.

The DisjointPCA, TuckerALS, and DisjointTuckerALS algorithms used for all of the numerical applications in this paper were implemented mainly in C#.NET, which handles the graphical user interface (GUI) for data entry, the control of calculations, and the delivery of results. Data entry and presentation of results were carried out with Excel sheets, and communication between C#.NET and Excel was established through a connector known as COM+. Some parts of the code were implemented in the R programming language, namely random number generation and the SVD. Communication between C#.NET and R was established with the R.NET connector, which can be installed in Visual Studio through the NuGet package manager, whereas the SVD was performed with the R package irlba. This package quickly calculates a partial SVD, that is, only the first singular values and vectors, where the number of singular values to be calculated must be specified. Therefore, the irlba package neither uses nor computes the remaining singular values, which accelerates the calculations for big matrices, such as the frontal, horizontal, and vertical slices matrices of a three-way table.

4.2. Generator of Disjoint Structure Tensors

We design and implement a simulation algorithm to randomly build a three-way table with a disjoint latent structure in its three modes. The DisjointTuckerALS algorithm should then be able to detect that structure, since it uses a Tucker model with disjoint orthogonal components.

Let be a three-way table with I individuals, J variables, and K times or locations. Assume that: (i) the first mode, which is related to the loading matrix , has P latent individuals (); (ii) the second mode, which is related to the loading matrix , has Q latent variables (); and, (iii) the third mode, which is related to the loading matrix , has R latent locations (). Suppose that are the I original individuals. In addition, are the P latent individuals. We consider the linear combination that is given by

If it is required that the m original consecutive individuals, , are represented by the latent individual , then the scalars are defined as independent random variables with discrete uniform distribution, whose support is the closed set of integer numbers from 70 to 100. The other scalars in the same linear combination are defined as independent random variables with discrete uniform distribution, whose support is the closed set of integer numbers from one to 30. This procedure must be performed for each p from 1 to P, since each original individual must have a strong presence in a unique latent individual. The Gram–Schmidt orthonormalization process is applied to the matrix of order , which has the scalars from all the linear combinations. Hence, a disjoint dimensional reduction of the loading matrix is achieved.
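The construction of one loading matrix with a dominant block structure can be sketched in NumPy as follows. The function name and the block sizes are hypothetical, and a QR factorization is used in place of an explicit Gram–Schmidt loop, since it yields the same orthonormal columns up to sign.

```python
import numpy as np

def disjoint_structure_loadings(block_sizes, seed=0):
    """Random orthonormal loading matrix with one dominant block per column."""
    rng = np.random.default_rng(seed)
    n, P = sum(block_sizes), len(block_sizes)
    # Weak weights: discrete uniform on {1, ..., 30} (upper bound is exclusive)
    W = rng.integers(1, 31, size=(n, P)).astype(float)
    start = 0
    for p, m in enumerate(block_sizes):
        # Strong weights for the m consecutive rows tied to latent entity p:
        # discrete uniform on {70, ..., 100}
        W[start:start + m, p] = rng.integers(70, 101, size=m)
        start += m
    # Orthonormalize the columns (equivalent to Gram-Schmidt up to sign)
    Qmat, _ = np.linalg.qr(W)
    return Qmat

# Usage: 20 original individuals grouped as 5, 7, and 8 into 3 latent individuals
A = disjoint_structure_loadings([5, 7, 8])
print(A.shape)
print(np.round(A.T @ A, 6))
```

The resulting matrix is orthonormal but not exactly disjoint: the weak weights remain as small non-zero loadings, which is the latent structure a disjoint method is expected to recover.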

Similarly with the loading matrix , consider that are the J original variables. In addition, are the Q latent variables. We consider the linear combination that is stated as

If it is required that the m consecutive original variables are represented by the latent variable , then the scalars are defined as independent random variables with discrete uniform distribution, similarly as for the matrix . In the same manner as before, this procedure must be performed for each q from 1 to Q and the Gram–Schmidt orthonormalization process is again applied, as in the case of , and then a disjoint dimensional reduction of is achieved.

Analogously with , let be the K times or original locations and be the R latent times or latent locations. We consider the linear combination that is expressed by

If it is required that the m consecutive original locations are represented by the latent location , then the scalars are defined as with and , and the procedure is applied for each r from 1 to R. Once again, the Gram–Schmidt orthonormalization process is applied to the matrix of order that has the scalars from all of the linear combinations. Thus, a disjoint dimensional reduction of the loading matrix is achieved.

The Core must be of order . With no loss of generality, suppose that the inputs of that three-way table are independent random variables with continuous uniform distribution in the interval . In order to complete the creation of , the matrix equation is defined as

The matrix of order stated in (14) has the frontal slices of , whereas the matrix of order has the frontal slices of . Equations (11)–(13) are used in order to build the random loading matrices. The algorithm that builds the three-way random table of order must implement an application that is expressed as

which is subject to , and , where is the set of all three-way tables with entries in the real numbers. Additionally, it must satisfy that

where is the number of original individuals in the p-th latent individual; is the number of original variables in the q-th latent variable; and is the number of original locations in the r-th latent location. The application that is defined in (15) must randomly provide a three-way table of order that has a simple structure, which is expected to be detected by the DisjointTuckerALS algorithm.
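The assembly of the simulated three-way table from its loading matrices and a random core can be sketched as below. The interval of the uniform distribution is exposed through the hypothetical parameters `low` and `high` (set to 0 and 1 here only as placeholders), the loading matrices are generic random orthonormal stand-ins, and the A-mode ordering (columns indexed by `k*J + j`) is an assumption of this sketch.

```python
import numpy as np

def random_orthonormal(n, p, rng):
    """Stand-in loading matrix: orthonormal columns from a QR factorization."""
    Qmat, _ = np.linalg.qr(rng.standard_normal((n, p)))
    return Qmat

def build_tensor(A, B, C, rng, low=0.0, high=1.0):
    """Three-way table from loadings and a core with uniform random entries."""
    (I, P), (J, Q), (K, R) = A.shape, B.shape, C.shape
    G = rng.uniform(low, high, size=(P, Q, R))     # random core
    G_A = G.transpose(0, 2, 1).reshape(P, R * Q)   # frontal slices of the core
    X_A = A @ G_A @ np.kron(C, B).T                # frontal slices of the table
    X = X_A.reshape(I, K, J).transpose(0, 2, 1)    # fold back to I x J x K
    return X, G

rng = np.random.default_rng(0)
A = random_orthonormal(20, 3, rng)
B = random_orthonormal(10, 4, rng)
C = random_orthonormal(8, 2, rng)
X, G = build_tensor(A, B, C, rng)
print(X.shape)
```

Folding `X_A` back into a three-dimensional array gives the same table as the element-wise model, which can be verified with `np.einsum("ip,jq,kr,pqr->ijk", A, B, C, G)`.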

4.3. Applying the DisjointTuckerALS Algorithm to Simulated Data

Next, we show how the DisjointTuckerALS algorithm works by using the application in order to generate a three-way table of order . According to the definition of given in (15), the values of P, Q, R are and 5 respectively. Furthermore, constraints stated in (16) are satisfied. The other parameter setting is given by and . The TuckerALS and DisjointTuckerALS algorithms were executed while using the data , , , , obtaining a fit of and , respectively; see Table 14. As expected, we have a loss of fit valued at 1.94%. However, there is a gain in interpretation, because a simple structure in the three loading matrices is obtained. Table 15 reports the loading matrix . Note that the DisjointTuckerALS algorithm is able to identify the disjoint structure in the first mode. Observe that five original individuals are represented by the first disjoint orthogonal component. The next seven original individuals are represented by the second disjoint orthogonal component. In addition, the last eight original individuals are represented by the third disjoint orthogonal component. Table 16 shows the loading matrix . The fact that the DisjointTuckerALS algorithm is able to identify a disjoint structure in the second mode is highlighted. The algorithm is able to recognize the way in which the latent variables group the original variables. Table 17 presents the loading matrix and, once again, note that the DisjointTuckerALS algorithm identifies the disjoint structure in the third mode.

Table 14.

Comparison of fit and runtime for simulated data.

Table 15.

Loading matrix $\mathbf{A}$ with the DisjointTuckerALS algorithm for simulated data.

Table 16.

Loading matrix $\mathbf{B}$ with the DisjointTuckerALS algorithm for simulated data.

Table 17.

Loading matrix $\mathbf{C}$ with the DisjointTuckerALS algorithm for simulated data.

The DisjointTuckerALS algorithm also recognizes the manner in which the latent locations group the original locations and the three loading matrices are easily interpreted. However, when running the TuckerALS algorithm, the three loading matrices do not allow for an easy interpretation. Note that the disjoint approach can be complemented with rotations and sparse techniques for better analysis.

4.4. Applying the DisjointTuckerALS Algorithm to Real Data

Next, the DisjointTuckerALS algorithm is executed with a three-way table of order with real data being taken from [22]. Note that Japanese university students evaluate Chopin’s preludes while using bipolar scales. The preprocessing of the data and the number of components in each mode have been chosen in the same manner, as in [22]. Table 18 reports the model fit in four different scenarios with , , and . The full data set can be downloaded from http://three-mode.leidenuniv.nl.

Table 18.

Comparison of fit and runtime for Chopin’s preludes data.

For a comparative analysis, the loading matrix that is related to Chopin’s preludes is chosen. Table 19 reports the loading matrix obtained while using the TuckerALS algorithm. In [22], this matrix is not interpreted and they proceed to make rotations.

Table 19.

Loading matrix obtained with the TuckerALS algorithm for Chopin’s preludes data.

Table 20 provides the final loading matrix used for interpretation. The first component is named “fast+minor, slow+major” and the second component is named “fast+major, slow+minor”. Table 21 presents the loading matrix that was obtained with the DisjointTuckerALS algorithm. Note that, with the loading matrix of Table 21, the same conclusions are reached as with the loading matrix of Table 20.

Table 20.

Loading matrix obtained by rotations for Chopin’s preludes data.

Table 21.

Loading matrix obtained with the DisjointTuckerALS algorithm for Chopin’s data.

5. Conclusions, Discussion, Limitations, and Future Research

The main techniques for dimensionality reduction, pattern extraction, and classification in data obtained through tensorial analysis have been based on the Tucker model. However, a major problem of the existing techniques is the interpretability of their results. In this work, we have proposed a heuristic algorithm for computing disjoint orthogonal components in a three-way table with the Tucker model, which facilitates this interpretability. The DisjointTuckerALS algorithm is based on a combination of the TuckerALS and DisjointPCA algorithms. The results obtained in the computational experiments have shown that the main benefit of the proposed algorithm is the ease of direct interpretation of the loading matrices without using rotational methods or sparse techniques. The computational experiments have also suggested that the algorithm can detect and capture disjoint structures in a three-way table according to the Tucker model. In summary, this paper reported the following findings:

- (i) A new algorithm for computing disjoint orthogonal components in a three-way table with the Tucker model was proposed.

- (ii) A measure of goodness of fit to evaluate the algorithms presented was proposed.

- (iii) A mathematical optimization model was used.

- (iv) A numerical evaluation of the proposed methodology was carried out by means of Monte Carlo simulations.

- (v) The new algorithm was illustrated with a case study based on real-world data.
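As a rough illustration of finding (ii), the following sketch computes one common goodness-of-fit quantity for a Tucker model whose loading matrices have orthonormal columns: the proportion of the total sum of squares reproduced by the model. This is an assumption about the general form of such a measure and may differ in detail from the measure defined earlier in the paper. The function names `unfold`, `leading_vectors`, and `tucker_fit` are hypothetical, and the loadings are taken from a truncated higher-order SVD as a simple stand-in, not from TuckerALS or DisjointTuckerALS.

```python
import numpy as np

def unfold(X, mode):
    """Mode-n unfolding of a three-way array: rows index the chosen mode."""
    return np.moveaxis(X, mode, 0).reshape(X.shape[mode], -1)

def leading_vectors(X, mode, n_components):
    """Leading left singular vectors of the mode-n unfolding (HOSVD-style stand-in)."""
    U, _, _ = np.linalg.svd(unfold(X, mode), full_matrices=False)
    return U[:, :n_components]

def tucker_fit(X, A, B, C):
    """Proportion of the sum of squares of X reproduced by a Tucker model.

    A (I x P), B (J x Q), C (K x R) are assumed to have orthonormal columns,
    so the least-squares core is obtained by projecting X onto them.
    """
    G = np.einsum("ijk,ip,jq,kr->pqr", X, A, B, C)      # core array
    X_hat = np.einsum("pqr,ip,jq,kr->ijk", G, A, B, C)  # reconstructed array
    return 1.0 - np.sum((X - X_hat) ** 2) / np.sum(X ** 2)

# Simulated three-way table (purely illustrative data).
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 5, 4))

# Reduced model with (P, Q, R) = (2, 2, 2) components.
A, B, C = (leading_vectors(X, mode, 2) for mode in range(3))
fit_reduced = tucker_fit(X, A, B, C)

# Full model: all components retained in every mode.
A_full, B_full, C_full = (leading_vectors(X, mode, X.shape[mode]) for mode in range(3))
fit_full = tucker_fit(X, A_full, B_full, C_full)

print(f"fit with (2, 2, 2) components: {fit_reduced:.3f}")
print(f"fit with all components:       {fit_full:.3f}")
```

Because the reconstruction is an orthogonal projection of the data, this quantity lies between 0 and 1, and it reaches 1 when all components are retained in every mode.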

Numerical experiments with the proposed algorithm on simulated and real data sets allowed us to show its good performance and its potential applications. We obtained a new algorithm that can be a useful addition to the multivariate toolkit of diverse practitioners, applied statisticians, and data scientists.

Some limitations of our study, which could be addressed in future work, are the following:

- (i) There is no guarantee that the optimal solution is attained, due to the heuristic nature of the DisjointTuckerALS algorithm.

- (ii) In the absence of constraints additional to those inherent to the original problem, the space of feasible solutions contains the global optimum. However, when the constraints of the DisjointTuckerALS algorithm are incorporated, the space of feasible solutions shrinks, and the algorithm aims to find a solution that is as close as possible to the global optimum within this new set of feasible solutions. For this reason, the fit corresponding to the solution provided by the DisjointTuckerALS algorithm is generally lower than the fit achieved by the TuckerALS algorithm. Nevertheless, the incorporated constraints allow us to put zeros in the positions of the loading matrix that correspond to variables with a low contribution to a component, which permits us to interpret the components more clearly.

- (iii) The proposed algorithm takes longer than the TuckerALS algorithm, so a tradeoff between interpretability and speed exists.
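The loss of fit described in limitation (ii) can be illustrated in a simpler two-way (matrix) setting. The sketch below is not the DisjointTuckerALS algorithm: it fixes a partition of the variables by hand (the list `groups` is hypothetical), builds a disjoint orthonormal loading matrix for that partition, and compares the proportion of explained sum of squares with that of unconstrained orthonormal loadings. The helper name `disjoint_loadings` and the simulated data are assumptions made only for illustration.

```python
import numpy as np

def disjoint_loadings(S, groups):
    """Disjoint orthonormal loading matrix for a fixed partition of the variables.

    S is the (J x J) cross-product matrix of the centered data and `groups`
    is a list of index lists, one per component. Each variable loads on
    exactly one component; within a group, the loadings are the leading
    eigenvector of the corresponding diagonal block of S.
    """
    V = np.zeros((S.shape[0], len(groups)))
    for q, idx in enumerate(groups):
        _, U = np.linalg.eigh(S[np.ix_(idx, idx)])  # eigenvalues in ascending order
        V[idx, q] = U[:, -1]                        # leading eigenvector of the block
    return V

# Simulated, column-centered two-way data (purely illustrative).
rng = np.random.default_rng(1)
Y = rng.normal(size=(50, 6))
Y -= Y.mean(axis=0)
S = Y.T @ Y

# Hypothetical partition fixed by hand; the actual algorithm searches for it.
groups = [[0, 1, 2], [3, 4, 5]]
V_disjoint = disjoint_loadings(S, groups)

# Unconstrained orthonormal loadings: the two leading eigenvectors of S.
_, U = np.linalg.eigh(S)
V_free = U[:, -2:]

total = np.trace(S)
fit_free = np.trace(V_free.T @ S @ V_free) / total
fit_disjoint = np.trace(V_disjoint.T @ S @ V_disjoint) / total

print(np.round(V_disjoint, 3))  # one non-zero entry per row
print(f"unconstrained fit: {fit_free:.3f}, disjoint fit: {fit_disjoint:.3f}")
```

In this setting, the disjoint loading matrix is one particular matrix with orthonormal columns, so its explained proportion cannot exceed that of the leading eigenvectors. In exchange, each row has a single non-zero loading, which is what makes the components easier to read.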

To motivate readers and potential users, we list a range of additional applications of the new algorithm to real three-way data in diverse areas:

- (i) Functional magnetic resonance imaging (fMRI) has been successfully used by neuroscientists for the diagnosis of neurological and neurodevelopmental disorders. fMRI data have been analyzed by means of tensorial methods using the Tucker model [23].

- (ii) Component analysis in three-way tables also has applications in environmental sciences. For example, in [24], through the multivariate study of a sample of blue crabs, the hypothesis is tested that environmental stress weakens some organisms, since the normal immune response is not able to protect them from a bacterial infection. A Tucker model was used for this analysis.

- (iii) Price index data were analyzed in [25] using the Tucker decomposition in search of behavior patterns. The DisjointTuckerALS algorithm can be used for detecting these patterns.

- (iv) An application in economics, based on three-way tables, to the specialization indexes of the electronic industries of 23 European countries of the Organisation for Economic Co-operation and Development (OECD) is presented in [1]; see also http://three-mode.leidenuniv.nl. Applications in stock markets and breakpoint analysis for the COVID-19 pandemic can also be considered [26].

- (v) The website “The Three-Mode Company” (see http://three-mode.leidenuniv.nl) provides data sets corresponding to three-way tables from areas including engineering, management, and medicine. These data sets are related to (a) aerosol particles in Austria; (b) diseased blue crabs in the US; (c) chromatography; (d) coping Dutch primary school children; (e) Dutch hospitals as organizations; (f) girls’ growth curves between five and 15 years old; (g) happiness, siblings, and schooling; (h) multiple personalities; (i) parental behavior in Japan; (j) peer play and a new sibling; (k) Dutch children in the strange situations; and (l) university positions and academics.

Some open problems that arose from this study are the following:

- (i) We believe that the disjoint approach can be used together with existing techniques.

- (ii) A study that allows for obtaining a disjoint structure in the core of a Tucker model to facilitate its interpretation is of interest.

- (iii) A bootstrap analysis for the loading matrices can be performed.

- (iv) Regression modeling, errors-in-variables models, functional data analysis, and partial least squares (PLS) regression based on the proposed methodology are also of interest [27,28,29,30].

- (v) Other multivariate methods to which the developed algorithm could be applied include discriminant analysis, correspondence analysis, and cluster analysis, as well as the already mentioned functional data analysis and PLS.

- (vi) There is also a promising field of applications in statistical learning, for example, in image compression.

Therefore, the new methodology proposed in this study poses new challenges and open issues to be explored from the theoretical and numerical perspectives. Research on these and other issues is in progress, and we hope to report the findings in future articles.

Author Contributions

Data curation, C.M.-B. and J.A.R.-F.; formal analysis, C.M.-B., J.A.R.-F., A.B.N.-L., V.L., A.M.-C., M.P.G.-V.; investigation, C.M.-B., M.P.G.-V.; methodology, C.M.-B., J.A.R.-F., A.B.N.-L., V.L., A.M.-C., M.P.G.-V.; writing—original draft, C.M.-B., J.A.R.-F., A.B.N.-L., A.M.-C., M.P.G.-V.; writing—review and editing, V.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported partially by project grant “Fondecyt 1200525” (V. Leiva) from the National Agency for Research and Development (ANID) of the Chilean government.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are available in this paper, in the links there provided or from the corresponding author upon request.

Acknowledgments

The authors would also like to thank the Editor and Reviewers for their constructive comments, which led to an improved presentation of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- [1] Kroonenberg, P.M. Applied Multiway Data Analysis; Wiley: New York, NY, USA, 2008.

- [2] Hitchcock, F.L. The expression of a tensor or a polyadic as a sum of products. J. Math. Phys. 1927, 6, 164–189.

- [3] Kolda, T.G.; Bader, B.W. Tensor decompositions and applications. SIAM Rev. 2009, 51, 455–500.

- [4] Tucker, L.R. Some mathematical notes on three-mode factor analysis. Psychometrika 1966, 31, 279–311.

- [5] Harshman, R.A. Foundations of the PARAFAC procedure: Models and conditions for an explanatory multimodal factor analysis. UCLA Work. Pap. Phon. 1970, 16, 1–84.

- [6] Carroll, J.D.; Chang, J.J. Analysis of individual differences in multidimensional scaling via an n-way generalization of “Eckart-Young” decomposition. Psychometrika 1970, 35, 283–319.

- [7] Kiers, H.A.L.; Mechelen, I.V. Three-way component analysis: Principles and illustrative application. Psychol. Methods 2001, 6, 84–110.

- [8] Kolda, T.G. Orthogonal tensor decompositions. SIAM J. Matrix Anal. Appl. 2001, 23, 243–255.

- [9] Acal, C.; Aguilera, A.M.; Escabias, M. New modeling approaches based on varimax rotation of functional principal components. Mathematics 2020, 8, 2085.

- [10] Kroonenberg, P.M.; de Leeuw, J. Principal component analysis of three-mode data by means of alternating least squares algorithms. Psychometrika 1980, 45, 69–97.

- [11] Jolliffe, I.T. Principal Component Analysis; Springer: New York, NY, USA, 2002.

- [12] Papalexakis, E.E.; Faloutsos, C.; Sidiropoulos, N.D. Parcube: Sparse parallelizable tensor decompositions. In Machine Learning and Knowledge Discovery in Databases; Springer: Berlin/Heidelberg, Germany, 2012; pp. 521–536.

- [13] Perros, I.; Chen, R.; Vuduc, R.; Sun, J. Sparse hierarchical Tucker factorization and its application to healthcare. In Proceedings of the IEEE International Conference on Data Mining, Atlantic City, NJ, USA, 14–17 November 2015; pp. 943–948.

- [14] Sun, W.W.; Junwei, L.; Han, L.; Guang, C. Provable sparse tensor decomposition. J. R. Stat. Soc. B 2017, 79, 899–916.

- [15] Yokota, T.; Cichocki, A. Multilinear tensor rank estimation via sparse Tucker decomposition. In Proceedings of the 2014 Joint 7th International Conference on Soft Computing and Intelligent Systems (SCIS) and 15th International Symposium on Advance Intelligent Systems (ISIS), Kitakyushu, Japan, 3–6 December 2014.

- [16] Vichi, M.; Saporta, G. Clustering and disjoint principal component analysis. Comput. Stat. Data Anal. 2009, 53, 3194–3208.

- [17] Ramirez-Figueroa, J.A.; Martin-Barreiro, C.; Nieto-Librero, A.B.; Leiva, V.; Galindo, M.P. A new principal component analysis by particle swarm optimization with an environmental application for data science. Stoch. Environ. Res. Risk Assess. 2021, in press.

- [18] Ferrara, C.; Martella, F.; Vichi, M. Dimensions of well-being and their statistical measurements. In Topics in Theoretical and Applied Statistics; Alleva, G., Giommi, A., Eds.; Springer: Cham, Switzerland, 2016; pp. 85–99.

- [19] Nieto-Librero, A.B. Inferential Version of Biplot Methods Based on Bootstrapping and its Application to Three-Way Tables. Ph.D. Thesis, Universidad de Salamanca, Salamanca, Spain, 2015. (In Spanish).

- [20] Amaya, J.; Pacheco, P. Dynamic factor analysis using the Tucker3 method. Rev. Colomb. Estad. 2002, 25, 43–57.

- [21] Macedo, E.; Freitas, A. The alternating least-squares algorithm for CDPCA. In Optimization in the Natural Sciences; Plakhov, A., Tchemisova, T., Freitas, A., Eds.; Springer: Cham, Switzerland, 2015; pp. 173–191.

- [22] Murakami, T.; Kroonenberg, P.M. Three-mode models and individual differences in semantic differential data. Multivar. Behav. Res. 2003, 38, 247–283.

- [23] Hamdi, S.M.; Wu, Y.; Boubrahimi, S.F.; Angryk, R.; Krishnamurthy, L.C.; Morris, R. Tensor decomposition for neurodevelopmental disorder prediction. In Brain Informatics; Wang, S., Yamamoto, V., Su, J., Yang, Y., Jones, E., Iasemidis, L., Mitchell, T., Eds.; Springer: Cham, Switzerland, 2018; pp. 339–348.

- [24] Gemperline, P.J.; Miller, K.H.; West, T.L.; Weinstein, J.E.; Hamilton, J.C.; Bray, J.T. Principal component analysis, trace elements, and blue crab shell disease. Anal. Chem. 1992, 64, 523–531.

- [25] Correa, F.E.; Oliveira, M.D.; Gama, J.; Correa, P.L.P.; Rady, J. Analyzing the behavior dynamics of grain price indexes using Tucker tensor decomposition and spatio-temporal trajectories. Comput. Electron. Agric. 2016, 120, 72–78.

- [26] Chahuan-Jimenez, K.; Rubilar, R.; de la Fuente-Mella, H.; Leiva, V. Breakpoint analysis for the COVID-19 pandemic and its effect on the stock markets. Entropy 2021, 23, 100.

- [27] Huerta, M.; Leiva, V.; Liu, S.; Rodriguez, M.; Villegas, D. On a partial least squares regression model for asymmetric data with a chemical application in mining. Chemom. Intell. Lab. Syst. 2019, 190, 55–68.

- [28] Carrasco, J.M.F.; Figueroa-Zuniga, J.I.; Leiva, V.; Riquelme, M.; Aykroyd, R.G. An errors-in-variables model based on the Birnbaum-Saunders and its diagnostics with an application to earthquake data. Stoch. Environ. Res. Risk Assess. 2020, 34, 369–380.

- [29] Giraldo, R.; Herrera, L.; Leiva, V. Cokriging prediction using as secondary variable a functional random field with application in environmental pollution. Mathematics 2020, 8, 1305.

- [30] Melendez, R.; Giraldo, R.; Leiva, V. Sign, Wilcoxon and Mann-Whitney tests for functional data: An approach based on random projections. Mathematics 2021, 9, 44.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).