Abstract

Magnetorheological (MR) dampers play a crucial role in various engineering systems, and how to identify the control parameters of MR damper models without any prior knowledge has become a burning problem. In this study, to identify the control parameters of MR damper models more accurately, an improved manta ray foraging optimization (IMRFO) is proposed. The new algorithm designs a searching control factor according to a weak exploration ability of MRFO, which can effectively increase the global exploration of the algorithm. To prevent the premature convergence of the local optima, an adaptive weight coefficient based on the Levy flight is designed. Moreover, by introducing the Morlet wavelet mutation strategy to the algorithm, the mutation space is adaptively adjusted to enhance the ability of the algorithm to step out of stagnation and the convergence rate. The performance of the IMRFO is evaluated on two sets of benchmark functions and the results confirm the competitiveness of the proposed algorithm. Additionally, the IMRFO is applied in identifying the control parameters of MR dampers, the simulation results reveal the effectiveness and practicality of the IMRFO in the engineering applications.

1. Introduction

Magnetorheological (MR) dampers are a kind of intelligent semi-active control device, the output damping force of the damper can be controlled by controlling the current instruction in the coil [1,2]. MR dampers are famous for their fast reaction speed, less energy consumption and wide control range; therefore, MR dampers are widely applied in various engineering domains, e.g., vehicle systems [3,4]. However, the mathematical models of MR dampers are very complicated because of their special mechanical characteristic-hysteretic property. Xu et al. [5] introduced a new single-rod MR damper with combined volume compensator; meanwhile, the design method of the combined compensator with independent functions of volume compensation and compensation force was proposed. Yu et al. [6] proposed a novel compact rotary MR damper with variable damping and stiffness and a unique structure that contains two driven disks and an active rotary disk was designed. Boreiry et al. [7] investigated the chaotic response of a nonlinear seven-degree-of-freedom full vehicle model equipped with an MR damper, and the equations of motion was proposed by employing the modified Bouc–Wen model for an MR damper. Many MR dampers have emerged with different uses and functions. Among them, the Bouc–Wen model is a typical one, which contains numerous unknown parameters. In addition, a range of other factors, including the current size, piston speed and excitation factors, also affect the accuracy of the model, which makes parameter identification of the model more difficult. Therefore, how to find an effective method to identify the control parameters of MR dampers is of great importance and has become a challenging task. In addition, the possible magneto-thermal problem of the MR damper to MR fluid is also becoming a pressing issue [8,9,10].

With the rapid development of computer technologies, meta-heuristic algorithms, a suitable technology, are applied in MR dampers to establish a set of accurate and reliable models. Many scholars at home and abroad carry out the relevant research work and have achieved some results. Giuclea et al. [11] used a genetic algorithm (GA) to identify the control parameters of a modified Bouc–Wen model, in which the applied currents are determined using the curve fitting method. However, in addition to the implementation complexity, a GA may suffer from premature convergence and its optimization performance depends mostly on the probability election for crossover, mutation and selecting operators [12]. Kwok et al. [13] gave a parameter identification technique for a Bouc–Wen damper model using particle swarm optimization (PSO), and some experimental force–velocity data under various operating conditions were employed. Although PSO is improved by using a stop criterion in this study, it easily traps into the local optima and suffers from premature convergence [14]. Thus, the application of PSO in MR dampers is limited, owing to its drawbacks of nature.

Despite the shortcomings of PSO and GAs, meta-heuristic methods are particularly popular for their randomness, easy implementation and black box treatment of problems [15,16]. An increasing number of scholars have proposed meta-heuristic algorithms inspired from different mechanisms by studying animal behaviors and physical phenomena in nature [17,18,19]. Some of the most popular algorithms are the bat algorithm (BA) [20], squirrel search algorithm (SSA) [21], artificial ecosystem-based optimization [22], whale optimization algorithm (WOA) [23], virus colony search (VCS) [24], fruit fly optimization algorithm (FOA) [25], butterfly optimization algorithm (BOA) [26], spotted hyena optimizer (SHO) [27], grasshopper optimization algorithm (GOA) [28], flower pollination algorithm (FPA) [29], crow search algorithm (CSA) [30], grey wolf optimizer (GWO) [31], water cycle algorithm (WCA) [32], gravitational search algorithm (GSA) [33], atom search optimization (ASO) [34], henry gas solubility optimization (HGSO) [35], charged system search (CSS) [36], water evaporation optimization (WEO) [37], equilibrium optimizer (EO) [38] and supply–demand-based optimization (SDO) [39]. In the last ten years, a great number of bio-inspired optimization and nature-inspired optimization algorithms have been proposed. These algorithms are significantly distinct in their natural or biological inspirations; therefore, one can find its category easily and without any ambiguity. In addition, the algorithms are different with respect to their search behaviors, i.e., how they update new candidate solutions during the iteration process [40]. Although these meta-heuristic methods perform well in tackling some challenging real-world problems, they have some disadvantages and their optimization performance still remains to be improved, especially for some inherently high-nonlinear and non-convex problems, such as the economic dispatch (ED) problem [41]. To overall the drawbacks of these algorithms, hybridization might produce new algorithmic behaviors that are effective in improving the optimization performance of the algorithms.

Manta ray foraging optimization (MRFO) [42,43] is a new meta-heuristic algorithm, which simulates the foraging behavior of manta rays in nature. The local exploitation is performed by the chain foraging and somersault foraging behaviors, while the global exploration is performed by the cyclone foraging behavior. This algorithm shows some optimization capabilities and is very successful in applying in some engineering domains, such as geophysics [44], energy allocation [45,46,47], image processing [48] and electric power [49]. These successful applications in the literature confirm MRFO is effective in solving different complex real-world problems. Even though MRFO belongs to a category of meta-heuristic algorithms, it is significantly distinct from other widely employed meta-heuristics in terms of ideology and conception. The major difference between MRFO and PSO is their different biology behaviors. PSO is inspired by the movement of bird flocks or fish schools in nature, whereas MRFO is inspired by the social foraging behaviors of manta rays. Another distinct difference between both is the solution searching mechanism. The solutions in PSO are produced by the combination of the global best solution found thus far and the local best solution, as well as the movement velocity of the individuals; whereas, in MRFO, the solutions are produced by the combination of the global best solution found thus far and the solution in front of it by switching different movement strategies. For a GA and MRFO, both are quite different. A GA is inspired by Darwin’s theory of evolution, which is quite different from the social foraging behaviors of manta rays in MRFO. The second difference is the representation of problem variables. In GAs, the problem variables are encoded in a series of fixed-length bit strings; in MRFO, the problem variables are used directly. Moreover, in GAs, by performing the roulette wheel selection strategy, better solutions have a greater probability of creating new solutions, and worse solutions are probably replaced by new better solutions [27]. While in MRFO, all the individuals in the population have the same probability of improving their solutions. Although both MRFO and bacterial foraging optimization (BFO) [50] are swarm-based and bio-inspired meta-heuristic techniques, which model the biology foraging behaviors, there are still some significant differences. The major difference between MRFO and BFO is that the concepts of searching in the foraging behaviors are different. MRFO models three foraging behaviors of manta rays including chain foraging, cyclone foraging and somersault foraging, while BFO models the social foraging behaviors of Escherichia coli, such as chemotaxis, swarming, reproduction and elimination–dispersal. The second difference is that BFO produces new solutions by adding a random direction with a fixed-length step pace, whereas MRFO produces new solutions with respect to the best solution and the solution in front of it. Additionally, in BFO, when updating the solutions, half of the solutions with higher health are reproduced and half of the solutions with lower health are discarded; MRFO accepts new solutions that are better than current solutions. The last difference between both is that BFO uses the health degree of the bacteria as the fitness values of solutions, while MRFO generally uses the function values of the given problems as the fitness values of solutions. It is obvious that BFO and MRFO follow totally different approaches when solving optimization problems.

However, similar to other meta-heuristics, MRFO also suffers from some disadvantages, i.e., premature convergence, trapping into the local optima and weak exploration [51,52]. There are many scholars who adopt various methods to enhance the performance of MRFO in the literature. Turgut [51] integrated the best performing ten chaotic maps into MRFO and proposed a novel chaotic MRFO to the thermal design problem, this improved version can effectively escape the local optima and increase the convergence rate of the algorithm. Calasan et al. [53] enhanced the optimization ability of the algorithm by integrating the chaotic numbers produced by the Logistic map in MRFO, this variant was used to estimate the transformer parameters. Houssein et al. [52] proposed an efficient MRFO algorithm by hybridizing opposition-based learning with initialization steps of MRFO, which was used to solve the image segmentation problem. Hassan et al. [54] solved the economic emission dispatch problems through hybridizing the gradient-based optimizer with MRFO, which may lead to the avoidance of local optima and accelerate the conveyance rate. Elaziz et al. [55] utilized the heredity and non-locality properties of the Grunwald–Letnikov fractional differ-integral operator to improve the exploitation ability of the algorithm. Elaziz et al. [56] proposed the modified MRFO based on differential evolution as an effective feature selection approach to identify COVID-19 patients by analyzing their chest images. Jena et al. [57] introduced a new attacking MRFO algorithm, which was used for the maximum 3D Tsallis entropy based multilevel thresholding of a brain MR image. Yang et al. [58] devised a hybrid Framework based on MRFO and grey prediction theory to forecast and evaluate the influence of PM10 on public health. Ramadan et al. [59] used MRFO to search for a feasible configuration of the distribution network to achieve fault-tolerance and fast recovery reliable configurable DN in smart grids. Houssein et al. [60] used MRFO to extract the PV parameters of single, double and triple-diode models. It shows better abilities to exploit the search space for unimodal problems, but the weak exploration and easily trapping into the local optima for multimodal problems as well as the unbalance between exploration and exploration for hybrid problems are still a few of the major issues.

It can be obvious that it is feasible to design some improved versions of MRFO, and the above implementations are good examples of this capability. However, it seems difficult for these improved versions to simultaneously improve their overall optimization capabilities combined with the shortcomings of the algorithm, such as exploration, exploitation, balance between both and convergence rate. Therefore, it is necessary to conduct some research attempts to establish a comprehensive improvement strategy for effectively tracking complex optimization problems. Based on the investigations mentioned above, a new hybrid model by combining different operators and designing some search strategies to comprehensively enhance the optimization performance of the algorithm is one of the major research attempts. This is the main motivation of this study. An effective optimization method can accurately obtain the parameters of the complex MR damper. These selected parameters have large influences on the output damping force, which gives the damper better hysteretic and dynamic characteristics. Therefore, it has a wide application value in industry and civil fields at home and abroad.

In light of the fact mentioned above, a hybrid MR damper model based on an optimization technique is proposed in this study. To obtain the optimal control parameters of the MR damper, an improved MRFO (IMRFO) combing a searching control factor, an adaptive weight coefficient with the Levy flight and a wavelet mutation are presented. Firstly, based on the analysis for the exploration probability of MRFO, the weak exploration capability of MRFO is promoted by introducing the searching control mechanism, in which a searching control factor is proposed to adaptively adjust the search option according to the number of iterations, promoting the exploration ability of the algorithm. Secondly, the weight coefficient in the cyclone foraging is redefined by combining with the Levy flight; this search mechanism can effectively promote the diversity of search individuals and prevent the premature convergence of local optima. Finally, the Morlet wavelet mutation strategy is employed to dynamically adjust the mutation space by calculating the energy concentration of the wavelet function; this strategy can improve the ability of the algorithm to step out of stagnation and the convergence rate. The effectiveness of the IMRFO is verified on two sets of standard benchmark functions. Additionally, the proposed IMRFO has been applied to optimally identify the control parameters of the MR dampers. The results have been compared with those of other algorithms, demonstrating the superiority of the IMRFO in solving the complex engineering problems.

The novelty points of this study are highlighted below.

In this study, a searching control factor, an adaptive weight coefficient and a wavelet mutation strategy are designed and integrated into MRFO.

The searching control factor proposed is a decreasing time-dependent function with the rand oscillation, it proved to effectively improve the exploration ability of the algorithm.

The adaptive weight coefficient is equipped with the Levy flight, which can adjust the step pace of the individuals having a Levy distribution with the iterations; it strengthens the diversity of search individuals.

The Morlet wavelet mutation utilizes the multi-resolution and energy concentration characteristics of the wavelet function to step out of stagnation and increase the convergence rate of the algorithm.

The Bouc–Wen model is established under various working conditions; the results discover that the IMRFO is more successful than its competitors in identifying the control parameters of the MR models.

The rest of this paper is organized as follows: Section 2 briefly introduces the basic MRFO, and Section 3 describes the proposed IMRFO algorithm in detail. The performance analysis of the IMRFO on benchmark functions is provided in Section 4. The experiment results and analysis for identifying the control parameters of MR dampers are presented in Section 5. Section 6 gives the conclusions and suggests a direction for future research.

2. Manta Ray Foraging Optimization (MRFO)

MRFO performs the optimization process by simulating the foraging behaviors of manta rays in nature to obtain the global optimum in the search space. MRFO has the following three foraging behaviors: chain foraging, cyclone foraging and somersault foraging. The chain foraging and somersault foraging behaviors contribute mainly to the exploitation search, while the cyclone foraging focuses on the exploration search. When solving an optimization problem using MRFO, the following update mechanisms are employed via the three foraging behaviors.

The chain foraging strategy of manta rays is as follows [42]:

The cyclone foraging of manta rays is as follows [42]:

and

The somersault foraging of manta rays is as follows [42]:

where xi(t) is the position of the ith individual at iteration t, r is a random vector in [0, 1], r1, r2 and r3 are the random numbers in [0, 1], xbest(t) is the food with the highest concentration, xrand(t) is a random position randomly produced in the search space, Lb and Ub are the lower and upper boundaries of the variables, respectively and T is the maximum number of iterations.

3. Improved Manta Ray Foraging Optimization (IMRFO)

In MRFO, in the exploitation phase, each individual updates its position with respect to the individual with the best fitness by adjusting the values of α and β, which results in the decrease in the population diversity and stagnation in the local optima; meanwhile, the lack of fine-tuning ability also causes weak solution stability. To overcome these disadvantages, an improved MRFO, named IMRFO, is proposed. In MRFO, the value of t/T is employed to balance exploratory and exploitative searches, and the exploration probability of 0.25 indicates the weak exploration ability of the algorithm; therefore, a searching control factor is proposed in the IMRFO to improve the searching progress and exploration process. To enhance the search efficiency of the algorithm, an adaptive weight coefficient with the Levy flight is introduced in the algorithm, which can maximize the diversification of individuals and maintain the balance between population diversity and concentration. To avoid prematurely converging to local optima and a guaranteed solution stability, the Morlet wavelet mutation with the fine-tuning ability is incorporated into the algorithm.

3.1. Searching Control Factor

In MRFO, in the whole iteration process, there is a probability of 50% to perform the cyclone foraging in which the first half of searches contribute to exploration, according to the value of t/T. This is, the probability of exploration is only 25% and the relatively low probability happens in the first half of the optimization process, indicating the weak exploration ability of MRFO. In regard to this weak exploration, the searching behavior of MRFO is controlled by the value of t/T, which increases linearly as the iterations rise; however, in the IMRFO algorithm, the searching ability of the algorithm is controlled by a coefficient, ps, called the searching control factor, which not only enables the algorithm to perform exploration with high probability in the second half of the optimization process but also makes it possible for the algorithm to perform exploration in the second half of the optimization process. The searching control factor ps is defined as follows:

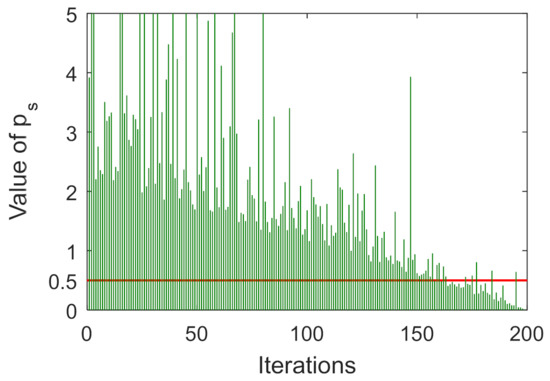

where r is the random number in the interval (0, 1]. Figure 1 gives the time-dependent curve of the searching control factor. When ps > 0.5, the IMRFO performs exploration; otherwise, the algorithm performs exploitation. Obviously, from Figure 1, the searching control factor shows a decreasing trend with random oscillation, which also obliges the algorithm to explore the search in the later iteration.

Figure 1.

Time−dependent curve of searching control factor.

Let , then , the probability of ps > 0.5 is given by the following:

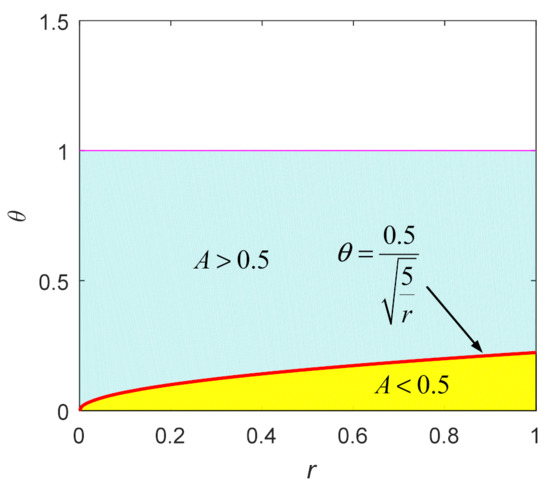

Therefore, the probability of exploration in the IMRFO algorithm is 0.5 × 0.8509 = 0.4254 over the course of optimization. Figure 2 depicts the schematic diagram of the exploration probability of the algorithm based on the searching control factor ps. Hence, the global exploration of the algorithm can be improved from 25 to 42.54% through the searching control factor ps.

Figure 2.

Schematic diagram of exploration probability of the algorithm based on the searching control probability ps.

3.2. Adaptive Weight Coefficient with Levy Flight

Levy flight, as a simulation for the foraging activities of creatures, has been developed as an efficient search mechanism for an unknown space; therefore, it is widely employed to promote the search efficiency of various meta-heuristics [61,62,63,64,65,66]. In this part, using the fat-tailed characteristic producing numerous short steps punctuated by a few long steps from the Levy flight to construct a non-local random search mechanism, we can improve the search behavior in the cyclone foraging strategy of MRFO, which can effectively promote the diversity of search individuals and prevent the premature convergence of local optima.

The random step length of the Levy flight is offered by the following Levy distribution [66]:

where λ is a stability/tail index, and s is the step length. According to Mantegna’s algorithm [66], the step length of the Levy flight is given as follows:

where u and v obey the normal distribution, respectively. That is:

Γ is the standard Gamma function and the default value of β is set to 1.5.

Therefore, an adaptive weight coefficient with the Levy flight in the cyclone foraging strategy is designed as follows:

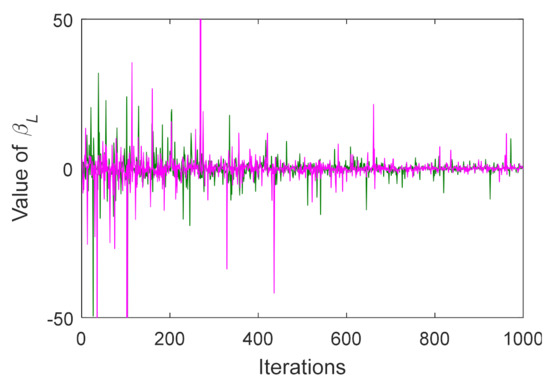

Observing Equation (14), on the one hand, the multiple short steps produced frequently by the Levy flight promote the exploitation capacity, while the long steps produced occasionally enhance the exploration capacity, guaranteeing the local optima avoidance of the algorithm; on the other hand, is a decreasing function with the iterations, depending on the decreasing trend of this function. A larger search scope is provided during the early iterations, while a smaller search scope is provided in the later iterations, this characteristic can promote the search efficiency of the algorithm and prevent the step lengths going out of the boundaries of the variables. The behavior of βL during two runs with 1000 iterations is demonstrated in Figure 3.

Figure 3.

Value of βL during two runs with 1000 iterations.

The cyclone foraging of the IMRFO algorithm can be given as follows:

3.3. Wavelet Mutation Strategy

It is easy for MRFO to trap into local optima, which results in an ineffective search for the global optimum and the instability of solutions. To improve the ability of the algorithm to step out of stagnation and the convergence rate and the stability of solutions, the Morlet wavelet mutation is incorporated into the somersault foraging strategy in the IMRFO algorithm. The wavelet mutation indicates that a dynamic adjustment of the mutation is implemented by combining the translations and dilations of a wavelet function [66,67]. To meet the fine-tuning purpose, the dilation parameters of the wavelet function are controlled to reduce its amplitude, which results in a constrain on the mutation space as the iterations increase.

Assume that pm is the mutation probability, r4 is a random number in [0, 1]. By incorporating the wavelet mutation, the somersault foraging strategy is improved by the following:

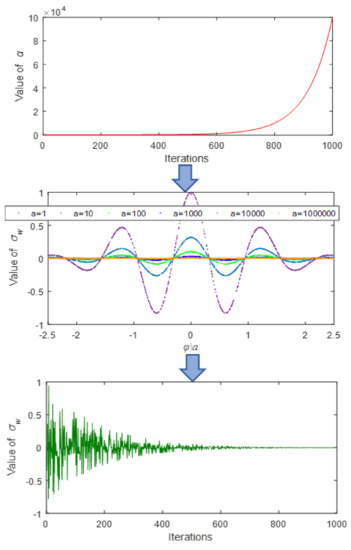

where pm is the mutation probability that is set to 0.1, and σw is the dilation parameters of a wavelet function, which can be given as follows:

where is the Morlet wavelet function and it is defined as follows:

More than 99% of the total energy of the wavelet function is contained in [−2.5, 2.5]. Thus, σw can be randomly generated from [−2.5a, 2.5a] [67]. a is the dilation parameter, which increases from 1 to s as the number of iterations increases. To avoid missing the global optimum, a monotonic increasing function can be given as follows [67]:

where g is a constant number that is set to 100,000. The calculation of the Morlet wavelet mutation is visualized in Figure 4. From Figure 4, the value of the dilation parameter increases with the decrease in t, and thus, the amplitude of the Morlet wavelet function is decreased, which gradually reduces the significance of the mutation; this merit can ensure that the algorithm jumps out of local optima and improves the precision of the solutions. Moreover, the overall positive and negative mutations are nearly the same during the iteration process, and this property also ensures good solution stability.

Figure 4.

Calculation of the Morlet wavelet mutation.

3.4. The Proposed IMRFO Algorithm

In the IMRFO, the improvements for MRFO consist of the following three parts: first, a searching control factor is designed according to a weak exploration ability of MRFO, which can effectively increase the global exploration of the algorithm; second, to prevent the premature convergence of the local optima, an adaptive weight coefficient based on the Levy flight is designed to promote the search efficiency of the algorithm; three, the Morlet wavelet mutation strategy is introduced to the algorithm, in which the mutation space is adaptively adjusted according to the energy concentration of the wavelet function, it is helpful for the algorithm to step out of stagnation and speed up the convergence rate. In the IMRFO, in the somersault foraging, when the condition of r4 < pm is met, the Morlet wavelet mutation strategy is performed. The dilation parameters of a wavelet function σw is calculated according to the position of the individual xi via Equations (18)–(20), and then the position of the individual is updated with respect to the upper boundary or lower boundary of the search space, according to the sign of σw (negative or positive) based on Equation (20). By this means, the Morlet wavelet mutation strategy is well integrated to the algorithm.

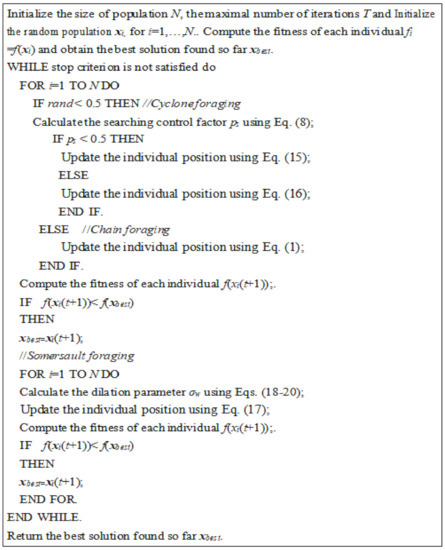

The pseudocode of the IMRFO algorithm is given in Figure 5, and the specific steps are as follows:

Figure 5.

Pseudocode of IMRFO.

Step1: The related parameters of the IMRFO are initialized, such as the size of population, N, the maximum number of iterations, T and the mutation probability, pm.

Step2: The initial population is randomly produced {x1(0), x2(0), …, xN(0)}, the fitness of each individual is evaluated and the best solution x*(0) is restored.

Step3: If t ≤ T, for each individual in the population xi(t), i = 1, …, N, if the condition of rand < 0.5 is met, the searching control factor ps is calculated according to Equation (8).

Step 4: All the individuals are sorted according to their fitness from small to large and the adaptive weight coefficient is calculated according to Equations (10)–(14); if ps < 0.5, the individual position is updated according to Equation (15), otherwise, the individual position is updated according to Equation (16); if the condition of rand < 0.5 is not met, the individual position is updated according to Equation (1).

Step 5: The individual that is out of the boundaries is relocated in the search space; the fitness value for each individual is evaluated and the best solution found thus far, x*(t), is restored.

Step 6: For each individual in the population xi(t), the dilation parameter σw is calculated according to Equations (18)–(20), and the individual position is updated according to Equation (17).

Step 7: The individual that is out of the boundaries is relocated in the search space; the fitness value for each individual is evaluated and the best solution found thus far, x*(t), is restored.

Step 8: If the stop criterion is met, the best solution found thus far, x*(t), is restored; otherwise, set t = t + 1 and go to Step 3.

4. Experimental Analysis and Results

To analyze the performance of the IMRFO algorithm, a set of benchmark functions are employed in this study. This function suite contains the following three types: unimodal (UF), multimodal (MF) and composite (CF) functions. The UF functions (f1–f7) are able to check the exploitation ability of the algorithms and the MF functions (f8–f13) are able to reveal the exploration ability of the algorithms. The CF functions (f14–f21) are selected from the CEC 2014 benchmark suite [68], which are often employed in many optimization algorithms and can check the balance ability between exploration and exploitation. Details of these benchmark problems are described in the literature [69,70]. The performance of the IMRFO is compared with that of other optimization methods. These comparative optimizers in this study include: PSO, as the most popular swarm-based algorithm; GSA, as the most well-known physics-based algorithm; WOA and MRFO, as recent optimizers; comprehensive learning particle swarm optimizer (CLPSO) [71] and evolution strategy with covariance matrix adaptation (CMA-ES) [72], as highly effective optimizers; and PSOGSA and improved grey wolf optimization (IGWO) [7], as recent improved meta-heuristics with high performance.

For all the considered optimizers, the swarm size and maximum fitness evaluations (FEs) are set to 50 and 25,000, respectively. All the results of the algorithms are based on the average performance of 30 independent runs. The parameter settings of all the algorithms are given in Table 1.

Table 1.

Parameter settings for each algorithm.

4.1. Exploitation Evaluation

Table 2 shows the results provided by the IMRFO and other optimizers in tackling UF functions f1–f7 with different dimensions. Inspecting Table 2, the IMRFO is very competitive with other optimizers on UF functions. In particular, there are significant improvements on functions f5 and f7 for all the dimensions. For the other UF functions, the IMRFO provides almost the same results as MRFO, followed by other algorithms. Therefore, the present algorithm is very effective in exploiting around the optimum and, thus, ensures a good exploitation ability.

Table 2.

Results provided algorithms for UF functions.

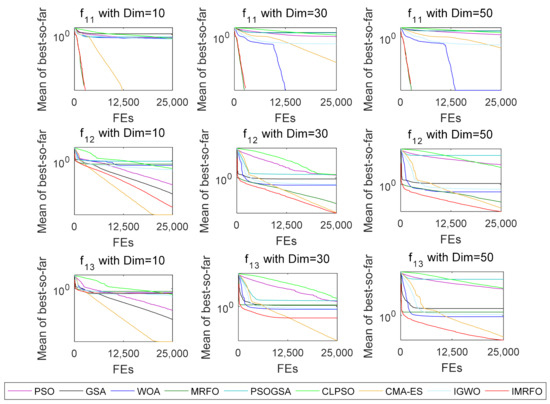

4.2. Exploration Evaluation

The MF functions with numerous local optima are useful to evaluate the exploration ability of the algorithms. Table 3 gives the results provided by the IMRFO and other optimizers in tackling MF functions f8–f13 with different dimensions. From Table 3, the IMRFO performs the best for 50 dimensions of all the MF functions, the IMRFO offers the best results for 10 dimensions of the MF functions but functions f12 and f13, and the IMRFO outperforms all the other methods for 30 dimensions of the MF functions but function f13. Therefore, the IMRFO provides excellent results on these MF functions and its performance remains consistently superior for different dimensions of 10, 30 and 50. Obviously, these superior results benefit significantly from the high exploration ability of the IMRFO. This is due to the fact that the searching control factor increases the number of exploring the search space and the Morlet wavelet mutation strategy improves the exploration ability of the algorithm.

Table 3.

Results provided algorithms for MF functions.

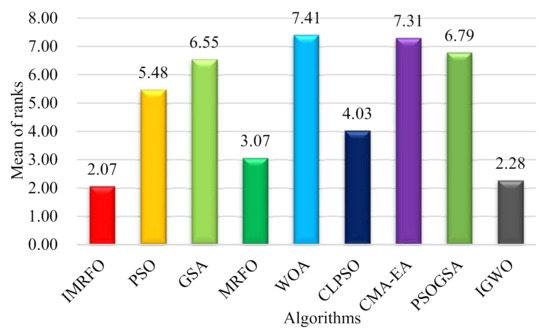

4.3. Evaluation of Local Optima Avoidance

Composite functions are the most challenging test suite; therefore, they are specifically used for evaluating the local optima avoidance of algorithms, which results from a proper balance between exploration and exploitation [73]. The results provided by the IMRFO and other optimizers in tackling CF functions f14–f21 are presented in Table 4. Based on Table 4, the results from the IMRFO are not inferior to those from other algorithms except function f17. For function f17, the results from the IMRFO are second only to those of IGWO. The IMRFO can considerably outperform other algorithms and provide the best results for 87.5% of f14–f21 problems. It can be seen that the results of the IMRFO are again significantly better than other optimizers. Therefore, the results from Table 4 confirm that the IMRFO benefits from a good balance between exploratory and exploitative searches that assist the optimizer to effectively avoid local optima.

Table 4.

The results provided algorithms for CF functions.

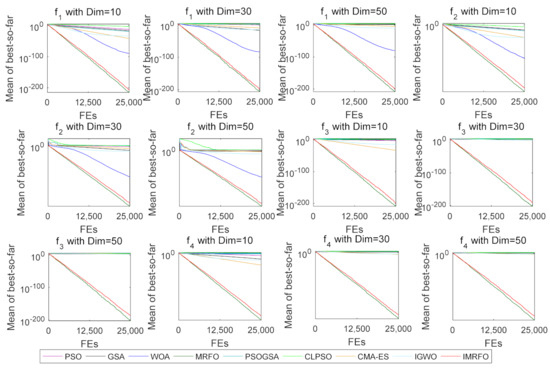

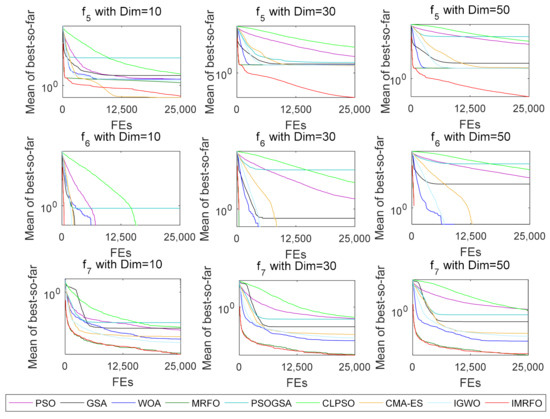

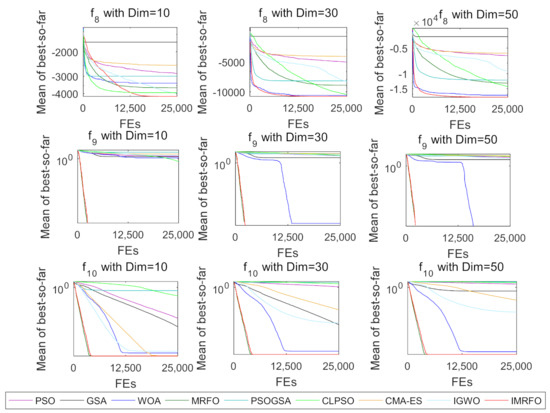

4.4. Convergence Evaluation

The convergence curves by the IMRFO and other optimizers in tackling the UF and MF functions are depicted in Figure 6, Figure 7, Figure 8, Figure 9 and Figure 10 to observe the convergence performance of the optimizers. The convergence curves provided in these figures are based on the mean of the best-so-far solutions in each iteration over 30 runs. It can be found that these curves share common characteristics: in the early stage of iterations, there are high fluctuations in the curves; the convergence rate tends to be accelerated as the iterations increase; in the later phase of iterations, the curves exhibit low variations. From the figure, compared to other algorithms, the convergence rate of the IMRFO tends to be speeded up with the increase in the iterations. This is owing to the Morlet wavelet mutation strategy for MRFO that enables the algorithm to search for the promising regions of the search space in the initial iterations and converge towards the global optimum more rapidly in the subsequent iterations. Overall, these convergence characteristics are attributed to the improved strategies in the IMRFO, by which the individuals of the population are able to effectively search the variable space and update their positions towards the global optimum solution. Thus, the excellent convergence performance of the IMRFO reveals that the algorithm achieves a better balance between exploitation and exploration than other algorithms in the optimization process.

Figure 6.

Convergence curves of algorithms for UF functions f1–f4 with different dimensions.

Figure 7.

Convergence curves of algorithms for UF functions f5–f7 with different dimensions.

Figure 8.

Convergence curves of algorithms for MF functions f8–f10 with different dimensions.

Figure 9.

Convergence curves of algorithms for MF functions f11–f13 with different dimensions.

Figure 10.

Convergence curves of algorithms for CF functions f14–f21 with 30 dimensions.

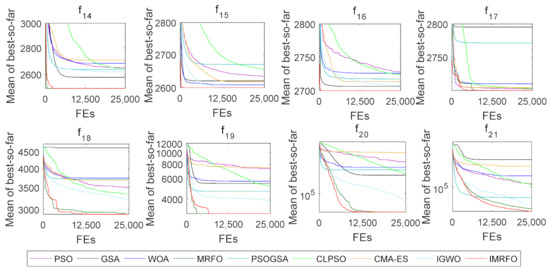

4.5. CEC 2017 Benchmarking

To further comprehensively investigate the performance of the IMRFO, a set of challenging functions CEC 2017 [74] is employed in this experiment. The comparative optimizers are the same as in the previous experiment and their parameters are set to be the same as mentioned in Table 1. The results are based on 30 independent runs of each algorithm with the population size of 50 and FEs of 50,000.

To investigate the significance difference of the performance of the IMRFO versus other algorithms and whether the IMRFO statistically outperforms other algorithms when solving optimization tasks, a Wilcoxon Signed-Rank Test (WSRT) is used. In this experiment, the WSRT is performed at 5% level of significance [75]. The WSRT results between the IMRFO and other optimizers are listed in Table 5. In Table 5 and Table 6, ‘=’ signifies that there is no significant difference between the IMRFO and other algorithms, ‘+’ signifies that the null hypothesis is rejected and the IMRFO outperforms other ones and ‘−’ vice versa. The statistical results of ‘=’, ‘−’ and ‘+’ for all the algorithms are given in the last row of each table. From Table 5 and Table 6, it can be observed that there is a statistical significance difference between the IMRFO and other optimizers and the performance of the IMRFO is superior to the other eight competitive optimizers on the majority of test tasks.

Table 5.

WSRT results between IMRFO and PSO, GSA, MRFO and WOA.

Table 6.

WSRT results between IMRFO and CLPSO, CMA-ES, PSOGSA and IGWO.

To further evaluate the difference between the IMRFO and its counterparts and rank them statistically, another statistical test, the Friedman Test (FT), is employed based on the average performance of the algorithms in this study. The performance ranks of the FT for each of the 29 functions among all the considered algorithms are given in Table 7 and their ranks, on average, are depicted in Figure 11, in which the lower rank denotes the better algorithm. Regarding the results in Table 7, the IMRFO can achieve the best results on 12 functions; a WOA can achieve the best results on 3 functions; and an IGWO can achieve the best results on 14 functions. Observing Figure 11, the IMRFO offers the lowest rank of all the comparative algorithms, indicating that the IMRFO can achieve the best performance, followed by IGWO, MRFO and CLPSO. Consequently, this statistical test proves the effectiveness and superiority of the combination of those strategies proposed in the IMRFO.

Table 7.

Performance rank of algorithms for each function.

Figure 11.

Average ranks of algorithms based on FT on 29 functions.

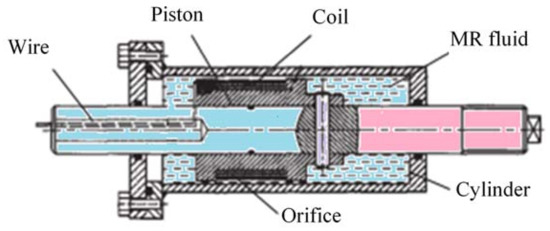

5. Optimal Parameter Identification of MR Damper

5.1. Principle of Operation

The MR damper is a kind of intelligent semi-active control device; controlling the size of the coil in the current instructions can change the strength of the magnetic field, which directly affects the viscosity coefficient of magnetorheological fluids, thus working to control the outputs of the damper. The general structure of the MR damper is plotted in Figure 12 [76]. The MR fluid is enclosed in a cylinder and flows through a small orifice; meanwhile, a magnetizing coil is enclosed in the piston. When a current is fed to the coil, the particles suspended in the MR fluid are aligned, this enables the fluid to change from the liquid state to the semisolid state within milliseconds, thus generating a controllable damping force.

Figure 12.

Structure of the MR damper.

The mechanical performance test of the MR damper is carried out on the tensile test bed. The experimental platform uses the amplitude signals of different frequencies from the exciter and the current provided by the MR shimmy damper, which are employed to generate some data signals, i.e., the damping force and cylinder rod displacement. The sinusoidal signals are used to generate the excitation in the MR damper, which can be expressed as follows:

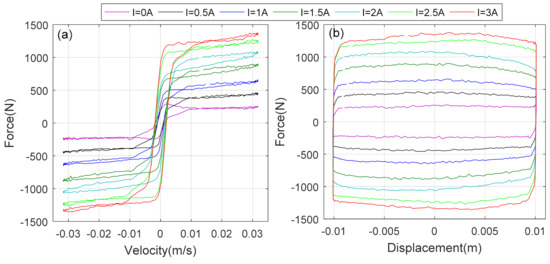

where x is the displacement of the damper piston, A is the amplitude of signals, f is the frequency of signals and t is the time. When the amplitude and frequency of the signals are constant, the displacements versus time for the different loading currents can be generated. Therefore, the dynamic responses of the MR damper, i.e., damping force–displacement and damping force–velocity, can be obtained by test data processing under various operating conditions. Figure 13 shows the typical damping force–displacement and damping force–velocity test curves for the amplitude A = 10 mm and frequency f = 0.5 Hz [76].

Figure 13.

(a) Damping force−displacement test curves, (b) and damping force–velocity test curves.

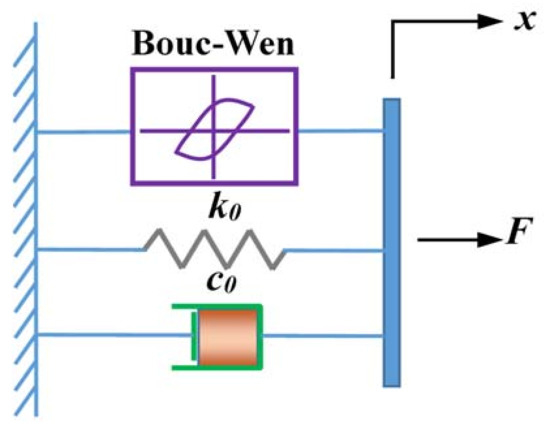

5.2. Bouc–Wen Model

Various MR models had been proposed to reproduce the dynamic responses of the MR damper, among which the Bouc–Wen model is one of the most commonly employed models owing to its good hysteretic characteristics and strong universality [77]; the Bouc–Wen model is depicted in Figure 14.

Figure 14.

Bouc−Wen model of a MR damper.

The mechanical behavior of the Bouc–Wen model is described as follows:

where x is the damper displacement, c0 is the damping coefficient of a dashpot, x0 is the initial deflection of spring with the stiffness k0, z is the hysteretic variable and, α, β, γ and n are the model parameters of the MR damper, which are given as follows [76]:

The following 15 parameters need to be determined in our study:

When these parameters are determined, the output damping force needs to be calculated using the Bouc–Wen model. Therefore, the Bouc–Wen model is established using SIMULINK.

5.3. Simulation Results and Analysis

After the establishment of the model, a fitness function should be defined that can evaluate the quality of the model with the given parameters. Thus, in this study, the mean error rate (MER) between the simulation data and experimental data is employed as the following fitness function [76]:

where m is the number of damping forces, c is the number of the constant loading currents, and are the ith experimental force and the ith simulation force from the model under the jth loading current, respectively and is the error rate under a loading current. Therefore, to find the optimal model parameters, the proposed IMRFO algorithm is adopted, and the results are compared with those from the other optimizers.

The experimental data, i.e., the damper amplitude, velocity and the outputted damper force, are obtained under a wide range of operating conditions. Table 8 gives the test operating conditions. In Table 8, the working frequencies are set to 0.5, 1 and 1.5 Hz, while the amplitude is set 10 mm, respectively; moreover, the loading current is set from 0 to 3 A with the step length 0.5 A. Thus, there are three combination cases of frequency and displacement, and there are seven current types for each of the combination cases. For each case, the results offered by the IMRFO are compared with those offered by some other algorithms. For all the algorithms, the population size and the maximum FEs are set to 30 and 6000, respectively. For different loading currents, the search space of each parameter will be slightly different. For example, when I = 0.5 A, the search space of the 15 parameters is given as follows:

Lb = [104, 104, 103, 10−2, 100, 106, 102, 102, 103, −1, −10, −100, 100, 0.1, 10−4];

Ub = [105, 105, 104, 1, 103, 107, 103, 104, 104, −0.1, −1, −1, 103, 1, 1].

Table 8.

Test operating conditions of Bouc–Wen model.

Table 9 lists the experimental results provided by the IMRFO and its competitors for three different cases. From Table 9, the IMRFO yields the most favorable performance in case one, in terms of ‘Mean’ and ‘Std’ followed by WOA, IGWO and CMA-ES; in case two, the IMRFO achieves the most reliable performance with comparison to other algorithms, followed by IGWO, PSOGSA and CLPSO; in case three, the IMRFO also offers better results than the other optimizers, followed by IGWO, WOA and CMA-EA. Therefore, Table 9 manifests that the IMRFO obtains more stable high-quality solutions than its counterparts when solving the Bouc–Wen model with different operating conditions. The 15 parameters of the Bouc–Wen are provided in Table 10 using different algorithms for the loading current = 0.5 A.

Table 9.

Results provided using different algorithms for three cases.

Table 10.

Parameters of Bouc–Wen model for loading current = 0.5 A.

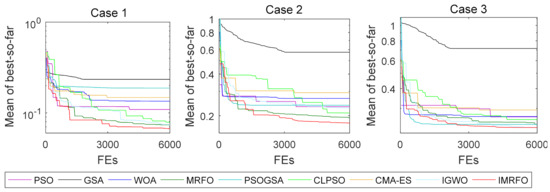

The convergence curves of all the algorithms for the three cases are depicted in Figure 15. It can be observed that the IMRFO displays the highest convergence rate during the entire optimization process for cases one and two. For case three, the convergence rate of the IMRFO is significantly superior to the other competitors at the later optimization process. Moreover, the IMRFO offers the final solutions with the highest precision in the three cases, demonstrating its superior convergence performance for finding the optimum solution.

Figure 15.

Convergence curves of algorithms for three cases.

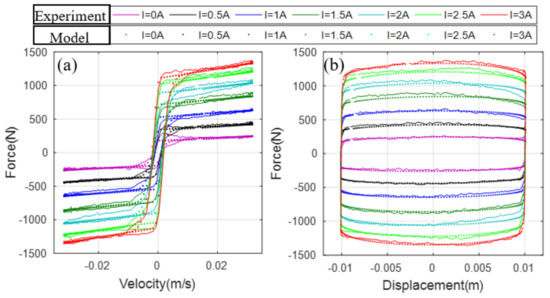

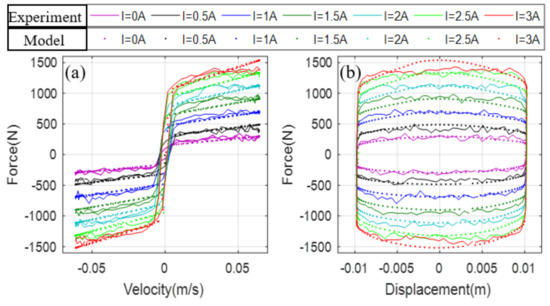

The comparisons between the experimental data and simulated results from the Bouc–Wen model using the parameters identified provided by the IMRFO are shown in Figure 16, in which the damping forces obtained from the experiment are plotted in solid lines, while the damping forces from the model are plotted in dots. From Figure 16, the damping force from the experimental data increases with the increase in the loading currents, and the increasing amplitude decreases gradually. Observing the damping force–displacement test curves and damping force–velocity test curves in Figure 16, for each of the different loading currents, the simulated data from the IMRFO-based Bouc–Wen model can agree well with the experimental data; these results show the effectiveness of the proposed method in identifying the damping parameters of the MR damper.

Figure 16.

Comparison between experimental data and simulated results for case 1 using proposed algorithm, (a) damping force–velocity test curves, (b) and damping force–displacement test curves.

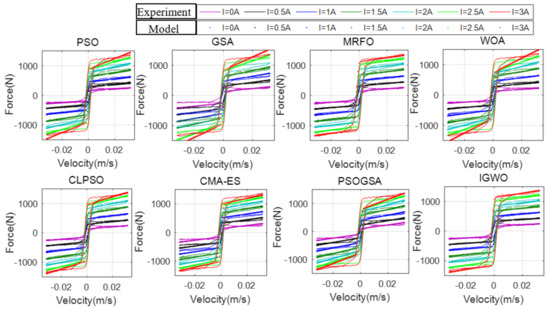

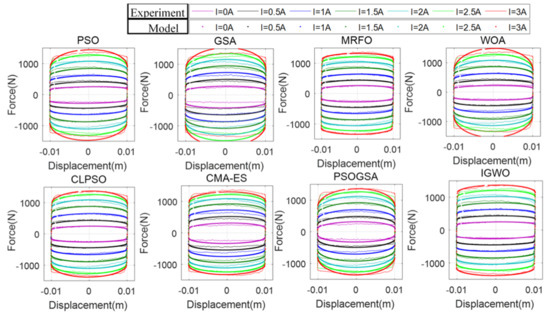

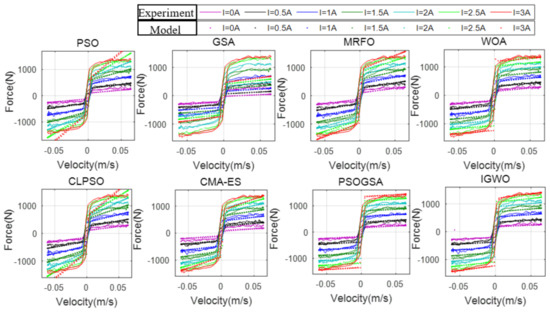

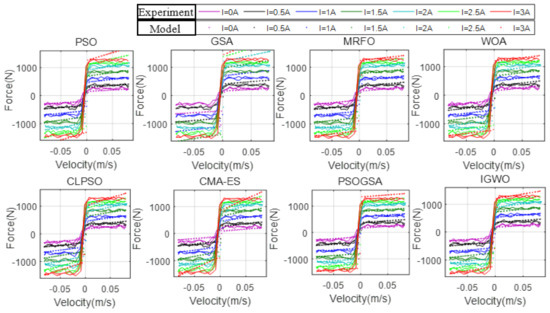

Figure 17 and Figure 18 show the comparison of the damping force–velocity and damping force–displacement test curves between the experimental data and the simulated results for case one using the other different algorithms, respectively. It can be seen from Figure 17 and Figure 18 that the matching contains some big discrepancies between the experimental data and the simulated data from the Bouc–Wen models provided by PSO, GSA, WOA, CLPSO, CMA-ES and PSOGSA. Although it is difficult to visually identify the matching quality among the IMRFO, MRFO and IGWO from these figures, the IMRFO is more competitive than the other methods from Table 9.

Figure 17.

Comparison of damping force–velocity test curves between experimental data and simulated results for case 1 using different algorithms.

Figure 18.

Comparison of damping force–displacement test curves between experimental data and simulated results for case 1 using different algorithms.

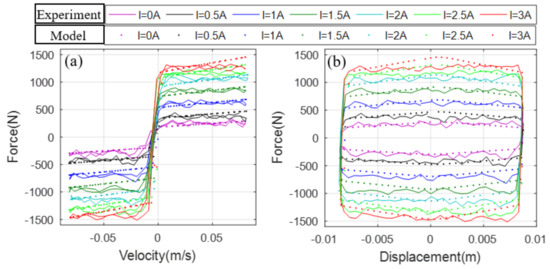

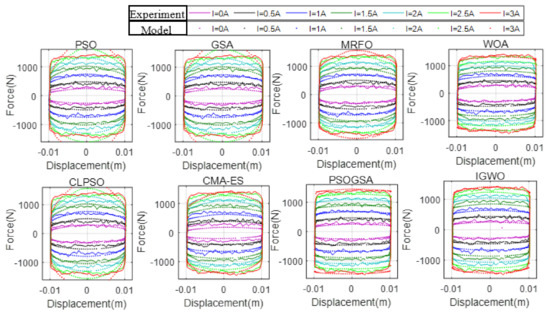

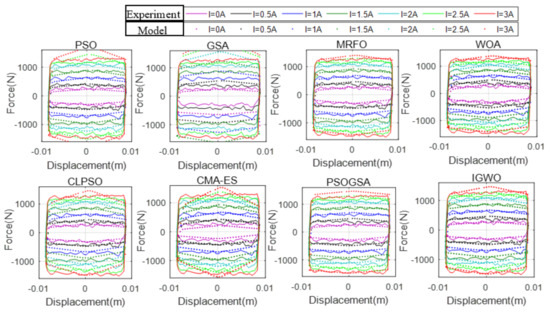

To verify whether the IMRFO-based Bouc–Wen model can reflect the mechanical properties of the MR damper, two extensive simulations, i.e., cases two and three, are performed by changing the frequency of the model. The comparisons between the experimental data and simulated data from the Bouc–Wen model using the parameters identified by the IMRFO for cases two and three are shown in Figure 19 and Figure 20, respectively; Figure 21 and Figure 22 show the comparison of the damping force–velocity test curves between the experimental data and the simulated data from the Bouc–Wen model using the parameters identified by the other different algorithms for cases two and three, respectively; and Figure 23 and Figure 24 show the comparison of the damping force–displacement test curves between the experimental data and the simulated data from the Bouc–Wen model using the parameters identified by the other different algorithms for cases two and three, respectively. These results reveal that the simulated data from the IMRFO-based model show better coincidence with the experimental data compared to its competitors, and the IMRFO-based model can accurately describe the dynamic performance of the MR damper with different frequencies.

Figure 19.

Comparison between experimental data and simulated results for case 2 using proposed algorithm, (a) damping force–velocity test curves, (b) and damping force–displacement test curves.

Figure 20.

Comparison between experimental data and simulated results for case 3 using proposed algorithm, (a) damping force–velocity test curves, (b) and damping force–displacement test curves.

Figure 21.

Comparison of damping force–velocity test curves between experimental data and simulated results for case 2 using other different algorithms.

Figure 22.

Comparison of damping force–velocity test curves between experimental data and simulated results for case 3 using other different algorithms.

Figure 23.

Comparison of damping force–displacement test curves between experimental data and simulated results for case 2 using other different algorithms.

Figure 24.

Comparison of damping force–displacement test curves between experimental data and simulated results for case 3 using other different algorithms.

To evaluate the matching performance of the MR damper models from different algorithms, an indicator named the mean Nash–Sutcliffe efficiency (MNSE) is employed; the MNSE is formulated as follows:

where is a single Nash–Sutcliffe efficiency (NSE) value under a given loading current. In general, the closer the value of the NSE is to one, the better the matching between the experimental data and simulated data from the model will be.

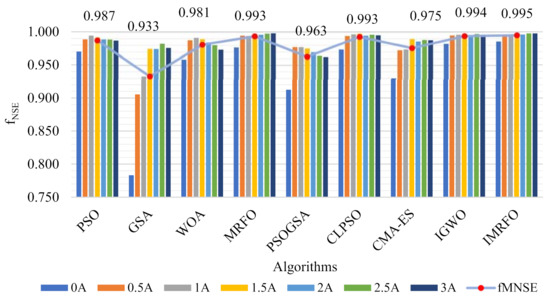

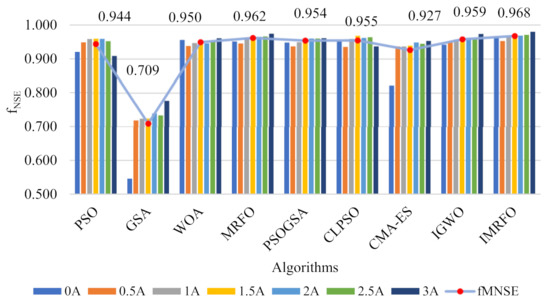

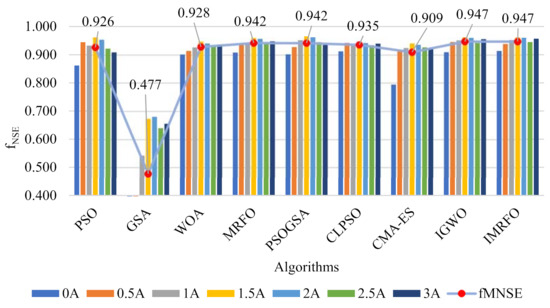

Figure 25, Figure 26 and Figure 27 show the matching comparison of the models based on different algorithms for cases 1–3 using the NSE, respectively. In these figures, the NSE of the models based on different algorithms under each of all the considered loading currents is depicted for each case, and the MNSE of the models based on each algorithm under all the considered loading currents is provided for each case. From Figure 25 (case one), the IMRFO-based model obtains the biggest NSE for each loading current and the MNSE compared to other competitors, demonstrating the superior matching performance of the model from our optimizer. Thus, the results of the NSE in Figure 25 show that the IMRFO-based model provides the best simulated results when tackling case one. From Figure 26, it can be found that the IMRFO-based model obtains the best matching performance measured using the NSE; the MRFO-based model is the second best, followed by the IGWO-based, CLPSO-based and PSOGSA-based models. Note that the model from GSA yields the worst matching performance in terms of the NSE, manifesting the model provides the unfavorable simulated data for case two. From Figure 27, for case three, the IMRFO-based model offers the most favorable matching performance that is almost the same as that offered by the IGWO-based model, followed by the PSOGSA-based, MRFO-based and CLPSO-based models.

Figure 25.

Matching comparison of models based on different algorithms for case 1 using NSE.

Figure 26.

Matching comparison of models based on different algorithms for case 2 using NSE.

Figure 27.

Matching comparison of models based on different algorithms for case 3 using NSE.

Table 11 summarizes the matching results of the models from different algorithms for cases 1–3. From Table 11, the IMRFO-based model attains the results that are significantly better than, or almost the same as, the results of the models from the other eight peer algorithms for cases 1–3. Additionally, the average MNSE of the three cases from the IMRFO-based model outperforms that from the models based on the other algorithms, indicating the best overall performance of the IMRFO-based model. Based on the above analysis, the proposed IMRFO can provide superior and very competitive optimization performance in identifying the control parameters of the MR damper model.

Table 11.

Matching results of models from different algorithms for cases 1–3.

In order to better evaluate the performance of the algorithm, we need to study not only the solution quality, but also the computational burden. Therefore, the running time of the IMRFO and its competitor are compared under identical conditions. The mean running time of the algorithms for cases 1–3 is given in Table 12. From Table 12, each algorithm spends most of the time in executing SIMULINK. The mean running time of the IMRFO is very close to that of the other algorithms; there was no significant difference between the IMRFO and others in terms of running time; however, the solution quality of the IMRFO is significantly improved compared to the other methods.

Table 12.

Mean running time of different algorithms for cases 1–3.

6. Conclusions

In this study, a new hybrid MRFO is proposed for solving the global optimization and parameter identification of the MR damper models. In this improvement, the searching control factor is introduced to the algorithm to alleviate the weak exploration ability, and the adaptive weight coefficient is employed to promote the search efficiency of the algorithm. Additionally, the Morlet wavelet mutation strategy is integrated into the algorithm to enhance the ability of the algorithm to step out of stagnation and the convergence rate. Two sets of the challenging benchmarks, i.e., CEC 2014 and CEC 2017, are used to estimate the optimization performance of the IMRFO and the results demonstrate that the IMRFO is superior to the other algorithms. A comprehensive simulation based on the MR damper models is established, including three different test working conditions, in which 15 control parameters need to be identified using the proposed IMRFO algorithm. According to these identified parameters, the damping force–velocity and damping force–displacement test curves can be obtained, which show a highly satisfactory coincidence with the experimental data. Meanwhile, the comparison analysis shows that the IMRFO-based MR damper model can provide the best simulation results.

Eventually, the results of the benchmark functions and the optimal parameter identification of the MR damper model discover that the proposed optimizer has significant potential when solving engineering optimization problems. The Bouc–Wen damper model identified by the IMRFO is not only consistent with the experimental data involved in the simulation, but it can also accurately express the dynamic response of the Bouc–Wen damper model with different amplitudes and frequencies under sinusoidal excitation.

The IMRFO introduced improved strategies that achieve competitive performance verified by the benchmark experiments and the MR damper model. However, we have found out that there are two open problems that need to be further investigated in our future work. One issue is how to design an efficient adaptive strategy to adjust the movement step of individuals according to their neighborhoods’ solutions. Another issue is how to establish an information sharing mechanism to promote the searching efficiency. Moreover, in future work, it would be interesting to further extend the IMRFO to other complex MR damper models in which more different control parameters of the models need to be identified.

Author Contributions

Conceptualization, Y.L. and W.Z.; methodology, Y.L., W.Z. and L.W.; validation, L.W; writing—original draft preparation, Y.L.; writing—review and editing, Y.L., W.Z. and L.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under grants 11972144, 12072098, 11790282, 12072208 and 52072249, the One Hundred Outstanding Innovative Scholars of Colleges and Universities in Hebei Province under grant SLRC2019022 and the Opening Foundation of State Key Laboratory of Shijiazhuang Tiedao University Province under grant ZZ2021-13.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tse, T.; Chang, C.C. Shear-mode rotary magnetorheological damper for small-scale structural control experiments. J. Struct. Eng. 2004, 130, 904–911. [Google Scholar] [CrossRef]

- Liu, Y.Q.; Yang, S.P.; Liao, Y.Y. Simulation analysis on lateral semi-active control of suspension system for high-speed emus. J. Vib. Shock 2010, 29, 51–54. [Google Scholar]

- Atabay, E.; Ozkol, I. Application of a magnetorheological damper modeled using the current–dependent bouc–wen model for shimmy suppression in a torsional nose landing gear with and without freeplay. J. Vib. Control 2014, 20, 1622–1644. [Google Scholar] [CrossRef]

- Yang, S.; Li, S.; Wang, X.; Gordaninejad, F.; Hitchcock, G. A hysteresis model for magneto-rheological damper. Int. J. Nonlinear Sci. Numer. Simul. 2005, 6, 139–144. [Google Scholar] [CrossRef]

- Xu, Z.D.; Xu, Y.W.; Wang, C.; Zhao, Y.L.; Ji, B.H.; Du, Y.L. Force tracking model and experimental verification on a novel magnetorheological damper with combined compensator for stay cables of bridge. Structures 2021, 32, 1971–1985. [Google Scholar] [CrossRef]

- Yu, J.; Dong, X.; Su, X.; Qi, S. Development and characterization of a novel rotary magnetorheological fluid damper with variable damping and stiffness. Mech. Syst. Signal Process. 2022, 165, 108320. [Google Scholar] [CrossRef]

- Boreiry, M.; Ebrahimi-Nejad, S.; Marzbanrad, J. Sensitivity analysis of chaotic vibrations of a full vehicle model with magnetorheological damper. Chaos Solitons Fractals 2019, 127, 428–442. [Google Scholar] [CrossRef]

- Patel, D.M.; Upadhyay, R.V. Predicting the thermal sensitivity of MR damper performance based on thermo-rheological properties. Mater. Res. Express 2018, 6, 015707. [Google Scholar] [CrossRef]

- Gołdasz, J.; Sapinski, B. Influence of Temperature on the MR Squeeze-Mode Damper. In Proceedings of the 2019 20th International Carpathian Control Conference (ICCC); IEEE: Krakow-Wieliczka, Poland, 2019; pp. 1–6. [Google Scholar]

- Versaci, M.; Cutrupi, A.; Palumbo, A. A magneto-thermo-static study of a magneto-rheological fluid damper: A finite element analysis. IEEE Trans. Magn. 2020, 57, 1–10. [Google Scholar] [CrossRef]

- Giuclea, M.; Sireteanu, T.; Stancioiu, D.; Stammers, C.W. Model parameter identification for vehicle vibration control with magnetorheological dampers using computational intelligence methods. Proc. Inst. Mech. Eng. Part I J. Syst. Control Eng. 2004, 218, 569–581. [Google Scholar] [CrossRef]

- Gogna, A.; Tayal, A. Etaheuristics: Review and application. J. Exp. Theor. Artif. Intell. 2013, 25, 503–526. [Google Scholar] [CrossRef]

- Kwok, N.M.; Ha, Q.P.; Nguyen, T.H.; Li, J.; Samali, B. A novel hysteretic model for magnetorheological fluid dampers and parameter identification using particle swarm optimization. Sens. Actuators A Phys. 2006, 132, 441–451. [Google Scholar] [CrossRef]

- Gou, J.; Lei, Y.X.; Guo, W.P.; Wang, C.; Cai, Y.Q.; Luo, W. A novel improved particle swarm optimization algorithm based on individual difference evolution. Appl. Soft Comput. 2017, 57, 468–481. [Google Scholar] [CrossRef]

- Zhao, W.; Shi, T.; Wang, L.; Cao, Q.; Zhang, H. An adaptive hybrid atom search optimization with particle swarm optimization and its application to optimal no-load PID design of hydro-turbine governor. J. Comput. Des. Eng. 2021, 8, 1204–1233. [Google Scholar]

- Caselli, N.; Soto, R.; Crawford, B.; Valdivia, S.; Olivares, R. A self-adaptive cuckoo search algorithm using a machine learning technique. Mathematics 2021, 9, 1840. [Google Scholar] [CrossRef]

- Zhao, W.; Wang, L.; Zhang, Z. A novel atom search optimization for dispersion coefficient estimation in groundwater. Future Gener. Comput. Syst. 2019, 91, 601–610. [Google Scholar] [CrossRef]

- Soleimani Amiri, M.; Ramli, R.; Ibrahim, M.F.; Abd Wahab, D.; Aliman, N. Adaptive particle swarm optimization of PID gain tuning for lower-limb human exoskeleton in virtual environment. Mathematics 2020, 8, 2040. [Google Scholar] [CrossRef]

- Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249. [Google Scholar] [CrossRef]

- Yang, X.S.; Gandomi, A.H. Bat algorithm: A novel approach for global engineering optimization. Eng. Comput. 2012, 29, 464–483. [Google Scholar] [CrossRef] [Green Version]

- Jain, M.; Singh, V.; Rani, A. A novel nature-inspired algorithm for optimization: Squirrel search algorithm. Swarm Evol. Comput. 2019, 44, 148–175. [Google Scholar] [CrossRef]

- Zhao, W.; Wang, L.; Zhang, Z. Artificial ecosystem-based optimization: A novel nature-inspired meta-heuristic algorithm. Neural Comput. Appl. 2019, 32, 1–43. [Google Scholar] [CrossRef]

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67. [Google Scholar] [CrossRef]

- Li, M.D.; Zhao, H.; Weng, X.W.; Han, T. A novel nature-inspired algorithm for optimization: Virus colony search. Adv. Eng. Softw. 2016, 92, 65–88. [Google Scholar] [CrossRef]

- Pan, W.T. A new fruit fly optimization algorithm: Taking the financial distress model as an example. Knowl.-Based Syst. 2012, 26, 69–74. [Google Scholar] [CrossRef]

- Arora, S.; Singh, S. Butterfly optimization algorithm: A novel approach for global optimization. Appl. Soft Comput. 2019, 23, 715–734. [Google Scholar] [CrossRef]

- Dhiman, G.; Kumar, V. Spotted hyena optimizer: A novel bio-inspired based meta-heuristic technique for engineering applications. Adv. Eng. Softw. 2017, 114, 48–70. [Google Scholar] [CrossRef]

- Saremi, S.; Mirjalili, S.; Lewis, A. Grasshopper optimisation algorithm: Theory and application. Adv. Eng. Softw. 2017, 105, 30–47. [Google Scholar] [CrossRef] [Green Version]

- Yang, X.S. Flower pollination algorithm for global optimization. In Proceedings of the International Conference on Unconventional Computing and Natural Computation; Springer: Berlin/Heidelberg, Germany, 2012; pp. 240–249. [Google Scholar]

- Askarzadeh, A. A novel meta-heuristic method for solving constrained engineering optimization problems: Crow search algorithm. Comput. Struct. 2016, 169, 1–12. [Google Scholar] [CrossRef]

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61. [Google Scholar] [CrossRef] [Green Version]

- EskandaR, H.; Sadollah, A.; Bahreininejad, A.; Hamdi, M. Water cycle algorithm-A novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput. Struct. 2012, 110, 151–166. [Google Scholar] [CrossRef]

- Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. J. Inf. Sci. 2009, 179, 2232–2248. [Google Scholar] [CrossRef]

- Zhao, W.; Wang, L.; Zhang, Z. Atom search optimization and its application to solve a hydrogeologic parameter estimation problem. Knowl.-Based Syst. 2019, 163, 283–304. [Google Scholar] [CrossRef]

- Hashim, F.A.; Houssein, E.H.; Mabrouk, M.S.; Al-Atabany, W.; Mirjalili, S. Henry gas solubility optimization: A novel physics-based algorithm. Future Gener. Comput. Syst. 2019, 101, 646–667. [Google Scholar] [CrossRef]

- Kaveh, A.; Talatahari, S. A novel heuristic optimization method: Charged system search. Acta Mech. 2010, 213, 267–289. [Google Scholar] [CrossRef]

- Kaveh, A.; Bakhshpoori, T. Water evaporation optimization: A novel physically inspired optimization algorithm. Comput. Struct. 2016, 167, 69–85. [Google Scholar] [CrossRef]

- Faramarzi, A.; Heidarinejad, M.; Stephens, B.; Mirjalili, S. Equilibrium optimizer: A novel optimization algorithm. Knowl.-Based Syst. 2020, 191, 105190. [Google Scholar] [CrossRef]

- Zhao, W.; Wang, L.; Zhang, Z. Supply-demand-based optimization: A novel economics-inspired algorithm for global optimization. IEEE Access 2019, 7, 73182–73206. [Google Scholar] [CrossRef]

- Molina, D.; Poyatos, J.; Del Ser, J.; García, S.; Hussain, A.; Herrera, F. Comprehensive taxonomies of nature-and bio-inspired optimization: Inspiration versus algorithmic behavior, critical analysis recommendations. Cogn. Comput. 2020, 12, 897–939. [Google Scholar] [CrossRef]

- Črepinšek, M.; Liu, S.H.; Mernik, M. Replication and comparison of computational experiments in applied evolutionary computing: Common pitfalls and guidelines to avoid them. Appl. Soft Comput. 2014, 19, 161–170. [Google Scholar] [CrossRef]

- Zhao, W.; Zhang, Z.; Wang, L. Manta ray foraging optimization: An effective bio-inspired optimizer for engineering applications. Eng. Appl. Artif. Intell. 2020, 87, 103300. [Google Scholar] [CrossRef]

- Alhumade, H.; Fathy, A.; Al-Zahrani, A.; Rawa, M.J.; Rezk, H. Optimal parameter estimation methodology of solid oxide fuel cell using modern optimization. Mathematics 2021, 9, 1066. [Google Scholar] [CrossRef]

- Ben, U.C.; Akpan, A.E.; Mbonu, C.C.; Ebong, E.D. Novel methodology for interpretation of magnetic anomalies due to two-dimensional dipping dikes using the Manta Ray Foraging Optimization. J. Appl. Geophys. 2021, 192, 104405. [Google Scholar] [CrossRef]

- Shaheen, A.M.; Ginidi, A.R.; El-Sehiemy, R.A.; Ghoneim, S.S. Economic power and heat dispatch in cogeneration energy systems using manta ray foraging optimizer. IEEE Access 2020, 8, 208281–208295. [Google Scholar] [CrossRef]

- Hemeida, M.G.; Ibrahim, A.A.; Mohamed, A.A.A.; Alkhalaf, S.; El-Dine, A.M.B. Optimal allocation of distributed generators DG based Manta Ray Foraging Optimization algorithm (MRFO). Ain Shams Eng. J. 2021, 12, 609–619. [Google Scholar] [CrossRef]

- Eid, A.; Abdelaziz, A.Y.; Dardeer, M. Energy loss reduction of distribution systems equipped with multiple distributed generations considering uncertainty using manta-ray foraging optimization. Int. J. Renew. Energy Dev. 2021, 10, 779–787. [Google Scholar] [CrossRef]

- Houssein, E.H.; Ibrahim, I.E.; Neggaz, N.; Hassaballah, M.; Wazery, Y.M. An efficient ECG arrhythmia classification method based on Manta ray foraging optimization. Expert Syst. Appl. 2021, 181, 115131. [Google Scholar] [CrossRef]

- Duman, S.; Dalcalı, A.; Özbay, H. Manta ray foraging optimization algorithm–based feedforward neural network for electric energy consumption forecasting. Int. Trans. Electr. Energy Syst. 2021, e12999. [Google Scholar] [CrossRef]

- Passino, K.M. Bacterial foraging optimization. Int. J. Swarm Intell. Res. IJSIR 2010, 1, 1–16. [Google Scholar] [CrossRef]

- Turgut, O.E. A novel chaotic manta-ray foraging optimization algorithm for thermo-economic design optimization of an air-fin cooler. SN Appl. Sci. 2021, 3, 1–36. [Google Scholar] [CrossRef]

- Houssein, E.H.; Emam, M.M.; Ali, A.A. Improved manta ray foraging optimization for multi-level thresholding using COVID-19 CT images. Neural Comput. Appl. 2021, 104827. [Google Scholar]

- Ćalasan, M.P.; Jovanović, A.; Rubežić, V.; Mujičić, D.; Deriszadeh, A. Notes on parameter estimation for single-phase transformer. IEEE Trans. Ind. Appl. 2020, 56, 3710–3718. [Google Scholar] [CrossRef]

- Hassan, M.H.; Houssein, E.H.; Mahdy, M.A.; Kamel, S. An improved manta ray foraging optimizer for cost-effective emission dispatch problems. Eng. Appl. Artif. Intell. 2021, 100, 104155. [Google Scholar] [CrossRef]

- Elaziz, M.A.; Yousri, D.; Al-qaness, M.A.; AbdelAty, A.M.; Radwan, A.G.; Ewees, A.A. A Grunwald–Letnikov based Manta ray foraging optimizer for global optimization and image segmentation. Eng. Appl. Artif. Intell. 2021, 98, 104105. [Google Scholar] [CrossRef]

- Elaziz, M.A.; Hosny, K.M.; Salah, A.; Darwish, M.M.; Lu, S.; Sahlol, A.T. New machine learning method for image-based diagnosis of COVID-19. PLoS ONE 2020, 15, e0235187. [Google Scholar] [CrossRef]

- Jena, B.; Naik, M.K.; Panda, R.; Abraham, A. Maximum 3D Tsallis entropy based multilevel thresholding of brain MR image using attacking Manta Ray foraging optimization. Eng. Appl. Artif. Intell. 2021, 103, 104293. [Google Scholar] [CrossRef]

- Yang, W.; Tang, G.; Hao, Y.; Wang, J. A novel framework for forecasting, evaluation and early-warning for the influence of PM10 on public health. Atmosphere 2021, 12, 1020. [Google Scholar] [CrossRef]

- Ramadan, H.S.; Helmi, A.M. Optimal reconfiguration for vulnerable radial smart grids under uncertain operating conditions. Comput. Electr. Eng. 2021, 93, 107310. [Google Scholar] [CrossRef]

- Houssein, E.H.; Zaki, G.N.; Diab, A.A.Z.; Younis, E.M. An efficient Manta Ray Foraging Optimization algorithm for parameter extraction of three-diode photovoltaic model. Comput. Electr. Eng. 2021, 94, 107304. [Google Scholar] [CrossRef]

- Emary, E.; Zawbaa, H.M.; Sharawi, M. Impact of Lévy flight on modern meta-heuristic optimizers. Appl. Soft Comput. 2019, 75, 775–789. [Google Scholar] [CrossRef]

- Hassan, M.H.; Kamel, S.; Selim, A.; Khurshaid, T.; Domínguez-García, J.L. A modified Rao-2 algorithm for optimal power flow incorporating renewable energy sources. Mathematics 2021, 9, 1532. [Google Scholar] [CrossRef]

- Zhao, W.; Wang, L. Multiple-Kernel MRVM with LBFO algorithm for fault diagnosis of broken rotor bar in induction motor. IEEE Access 2019, 7, 182173–182184. [Google Scholar] [CrossRef]

- Sun, Y.; Wang, X.; Chen, Y.; Liu, Z. A modified whale optimization algorithm for large-scale global optimization problems. Expert Syst. Appl. 2018, 114, 563–577. [Google Scholar] [CrossRef]

- Viswanathan, G.M.; Afanasyev, V.; Buldyrev, S.V.; Murphy, E.J.; Prince, P.A.; Stanley, H.E. Lévy flight search patterns of wandering albatrosses. Nature 1996, 381, 413–415. [Google Scholar] [CrossRef]

- Mantegna, R.N. Fast, accurate algorithm for numerical simulation of Levy stable stochastic processes. Phys. Rev. E 1994, 49, 4677–4683. [Google Scholar] [CrossRef] [PubMed]

- Ling, S.H.; Lu, H.; Chan, K.Y.; Lam, H.K.; Yeung, B.; Leung, F.H. Hybrid particle swarm optimization with wavelet mutation and its industrial applications. IEEE transactions on systems, man and cybernetics. Part B Cybern. 2008, 38, 743–763. [Google Scholar] [CrossRef] [Green Version]

- Guo, W.; Liu, T.; Dai, F.; Xu, P. An improved whale optimization algorithm for forecasting water resources demand. Appl. Soft Comput. 2019, 86, 105925. [Google Scholar] [CrossRef]

- Liang, J.J.; Qu, B.Y.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for the CEC 2014 Special Session and Competition on Single Objective Real-Parameter Numerical Optimization; Technical Report 201311; Nanyang Technological University: Singapore, 2013; Volume 635, p. 490. [Google Scholar]

- Suganthan, P.N.; Hansen, N.; Liang, J.J.; Deb, K.; Chen, Y.P.; Auger, A.; Tiwari, S. Problem Definitions and Evaluation Criteria for the CEC 2005 Special Session on Real-Parameter Optimization; KanGAL Report 2005005; Nanyang Technological University: Singapore, 2005; pp. 1–50. [Google Scholar]

- Liang, J.J.; Qin, A.K.; Suganthan, P.N.; Baskar, S. Comprehensive learning particle swarm optimizer for global optimization of multimodal functions. IEEE Trans. Evol. Comput. 2006, 10, 281–295. [Google Scholar] [CrossRef]

- Hansen, N.; Müller, S.D.; Koumoutsakos, P. Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES). Evol. Comput. 2003, 11, 1–18. [Google Scholar] [CrossRef]

- Nadimi-Shahraki, M.H.; Taghian, S.; Mirjalili, S. An improved grey wolf optimizer for solving engineering problems. Expert Syst. Appl. 2021, 166, 113917. [Google Scholar] [CrossRef]

- Awad, N.H.; Ali, M.Z.; Liang, J.J.; Qu, B.Y.; Suganthan, P.N. CEC 2017 Special Session on Single Objective Numerical Optimization Single Bound Constrained Real-Parameter Numerical Optimization. 2017. Available online: https://www.researchgate.net/profile/Cholmin-Rim/publication/311671283_Adaptive_Niching_Chaos_Optimization_Algorithm_CEC_2017_Competition_on_Single_Objective_Real-Parameter_Numerical_Optimization/links/58d385f892851c319e570626/Adaptive-Niching-Chaos-Optimization-Algorithm-CEC-2017-Competition-on-Single-Objective-Real-Parameter-Numerical-Optimization.pdf (accessed on 6 September 2021).

- Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18. [Google Scholar] [CrossRef]

- Liu, Y.Q.; Yang, S.P.; Liao, Y.Y. A quantizing method for determination of controlled damping parameters of magnetorheological damper models. J. Intell. Mater. Syst. Struct. 2011, 22, 2127–2136. [Google Scholar] [CrossRef]

- Wen, Y.K. Method of random vibration of hysteretic systems. J. Eng. Mech. Div. ASCE 1976, 102, 249–263. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).