Advances in the Approximation of the Matrix Hyperbolic Tangent

Abstract

1. Introduction and Notation

1.1. The Matrix Exponential Function-Based Approach

1.2. The Taylor Series-Based Approach

2. Algorithms for Computing the Matrix Hyperbolic Tangent Function

2.1. The Matrix Exponential Function-Based Algorithm

| Algorithm 1: Given a matrix A, this algorithm computes T = tanh(A) by means of the matrix exponential function. |

| 1 2 Calculate the scaling factor , the order of Taylor polynomial and compute by using the Taylor approximation /* Phase I (see Algorithm 2 from [26]) */ 3 /* Phase II: Work out by (3) */ 4 for to s do /* Phase III: Recover by (5) */ 5 6 Solve for X the system of linear equations 7 8 end |
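The three phases of Algorithm 1 can be illustrated with a minimal pure-Python sketch. It assumes that Equation (3) is the identity tanh(X) = (e^{2X} + I)^{-1}(e^{2X} - I) and that Equation (5) is the double-angle formula tanh(2X) = 2 tanh(X)(I + tanh^2(X))^{-1}; both identities are standard, but their match to the numbered equations is an assumption. The fixed values of `s` and `m` and all helper names are hypothetical and stand in for the selection performed by exptaynsv3.

```python
import math

def eye(n):
    """n x n identity matrix as nested lists."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def matmul(X, Y):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def axpy(X, Y, alpha=1.0):
    """Return X + alpha * Y."""
    return [[x + alpha * y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def solve(M, R):
    """Solve M X = R by Gauss-Jordan elimination with partial pivoting."""
    n = len(M)
    aug = [list(m) + list(r) for m, r in zip(M, R)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        for r in range(n):
            if r != c:
                f = aug[r][c] / aug[c][c]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[c])]
    return [[aug[i][n + j] / aug[i][i] for j in range(n)] for i in range(n)]

def expm_taylor(B, m):
    """Order-m Taylor polynomial of exp(B), evaluated in Horner form."""
    n = len(B)
    E = eye(n)
    for k in range(m, 0, -1):
        E = axpy(eye(n), matmul(B, E), 1.0 / k)
    return E

def tanh_via_expm(A, s=4, m=16):
    """Sketch only: s and m are fixed here, not chosen adaptively."""
    n = len(A)
    I = eye(n)
    # Phase I: exponential of the scaled matrix 2 * 2^-s * A
    E = expm_taylor([[2.0 * a / 2**s for a in row] for row in A], m)
    # Phase II: tanh(2^-s A) from (E + I) T = E - I
    T = solve(axpy(E, I), axpy(E, I, -1.0))
    # Phase III: s double-angle steps, each solving (I + T^2) X = 2 T
    for _ in range(s):
        T = solve(axpy(I, matmul(T, T)), [[2.0 * t for t in row] for row in T])
    return T

A = [[0.0, 1.0], [1.0, 0.0]]   # A^2 = I, so tanh(A) = tanh(1) * A
T = tanh_via_expm(A)
print(T[0][1], math.tanh(1.0))
```

Each recovery step costs one matrix product and one linear solve, which is why the number of scaling steps s matters for the overall cost.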

| Algorithm 2: Given a matrix A, this algorithm computes T = tanh(A) by means of the Taylor approximation of Equation (8) and the Paterson–Stockmeyer method. |

| 1 Calculate the scaling factor , the order of Taylor approximation , and the required matrix powers of /* Phase I (Algorithm 4) */ 2 /* Phase II: Compute Equation (8) */ 3 for to s do /* Phase III: Recover by Equation (5) */ 4 5 Solve for X the system of linear equations > 6 7 end |
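A Taylor-based variant of the same scale-and-recover scheme can be sketched as follows. The sketch assumes that the approximation in Equation (8) has the form tanh(X) ≈ X·Σ q_k (X^2)^k with the scalar Taylor coefficients of tanh, and that Equation (5) is the double-angle formula; plain Horner evaluation is used here instead of the Paterson–Stockmeyer method to keep the example short. The values of `s` and `m` and all function names are hypothetical.

```python
from fractions import Fraction
import math

def tanh_coeffs(m):
    """q_0..q_m with tanh(z) = z * sum_k q_k z^(2k), from tanh' = 1 - tanh^2."""
    N = 2 * m + 1
    c = [Fraction(0)] * (N + 1)
    for n in range(N):
        conv = sum(c[i] * c[n - i] for i in range(n + 1))
        c[n + 1] = (Fraction(int(n == 0)) - conv) / (n + 1)
    return [float(c[2 * k + 1]) for k in range(m + 1)]

def eye(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def matmul(X, Y):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def axpy(X, Y, alpha=1.0):
    """Return X + alpha * Y."""
    return [[x + alpha * y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def solve(M, R):
    """Solve M X = R by Gauss-Jordan elimination with partial pivoting."""
    n = len(M)
    aug = [list(m) + list(r) for m, r in zip(M, R)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        for r in range(n):
            if r != c:
                f = aug[r][c] / aug[c][c]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[c])]
    return [[aug[i][n + j] / aug[i][i] for j in range(n)] for i in range(n)]

def tanh_taylor(A, s=4, m=12):
    """Sketch only: s and m are fixed here, not chosen adaptively."""
    n = len(A)
    I = eye(n)
    X = [[a / 2**s for a in row] for row in A]   # Phase I: scaling
    B = matmul(X, X)
    q = tanh_coeffs(m)
    P = [[q[m] * v for v in row] for row in I]   # Phase II: Horner in B = X^2
    for k in range(m - 1, -1, -1):
        P = axpy(matmul(B, P), I, q[k])          # P = B P + q_k I
    T = matmul(X, P)
    for _ in range(s):                           # Phase III: double-angle recovery
        T = solve(axpy(I, matmul(T, T)), [[2.0 * t for t in row] for row in T])
    return T

T = tanh_taylor([[0.0, 1.0], [1.0, 0.0]])
print(T[0][1], math.tanh(1.0))
```

The coefficients are generated exactly with rational arithmetic and only converted to floating point at the end, so no rounding error enters through the series itself.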

2.2. Taylor Approximation-Based Algorithms

- .

- and the series , where is given by Equation (6) and is the index of , is convergent at the point , .
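For orientation, the scalar Taylor expansion of the hyperbolic tangent is recalled below. That the series referred to as Equation (6) is its matrix counterpart is an assumption, since the equation itself is not reproduced here.

$$
\tanh(z) = \sum_{k \ge 1} \frac{2^{2k}\,(2^{2k}-1)\,B_{2k}}{(2k)!}\, z^{2k-1}
= z - \frac{z^{3}}{3} + \frac{2z^{5}}{15} - \frac{17z^{7}}{315} + \cdots,
\qquad |z| < \frac{\pi}{2},
$$

where $B_{2k}$ denotes the Bernoulli numbers. The radius of convergence $\pi/2$ is the distance from the origin to the nearest poles of $\tanh$ at $z = \pm i\pi/2$, so the corresponding matrix series converges only when the spectral radius of the argument is below $\pi/2$. This is the reason a scaling step precedes the Taylor evaluation.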

| Algorithm 3: Given a matrix A, this algorithm computes T = tanh(A) by means of the Taylor approximation of Equation (8) and the Sastre formulas. |

| 1 Calculate the scaling factor , the order of Taylor approximation , and the required matrix powers of /* Phase I (Algorithm 5) */ 2 /* Phase II: Compute Equation (8) */ 3 for to s do/* Phase III: Recover by Equation (5) */ 4 5 Solve for X the system of linear equations 6 7 end |

2.3. Polynomial Order m and Scaling Value s Calculation

| Algorithm 4: Given a matrix , the values from Table 1, a minimum order , a maximum order , with , and a tolerance , this algorithm computes the order of Taylor approximation , , and the scaling factor s, together with and the necessary powers of for computing from (9). |

| 1; ; ; 2for toqdo 3 4end 5 Compute from and ; /* see [30] */ 6 while and do 7 8 if then 9 10 11 end 12 Compute from and ; /* see [30] */ 13 if and then 14 15 end 16 end 17 18 if then 19 20 if then 21 22 23 end 24 25 for toq do 26 27 end 28 end 29 |

| Algorithm 5: Given a matrix , the values from Table 2, and a tolerance , this algorithm computes the order of Taylor approximation and the scaling factor s, together with and the necessary powers of for computing from (10), (11) or (12). |

| 1; 2 Compute from and 3 ; 4 while and do 5 6 if then 7 Compute from and 8 else 9 10 Compute from and 11 end 12 if and then 13 ; 14 end 15 end 16 if then 17 18 end 19 if then 20 21 if then 22 23 end 24 25 26 27 if then 28 29 end 30 end 31 |
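The general idea behind choosing m and s can be illustrated with a deliberately simplified sketch. It is not Algorithm 4 or Algorithm 5: those work with estimates of the norms of matrix powers and a tolerance, whereas this sketch uses only the 1-norm of the input. The thresholds in `THETA` are hypothetical placeholders and are not the values of Table 1 or Table 2.

```python
import math

# Hypothetical order -> threshold map (placeholders, NOT Table 1 or Table 2)
THETA = {4: 0.1, 9: 0.5, 16: 1.0}

def norm1(A):
    """Matrix 1-norm: maximum absolute column sum."""
    return max(sum(abs(row[j]) for row in A) for j in range(len(A[0])))

def select_m_s(A, theta=THETA):
    """Pick the smallest order whose threshold covers ||A||_1, else scale."""
    a = norm1(A)
    for m in sorted(theta):
        if a <= theta[m]:
            return m, 0                      # no scaling needed
    m_max = max(theta)
    # Smallest s with ||2^-s A||_1 = 2^-s * ||A||_1 <= theta[m_max]
    s = math.ceil(math.log2(a / theta[m_max]))
    return m_max, s

print(select_m_s([[0.3, 0.0], [0.0, 0.1]]))
print(select_m_s([[10.0]]))
```

A lower order is preferred when it suffices because it saves matrix products, while each unit of s adds one recovery step later on.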

3. Numerical Experiments

- tanh_expm: this code corresponds to the implementation of Algorithm 1. To obtain m and s and to compute the matrix exponential, it uses the function exptaynsv3 (see [26]).

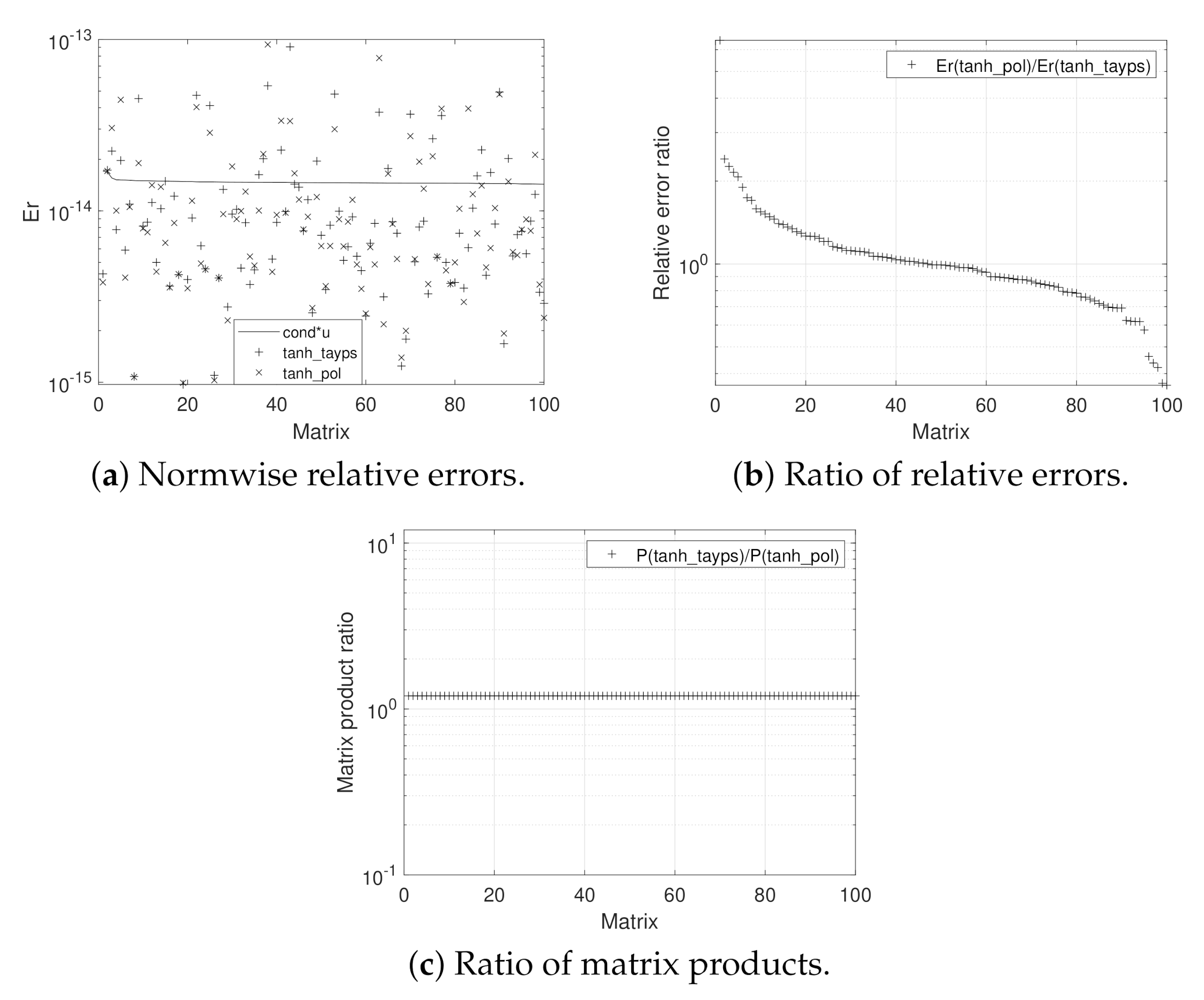

- tanh_tayps: this code, based on Algorithm 2, incorporates Algorithm 4 for computing m and s, where m takes values in the same set as in the tanh_expm code. The Paterson–Stockmeyer method is used to evaluate the Taylor matrix polynomials.

- tanh_pol: this function, corresponding to Algorithm 3, employs Algorithm 5 to calculate m and s. The Taylor matrix polynomials are evaluated by means of the Sastre formulas.
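The Paterson–Stockmeyer evaluation mentioned for tanh_tayps can be sketched in a few lines. The function below evaluates a generic matrix polynomial Σ q_k B^k by precomputing B^2, …, B^r and then applying Horner's rule in B^r over blocks of r coefficients. The names `paterson_stockmeyer` and `naive_eval` and the choice of `r` are hypothetical, and the sketch does not reproduce the Sastre formulas used by tanh_pol.

```python
def eye(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def matmul(X, Y):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def add_scaled(X, Y, alpha):
    """Return X + alpha * Y."""
    return [[x + alpha * y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def paterson_stockmeyer(q, B, r):
    """Evaluate sum_k q[k] * B^k using powers B^0..B^r and Horner in B^r."""
    n, m = len(B), len(q) - 1
    powers = [eye(n), B]
    for _ in range(2, r + 1):
        powers.append(matmul(powers[-1], B))      # r - 1 products

    def block(j):
        # Low-degree piece q[jr] I + q[jr+1] B + ... + q[jr+r-1] B^(r-1)
        S = [[0] * n for _ in range(n)]
        for i in range(r):
            if j * r + i <= m:
                S = add_scaled(S, powers[i], q[j * r + i])
        return S

    last = m // r
    P = block(last)
    for j in range(last - 1, -1, -1):
        P = add_scaled(block(j), matmul(P, powers[r]), 1)   # one product per block
    return P

def naive_eval(q, B):
    """Reference evaluation with one product per term."""
    P = [[0] * len(B) for _ in range(len(B))]
    Bk = eye(len(B))
    for c in q:
        P = add_scaled(P, Bk, c)
        Bk = matmul(Bk, B)
    return P

q = list(range(1, 10))            # degree-8 polynomial with integer coefficients
B = [[1, 2], [3, 4]]
print(paterson_stockmeyer(q, B, 3) == naive_eval(q, B))
```

With r close to the square root of the degree, the number of matrix products grows like twice that square root instead of linearly in the degree, which is the saving the method is known for.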

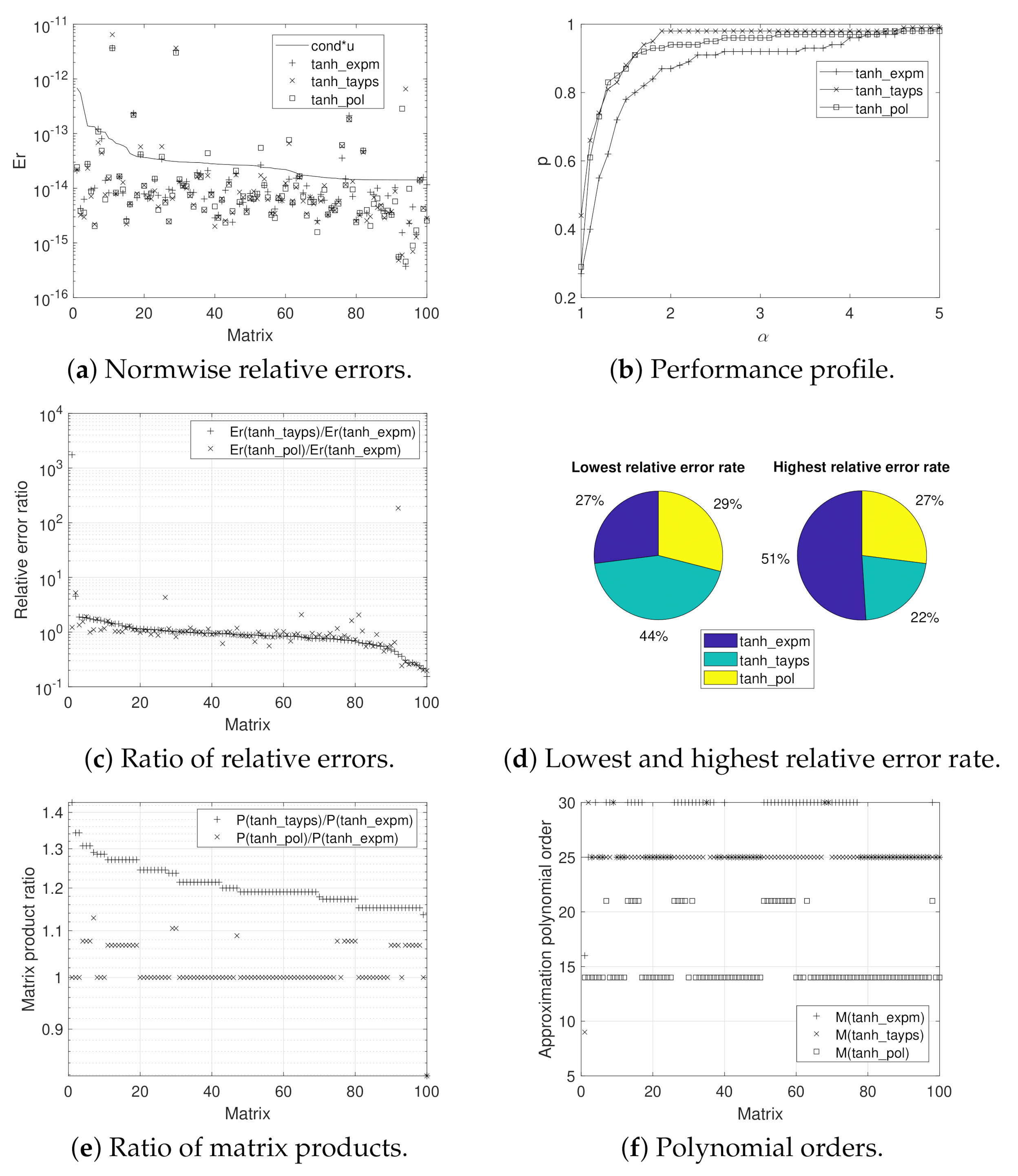

- (a) Diagonalizable complex matrices: one hundred diagonalizable complex matrices obtained as A = V·D·V^T, where D is a diagonal matrix (with real and complex eigenvalues) and V is an orthogonal matrix, V = H/√n, with H a Hadamard matrix and n the matrix order. As 1-norm, we have that . The matrix hyperbolic tangent was calculated “exactly” as tanh(A) = V·tanh(D)·V^T using the vpa function.

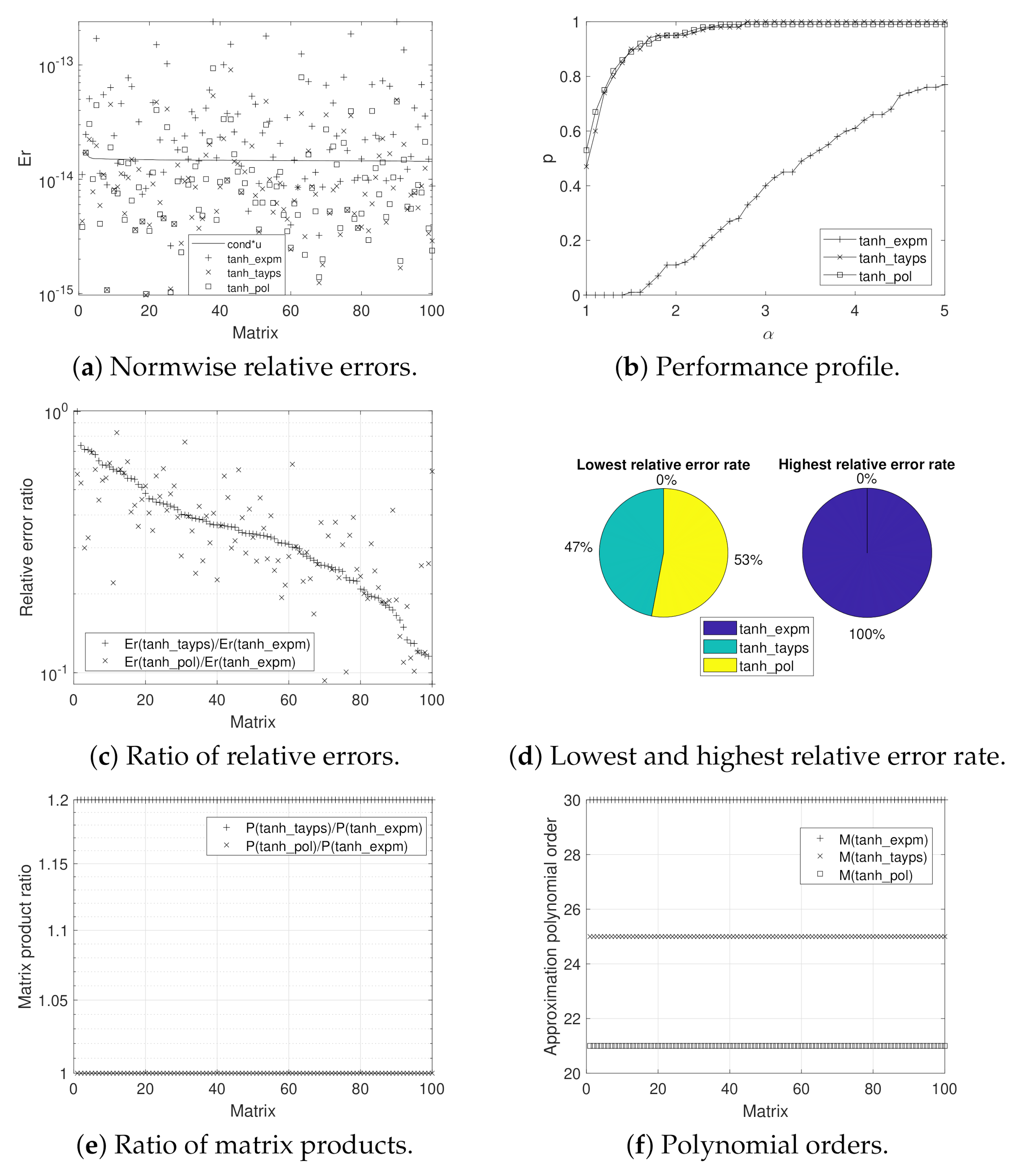
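The construction of the diagonalizable test matrices can be sketched as follows, assuming the form A = V·D·V^T with V = H/√n (dividing a Hadamard matrix of order n by √n is what makes it orthogonal). The eigenvalues in `d` are hypothetical, the Hadamard matrix is built with the Sylvester construction (orders that are powers of two only), and the reference value is computed in double precision rather than with vpa.

```python
import cmath
import math

def hadamard(n):
    """Sylvester construction of a Hadamard matrix; n must be a power of two."""
    H = [[1]]
    while len(H) < n:
        H = [row + row for row in H] + [row + [-x for x in row] for row in H]
    return H

def similarity(V, d):
    """Return V * diag(d) * V^T for a real matrix V."""
    n = len(V)
    return [[sum(V[i][k] * d[k] * V[j][k] for k in range(n)) for j in range(n)]
            for i in range(n)]

n = 4
V = [[h / math.sqrt(n) for h in row] for row in hadamard(n)]   # orthogonal
d = [0.5, -1.0, 1 + 2j, 0.3 - 0.7j]                            # hypothetical eigenvalues
A = similarity(V, d)                                           # test matrix
T_ref = similarity(V, [cmath.tanh(x) for x in d])              # tanh(A) = V tanh(D) V^T

print(A[0][0], T_ref[0][0])
```

Because V is orthogonal, the eigenvector basis is perfectly conditioned, so the reference value obtained from the eigendecomposition is trustworthy for this family of matrices.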

- (b) Non-diagonalizable complex matrices: one hundred non-diagonalizable complex matrices computed as A = V·J·V^(-1), where J is a Jordan matrix with complex eigenvalues whose moduli are less than 5 and whose algebraic multiplicities are randomly generated between 1 and 4. V is an orthogonal random matrix with elements in the interval . As 1-norm, we obtained that . The “exact” matrix hyperbolic tangent was computed as tanh(A) = V·tanh(J)·V^(-1) by means of the vpa function.

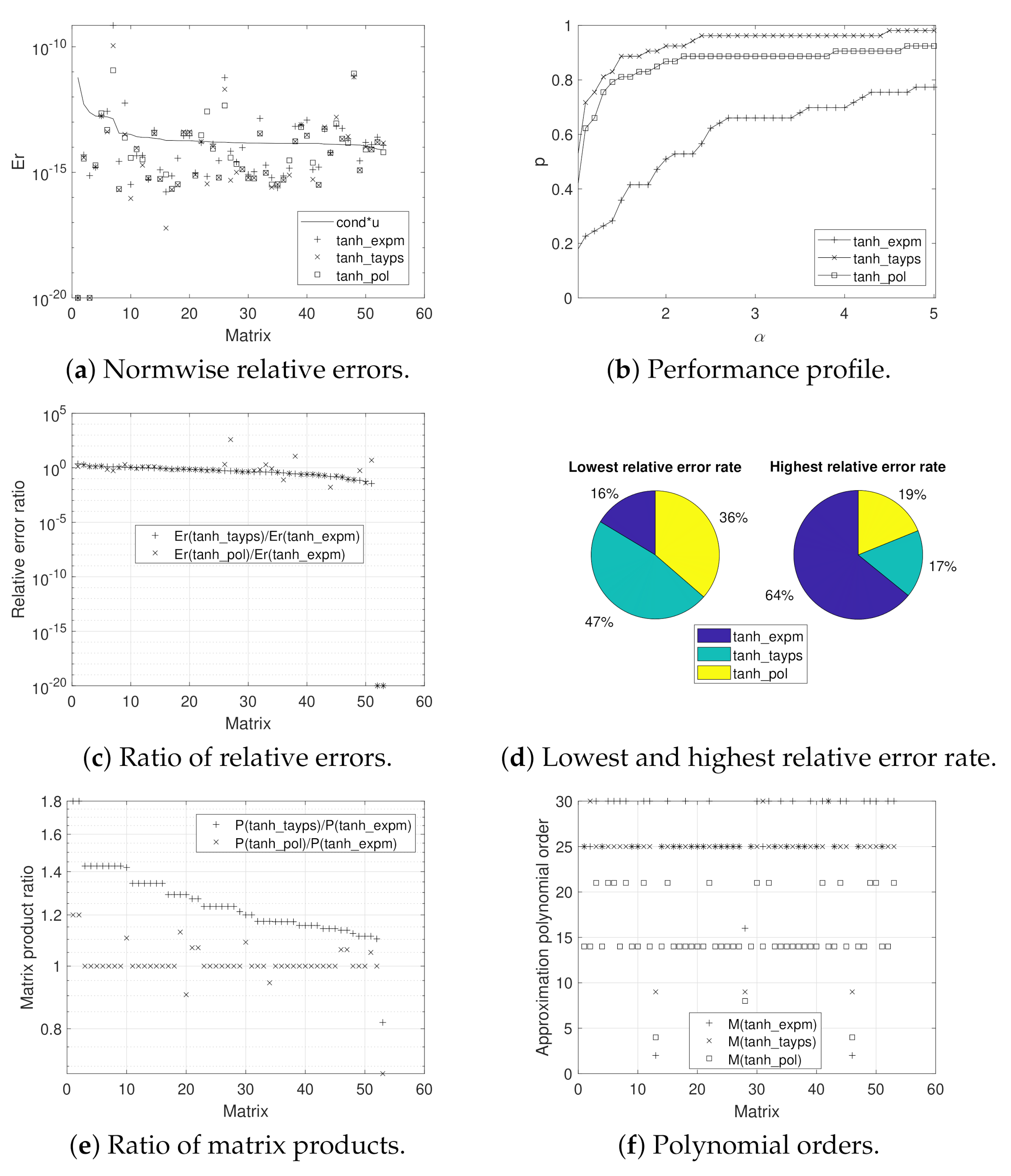

- (c) Matrices from the Matrix Computation Toolbox (MCT) [31] and from the Eigtool MATLAB Package (EMP) [32]: fifty-three matrices with a dimension lower than or equal to 128 were chosen because their characteristics differ widely from one another. We decided to scale these matrices so that they had 1-norm not exceeding 512. As a result, we obtained that . The “exact” matrix hyperbolic tangent was calculated by combining the following two methods with the vpa function:

  - Find a matrix V and a diagonal matrix D so that A = V·D·V^(-1) by using the MATLAB function eig. In this case, tanh(A) = V·tanh(D)·V^(-1).

  - Compute the Taylor approximation of the hyperbolic tangent function with different polynomial orders (m) and scaling parameters (s). This procedure is finished when the obtained result is the same for the distinct values of m and s in IEEE double precision.

The “exact” matrix hyperbolic tangent is considered only if

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Constantine, A.; Muirhead, R. Partial differential equations for hypergeometric functions of two argument matrices. J. Multivar. Anal. 1972, 2, 332–338.

2. James, A.T. Special functions of matrix and single argument in statistics. In Theory and Application of Special Functions; Academic Press: Cambridge, MA, USA, 1975; pp. 497–520.

3. Hochbruck, M.; Ostermann, A. Exponential integrators. Acta Numer. 2010, 19, 209–286.

4. Higham, N.J. Functions of Matrices: Theory and Computation; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2008; p. 425.

5. Rinehart, R. The Equivalence of Definitions of a Matrix Function. Am. Math. Mon. 1955, 62, 395–414.

6. Estrada, E.; Higham, D.J.; Hatano, N. Communicability and multipartite structures in complex networks at negative absolute temperatures. Phys. Rev. E 2008, 78, 026102.

7. Jódar, L.; Navarro, E.; Posso, A.; Casabán, M. Constructive solution of strongly coupled continuous hyperbolic mixed problems. Appl. Numer. Math. 2003, 47, 477–492.

8. Defez, E.; Sastre, J.; Ibáñez, J.; Peinado, J.; Tung, M.M. A method to approximate the hyperbolic sine of a matrix. Int. J. Complex Syst. Sci. 2014, 4, 41–45.

9. Defez, E.; Sastre, J.; Ibáñez, J.; Peinado, J. Solving engineering models using hyperbolic matrix functions. Appl. Math. Model. 2016, 40, 2837–2844.

10. Defez, E.; Sastre, J.; Ibáñez, J.; Ruiz, P. Computing hyperbolic matrix functions using orthogonal matrix polynomials. In Progress in Industrial Mathematics at ECMI 2012; Springer: Berlin/Heidelberg, Germany, 2014; pp. 403–407.

11. Defez, E.; Sastre, J.; Ibáñez, J.; Peinado, J.; Tung, M.M. On the computation of the hyperbolic sine and cosine matrix functions. Model. Eng. Hum. Behav. 2013, 1, 46–59.

12. Efimov, G.V.; Von Waldenfels, W.; Wehrse, R. Analytical solution of the non-discretized radiative transfer equation for a slab of finite optical depth. J. Quant. Spectrosc. Radiat. Transf. 1995, 53, 59–74.

13. Lehtinen, A. Analytical Treatment of Heat Sinks Cooled by Forced Convection. Ph.D. Thesis, Tampere University of Technology, Tampere, Finland, 2005.

14. Lampio, K. Optimization of Fin Arrays Cooled by Forced or Natural Convection. Ph.D. Thesis, Tampere University of Technology, Tampere, Finland, 2018.

15. Hilscher, R.; Zemánek, P. Trigonometric and hyperbolic systems on time scales. Dyn. Syst. Appl. 2009, 18, 483.

16. Zemánek, P. New Results in Theory of Symplectic Systems on Time Scales. Ph.D. Thesis, Masarykova Univerzita, Brno, Czech Republic, 2011.

17. Estrada, E.; Silver, G. Accounting for the role of long walks on networks via a new matrix function. J. Math. Anal. Appl. 2017, 449, 1581–1600.

18. Cieśliński, J.L. Locally exact modifications of numerical schemes. Comput. Math. Appl. 2013, 65, 1920–1938.

19. Cieśliński, J.L.; Kobus, A. Locally Exact Integrators for the Duffing Equation. Mathematics 2020, 8, 231.

20. Golub, G.H.; Van Loan, C.F. Matrix Computations, 3rd ed.; Johns Hopkins Studies in Mathematical Sciences; The Johns Hopkins University Press: Baltimore, MD, USA, 1996.

21. Moler, C.; Van Loan, C. Nineteen Dubious Ways to Compute the Exponential of a Matrix, Twenty-Five Years Later. SIAM Rev. 2003, 45, 3–49.

22. Sastre, J.; Ibáñez, J.; Defez, E. Boosting the computation of the matrix exponential. Appl. Math. Comput. 2019, 340, 206–220.

23. Sastre, J.; Ibáñez, J.; Defez, E.; Ruiz, P. Efficient orthogonal matrix polynomial based method for computing matrix exponential. Appl. Math. Comput. 2011, 217, 6451–6463.

24. Sastre, J.; Ibáñez, J.; Defez, E.; Ruiz, P. New scaling-squaring Taylor algorithms for computing the matrix exponential. SIAM J. Sci. Comput. 2015, 37, A439–A455.

25. Defez, E.; Ibáñez, J.; Alonso-Jordá, P.; Alonso, J.; Peinado, J. On Bernoulli matrix polynomials and matrix exponential approximation. J. Comput. Appl. Math. 2020, 113207.

26. Ruiz, P.; Sastre, J.; Ibáñez, J.; Defez, E. High perfomance computing of the matrix exponential. J. Comput. Appl. Math. 2016, 291, 370–379.

27. Paterson, M.S.; Stockmeyer, L.J. On the Number of Nonscalar Multiplications Necessary to Evaluate Polynomials. SIAM J. Comput. 1973, 2, 60–66.

28. Sastre, J. Efficient evaluation of matrix polynomials. Linear Algebra Appl. 2018, 539, 229–250.

29. Al-Mohy, A.H.; Higham, N.J. A New Scaling and Squaring Algorithm for the Matrix Exponential. SIAM J. Matrix Anal. Appl. 2009, 31, 970–989.

30. Higham, N.J. FORTRAN Codes for Estimating the One-norm of a Real or Complex Matrix, with Applications to Condition Estimation. ACM Trans. Math. Softw. 1988, 14, 381–396.

31. Higham, N.J. The Matrix Computation Toolbox. 2002. Available online: http://www.ma.man.ac.uk/~higham/mctoolbox (accessed on 7 March 2020).

32. Wright, T.G. Eigtool, Version 2.1. 2009. Available online: http://www.comlab.ox.ac.uk/pseudospectra/eigtool (accessed on 7 March 2020).

33. Corwell, J.; Blair, W.D. Industry Tip: Quick and Easy Matrix Exponentials. IEEE Aerosp. Electron. Syst. Mag. 2020, 35, 49–52.

| | Test 1 | Test 2 | Test 3 |
|---|---|---|---|
| Er(tanh_expm)<Er(tanh_tayps) | 32% | 0% | 22.64% |
| Er(tanh_expm)>Er(tanh_tayps) | 68% | 100% | 77.36% |
| Er(tanh_expm)=Er(tanh_tayps) | 0% | 0% | 0% |
| Er(tanh_expm)<Er(tanh_pol) | 44% | 0% | 30.19% |
| Er(tanh_expm)>Er(tanh_pol) | 56% | 100% | 69.81% |
| Er(tanh_expm)=Er(tanh_pol) | 0% | 0% | 0% |

| | Test 1 | Test 2 | Test 3 |
|---|---|---|---|
| P(tanh_expm) | 1810 | 1500 | 848 |
| P(tanh_tayps) | 2180 | 1800 | 1030 |
| P(tanh_pol) | 1847 | 1500 | 855 |

| Test | Code | m Min. | m Max. | m Average | s Min. | s Max. | s Average |
|---|---|---|---|---|---|---|---|
| Test 1 | tanh_expm | 16 | 30 | 27.41 | 0 | 5 | 3.55 |
| Test 1 | tanh_tayps | 9 | 30 | 25.09 | 0 | 6 | 4.65 |
| Test 1 | tanh_pol | 14 | 21 | 15.47 | 0 | 6 | 4.83 |
| Test 2 | tanh_expm | 30 | 30 | 30.00 | 2 | 2 | 2.00 |
| Test 2 | tanh_tayps | 25 | 25 | 25.00 | 3 | 3 | 3.00 |
| Test 2 | tanh_pol | 21 | 21 | 21.00 | 3 | 3 | 3.00 |
| Test 3 | tanh_expm | 2 | 30 | 26.23 | 0 | 8 | 2.77 |
| Test 3 | tanh_tayps | 9 | 30 | 24.38 | 0 | 9 | 3.74 |
| Test 3 | tanh_pol | 4 | 21 | 15.36 | 0 | 9 | 3.87 |

| Test 1 | Test 2 | Test 3 | |

|---|---|---|---|

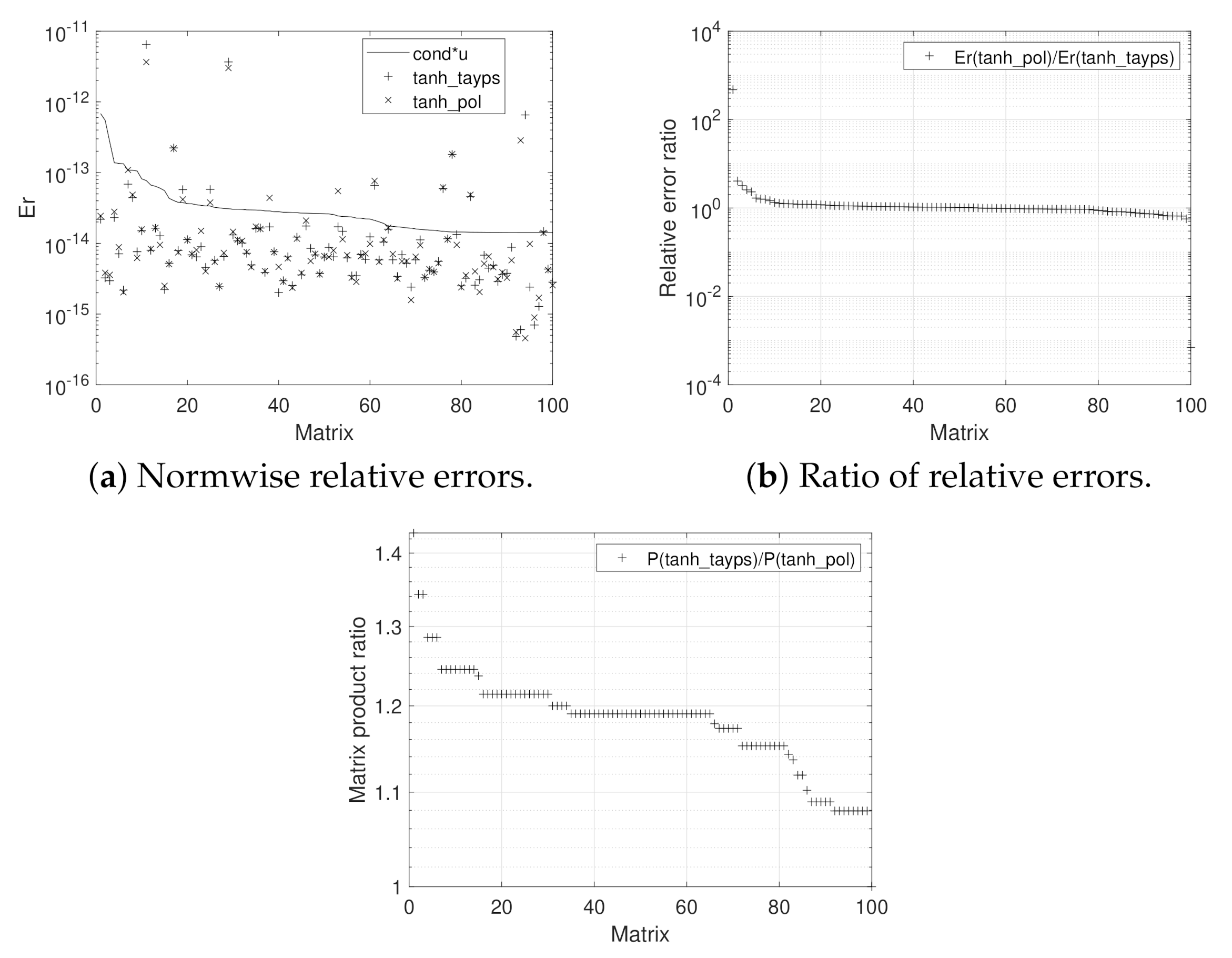

| Er(tanh_tayps)<Er(tanh_pol) | 56% | 47% | 50.94% |

| Er(tanh_tayps)>Er(tanh_pol) | 44% | 53% | 45.28% |

| Er(tanh_tayps)=Er(tanh_pol) | 0% | 0% | 3.77% |

| | Minimum | Maximum | Average | Standard Deviation |
|---|---|---|---|---|
| tanh_tayps | | | | |
| tanh_pol | | | | |
| tanh_tayps | | | | |
| tanh_pol | | | | |
| tanh_tayps | | | | |
| tanh_pol | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ibáñez, J.; Alonso, J.M.; Sastre, J.; Defez, E.; Alonso-Jordá, P. Advances in the Approximation of the Matrix Hyperbolic Tangent. Mathematics 2021, 9, 1219. https://doi.org/10.3390/math9111219
