Abstract

Epistasis, which indicates the difficulty of a problem, can be used to evaluate the basis of the space in which the problem lies. However, calculating epistasis is costly because it requires all solutions to be searched. In this study, we propose a method for constructing a surrogate model, based on deep neural networks, that estimates epistasis for basis evaluation. The proposed method is applied to the Variant-OneMax problem and the NK-landscape problem. Its estimations are comparable in quality to those of basis evaluation based on actual epistasis, while the computation time is significantly reduced. As a result, the proposed method is found to be more efficient than the epistasis-based basis evaluation.

1. Introduction

In computation, many challenges, such as black-box problems, still remain. Multiple attempts have been made to address these difficulties by constructing surrogate models based on deep learning [1,2].

When a basis other than the standard basis is used, the structure of the problem space can be quite different from the original one. Mbarek et al. [3,4] changed the standard basis of a vector space to improve the performance of an algorithm. Seo et al. [5] modified the problem space through a nontrivial encoding obtained by changing the standard basis. The effects of a basis change on a genetic algorithm (GA) with binary encoding have been investigated [6]. Furthermore, it has been shown that changing the basis can fundamentally change the problem space of graph problems and thereby affect the performance of GAs. Several studies have measured problem difficulty from the viewpoint of epistasis [7,8]. To find a basis that smooths the ruggedness of the problem space, Lee and Kim [9,10] proposed a method based on a meta-GA, followed by an epistasis-based basis evaluation method that greatly reduced the computation time compared with the meta-GA. To calculate epistasis exactly, all feasible solutions must first be searched, which becomes infeasible as the problem size grows. Lee and Kim resolved this issue by estimating the epistasis from a sample of solutions.

However, estimating the epistasis from a sample of solutions still incurs a large computational cost. Therefore, in this study, we use deep neural networks (DNNs) to construct a surrogate model that estimates epistasis, substantially reducing the computational cost.

The remainder of the paper is organized as follows. Section 2 explains the background. Section 3 introduces related studies: first, a method of smoothing the ruggedness of the problem space using a meta-GA, and then a study on basis evaluation based on actual epistasis. Section 4 presents the deep learning-based epistasis estimation method proposed in this study. Section 5 analyzes the results of applying the basis change to a GA. We draw conclusions in Section 6.

2. Background and Test Problems

In this section, we describe the background needed to understand the rest of this paper. Section 2.1 and Section 2.2 describe the basis and epistasis, respectively, and Section 2.3 explains how we calculated epistasis. Section 2.4 and Section 2.5 discuss deep learning and surrogate models, respectively. In Section 2.6, we introduce the test problems used in our experiments.

2.1. Basis

In linear algebra, a basis of a vector space is a set of linearly independent vectors that span the vector space [11,12]. In other words, every vector in the vector space has a unique representation as a linear combination of the basis vectors. A basis can be defined as follows:

Definition 1.

[Basis] A basis $B$ of a vector space $V$ over a field $F$ is a linearly independent subset of $V$ that spans $V$. A subset $B$ of $V$ is a basis if it satisfies the following two conditions:

- Linear independence property: for every finite subset $\{v_1, \ldots, v_m\}$ of $B$ and every $a_1, \ldots, a_m$ in $F$, if $a_1 v_1 + \cdots + a_m v_m = 0$, then necessarily $a_1 = \cdots = a_m = 0$.

- Spanning property: for every vector $v$ in $V$, it is possible to choose $a_1, \ldots, a_n$ in $F$ and $v_1, \ldots, v_n$ in $B$ such that $v = a_1 v_1 + \cdots + a_n v_n$.

The standard basis for the vector space $F^n$ is $\{e_1, \ldots, e_n\}$, where $e_i$ is the column vector whose $i$-th component is 1 and whose remaining components are 0. If the basis is expressed as a 0-1 matrix whose columns are the basis vectors, it becomes the identity matrix. Replacing the identity matrix with another invertible matrix therefore corresponds to changing the standard basis to another basis.
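For binary encodings it is natural to read 0-1 matrices over the two-element field GF(2), where addition is XOR; we assume that reading here. Under this assumption, a 0-1 matrix describes a basis exactly when it is invertible over GF(2), which can be checked by Gaussian elimination. The following Python sketch (the function name `is_invertible_gf2` is ours, for illustration only) performs that check. Note that invertibility over GF(2) differs from invertibility over the reals: the last example has determinant 2 over the reals but is singular over GF(2), because its third row is the sum of the first two.

```python
from typing import List, Sequence


def is_invertible_gf2(m: Sequence[Sequence[int]]) -> bool:
    """Return True if the square 0-1 matrix m is invertible over GF(2).

    Gauss-Jordan elimination in which adding one row to another is a bitwise XOR.
    """
    n = len(m)
    rows: List[List[int]] = [list(r) for r in m]  # work on a copy
    for col in range(n):
        # Find a row at or below the diagonal with a 1 in this column.
        pivot = next((r for r in range(col, n) if rows[r][col] == 1), None)
        if pivot is None:
            return False  # no pivot: the columns are linearly dependent
        rows[col], rows[pivot] = rows[pivot], rows[col]
        # Clear this column in every other row (row addition mod 2).
        for r in range(n):
            if r != col and rows[r][col] == 1:
                rows[r] = [x ^ y for x, y in zip(rows[r], rows[col])]
    return True


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(is_invertible_gf2(identity))                           # True
print(is_invertible_gf2([[1, 1], [0, 1]]))                   # True
print(is_invertible_gf2([[1, 1, 0], [0, 1, 1], [1, 0, 1]]))  # False
```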

2.2. Epistasis

In GAs, epistasis refers to any type of interaction between genes. More complex gene interactions imply a more complex problem and greater epistasis. Conversely, if the genes are independent of one another, the epistasis is zero.

A method proposed by Lee and Kim [10] used the epistasis proposed by Davidor [13]. Equations for calculating the epistasis are provided in Section 2.3.

Typically, the standard basis is used in binary encoding. When an evaluation function with complex interdependencies among the standard basis vectors is expressed in a suitably chosen other basis, the ruggedness of the problem space can be smoothed: the evaluation function becomes simpler and its epistasis decreases as well. However, because all feasible solutions must be searched to calculate the exact epistasis, Lee and Kim [10] calculated the epistasis of sampled solutions and used it as an estimate of the actual epistasis.

2.3. How to Calculate Epistasis

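In this paper, we used the formula proposed by Davidor [13] to calculate epistasis. The equations referred to in Section 2.2 are as follows. A string $S$ is composed of $l$ elements, $S = s_1 s_2 \cdots s_l$, where $s_i \in \{0, 1\}$ for each $i = 1, \ldots, l$.

$\Omega$ is the set of all strings of length $l$:

$$\Omega = \{0, 1\}^l.$$

Let $P$ denote a sample of $\Omega$, where the sample is selected uniformly and with replacement. $N = |P|$ is the size of the sample $P$, and $f(S)$ is the fitness of a string $S$.

The average fitness value of $P$ is:

$$\bar{V} = \frac{1}{N} \sum_{S \in P} f(S).$$

Let $P_i(a)$ be the set of all strings in $P$ with the allele $a$ in their $i$-th position, and let $N_i(a) = |P_i(a)|$ denote the number of such strings. The average allele value is:

$$A_i(a) = \frac{1}{N_i(a)} \sum_{S \in P_i(a)} f(S).$$

The excess allele value is defined by:

$$E_i(a) = A_i(a) - \bar{V}.$$

The excess genic value of a string $S$ is:

$$E(S) = \sum_{i=1}^{l} E_i(s_i).$$

The genic value of a string $S$ is defined as:

$$G(S) = \bar{V} + E(S).$$

Finally, the epistasis $\varepsilon(S)$ of a string $S$ and the epistasis variance $\sigma_\varepsilon^2(P)$ of the sample are:

$$\varepsilon(S) = f(S) - G(S), \qquad \sigma_\varepsilon^2(P) = \frac{1}{N} \sum_{S \in P} \varepsilon(S)^2.$$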
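Davidor's definitions translate almost line by line into code. The following Python sketch (the function name `epistasis_variance` is ours) computes the epistasis variance of a sample given as a list of 0-1 tuples. It is a direct, unoptimized transcription for illustration, not the implementation used in [10]. Enumerating all of the string space, as in the toy usage below, is only feasible for very short strings, which is exactly why sampling is needed in practice.

```python
from itertools import product
from typing import Callable, Sequence, Tuple

Bits = Tuple[int, ...]


def epistasis_variance(sample: Sequence[Bits], f: Callable[[Bits], float]) -> float:
    """Davidor's epistasis variance of fitness f over a sample P of binary strings."""
    n = len(sample)          # N = |P|
    length = len(sample[0])  # string length l
    values = [f(s) for s in sample]
    v_bar = sum(values) / n  # average fitness
    # excess[i][a] = E_i(a) = A_i(a) - v_bar
    excess = [[0.0, 0.0] for _ in range(length)]
    for i in range(length):
        for a in (0, 1):
            matched = [v for s, v in zip(sample, values) if s[i] == a]
            if matched:  # if P_i(a) is empty, E_i(a) is never used below
                excess[i][a] = sum(matched) / len(matched) - v_bar
    total = 0.0
    for s, v in zip(sample, values):
        genic = v_bar + sum(excess[i][s[i]] for i in range(length))  # G(S)
        total += (v - genic) ** 2                                    # epsilon(S)^2
    return total / n


# Exhaustive "samples" (P equal to the whole string space) for two toy functions.
omega4 = list(product((0, 1), repeat=4))
print(epistasis_variance(omega4, lambda s: float(sum(s))))       # 0.0 (OneMax is linear)
omega2 = list(product((0, 1), repeat=2))
print(epistasis_variance(omega2, lambda s: float(s[0] ^ s[1])))  # 0.25 (XOR is purely epistatic)
```

The two toy functions bracket the measure: a fitness that is a sum of independent per-locus terms has zero epistasis, while XOR has no per-locus component at all, so all of its variance is epistatic.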

2.4. Deep Learning

Deep learning [14] learns high-level abstractions from data by composing multiple non-linear transformations. It is currently used in many domains and, in particular, has brought significant advances in fields such as computer vision, speech recognition, and natural language processing.

A deep neural network (DNN) is an artificial neural network (ANN) with multiple hidden layers between the input layer and the output layer. A DNN can model complex relationships between input and output, whether linear or non-linear. Other ANN models include the convolutional neural network (CNN) [15], the recurrent neural network [16], and the restricted Boltzmann machine [17].

2.5. Surrogate Model

A surrogate model is a model used to replace complex and time-consuming calculations [18]. Many real-world simulations are extremely time-consuming or difficult to carry out realistically, and they may take days or even weeks to produce results. Because such tasks are so time-intensive, running the simulations is often practically infeasible, and surrogate models are used in their place. Sreekanth and Datta [19] built a surrogate model for coastal aquifer management. Eason and Cremaschi [20] used an ANN-based surrogate model for a chemical simulation.

Surrogate models are also used in mathematics and optimization. Several studies have optimized various objective functions using surrogate models [21,22,23,24,25,26], specifically targeting expensive black-box problems. In particular, several studies have proposed Walsh-based surrogate models to reduce the computational cost of pseudo-Boolean problems [27,28,29].

Similarly, these models have been adopted to reduce computational costs in several other fields. Deep learning techniques can also be used to replace black-box evaluation, and surrogate models based on deep learning have been developed for this purpose [2,30,31,32,33,34,35]. Deep learning can be a powerful method for estimating evaluation values that are difficult to compute in practice. This study aims to reduce the computation time by constructing a DNN-based surrogate model that estimates the epistasis corresponding to a basis.

2.6. Test Problems

This study tested the same problems as those in the experiments conducted by Lee and Kim [10]. The OneMax problem is to maximize the number of 1s in a binary vector. The Variant-OneMax problem maximizes the number of 1s in a binary vector after it has been changed from the standard basis to another basis. When $I$ is the standard basis and $B$ is another basis, the change from $I$ to $B$ can be expressed as an invertible matrix $T$. When we change the basis, a vector $v$ is represented as $Tv$, and the fitness value of $v$ is the number of 1s in $Tv$. The optimal solution to the Variant-OneMax problem is therefore the vector $v$ for which all elements of $Tv$ are 1.
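A minimal Python sketch of the Variant-OneMax fitness, assuming that the basis change is given by an invertible 0-1 matrix T, that a solution v is mapped to Tv, and that Tv is computed over GF(2). The helper names `gf2_matvec` and `variant_onemax` and the 2×2 instance are hypothetical. With T equal to the identity, the function reduces to OneMax; in general the optimum is the v with Tv = (1, ..., 1), which need not be the all-ones vector itself.

```python
from typing import List, Sequence


def gf2_matvec(t: Sequence[Sequence[int]], v: Sequence[int]) -> List[int]:
    """Matrix-vector product T v with arithmetic mod 2."""
    return [sum(t_ij & v_j for t_ij, v_j in zip(row, v)) % 2 for row in t]


def variant_onemax(t: Sequence[Sequence[int]], v: Sequence[int]) -> int:
    """Fitness of v: the number of 1s in T v (computed over GF(2))."""
    return sum(gf2_matvec(t, v))


t = [[1, 1], [0, 1]]              # an invertible 0-1 matrix (hypothetical instance)
print(variant_onemax(t, [1, 1]))  # T v = [0, 1] -> 1
print(variant_onemax(t, [0, 1]))  # T v = [1, 1] -> 2, the optimum
```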

The NK-landscape problem defined by Kauffman [36] consists of a chromosome $S$ of length $N$ in which each gene depends on $K$ other genes. The fitness contribution $f_i$ of the gene at locus $i$ depends on its allele $s_i$ and on $K$ other alleles $s_{i_1}, \ldots, s_{i_K}$. The fitness $f$ of a point $S = s_1 s_2 \cdots s_N$ can then be expressed as follows:

$$f(S) = \frac{1}{N} \sum_{i=1}^{N} f_i(s_i; s_{i_1}, \ldots, s_{i_K}).$$

Because the NK-landscape problem is NP-complete, it is difficult to find its global optimum, and it is therefore used extensively in the optimization field [37]. Additionally, the difficulty of the problem can be adjusted through $N$, which is the overall size of the landscape, and $K$, which controls the number of its local hills and valleys. The higher $K$ is, the more rugged the problem space becomes.
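The following Python sketch generates and evaluates a random NK-landscape instance in the common "random neighbourhood" variant, where each locus depends on K randomly chosen other loci and each contribution f_i is drawn uniformly from [0, 1) for every combination of its K + 1 alleles. These generation choices and the class name `NKLandscape` are assumptions for illustration; the instances used in the experiments follow Lee and Kim [10] and may differ in detail. Increasing K makes each f_i depend on more loci, which is what makes the landscape more rugged.

```python
import random
from typing import List, Sequence


class NKLandscape:
    """Random NK-landscape: N loci, each interacting with K randomly chosen other loci."""

    def __init__(self, n: int, k: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.n, self.k = n, k
        # neighbours[i] holds the K loci (other than i) that locus i depends on.
        self.neighbours: List[List[int]] = [
            rng.sample([j for j in range(n) if j != i], k) for i in range(n)
        ]
        # table[i][idx] is f_i for each of the 2^(K+1) allele combinations, drawn from U[0, 1).
        self.table: List[List[float]] = [
            [rng.random() for _ in range(2 ** (k + 1))] for _ in range(n)
        ]

    def fitness(self, s: Sequence[int]) -> float:
        """f(S) = (1/N) * sum_i f_i(s_i; s_i1, ..., s_iK)."""
        total = 0.0
        for i in range(self.n):
            idx = s[i]
            for j in self.neighbours[i]:
                idx = (idx << 1) | s[j]  # pack the K+1 alleles into a table index
            total += self.table[i][idx]
        return total / self.n


landscape = NKLandscape(n=10, k=3, seed=1)
print(landscape.fitness([0] * 10), landscape.fitness([1] * 10))
```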

3. Prior Work on Searching Basis

In this section, we introduce prior work on searching for a basis. We describe finding a good basis using a meta-GA, and a GA combined with epistasis-based basis evaluation.

3.1. Basis Searching with a Meta-GA

A good basis can improve the performance of a GA. Lee and Kim [9] proposed using a meta-GA to search for a good basis.

They demonstrated a performance improvement on the NK-landscape problem by changing the standard basis to the basis obtained with the meta-GA. However, searching for the basis with their meta-GA was found to have a very high time complexity. Hence, obtaining a good basis with the meta-GA is not practical because it is extremely time-intensive.

3.2. Epistasis-based Basis Evaluation

Lee and Kim [10] proposed an epistasis-based basis evaluation method and applied it within a GA to search for a good basis. They converted a complex problem into a simpler one by changing the basis.

Their epistasis-based basis evaluation method worked as follows. For a given basis, a sampled solution set $S$ was obtained from the problem, and $S'$ was then obtained from $S$ by the basis change. The epistasis in $S'$ was calculated; a lower epistasis value indicated a more appropriate basis for the problem. When the gene size is $l$ and the number of sampled solutions in $S$ is $s$, the time required for calculating the epistasis grows with both $l$ and $s$.
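The evaluation loop described above can be sketched in Python as follows. It reuses Davidor's epistasis variance from Section 2.3 and assumes that the basis change maps each sampled solution x to Tx over GF(2) while its fitness f(x) stays attached to it; the direction of the transform, the toy instance, and all function names are illustrative assumptions rather than details taken from [10]. The toy fitness (the number of 1s in T0 x, as in Variant-OneMax) is epistatic in the standard basis but linear after changing to T0, so its epistasis over the whole space drops to zero, which is the effect the basis search looks for.

```python
import random
from itertools import product
from typing import Callable, List, Sequence, Tuple

Bits = Tuple[int, ...]
Matrix = Sequence[Sequence[int]]


def gf2_matvec(t: Matrix, x: Bits) -> Bits:
    """Basis change x -> T x, with arithmetic mod 2."""
    return tuple(sum(a & b for a, b in zip(row, x)) % 2 for row in t)


def epistasis_variance(points: Sequence[Bits], values: Sequence[float]) -> float:
    """Davidor's epistasis variance of the strings `points` with fitness `values`."""
    n, length = len(points), len(points[0])
    v_bar = sum(values) / n
    excess = [[0.0, 0.0] for _ in range(length)]  # excess allele values E_i(a)
    for i in range(length):
        for a in (0, 1):
            matched = [v for p, v in zip(points, values) if p[i] == a]
            if matched:
                excess[i][a] = sum(matched) / len(matched) - v_bar
    return sum((v - v_bar - sum(excess[i][p[i]] for i in range(length))) ** 2
               for p, v in zip(points, values)) / n


def sample_solutions(length: int, s: int, rng: random.Random) -> List[Bits]:
    """Draw s strings of the given length uniformly at random, with replacement."""
    return [tuple(rng.randint(0, 1) for _ in range(length)) for _ in range(s)]


def evaluate_basis(t: Matrix, fitness: Callable[[Bits], float],
                   sample: Sequence[Bits]) -> float:
    """Epistasis of the sampled solutions after the basis change (lower is better)."""
    values = [fitness(x) for x in sample]             # fitness belongs to the solution itself
    transformed = [gf2_matvec(t, x) for x in sample]  # the same solutions, re-expressed by T
    return epistasis_variance(transformed, values)


# Hypothetical Variant-OneMax-style instance: toy_fitness(x) = number of 1s in T0 x.
T0 = [[1, 1, 0], [0, 1, 1], [0, 0, 1]]
IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]


def toy_fitness(x: Bits) -> float:
    return float(sum(gf2_matvec(T0, x)))


omega = list(product((0, 1), repeat=3))
print(evaluate_basis(IDENTITY, toy_fitness, omega))  # 0.5: epistatic in the standard basis
print(evaluate_basis(T0, toy_fitness, omega))        # 0.0: linear after the basis change
sample = sample_solutions(3, 50, random.Random(0))
print(evaluate_basis(T0, toy_fitness, sample))
```

On a finite random sample the value after the change is generally small but not exactly zero, because loci can be correlated within the sample; this sampling noise is one reason the sampled epistasis is only an estimate of the actual one.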

Next, Lee and Kim used this epistasis evaluation within a GA. A basis can be represented as an invertible matrix, and any invertible matrix $T$ can be expressed as a product $T = E_1 E_2 \cdots E_k$ of elementary matrices $E_1, \ldots, E_k$. Each basis was therefore represented by a variable-length string encoding such a product of elementary matrices [38].
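Over GF(2), the only nonzero scalar is 1, so row scaling is trivial, and a row swap can be composed from three row additions; hence every invertible 0-1 matrix is a product of row-addition matrices. The following Python sketch decodes a variable-length string into the matrix T = E_1 E_2 ⋯ E_k under a hypothetical encoding in which each gene (i, j) denotes the elementary matrix that adds row j to row i. The gene alphabet actually used in [38] may differ, and the function names are ours.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[int]]


def identity(n: int) -> Matrix:
    return [[int(r == c) for c in range(n)] for r in range(n)]


def row_addition(n: int, i: int, j: int) -> Matrix:
    """Elementary matrix E with E[i][j] = 1 (i != j): E A adds row j of A to row i."""
    e = identity(n)
    e[i][j] = 1
    return e


def matmul_gf2(a: Matrix, b: Matrix) -> Matrix:
    """Matrix product with arithmetic mod 2."""
    n = len(a)
    return [[sum(a[r][k] & b[k][c] for k in range(n)) % 2 for c in range(n)]
            for r in range(n)]


def decode(genes: Sequence[Tuple[int, int]], n: int) -> Matrix:
    """T = E_1 E_2 ... E_k for a gene string [(i_1, j_1), ..., (i_k, j_k)]."""
    t = identity(n)
    for i, j in genes:
        t = matmul_gf2(t, row_addition(n, i, j))
    return t


print(decode([], 2))                        # [[1, 0], [0, 1]]
print(decode([(0, 1)], 2))                  # [[1, 1], [0, 1]]
print(decode([(0, 1), (1, 0), (0, 1)], 2))  # [[0, 1], [1, 0]] (a row swap)
```

Because each row-addition matrix is its own inverse over GF(2), two identical adjacent genes cancel; variable-length strings can therefore grow or shrink under mutation without losing the ability to represent any basis.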

The parents were aligned using the Wagner–Fischer algorithm [39], after which a uniform crossover operator was applied. For selection, a tournament operator chose the best of three solutions. The mutation operator applied insertion, deletion, or replacement. For generational replacement, the parent population was replaced by the offspring population.
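The alignment step can be sketched as follows. This Python sketch is a Wagner–Fischer-style dynamic program restricted, as described in Section 4.2, to inserting "-" gaps into the shorter parent only, so that the Hamming distance between the aligned strings is minimized; uniform crossover is then applied position by position and the gaps are removed. The restriction to gaps in the shorter string, the tie-breaking in the traceback, and the function names are our assumptions, and genes are assumed never to equal the gap symbol.

```python
import random
from typing import List, Sequence, Tuple

GAP = "-"


def align(long: Sequence, short: Sequence) -> Tuple[list, list]:
    """Insert len(long) - len(short) gaps into `short` to minimize the Hamming distance to `long`."""
    m, n = len(long), len(short)
    assert m >= n, "first argument must be the longer string"
    inf = float("inf")
    # d[i][j]: minimal mismatches aligning long[:i] with short[:j] (gaps only in short).
    d: List[List[float]] = [[inf] * (n + 1) for _ in range(m + 1)]
    d[0][0] = 0
    for i in range(1, m + 1):
        for j in range(min(i, n) + 1):
            best = d[i - 1][j] + 1  # long[i-1] faces a gap, which counts as a mismatch
            if j > 0:               # long[i-1] faces short[j-1]
                best = min(best, d[i - 1][j - 1] + (long[i - 1] != short[j - 1]))
            d[i][j] = best
    # Trace back one optimal alignment, preferring to place short[j-1] over a gap.
    stretched: list = []
    i, j = m, n
    while i > 0:
        if j > 0 and d[i][j] == d[i - 1][j - 1] + (long[i - 1] != short[j - 1]):
            stretched.append(short[j - 1])
            j -= 1
        else:
            stretched.append(GAP)
        i -= 1
    return list(long), stretched[::-1]


def uniform_crossover(p1: Sequence, p2: Sequence, rng: random.Random) -> Tuple[list, list]:
    """Align two variable-length parents, swap aligned positions with probability 1/2, drop gaps."""
    a, b = (p1, p2) if len(p1) >= len(p2) else (p2, p1)
    a, b = align(a, b)
    c1, c2 = [], []
    for x, y in zip(a, b):
        if rng.random() < 0.5:
            x, y = y, x
        c1.append(x)
        c2.append(y)
    return [g for g in c1 if g != GAP], [g for g in c2 if g != GAP]


print(align("ABCD", "AD"))  # (['A', 'B', 'C', 'D'], ['A', '-', '-', 'D'])
print(uniform_crossover(list("ABCD"), list("AD"), random.Random(0)))
```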

Experiments were conducted on the Variant-OneMax problem and the NK-landscape problem described in Section 2.6. For each problem, a GA was first run to identify a new basis; the problem was then changed to the basis found by the GA, and the optimal solution was searched for in one hundred independent runs. The experimental results were compared for three cases: no basis change, a basis found by the meta-GA, and a basis found with the epistasis-based basis evaluation method. The meta-GA showed the best results overall, but because its computation is so time-intensive, it is difficult to use in practice. The epistasis-based basis evaluation method yielded better results than no basis change and required less time than the meta-GA. Thus, considering both the experimental results and the processing time, the epistasis-based basis evaluation method was shown to be the most efficient. Further, calculating the epistasis before and after the basis change showed that the epistasis decreased, indicating the effectiveness of the approach. However, this method was still time-consuming because it required calculating the epistasis for each solution in the solution set. We aim to reduce this computational cost substantially by estimating epistasis using deep learning.

4. Proposed Method Based on Surrogate Model

In this section, we describe the proposed epistasis estimation method based on a surrogate model. We present how to estimate epistasis using deep learning in Section 4.1. In Section 4.2, we introduce our GA combined with the proposed surrogate model.

4.1. Surrogate Model-Based Epistasis Estimation Using Deep Learning

In Section 3.2, we saw that the complexity of a problem under a given basis can be represented by its epistasis. Because calculating the actual epistasis requires searching all solutions, it was estimated from a sampled solution set. However, as this estimation was still time-consuming, we expected that deep learning could further reduce the computation time.

We apply a deep learning model whose input is a basis and whose output is the estimated epistasis described in Section 3.2. In practice, it is nearly impossible to search all solutions to calculate the epistasis. However, if the epistasis can be successfully estimated by a deep learning model, that model can be viewed as an objective function for estimating the epistasis. A surrogate model approximates an unknown objective function from accumulated input and output data; therefore, the deep learning model can be considered a surrogate model.

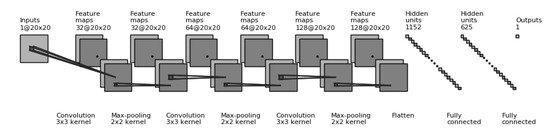

Kim et al. [40] and Kim and Kim [41] introduced an epistasis estimation method using a DNN model. Experiments were conducted with a DNN and a CNN to determine which deep learning model was more suitable for epistasis estimation. The hyperparameters of the DNN and CNN models are detailed in Table 1, and the structure of the CNN model used is shown in Figure 1.

Table 1.

The hyperparameter configuration of deep neural network (DNN) and convolutional neural network (CNN).

Figure 1.

Structure of the CNN model used in this study, when .

The data used in our experiments were taken from the populations obtained in the GA experiments of Lee and Kim [10] for searching the basis of each problem. After duplicated data were removed from the dataset, 10-fold cross-validation was conducted. The results are summarized in Table 2. The ratio shown there compares the epistasis estimated using deep learning with the epistasis calculated by sampling; a lower ratio implies that the epistasis estimated using deep learning can successfully replace the epistasis of a sampled solution set.

Table 2.

Experimental results of epistasis estimation using DNN and CNN.

According to the experimental results in Table 2, there was no significant difference between the ratios of the DNN and the CNN. However, the CNN model required 6.5 times more training time than the DNN model. Considering both the estimation results and the training time, the DNN model was more efficient.

Various hyperparameters can be configured in the DNN model, and the performance of the model may vary with the configuration. Kim et al. [40] used the dropout technique [42], a regularization method that reduces overfitting in DNNs. The initial weights also affect the model performance; two popular initialization techniques are “Xavier” [43] and “He” [44]. Finally, the experiment was conducted using the best configuration: three layers, the “Xavier” initializer, and a dropout rate of 0.5.
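To make this configuration concrete, the following dependency-free Python sketch shows the forward pass of a fully connected surrogate that maps a flattened l × l basis matrix to an epistasis estimate, with Xavier (Glorot) uniform initialization and dropout at rate 0.5. The layer widths, the ReLU activation, the class name `EpistasisMLP`, and reading “three layers” as three hidden layers are our assumptions, not details from [40]. Training (for example, minimizing the mean squared error between predicted and sampled epistasis by gradient descent) is omitted; a practical implementation would use a deep learning framework.

```python
import math
import random
from typing import List, Sequence


def xavier_uniform(fan_in: int, fan_out: int, rng: random.Random) -> List[List[float]]:
    """Glorot/Xavier uniform init: U(-a, a) with a = sqrt(6 / (fan_in + fan_out))."""
    a = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-a, a) for _ in range(fan_in)] for _ in range(fan_out)]


class EpistasisMLP:
    """Fully connected regressor: flattened basis matrix -> estimated epistasis."""

    def __init__(self, sizes: Sequence[int], dropout: float = 0.5, seed: int = 0) -> None:
        self.rng = random.Random(seed)
        self.dropout = dropout
        self.weights = [xavier_uniform(a, b, self.rng) for a, b in zip(sizes, sizes[1:])]
        self.biases = [[0.0] * b for b in sizes[1:]]

    def forward(self, x: Sequence[float], train: bool = False) -> float:
        h = list(x)
        for k, (w, b) in enumerate(zip(self.weights, self.biases)):
            z = [sum(wi * hi for wi, hi in zip(row, h)) + bi for row, bi in zip(w, b)]
            if k == len(self.weights) - 1:
                return z[0]  # single linear output unit
            h = [max(0.0, v) for v in z]  # ReLU hidden activation (assumed)
            if train:  # inverted dropout: drop with prob p, rescale survivors by 1/(1-p)
                keep = 1.0 - self.dropout
                h = [v / keep if self.rng.random() < keep else 0.0 for v in h]
        raise ValueError("the network needs at least one layer")


l = 4
basis = [[1, 1, 0, 0], [0, 1, 0, 0], [0, 0, 1, 1], [0, 0, 0, 1]]  # hypothetical input basis
x = [float(v) for row in basis for v in row]
model = EpistasisMLP([l * l, 64, 64, 64, 1], dropout=0.5, seed=0)
print(model.forward(x))              # deterministic at inference time
print(model.forward(x, train=True))  # stochastic during training
```

Dropout is active only when `train=True`; at inference the full network is used, and the rescaling by 1/(1 - p) during training keeps the expected activations consistent between the two modes.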

4.2. A Genetic Algorithm with Our Surrogate Model

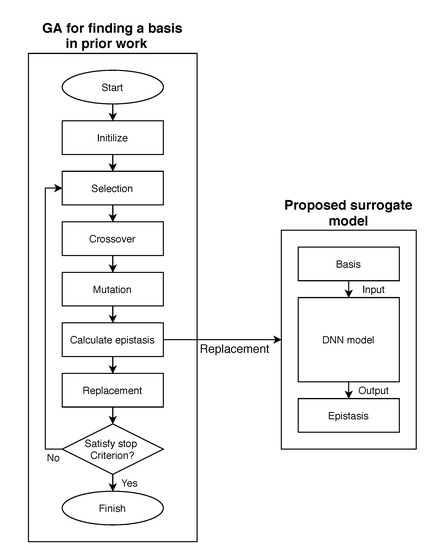

In this section, we present a GA that finds a good basis using our surrogate model. As shown in Figure 2, we replaced the epistasis calculation in the GA with the proposed surrogate model. Any basis can be represented as an invertible matrix [10], and every invertible matrix can be expressed as a product of elementary matrices. In our GA, a basis is therefore represented by a variable-length string encoding a product of elementary matrices.

Figure 2.

Flowchart of our genetic algorithm (GA) [10] combined with the proposed surrogate model.

For selection, three solutions were chosen by a tournament operator, and the best among the three became a parent. As many parents as the population size were selected, randomly paired, and recombined by a crossover operator. Because the parents are variable-length strings, the shorter string was stretched to the length of the other by inserting “-” symbols at positions chosen to minimize the Hamming distance between the two strings. Two offspring were then generated by applying a uniform crossover to the aligned strings and removing the “-” symbols. The mutation operator applied one of insertion, deletion, and replacement; the probability of applying it was 0.05 for each gene and 0.2 for each individual. Afterwards, fitness was evaluated by the proposed surrogate model. Finally, the parent population was replaced by the offspring population.
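A minimal Python sketch of the selection and mutation operators as we read the description above: tournament selection of size three on the surrogate-estimated epistasis (lower is better), and a mutation that is applied to an individual with probability 0.2 and then to each of its genes with probability 0.05, choosing uniformly among insertion, deletion, and replacement. The uniform choice among the three operations, the gene generator, and the function names are assumptions for illustration.

```python
import random
from typing import Callable, List, Sequence, Tuple, TypeVar

G = TypeVar("G")


def tournament(population: Sequence[G], cost: Callable[[G], float],
               rng: random.Random, size: int = 3) -> G:
    """Return the best of `size` randomly chosen individuals (lower estimated epistasis is better)."""
    return min(rng.sample(list(population), size), key=cost)


def mutate(genes: Sequence[G], random_gene: Callable[[random.Random], G],
           rng: random.Random, p_individual: float = 0.2, p_gene: float = 0.05) -> List[G]:
    """Variable-length mutation: insertion, deletion, or replacement at selected genes."""
    if rng.random() >= p_individual:
        return list(genes)  # this individual is not mutated
    out: List[G] = []
    for g in genes:
        if rng.random() >= p_gene:
            out.append(g)
            continue
        op = rng.choice(("insert", "delete", "replace"))
        if op == "insert":
            out.extend([g, random_gene(rng)])  # keep g and insert a new gene after it
        elif op == "replace":
            out.append(random_gene(rng))
        # "delete": g is simply not copied
    return out


def new_gene(r: random.Random) -> Tuple[int, int]:
    """Hypothetical gene: a pair (i, j) with i != j for a 4 x 4 basis matrix."""
    i, j = r.sample(range(4), 2)
    return (i, j)


parent = [(0, 1), (2, 3), (1, 2)]
child = mutate(parent, new_gene, random.Random(0), p_individual=1.0, p_gene=0.5)
print(child)
```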

5. Results of Experiments and Discussion

We introduce our computing environment and dataset in Section 5.1. In Section 5.2, we present the experimental results of applying the proposed method to the Variant-OneMax problem and the NK-landscape problem. In Section 5.3, we examine how well the proposed method estimates epistasis.

5.1. Test Environments and Dataset

Our experiments were conducted in the computing environment presented in Table 3. GPU computation was used for tensor calculations to reduce the training time of the DNN model. Removing the duplicated data used for Table 2 reduced the amount of data, and repeated training on a small amount of data may result in overfitting. To prevent overfitting, additional data were generated in a manner similar to that used by Lee and Kim [10]. For each problem, the amount of generated data was 100 times the amount of data before duplicate removal; the generated data were then used to train the DNN model.

Table 3.

Test environments.

5.2. Results

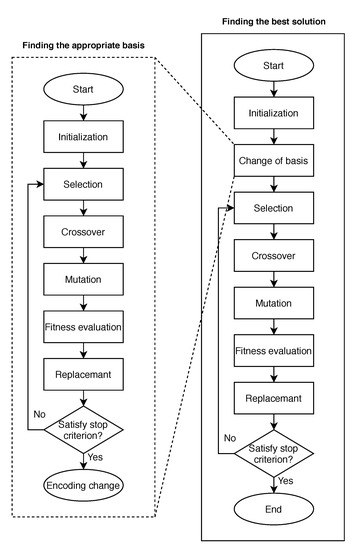

We conducted experiments on the Variant-OneMax and NK-landscape problems. As shown in Figure 3, each experiment consisted of two parts. The first part searched for a new basis for each problem with a GA, and the second part searched for the optimal solution of each problem with another GA after changing to the basis found in the first part. In this study, we used a DNN model trained on the bases and epistasis values from the experiments of Lee and Kim [10].

Figure 3.

Flowchart of a GA (right-side solid box) with a change of basis which is found by another GA (left-side dotted box).

On the Variant-OneMax problem, experiments were conducted for two settings. These experiments were conducted under the same conditions as in [41], and the same results were obtained. In both cases, the basis was searched for using a GA. Then, after changing to the basis obtained by the GA, the optimal solution was searched for in one hundred independent runs on each problem instance. To find the best solution of the problem, our GA used one-point crossover and bit-flip mutation with probability 0.05. The remaining operators, including selection and replacement, were the same as in the GA for finding a good basis in Section 4.2. This process was repeated 10 times to obtain average values. Table 4 shows how well the optimal solution was found when the DNN-based estimation method was applied to the Variant-OneMax problem.
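For reference, the two variation operators of this second GA can be sketched in Python as follows. These are the textbook versions of one-point crossover and bit-flip mutation on fixed-length binary strings; the function names are ours, and the per-bit flip probability defaults to the 0.05 stated above.

```python
import random
from typing import List, Sequence, Tuple


def one_point_crossover(p1: Sequence[int], p2: Sequence[int],
                        rng: random.Random) -> Tuple[List[int], List[int]]:
    """Cut both parents at the same random point and exchange the tails."""
    cut = rng.randint(1, len(p1) - 1)  # cut strictly inside the string
    return list(p1[:cut]) + list(p2[cut:]), list(p2[:cut]) + list(p1[cut:])


def bit_flip(bits: Sequence[int], rng: random.Random, p: float = 0.05) -> List[int]:
    """Flip each bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]


rng = random.Random(0)
c1, c2 = one_point_crossover([0] * 8, [1] * 8, rng)
print(c1, c2)
print(bit_flip(c1, rng))
```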

Table 4.

Experimental results on the Variant-OneMax Problem [41].

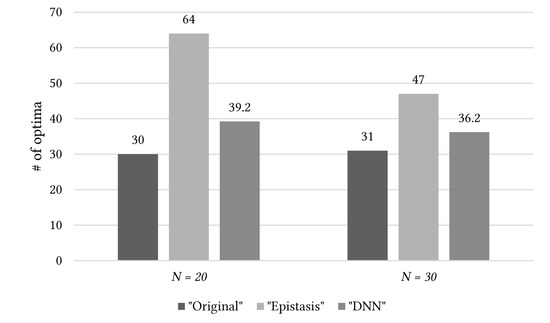

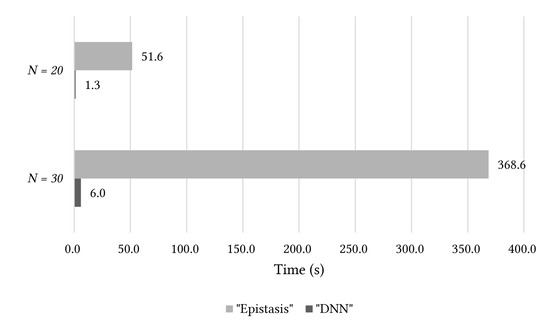

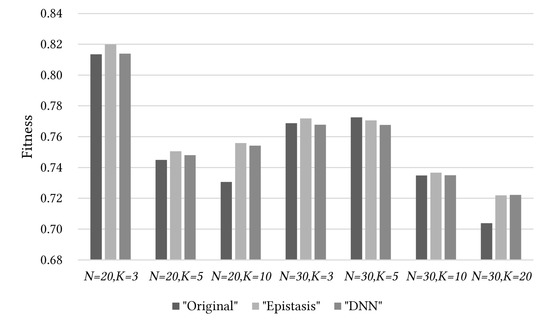

In Figure 4 and Figure 5, “Original” denotes finding the optimal solution without a basis change, “Epistasis” denotes finding it with the epistasis-based basis evaluation method proposed by Lee and Kim [10], and “DNN” denotes the method proposed in this study. In Figure 4, “Epistasis” gave the best results among all cases; “DNN” gave better results than “Original” but slightly worse results than “Epistasis.” However, in Table 4, “DNN” showed nearly the same results as “Epistasis.” In terms of computation time (Figure 5), “Epistasis” took 40 to 60 times longer than “DNN.”

Figure 4.

Performance comparison of the experiment in this study (“DNN”) and those in previous studies (“Original” and “Epistasis”) on the Variant-OneMax problem [41].

Figure 5.

Comparison of processing time for the experiment in this study (“DNN”) and that in previous study (“Epistasis”) on the Variant-OneMax problem [41].

On the NK-landscape problem, experiments were conducted for seven different $(N, K)$ combinations. As for the Variant-OneMax problem, a basis was searched for using a GA, and the problem was then changed to the obtained basis. Next, 100 independent GA runs were performed, and this was repeated ten times. The results of the experiments are summarized in Table 5.

Table 5.

Experimental results for the NK-landscape problem.

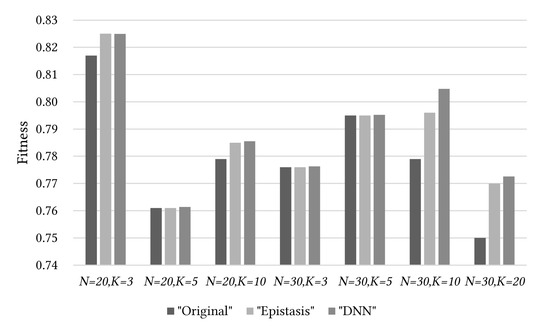

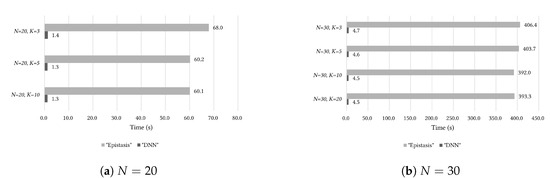

In Figure 6, Figure 7 and Figure 8, “Original,” “Epistasis,” and “DNN” show trends similar to those on the Variant-OneMax problem. Figure 6 compares the averages of the entire population. The best population quality was obtained with “Epistasis.” “DNN” gave better results than “Original” but slightly worse results than “Epistasis.” However, the comparison of best solutions is more important, because the best solution in the population is the final output of the search. In Figure 7, “DNN” generally found better solutions than “Original,” and solutions almost identical to or slightly worse than those of “Epistasis.” In Figure 8, “Epistasis” required 46 to 87 times more computing time than “DNN.”

Figure 6.

Performance comparison of the experiment in this study (“DNN”) and those in previous studies (“Original” and “Epistasis”) on the average of population for the NK-landscape problem.

Figure 7.

Comparison of best solution results for the experiments in this study (“DNN”) and previous studies (“Original” and “Epistasis”) on the NK-landscape problem.

Figure 8.

Comparison of processing time for the experiments in this study (“DNN”) and previous study (“Epistasis”) on the NK-landscape problem.

In this study, we only examined how well the best solution of each problem was found and did not perform a statistical analysis. Derrac et al. [45] explained how to use statistical tests to compare evolutionary algorithms. In future work, it would be valuable to test the statistical significance of our improvement.

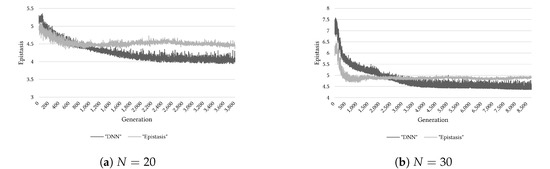

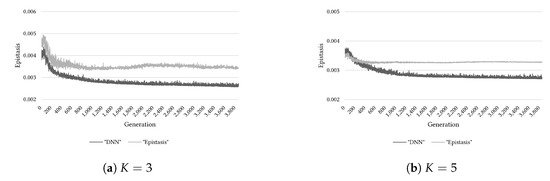

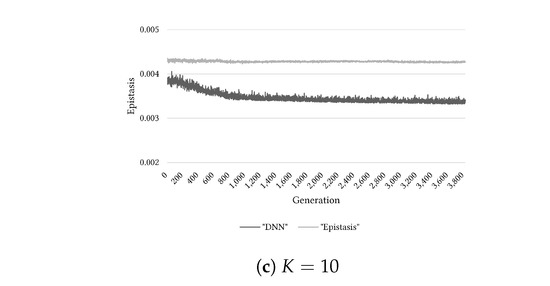

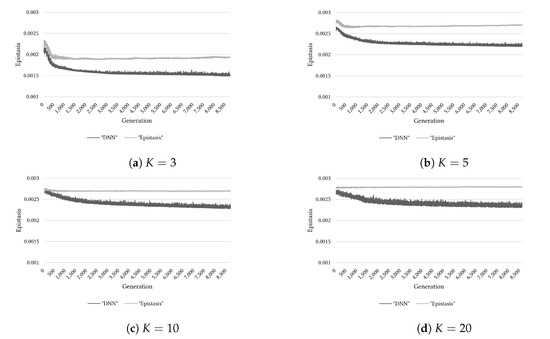

5.3. Epistasis Estimation Based on Basis

This section examines the effectiveness of the DNN-based epistasis estimation method. Figure 9, Figure 10 and Figure 11 compare the epistasis estimated by the DNN with the epistasis calculated from sampled solutions during the GA-based basis search. As Figure 9 shows, the DNN estimates were similar to the calculated epistasis for the Variant-OneMax problem. However, Figure 10 and Figure 11 show that, on the NK-landscape problem, the difference between the DNN estimate and the calculated epistasis grows as the problem complexity increases. This tendency suggests why the population quality of “DNN” was lower than that of “Epistasis” for two of the $(N, K)$ settings: the Variant-OneMax problem is relatively simple, so estimating the epistasis from the basis posed no significant difficulty, whereas on the NK-landscape problem the estimation by the DNN became more difficult as the problem complexity increased.

Figure 9.

Comparison of target epistasis values (“Epistasis”) and ones estimated by DNN (“DNN”) on the Variant-OneMax problem.

Figure 10.

Comparison of target epistasis values (“Epistasis”) and ones estimated by DNN (“DNN”) on the NK-landscape problem.

Figure 11.

Comparison of target epistasis values (“Epistasis”) and ones estimated by DNN (“DNN”) on the NK-landscape problem.

6. Conclusions

Lee and Kim [10] introduced an epistasis-based basis evaluation method; in this study, we proposed a surrogate model-based epistasis estimation method that uses deep neural networks (DNNs). The proposed method was applied to the two types of problems described in Section 2.6, and the results were compared with those obtained using the epistasis-based basis evaluation method. In the search for optimal solutions, estimating the epistasis of the basis with the DNN model was nearly always better than making no basis change, and it gave results comparable to or slightly worse than those of the epistasis-based basis evaluation. In particular, on the NK-landscape problem, the method using the DNN found optimal solutions quite similar to those obtained with the epistasis-based basis evaluation. Moreover, with the DNN, the time required for epistasis estimation was up to 87 times shorter than with the conventional method. Although our method requires time to construct the DNN surrogate model, this is a one-time preprocessing cost that is much lower than the time the previous method [10] spends repeatedly calculating epistasis. Therefore, our method achieved successful epistasis estimation together with an effective reduction in computation time (see Table 2, Figure 5, and Figure 8).

The contributions of our study can be summarized as follows. Kim and Yoon [6] presented the effect of a basis change. Lee and Kim [9] obtained a good basis by using a meta-GA and, with this basis, obtained better results on the NK-landscape problem. However, the meta-GA is time-intensive and therefore impractical. To address this issue, they proposed an epistasis-based basis evaluation method [10] that was more efficient than the meta-GA method, but it was still computationally intensive. To reduce this cost, we proposed a surrogate model that estimates the epistasis by using a DNN. The proposed method achieved quality similar to that of the epistasis-based basis evaluation method while significantly reducing the computation time. Thus, our DNN-based epistasis estimation was more efficient and more practical than the previous methods.

However, the DNN model had more difficulty estimating the epistasis as the problem complexity increased. Possible reasons are that the DNN was trained on estimated epistasis values instead of actual calculated values, which lowered the estimation accuracy, and that each problem requires different optimal hyperparameters for the DNN. In the future, we intend to improve the results of the present study by improving the search for better optimal solutions or by developing separate DNN models for each problem.

Author Contributions

Conceptualization, Y.-H.K. (Yong-Hyuk Kim); methodology, Y.-H.K. (Yong-Hyuk Kim); software, Y.-H.K. (Yong-Hoon Kim); validation, Y.Y.; formal analysis, Y.-H.K. (Yong-Hyuk Kim); investigation, Y.-H.K. (Yong-Hoon Kim); resources, Y.-H.K. (Yong-Hoon Kim); data curation, Y.-H.K. (Yong-Hoon Kim); writing—original draft preparation, Y.-H.K. (Yong-Hoon Kim); writing—review and editing, Y.Y.; visualization, Y.-H.K. (Yong-Hoon Kim); supervision, Y.-H.K. (Yong-Hyuk Kim); project administration, Y.-H.K. (Yong-Hyuk Kim); funding acquisition, Y.-H.K. (Yong-Hyuk Kim) All authors have read and agreed to the published version of the manuscript.

Funding

The present research was conducted by the Research Grant of Kwangwoon University in 2020. This research was a part of the project titled ‘Marine Oil Spill Risk Assessment and Development of Response Support System through Big Data Analysis’ funded by the Korea Coast Guard. This work was also supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (Ministry of Science, ICT and Future Planning) (No. 2017R1C1B1010768).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Tripathy, R.K.; Bilionis, I. Deep UQ: Learning deep neural network surrogate models for high dimensional uncertainty quantification. J. Comput. Phys. 2018, 375, 565–588.

2. Pfrommer, J.; Zimmerling, C.; Liu, J.; Kärger, L.; Henning, F.; Beyerer, J. Optimisation of manufacturing process parameters using deep neural networks as surrogate models. Procedia CIRP 2018, 72, 426–431.

3. Mbarek, R.; Tmar, M.; Hattab, H. Vector space basis change in information retrieval. Computación y Sistemas 2014, 18, 569–579.

4. Mbarek, R.; Tmar, M.; Hattab, H. A new relevance feedback algorithm based on vector space basis change. In International Conference on Intelligent Text Processing and Computational Linguistics; Springer: Berlin/Heidelberg, Germany, 2014; pp. 355–366.

5. Seo, K.; Hyun, S.; Kim, Y.H. An edge-set representation based on a spanning tree for searching cut space. IEEE Trans. Evol. Comput. 2014, 19, 465–473.

6. Kim, Y.H.; Yoon, Y. Effect of changing the basis in genetic algorithms using binary encoding. KSII Trans. Internet Inf. Syst. 2008, 2.

7. Reeves, C.R.; Wright, C.C. Epistasis in genetic algorithms: An experimental design perspective. In Proceedings of the International Conference on Genetic Algorithms, Pittsburgh, PA, USA, 15–19 July 1995; pp. 217–224.

8. Naudts, B.; Kallel, L. A comparison of predictive measures of problem difficulty in evolutionary algorithms. IEEE Trans. Evol. Comput. 2000, 4, 1–15.

9. Lee, J.; Kim, Y.H. Importance of finding a good basis in binary representation. In Proceedings of the Genetic and Evolutionary Computation Conference Companion, Kyoto, Japan, 15–19 July 2018; pp. 49–50.

10. Lee, J.; Kim, Y.H. Epistasis-based basis estimation method for simplifying the problem space of an evolutionary search in binary representation. Complexity 2019.

11. Meyer, C.D. Matrix Analysis and Applied Linear Algebra; SIAM: Philadelphia, PA, USA, 2000; Volume 71.

12. Hirsch, M.W.; Devaney, R.L.; Smale, S. Differential Equations, Dynamical Systems, and Linear Algebra; Academic Press: Cambridge, MA, USA, 1974; Volume 60.

13. Davidor, Y. Epistasis variance: Suitability of a representation to genetic algorithms. Complex Syst. 1990, 4, 369–383.

14. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

15. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

16. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

17. Smolensky, P. Information Processing in Dynamical Systems: Foundations of Harmony Theory; Technical Report; Colorado Univ at Boulder Dept of Computer Science: Boulder, CO, USA, 1986.

18. Ong, Y.S.; Nair, P.B.; Keane, A.J. Evolutionary optimization of computationally expensive problems via surrogate modeling. AIAA J. 2003, 41, 687–696.

19. Sreekanth, J.; Datta, B. Multi-objective management of saltwater intrusion in coastal aquifers using genetic programming and modular neural network based surrogate models. J. Hydrol. 2010, 393, 245–256.

20. Eason, J.; Cremaschi, S. Adaptive sequential sampling for surrogate model generation with artificial neural networks. Comput. Chem. Eng. 2014, 68, 220–232.

21. Lim, D.; Jin, Y.; Ong, Y.S.; Sendhoff, B. Generalizing surrogate-assisted evolutionary computation. IEEE Trans. Evol. Comput. 2009, 14, 329–355.

22. Amouzgar, K.; Bandaru, S.; Ng, A.H. Radial basis functions with a priori bias as surrogate models: A comparative study. Eng. Appl. Artif. Intell. 2018, 71, 28–44.

23. Kattan, A.; Galvan, E. Evolving radial basis function networks via GP for estimating fitness values using surrogate models. In Proceedings of the IEEE Congress on Evolutionary Computation, Brisbane, Australia, 10–15 June 2012.

24. Lange, K.; Hunter, D.R.; Yang, I. Optimization transfer using surrogate objective functions. J. Comput. Graph. Stat. 2000, 9, 1–20.

25. Manzoni, L.; Papetti, D.M.; Cazzaniga, P.; Spolaor, S.; Mauri, G.; Besozzi, D.; Nobile, M.S. Surfing on fitness landscapes: A boost on optimization by Fourier surrogate modeling. Entropy 2020, 22, 285.

26. Yu, D.P.; Kim, Y.H. Predictability on performance of surrogate-assisted evolutionary algorithm according to problem dimension. In Proceedings of the Genetic and Evolutionary Computation Conference Companion, Prague, Czech Republic, 13–17 July 2019; pp. 91–92.

27. Verel, S.; Derbel, B.; Liefooghe, A.; Aguirre, H.; Tanaka, K. A surrogate model based on Walsh decomposition for pseudo-Boolean functions. In International Conference on Parallel Problem Solving from Nature; Springer: Berlin/Heidelberg, Germany, 2018; pp. 181–193.

28. Leprêtre, F.; Verel, S.; Fonlupt, C.; Marion, V. Walsh functions as surrogate model for pseudo-Boolean optimization problems. In Proceedings of the Genetic and Evolutionary Computation Conference, Prague, Czech Republic, 13–17 July 2019; pp. 303–311.

29. Swingler, K. Learning and searching pseudo-Boolean surrogate functions from small samples. Evol. Comput. 2020, 28, 317–338.

30. Zhu, Y.; Zabaras, N. Bayesian deep convolutional encoder–decoder networks for surrogate modeling and uncertainty quantification. J. Comput. Phys. 2018, 366, 415–447.

31. Zhu, Y.; Zabaras, N.; Koutsourelakis, P.S.; Perdikaris, P. Physics-constrained deep learning for high-dimensional surrogate modeling and uncertainty quantification without labeled data. J. Comput. Phys. 2019, 394, 56–81.

32. Karavolos, D.; Liapis, A.; Yannakakis, G.N. Using a surrogate model of gameplay for automated level design. In Proceedings of the 2018 IEEE Conference on Computational Intelligence and Games (CIG), Maastricht, The Netherlands, 14–17 August 2018; pp. 1–8.

33. Melo, A.; Cóstola, D.; Lamberts, R.; Hensen, J. Development of surrogate models using artificial neural network for building shell energy labelling. Energy Policy 2014, 69, 457–466.

34. Kourakos, G.; Mantoglou, A. Pumping optimization of coastal aquifers based on evolutionary algorithms and surrogate modular neural network models. Adv. Water Resour. 2009, 32, 507–521.

35. Kim, H.J.; Kim, Y.H. A surrogate model using deep neural networks for optimal oil skimmer assignment. In Proceedings of the Genetic and Evolutionary Computation Conference Companion, Cancun, Mexico, 8–12 July 2020; pp. 39–40.

36. Kauffman, S.A.; Weinberger, E.D. The NK model of rugged fitness landscapes and its application to maturation of the immune response. J. Theor. Biol. 1989, 141, 211–245.

37. Weinberger, E.D. NP Completeness of Kauffman’s NK Model, a Tuneably Rugged Fitness Landscape; Santa Fe Institute Technical Reports; Santa Fe Institute: Santa Fe, NM, USA, 1996.

38. Yoon, Y.; Kim, Y.H. A mathematical design of genetic operators on GL_n(ℤ_2). Math. Probl. Eng. 2014, 2014.

39. Wagner, R.A.; Fischer, M.J. The string-to-string correction problem. J. ACM (JACM) 1974, 21, 168–173.

40. Kim, Y.H.; Lee, J.; Kim, Y.H. Predictive model for epistasis-based basis evaluation on pseudo-Boolean function using deep neural networks. In Proceedings of the Genetic and Evolutionary Computation Conference Companion, Prague, Czech Republic, 13–17 July 2019; pp. 61–62.

41. Kim, Y.H.; Kim, Y.H. Finding a better basis on binary representation through DNN-based epistasis estimation. In Proceedings of the Genetic and Evolutionary Computation Conference Companion, Cancun, Mexico, 8–12 July 2020; pp. 229–230.

42. Dahl, G.E.; Sainath, T.N.; Hinton, G.E. Improving deep neural networks for LVCSR using rectified linear units and dropout. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 8609–8613.

43. Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010; pp. 249–256.

44. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

45. Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).