Mean Shift versus Variance Inflation Approach for Outlier Detection—A Comparative Study

Abstract

1. Introduction

- Definition of the generally accepted null model and specification of alternative MS and VI models (Section 2). Multiple outliers are allowed for in both alternative models to keep the models equivalent.

- True maximization of the likelihood functions of the null and alternative models, not only for the common MS model but also for the VI model. That is, we do not resort to the restricted maximum likelihood (REML) approach of Thompson [11], Gumedze et al. [12], and Gumedze [13]. This is important for an insightful comparison of MS and VI (Section 3).

- Application of the likelihood ratio (LR) test for outlier detection by hypothesis testing, and derivation of the test statistics for both the MS and the VI model. For this purpose, a new, rigorous LR test in the VI model is developed and compared with the equivalent test in the MS model; this comparison is new as well (Section 3).

- Comparison of both approaches using the illustrative example of repeated observations, which is worked out in full detail (Section 4).
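As a compact reference for the first item above, the three models can be written in one common notation. Apart from the mean shift $\nabla$, the symbols used here ($A$, $x$, $C$, $\Sigma$, $\Sigma_\tau$, $\tau_i$) are assumptions chosen for illustration; this is a sketch of the usual Gauss-Markov formulation and not necessarily the exact specification given in Section 2.

```latex
\begin{align*}
H_0 &: \; y = A x + e, & e &\sim N(0, \Sigma), \\
H_{\mathrm{MS}} &: \; y = A x + C \nabla + e, & e &\sim N(0, \Sigma), \quad \nabla \neq 0, \\
H_{\mathrm{VI}} &: \; y = A x + e, & e &\sim N(0, \Sigma_\tau).
\end{align*}
```

In this sketch, the columns of $C$ are unit vectors selecting the $m$ suspected observations, and, for a diagonal $\Sigma$, $\Sigma_\tau$ equals $\Sigma$ except that the variance of each suspected observation $i$ is multiplied by a factor $\tau_i > 1$.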

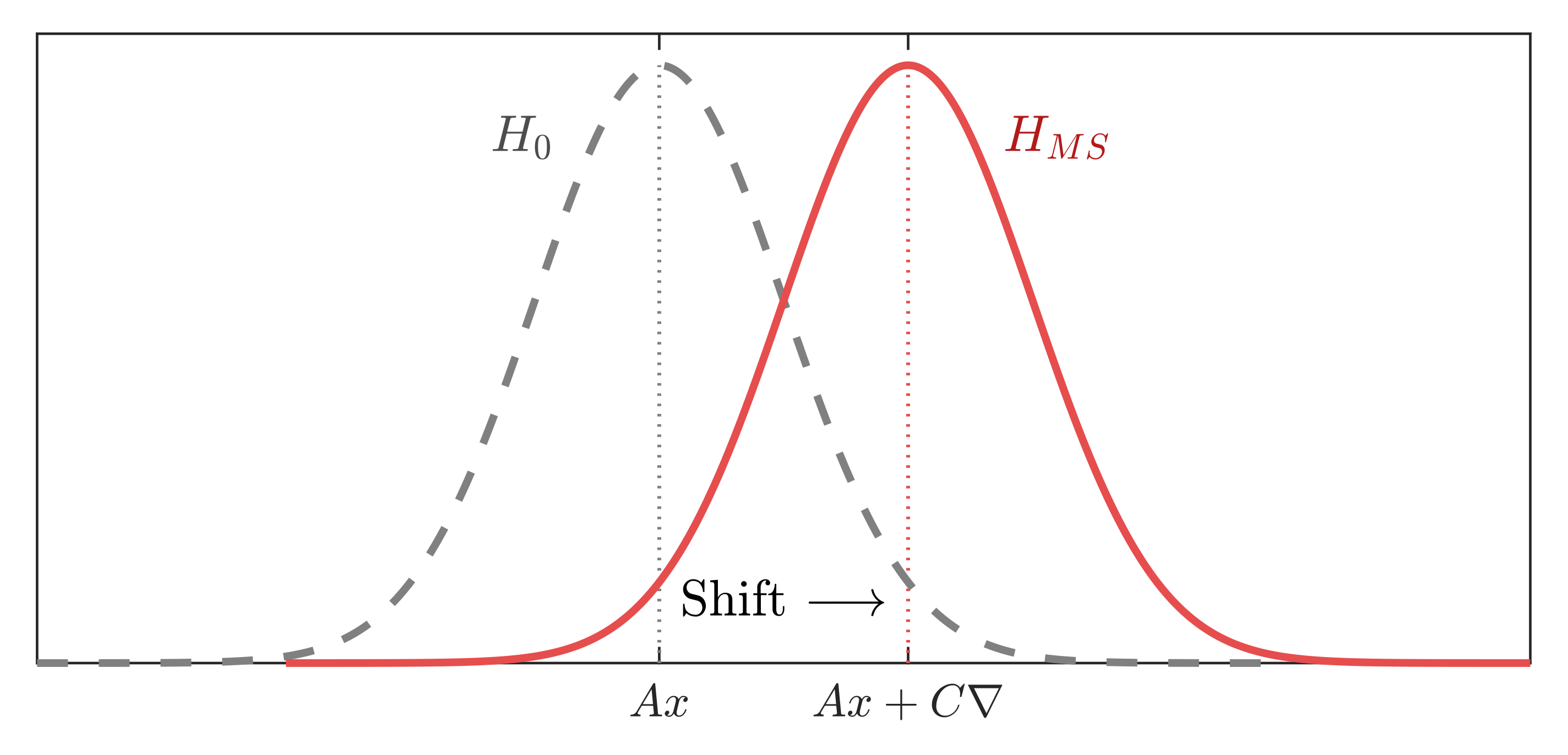

2. Null Model, Mean Shift Model, and Variance Inflation Model

3. Outlier Detection by Hypothesis Tests

3.1. Mean Shift Model

- has the least probability of type 2 decision error (failure to reject the null hypothesis when it is false) (most powerful);

- is independent of ∇ (uniform); and,

- but only for some transformed test problem (invariant).
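For illustration, the MS-model test can be sketched in the simplest setting: repeated observations of one quantity, a known standard deviation, and one suspected outlier at a known index. Under these assumptions the LR statistic is the squared normalized residual, which follows a chi-square distribution with one degree of freedom under the null model. The function names and the hard-coded critical value (the 0.95 quantile of that distribution) are choices made for this sketch only.

```python
import math

CRIT_CHI2_1_ALPHA_005 = 3.841459  # 0.95 quantile of chi-square with 1 d.o.f.


def ms_test_statistic(y, k, sigma):
    """Squared normalized residual of observation k (repeated observations).

    Assumes independent observations with common known standard deviation.
    """
    n = len(y)
    mean = sum(y) / n                          # null-model estimate of the mean
    residual = y[k] - mean                     # residual of the suspected observation
    residual_sd = sigma * math.sqrt(1.0 - 1.0 / n)
    w = residual / residual_sd                 # normalized residual
    return w * w


def ms_test_rejects(y, k, sigma, crit=CRIT_CHI2_1_ALPHA_005):
    """True if the null model is rejected in favor of a mean shift at index k."""
    return ms_test_statistic(y, k, sigma) > crit


if __name__ == "__main__":
    obs = [0.1, -0.2, 0.0, 3.0, 0.1]
    print(ms_test_statistic(obs, 3, 1.0), ms_test_rejects(obs, 3, 1.0))
```

With an unknown variance or several suspected outliers the statistic and its distribution change, so this sketch covers only the stated special case.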

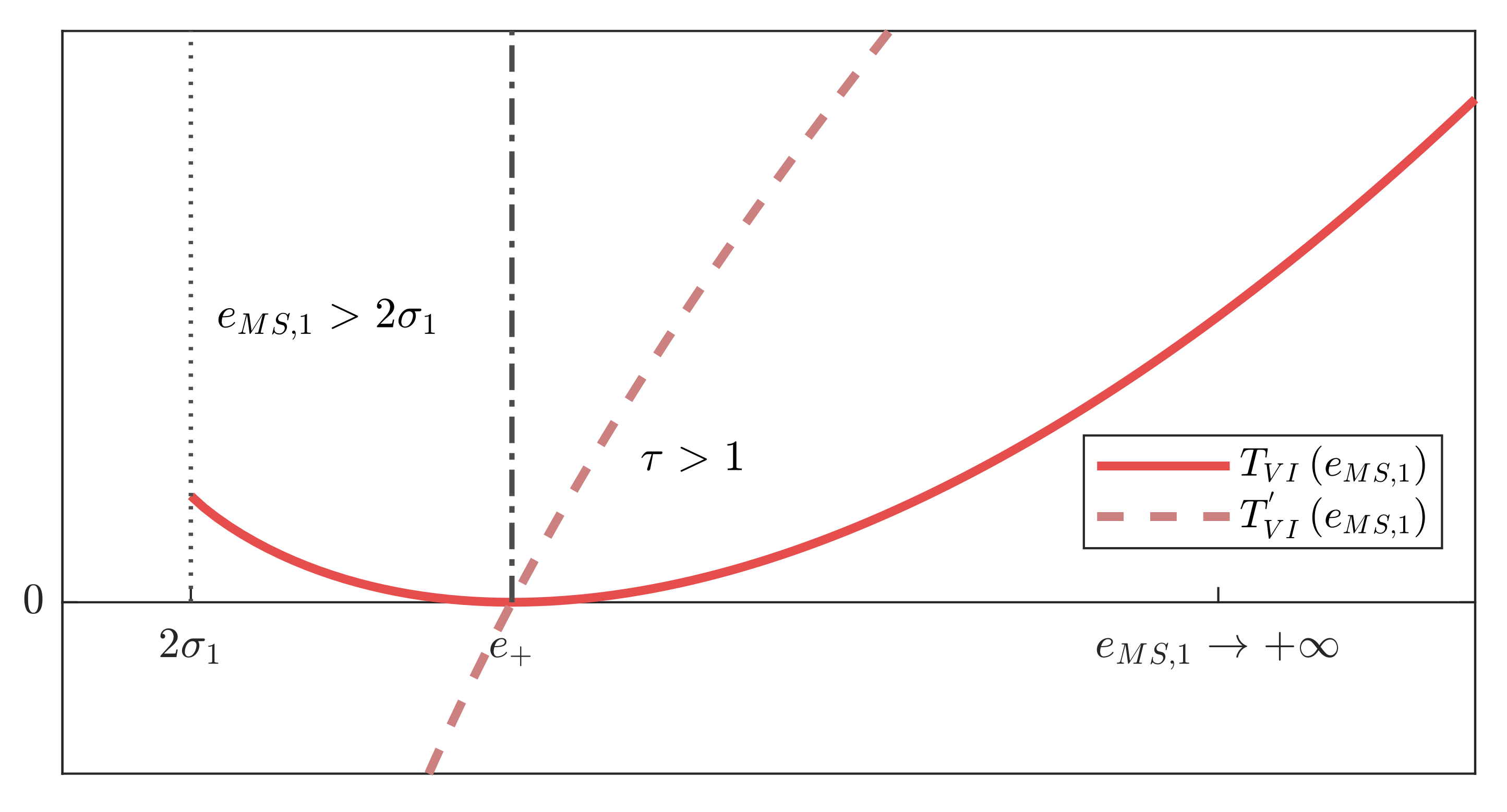

3.2. Variance Inflation Model

4. Repeated Observations

4.1. Mean Shift Model

4.2. Variance Inflation Model—General Considerations

4.3. Variance Inflation Model—Test for One Outlier

4.4. Variance Inflation Model—Test for Two Outliers

- both below , if , or

- both above , if , or

- both between and , if .

- (16) has no local minimum, and if it has, that

- or vice versa

4.5. Outlier Identification

5. Conclusions

- strived for a true (non-restricted) maximization of the likelihood function;

- allowed for multiple outliers;

- fully worked out the case of repeated observations; and,

- computed the corresponding test power by the Monte Carlo (MC) method for the first time.
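The last item can be illustrated with a minimal Monte Carlo sketch of test power. It assumes the simplest MS setting (repeated observations, known standard deviation, one mean shift at index 0, test on the squared normalized residual) because the result can then be checked against a closed form. The function names, sample size, and seed are hypothetical choices, not values from the study.

```python
import math
import numpy as np


def mc_power(n, shift, sigma, crit, trials=20000, seed=0):
    """Monte Carlo estimate of the rejection rate under a mean shift at index 0."""
    rng = np.random.default_rng(seed)
    y = rng.standard_normal((trials, n)) * sigma
    y[:, 0] += shift                              # inject the mean shift
    residual = y[:, 0] - y.mean(axis=1)           # residual w.r.t. the sample mean
    w = residual / (sigma * math.sqrt(1.0 - 1.0 / n))
    return float(np.mean(w * w > crit))


def analytic_power(n, shift, sigma, crit):
    """Closed-form power: w is normal with mean delta and unit variance."""
    def phi(x):  # standard normal CDF
        return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

    delta = shift * math.sqrt((n - 1) / n) / sigma
    root = math.sqrt(crit)
    return 1.0 - phi(root - delta) + phi(-root - delta)


if __name__ == "__main__":
    crit = 3.841459  # 0.95 quantile of chi-square with 1 d.o.f.
    print(mc_power(5, 3.0, 1.0, crit), analytic_power(5, 3.0, 1.0, crit))
```

For tests without a closed-form distribution only the simulation part remains available, which is why the agreement check is useful here as a sanity test of the simulation itself.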

- the maximization of the likelihood function requires the solution of a system of u polynomial equations of degree , where u is the number of model parameters and m is the number of suspected outliers;

- it is neither guaranteed that the likelihood function actually has such a local maximum, nor that it is unique;

- the maximum might be at a point where some variance is deflated rather than inflated. It is debatable what the result of the test should be in such a case;

- the critical value of this test must be computed numerically by Monte Carlo integration. Moreover, this must be done separately for each model; and,

- there is an upper limit (19) for the choice of the critical value, which may become small in some cases.
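Several of these points can be made concrete in a deliberately small setting that is assumed here for illustration: repeated observations with known standard deviation $\sigma$ and one suspected observation $k$ whose variance is multiplied by $\tau$. With $a = 1/(n-1)$, $d$ the difference between $y_k$ and the mean of the other observations, and $q = d^2/\sigma^2$, profiling out the mean gives the LR statistic $T(\tau) = q\,[1/(1+a) - 1/(\tau+a)] - \ln\tau$, whose stationary points solve $\tau^2 + (2a - q)\tau + a^2 = 0$. Real roots exist only if $q \ge 4a$; the larger root is the local maximum, and it exceeds 1 only if $q > (1+a)^2$. The function names and the convention of setting the statistic to zero when no inflated local maximum exists are assumptions of this sketch.

```python
import math
import numpy as np


def vi_local_max(y, k, sigma):
    """Return (tau_hat, T) at the local maximum of T(tau), or (None, 0.0)."""
    y = np.asarray(y, dtype=float)
    n = y.size
    a = 1.0 / (n - 1)
    d = y[k] - np.delete(y, k).mean()      # suspected value minus mean of the rest
    q = (d / sigma) ** 2
    if q <= 4.0 * a:                       # no local maximum exists
        return None, 0.0
    tau = 0.5 * ((q - 2.0 * a) + math.sqrt(q * (q - 4.0 * a)))  # larger root
    t = q * (1.0 / (1.0 + a) - 1.0 / (tau + a)) - math.log(tau)
    return tau, t


def mc_critical_value(n, alpha, trials=20000, seed=0):
    """Monte Carlo (1 - alpha) quantile of T under the null model.

    Assumed convention: T = 0 unless an inflated (tau > 1) local maximum exists.
    """
    rng = np.random.default_rng(seed)
    stats = np.zeros(trials)
    for i in range(trials):
        tau, t = vi_local_max(rng.standard_normal(n), 0, 1.0)
        if tau is not None and tau > 1.0:
            stats[i] = t
    return float(np.quantile(stats, 1.0 - alpha))


if __name__ == "__main__":
    print(vi_local_max([3.0, 0, 0, 0, 0], 0, 1.0))   # inflated local maximum
    print(vi_local_max([1.1, 0, 0, 0, 0], 0, 1.0))   # deflated local maximum
    print(vi_local_max([0.5, 0, 0, 0, 0], 0, 1.0))   # no local maximum
    print(mc_critical_value(5, 0.05, trials=5000))
```

Under the assumed convention the simulated quantile collapses to zero once the chosen error probability exceeds the share of null samples that have an inflated local maximum, which shows one way in which a bound on the usable critical value can arise in such a test.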

Author Contributions

Funding

Conflicts of Interest

Appendix A

References

1. Rofatto, V.F.; Matsuoka, M.T.; Klein, I.; Veronez, M.R.; Bonimani, M.L.; Lehmann, R. A half-century of Baarda’s concept of reliability: A review, new perspectives, and applications. Surv. Rev. 2018, 1–17.

2. Baarda, W. A Testing Procedure for Use in Geodetic Networks, 2nd ed.; Netherlands Geodetic Commission, Publication on Geodesy: Delft, The Netherlands, 1968.

3. Teunissen, P.J.G. Distributional theory for the DIA method. J. Geod. 2018, 92, 59–80.

4. Lehmann, R. On the formulation of the alternative hypothesis for geodetic outlier detection. J. Geod. 2013, 87, 373–386.

5. Lehmann, R.; Lösler, M. Multiple outlier detection: Hypothesis tests versus model selection by information criteria. J. Surv. Eng. 2016, 142, 04016017.

6. Lehmann, R.; Lösler, M. Congruence analysis of geodetic networks—Hypothesis tests versus model selection by information criteria. J. Appl. Geod. 2017, 11.

7. Teunissen, P.J.G. Testing Theory—An Introduction, 2nd ed.; Series of Mathematical Geodesy and Positioning; VSSD: Delft, The Netherlands, 2006.

8. Kargoll, B. On the Theory and Application of Model Misspecification Tests in Geodesy; C 674; German Geodetic Commission: Munich, Germany, 2012.

9. Bhar, L.; Gupta, V. Study of outliers under variance-inflation model in experimental designs. J. Indian Soc. Agric. Stat. 2003, 56, 142–154.

10. Cook, R.D. Influential observations in linear regression. J. Am. Stat. Assoc. 1979, 74, 169–174.

11. Thompson, R. A note on restricted maximum likelihood estimation with an alternative outlier model. J. R. Stat. Soc. Ser. B (Methodological) 1985, 47, 53–55.

12. Gumedze, F.N.; Welham, S.J.; Gogel, B.J.; Thompson, R. A variance shift model for detection of outliers in the linear mixed model. Comput. Stat. Data Anal. 2010, 54, 2128–2144.

13. Gumedze, F.N. Use of likelihood ratio tests to detect outliers under the variance shift outlier model. J. Appl. Stat. 2018.

14. Koch, K.R.; Kargoll, B. Expectation maximization algorithm for the variance-inflation model by applying the t-distribution. J. Appl. Geod. 2013, 7, 217–225.

15. Koch, K.R. Outlier detection for the nonlinear Gauss Helmert model with variance components by the expectation maximization algorithm. J. Appl. Geod. 2014, 8, 185–194.

16. Koch, K.R. Parameter Estimation and Hypothesis Testing in Linear Models; Springer: Berlin/Heidelberg, Germany, 1999.

17. James, G.; Witten, D.; Hastie, T.; Tibshirani, R. An Introduction to Statistical Learning: With Applications in R; Springer Texts in Statistics; Springer: New York, NY, USA, 2013.

18. Arnold, S.F. The Theory of Linear Models and Multivariate Analysis; Wiley Series in Probability and Statistics; John Wiley & Sons Inc.: New York, NY, USA, 1981.

19. Lehmann, R.; Voß-Böhme, A. On the statistical power of Baarda’s outlier test and some alternative. Surv. Rev. 2017, 7, 68–78.

20. Neyman, J.; Pearson, E.S. On the problem of the most efficient tests of statistical hypotheses. Philos. Trans. R. Soc. Lond. Ser. A Contain. Pap. Math. Phys. Character 1933, 231, 289–337.

21. Lehmann, R. Improved critical values for extreme normalized and studentized residuals in Gauss-Markov models. J. Geod. 2012, 86, 1137–1146.

22. Sherman, J.; Morrison, W.J. Adjustment of an inverse matrix corresponding to a change in one element of a given matrix. Ann. Math. Stat. 1950, 21, 124–127.

23. Nocedal, J.; Wright, S.J. Numerical Optimization, 2nd ed.; Springer: New York, NY, USA, 2006.

24. Prussing, J.E. The principal minor test for semidefinite matrices. J. Guid. Control Dyn. 1986, 9, 121–122.

25. Gilbert, G.T. Positive definite matrices and Sylvester’s criterion. Am. Math. Mon. 1991, 98, 44.

| | | |
|---|---|---|
| 0.05 | 0.317 | 1.50 |
| 0.10 | 0.315 | 1.49 |
| 0.20 | 0.308 | 1.47 |
| 0.30 | 0.297 | 1.43 |
| 0.50 | 0.264 | 1.33 |

| | | | Probabilities for | | | |
|---|---|---|---|---|---|---|
| | | | no min | 0 inflated variances | 1 inflated variance | 2 inflated variances |
| 0.01 | 0.52 | 4.68 | 0.03 | 0.45 | 0.42 | 0.10 |
| 0.02 | 0.51 | 4.36 | 0.06 | 0.42 | 0.41 | 0.10 |
| 0.03 | 0.50 | 4.11 | 0.09 | 0.40 | 0.40 | 0.10 |
| 0.05 | 0.48 | 3.69 | 0.15 | 0.36 | 0.38 | 0.10 |
| 0.10 | 0.43 | 3.00 | 0.29 | 0.27 | 0.33 | 0.10 |
| 0.20 | 0.33 | 2.32 | 0.53 | 0.14 | 0.24 | 0.09 |
| 0.30 | 0.24 | 1.82 | 0.69 | 0.06 | 0.16 | 0.09 |
| 0.50 | 0.12 | 0.97 | 0.88 | 0.01 | 0.05 | 0.07 |

| | | Test Power | | Probabilities for | | | |
|---|---|---|---|---|---|---|---|
| | | | | no min | 0 inflated variances | 1 inflated variance | 2 inflated variances |
| 1.0 | 1.0 | 0.05 | 0.05 | 0.29 | 0.27 | 0.33 | 0.10 |
| 1.2 | 1.2 | 0.08 | 0.08 | 0.27 | 0.24 | 0.35 | 0.13 |
| 1.5 | 1.5 | 0.13 | 0.12 | 0.24 | 0.21 | 0.38 | 0.17 |
| 2.0 | 2.0 | 0.22 | 0.20 | 0.21 | 0.17 | 0.39 | 0.23 |
| 3.0 | 3.0 | 0.36 | 0.32 | 0.18 | 0.12 | 0.39 | 0.31 |
| 5.0 | 5.0 | 0.55 | 0.49 | 0.14 | 0.08 | 0.36 | 0.42 |
| 1.0 | 1.5 | 0.09 | 0.09 | 0.27 | 0.24 | 0.36 | 0.13 |
| 1.0 | 3.0 | 0.21 | 0.19 | 0.24 | 0.18 | 0.41 | 0.18 |
| 2.0 | 3.0 | 0.30 | 0.26 | 0.19 | 0.14 | 0.40 | 0.27 |
| 2.0 | 5.0 | 0.40 | 0.36 | 0.18 | 0.11 | 0.40 | 0.31 |

| | Success Probabilities for | | Success Probabilities for | |
|---|---|---|---|---|
| | in (31) | in (59) | in (31) | in (59) |
| 1.0 | 0.022 | 0.021 | 0.005 | 0.005 |
| 2.0 | 0.065 | 0.061 | 0.028 | 0.026 |
| 3.0 | 0.108 | 0.104 | 0.061 | 0.057 |
| 4.0 | 0.150 | 0.144 | 0.095 | 0.089 |
| 5.0 | 0.185 | 0.180 | 0.128 | 0.121 |
| 6.0 | 0.218 | 0.212 | 0.156 | 0.151 |

| | Success Probabilities for | | Success Probabilities for | |
|---|---|---|---|---|
| | in (31) | in (59) | in (31) | in (59) |
| 0.0 | 0.022 | 0.021 | 0.005 | 0.005 |
| 1 | 0.081 | 0.065 | 0.035 | 0.028 |
| 2 | 0.325 | 0.286 | 0.226 | 0.202 |
| 3 | 0.683 | 0.652 | 0.606 | 0.586 |
| 4 | 0.912 | 0.902 | 0.892 | 0.886 |
| 5 | 0.985 | 0.984 | 0.984 | 0.984 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lehmann, R.; Lösler, M.; Neitzel, F. Mean Shift versus Variance Inflation Approach for Outlier Detection—A Comparative Study. Mathematics 2020, 8, 991. https://doi.org/10.3390/math8060991
