A GPU-Enabled Compact Genetic Algorithm for Very Large-Scale Optimization Problems

Abstract

1. Introduction

- We adapt the binary compact Genetic Algorithm in order to handle integer variables, represented in binary form.

- We present a fully GPU-enabled implementation of the compact Genetic Algorithm, where all the algorithmic operations (sampling, model update, solution evaluation, and comparison) can be optionally executed on the GPU.

- For the ILP problem, we include in the compact Genetic Algorithm custom (problem-specific) operators and a smart initialization technique inspired by Deb and Myburgh [4]. By acting on the probability vector (PV), these guide the sampling process towards feasible solutions.

- We show that on the OneMax problem our proposed GPU-enabled compact Genetic Algorithm obtains partial improvements over the results reported in [46]; on the casting scheduling problem, we obtain at least comparable (and in some cases better) results with respect to those in [4,6], despite a much more limited usage of computational resources.

2. Problem Formulations

2.1. OneMax

2.2. Casting Scheduling Problem

3. Proposed Algorithms

| Algorithm 1 Binary cGA: general structure. |

|

3.1. Binary cGA

3.1.1. Binary cGA-Base

- generateTrial(): This function samples, for each variable, a uniform random number in [0, 1] and compares it with the corresponding element of PV. If the random number is greater than the element of PV, the corresponding variable of trial is set to 1; otherwise, it is set to 0. Each variable is handled by a separate GPU thread.


- compete(): This function compares fitnessTrial and fitnessElite, and sets winner to 1 (0) if trial is better (worse) than elite. This operation is performed without GPU-parallelization.

- evaluate(): This function loops through all the variables of the argument and adds 1 to the fitness for each variable that is set to 1. This operation is performed without GPU-parallelization, since its output (the fitness of the argument) depends on all variables. Note: This function is specific to the OneMax problem.

- updatePV(): This function biases PV towards the winner between trial and elite, i.e., it makes it more likely that the next trial resembles the winner, by increasing or decreasing each element of PV accordingly (see Algorithm 2). Each variable is handled by a separate GPU thread.

| Algorithm 2 Binary cGA-Base: updatePV(). |

|
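The four functions above can be sketched sequentially as follows. This is a minimal CPU-only illustration, not the GPU implementation: the step size 1/virtualPopulation, the initial PV value of 0.5, the clamping of PV to [0, 1], and the tie-breaking in favor of elite are assumptions taken from the usual cGA scheme, since the body of Algorithm 2 is not reproduced in this section. Following the convention stated for generateTrial(), each PV element acts as the probability of sampling 0.

```python
import random

def generate_trial(pv, rng):
    # A bit is 1 when the uniform draw exceeds pv[i], so pv[i] acts as P(bit = 0).
    return [1 if rng.random() > p else 0 for p in pv]

def evaluate(solution):
    # OneMax fitness: number of variables set to 1.
    return sum(solution)

def compete(fitness_trial, fitness_elite):
    # 1 if trial is strictly better than elite, 0 otherwise (tie rule is an assumption).
    return 1 if fitness_trial > fitness_elite else 0

def update_pv(pv, trial, elite, winner, virtual_population):
    step = 1.0 / virtual_population  # assumed step size
    win, lose = (trial, elite) if winner == 1 else (elite, trial)
    for i in range(len(pv)):  # one GPU thread per variable in the paper
        if win[i] != lose[i]:
            # Winner holds a 1: lower P(bit = 0); winner holds a 0: raise it.
            delta = -step if win[i] == 1 else step
            pv[i] = min(1.0, max(0.0, pv[i] + delta))
    return pv

def cga_base(problem_size, virtual_population, iterations, seed=0):
    rng = random.Random(seed)
    pv = [0.5] * problem_size  # assumed uninformed start
    elite = generate_trial(pv, rng)
    fitness_elite = evaluate(elite)
    for _ in range(iterations):
        trial = generate_trial(pv, rng)
        fitness_trial = evaluate(trial)
        winner = compete(fitness_trial, fitness_elite)
        update_pv(pv, trial, elite, winner, virtual_population)
        if winner == 1:
            elite, fitness_elite = trial, fitness_trial
    return elite, fitness_elite, pv
```

Calling `cga_base(64, 10, 200)` returns the final elite, its fitness, and the probability vector; the loop body is the part that the paper maps onto GPU threads.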

3.1.2. Binary cGA-A100

- evaluate(): This function evaluates the argument by processing each sub-problem independently on the GPU threads. Each thread takes a portion of the argument and calculates its partial fitness. The operations executed by each thread, illustrated in the outer for loop in Algorithm 3, can be parallelized since the sub-problems operate over disjoint portions of the argument. The function getSubProblem() returns the ith sub-problem, i.e., a vector of 100 variables. Note that in this case evaluate() returns a vector, fitness, rather than a scalar. The partial result of each sub-problem is stored by each GPU thread in the corresponding position of fitness. Note: This function is specific to the OneMax problem.

- compete(): This function operates over the vectors fitnessTrial and fitnessElite returned by the evaluate() shown in Algorithm 3. As shown in Algorithm 4, each GPU thread simply determines the winner on its own sub-problem, i.e., it operates on a single index of fitnessTrial, fitnessElite, and winner, which are all vectors of size problemSize/100. Note: This function is specific to the OneMax problem.

- updatePV(): This function is implemented similarly to evaluate(). As shown in Algorithm 5, each GPU thread loads its portion of data, consisting of the corresponding sub-problems of elite and trial and the corresponding portions of winner and PV. After that, the procedure is similar to the one described for cGA-Base (see Algorithm 2), the only difference being that in this case the for loop in Algorithm 2 is performed within a single GPU thread and not in parallel. The ancillary function setPartialPV() updates PV with the result of the partial pPV. Note: This function is specific to the OneMax problem.

| Algorithm 3 Binary cGA-A100: evaluate(). |

|

| Algorithm 4 Binary cGA-A100: compete(). |

|

| Algorithm 5 Binary cGA-A100: updatePV(). |

|
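A sequential sketch of the three sub-problem-wise functions is given below. It assumes the same PV convention and 1/virtualPopulation step as cGA-Base, breaks ties in favor of elite, and exposes the sub-problem length as a hypothetical `sub` argument (100 in the paper) so that small inputs can be checked by hand. Each iteration of the outer loops corresponds to the work of one GPU thread.

```python
SUB = 100  # sub-problem length used by cGA-A100

def evaluate_blocks(solution, sub=SUB):
    # One partial OneMax fitness per sub-problem (a vector, not a scalar).
    return [sum(solution[s:s + sub]) for s in range(0, len(solution), sub)]

def compete_blocks(fitness_trial, fitness_elite):
    # Per sub-problem: 1 if trial wins, 0 otherwise (tie rule is an assumption).
    return [1 if t > e else 0 for t, e in zip(fitness_trial, fitness_elite)]

def update_pv_blocks(pv, trial, elite, winner, virtual_population, sub=SUB):
    step = 1.0 / virtual_population  # assumed step size
    for b, w in enumerate(winner):  # one "thread" per sub-problem
        for i in range(b * sub, min((b + 1) * sub, len(pv))):
            if trial[i] != elite[i]:
                win_bit = trial[i] if w == 1 else elite[i]
                # pv[i] acts as P(bit = 0): lower it when the local winner holds a 1.
                delta = -step if win_bit == 1 else step
                pv[i] = min(1.0, max(0.0, pv[i] + delta))
    return pv
```

Because every sub-problem touches a disjoint slice of PV, trial, and elite, the outer loop needs no synchronization, which is what makes the decomposition attractive on a GPU.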

3.1.3. Binary cGA-A1

- updatePV(): In this case, this function also includes the operations performed in evaluate() and compete(), and it turns out to be considerably simpler than in the previous cases. The process is shown in Algorithm 6. Since PV is updated only when the variables of trial and elite differ, each GPU thread checks whether one of the two variables is set to 1 and the other to 0. Consequently, PV is only ever reduced, which increases the probability of sampling 1. Finally, if the corresponding element of trial is set to 1, each GPU thread adds 1 to the fitnessTrial variable to calculate the total fitness. Note that in this case there is no need for winner, which reduces memory consumption. Note: This function is specific to the OneMax problem.

| Algorithm 6 Binary cGA-A1: updatePV(). |

|
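The fused update can be sketched in a few lines. The 1/virtualPopulation step and the lower clamp at 0 are assumptions, and how elite itself is refreshed is not described in this passage, so the sketch leaves it untouched.

```python
def update_pv_a1(pv, trial, elite, virtual_population):
    step = 1.0 / virtual_population  # assumed step size
    fitness_trial = 0
    for i in range(len(pv)):  # one GPU thread per variable in the paper
        if trial[i] != elite[i]:
            # Exactly one of the two bits is 1, and on OneMax that bit always wins,
            # so P(bit = 0) can only go down.
            pv[i] = max(0.0, pv[i] - step)
        # On the GPU this accumulation would be a thread-safe (atomic) add.
        fitness_trial += trial[i]
    return fitness_trial
```

With sub-problems of size 1 the winner is implied by the bits themselves, which is why no winner vector has to be stored.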

3.2. Discrete cGA

| Algorithm 7 Discrete cGA: specific structure for the cast scheduling problem. |

|

- smartInitialization(): This function performs the initialization process, which is divided into three steps. First, the function estimateH() estimates the number of heats H needed to reach the desired efficiency, as described in Section 2.2. This value is also used to define the size of elite and PV. Second, an elite of size N × H is created in initializeElite(), repaired by the two mutations, and evaluated. Finally, PV is created, with a size of N × H × 4 (four bits per variable), and processed as described in initializePV() below.

- initializeElite(): This function initializes the elite as described in [4], i.e., it creates N vectors of size H, initialized so as to respect as closely as possible the number of copies required for each object. This operation is GPU-parallelized in two steps: first, a vector of random numbers is created and a correction factor is calculated; second, the correction is applied to the vector. Afterwards, to repair any violated constraint, the elite is passed to the two mutation operators mutationOne() and mutationTwo(), and finally evaluated by evaluate(). The implementation of these functions is shown below.

- initializePV(): This function initializes the PV such that it generates solutions that satisfy the inequality constraints and are biased towards the elite. This initialization is performed by two functions. The first function, shown in Algorithm 8, blocks the PV values for the bits which, if set to 1, would cause the violation of an inequality constraint. For example, if the crucible size is 600 kg and an object weighs 200 kg, no more than three copies of that object can be cast without a penalty: in this case, the inhibitor blocks (i.e., sets to NaN) the third and fourth bits of the variable, preventing it from assuming values greater than 3 for that object. This operation is GPU-parallelized, with each thread checking a single element of PV. The second function modifies the PV values so as to produce solutions closer to the elite. It sets each PV element to one of two values, depending on whether the corresponding bit in the elite is 1 or 0, unless that element is blocked by inhibitor(), as shown in Algorithm 9.

- generateTrial(): This function is divided into four steps: generation of the trial, crossover, and two mutation operators that repair the trial. The problem-specific crossover and mutation operators were implemented as described in [6]. In addition, we made some modifications in order to parallelize the operators on the GPU and to save the information needed to simplify the subsequent calculation of the fitness. This information consists of two vectors, one of size H, called heatsTrial, and one of size N, called copiesTrial. The first one stores the available space in the crucible for each heat, and it is created during newTrial() in two steps: first, the vector is initialized with the crucible size; then, while trial is created, its values are decreased, as shown in Algorithm 10. Moreover, heatsTrial is also updated during crossover and mutations, as shown below. The second vector, copiesTrial, stores the total number of copies of each object cast by the trial, and is calculated during mutationOne() (see Algorithm 12). The other details of the four steps are presented below.

- newTrial(): As shown in Algorithm 10, this function is implemented such that each GPU thread handles one variable of trial and its corresponding four values of PV. More specifically, each thread samples 4 bits based on the corresponding probabilities of PV, converts them to an integer value in [0, 15], and assigns this value to the corresponding variable in trial. Finally, the element of heatsTrial associated with this variable is updated according to the value just assigned. Note that this last operation is implemented in a thread-safe way, as the same heat can be updated asynchronously by different threads.

- crossover(): As shown in Algorithm 11, this function is implemented such that each GPU thread handles one variable of trial. The crossover operator compares each heat of trial with the corresponding one in elite. If elite has a better heat (i.e., a higher value when both heats are negative, or a lower value when both are positive), then all the elite variables belonging to that heat are assigned to trial, and heatsTrial is updated accordingly.


- mutationOne(): The first mutation is meant to repair trial with respect to the equality constraint (see Equation (4)). The procedure is divided into two steps, as shown in Algorithm 12: firstly, the total number of copies cast by trial for each object, copiesTrial, is calculated, with each GPU thread handling one variable of trial and performing a thread-safe sum over copiesTrial. Next, for each object, if the copies required (stored in copies) are less than the ones that are cast (stored in copiesTrial), this means that some copies in excess should be removed from trial: to do that, first the heat with the greatest inequality violation (i.e., the lowest value of heatsTrial, returned by argmin()) is found, then the corresponding variable in trial is decreased by one, and heatsTrial and copiesTrial are updated accordingly. On the other hand, if the copies required are more than the ones that are cast, this means that some more copies should be added to trial: to do that, first the heat with the lowest crucible utilization (i.e., the highest value of available space, stored in heatsTrial, returned by argmax()) is found, then the corresponding variable in trial is increased by one, and heatsTrial and copiesTrial are updated accordingly. This operation is repeated until the equality constraint is satisfied. Note that the functions used to find the two heats, i.e., argmin() and argmax(), are GPU-parallelized. Moreover, during the repair process heatsTrial and copiesTrial vectors are updated in order to be reused later to calculate the fitness.

- mutationTwo(): The second mutation tries to repair the heats that use more space than is available in the crucible, as described in [4]. The procedure consists of finding two heats: the one with the greatest inequality violation, and the one with the greatest available space. A random object is then selected, removed from the first heat, and added to the second one, so that the total number of copies is preserved. These operations are repeated the number of times indicated by the parameter iterationLimit. In the original version in [4], this value was fixed throughout the computation (set to 30), whereas in our implementation, due to the compact nature of the algorithm, we needed to double this value at each iteration, starting from 30. This doubling leads to an exponential increase in the time spent in mutationTwo(), as shown in the next section. As for parallelization, as in mutationOne(), trial is loaded onto the GPU, and the argmin() and argmax() operations are then parallelized with a one-to-one mapping between GPU threads and variables.

- evaluate(): This function evaluates the solution in a time-efficient way, using the information already calculated during generateTrial(). The two penalty factors related to the inequality and equality constraints (see Equations (3) and (4)) are determined, respectively, through the heatsTrial and copiesTrial vectors, and added to fitnessTrial, as shown in Algorithm 13. The total time required is O(H + N), and it is further decreased by the parallelization over the H heats. Note that the second loop, over the N objects, is not parallelized, as N is typically much smaller than H.

- updatePV(): This function is parallelized over the size of PV (one bit per GPU thread) and operates similarly to Algorithm 2, the only difference being that each thread has to extract the corresponding bit from the variable before performing the update.

| Algorithm 8 Discrete cGA: inhibitor(). |

|
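The blocking rule described for inhibitor() can be illustrated as follows. This is a sketch under stated assumptions, not the paper's Algorithm 8: the flat PV layout (object-major, then heat, then bit), the least-significant-bit-first ordering, and the per-heat capacity list `crucible` are all hypothetical choices made for the example.

```python
import math

BITS = 4  # bits per integer variable, as in the discrete cGA

def inhibitor(pv, weights, crucible, n_heats, bits=BITS):
    # Assumed layout: pv[(j * n_heats + h) * bits + k] is bit k (value 2**k)
    # of the variable "copies of object j cast in heat h".
    for j, weight in enumerate(weights):
        for h in range(n_heats):
            for k in range(bits):  # one GPU thread per PV element in the paper
                # Setting this bit alone would already exceed the crucible size.
                if (1 << k) * weight > crucible[h]:
                    pv[(j * n_heats + h) * bits + k] = math.nan
    return pv
```

For the example in the text (one 200 kg object, one 600 kg crucible), `inhibitor([0.5] * 4, [200], [600], 1)` leaves the first two bits at 0.5 and blocks the third and fourth, so the variable cannot exceed 3.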

| Algorithm 9 Discrete cGA: initializePV(). |

|

| Algorithm 10 Discrete cGA: newTrial(). |

|
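A sequential sketch of the sampling step in newTrial() follows. It reuses the same assumed PV layout as above (object-major, then heat, then bit, least significant bit first), treats blocked (NaN) elements as bits fixed to 0, and keeps the convention that a bit is 1 when the random draw exceeds the PV element; `crucible` is a hypothetical per-heat capacity list.

```python
import math
import random

def new_trial(pv, weights, crucible, n_heats, rng, bits=4):
    n_objects = len(weights)
    heats_trial = list(crucible)  # available space per heat, starts at capacity
    trial = [0] * (n_objects * n_heats)
    for j in range(n_objects):
        for h in range(n_heats):  # one GPU thread per (object, heat) variable
            v = j * n_heats + h
            value = 0
            for k in range(bits):
                p = pv[v * bits + k]
                # Blocked bits stay 0; otherwise the bit is 1 when the draw exceeds p.
                if not math.isnan(p) and rng.random() > p:
                    value |= 1 << k
            trial[v] = value
            # On the GPU this decrement must be atomic: several threads share heat h.
            heats_trial[h] -= value * weights[j]
    return trial, heats_trial
```

A negative entry of `heats_trial` after sampling signals a heat whose contents exceed the crucible size, which is the information the later repair and evaluation steps rely on.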

| Algorithm 11 Discrete cGA: crossover(). |

|
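The heat-wise comparison used by crossover() can be sketched as below. Two points are assumptions rather than statements from the text: `heats_elite` (the available space per heat of elite) is a hypothetical input, and heats with mixed signs are left unchanged because the description only covers the cases where both heats are negative or both are positive (zero is treated here as non-negative).

```python
def elite_heat_is_better(trial_space, elite_space):
    if trial_space < 0 and elite_space < 0:
        # Both heats overflow: the smaller violation (higher value) is better.
        return elite_space > trial_space
    if trial_space >= 0 and elite_space >= 0:
        # Both heats fit: the fuller crucible (lower remaining space) is better.
        return elite_space < trial_space
    return False  # mixed signs: not specified in the text, so nothing is copied

def crossover(trial, heats_trial, elite, heats_elite, n_objects, n_heats):
    for h in range(n_heats):
        if elite_heat_is_better(heats_trial[h], heats_elite[h]):
            for j in range(n_objects):  # copy every variable belonging to heat h
                trial[j * n_heats + h] = elite[j * n_heats + h]
            heats_trial[h] = heats_elite[h]
    return trial, heats_trial
```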

| Algorithm 12 Discrete cGA: mutationOne(). |

|
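The repair loop of mutationOne() can be sketched sequentially as follows. The restriction of argmin() to heats that actually contain the object, and of argmax() to variables below the 4-bit maximum (the hypothetical `max_value` argument), are safeguards added for this example; the text does not state how these corner cases are handled.

```python
def mutation_one(trial, heats_trial, copies, weights, n_heats, max_value=15):
    n_objects = len(weights)
    # Step 1: copies cast per object (a thread-safe sum on the GPU).
    copies_trial = [sum(trial[j * n_heats:(j + 1) * n_heats]) for j in range(n_objects)]
    # Step 2: add or remove one copy at a time until the equality constraint holds.
    for j in range(n_objects):
        row = j * n_heats
        while copies_trial[j] != copies[j]:
            if copies_trial[j] > copies[j]:
                # Too many copies: remove one from the most overloaded heat (argmin).
                pool = [h for h in range(n_heats) if trial[row + h] > 0]
                h = min(pool, key=lambda x: heats_trial[x])
                delta = -1
            else:
                # Too few copies: add one to the heat with the most free space (argmax).
                pool = [h for h in range(n_heats) if trial[row + h] < max_value]
                h = max(pool, key=lambda x: heats_trial[x])
                delta = 1
            trial[row + h] += delta
            copies_trial[j] += delta
            heats_trial[h] -= delta * weights[j]
    return trial, heats_trial, copies_trial
```

Keeping `heats_trial` and `copies_trial` up to date inside the loop is what lets the later evaluation avoid a second pass over the whole solution.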

| Algorithm 13 Discrete cGA: evaluate(). |

|
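The way evaluate() can reuse heatsTrial and copiesTrial is illustrated below. The exact penalty terms of Equations (3) and (4) are not reproduced in this section, so the sketch uses two stand-ins that are assumptions: the total overflow across heats for the inequality constraints, and the total absolute copy mismatch for the equality constraints. How these are weighted and added to fitnessTrial is left out.

```python
def constraint_violations(heats_trial, copies_trial, copies):
    # Inequality side: a negative available space means the heat exceeds its crucible.
    # This loop over H heats is the one parallelized on the GPU.
    overflow = sum(-space for space in heats_trial if space < 0)
    # Equality side: copies cast versus copies required, per object (loop over N).
    mismatch = sum(abs(cast - required) for cast, required in zip(copies_trial, copies))
    return overflow, mismatch
```

Both quantities are obtained in a single pass over H heats and N objects, with no need to revisit the N × H variables of the solution.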

4. Results

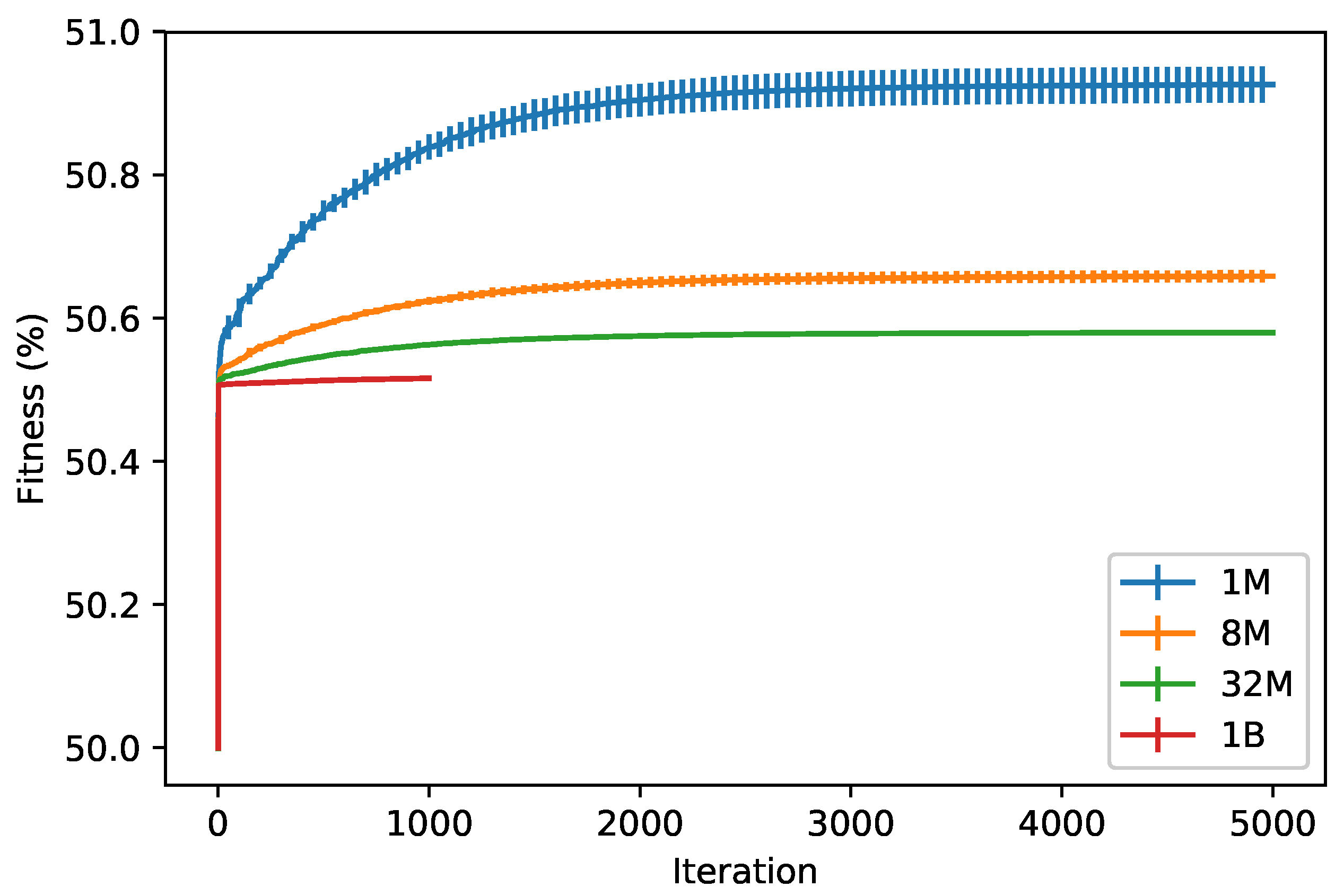

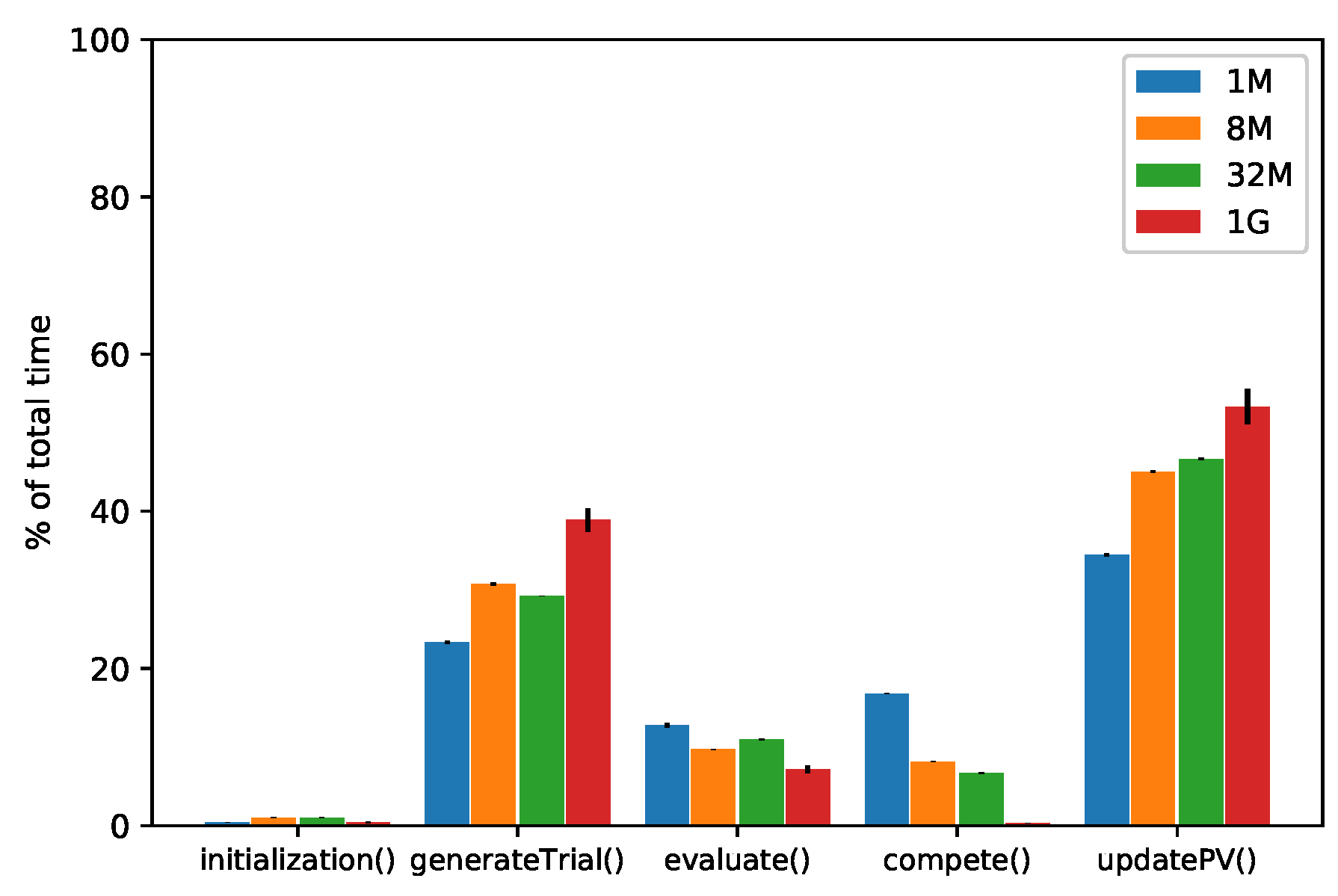

4.1. OneMax

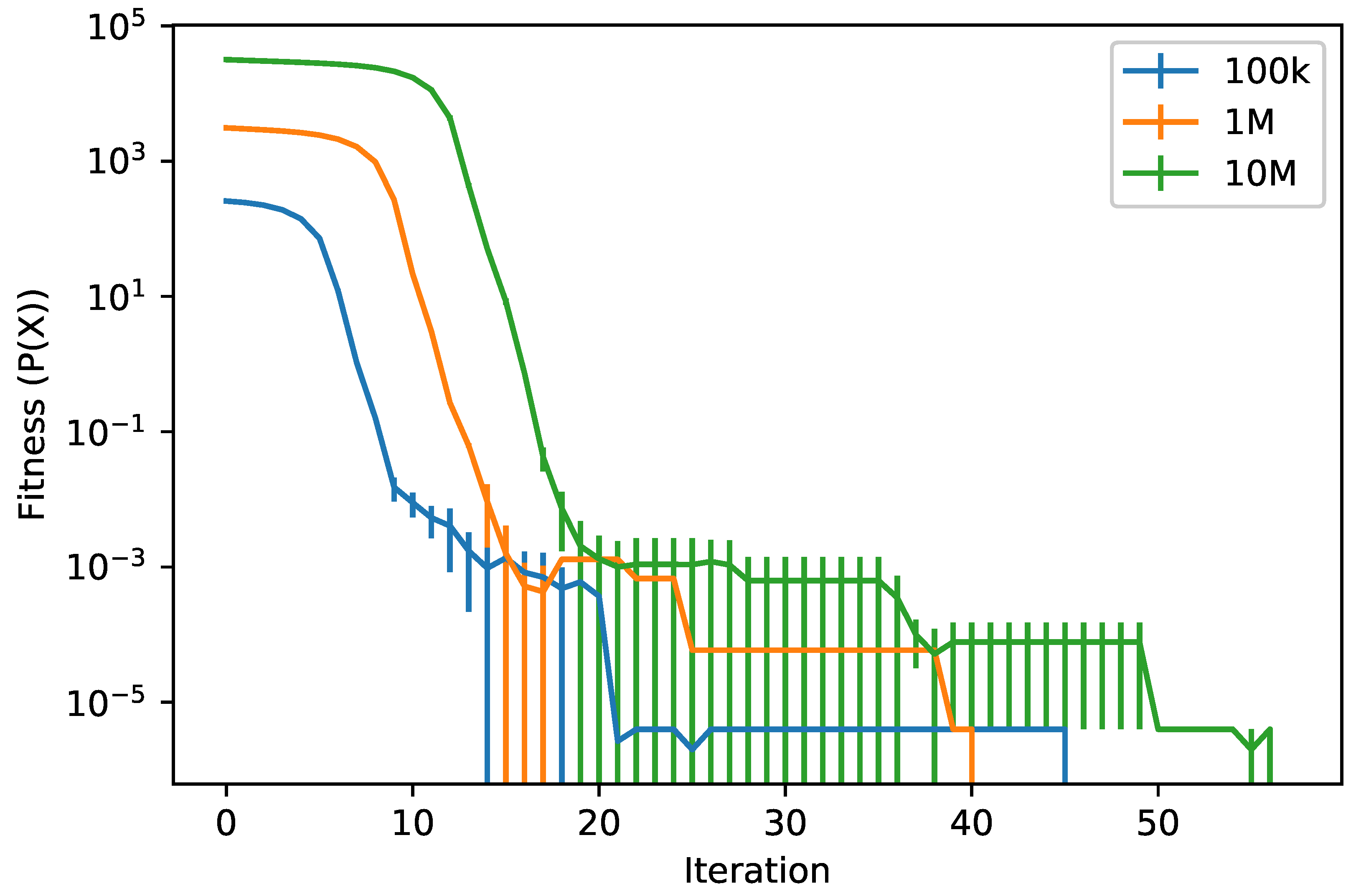

4.2. Casting Scheduling Problem

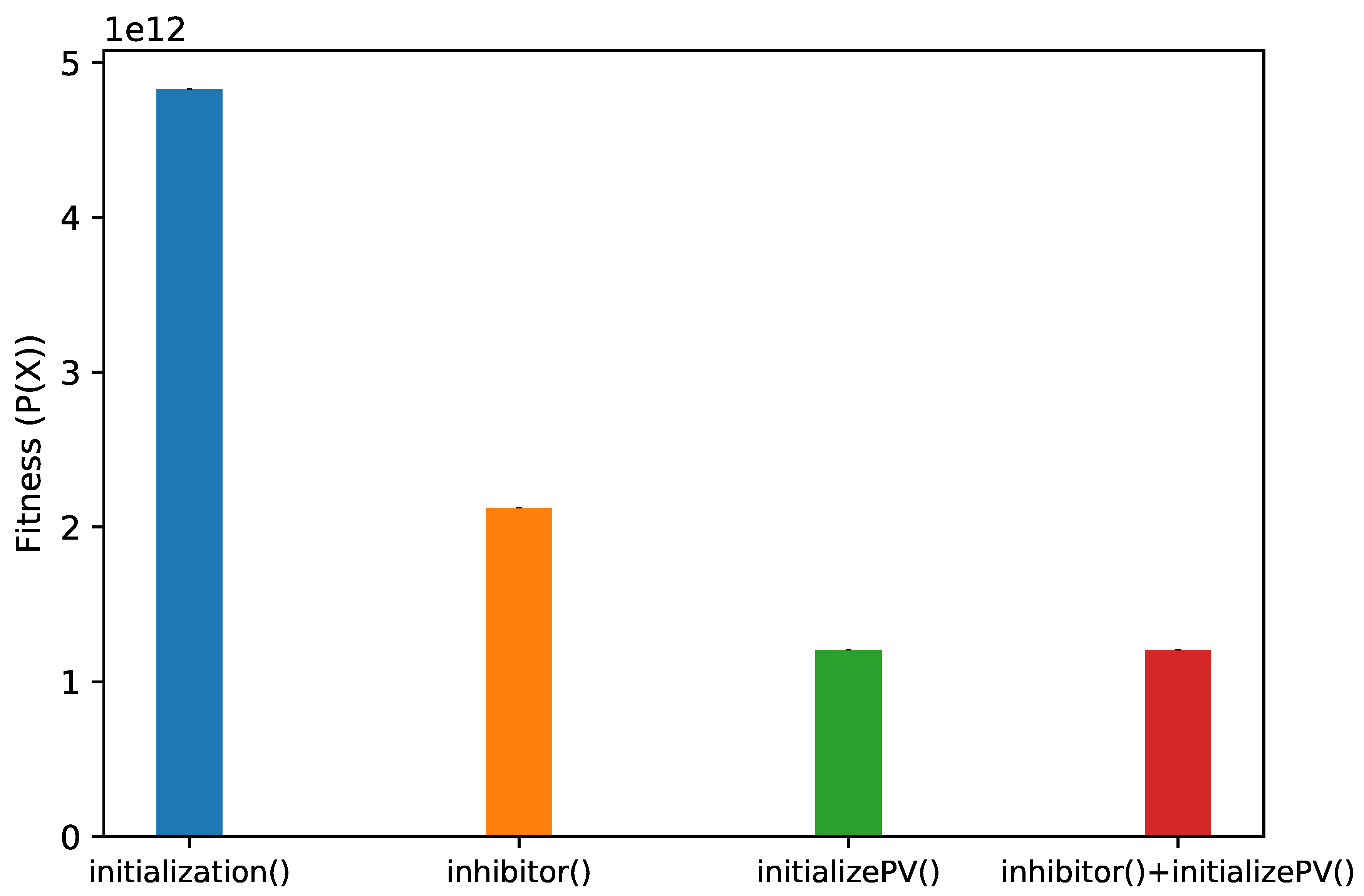

Effect of the Smart PV Initialization

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Additional Results on OneMax Problem

Appendix A.1. Discrete OneMax

- Results: We performed 10 runs on four dimensionalities, setting the virtualPopulation to 100. The maximum number of iterations was set to 5000 for problem instances up to 32M variables and 1000 for the 1B case. All experiments were executed on the Google® Colab service. The results obtained, reported in Table A1, are similar to the results reported in Section 4.1 for the binary OneMax problem, although the execution times are up to twice as long. The main reason for this increase is that in this case the algorithm handles 4 bits per variable in the newTrial() and updatePV() functions, instead of just one as in the binary cGA.

| | Discrete cGA | | | | Continuous cGA | | | |
|---|---|---|---|---|---|---|---|---|
| | 1M | 8M | 32M | 1B | 1M | 8M | 32M | 1B |
| Time (s) | 72.463 (2.038) | 395.806 (3.985) | 1958.932 (485.963) | 13,143.343 (3201.053) | 156.647 (1.487) | 985.936 (118.716) | 3366.755 (20.297) | 20,895.186 (4131.433) |
| Fitness (%) | 50.926 (0.0250) | 50.658 (0.008) | 50.579 (0.002) | 50.515 (0.0005) | 50.870 (0.039) | 50.102 (0.024) | 50.034 (0.0072) | 50.0055 (0.0003) |
| Iterations | 5000 | 5000 | 5000 | 1000 | 5000 | 5000 | 5000 | 1000 |

Appendix A.2. Continuous OneMax


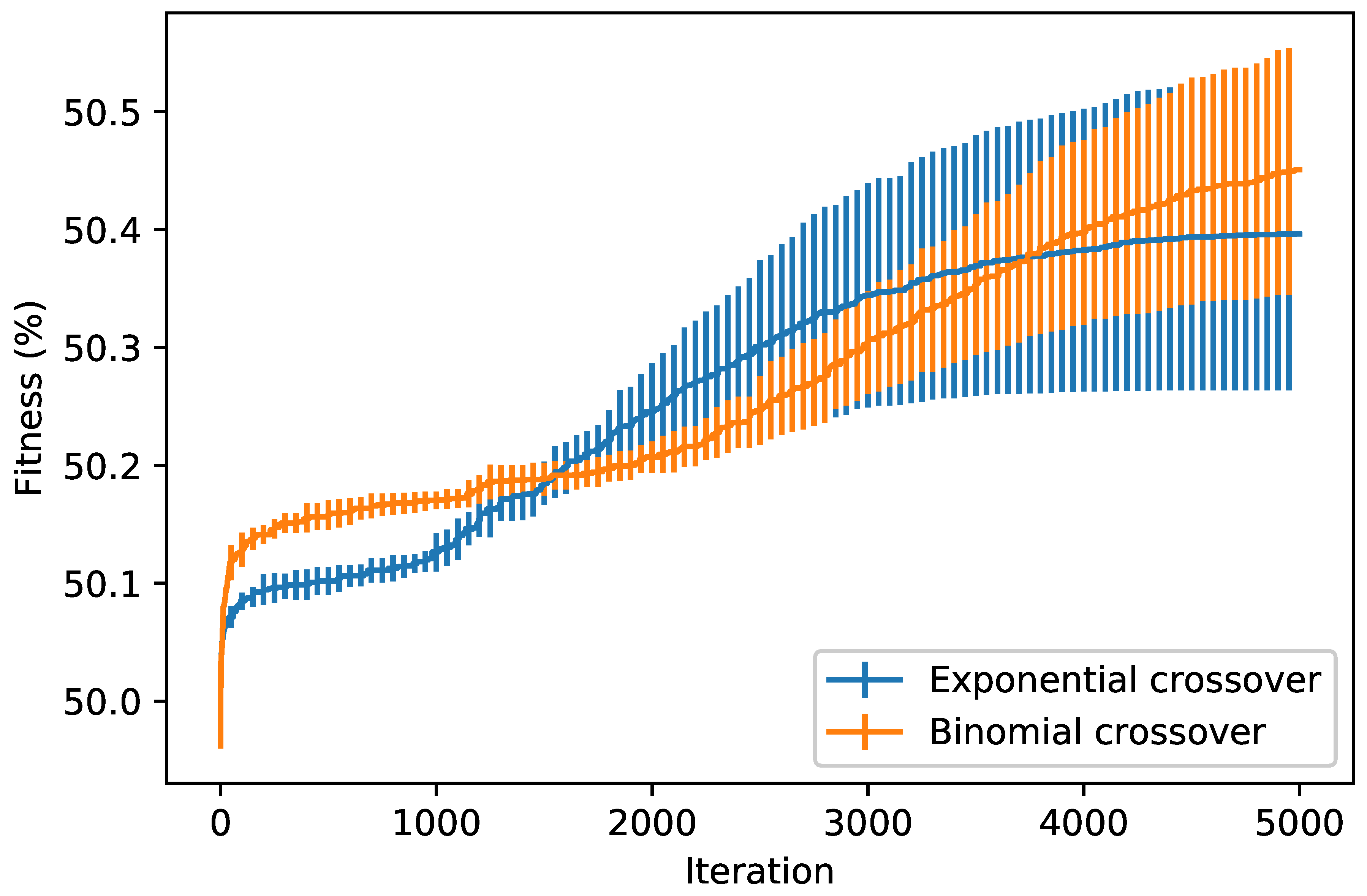

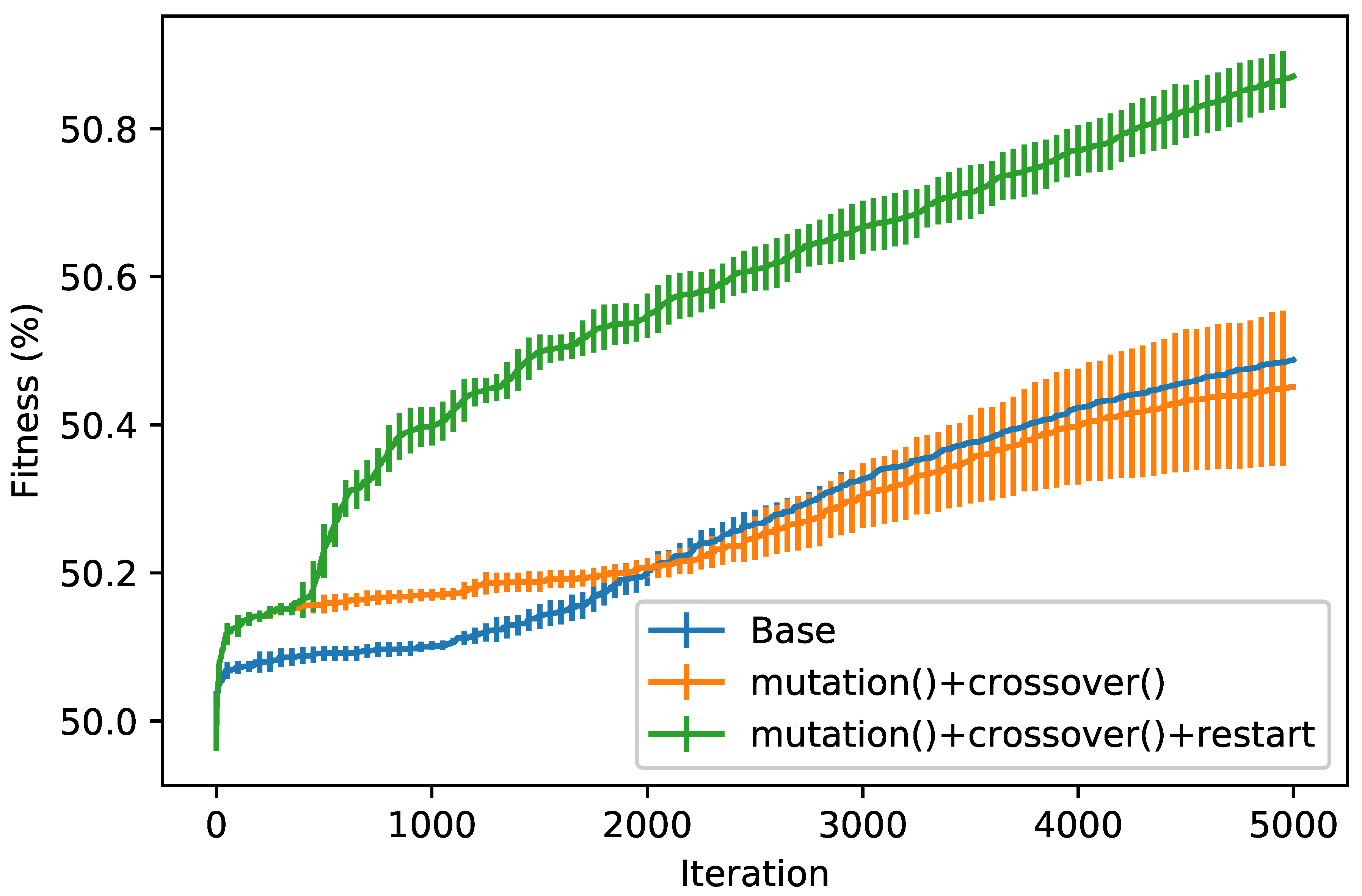

- Algorithm: The main modifications we added to the original cGA scheme illustrated in Algorithm 1 include mutation and crossover operators, inspired by Differential Evolution (DE) [13], and an adaptive restart mechanism. All these mechanisms are problem-independent.

- Mutation: The value of each variable is obtained by sampling three values from the corresponding Gaussian PDF, and then combining them as in the rand/1 DE [13]: $x_i = \mathrm{sample}() + F \cdot (\mathrm{sample}() - \mathrm{sample}())$, where F is a parameter, and sample() is the procedure to sample from the Gaussian PDF.

- Crossover: We implemented two different strategies, based on the binomial and exponential crossover used in DE. Both are based on a crossover rate parameter CR, but their behavior is different. In the binomial crossover, each variable in a trial copies, with probability CR, the corresponding variable from the elite. In the exponential crossover, starting from a random position, the variables of the trial are copied from the elite until a random number is greater than CR.

- Restart: The adaptive restart mechanism partially restarts the evolution by resetting values, and is controlled by two parameters. The first parameter, invariant, controls when to apply the restart, which can occur for two reasons: either the trial does not improve the elite for invariant consecutive iterations, or the trial improves it but the improvement (i.e., the difference between its fitness and that of the elite) is below a given threshold. The second parameter, resetPR, regulates the portion of variables involved, i.e., each variable has a given probability of being reset. Finally, the value assigned at each restart changes dynamically during the evolution process: in particular, it starts from 10, it is doubled every time a restart occurs, and it is halved every time invariant iterations pass without a restart.
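The DE-inspired operators above can be sketched as follows. This is an illustration under assumptions: `mu` and `sigma` stand for the per-variable mean and standard deviation of the Gaussian PDF, an untruncated `random.gauss` is used as sample(), `cr` is a hypothetical name for the crossover-rate parameter, and the wrap-around in the exponential crossover is a choice made for the example.

```python
import random

def de_mutation(mu, sigma, F, rng):
    # rand/1 combination of three independent samples drawn from each variable's PDF.
    def sample(i):
        return rng.gauss(mu[i], sigma[i])
    return [sample(i) + F * (sample(i) - sample(i)) for i in range(len(mu))]

def binomial_crossover(trial, elite, cr, rng):
    # Each variable independently copies the elite value with probability cr.
    return [e if rng.random() < cr else t for t, e in zip(trial, elite)]

def exponential_crossover(trial, elite, cr, rng):
    n = len(trial)
    out = list(trial)
    i = rng.randrange(n)  # random starting position
    copied = 0
    while copied < n:
        out[i] = elite[i]
        copied += 1
        i = (i + 1) % n  # assumed wrap-around
        if rng.random() > cr:  # stop as soon as a draw exceeds cr
            break
    return out
```

The binomial variant scatters inherited variables across the whole solution, whereas the exponential variant copies one contiguous run whose expected length grows with `cr`.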


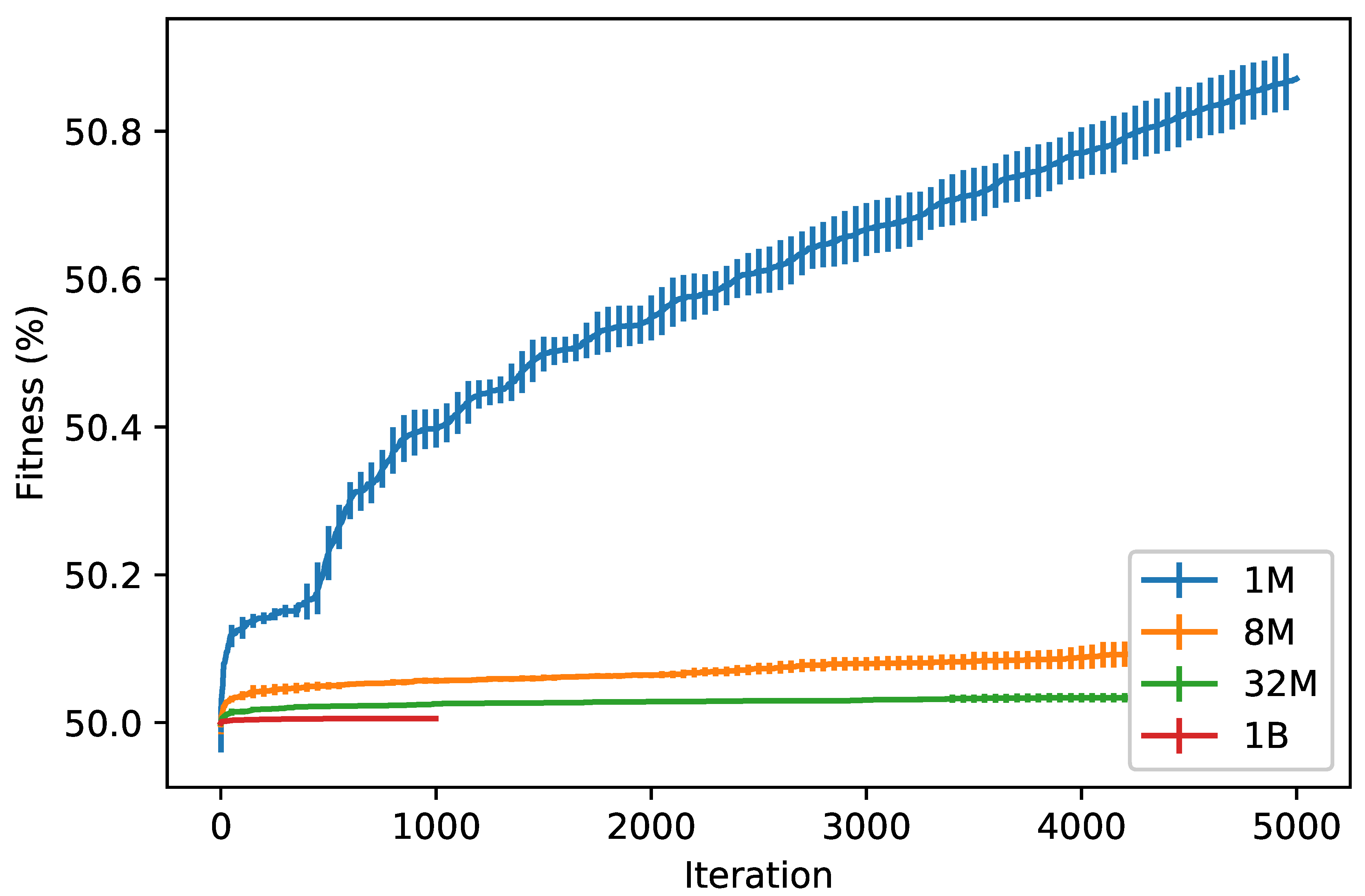

- Results: We tested separately the different behavior of the two crossover strategies, the impact of three different algorithm configurations (base, i.e., without DE-mutation and crossover; with mutation and crossover; and with mutation, crossover and restart), and how the algorithm scales. For this analysis, we used the parameters shown in Table A2. All the experiments were executed on the Google®Colab service.


- Crossover strategies: We tested the two crossover strategies over 5000 iterations on the continuous OneMax problem with 1M variables. As shown in Figure A2, the two crossover strategies show a similar behavior, although the exponential crossover tends to reach a plateau earlier than the binomial crossover.


- Algorithm configurations: In this case as well, the different configurations were tested over 5000 iterations on the continuous OneMax problem with 1M variables, using the binomial crossover. As shown in Figure A3, the restart greatly enhances the algorithm’s performance, avoiding premature convergence and also increasing the fitness achieved. It is important to consider that the parameters involved in the restart procedure must be chosen carefully in order to avoid making the search ineffective.

- Scalability: As shown in Figure A4, the results are similar, in terms of behavior, to the binary and discrete cases, shown earlier in Figure 1 and Figure A1. However, the final fitness values are slightly worse. This is mainly due to two reasons: first, the continuous domain leads to smaller fitness increases; second, the chosen restart parameters seem to work well in the 1M case, but not on larger dimensionalities. It is also worth noting that the total execution time is two to four times longer than in the equivalent binary and discrete cases.

| Parameter | Value |

|---|---|

| virtualPopulation | 100 |

| F | |

| (binomial) | |

| (exponential) | |

| invariant | 300 |

| resetPR | |

References

1. CPLEX IBM ILOG. User’s Manual for CPLEX. Available online: https://www.ibm.com/support/knowledgecenter/SSSA5P_12.7.1/ilog.odms.cplex.help/CPLEX/homepages/usrmancplex.html (accessed on 1 April 2020).

2. Gurobi Optimization Inc. Gurobi Optimizer Reference Manual. Available online: https://www.gurobi.com/wp-content/plugins/hd_documentations/documentation/9.0/refman.pdf (accessed on 1 April 2020).

3. Makhorin, A. GLPK (GNU Linear Programming Kit). Available online: https://www.gnu.org/software/glpk/ (accessed on 1 April 2020).

4. Deb, K.; Myburgh, C. A population-based fast algorithm for a billion-dimensional resource allocation problem with integer variables. Eur. J. Oper. Res. 2017, 261, 460–474.

5. Omidvar, M.N.; Li, X. Evolutionary large-scale global optimization: An introduction. In Proceedings of the Genetic and Evolutionary Computation Conference Companion, Berlin, Germany, 15–19 July 2017; pp. 807–827.

6. Deb, K.; Myburgh, C. Breaking the billion-variable barrier in real-world optimization using a customized evolutionary algorithm. In Proceedings of the Genetic and Evolutionary Computation Conference, Denver, CO, USA, 20–24 July 2016; pp. 653–660.

7. Neri, F.; Iacca, G.; Mininno, E. Compact Optimization. In Handbook of Optimization: From Classical to Modern Approach; Springer: New York, NY, USA, 2013; pp. 337–364.

8. Lozano, J.A.; Larrañaga, P.; Inza, I.; Bengoetxea, E. Towards a New Evolutionary Computation: Advances on Estimation of Distribution Algorithms; Springer: New York, NY, USA, 2006; Volume 192.

9. Harik, G.R.; Lobo, F.G.; Goldberg, D.E. The compact genetic algorithm. IEEE Trans. Evol. Comput. 1999, 3, 287–297.

10. Ahn, C.W.; Ramakrishna, R.S. Elitism-based compact genetic algorithms. IEEE Trans. Evol. Comput. 2003, 7, 367–385.

11. Mininno, E.; Cupertino, F.; Naso, D. Real-Valued Compact Genetic Algorithms for Embedded Microcontroller Optimization. IEEE Trans. Evol. Comput. 2008, 12, 203–219.

12. Corno, F.; Reorda, M.S.; Squillero, G. The Selfish Gene Algorithm: A New Evolutionary Optimization Strategy. In ACM Symposium on Applied Computing; ACM: New York, NY, USA, 1998; pp. 349–355.

13. Mininno, E.; Neri, F.; Cupertino, F.; Naso, D. Compact differential evolution. IEEE Trans. Evol. Comput. 2011, 15, 32–54.

14. Iacca, G.; Caraffini, F.; Neri, F. Compact Differential Evolution Light: High Performance Despite Limited Memory Requirement and Modest Computational Overhead. J. Comput. Sci. Technol. 2012, 27, 1056–1076.

15. Mallipeddi, R.; Iacca, G.; Suganthan, P.N.; Neri, F.; Mininno, E. Ensemble strategies in Compact Differential Evolution. In Proceedings of the Congress on Evolutionary Computation, New Orleans, LA, USA, 5–8 June 2011; pp. 1972–1977.

16. Iacca, G.; Mallipeddi, R.; Mininno, E.; Neri, F.; Suganthan, P.N. Super-fit and population size reduction in compact Differential Evolution. In Proceedings of the Workshop on Memetic Computing, Paris, France, 11–15 April 2011; pp. 1–8.

17. Iacca, G.; Mallipeddi, R.; Mininno, E.; Neri, F.; Suganthan, P.N. Global supervision for compact Differential Evolution. In Proceedings of the Symposium on Differential Evolution, Paris, France, 11–15 April 2011; pp. 1–8.

18. Iacca, G.; Neri, F.; Mininno, E. Opposition-Based Learning in Compact Differential Evolution. In Proceedings of the Conference on the Applications of Evolutionary Computation, Dublin, Ireland, 27–29 April 2011; pp. 264–273.

19. Iacca, G.; Mininno, E.; Neri, F. Composed compact differential evolution. Evol. Intell. 2011, 4, 17–29.

20. Neri, F.; Iacca, G.; Mininno, E. Disturbed Exploitation compact Differential Evolution for limited memory optimization problems. Inf. Sci. 2011, 181, 2469–2487.

21. Iacca, G.; Neri, F.; Mininno, E. Noise analysis compact differential evolution. Int. J. Syst. Sci. 2012, 43, 1248–1267.

22. Neri, F.; Mininno, E.; Iacca, G. Compact Particle Swarm Optimization. Inf. Sci. 2013, 239, 96–121.

23. Iacca, G.; Neri, F.; Mininno, E. Compact Bacterial Foraging Optimization. In Swarm and Evolutionary Computation; Springer: Berlin/Heidelberg, Germany, 2012; pp. 84–92.

24. Yang, Z.; Li, K.; Guo, Y. A new compact teaching-learning-based optimization method. In International Conference on Intelligent Computing; Springer: Cham, Switzerland, 2014; pp. 717–726.

25. Dao, T.K.; Pan, T.S.; Nguyen, T.T.; Chu, S.C.; Pan, J.S. A Compact Flower Pollination Algorithm Optimization. In Proceedings of the International Conference on Computing Measurement Control and Sensor Network, Matsue, Japan, 20–22 May 2016; pp. 76–79.

26. Tighzert, L.; Fonlupt, C.; Mendil, B. A set of new compact firefly algorithms. Swarm Evol. Comput. 2018, 40, 92–115.

27. Dao, T.K.; Chu, S.C.; Shieh, C.S.; Horng, M.F. Compact artificial bee colony. In Proceedings of the International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, Kaohsiung, Taiwan, 3–6 June 2014; pp. 96–105.

28. Banitalebi, A.; Aziz, M.I.A.; Bahar, A.; Aziz, Z.A. Enhanced compact artificial bee colony. Inf. Sci. 2015, 298, 491–511.

29. Jewajinda, Y. Covariance matrix compact differential evolution for embedded intelligence. In Proceedings of the IEEE Region 10 Symposium, Bali, Indonesia, 9–11 May 2016; pp. 349–354.

30. Neri, F.; Mininno, E. Memetic compact differential evolution for Cartesian robot control. IEEE Comput. Intell. Mag. 2010, 5, 54–65.

31. Iacca, G.; Caraffini, F.; Neri, F. Memory-saving memetic computing for path-following mobile robots. Appl. Soft Comput. 2013, 13, 2003–2016.

32. Iacca, G.; Caraffini, F.; Neri, F.; Mininno, E. Robot Base Disturbance Optimization with Compact Differential Evolution Light. In Conference on the Applications of Evolutionary Computation; Springer: Berlin/Heidelberg, Germany, 2012; pp. 285–294.

33. Gallagher, J.C.; Vigraham, S.; Kramer, G. A family of compact genetic algorithms for intrinsic evolvable hardware. IEEE Trans. Evol. Comput. 2004, 8, 111–126.

34. Yang, Z.; Li, K.; Guo, Y.; Ma, H.; Zheng, M. Compact real-valued teaching-learning based optimization with the applications to neural network training. Knowl.-Based Syst. 2018, 159, 51–62.

35. Dao, T.K.; Pan, T.S.; Nguyen, T.T.; Chu, S.C. A compact artificial bee colony optimization for topology control scheme in wireless sensor networks. J. Inf. Hiding Multimed. Signal Process. 2015, 6, 297–310.

36. Prugel-Bennett, A. Benefits of a Population: Five Mechanisms That Advantage Population-Based Algorithms. IEEE Trans. Evol. Comput. 2010, 14, 500–517.

37. Caraffini, F.; Neri, F.; Passow, B.N.; Iacca, G. Re-sampled inheritance search: High performance despite the simplicity. Soft Comput. 2013, 17, 2235–2256.

38. Iacca, G.; Caraffini, F. Compact Optimization Algorithms with Re-Sampled Inheritance. In Conference on the Applications of Evolutionary Computation; Springer: Cham, Switzerland, 2019; pp. 523–534.

39. Hansen, N.; Müller, S.D.; Koumoutsakos, P. Reducing the time complexity of the derandomized evolution strategy with covariance matrix adaptation (CMA-ES). Evol. Comput. 2003, 11, 1–18.

40. Loshchilov, I.; Glasmachers, T.; Beyer, H.G. Large scale black-box optimization by limited-memory matrix adaptation. IEEE Trans. Evol. Comput. 2018, 23, 353–358.

41. Halman, N.; Kellerer, H.; Strusevich, V.A. Approximation schemes for non-separable non-linear boolean programming problems under nested knapsack constraints. Eur. J. Oper. Res. 2018, 270, 435–447.

42. Caraffini, F.; Neri, F.; Iacca, G. Large scale problems in practice: The effect of dimensionality on the interaction among variables. In Conference on the Applications of Evolutionary Computation; Springer: Cham, Switzerland, 2017; pp. 636–652.

43. Schaffer, J.; Eshelman, L. On crossover as an evolutionarily viable strategy. In Proceedings of the International Conference on Genetic Algorithms, San Diego, CA, USA, 13–16 July 1991; pp. 61–68.

44. Goldberg, D.E.; Sastry, K.; Llorà, X. Toward routine billion-variable optimization using genetic algorithms. Complexity 2007, 12, 27–29.

45. Wang, Z.; Hutter, F.; Zoghi, M.; Matheson, D.; de Freitas, N. Bayesian optimization in a billion dimensions via random embeddings. J. Artif. Intell. Res. 2016, 55, 361–387.

46. Iturriaga, S.; Nesmachnow, S. Solving very large optimization problems (up to one billion variables) with a parallel evolutionary algorithm in CPU and GPU. In Proceedings of the IEEE International Conference on P2P, Parallel, Grid, Cloud and Internet Computing, Victoria, BC, Canada, 12–14 November 2012; pp. 267–272.

47. Xinchao, Z. Simulated annealing algorithm with adaptive neighborhood. Appl. Soft Comput. 2011, 11, 1827–1836.

48. Iacca, G. Distributed optimization in wireless sensor networks: An island-model framework. Soft Comput. 2013, 17, 2257–2277.

49. Whitley, D. Next generation genetic algorithms: A user’s guide and tutorial. In Handbook of Metaheuristics; Springer: Cham, Switzerland, 2019; pp. 245–274.

50. Varadarajan, S.; Whitley, D. The massively parallel mixing genetic algorithm for the traveling salesman problem. In Proceedings of the Genetic and Evolutionary Computation Conference, Prague, Czech Republic, 13–17 July 2019; pp. 872–879.

| Name | Description |

|---|---|

| problemSize | Problem dimensionality (scalar) |

| virtualPopulation | Virtual population (scalar) |

| PV | Probability Vector (vector) |

| trial | Solution sampled from PV (vector) |

| elite | Best solution found so far (vector) |

| fitnessTrial | Fitness of trial (scalar or vector) |

| fitnessElite | Fitness of elite (scalar or vector) |

| winner | Flag(s) indicating if trial (1) or elite (0) is better (scalar or vector) |

| generateTrial() | Sample trial from PV (return a solution) |

| evaluate() | Evaluate a solution (return its fitness) |

| compete() | Compare two solutions (return winner) |

| updatePV() | Update PV (return PV) |

| | cGA-Base | | cGA-A100 | | cGA-A1 | |
|---|---|---|---|---|---|---|
| Data | Type | Size | Type | Size | Type | Size |
| PV | Float32 | problemSize | Float32 | problemSize | Float32 | problemSize |
| trial | Bool | problemSize | Bool | problemSize | Bool | problemSize |
| elite | Bool | problemSize | Bool | problemSize | Bool | problemSize |
| fitnessTrial | Int32 | 1 | Int8 | problemSize/100 | Int32 | 1 |
| fitnessElite | Int32 | 1 | Int8 | problemSize/100 | Int32 | 1 |
| winner | Int32 | 1 | Bool | problemSize/100 | - | - |

| Name | Description |

|---|---|

| N | Number of objects (scalar) |

| W | Crucible sizes (vector) |

| | Desired efficiency (scalar) |

| H | Number of heats to achieve the desired efficiency (scalar) |

| copies | Copies to be cast for each object (vector) |

| weights | Weights (vector) |

| virtualPopulation | Virtual population (scalar) |

| PV | Probability Vector (vector) |

| trial | Solution sampled from PV (vector) |

| elite | Best solution found so far (vector) |

| copiesTrial | Copies cast by trial (vector) |

| heatsTrial | Available space in the heats of trial (vector) |

| fitnessTrial | Fitness of trial (scalar) |

| fitnessElite | Fitness of elite (scalar) |

| winner | Flag indicating if trial (1) or elite (0) is better (scalar) |

| estimateH() | Estimate the value of H based on the desired efficiency, copies, weights and W (return H) |

| smartInitialization() | Initialize elite and PV (return elite and PV) |

| initializeElite() | Initialize elite (return elite) |

| inhibitor() | Blocks the unusable elements of PV (return PV) |

| initializePV() | Initialize PV (return PV) |

| generateTrial() | Sample trial from PV and apply to it mutations and crossover |

| newTrial() | Sample trial from PV (return trial and heatsTrial) |

| mutationOne() | Repair trial with respect to the equality constraints |

| mutationTwo() | Repair trial with respect to the inequality constraints |

| crossover() | Operate a heat-wise crossover between trial and elite |

| evaluate() | Evaluate a solution (return its fitness) |

| compete() | Compare two solutions (return winner) |

| updatePV() | Update PV (return PV) |

| | cGA-Sync [46] | | | | cGA-Base [Ours] | | | |
|---|---|---|---|---|---|---|---|---|
| | 1M | 8M | 32M | 1B | 1M | 8M | 32M | 1B |
| Time (s) | 600 (-) | 10,680 (-) | 49,140 (-) | 348,000 (-) | 52.022 (1.552) | 256.923 (2.169) | 969.419 (5.971) | 9085.140 (1136.925) |
| Fitness (%) | 82.5 (-) | 79.1 (-) | 74.1 (-) | 62.3 (-) | 51.192 (0.0570) | 50.750 (0.0194) | 50.634 (0.0053) | 50.525 (0.0012) |
| Iterations | 50,000 (-) | 50,000 (-) | 50,000 (-) | 50,000 (-) | 5000 (0) | 5000 (0) | 5000 (0) | 1600 (0) |

| | cGA-Async [46] | | | | cGA-A100 [Ours] | | | | cGA-A1 [Ours] | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 1M | 8M | 32M | 1B | 1M | 8M | 32M | 1B | 1M | 8M | 32M | 1B |
| Time (s) | 324 (-) | 7560 (-) | 35,400 (-) | 315,060 (-) | 58.745 (0.837) | 177.476 (2.249) | 789.158 (3.314) | 6798.995 (1155.062) | 9.913 (0.269) | 66.898 (1.513) | 286.802 (3.452) | 2278.10 (18.273) |
| Fitness (%) | 91.0 (-) | 82.6 (-) | 77.8 (-) | 66.8 (-) | 99.317 (0.0293) | 95.785 (0.174) | 92.548 (0.145) | 66.968 (0.163) | 100 (0.0) | 100 (0.0) | 100 (0.0) | 99.946 (9.946e-5) |
| Iterations | 50,000 (-) | 50,000 (-) | 50,000 (-) | 50,000 (-) | 5000 (0) | 5000 (0) | 5000 (0) | 1600 (0) | 986.6 (33.242) | 1208.5 (19.448) | 1357.7 (17.257) | 500 (0) |

| Size | Object id | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | W | {500, 650} | | | | | | | | | |
| | weights | 79 | 66 | 31 | 26 | 44 | 35 | 88 | 9 | 57 | 22 |
| 100K | copies | 12,560 | 12,562 | 12,517 | 12,567 | 12,562 | 12,172 | 12,076 | 12,052 | 12,017 | 12,012 |
| 1M | copies | 125,600 | 125,620 | 125,170 | 125,670 | 125,620 | 121,720 | 120,760 | 120,520 | 120,170 | 120,120 |
| 10M | copies | 1,255,980 | 1,256,200 | 1,251,700 | 1,256,700 | 1,256,200 | 1,217,200 | 1,207,600 | 1,205,200 | 1,201,700 | 1,201,200 |

| Algorithm | Size | Solution evals. | Heat Update | Total Time (s) | initialization() (s) | generateTrial() (s) | evaluate() (s) | compete() (s) | update() (s) |
|---|---|---|---|---|---|---|---|---|---|
| discrete cGA [ours] | 100K | 20.2 (9.249) | 431,027.2 (145,724.751) | 360.703 (114.444) | 5.711 (2.827) | 354.707 (114.993) | 0.0636 (0.0958) | 0.0431 (0.0813) | 0.173 (0.266) |
| discrete cGA [ours] | 1M | 18.1 (7.687) | 3,300,602.6 (28,057.973) | 2538.747 (405.0527) | 38.757 (9.796) | 2499.556 (398.775) | 0.0444 (0.0396) | 0.0230 (0.031) | 0.358 (0.315) |
| discrete cGA [ours] | 10M | 29.3 (14.423) | 33,720,256.6 (867,688.014) | 29,785.986 (6790.176) | 460.459 (136.859) | 29,322.144 (6679.297) | 0.177 (0.0883) | 0.0910 (0.0626) | 3.101 (1.367) |
| PILP [4] | 100K | 1032 (17) | 8,807,564 (167,494) | 26 (0.6) | - | - | - | - | - |
| PILP [4] | 1M | 1080 (35) | 91,345,801 (2,048,330) | 308 (9) | - | - | - | - | - |
| PILP [4] | 10M | 1104 (35) | 976,903,439 (19,038,115) | 4207 (124) | - | - | - | - | - |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ferigo, A.; Iacca, G. A GPU-Enabled Compact Genetic Algorithm for Very Large-Scale Optimization Problems. Mathematics 2020, 8, 758. https://doi.org/10.3390/math8050758
