A Fast Image Restoration Algorithm Based on a Fixed Point and Optimization Method

Abstract

1. Introduction

2. Background and Related Algorithms

3. Preliminaries


- (i) For every $p \in \Omega$, $\lim_{n \to \infty} \|x_n - p\|$ exists;

- (ii) Each weak-cluster point of the sequence $\{x_n\}$ is in $\Omega$.

4. Main Results

- $H$ is a real Hilbert space;

- $\{T_n\}$ is a family of nonexpansive operators;

- $\{T_n\}$ satisfies the NST*-condition;

Algorithm 1 (MWA): A modified W-algorithm.

- (i)

- whereand

- (ii)

- converges weakly to a point in

Algorithm 2 (FBMWA): A forward-backward modified W-algorithm.

- (i)

- whereand

- (ii)

- converges weakly to a point in

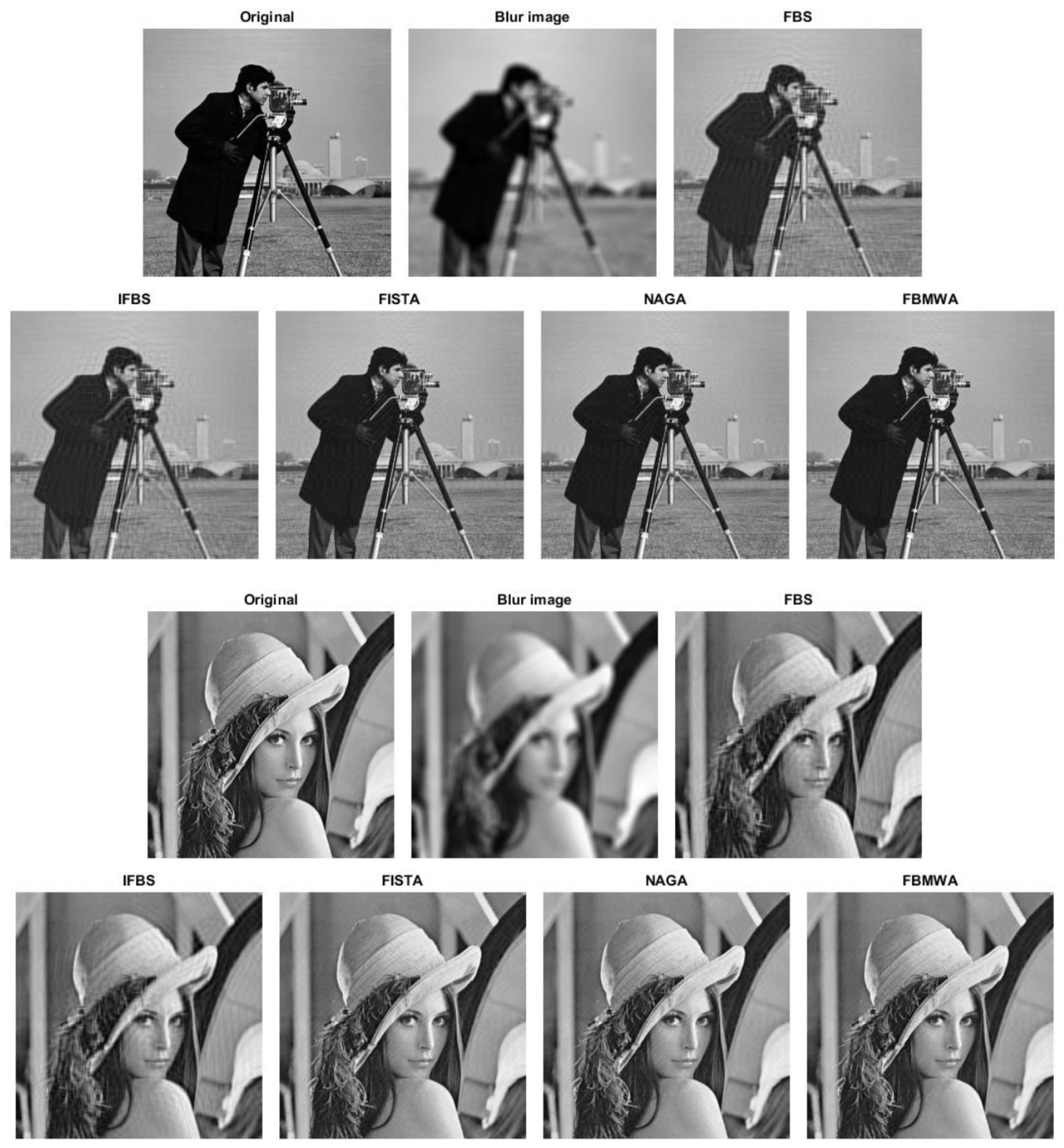
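The name of Algorithm 2 indicates that it is built on forward-backward splitting. For reference only, the Python sketch below shows the classical forward-backward splitting (FBS) step, which is the baseline FBS in the comparison tables. It is applied to an assumed LASSO-type problem $\min_x \tfrac{1}{2}\|Ax - b\|_2^2 + \lambda\|x\|_1$.

This is not FBMWA: the modified W-type steps of Algorithms 1 and 2 are not reproduced. The following are all assumptions made for illustration:

- the problem form;
- the helper names `forward_backward_step` and `fbs`;
- the zero starting point;
- a step size `c` in $(0, 2/L)$, where $L = \|A^\top A\|$.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(A: Matrix, x: Vector) -> Vector:
    """Dense product A @ x (A stored row-major)."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in A]


def rmatvec(A: Matrix, r: Vector) -> Vector:
    """Dense product A^T @ r, computed without forming the transpose."""
    return [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(A[0]))]


def soft_threshold(v: Vector, t: float) -> Vector:
    """Proximal operator of t * ||.||_1: componentwise shrinkage toward zero."""
    return [max(abs(v_i) - t, 0.0) * (1.0 if v_i >= 0 else -1.0) for v_i in v]


def forward_backward_step(A: Matrix, b: Vector, x: Vector, lam: float, c: float) -> Vector:
    """x -> prox_{c*lam*||.||_1}(x - c * grad f(x)) with f(x) = 0.5 * ||Ax - b||^2 (hypothetical helper)."""
    residual = [ax_i - b_i for ax_i, b_i in zip(matvec(A, x), b)]
    grad = rmatvec(A, residual)                             # grad f(x) = A^T (Ax - b)
    forward = [x_i - c * g_i for x_i, g_i in zip(x, grad)]  # forward (explicit gradient) step
    return soft_threshold(forward, c * lam)                 # backward (proximal) step


def fbs(A: Matrix, b: Vector, lam: float, c: float, iters: int = 500) -> Vector:
    """Plain forward-backward splitting from x_0 = 0; c is assumed to lie in (0, 2/L)."""
    x = [0.0] * len(A[0])
    for _ in range(iters):
        x = forward_backward_step(A, b, x, lam, c)
    return x


# Usage: with A = I the minimizer is soft_threshold(b, lam).
x_hat = fbs([[1.0, 0.0], [0.0, 1.0]], [3.0, 0.5], lam=1.0, c=0.5)
print(x_hat)
```

With $A = I$, $b = (3, 0.5)$ and $\lambda = 1$, the exact minimizer is the soft-thresholded vector $(2, 0)$. The iterates approach it geometrically, which gives a quick sanity check of the forward and backward steps.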

5. Simulated Results for the Image Restoration Problem

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Bauschke, H.H. The approximation of fixed points of compositions of nonexpansive mappings in Hilbert space. J. Math. Anal. Appl. 1996, 202, 150–159.

- Chidume, C.E.; Bashir, A. Convergence of path and iterative method for families of nonexpansive mappings. Appl. Anal. 2008, 67, 117–129.

- Halpern, B. Fixed points of nonexpansive maps. Bull. Am. Math. Soc. 1967, 73, 957–961.

- Ishikawa, S. Fixed points by a new iteration method. Proc. Am. Math. Soc. 1974, 44, 147–150.

- Klén, R.; Manojlović, V.; Simić, S.; Vuorinen, M. Bernoulli inequality and hypergeometric functions. Proc. Am. Math. Soc. 2014, 142, 559–573.

- Kunze, H.; La Torre, D.; Mendivil, F.; Vrscay, E.R. Generalized fractal transforms and self-similar objects in cone metric spaces. Comput. Math. Appl. 2012, 64, 1761–1769.

- Mann, W.R. Mean value methods in iteration. Proc. Am. Math. Soc. 1953, 4, 506–510.

- Radenović, S.; Rhoades, B.E. Fixed point theorem for two non-self mappings in cone metric spaces. Comput. Math. Appl. 2009, 57, 1701–1707.

- Todorčević, V. Harmonic Quasiconformal Mappings and Hyperbolic Type Metrics; Springer Nature Switzerland AG: Basel, Switzerland, 2019.

- Byrne, C. Iterative oblique projection onto convex subsets and the split feasibility problem. Inverse Probl. 2002, 18, 441–453.

- Byrne, C. A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 2004, 20, 103–120.

- Cholamjiak, P.; Shehu, Y. Inertial forward-backward splitting method in Banach spaces with application to compressed sensing. Appl. Math. 2019, 64, 409–435.

- Combettes, P.L.; Wajs, V. Signal recovery by proximal forward–backward splitting. Multiscale Model. Simul. 2005, 4, 1168–1200.

- Kankam, K.; Pholasa, N.; Cholamjiak, P. On convergence and complexity of the modified forward-backward method involving new linesearches for convex minimization. Math. Meth. Appl. Sci. 2019, 42, 1352–1362.

- Suantai, S.; Eiamniran, N.; Pholasa, N.; Cholamjiak, P. Three-step projective methods for solving the split feasibility problems. Mathematics 2019, 7, 712.

- Suantai, S.; Kesornprom, S.; Cholamjiak, P. Modified proximal algorithms for finding solutions of the split variational inclusions. Mathematics 2019, 7, 708.

- Thong, D.V.; Cholamjiak, P. Strong convergence of a forward–backward splitting method with a new step size for solving monotone inclusions. Comput. Appl. Math. 2019, 38.

- Censor, Y.; Bortfeld, T.; Martin, B.; Trofimov, A. A unified approach for inversion problems in intensity-modulated radiation therapy. Phys. Med. Biol. 2006, 51, 2353–2365.

- Censor, Y.; Elfving, T.; Kopf, N.; Bortfeld, T. The multiple set split feasibility problem and its applications. Inverse Probl. 2005, 21, 2071–2084.

- Censor, Y.; Motova, A.; Segal, A. Perturbed projections and subgradient projections for the multiple-sets feasibility problem. J. Math. Anal. Appl. 2007, 327, 1244–1256.

- Phuengrattana, W.; Suantai, S. On the rate of convergence of Mann, Ishikawa, Noor and SP-iterations for continuous functions on an arbitrary interval. J. Comput. Appl. Math. 2011, 235, 3006–3014.

- Wongyai, S.; Suantai, S. Convergence Theorem and Rate of Convergence of a New Iterative Method for Continuous Functions on Closed Interval. In Proceedings of the AMM and APAM Conference Proceedings, Bangkok, Thailand, 23–25 May 2016; pp. 111–118.

- Ben-Tal, A.; Nemirovski, A. Lectures on Modern Convex Optimization: Analysis, Algorithms, and Engineering Applications; MPS/SIAM Ser. Optim.; SIAM: Philadelphia, PA, USA, 2001.

- Bioucas-Dias, J.; Figueiredo, M. A new TwIST: Two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Trans. Image Process. 2007, 16, 2992–3004.

- Chen, S.S.; Donoho, D.L.; Saunders, M.A. Atomic decomposition by basis pursuit. SIAM J. Sci. Comput. 1998, 20, 33–61.

- Donoho, D.L.; Johnstone, I.M. Adapting to unknown smoothness via wavelet shrinkage. J. Am. Statist. Assoc. 1995, 90, 1200–1224.

- Figueiredo, M.A.T.; Nowak, R.D.; Wright, S.J. Gradient projection for sparse reconstruction: Application to compressed sensing and other inverse problems. IEEE J. Sel. Top. Signal Process. 2007, 1, 586–597.

- Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202.

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. B Methodol. 1996, 58, 267–288.

- Lions, P.L.; Mercier, B. Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 1979, 16, 964–979.

- Moreau, J.J. Proximité et dualité dans un espace hilbertien. Bull. Soc. Math. Fr. 1965, 93, 273–299.

- Figueiredo, M.A.T.; Nowak, R.D. An EM algorithm for wavelet-based image restoration. IEEE Trans. Image Process. 2003, 12, 906–916.

- Daubechies, I.; Defrise, M.; De Mol, C. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun. Pure Appl. Math. 2004, 57, 1413–1457.

- Hale, E.T.; Yin, W.; Zhang, Y. A Fixed-Point Continuation Method for l1-Regularized Minimization. SIAM J. Optim. 2008, 19, 1107–1130.

- Moudafi, A.; Oliny, M. Convergence of a splitting inertial proximal method for monotone operators. J. Comput. Appl. Math. 2003, 155, 447–454.

- Liang, J.; Schönlieb, C.B. Improving FISTA: Faster, smarter and greedier. arXiv 2018, arXiv:1811.01430.

- Verma, M.; Shukla, K.K. A new accelerated proximal gradient technique for regularized multitask learning framework. Pattern Recogn. Lett. 2017, 95, 98–103.

- Nakajo, K.; Shimoji, K.; Takahashi, W. Strong convergence to common fixed points of families of nonexpansive mappings in Banach spaces. J. Nonlinear Convex Anal. 2007, 8, 11–34.

- Nakajo, K.; Shimoji, K.; Takahashi, W. On strong convergence by the hybrid method for families of mappings in Hilbert spaces. Nonlinear Anal. Theor. Methods Appl. 2009, 71, 112–119.

- Boyd, S.; Vandenberghe, L. Convex Optimization; Cambridge University Press: New York, NY, USA, 2004.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: New York, NY, USA, 2011.

- Burachik, R.S.; Iusem, A.N. Set-Valued Mappings and Enlargements of Monotone Operator; Springer Science & Business Media: New York, NY, USA, 2007.

- Bussaban, L.; Suantai, S.; Kaewkhao, A. A parallel inertial S-iteration forward-backward algorithm for regression and classification problems. Carpathian J. Math. 2020, 36, 21–30.

- Takahashi, W. Introduction to Nonlinear and Convex Analysis; Yokohama Publishers: Yokohama, Japan, 2009.

- Tan, K.K.; Xu, H.K. Approximating fixed points of nonexpansive mappings by the Ishikawa iteration process. J. Math. Anal. Appl. 1993, 178, 301–308.

- Moudafi, A.; Al-Shemas, E. Simultaneous iterative methods for split equality problem. Trans. Math. Program. Appl. 2013, 1, 1–11.

- Thung, K.; Raveendran, P. A survey of image quality measures. In Proceedings of the International Conference for Technical Postgraduates (TECHPOS), Kuala Lumpur, Malaysia, 14–15 December 2009; pp. 1–4.

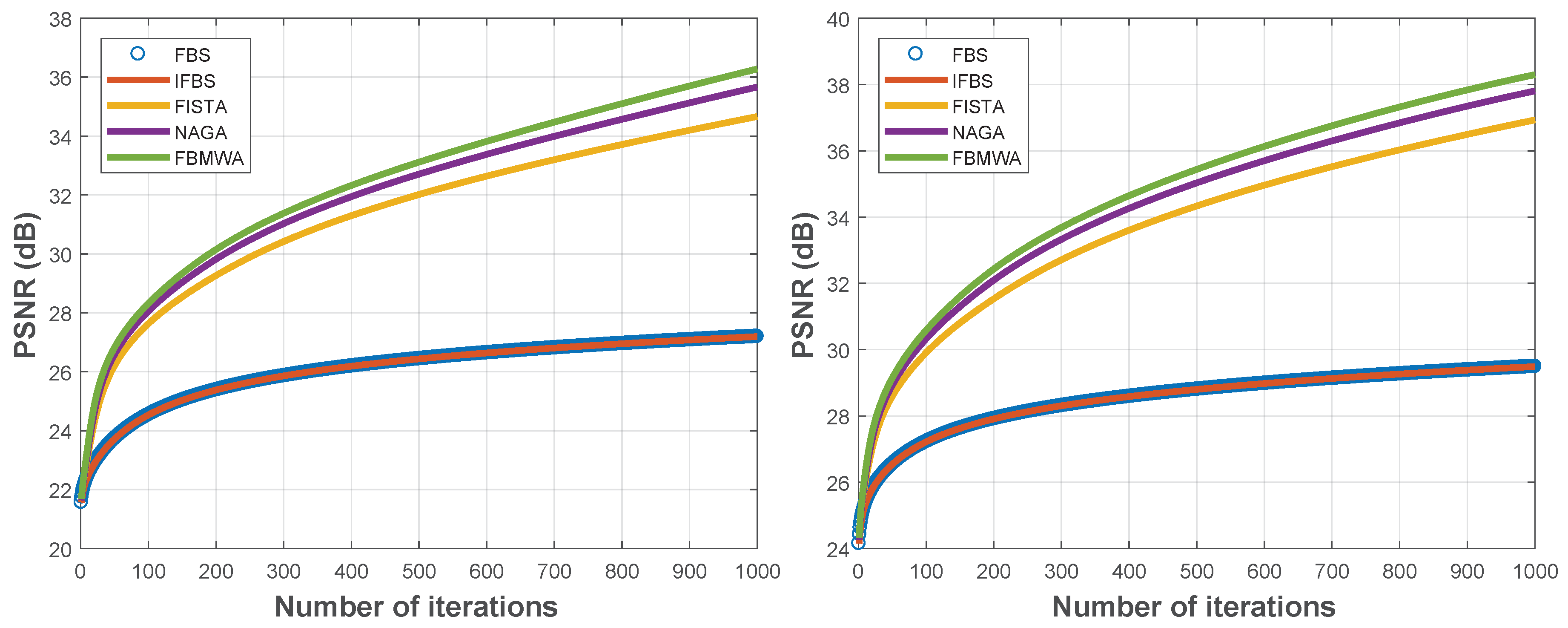

| Algorithms | Cameraman PSNR (dB) | Cameraman Tol. | Lenna PSNR (dB) | Lenna Tol. |
|---|---|---|---|---|
| FBS | 27.1953 | 2.32 × | 29.4907 | 1.73 × |
| IFBS | 27.1953 | 2.32 × | 29.4907 | 1.73 × |
| FISTA | 34.6659 | 4.13 × | 36.9324 | 3.34 × |
| NAGA | 35.6670 | 4.15 × | 37.8088 | 3.32 × |
| FBMWA | 36.2783 | 4.21 × | 38.2989 | 3.31 × |
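The comparison above ranks the algorithms by PSNR. The Python sketch below computes the standard definition, $\mathrm{PSNR} = 10\log_{10}(\mathrm{peak}^2/\mathrm{MSE})$, for two images stored as flattened pixel lists.

The text does not state how the images were scaled, so two choices here are assumptions:

- the default peak value of 255, which corresponds to 8-bit grey levels;
- the flattened-list representation of each image.

The function name `psnr` is likewise illustrative.

```python
import math
from typing import Sequence


def psnr(original: Sequence[float], restored: Sequence[float], peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE), peak assumed to be 255."""
    if len(original) != len(restored) or not original:
        raise ValueError("images must be non-empty and have the same number of pixels")
    mse = sum((o - r) ** 2 for o, r in zip(original, restored)) / len(original)
    if mse == 0.0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(peak ** 2 / mse)


# Usage: a uniform error of one grey level gives MSE = 1, hence 20*log10(255) dB.
print(psnr([10, 20, 30, 40], [11, 21, 31, 41]))
```

A higher PSNR means a smaller mean squared error relative to the peak intensity. The scale is logarithmic, so a gain of about 0.5 to 1 dB, as between the last rows of the table, corresponds to a noticeable reduction in MSE.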

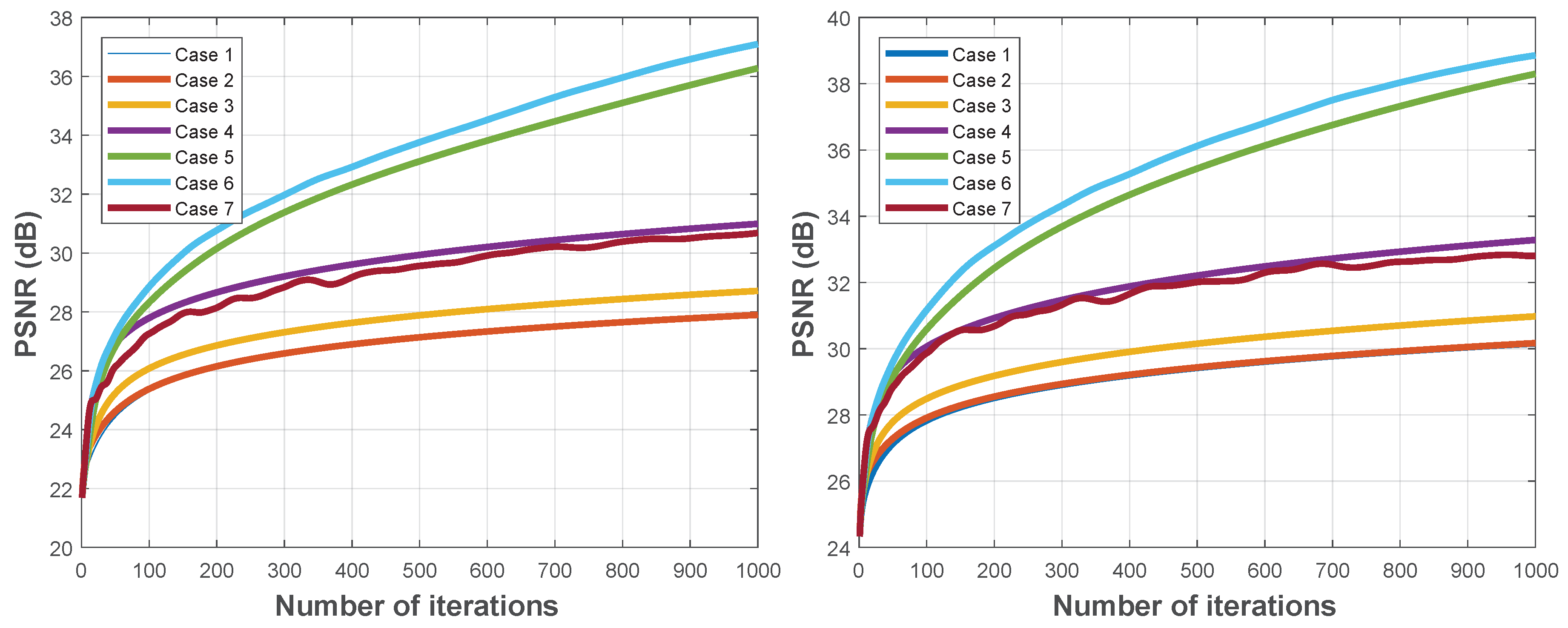

| Case | Parameters | Cameraman PSNR (dB) | Cameraman Tol. | Lenna PSNR (dB) | Lenna Tol. |
|---|---|---|---|---|---|
| 1 | | 27.8911 | 2.13 × | 30.1603 | 1.66 × |
| 2 | | 27.9003 | 2.12 × | 30.1693 | 1.65 × |
| 3 | | 28.7146 | 2.00 × | 30.9771 | 1.60 × |
| 4 | | 30.9920 | 1.81 × | 33.2838 | 1.47 × |
| 5 | | 36.2783 | 4.21 × | 38.2989 | 3.31 × |
| 6 | | 37.0979 | 1.63 × | 38.8562 | 1.30 × |
| 7 | | 30.6832 | 9.13 × | 32.7996 | 7.07 × |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hanjing, A.; Suantai, S. A Fast Image Restoration Algorithm Based on a Fixed Point and Optimization Method. Mathematics 2020, 8, 378. https://doi.org/10.3390/math8030378
