Abstract

In this paper, Landweber iteration with a relaxation factor is proposed to solve nonlinear ill-posed integral equations. A compression multiscale Galerkin method that retains the properties of the Landweber iteration is used to discretize the Landweber iteration. This method leads to the optimal convergence rates under certain conditions. As a consequence, we propose a multiscale compression algorithm to solve nonlinear ill-posed integral equations. Finally, the theoretical analysis is verified by numerical results.

1. Introduction

Ill-posed problems [1,2] include linear [3] and nonlinear [4,5,6,7] ill-posed problems. With the development of applied science, studies on nonlinear ill-posed problems are attracting increased attention, and a variety of methods have emerged. Some of the most widely known methods are Tikhonov regularization [8,9,10,11,12,13] and Landweber iteration [14,15,16,17]. The purpose of this study was to explore a compression multiscale Galerkin method for solving nonlinear ill-posed integral equations via Landweber iterations.

Landweber iteration is a regularization method when the iteration is terminated by the generalized discrepancy principle. Hanke et al. [14] were the first to use Landweber iteration to solve nonlinear ill-posed problems, and proved its convergence and convergence rate. However, they did not consider the finite-dimensional case. Based on the gradient method, Neubauer [18] presented a new iterative method that greatly reduces the number of iterations, and proved its convergence and convergence rate, but again did not consider the finite-dimensional case. In numerical simulations and practical applications, however, nonlinear ill-posed problems must be solved by regularization methods in finite dimensions. Results in this direction were provided by Jin and Amato [16] and Scherzer [19]. In previous studies [14,16,18,19], the parameter in the generalized discrepancy principle was required to be greater than two, which is unsatisfactory. Hanke [15] derived a smaller parameter for the generalized discrepancy principle that may be close to one under certain conditions. To obtain a better approximate solution, the parameter in the generalized discrepancy principle should be as close to one as possible.

In the development of Landweber iteration, the estimate of the parameter in the generalized discrepancy principle depends on an unknown constant, and the admissible range of the parameter in previous studies [14,16,18,19] was not optimal. Therefore, the approximate solution obtained with this parameter is also not optimal. To obtain a better approximate solution, this paper introduces the radius of the neighborhood and the relaxation factor under the strong Scherzer condition to improve the admissible range of the parameter. In addition, in combination with the generalized discrepancy principle, the convergence rates of the Landweber iteration are proven in finite-dimensional space.

This paper is organized as follows. In Section 2, we outline some lemmas and propositions of Landweber iteration under the strong Scherzer condition. In Section 3, we describe the matrix form of the Landweber iteration discretized using the multiscale Galerkin method, and prove the convergence of the Landweber iteration in finite dimensions. In Section 4, we develop a compression multiscale Galerkin method for Landweber iteration to solve nonlinear ill-posed integral equations, which leads to an optimal approximate solution under certain conditions, and we propose a multiscale compression algorithm for these equations. Finally, in Section 5, we provide a numerical example to verify the theoretical results.

2. Landweber Iteration

In this section, we will describe some lemmas and propositions of Landweber iteration for solving nonlinear integral equations in detail. These results are based on [14,15].

Suppose that is a bounded domain with . Let spaces and denote Hilbert spaces. For the sake of simplicity, inner products and norms of Hilbert space are denoted as and , respectively, unless otherwise specified. The nonlinear compact operator is defined by

where is a nonlinear mapping. We focus on the following nonlinear integral equation,

where is given and is the unknown to be determined. Note that represents the range of the operator F and represents the domain of the operator F. Without loss of generality, we assume that the nonlinear operator F satisfies the following two local properties.

The derivative of nonlinear operator F is denoted by:

with , which satisfies

where denotes the neighborhood of with radius .

The derivative of nonlinear operator F satisfies the strong Scherzer condition. In other words, a bounded linear operator exists: , such that

holds for any elements , where the linear operator satisfies

for .

The accurate data y in Equation (1) may not be known; instead, we have noisy data , satisfying:

where is a given small number. As F is a compact operator defined on an infinite-dimensional space, (1) is an ill-posed problem. In other words, the solution does not depend continuously on the right-hand side. The Landweber iteration [3,4] is one of the prominent methods, in which a sequence of iterative solutions is defined by

from , where is a relaxation factor. If the data are noise-free, i.e., the right-hand side is y, then we replace with . Note that is not the solution of Problem (1); it is only a given initial function used to solve (1).
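As a concrete illustration of a relaxed Landweber update of this kind, the following minimal Python sketch applies the iteration to a small finite-dimensional toy problem. The operator `F`, its Jacobian `dF`, the matrix `A`, the relaxation factor `omega = 1.0`, and all other numerical values are hypothetical choices made for illustration and are not taken from this paper. The adjoint of the derivative is assumed to be the Euclidean transpose.

```python
import numpy as np

def landweber(F, dF, y_delta, x0, omega, n_steps):
    """Run n_steps of x <- x + omega * F'(x)^T (y_delta - F(x)).

    Returns the final iterate and the residual norms recorded
    before each update.
    """
    x = np.asarray(x0, dtype=float).copy()
    residuals = []
    for _ in range(n_steps):
        r = y_delta - F(x)                 # current data misfit
        residuals.append(np.linalg.norm(r))
        x = x + omega * (dF(x).T @ r)      # relaxed Landweber step
    return x, residuals

# Hypothetical toy operator (assumption): F(x) = A x + 0.1 (A x)^3, elementwise.
A = np.array([[0.5, 0.25],
              [0.0, 0.5]])

def F(x):
    t = A @ x
    return t + 0.1 * t**3

def dF(x):
    t = A @ x
    # Jacobian: diag(1 + 0.3 t^2) @ A
    return (1.0 + 0.3 * t**2)[:, None] * A

x_true = np.array([0.5, -0.3])
y = F(x_true)                              # noise-free data
x_rec, residuals = landweber(F, dF, y, x0=np.zeros(2), omega=1.0, n_steps=500)
```

In this toy setting the norm of the Jacobian stays below one, so a relaxation factor of one keeps the step stable; for other operators the factor would have to be scaled accordingly.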

Similar to Proposition 2.1 of [14], we provide the following properties.

Lemma 1.

Proof.

Thus,

From Equation (7), the conclusion is established. □

Let Problem (1) be solvable on and let

be a solution set of Problem (1) on . From [18], a unique local minimum norm solution exists for , i.e.,

Proposition 1.

Let be a solution to Problem (1). If condition holds, then a unique local minimum norm solution exists for .

Proof.

See Proposition 2.1 of [18]. □

Proposition 2.

Proof.

From Conditions , , and (6), using the induction method, we can conclude that

with . Combining Equations (8) and (9) and Condition (12), we have and

It follows from Equation (7) that Equation (13) holds. Combining the above inequality and Equation (13), we have

Then, Equation (14) holds. □

To establish the convergence of the Landweber iteration in the noise-free case, the following lemma is given. Its proof follows that of Theorem 2.3 in [14].

Lemma 2.

Proof.

Let be a solution to Problem (1), and

From Proposition 2, is monotonically decreasing and converges to a constant . Now, we prove that is a Cauchy sequence. Without loss of generality, we assume that there exists a positive integer N such that integers . By the Minkowski inequality, we have

where the integer we chose satisfies

for any integer . Therefore, to prove that is a Cauchy sequence, we only need to prove that and when . We prove the first claim: . From the definition of inner products and norms, we know that

By Condition and Equation (6), we can conclude that:

Combining the monotonicity of the sequence and Equation (14), we have

This means that . Similarly, we can prove that . So, sequences and are Cauchy sequences. From , the limit of the sequence is also a solution to Problem (1). Using Equation (3), we can obtain . Therefore, it follows from Equation (6) that

Thus, . Because , we have

From Proposition 1 and the above, we can conclude that . □

3. A Multiscale Galerkin Method of Landweber Iteration

The multiscale Galerkin method is a classical and effective projection method (cf. [20]), and is often used for integral equations (cf. [21,22,23,24]). We next discuss using the multiscale Galerkin method to discretize the iteration scheme (6). The purpose of this section is to analyze the convergence of the multiscale Galerkin method for Landweber iteration. Here, we only provide a brief description of the multiscale Galerkin method. For a more in-depth understanding of its specific structure and numerical implementation, please refer to [22,25,26].

Let denote the set of natural numbers, and define . Suppose there is a nested and consistently dense sequence of spaces , i.e.,

We further assume that a subspace exists satisfying for :

with . Therefore, we obtain the multiscale orthogonal subspace decomposition

for . For the specific structure of this space, refer to Chapter 4 in [20]. We need to pay attention to spaces and , which are two polynomial function spaces of degree . For , subspace can be generated by subspace . We define dim and dim for some positive integer . Define the index set with . Assume that the family of basis functions of space is for , i.e.,

Thus, we have

Assume that is the linear orthogonal projection from onto , and a positive constant c exists such that

where denotes the linear subspace of , which is equipped with the norm . Here, is some linear operator acting from and c denotes a generic constant. For convergence of projection operator , the following condition is needed.

Assume that holds. Then, a positive constant c exists such that:

Some related lemmas are outlined in the following that are similar to the case using Tikhonov regularization in [22,24]. We omit their proofs.

Lemma 3.

If condition holds, then there exists a constant c independent of n such that

Proof.

See (3.10) of Lemma 3.1 in [24]. □

Lemma 4.

If condition holds, then there exists a constant c independent of n such that

Proof.

See Lemma 2.1 of [22]. □

We apply the multiscale Galerkin method to solve iterative Scheme (6) for the noise-free case, i.e., finding such that

holds, where denotes the number n depending on iteration l, is a given initial function, and the space is the selected initial space. From the definition of space , the approximate solution can be denoted as

Format (18) is unique to the multiscale Galerkin projection. This is one of our motivations for using multiscale Galerkin projection.

To show that the multiscale Galerkin method maintains the convergence of Landweber iteration, we establish the following property:

Proposition 3.

Proof.

From Conditions and , and iteration scheme (16), we can conclude that

for . Now, we use the induction method to prove Equation (20). Combined with Lemma 3 and Equation (19), we can conclude that

Therefore, (20) is established. □

From the above analysis, we know that if

with n depending on the iteration step l, then

Note that the larger the iteration step l, the larger the number of discrete layers n. Owing to the multiscale structure and orthogonality of the space sequence (cf. Chapter 4 of [20]), the multiscale Galerkin scheme is better suited to this iterative process than the general Galerkin scheme.

Theorem 1.

Proof.

From Lemma 2 and Proposition 3, we can obtain the result. □

4. Rates of Convergence and Algorithm

In this section, the compression multiscale Galerkin method for Landweber iteration is used to solve Problem (1) with noisy data . Convergence rates of this method are proved under certain conditions.

We apply the multiscale Galerkin method to solve the iterative Scheme (6) for the noisy case, i.e., finding such that

holds, where is a given initial function and . Note that the above number n does not depend on l. From the definition of space , the approximate solution can be denoted as

As Lemma 4 shows that most entries of are very small, these small entries can be neglected without affecting the overall accuracy of the approximation. To reduce the computational cost, the compression strategy is defined by

with and . Using the basis of space , the equivalent matrix form of operator is

with (cf. [22])

We replace with in Equation (16). Then, a fast discrete scheme for the iterative scheme (6) with noise is established, i.e.,

where is a given initial function and

To analyze the convergence rates of the compression multiscale Galerkin Scheme (23), we need the following estimates.

Lemma 5.

If Condition holds, then there exists a positive constant such that for any :

where .

Proof.

See Lemma 2.3 of [22]. We have . Combining this result and Lemma 3, the assertion is proved. □
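The idea behind the compression step of this section, namely that discarding very small matrix entries saves work at a controlled cost in accuracy, can be illustrated with a short Python sketch. Note the swapped-in technique: the sketch drops entries by a plain magnitude threshold, which is a simplification and not the compression strategy defined in this paper. The matrix `A`, its decay law, and the threshold `eps` are hypothetical.

```python
import numpy as np

def compress(A, eps):
    """Return a copy of A with every entry of magnitude below eps set to zero."""
    A_c = A.copy()
    A_c[np.abs(A_c) < eps] = 0.0
    return A_c

# Hypothetical matrix (assumption) whose entries decay away from the diagonal.
n = 64
i, j = np.indices((n, n))
A = 1.0 / (1.0 + np.abs(i - j)) ** 4

eps = 1e-4
A_c = compress(A, eps)

kept_fraction = np.count_nonzero(A_c) / A.size   # share of entries retained
spectral_error = np.linalg.norm(A - A_c, 2)      # perturbation in the 2-norm

print(f"kept {kept_fraction:.1%} of entries, error {spectral_error:.2e}")
```

Because every discarded entry is smaller than `eps`, the spectral-norm error is at most `n * eps`, while only a narrow band of entries around the diagonal is retained.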

To ensure the convergence rate of the approximate solution, we need some conditions: one is the stopping criterion [14,15,18], which is a generalized discrepancy principle; another is the smoothness condition of the initial function and -minimum-norm solution [5,12]; and the last is the discrete error control criterion [16,19,22].

A positive integer exists such that

where the discrete number n depends on the noise level for and satisfies

Let be a -minimum-norm solution of Problem (1) that satisfies

There exists a positive integer that satisfies the following condition,

with .

Condition is an a posteriori parameter selection criterion, which leads to the appropriate approximate solution. Condition is a necessary condition for the order-optimal convergence rates. Condition ensures that the projection error does not affect the iterative process.
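An a posteriori stopping rule of discrepancy type can be sketched in a few lines of Python. The sketch uses the classical form of the discrepancy principle, stopping at the first iteration whose residual norm is at most `tau * delta`; the precise condition used in this paper may differ. The names `tau` and `delta` and the residual values are assumptions made for illustration.

```python
def stopping_index(residual_norms, delta, tau):
    """Return the first index k with residual_norms[k] <= tau * delta.

    Returns None if the residual never falls below the threshold,
    meaning the iteration should continue beyond the recorded steps.
    """
    threshold = tau * delta
    for k, r in enumerate(residual_norms):
        if r <= threshold:
            return k
    return None

# Hypothetical residual history (assumption), noise level 0.1, tau = 1.5.
k_star = stopping_index([1.0, 0.5, 0.2, 0.05], delta=0.1, tau=1.5)
```

A value of `tau` closer to one stops the iteration later, which is why the admissible range of this parameter matters for the quality of the approximate solution.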

We next provide the proof of convergence rates for the compression multiscale Galerkin method of Landweber iteration under conditions (H1)–(H6).

Proposition 4.

For any , and , two positive numbers and exist satisfying

and

where and both depend on ν.

Proof.

The proof of the general case is provided in [4] and is not repeated here. □

Theorem 2.

Proof.

For convenience, let , , and . It follows from Condition and Equation (23) that

where . For , this yields the closed expression for the error

and consequently,

with . For , we next turn to the estimates of and . Using Equation (8) implies that

Thus,

Similarly, it follows with Lemma 5 and Equation (37) that

Now, we use induction to show that for all

hold with . Here,

For , Equation (40) is always true. We assume that Equation (40) holds for all with . Thus, we have to verify Equation (40) for . Combining and , we have [14,16]

Using Equation (37), we can conclude that for

By combining with Equation (9), it follows that

Thus, using Proposition 4, we can obtain

and

Combining Condition and Equation (41), it follows that

and

with . Therefore, it follows that

with . Similarly, we have

This means that a constant exists that depends on , , and , but is independent of , k and . Thus, we can obtain

and

Theorem 3.

If the conditions of Theorem 2 hold, then

where is a constant and depends on ν.

Proof.

From Theorem 2, we have

Combining the above and Proposition 4, we have

with depending on , but being independent of . It follows from (H1)–(H6), and Equations (9) and (45) that

Thus, by the interpolation inequality [3], we can conclude

with depending on .

From Equation (45) and Proposition 4, we have

with depending on . If , then the assertion holds. Otherwise, we apply (43) with to obtain:

Therefore, Equation (44) holds. □

For the convenience of numerical calculation, we summarize the above analysis as an algorithm consisting of three parts: constructing the space (Algorithm 1), updating the iteration (Algorithm 2), and the stopping criterion (Algorithm 3).

Algorithm 1 Constructing space

Step 1. Give initial function , perturbed level , and constants r, . Determine constants , , , and .

Step 2. Solve n from inequality

with .

Step 3. Construct multiscale bases for .

Algorithm 2 Updating iteration

Step 1. Compute vector , and matrix , .

Step 2. Suppose that vector has been obtained.

- Compute and .

- Solve from

for .

Algorithm 3 Stopping criterion

Step 1. Restore by bases and vector .

Step 2. Continue iteration until the approximate solution satisfies:

5. Numerical Experiment

This section provides a numerical example of nonlinear integral equations using the compression multiscale Galerkin method of Landweber iteration. The purpose was to verify the theoretical results.

Consider nonlinear integral equations [9] , where is defined as

Here, denotes the linear space of all real-valued square integrable functions, and denotes

where D represents first-order differential operators. The derivative of the operator F is

To complete numerical calculations, we provide the concrete construction of the sequence space (cf. [25]). For the space , we choose the linear basis function as

For space , we choose the linear basis function as

Next, we can construct all the basis functions of the subspace for through the following recursive formula [25],

Therefore, we can obtain a linear system (18) using these bases. Here, and .

Let be a -minimum-norm solution of . Thus, we have

with and . We choose initial function for . Therefore, we can obtain

We can conclude that the rates of convergence are . From the definition of linear operator , we know that

Thus, we take , and . Let and with:

for . From Equation (31), we know that the relaxation factor , then we choose . When constant , , and noise level , it follows from Equation (28) that .

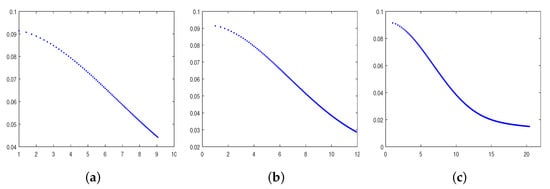

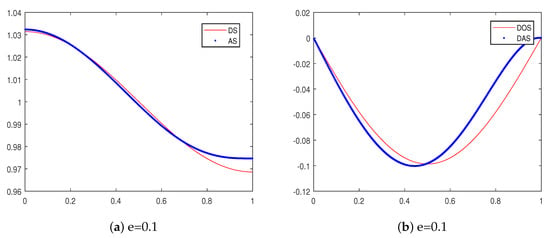

All our numerical experiments were conducted in MATLAB (Sun Yat-sen University, Guangzhou) on a computer with a 3.0 GHz CPU and 8 GB memory. The numerical results in Table 1 show that the compression multiscale Galerkin method for Landweber iteration can effectively solve nonlinear ill-posed integral equations. The results in Table 1 are consistent with the assertion of Theorem 3. Figure 1 shows the relationship between the error and the iteration step . The results in Figure 1 further verify Theorem 2. Figure 2 shows the closeness of and and of their generalized derivatives.
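One common way to compare measured errors with a predicted convergence rate is to fit the slope of the error against the noise level on a log-log scale. The Python sketch below does this with synthetic data that follow an exact power law; the values are generated for illustration only and are not the results reported in Table 1. The function name `observed_order` is hypothetical.

```python
import numpy as np

def observed_order(deltas, errors):
    """Least-squares slope of log(error) versus log(delta)."""
    slope, _intercept = np.polyfit(np.log(deltas), np.log(errors), 1)
    return slope

# Synthetic data (assumption): error = 2 * delta^0.5, not measured values.
deltas = np.array([1e-1, 1e-2, 1e-3])
errors = 2.0 * deltas ** 0.5

p = observed_order(deltas, errors)
print(f"observed order: {p:.3f}")
```

With real data the fitted slope would only approximate the theoretical exponent, and a slope that stays near the predicted value as the noise level decreases is what consistency with the theory would look like.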

Table 1.

Compression multiscale Galerkin method of Landweber iteration based on the discrepancy principle.

Figure 1.

(a) For e = 0.9, the trend in error ; (b) for e = 0.5, the trend in error ; (c) for e = 0.1, the trend in error . The horizontal axis is , the vertical axis is .

Figure 2.

(a) For e = 0.1, the approximate solutions (AS) and -minimum-norm solution (OS); (b) for e = 0.1, the generalized derivatives of (DAS) and (DOS).

Author Contributions

R.Z. designed the paper and completed the research; F.L. and X.L. proposed the compression strategy and completed some proofs. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially supported by the National Natural Science Foundation of China under grants 11571386, 11771464, and 11761010.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Scherzer, O.; Grasmair, M.; Grossauer, H.; Haltmeier, M.; Lenzen, F. Variational Methods in Imaging; Springer: New York, NY, USA, 2009; p. 297.

- Schuster, T.; Kaltenbacher, B.; Hofmann, B.; Kazimierski, K.S. Regularization Methods in Banach Spaces; Walter de Gruyter: Berlin, Germany, 2012; p. 323.

- Engl, H.W.; Neubauer, A.; Scherzer, O. Regularization of Inverse Problems; Kluwer Academic Publishers: Dordrecht, The Netherlands, 1996; p. 329.

- Kaltenbacher, B.; Hanke, M.; Neubauer, A. Iterative Regularization Methods for Nonlinear Ill-Posed Problems; Walter de Gruyter: Berlin, Germany; New York, NY, USA, 2008; p. 200.

- Jin, Q. On a regularized Levenberg-Marquardt method for solving nonlinear inverse problems. Numer. Math. 2010, 115, 229–259.

- Hochbruck, M.; Honig, M.; Ostermann, A. A convergence analysis of the exponential Euler iteration for nonlinear ill-posed problems. Inverse Probl. 2009, 25, 075009.

- Hanke, M. The regularizing Levenberg-Marquardt scheme of optimal order. J. Integral Equ. Appl. 2010, 2, 259–283.

- Ito, K.; Jin, B. Inverse Problems: Tikhonov Theory and Algorithms; World Scientific Publishing Co. Pte. Ltd.: Hackensack, NJ, USA, 2015; p. 330.

- Engl, H.W.; Kunisch, K.; Neubauer, A. Convergence rates of Tikhonov regularization of nonlinear ill-posed problems. Inverse Probl. 1989, 5, 523–540.

- Jin, Q. Applications of the modified discrepancy principle to Tikhonov regularization of nonlinear ill-posed problems. SIAM J. Numer. Anal. 1999, 36, 475–490.

- Hou, Z.; Jin, Q. Tikhonov regularization for nonlinear ill-posed problems. Nonlinear Anal. 1997, 28, 1799–1809.

- Scherzer, O.; Engl, H.W.; Kunisch, K. Optimal a-posteriori parameter choice for Tikhonov regularization for solving nonlinear ill-posed problems. SIAM J. Numer. Anal. 1993, 30, 1796–1838.

- Jin, Q.; Hou, Z. On an a posteriori parameter choice strategy for Tikhonov regularization of nonlinear ill-posed problems. Numer. Math. 1999, 83, 139–159.

- Hanke, M.; Neubauer, A.; Scherzer, O. A convergence analysis of the Landweber iteration for nonlinear ill-posed problems. Numer. Math. 1995, 72, 21–37.

- Hanke, M. A note on the nonlinear Landweber iteration. Numer. Funct. Anal. Optim. 2014, 35, 1500–1510.

- Jin, Q.; Amato, U. A Discrete Scheme of Landweber Iteration for Solving Nonlinear Ill-Posed Problems. J. Math. Anal. Appl. 2001, 253, 187–203.

- Neubauer, A. Some generalizations for Landweber iteration for nonlinear ill-posed problems in Hilbert scales. J. Inv. Ill-Posed Probl. 2016, 24, 393–406.

- Neubauer, A. A new gradient method for ill-posed problems. Numer. Funct. Anal. Optim. 2017, 39.

- Scherzer, O. A iterative multi-level algorithm for solving nonlinear ill-posed problems. Numer. Math. 1998, 80, 579–600.

- Chen, Z.; Micchelli, C.A.; Xu, Y. Multiscale Methods for Fredholm Integral Equations; Cambridge University Press: Cambridge, UK, 2015; p. 552.

- Dicken, V.; Maass, P. Wavelet-Galerkin methods for ill-posed problems. J. Inverse Ill-Posed Probl. 1996, 4, 203–221.

- Chen, Z.; Cheng, S.; Nelakanti, G.; Yang, H. A fast multiscale Galerkin method for the first kind ill-posed integral equations via Tikhonov regularization. Int. J. Comput. Math. 2010, 87, 565–582.

- Fang, W.; Wang, Y.; Xu, Y. An implementation of fast wavelet Galerkin methods for integral equations of the second kind. J. Sci. Comput. 2004, 20, 277–302.

- Luo, X.; Li, F.; Yang, S. A posteriori parameter choice strategy for fast multiscale methods solving ill-posed integral equations. Adv. Comput. Math. 2012, 36, 299–314.

- Chen, Z.; Wu, B.; Xu, Y. Multilevel Augmentation Methods for Differential Equations. Adv. Comput. Math. 2006, 24, 213–238.

- Thongchuay, W.; Puntip, T.; Maleewong, M. Multilevel augmentation method with wavelet bases for singularly perturbed problem. J. Math. Chem. 2013, 51, 2328–2339.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).