Abstract

In this paper, based on the iterative technique, a new explicit Magnus expansion is proposed for a nonlinear stochastic differential equation. One of the most important features of the explicit Magnus method is that it preserves the positivity of the solution of this equation. We study the explicit Magnus method in the case where the drift term satisfies only a one-sided Lipschitz condition, and discuss the truncated numerical algorithms. Numerical simulation results are also given to support the theoretical predictions.

1. Introduction

Stochastic differential equations (SDEs) have been widely applied to describe and understand random phenomena in different areas, such as biology, chemical reaction engineering, physics, and finance. Stochastic differential equations can also be applied in 5G wireless networks to address the problem of joint transmission power [1,2], and novel frameworks were shown in [3,4]. However, in most cases, explicit solutions of stochastic differential equations are not easy to obtain. Therefore, it is necessary and important to develop numerical algorithms for stochastic differential equations. There is by now a large literature on the numerical approximation of stochastic differential equations [5,6]. Approximations that aim to preserve the essential properties of the corresponding systems are receiving more and more attention. Examples include a conservative method for stochastic Hamiltonian systems with additive and multiplicative noise [7] and the stochastic Lie group integrators [8]. In this paper, we focus on the stochastic Magnus expansions [9].

As is well known, the deterministic Magnus expansion was first investigated by Magnus [10] in 1954. This topic was further pursued in [11,12]. With the development of stochastic differential equations, the stochastic Magnus expansion has received more and more attention for approximating solutions of linear and nonlinear stochastic differential equations [13,14].

The linear stochastic differential equation is as follows

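where $A$ and $B$ are matrices, $W$ is the standard Wiener process, and the solution is expressed by $Y(t) = \exp(\Omega(t))\,Y_0$, where $\Omega(t)$ can be represented by an infinite series $\Omega(t) = \sum_{k=1}^{\infty} \Omega_k(t)$, whose terms are linear combinations of multiple stochastic integrals.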

For example, the first two terms are as follows,

where $[X, Y] = XY - YX$ is the matrix commutator of X and Y.
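As a small illustration of the commutator that appears in the higher-order Magnus terms, the following Python sketch computes $[X, Y] = XY - YX$ for square matrices stored as nested lists. The helper names `matmul` and `commutator` and the example matrices are hypothetical and only serve the illustration.

```python
def matmul(X, Y):
    """Product of two matrices given as nested lists."""
    inner = len(Y)
    cols = len(Y[0])
    return [[sum(row[k] * Y[k][j] for k in range(inner)) for j in range(cols)]
            for row in X]


def commutator(X, Y):
    """Matrix commutator [X, Y] = XY - YX for square matrices of equal size."""
    XY = matmul(X, Y)
    YX = matmul(Y, X)
    n = len(XY)
    return [[XY[i][j] - YX[i][j] for j in range(n)] for i in range(n)]


# Two nilpotent matrices that do not commute.
X = [[0, 1], [0, 0]]
Y = [[0, 0], [1, 0]]
print(commutator(X, Y))

# Diagonal matrices always commute, so their commutator vanishes.
D1 = [[1, 0], [0, 2]]
D2 = [[3, 0], [0, 4]]
print(commutator(D1, D2))
```

When the commutator vanishes, all nested-commutator terms of the expansion drop out, which is why the commuting and non-commuting cases are treated separately later in the paper.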

In this paper, we design explicit stochastic Magnus expansions [9] for the nonlinear equation

where G denotes a matrix Lie group, and g is the corresponding Lie algebra of G. Equation (4) has many applications in the computation of highly oscillatory systems of stochastic differential equations, Hamiltonian dynamics, and financial engineering. It is therefore necessary to construct efficient numerical integrators for this equation. The general procedure for devising Magnus expansions for the stochastic Equation (4) is to apply Picard's iteration to a newly derived stochastic differential equation in the Lie algebra, which extends the deterministic case [11] to the stochastic setting. Lord, Malham and Wiese [14] proposed stochastic Magnus integrators using different techniques.

By applying the stochastic Magnus methods, we can recapture some of the main features of the stochastic differential Equation (4). When Equation (4) is used to describe an asset pricing model in finance, the positivity of the solution is a very important property to maintain in the numerical simulation. This task can be accomplished by the nonlinear Magnus methods. Many works [15,16] have dealt with this issue using balancing and dominating techniques. However, the Magnus methods, which are based on Lie group methods, are essentially different from these approaches. If Equation (4) has an almost surely exponentially stable solution, it is necessary to construct numerical methods that preserve this property. Numerical methods that preserve exponential stability usually exhibit good long-time numerical behavior. We prove that the explicit Magnus methods reproduce the almost sure exponential stability of the stochastic differential Equation (4) under a one-sided Lipschitz condition. Numerical simulation results are also given to support the theoretical predictions.

This paper is organized as follows. We introduce and discuss the nonlinear Magnus expansion in Section 2. The truncated numerical algorithms are discussed in Section 3. Several numerical applications and experiments then support the theoretical analysis. In Section 4, we present applications to highly oscillatory nonlinear stochastic differential equations. Section 5 presents simulations of dynamic asset pricing models. In Section 6, we show that the explicit Magnus simulation preserves almost sure stability, and numerical experiments are presented to support the analysis.

Notation

In this paper, is the complete probability space with the filtration . is the scalar Brownian motion defined on the probability space. denotes the Stratonovich integral and denotes the Itô integral. is the matrix commutator of X and Y. A and B are matrices and smooth in the domain, is the standard Wiener process and .

2. The Nonlinear Stochastic Magnus Expansion

In this section, we consider the stochastic differential Equation (4).

Here, A and B have uniformly bounded partial derivatives and satisfy global Lipschitz conditions. Therefore, Equation (4) has a unique strong solution [17].

Equation (7) holds since the integral is in the Stratonovich sense.

According to Equation (8), we obtain

It is not difficult to check that

and

where and for .

Then it is true that

Finally, we get

Then, applying Picard's iteration to (17), we obtain

In order to get explicit numerical methods that can be implemented on a computer, we need to truncate the infinite series in (18). The truncated iterates reproduce the expansion of the solution only up to a certain order in the mean-square sense.

For example, if , we obtain

From

we can get

Finally, we devise the following general Magnus expansion

Obviously, Equation (21) consists of a linear combination of multiple stochastic integrals of nested commutators. It is easy to prove that succeeds in reproducing the sum of the series with the Magnus expansion. This scheme is called an explicit stochastic Magnus expansion for the nonlinear stochastic differential equation.

Remark 1.

The general Magnus expansion is

Comparing the Taylor expansion of the with the expansion of [12], the conclusion is proven.

3. Numerical Schemes

In this section, we present a new way of constructing efficient numerical methods based on the nonlinear stochastic Magnus expansion. It should be mentioned that highly efficient schemes always involve multiple stochastic integrals. In most cases, however, only the half-order approximation of (21) can be evaluated exactly. To obtain a higher-order integrator, more complicated multiple stochastic integrals, which are hard to approximate, must be included.

We will investigate the schemes of mean-square order 1/2 and 1 concretely, and choose quadrature rules with equispaced points along the integration interval.

3.1. Methods of Order 1/2

When , the expansion (21) turns into

Using the Taylor formula, we can get the expansion of the solution

and the approximate solution can be expanded in the following form

where,

It is easy to check that

According to the Milstein mean-square convergence theorem [18], the strong convergence order is 1/2.

Remark 2.

If cannot be evaluated exactly, we need to approximate it with a quadrature rule of order .

For example, using the Euler method (see reference [19]), we can get

Note that other numerical methods can also be used to approximate (24). The strong convergence order is then 1/2.
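To make the structure of such a lowest-order step concrete, the following minimal Python sketch assumes a scalar equation of the form dy = a(t, y) y dt + b(t, y) y ∘ dW and freezes both coefficients at the left endpoint of each step, which corresponds to an Euler-type quadrature. The function names, the coefficient functions `a` and `b`, and all parameter values are hypothetical and chosen only for illustration; the sketch is not claimed to reproduce the scheme above term by term.

```python
import math
import random


def magnus_euler_step(y, t, h, dW, a, b):
    """One exponential step y -> y * exp(a(t, y) * h + b(t, y) * dW).

    The coefficients are frozen at the left endpoint (t, y), so the update is
    explicit. The exponential factor is positive, so the sign of y is kept.
    """
    return y * math.exp(a(t, y) * h + b(t, y) * dW)


def simulate(y0, T, n_steps, a, b, rng):
    """Simulate one path on [0, T] with n_steps equispaced steps."""
    h = T / n_steps
    t, y = 0.0, y0
    path = [y]
    for _ in range(n_steps):
        dW = rng.gauss(0.0, math.sqrt(h))  # Wiener increment with variance h
        y = magnus_euler_step(y, t, h, dW, a, b)
        t += h
        path.append(y)
    return path


# Hypothetical coefficients, used only to exercise the step.
a = lambda t, y: -1.0 - y * y
b = lambda t, y: 0.5

path = simulate(1.0, 1.0, 100, a, b, random.Random(0))
print(min(path) > 0.0)
```

Because every step multiplies the current value by a positive factor, a positive initial value stays positive for any stepsize in this sketch.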

3.2. Methods of Order 1

The proof of the convergence order is the same as for the method of order 1/2.

Remark 3.

Note that if (28) can be computed exactly, all that is required is to replace (29) with a quadrature of order 1. Using the stochastic Taylor expansion, we approximate in the following way

As a matter of fact, there is no need to compute (28) or (29) exactly. If we approximate (28) or (29) with the methods of order 1,

the convergence order will also be 1.

4. Numerical Experiment for the Highly Oscillatory Nonlinear Stochastic Differential Equations

We consider the following stochastic system

where A and B are matrices and W is the Wiener process. It is supposed that the solution of (31) oscillates rapidly.

If A and B are commutative, in order to compute the value of , a new is considered,

and

where , .

We can consider as a correction of the solution provided by . For this reason, if Equation (34) is solved by the method of the nonlinear Magnus expansion, the errors corresponding will be much smaller than the previous algorithms [13,14].

When A and B do not commute, we have [20]

and

with

here,

To illustrate the main feature of the nonlinear modified Magnus expansion, we consider a system

where W is the standard Wiener process.

If , Equation (42) becomes

,

It is easy to check that A and B do not commute. Using the scheme (37), we obtain the following scheme

and

where , , are computed by (39), (40), (42) and .

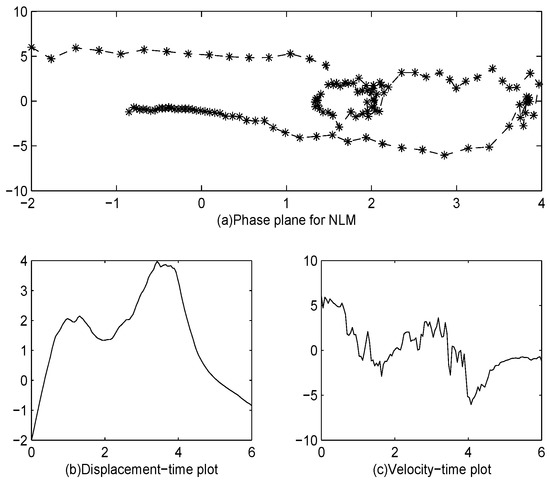

In Figure 1, we can see that the two points initially attract the noisy trajectories, and after some time, the trajectories switch to the other point, which coincides with the tunneling phenomenon described in [21].

Figure 1.

The numerical scheme for the stochastic Duffing-Van der Pol oscillator equation with the nonlinear Magnus method (NLM), . The stepsize is .

5. Application to the Stochastic Differential Equations with Boundary Conditions

In this section, we will deal with the Itô-type stochastic differential equation

we assume Equation (46) is well-defined with a certain boundary condition, that is, lies in the domain .

There are many works on the efficient approximate solution of (46) [15,16,22]. Most of them use a balancing or dominating technique to keep the numerical solution inside the domain. In this paper, the proposed explicit nonlinear Magnus methods based on the stochastic Lie group are efficient at approximating the solutions of the stochastic differential Equation (46) with unattainable boundary conditions. Our methods differ substantially from the methods proposed in [23].

In the following, we will approximate two types of financial models using the nonlinear stochastic Magnus methods.

Firstly, we study the financial model defined as:

This model has been widely used in financial engineering. For example, when , the solution is strictly positive. As is well known, the traditional Euler method fails to preserve the positivity of the solution. Several methods, such as the balanced implicit method (BIM) [22], have been proposed to address this. The explicit or implicit Milstein method needs stepsize control [24]. In the following, we show that the nonlinear Magnus methods preserve positivity independent of the stepsize.
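The loss of positivity under the Euler method can be seen in a single step. The sketch below is an illustration under stated assumptions, not the scheme of this paper: it takes the Cox, Ingersoll, and Ross equation dy = κ(θ − y) dt + σ√y dW as a representative model, with hypothetical parameters `KAPPA`, `THETA`, `SIGMA` that satisfy 2κθ ≥ σ², and compares an Euler step with a frozen-coefficient exponential step applied to the Stratonovich form, whose drift is κ(θ − y) − σ²/4.

```python
import math

# Hypothetical parameters with 2 * KAPPA * THETA >= SIGMA**2.
KAPPA, THETA, SIGMA = 2.0, 0.5, 1.0


def euler_step(y, h, dW):
    """Euler step for the Ito form dy = kappa*(theta - y) dt + sigma*sqrt(y) dW."""
    return y + KAPPA * (THETA - y) * h + SIGMA * math.sqrt(max(y, 0.0)) * dW


def exponential_step(y, h, dW):
    """Frozen-coefficient exponential step for the Stratonovich form.

    The Stratonovich equation is written as dy = A(y) y dt + B(y) y o dW with
    A(y) = (kappa*theta - sigma^2/4) / y - kappa and B(y) = sigma / sqrt(y).
    Requires y > 0; the result is again positive.
    """
    A = (KAPPA * THETA - SIGMA ** 2 / 4.0) / y - KAPPA
    B = SIGMA / math.sqrt(y)
    return y * math.exp(A * h + B * dW)


# A small state and a three-standard-deviation downward increment for h = 0.01.
y, h, dW = 0.01, 0.01, -0.3
print(euler_step(y, h, dW))        # negative
print(exponential_step(y, h, dW))  # positive
```

The Euler update adds a Gaussian increment, so it can cross zero whenever the increment is large enough, whereas the exponential update multiplies by a positive factor.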

For Equation (47), its equivalent Stratonovich form is defined as:

The system (49) is simulated with the nonlinear stochastic Magnus method and (50) is approximated with the Euler method.

Combining the nonlinear Magnus method (27) and the Euler method, we obtain a one-step method on the interval

In the numerical experiments, we use the scheme (51) to simulate the financial models. When , it is the famous interest rate model of Cox, Ingersoll, and Ross (for more detail, see [16]).

Table 1 illustrates that both the Euler and the Milstein methods produce a certain percentage of negative paths. When the stepsize decreases, the number of negative paths also decreases. However, the nonlinear Magnus method (NLM) is as good as the balanced Milstein method (BMM) at preserving the positivity of the solution, independent of the time interval and the stepsize.
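A count of negative paths of this kind can be sketched as follows. The code is only an illustration: it assumes the Cox, Ingersoll, and Ross equation dy = κ(θ − y) dt + σ√y dW with hypothetical default parameters, simulates Euler paths, and reports the fraction of paths that become negative at least once. It does not reproduce the values in Table 1.

```python
import math
import random


def negative_path_fraction(h, T=1.0, y0=0.05, kappa=2.0, theta=0.5, sigma=1.0,
                           n_paths=1500, seed=0):
    """Fraction of Euler paths on [0, T] that take a negative value."""
    rng = random.Random(seed)
    n_steps = int(round(T / h))
    negative = 0
    for _ in range(n_paths):
        y = y0
        for _ in range(n_steps):
            dW = rng.gauss(0.0, math.sqrt(h))
            # Euler step; the square root is clipped so that the step is defined.
            y = y + kappa * (theta - y) * h + sigma * math.sqrt(max(y, 0.0)) * dW
            if y < 0.0:
                negative += 1
                break  # one negative value is enough to flag the path
    return negative / n_paths


for h in (0.1, 0.05, 0.01):
    print(h, negative_path_fraction(h))
```

Running the same loop with a positivity-preserving update in place of the Euler step would report a fraction of zero by construction.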

Table 1.

Numerical simulation for the equation , the time interval: , stepsize: , weight functions of balanced Milstein method (BMM): , the number of simulated paths: 1500.

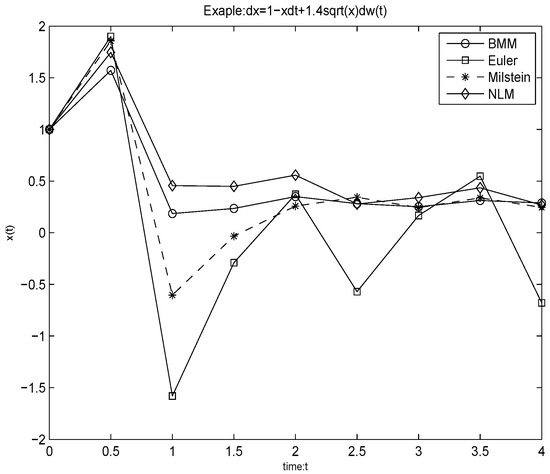

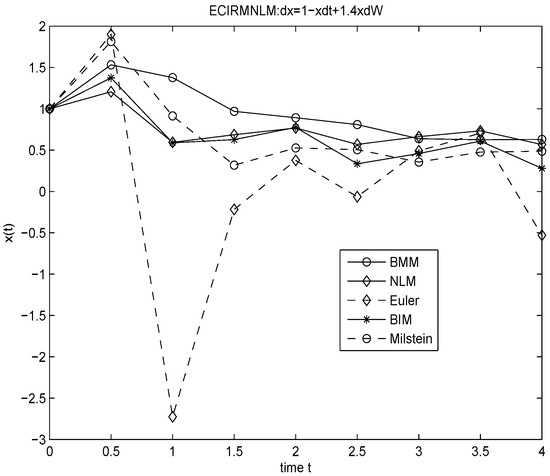

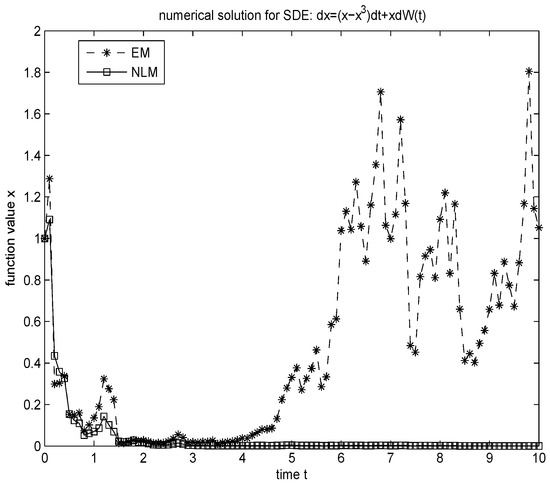

In Figure 2 and Figure 3, we present the numerical simulations for two financial equations. We can see that both the Euler and the Milstein methods fail to preserve the positivity. However, the NLM is as good as the BMM and the BIM at preserving the positivity of the solution.

Figure 2.

Numerical simulation for the equation: , the time interval: [0,4], the stepsize: , .

Figure 3.

Numerical simulation for the equation: , the time interval: [0,4], the stepsize: , .

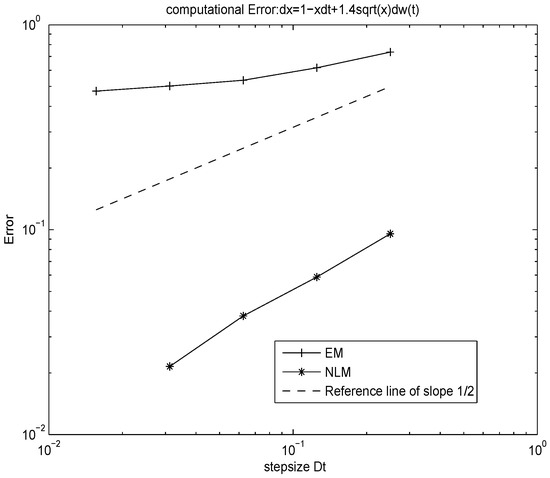

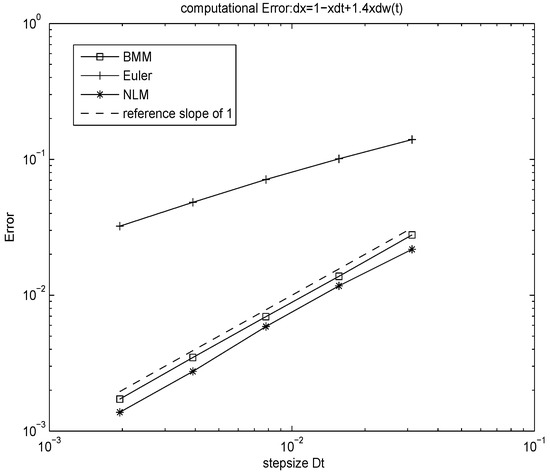

In Figure 4, we see that the explicit NLM attains the mean-square order predicted by the analysis in Section 3. In Figure 5, we can see clearly that the simulation is of order 1 in the mean-square sense for the linear model, which is the same order as that of the BMM.
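An observed convergence order of this kind is usually read off as the slope of the error against the stepsize on a log-log scale. The following sketch fits that slope by least squares. The function name `estimate_order` is hypothetical, and the error values are synthetic numbers generated from a known power law, not data from Figures 4 and 5.

```python
import math


def estimate_order(stepsizes, errors):
    """Least-squares slope of log(error) against log(stepsize)."""
    xs = [math.log(h) for h in stepsizes]
    ys = [math.log(e) for e in errors]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


# Synthetic errors that behave like C * h**0.5 with C = 3 (illustration only).
hs = [2.0 ** -k for k in range(4, 9)]
synthetic_errors = [3.0 * h ** 0.5 for h in hs]
print(round(estimate_order(hs, synthetic_errors), 6))
```

With measured mean-square errors in place of the synthetic list, the returned slope estimates the strong order of the method over the tested range of stepsizes.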

Figure 4.

-error for the equation: . The time interval: , the number of simulated paths: 1000.

Figure 5.

-error for the equation: . The time interval: , the number of simulated paths: 1000.

6. Application to the Nonlinear Itô Scalar Stochastic Differential Equations

In this part, we will focus on the nonlinear Itô scalar stochastic differential equation

where is independent of y. The Equation (52) satisfies the following conditions

and

For convenience, we transform it into its Stratonovich form

Proof.

According to the Itô Taylor formula, we have

It is easy to prove that

By the use of the condition (54), we obtain

Let , (56) is proven.

It means that the solution of the stochastic differential equation is almost surely stable.

In the following, we will approximate the model using the nonlinear stochastic Magnus methods.

Proof.

Based on (63), we can get

Then the theorem is proven.

This shows that the proposed Magnus method preserves the exponential stability independently of the time-step size.

Cubic Stochastic Differential Equations

For the cubic stochastic differential equation with the form

we can get [25]

Equation (66) implies that the solution is asymptotically stable. It is well known that the EM method fails to preserve this property (see reference [25]).

Theorem 3.

For any stepsize, the scheme (67) satisfies

Equation (68) is thus obtained, which proves almost sure exponential stability independent of the time-step size.
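The stepsize independence can be checked pathwise in a small experiment. The sketch below rests on two assumptions that are not recoverable from the text here: that the cubic test equation is dy = (y − y³) dt + 2y dW, as in the well-known example of [25], and that the numerical scheme is a frozen-coefficient exponential update of its Stratonovich form dy = (−1 − y²) y dt + 2y ∘ dW. Under these assumptions, log|y_N| = log|y_0| + Σ(−1 − y_n²)h + 2W(T) ≤ log|y_0| − T + 2W(T) for every stepsize h, so (1/T) log|y_N| is bounded by −1 plus terms that vanish as T grows. The function name `nlm_log_path` is hypothetical.

```python
import math
import random


def nlm_log_path(y0, T, h, seed=0):
    """Return (log|y_N|, W(T)) for y_{n+1} = y_n * exp((-1 - y_n**2) * h + 2 * dW_n).

    The recursion is carried out for log|y| so that very small values of |y|
    do not underflow.
    """
    rng = random.Random(seed)
    n_steps = int(round(T / h))
    log_y = math.log(abs(y0))
    W = 0.0
    for _ in range(n_steps):
        dW = rng.gauss(0.0, math.sqrt(h))
        # y_n**2 = exp(2 * log|y_n|); the drift exponent is always below -h.
        log_y += (-1.0 - math.exp(2.0 * log_y)) * h + 2.0 * dW
        W += dW
    return log_y, W


y0, T = 10.0, 10.0
for h in (0.5, 0.1, 0.01):
    log_y, W = nlm_log_path(y0, T, h)
    bound = math.log(y0) - T + 2.0 * W
    print(h, log_y <= bound)
```

The inequality holds for coarse and fine stepsizes alike, because each step contributes at most −h to the drift part of the exponent regardless of h.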

In Figure 6, a single trajectory is simulated using the Euler method and the proposed NLM, respectively. One can see that the Euler method fails to preserve the asymptotic stability. However, the NLM can exactly preserve this property.

Figure 6.

Numerical simulation for the equation: . The time interval: [0,10], the stepsize: , the initial value: .

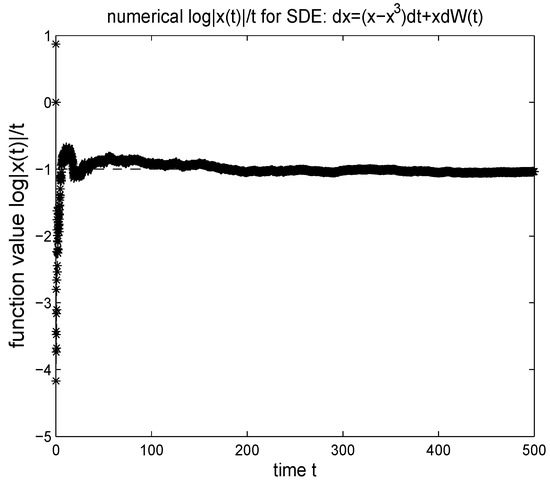

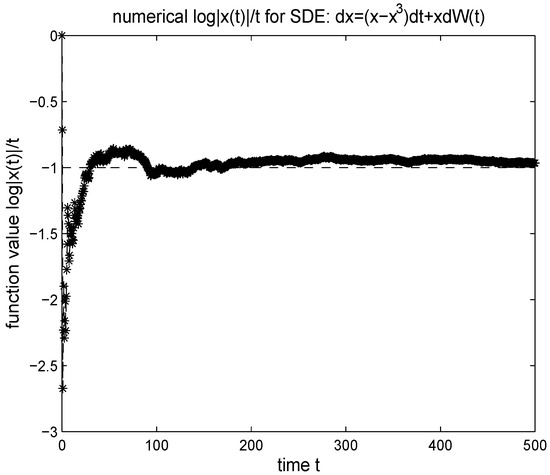

In Figure 7 and Figure 8, we simulate the function using the NLM. As proved in Theorem 3, the property (68) can be exactly preserved independent of the stepsize.

Figure 7.

Numerical simulation of for the equation: . The time-interval: [0,500], the stepsize: , the initial value: .

Figure 8.

Numerical simulation of for the stochastic differential equation: . The time interval: [0,500], the stepsize: , the initial value .

7. Conclusions

In this paper, we introduce the nonlinear Magnus expansion and present a new way of constructing numerical Magnus methods. Based on the new Magnus method, we discuss the truncated numerical algorithms, and prove that the errors of the corresponding approximations are smaller than those of the previous algorithms. Several numerical applications and experiments support the theoretical analysis. We believe that the new Magnus method can also be applied to semi-discretized stochastic partial differential equations such as stochastic Korteweg–de Vries equations.

Author Contributions

Formal analysis, X.G.; methodology, X.W.; software, X.G.; review and editing, P.Y. All authors have read and agreed to the published version of the manuscript.

Funding

The work was supported by the National Natural Science Foundation of China [Number 11301392, 11501406, 71601119], the “Chenguang” Program supported by Shanghai Education Development Foundation and Shanghai Municipal Education Commission (16CG53), and the Tianjin Municipal University Science and Technology Development Fund Project [Number 20140519].

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tsiropoulou, E.E.; Vamvakas, P.; Papavassiliou, S. Joint utility-based uplink power and rate allocation in wireless networks: A non-cooperative game theoretic framework. Phys. Commun-Amst. 2013, 9, 299–307.

- Rahman, A.; Mohammad, J.; AbdelRaheem, M.; MacKenzie, A.B. Stochastic resource allocation in opportunistic LTE-A networks with heterogeneous self-interference cancellation capabilities. In Proceedings of the 2015 IEEE International Symposium on Dynamic Spectrum Access Networks (DySPAN), Stockholm, Sweden, 29 September–2 October 2015.

- Tsiropoulou, E.E.; Katsinis, G.K.; Papavassiliou, S. Utility-based power control via convex pricing for the uplink in CDMA wireless networks. In Proceedings of the 2010 European Wireless Conference, Lucca, Italy, 12–15 April 2010.

- Singhal, C.; De, S.; Trestian, R.; Muntean, G.M. Joint Optimization of User-Experience and Energy-Efficiency in Wireless Multimedia Broadcast. IEEE Trans. Mob. Comput. 2014, 13, 1522–1535.

- Misawa, T. Numerical integration of stochastic differential equations by composition methods. Rims Kokyuroku 2000, 1180, 166–190.

- Burkardt, J.; Gunzburger, M.D.; Webster, C. Reduced order modeling of some nonlinear stochastic partial differential equations. Int. J. Numer. Anal. Mod. 2007, 4, 368–391.

- Milstein, G.N.; Repin, M.; Tretyakov, M.V. Numerical methods for stochastic systems preserving symplectic structure. SIAM J. Numer. Anal. 2002, 30, 2066–2088.

- Malham, J.A.; Wiese, A. Stochastic Lie group integrators. SIAM J. Sci. Comput. 2008, 30, 597–617.

- Chuluunbaatar, O.; Derbov, V.L.; Galtbayar, A. Explicit Magnus expansions for solving the time-dependent Schrödinger equation. J. Phys. A-Math. Theor. 2008, 41, 203–295.

- Magnus, W. On the exponential solution of differential equations for a linear operator. Comm. Pure Appl. Math. 1954, 7, 649–673.

- Casas, F.; Iserles, A. Explicit Magnus expansions for nonlinear equations. J. Phys. A Math. Gen. 2006, 39, 5445–5461.

- Iserles, A.; Nørsett, S.P. On the solution of linear differential equations in Lie groups. Philos. Trans. Royal Soc. A 1999, 357, 983–1019.

- Burrage, K.; Burrage, P.M. High strong order methods for non-commutative stochastic ordinary differential equation systems and the Magnus formula. Phys. D 1999, 33, 34–48.

- Lord, G.; Malham, J.A.; Wiese, A. Efficient strong integrators for linear stochastic systems. SIAM J. Numer. Anal. 2008, 46, 2892–2919.

- Kahl, C.; Schurz, H. Balanced Milstein Methods for Ordinary SDEs. Monte Carlo Methods Appl. 2006, 12, 143–170.

- Moro, E.; Schurz, H. Boundary preserving semianalytic numerical algorithm for stochastic differential equations. SIAM J. Sci. Comput. 2017, 29, 1525–1540.

- Donez, N.P.; Yurchenko, I.V.; Yasynskyy, V.K. Mean Square Behavior of the Strong Solution of a Linear non-Autonomous Stochastic Partial Differential Equation with Markov Parameters. Cybernet. Syst. 2014, 50, 930–939.

- Jinran, Y.; Siqing, G. Stability of the drift-implicit and double-implicit Milstein schemes for nonlinear SDEs. AMC 2018, 339, 294–301.

- Kamont, Z.; Nadolski, A. Generalized Euler method for nonlinear first order partial differential functional equations. Demonstr. Math. 2018, 38, 977–996.

- Połtowicz, J.; Tabor, E.; Pamin, K.; Haber, J. Effect of substituents in the manganese oxo porphyrins catalyzed oxidation of cyclooctane with molecular oxygen. Inorg. Chem. Commun. 2005, 8, 1125–1127.

- Kloeden, P.E.; Platen, E. Numerical Solution of Stochastic Differential Equations; Springer-Verlag: New York, NY, USA, 1998.

- Milstein, G.N.; Platen, E.; Schurz, H. Balanced implicit methods for stiff stochastic systems. SIAM J. Numer. Anal. 1998, 35, 1010–1019.

- Misawa, T.A. Lie algebraic approach to numerical integration of stochastic differential equations. SIAM J. Sci. Comput. 2001, 23, 866–890.

- Kahl, C. Positive Numerical Integration of Stochastic Differential Equations. Master’s Thesis, Department of Mathematics, University of Wuppertal, Wuppertal, Germany, 2004.

- Higham, D.J.; Mao, X.R.; Yuan, C.G. Almost sure and moment exponential stability in the numerical simulation of stochastic differential equations. SIAM J. Numer. Anal. 2014, 45, 592–609.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).