Abstract

One of the most popular applications of recommender systems is movie recommendation, in which a system suggests a few movies to a user based on the user's preferences. Although abundant data on movies are available, such as their genres, directors and actors, little information is available on a new user, which makes it hard for the recommender system to suggest what might interest that user. Accordingly, several recommendation services explicitly ask users to evaluate a certain number of movies, and these evaluations are then used to create a user profile in the system. In general, a better user profile can be created if the user evaluates many movies at the beginning. However, most users do not want to evaluate many movies when they join a service. This motivates us to examine the minimum number of inputs needed to reliably determine user preferences. We call this the magic number for determining user preferences. A recommender system based on this magic number can reduce user inconvenience while still making reliable suggestions. Using user-based, item-based and content-based filtering, we calculate the magic number by comparing the accuracy obtained with different numbers of inputs for predicting user preferences.

1. Introduction

The Internet environment has changed in recent years with the development of wireless network technology and the spread of mobile devices. In the past, Internet users mostly accessed content created by content providers. Today, users not only receive content but also access, modify and even produce new content, and they often consume content through mobile devices and wireless networks. On YouTube, for example, users can upload their own videos, watch media provided by other users, evaluate content and add comments. These user activities are all valuable for providing better service via user profiling [1] and content evaluation [2]. In recent years, both the content uploaded to the Internet by users and the content provided by conventional providers have steadily increased. This raises a challenging problem: how can users effectively find what they really want among the vast amount of available content?

Users can rely on recommendation systems to address this problem. A recommendation system provides content, such as movies, books or songs, to users using various methods, including clustering and profiling of content and users [3,4,5,6,7]. In most recommendation systems, users can expect better recommendations when they provide their own preferences or activity histories for specific items. The recommendation systems then identify similar users or items based on user information, such as preferences or purchase histories, and suggest new items based on the data of similar users or on items clustered by the system [8,9,10]. However, all recommendation systems share a common problem: there may not be enough information to make a proper recommendation. This is called the cold-start problem [11,12]. For instance, suppose that a new user joins a movie recommendation system. User activity is invaluable for predicting the user's preferences, but little activity is on record for a new user, so the recommendation system cannot provide customized suggestions. To alleviate this problem, some web and mobile movie recommendation services, such as Rotten Tomatoes or Netflix, explicitly require new users to provide information such as gender, age, location or preferred genres. These service providers also ask new users to evaluate movies and thereby infer their preferences. Table 1 shows the number of movies that some popular services ask new users to evaluate.

Table 1.

Number of movies that a new user has to evaluate.

As shown in Table 1, each site requires at least ten ratings as initial inputs from new users. This suggests a consensus among these services that a certain number of initial inputs is needed to establish meaningful clusters and proper recommendations. However, there is no solid evidence that ten ratings guarantee good recommendations. This motivates the following question: how many inputs are necessary for proper movie recommendations? Note that making the initial inputs is a burden on new users, many of whom want to spend as little time as possible on the initial process, so it is worth asking whether ten ratings are really necessary. Another common belief is that recommendation systems provide better suggestions when more initial inputs are available; statistically speaking, the more initial inputs exist, the more effectively the system can analyze user preferences when it recommends movies.

Herein, we examine whether requiring more than ten ratings actually guarantees reliable recommendations. Moreover, we aim to find the minimum number of inputs that guarantees reliable recommendations. We expect that using this minimum number will guarantee a certain precision in the recommendations while easing the burden on new users. We define the number of initial inputs that satisfies these conditions as the magic number for movie recommendations. If the magic number can be identified, user inconvenience can be reduced while a certain level of system efficiency is maintained.

Approaches to recommender systems are broadly classified as memory-based or model-based [6,8,10,17]. Memory-based approaches select similar users or items and use them to predict users' preferences [6,10], whereas model-based approaches predict preferences by applying matrix factorization techniques to a user–item matrix [8,17]. In memory-based approaches, similar users are selected based on their preferences, while in model-based approaches such as matrix factorization, the entire matrix is reconstructed. Memory-based approaches allow more intuitive comparisons, because the number of user preference inputs directly determines the dimension of the vectors used to select similar users: as the number of inputs grows, the dimension of the other users' vectors used to compute similarity grows with it. Thus, we use the more intuitive memory-based methods in this paper to observe the consequences of changing the user input size.

The rest of this paper is organized as follows. Section 2 briefly reviews related research on the cold-start problem in recommendation systems. Section 3 introduces the magic number and computes it for movie recommendations, and Section 4 verifies this calculation. Finally, Section 5 presents our conclusions.

2. Related Work

Research on recommendation systems has been quite active recently, with researchers approaching the recommendation accuracy and cold-start problems from various points of view [18,19]. Research on improving accuracy has included collaborative filtering based on clustering methods [6], the roles of users [20] and matrix factorization [17]. The cold-start problem usually occurs on both the user side and the item side of recommendation systems [21]. Previous work has attempted to alleviate these problems by relying on a user's reputation in a social network group [1,22,23], user classification based on demographic data [19,24,25], a Boltzmann machine in a neural network [26] or item attributes such as categories, creators and actors [27,28]. The magic number can be regarded as one way to alleviate the user-side cold-start problem. Thus, we briefly summarize recent studies on this problem in Table 2.

Table 2.

Studies on the cold-start problem.

All the reported methods in the studies listed in Table 2 alleviated the user-side cold-start problem by machine learning algorithms, tagging or the use of item attributes. Nevertheless, several websites, such as MovieLens and Rotten Tomatoes, still require initial inputs from users (recall Table 1), since user inputs are one of the most important features in resolving the cold-start problem. This leads us to investigate the minimum number of user inputs that can guarantee a certain level of reliability of the results.

3. Materials and Methods

3.1. Computing the Magic Number

Most personalized recommendation systems make suggestions based on user preferences [38,39] and often ask users to choose a certain number of items before suggesting new items. This procedure is the first step a new user takes in building a user profile to receive better recommendations. A recommendation system provides better suggestions if more initial inputs are available from the user. However, it is often difficult to ask users for many such initial inputs, because they do not want to spend much time or effort on this initial procedure. This leads us to examine the smallest number of initial items that can guarantee a certain degree of precision in the recommendations. We call this number the magic number.

In particular, we consider a movie recommendation system, since it is one of the most common recommendation systems based on user profiles and provides personalized movie recommendations. Herein, we use the GroupLens [40] movie database in two versions: the 1 M and 10 M databases. Each database has three sub-datasets: movies, users and ratings. The database contains 18 different genres, and each movie is associated with one or more of them. Table 3 and Table 4 summarize the GroupLens database.

Table 3.

GroupLens movie database.

Table 4.

The 18 genres in the GroupLens database.

The 1 M and 10 M databases differ in the numbers of users and movies: the 1 M database contains 3900 movies and 6040 users, whereas the 10 M database contains 10,681 movies and 69,898 users. In both databases, each user has rated at least twenty movies.

We test recommendation systems based on user-based, item-based and content-based filtering. In user-based and item-based filtering, we use the Pearson correlation coefficient and cosine similarity to select similar users or items [6,10]. In memory-based collaborative filtering, the accuracy of the recommendations depends largely on how the group of similar users is selected, and the Pearson correlation coefficient and cosine similarity are the conventional measures for calculating similarities between users or items. For this reason, we use these two similarity measures in our analysis to determine the magic number. We run these procedures with different numbers of users, items and thresholds to identify the magic number. We also apply the database to a recommendation system based on genre correlations as content-based filtering [21]; this system takes genre combinations as inputs, so new users only need to select their preferred genres to receive movie recommendations. In content-based filtering, we compute the error rates and the Pearson correlation coefficient based on the genres extracted from different numbers of movies randomly selected from each user's ratings to identify the magic number. We apply the 1 M database to user- and item-based filtering and the 10 M database to content-based filtering.

Our tests for user- and item-based filtering use data only from users who have made at least fifty-one ratings, and our tests for content-based filtering use users who have made at least fifty ratings. If we were to use all the users in the database, we would have to limit the maximum number of inputs to twenty ratings, since some users have rated only twenty movies. To justify the accuracy of the magic number, our tests must cover a large range of input sizes; hence the minimum of fifty-one or fifty ratings.

User- and item-based filtering follow similar processes, whereas content-based filtering uses different steps. In user- and item-based filtering, fifty ratings serve as training data and one rating serves as a probe for computing the magic number, whereas in content-based filtering the fifty ratings alone are enough to compute the magic number.
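The user filter and the fifty-plus-one split described above can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes the `UserID::MovieID::Rating::Timestamp` line layout of the GroupLens (MovieLens) 1 M `ratings.dat` file and assumes that each user's ratings are ordered by timestamp, so that the fifty-first rating becomes the probe; the paper does not state the ordering. The function names `parse_ratings` and `split_users` are hypothetical.

```python
from collections import defaultdict


def parse_ratings(lines):
    """Group 'UserID::MovieID::Rating::Timestamp' lines by user."""
    ratings = defaultdict(list)  # user id -> [(timestamp, movie id, rating), ...]
    for line in lines:
        line = line.strip()
        if not line:
            continue
        user, movie, rating, timestamp = line.split("::")
        ratings[int(user)].append((int(timestamp), int(movie), float(rating)))
    return ratings


def split_users(ratings, min_ratings=51, n_train=50):
    """Keep users with at least `min_ratings` ratings; return, per user,
    `n_train` training ratings and the next rating as the probe."""
    selected = {}
    for user, rows in ratings.items():
        if len(rows) < min_ratings:
            continue  # too few ratings to supply 50 inputs plus a probe
        rows = sorted(rows)  # assumption: chronological order
        train = [(movie, rating) for _, movie, rating in rows[:n_train]]
        _, probe_movie, probe_rating = rows[n_train]
        selected[user] = (train, (probe_movie, probe_rating))
    return selected


# Toy data: user 1 has 51 ratings, user 2 only 20.
lines = [f"1::{m}::{m % 5 + 1}::{1000 + m}" for m in range(1, 52)]
lines += [f"2::{m}::3::{2000 + m}" for m in range(1, 21)]
selected = split_users(parse_ratings(lines))
```

With the toy lines, only user 1 passes the filter; user 2, like any user with between twenty and fifty ratings, is excluded.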

3.2. Experiments for Identifying the Magic Number Based on User-Based Filtering

We apply the database to a recommendation system based on user-based filtering. The system has two steps: the first calculates the similarity between users to select similar users, and the second calculates the prediction scores based on the similar users' ratings [6].

In the first step, the similarity is calculated from the user's inputs. The system conventionally uses the Pearson correlation coefficient or the cosine similarity to calculate the similarity between users. After calculating the similarities, the system selects as similar users those whose similarity exceeds a threshold set in advance by the system designer. The second step calculates the prediction scores. Because this step is based on the ratings of the similar users, the factors of the first step, such as the number of inputs and the threshold, determine the prediction scores. For this reason, we focus on the factors of the first step to identify the magic number.
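The two steps can be sketched in Python as below. This is a minimal sketch under stated assumptions: ratings are held in a dense list-of-lists `matrix[user][item]` with no missing entries, similarity is computed on the probe user's first k ratings, and the prediction score is taken as the similarity-weighted mean of the similar users' ratings. The paper does not give the prediction formula in this section, so the weighting is our assumption, and `predict_user_based` is a hypothetical helper.

```python
import math


def pearson(x, y):
    """Pearson correlation of two equal-length rating vectors (None if undefined)."""
    n = len(x)
    if n < 2:
        return None
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return None  # a constant vector has no defined correlation
    return cov / (sx * sy)


def cosine(x, y):
    """Cosine similarity of two rating vectors (None if either is all zeros)."""
    dot = sum(a * b for a, b in zip(x, y))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    return dot / norm if norm else None


def predict_user_based(matrix, probe_user, probe_item, k, threshold, sim=pearson):
    """Step 1: compare the probe user's first k ratings with every other user
    and keep users whose similarity exceeds `threshold` (assumed >= 0).
    Step 2: predict the probe item as the similarity-weighted mean of those
    users' ratings. Returns (score, group size); score is None if nobody qualifies."""
    target = matrix[probe_user][:k]
    group = []
    for user, row in enumerate(matrix):
        if user == probe_user:
            continue
        s = sim(target, row[:k])
        if s is not None and s > threshold:
            group.append((s, row[probe_item]))
    if not group:
        return None, 0
    score = sum(s * r for s, r in group) / sum(s for s, _ in group)
    return score, len(group)


# Toy matrix: user 0 is the probe user and item index 4 is the probe item.
matrix = [[5, 4, 1, 2, 4], [5, 5, 1, 1, 5], [4, 4, 2, 2, 3], [1, 2, 5, 5, 1]]
score, size = predict_user_based(matrix, 0, 4, k=4, threshold=0.5)
_, size_cos = predict_user_based(matrix, 0, 4, k=4, threshold=0.5, sim=cosine)
```

On this toy matrix, a Pearson threshold of 0.5 keeps two similar users (score 4.0), while the same cosine threshold keeps three, because cosine similarity between vectors of positive ratings is never negative.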

Our tests have two parameters: the number of inputs and the threshold. We use users who have made at least fifty-one ratings; of these ratings, fifty are training data and one is a probe. We treat the training data as initial inputs; that is, we calculate the similarity while varying the number of ratings from two to 50. For example, when the number of ratings is two, we simulate a user who has made two initial inputs, and the similarity is calculated from these two ratings alone. By varying the number of ratings in this way, we can observe how the prediction score of an item changes with the number of inputs. We use two as the minimum input size because the similarity measures in our tests need at least two ratings: with a single rating, the Pearson correlation coefficient is undefined and the cosine similarity between any two positive ratings is always 1, so neither measure can distinguish similar users. We use the Pearson correlation coefficient and cosine similarity to calculate the similarities between users, with thresholds ranging from 0.1 to 0.8.

We compute the prediction scores for each combination of these parameters and use them to determine the magic number: the number of inputs at which the prediction scores converge to a consistent value.

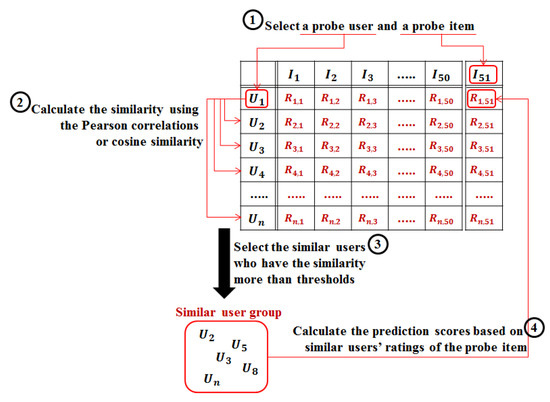

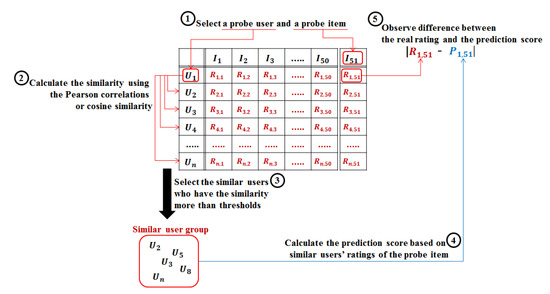

We use the same test process for each similarity measure. Figure 1 shows an example of the entire test process.

Figure 1.

Entire process for tests of user-based filtering.

In Figure 1, n users with at least fifty-one ratings are selected as target users. We use fifty ratings as inputs and one rating as a probe item. In this figure, Rn,m denotes user n's rating of item m. The test for the recommendation system based on user-based filtering consists of four steps. The first step is to select a probe user and a probe item: we randomly select a probe user from all target users who have fifty-one ratings and then pick the fifty-first item as the probe item. In Figure 1, user U1 is selected as the probe user and item I51 as the probe item. Second, we calculate the similarity between the probe user and the other users using the Pearson correlation coefficient or cosine similarity. Third, we select the similar users whose similarity is higher than the threshold. Finally, we calculate the prediction score based on the similar users' ratings of the probe item. We apply these steps to one hundred users for each number of movies and report the average prediction scores.
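The four-step test can be sketched as a single loop. In this hypothetical sketch, `matrix[u]` holds 51 ratings for user u (indices 0 to 49 are inputs, index 50 is the probe item), probe users are drawn with replacement from a seeded generator, cosine similarity is used (the Pearson coefficient could be substituted), and the prediction is the similarity-weighted mean. The sampling scheme and weighting are assumptions, and the toy ratings are random numbers rather than GroupLens data.

```python
import math
import random


def cosine(x, y):
    """Cosine similarity of two rating vectors (None if either is all zeros)."""
    dot = sum(a * b for a, b in zip(x, y))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    return dot / norm if norm else None


def average_prediction(matrix, k, threshold, n_probes=100, probe_item=50, seed=0):
    """Run the four test steps n_probes times and average the prediction scores.

    matrix[u] holds user u's 51 ratings: indices 0-49 are the inputs and
    index 50 (the fifty-first item) is the probe item."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_probes):
        probe = rng.randrange(len(matrix))           # step 1: probe user (with replacement)
        target = matrix[probe][:k]
        group = []
        for user, row in enumerate(matrix):
            if user == probe:
                continue
            s = cosine(target, row[:k])              # step 2: similarity on k inputs
            if s is not None and s > threshold:      # step 3: keep similar users
                group.append((s, row[probe_item]))
        if group:                                    # step 4: weighted prediction
            scores.append(sum(s * r for s, r in group) / sum(s for s, _ in group))
    return sum(scores) / len(scores) if scores else None


toy = random.Random(1)
matrix = [[toy.randint(1, 5) for _ in range(51)] for _ in range(30)]  # 30 toy users
curve = {k: average_prediction(matrix, k, threshold=0.5) for k in (2, 10, 50)}
```

Evaluating `average_prediction` for every k from 2 to 50 and every threshold from 0.1 to 0.8 produces one averaged prediction curve per threshold, which is the kind of data plotted in this section.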

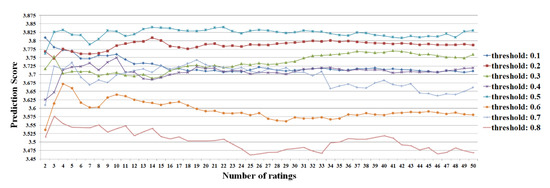

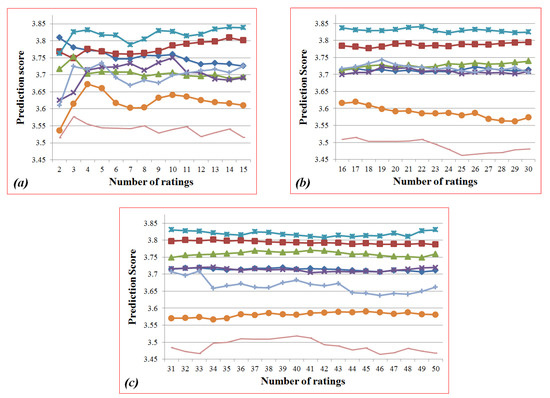

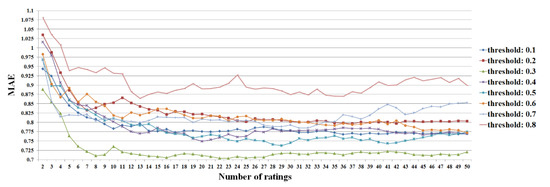

3.2.1. Selecting the Magic Number Based on the Pearson Correlation Coefficient

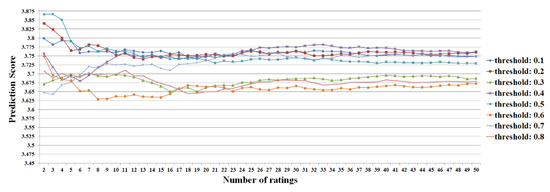

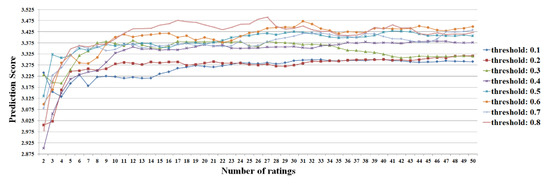

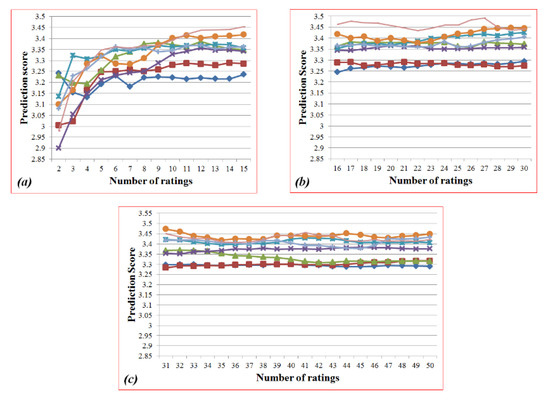

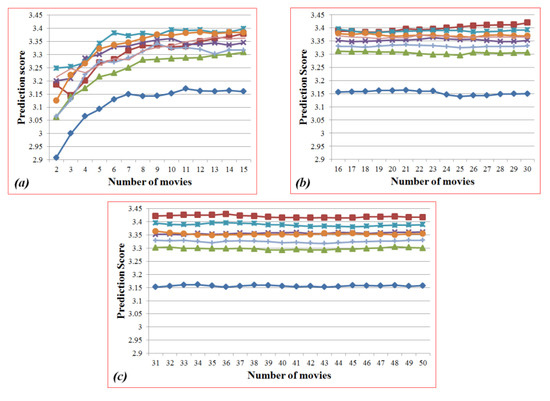

Figure 2 shows the prediction scores obtained when the Pearson correlation coefficient is used to calculate similarities, and Figure 3 shows the sub-graphs of Figure 2. In each graph, the y-axis represents the prediction scores and the x-axis represents the number of ratings (i.e., the number of inputs). Figure 3a–c depict the ranges from 2 to 15, from 16 to 30 and from 31 to 50, respectively. Each graph shows the prediction scores for each threshold from 0.1 to 0.8 as a function of the number of ratings.

Figure 2.

Results of the prediction scores based on the Pearson correlation coefficient in the user-based filtering.

Figure 3.

Sub-graphs of Figure 2: (a) the ranges from 2 to 15; (b) from 16 to 30; (c) from 31 to 50.

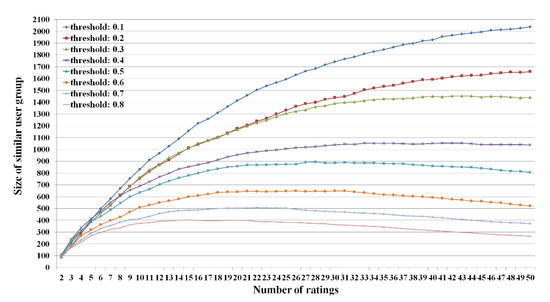

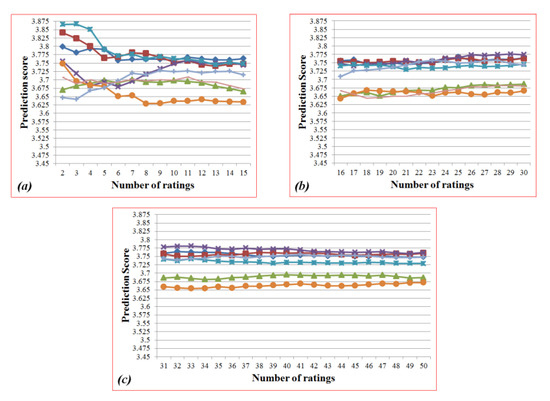

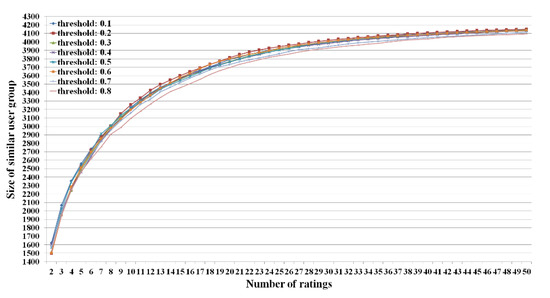

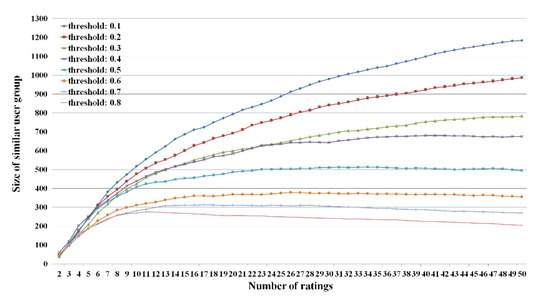

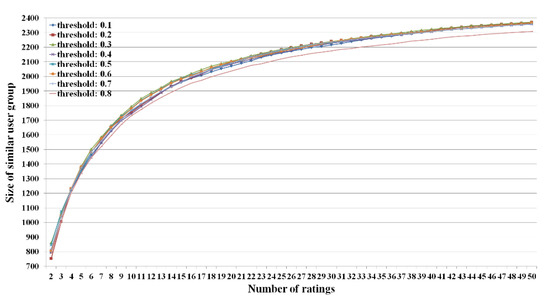

Each threshold yields different results because of the size of the similar user group: when the threshold on the Pearson correlation coefficient increases, fewer users qualify as similar, and the similar user group shrinks. Figure 4 shows this phenomenon.

Figure 4.

Size of similar user group according to the different numbers of ratings based on the Pearson correlation coefficient.

The size of the similar user group also affects the accuracy of the results. High thresholds, such as 0.7 and 0.8, produce smaller groups than the other thresholds, which means that their prediction scores are calculated from fewer ratings. The results for these thresholds are inconsistent, so their prediction scores are untrustworthy. Although the accuracy of the prediction scores and the size of the similar user group are important factors in recommendation systems, here we focus only on identifying the number of inputs at which the prediction scores converge to a consistent point, since accuracy itself is not needed to identify the magic number.

The results in sub-graph (a) vary more than those in sub-graphs (b) and (c); from the start of sub-graph (b) onward, each result changes only gradually. Table 5 shows the average prediction scores based on the Pearson correlation coefficient over all thresholds for each number of movies. In this table, the difference is the absolute difference between the average prediction scores calculated with m and m − 1 movies, as given by Equation (1):

$$D_m = \left|\mathrm{Avg}_m - \mathrm{Avg}_{m-1}\right|, \qquad 3 \le m \le 50 \tag{1}$$

where Avg denotes the average of the prediction scores, $D_m$ is the absolute difference and 3 ≤ m ≤ 50 is the range of the differences. The results in Table 5 support our observation: the differences after fifteen movies are more gradual than those in the range from three to 14. Thus, we consider fifteen to be the magic number.

Table 5.

Changing average of prediction and difference for each range (the user-based filtering: the Pearson correlation coefficient).

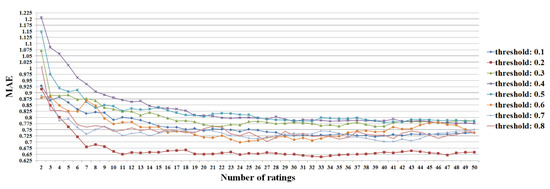
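The text selects the magic number by inspecting the differences; one way to make that inspection mechanical is sketched below. The tolerance `tol` and the rule in `stable_input_size` are our own assumptions, not the authors' criterion, and the averages are toy values rather than those in Table 5.

```python
def differences(avg):
    """D_m = |Avg_m - Avg_{m-1}| for consecutive input sizes m (cf. Equation (1))."""
    sizes = sorted(avg)
    return {m: abs(avg[m] - avg[prev]) for prev, m in zip(sizes, sizes[1:])}


def stable_input_size(avg, tol):
    """Hypothetical rule: the last input size m whose difference D_m still
    exceeds `tol`; every larger input size changes the average by at most `tol`."""
    d = differences(avg)
    for m in sorted(d, reverse=True):
        if d[m] > tol:
            return m
    return min(avg)  # the averages never move by more than tol


# Toy averages keyed by the number of inputs (not taken from Table 5).
avg = {2: 3.00, 3: 3.60, 4: 3.30, 5: 3.45, 6: 3.47, 7: 3.46, 8: 3.465}
```

With `tol = 0.05`, the last jump larger than the tolerance occurs at five inputs, so the rule returns 5; a larger tolerance moves the selected point earlier, which is why the tolerance must be fixed before comparing similarity measures.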

3.2.2. Selecting the Magic Number Based on the Cosine Similarity

Figure 5 shows the prediction scores obtained when cosine similarity is used to calculate similarities, and Figure 6 shows the sub-graphs of Figure 5. In each graph, the y-axis represents the prediction scores and the x-axis represents the number of ratings (i.e., the number of inputs). Because the y-axis ranges in Figure 5 and Figure 6 are the same as those in Figure 2 and Figure 3, the graphs can be compared directly: the prediction scores based on cosine similarity are more consistent than those based on the Pearson correlation coefficient. This is again related to the size of the similar user group. Figure 7 shows the size of the similar user group according to the different numbers of ratings.

Figure 5.

Results of the prediction score based on the cosine similarity in the user-based filtering.

Figure 6.

Sub-graphs of Figure 5: (a) the ranges from 2 to 15; (b) from 16 to 30; (c) from 31 to 50.

Figure 7.

Size of similar user group according to the different numbers of ratings based on the cosine similarity.

In Figure 7, the size of the similar user group increases with the number of inputs, and all thresholds follow the same increasing trend. For this reason, the results are more gradual than those in Figure 2.

In Figure 6a, the results from two to 11 vary more than those after twelve, and after twelve ratings each result changes only gradually. Table 6 shows the average prediction scores based on the cosine similarity for all thresholds; the differences in Table 6 are calculated using Equation (1). The results in Table 6 support twelve as the magic number, since the differences are gradual after twelve.

Table 6.

Changing average of prediction and difference for each range (the user-based filtering: the cosine similarity).

Thus, we consider twelve to be the magic number.

3.3. Experiments for Identifying the Magic Number Based on Item-Based Filtering

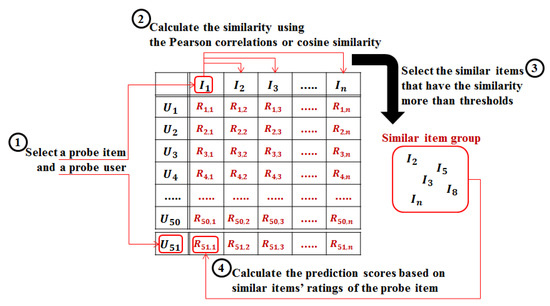

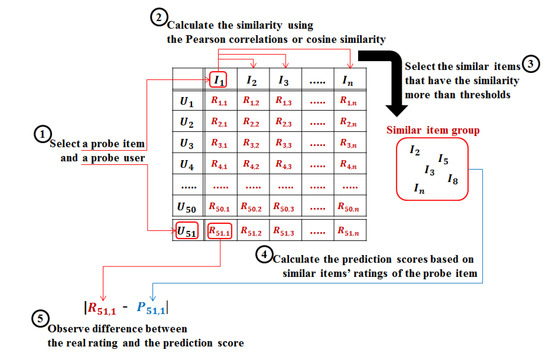

The recommendation system based on item-based filtering follows a process similar to that of user-based filtering. The two differ in the target of the similarity calculation: users are the targets in user-based filtering, whereas items are the targets in item-based filtering. Figure 8 shows the entire test process for item-based filtering.

Figure 8.

Entire process for tests of the recommendation systems based on the item-based filtering.

In Figure 8, n items with at least fifty-one ratings are selected as targets, and 51 ratings of each item are used. Rn,m denotes a rating for the nth user's mth item. Comparing Figure 1 and Figure 8 shows that the criterion for calculating similarity is a user in user-based filtering and an item in item-based filtering.

The test for the recommendation system based on item-based filtering also consists of four steps. The first step is to select a probe item and a probe user: we randomly select a probe item from all target items that have fifty-one ratings and then pick the fifty-first user as the probe user. In Figure 8, item I1 is selected as the probe item and user U51 as the probe user. Second, we calculate the similarity between the probe item and the other items using the Pearson correlation coefficient or cosine similarity. Third, we select the similar items whose similarity is greater than the threshold. Finally, we calculate the prediction score from the probe user's ratings of the similar items. We use the same parameters as for user-based filtering in this test, and we apply these steps to one hundred users for each number of movies.
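Item-based filtering can be sketched by transposing the rating matrix so that each item becomes a vector of user ratings. This hypothetical `predict_item_based` assumes a dense `matrix[user][item]`, uses cosine similarity over the ratings of the first k users, and predicts with a similarity-weighted mean of the probe user's ratings of the similar items; the weighting is an assumption, and the toy data are invented.

```python
import math


def cosine(x, y):
    """Cosine similarity of two rating vectors (None if either is all zeros)."""
    dot = sum(a * b for a, b in zip(x, y))
    norm = math.sqrt(sum(a * a for a in x)) * math.sqrt(sum(b * b for b in y))
    return dot / norm if norm else None


def predict_item_based(matrix, probe_user, probe_item, k, threshold, sim=cosine):
    """matrix[u][i] is user u's rating of item i.

    Each item is represented by its column; similarity uses the ratings of the
    first k users, and the score is the similarity-weighted mean of the probe
    user's ratings of the items whose similarity exceeds `threshold`."""
    items = [list(col) for col in zip(*matrix)]  # transpose: items[i][u]
    target = items[probe_item][:k]
    group = []
    for i, col in enumerate(items):
        if i == probe_item:
            continue
        s = sim(target, col[:k])
        if s is not None and s > threshold:
            group.append((s, col[probe_user]))
    if not group:
        return None, 0
    score = sum(s * r for s, r in group) / sum(s for s, _ in group)
    return score, len(group)


# Toy data: 4 items rated by 5 users; user index 4 plays the role of U51.
item_rows = [[5, 4, 1, 2, 4], [5, 5, 1, 1, 5], [4, 4, 2, 2, 3], [1, 2, 5, 5, 1]]
matrix = [list(row) for row in zip(*item_rows)]  # users x items
score, size = predict_item_based(matrix, probe_user=4, probe_item=0, k=4, threshold=0.95)
```

Only the orientation of the matrix changes relative to user-based filtering, which matches the description above: the same similarity, threshold and averaging steps are applied to item vectors instead of user vectors.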

3.3.1. Selecting the Magic Number Based on the Pearson Correlation Coefficient

Figure 9 shows the prediction scores obtained when the Pearson correlation coefficient is used to calculate similarities, and Figure 10 shows the sub-graphs of Figure 9. In each graph, the x- and y-axes are the same as in Section 3.2.1. Figure 10a–c depict the ranges from 2 to 15, from 16 to 30 and from 31 to 50, respectively. Each graph shows the prediction scores for each threshold from 0.1 to 0.8 as a function of the number of ratings. Each threshold yields different results for the same reason as in user-based filtering. Figure 11 shows the size of the similar user group.

Figure 9.

Results of the prediction scores based on the Pearson correlation coefficient in the item-based filtering.

Figure 10.

Sub-graphs of Figure 9: (a) the ranges from 2 to 15; (b) from 16 to 30; (c) from 31 to 50.

Figure 11.

Size of similar user group according to the different numbers of ratings based on the Pearson correlation coefficient.

Compared with the results in Section 3.2.1, the prediction scores become consistent before the user-based magic number is reached. User-based filtering with the Pearson correlation coefficient yields fifteen as the magic number, but although the same similarity measure is used here, item-based filtering converges at a different point. In sub-graph (a) of Figure 10, the results from two to 11 vary more than those after twelve; that is, the trends of the prediction scores resemble those of user-based filtering with cosine similarity. Thus, we also select twelve as the magic number for item-based filtering with the Pearson correlation coefficient.

Table 7 shows the average prediction scores and the differences for each range based on the Pearson correlation coefficient. The table shows that the average difference changes only gradually after twelve, the value selected as the magic number.

Table 7.

Changing average of prediction and difference for each range (the item-based filtering: the Pearson correlation coefficient).

3.3.2. Selecting the Magic Number Based on the Cosine Similarity

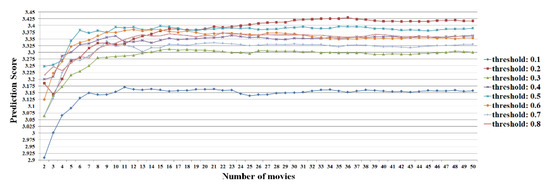

Figure 12 shows the prediction scores obtained when cosine similarity is used to calculate similarities, and Figure 13 shows the sub-graphs of Figure 12. The x- and y-axes in each graph are the same as in Section 3.2.1. Figure 14 shows that the size of the similar user group per number of movies follows trends similar to those of user-based filtering with cosine similarity.

Figure 12.

Results of the prediction scores based on the cosine similarity in the item-based filtering.

Figure 13.

Sub-graphs of Figure 12: (a) the ranges from 2 to 15; (b) from 16 to 30; (c) from 31 to 50.

Figure 14.

Size of similar user group according to the different numbers of ratings based on the cosine similarity.

The convergence point for each threshold is similar to that in the graphs of the previous section; the prediction scores change substantially before twelve. Table 8 shows the changes in the average prediction scores based on cosine similarity and the average difference for each range. The gradual average differences after twelve in this table support our claim. Thus, the magic number is twelve.

Table 8.

Changing average of prediction and difference for each range (the item-based filtering: the cosine similarity).

3.4. Experiments for Identifying the Magic Number Based on Content-Based Filtering

We search for the magic number by analyzing genre preferences for each user in content-based filtering. In other words, we need to compute the number of initial inputs that can reflect the user’s preferences among movie genres. For each user, we compute the genre distribution of movies rated by the user for different numbers of movies selected from all the movies rated by that user. We consider the genre distribution of all movies evaluated by a user to be that user’s true genre preference. Once we have completed the calculation, for each user, we compute the Pearson correlation coefficient and error rates (we provide a formal definition later) between the user’s preference and the estimated preference using a limited number of movies. Based on the analysis of the computation results, we suggest the magic number.

3.4.1. Computing Genre Preferences for Users

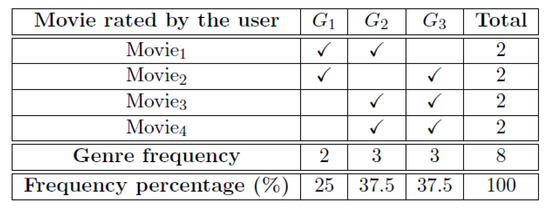

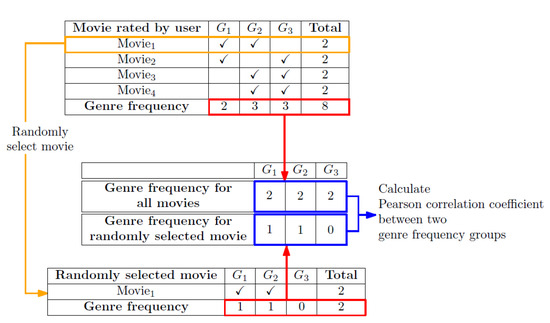

For each user, we randomly select between one and forty movies from all the movies rated by that user and count the total number of genre appearances among the selected movies. We call the total number of appearances of each genre the genre frequency. We normalize each genre frequency as a percentage of all genre appearances and take this frequency percentage as the user's genre preference. Figure 15 shows an example computation of a user's preference.

Figure 15.

Example of computing the genre frequency and frequency percentage for a user.

In the example in Figure 15, the user has rated four movies whose genres are G1, G2 and G3. The genre frequencies of G1, G2 and G3 are two, three and three, respectively, for a total of eight genre appearances. Dividing each genre frequency by this total gives the frequency percentages: 25%, 37.5% and 37.5%, respectively. Thus, a large percentage, or high frequency, for a specific genre implies that the user strongly prefers that genre.
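The genre frequency and frequency percentage can be computed as follows. The genre assignment of the four movies is hypothetical (the contents of Figure 15 are not reproduced here) and was chosen so that G1, G2 and G3 appear two, three and three times; `sampled_preference` illustrates the random selection of k movies, assumed to be without replacement.

```python
import random
from collections import Counter


def genre_frequency(movies):
    """Count genre appearances; `movies` is a list of genre lists, one per movie."""
    return Counter(genre for genres in movies for genre in genres)


def frequency_percentage(freq, genres):
    """Normalise genre frequencies to percentages of all genre appearances."""
    total = sum(freq[g] for g in genres)
    return {g: (100.0 * freq[g] / total if total else 0.0) for g in genres}


def sampled_preference(movies, k, genres, rng):
    """Genre preference estimated from k movies drawn without replacement."""
    return frequency_percentage(genre_frequency(rng.sample(movies, k)), genres)


# Hypothetical assignment reproducing the frequencies in the example (2, 3, 3).
movies = [["G1", "G2"], ["G2", "G3"], ["G1", "G3"], ["G2", "G3"]]
genres = ["G1", "G2", "G3"]
preference = frequency_percentage(genre_frequency(movies), genres)
```

The example yields 25%, 37.5% and 37.5% for G1, G2 and G3; drawing all four movies reproduces the full preference exactly.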

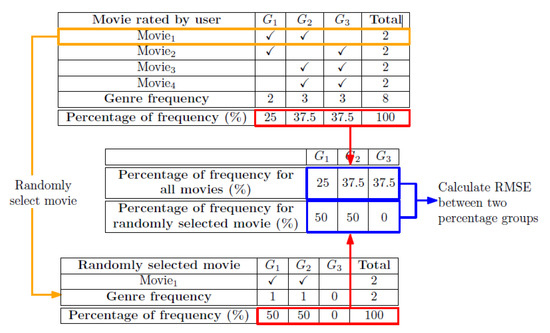

3.4.2. Computing Error Rates

We now calculate the error rate of the genre frequencies of randomly selected groups of movies with respect to the true genre preference, computed from the genres of all movies rated by each user. We use the root mean square error (RMSE) to compute the error rate between two groups of percentages; RMSE is often used to measure the difference between actual values and the values predicted by a model [41]. We slightly modify the RMSE formula for computing the error rate. We study two types of frequency percentages for the eighteen genres: the first is based on all the movies rated by a user, and the second is based on movies randomly selected from those movies. Equation (2) is the formula for calculating the error rate between the two types:

where oGn and kGn are the nth frequency percentage among the eighteen frequency percentages extracted from all movies and extracted from the k randomly selected movies, respectively.
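Assuming the modification amounts to computing an ordinary RMSE over the eighteen genre percentages, Equation (2) would take the following form, writing $G^{o}_{n}$ for oGn and $G^{k}_{n}$ for kGn. This is a plausible reading under that assumption, not necessarily the authors' exact formula:

$$E_k = \sqrt{\frac{1}{18}\sum_{n=1}^{18}\left(G^{o}_{n} - G^{k}_{n}\right)^{2}}$$

Under this form, $E_k = 0$ exactly when the k selected movies have the same genre percentages as all of the user's movies.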

Figure 16 shows an example of computing the error rate for Movie1 from Figure 15. In this case, Movie1 is an initial input. If there is a significant difference between two percentage groups, this implies that the initial input size, which is the number of randomly selected movies in our experiments, cannot properly reflect the genre preferences of a user. On the other hand, if the error rate is not large, then we may conclude that the randomly selected movies reflect the user preference. In our experiments, we randomly select from one to forty movies and calculate the error rates for all users, repeating this process one hundred times and computing the average error rates.
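The repeated random sampling can be sketched for a single user as below. It assumes that the error rate is a plain RMSE over the genre percentages (the exact modification used by the authors is not given here), that the k movies are drawn without replacement, and that results are averaged over 100 draws with a fixed seed; averaging the per-user values over all users corresponds to the averaging described above. All names are hypothetical and the movies are toy data.

```python
import math
import random
from collections import Counter


def percentages(movies, genres):
    """Genre frequency percentages (0-100) for a list of per-movie genre lists."""
    freq = Counter(g for movie in movies for g in movie)
    total = sum(freq[g] for g in genres)
    return [100.0 * freq[g] / total if total else 0.0 for g in genres]


def error_rate(full, sample):
    """Plain RMSE between two percentage vectors (assumed form of Equation (2))."""
    return math.sqrt(sum((o - s) ** 2 for o, s in zip(full, sample)) / len(full))


def average_error(movies, k, genres, repeats=100, seed=0):
    """Mean error rate over `repeats` random draws of k movies for one user."""
    rng = random.Random(seed)
    full = percentages(movies, genres)
    draws = (percentages(rng.sample(movies, k), genres) for _ in range(repeats))
    return sum(error_rate(full, p) for p in draws) / repeats


movies = [["G1", "G2"], ["G2", "G3"], ["G1", "G3"], ["G2", "G3"]]  # toy user
genres = ["G1", "G2", "G3"]
curve = {k: average_error(movies, k, genres) for k in range(1, len(movies) + 1)}
```

With k equal to the number of rated movies the error is exactly zero, so on real data the curve falls from its value at k = 1 toward zero as k grows.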

Figure 16.

Procedure example of calculating error rates.

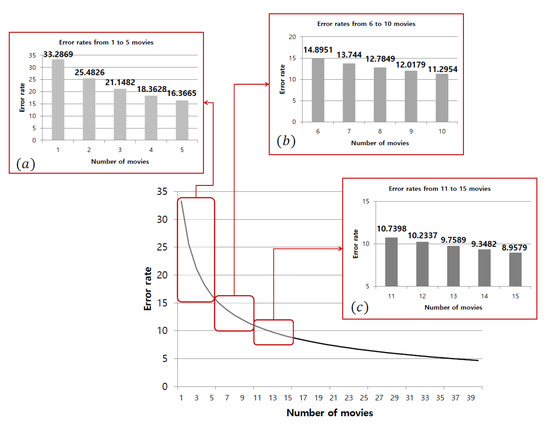

Figure 17 shows the relationship between the error rate and the number of randomly selected movies from one to forty. The sub-graphs (a), (b) and (c) in Figure 17 show the error rates for the ranges from one to five, from six to ten and from eleven to fifteen, respectively. In each graph in Figure 17, the y-axis represents the error rates obtained from Equation (2) and the x-axis represents the number of randomly selected movies. Notice the decrease in the gradient in Figure 17 after five movies.

Figure 17.

Error rates for different sample sizes.

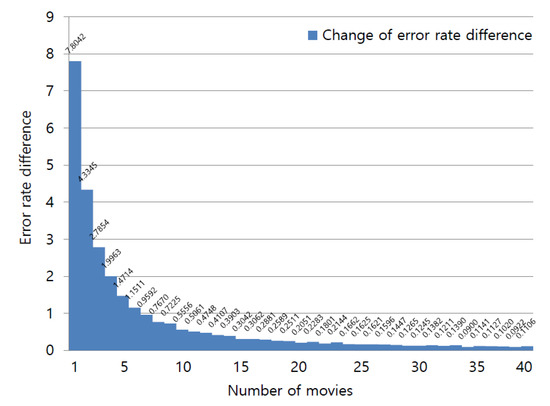

We next consider the difference in error rates between two adjacent sample sizes in Figure 17 using Equation (3):

where Dim is the difference between the error rates based on m movies and based on m − 1 movies and 2 ≤ m ≤ 40 is the number of randomly selected movies.
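A form of Equation (3) consistent with this definition, assuming an absolute difference as in Equation (1) and writing $E_m$ for the average error rate obtained with m randomly selected movies, is sketched below; the authors' exact notation may differ:

$$D_m = \left|E_m - E_{m-1}\right|, \qquad 2 \le m \le 40$$

Where the error rates decrease with m, this equals $E_{m-1} - E_m$, the decrease in error rate between consecutive sample sizes.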

Figure 18 shows the rates of decrease in the error rate for the data shown in Figure 17. The y-axis represents the difference between the error rates of the two consecutive intervals and the x-axis represents the number of randomly selected movies. We observe that the rate of change in the error rate decreases as the number of randomly selected movies increases. This implies that additional inputs beyond a certain number of movies will not greatly affect the recommendation results.

Figure 18.

Rates of decrease in error rates.

3.4.3. Computing the Pearson Correlation Coefficients

We determine the Pearson correlation coefficient using the genre frequency [42]. The test procedure is similar to that in Section 3.4.2, except that there we calculated the error rates from frequency percentages, whereas here we calculate the Pearson correlation coefficient from genre frequencies. For example, if the randomly selected movie is Movie1 from Figure 15, we compute the correlation between the genre frequencies of Movie1 and those of all movies. See Figure 19 for an example of the procedure.

Figure 19.

Example of the procedure for calculating the Pearson correlation coefficient.

We calculate the correlations between the frequencies of the randomly selected movies and those of all movies rated by a user. For our experiments, we randomly select from one to forty movies and calculate the correlations, repeating this process one hundred times and computing the average correlations.
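The sampling procedure above can be sketched in Python. Each movie is represented as a set of genre labels; the genre vector covers only the genres that occur in the user's rated movies, and a zero-variance vector is scored as 0.0. Both choices, and all function names, are assumptions for illustration rather than details of the experiments reported here.

```python
import random
from collections import Counter


def genre_frequency(movies, genres):
    """Frequency of each genre in `genres` across `movies` (sets of labels)."""
    counts = Counter(g for movie in movies for g in movie)
    return [counts[g] for g in genres]


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Root of the summed squared deviations; the 1/n factors cancel in the ratio.
    norm_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    norm_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    if norm_x == 0 or norm_y == 0:
        return 0.0  # assumption: a constant vector carries no correlation
    return cov / (norm_x * norm_y)


def average_correlation(rated_movies, k, trials=100, seed=0):
    """Average correlation between the genre frequencies of k randomly
    selected movies and those of all movies the user rated."""
    rng = random.Random(seed)
    genres = sorted({g for movie in rated_movies for g in movie})
    overall = genre_frequency(rated_movies, genres)
    total = 0.0
    for _ in range(trials):
        sample = rng.sample(rated_movies, k)
        total += pearson(genre_frequency(sample, genres), overall)
    return total / trials


movies = [{"Action", "Drama"}, {"Drama"}, {"Comedy", "Drama"}, {"Action"}]
for k in range(1, len(movies) + 1):
    print(k, round(average_correlation(movies, k), 3))
```

When k equals the number of rated movies, every sample is the whole list, so the average correlation is 1; the interesting question is how quickly smaller samples approach that value.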

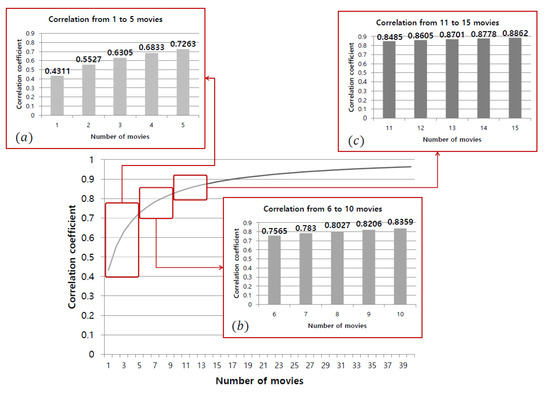

Figure 20 shows the trends in the correlation coefficient for different numbers of randomly selected movies. The sub-graphs (a), (b) and (c) in Figure 20 depict the ranges from 1 to 5, from 6 to 10 and from 11 to 15, respectively. In each graph in Figure 20, the y-axis represents the correlation coefficient and the x-axis represents the number of randomly selected movies. In other words, Figure 20 shows the correlations between the genre frequencies of randomly selected movies and those of all movies rated by each user.

Figure 20.

Pearson correlation coefficient for different random sample sizes.

Table 9 lists the values plotted in Figure 20, where No. and Corr. denote the number of movies and the Pearson correlation coefficient, respectively. As Table 9 and the sub-graphs of Figure 20 show, the correlation coefficient rises faster below five movies than above five. This suggests that, once five movies are selected, their genre-frequency distribution is already similar to the genre distribution over all movies the user rated.

Table 9.

Correlation coefficients for each random sample size.

3.4.4. Selecting the Magic Number

Based on the two experiments in Section 3.4.2 and Section 3.4.3, we claim that five is the magic number for movie recommendations. We have two reasons for making this claim.

- After five, the rates of change decrease in all graphs in Figure 17, Figure 18 and Figure 20. That is, as the number of initial input movies increases beyond five, the reliability of the recommendation results improves only gradually. Even if a user selects more than five movies, we cannot expect a significant increase in reliability; five movies already provide a certain level of reliability.

- The rate of increase in the correlation coefficient becomes small after five. Selecting more than five movies hardly affects the recommendation results, since beyond that point the error rates barely decrease and the correlations barely increase.

Because of these two observations, we claim that five is the magic number.

4. Results

4.1. Verifying the Magic Number

We test the usability of the magic number by applying it to recommendation systems based on user-based, item-based and content-based filtering. The systems using user- or item-based filtering take users’ ratings as their initial inputs, whereas the content-based system (a recommendation system based on genre correlations) takes genres.

We verify the magic number determined in Section 3 under realistic conditions. Systems based on user- and item-based filtering follow similar processes to recommend items, whereas content-based filtering follows a fundamentally different process. We therefore run similar tests for user- and item-based filtering and verify the content-based filtering with a different method.

To verify the magic number for user- and item-based filtering, we compute the mean absolute error (MAE) of the predicted ratings [6,10,41]; that is, for each number of inputs we measure the difference between the predicted and the actual ratings. We vary the input size from 2 to 50 items and repeat the test for one hundred users.

We test the content-based filtering by exploiting a property of recommendation systems based on genre correlations: the system considers neither genre weights nor input order. Thus, if different users provide the same genre combination to the recommendation system, they receive the same recommendation list regardless of input order, and we can tell from the input genres alone whether two recommendation results are the same. In other words, instead of comparing the system outputs, we compare the inputs to verify the usability of the magic number. For the content-based experiments, we randomly select from one to forty movies and apply our method to each selection, repeating this step one hundred times and reporting the average results.
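Because the genre-correlation system ignores genre weights and input order, a genre input can be treated as a set. The hypothetical helper below groups users by their input genre combination; under that property, all users in one group would receive the same recommendation list, which is why comparing inputs can stand in for comparing outputs.

```python
from collections import defaultdict


def group_by_genre_combination(user_inputs):
    """Group users whose genre inputs form the same set.

    user_inputs maps a user id to an iterable of genre labels. Order and
    repetition are ignored, mirroring a system without genre weights or
    input-order effects.
    """
    groups = defaultdict(list)
    for user, genres in user_inputs.items():
        groups[frozenset(genres)].append(user)
    return dict(groups)


inputs = {"u1": ["Drama", "Comedy"], "u2": ["Comedy", "Drama"], "u3": ["Action"]}
print(group_by_genre_combination(inputs))
```

Using `frozenset` as the dictionary key makes the order-independence explicit: "Drama, Comedy" and "Comedy, Drama" map to the same key.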

4.2. The Verification of the Magic Number Based on User-Based Filtering

We verify the magic number with a process similar to that in Section 3.1. The only difference is one additional step that calculates the MAE, which measures the accuracy of the predicted scores. Figure 21 shows the entire verification process for the user-based filtering.

Figure 21.

Verification process of the user-based filtering.

In Figure 21, a fifth step is added to the process in Figure 1. $R_{1,51}$ denotes user $U_1$’s rating of item $I_{51}$, and $P_{1,51}$ is the predicted score for this rating; the absolute difference between the two values measures the accuracy of the prediction. Importantly, we calculate the MAE for the same predicted scores as in Section 3.1; that is, we apply the verification tests to the same users and items as in Section 3.1. By the central limit theorem, applying the MAE to other probe items or users should yield similar results, but using the same probe sets allows more precise results and a direct comparison with the magic number [42].
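The MAE in this verification averages the absolute differences between real ratings and predicted scores over the probe ratings. A minimal Python sketch, assuming ratings and predictions are stored in dictionaries keyed by (user, item) pairs and that only pairs present in both are scored; the function names and the second probe rating are hypothetical.

```python
def mean_absolute_error(actual, predicted):
    """MAE over the (user, item) pairs that have both a rating and a prediction.

    actual[(u, i)] is the real rating R_{u,i}; predicted[(u, i)] is P_{u,i}.
    """
    pairs = actual.keys() & predicted.keys()
    if not pairs:
        raise ValueError("no (user, item) pair has both a rating and a prediction")
    return sum(abs(actual[p] - predicted[p]) for p in pairs) / len(pairs)


def mae_curve(actual, predictions_by_input_size):
    """MAE for each input size, e.g. {2: {...}, 3: {...}, ..., 50: {...}}."""
    return {
        n: mean_absolute_error(actual, predicted)
        for n, predicted in sorted(predictions_by_input_size.items())
    }


# Toy probe ratings R_{1,51} = 4 and R_{1,52} = 3 with two sets of predictions.
actual = {(1, 51): 4.0, (1, 52): 3.0}
curve = mae_curve(actual, {
    2: {(1, 51): 3.0, (1, 52): 2.0},
    3: {(1, 51): 3.5, (1, 52): 3.0},
})
print(curve)  # {2: 1.0, 3: 0.25}
```

Scoring only the shared keys keeps the comparison on a fixed probe set across input sizes, which matches the emphasis on reusing the same users and items.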

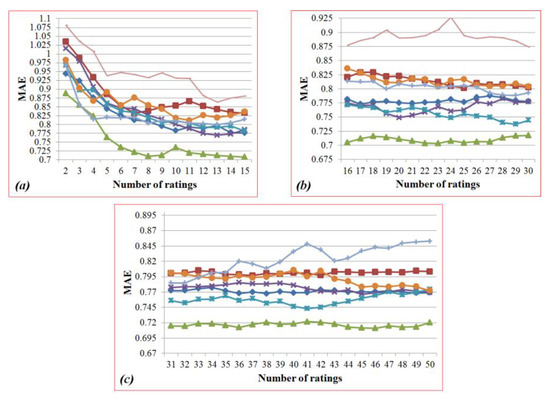

4.2.1. Results and Analysis of the Verification Procedure for the Pearson Correlation Coefficient

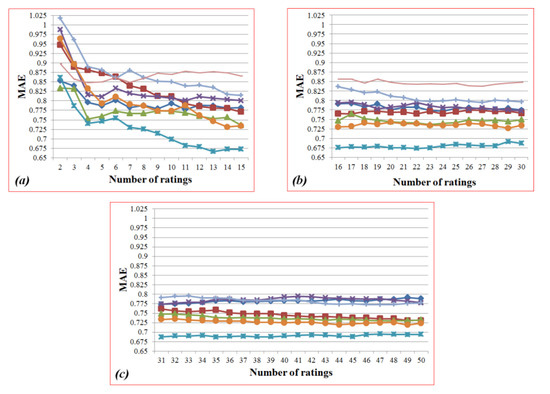

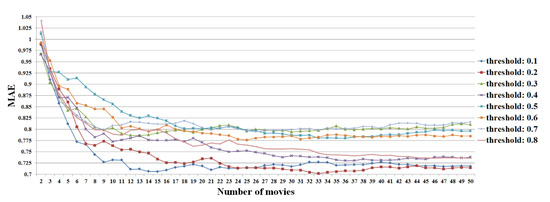

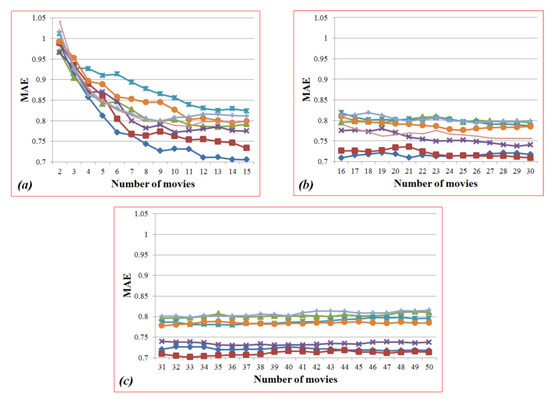

Figure 22 shows the MAE values for different numbers of inputs when the Pearson correlation coefficient is used to calculate the similarities between users, and Figure 23 shows the sub-graphs of Figure 22. In each graph, the y-axis is the MAE and the x-axis is the number of ratings. Figure 23a–c depict the ranges from 2 to 15, from 16 to 30 and from 31 to 50, respectively.

Figure 22.

Mean absolute error (MAE) values for the different numbers of ratings based on the Pearson correlation coefficient in the user-based filtering.

Figure 23.

Sub-graphs of Figure 22: (a) the ranges from 2 to 15; (b) from 16 to 30; (c) from 31 to 50.

The MAE is commonly used to check the accuracy of recommendation results. Figure 22 shows that the MAE is lowest when the threshold is 0.3. However, we focus not on the accuracy itself but on the point at which the MAE converges to a specific value; we therefore concentrate on the input sizes before the MAE begins to change only gradually.

In Section 3.1, the prediction scores became consistent after fifteen ratings. If the MAE also changes only gradually after fifteen ratings, additional ratings no longer bring the predicted scores substantially closer to the actual ratings; that is, more inputs than the magic number do not guarantee more accurate recommendation results.

The MAE values in Figure 23a change more than those in the other two graphs, which leads to the same conclusion as the selection in Section 3.2.1: with the Pearson correlation coefficient, fifteen ratings, the magic number, reduce user inconvenience while still providing reliable results. Table 10 shows the change in the average MAE based on the Pearson correlation coefficient and the average difference for each range. After the magic number, the differences change only slightly, which supports our claim that the magic number is fifteen.

Table 10.

Change in the average of MAE and average difference for each range (the user-based filtering: the Pearson correlation coefficient).
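Tables 10 to 13 summarize each MAE curve per range of input sizes. The sketch below is one plausible reading of those summaries, assuming that "average difference" means the mean absolute change between consecutive input sizes inside each range; the range boundaries follow the sub-graphs, and the function name is hypothetical.

```python
RANGES = [(2, 15), (16, 30), (31, 50)]  # input-size ranges of the sub-graphs


def range_summary(mae_by_size, ranges=RANGES):
    """Return {(lo, hi): (mean MAE, mean |MAE_n - MAE_{n-1}|)} per range.

    Differences use only consecutive sizes that both lie inside the range;
    a statistic is None when the range has too few points.
    """
    summary = {}
    for lo, hi in ranges:
        sizes = [n for n in range(lo, hi + 1) if n in mae_by_size]
        values = [mae_by_size[n] for n in sizes]
        diffs = [
            abs(mae_by_size[n] - mae_by_size[n - 1])
            for n in sizes
            if n - 1 >= lo and n - 1 in mae_by_size
        ]
        summary[(lo, hi)] = (
            sum(values) / len(values) if values else None,
            sum(diffs) / len(diffs) if diffs else None,
        )
    return summary
```

A small mean difference in the later ranges, relative to the first, is the pattern the text reads as convergence after the magic number.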

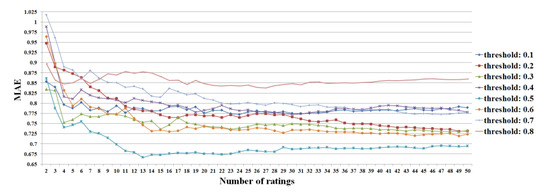

4.2.2. Results and Analysis of the Verification Procedure for the Cosine Similarity

Figure 24 shows the MAE values for different numbers of inputs when the cosine similarity is used to calculate the similarities between users, and Figure 25 shows the sub-graphs of Figure 24. All axes and ranges in both figures are the same as in the graphs in Section 4.2.1.

Figure 24.

MAE values for the different numbers of ratings based on the cosine similarity in the user-based filtering.

Figure 25.

Sub-graphs of Figure 24: (a) the ranges from 2 to 15; (b) from 16 to 30; (c) from 31 to 50.

In Section 3.2.2, the prediction scores became consistent after twelve movies, and the MAE values likewise stabilize after this magic number. Table 11 shows the change in the average MAE and the average difference for each range when the cosine similarity is used. This also supports our decision, so we use twelve as the magic number for this similarity measure in the user-based filtering.

Table 11.

Change in the average of MAE and average difference for each range (the user-based filtering: the cosine similarity).

4.3. The Verification of the Magic Number Based on Item-Based Filtering

The test in this section is similar to that in Section 4.2: like the user-based verification, the item-based verification adds one step, the MAE calculation, to the corresponding procedure in Section 3.2. Figure 26 shows the entire verification process of the item-based filtering. In Figure 26, a fifth step is added to the process in Figure 1. $R_{51,1}$ denotes user $U_{51}$’s rating of item $I_1$, and $P_{51,1}$ is the predicted score for this rating; the absolute difference between the two values measures the accuracy of the prediction. Importantly, we calculate the MAE for the same predicted scores as in Section 3.2; that is, we apply the verification tests to the same users and items as in Section 3.2.

Figure 26.

Verification process of the item-based filtering.

4.3.1. Results and Analysis of the Verification Procedure for the Pearson Correlation Coefficient

Figure 27 shows the MAE values for different numbers of inputs when the Pearson correlation coefficient is used to calculate the similarities between items, and Figure 28 shows the sub-graphs of Figure 27. All ranges and axes in both figures are the same as in Figure 24 and Figure 25.

Figure 27.

MAE values for the different numbers of ratings based on the Pearson correlation coefficient in the item-based filtering.

Figure 28.

Sub-graphs in Figure 27: (a) the ranges from 2 to 15; (b) from 16 to 30; (c) from 31 to 50.

In sub-graph (a), the MAE decreases sharply up to twelve movies and then levels off, so twelve movies suffice for consistent results; more inputs no longer noticeably improve accuracy. Table 12 shows the change in the average MAE based on the Pearson correlation coefficient and the average difference for each range. After the magic number, the differences change only slightly. Thus, the magic number is twelve.

Table 12.

Change in the average of MAE and difference for each range (the item-based filtering: the Pearson correlation coefficient).

4.3.2. Results and Analysis of the Verification Procedure for the Cosine Similarity

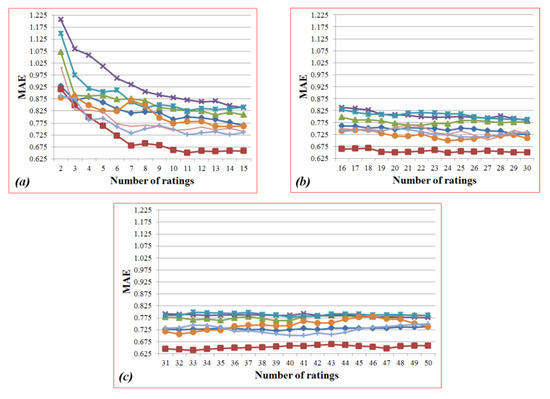

Figure 29 shows the MAE values for the different numbers of inputs when we use the cosine similarity and Figure 30 shows the sub-graphs of Figure 29. In each graph, all axes and the ranges are the same as in the graphs in the previous section.

Figure 29.

MAE values for the different numbers of ratings based on the cosine similarity in the item-based filtering.

Figure 30.

Sub-graphs in Figure 29: (a) the ranges from 2 to 15; (b) from 16 to 30; (c) from 31 to 50.

In Section 3.2.2, the prediction scores became consistent after twelve ratings. If the MAE also changes only gradually after twelve ratings, additional ratings no longer bring the predicted scores substantially closer to the actual ratings; that is, more inputs than the magic number do not guarantee more accurate recommendation results.

For input sizes from two to eleven, the MAE values in Figure 30a change more than they do after the magic number determined in Section 3.2.2. Table 13 shows the change in the average MAE and the differences for each range for the item-based filtering with the cosine similarity. It confirms that the average MAE decreases more slowly after the magic number, validating our choice of twelve as the magic number for the item-based filtering based on the cosine similarity.

Table 13.

Change in the average MAE and average difference for each range (the item-based filtering: the cosine similarity).

4.4. The Verification of the Magic Number Based on Content-Based Filtering

4.4.1. Design of the Verification Procedure

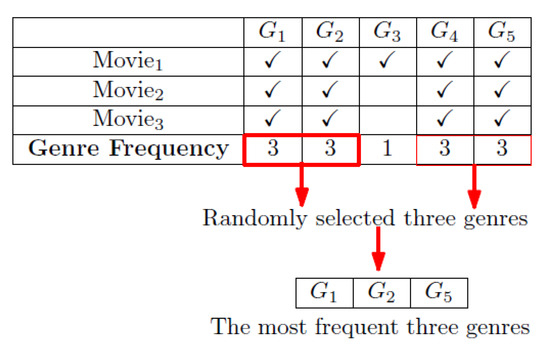

We first determine whether the three most frequent genres in a list of randomly selected movies coincide with the most frequent genres among all the movies rated by a user. If there are ties among the most frequent genres, we randomly select three genres among them as the top three. See Figure 31 for an example. In the example shown in Figure 31, a user has selected three movies (Movie1, Movie2 and Movie3), which give rise to five genres (G1, G2, G3, G4 and G5). Note that the genres G1, G2, G3, G4 and G5 have three, three, one, three and three as their respective genre frequencies. Since there are four genres with the same top frequency, we randomly select three genres among these four genres. In this example, genres G1, G2 and G5 are randomly selected as the top three.

Figure 31.

Example of selecting the top three genres.
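The top-three selection with random tie-breaking can be sketched as follows. Shuffling the genres and then applying Python's stable sort by descending frequency breaks every tie at random, which covers ties among the most frequent genres as described above (and, as an assumption, ties at lower ranks as well). The movie-to-genre assignment in the usage lines is hypothetical; it is simply one assignment consistent with the frequencies stated for Figure 31.

```python
import random
from collections import Counter


def top_three_genres(movies, rng=None):
    """Three most frequent genres across `movies`, ties broken at random."""
    rng = rng or random.Random()
    counts = Counter(g for movie in movies for g in movie)
    genres = sorted(counts)  # deterministic start so a seeded rng is reproducible
    rng.shuffle(genres)  # random order among genres with equal counts
    # list.sort is stable (also with reverse=True), so the shuffled order survives within ties
    genres.sort(key=lambda g: counts[g], reverse=True)
    return genres[:3]


# Frequencies G1=3, G2=3, G3=1, G4=3, G5=3, as in the Figure 31 example.
movie1 = {"G1", "G2", "G3", "G4", "G5"}
movie2 = {"G1", "G2", "G4", "G5"}
movie3 = {"G1", "G2", "G4", "G5"}
print(top_three_genres([movie1, movie2, movie3], random.Random(7)))
```

Any three of G1, G2, G4 and G5 can be returned, and G3 never is, which matches the random choice among the four tied genres.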

We assume that if these top three genres include the user’s top preferred genres, then these three genres reflect the user’s preferences. Equation (4) shows how to calculate the scores for the results of comparisons between the top three genres in this sample and the top preferred genres overall:

where HPG is the set of the user’s top preferred genres, that is, the genre or genres that appear most frequently among all movies the user has rated, and RSG is the set of the top three genres extracted from a randomly selected list of movies.

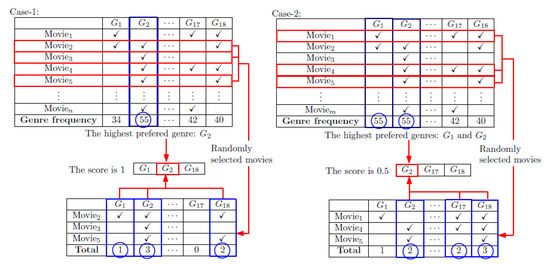

Figure 32 illustrates an example of calculating the score using Equation (4). Case-1 and Case-2 in Figure 32 correspond to |HPG| = 1 and |HPG| > 1, respectively. The details of each case are as follows:

Figure 32.

Example of calculating the score using Equation (4).

- Case-1: A user has selected n movies in total. The top preferred genre is G2. We randomly select three movies (Movie2, Movie3 and Movie5). The top three genres calculated from these selected movies are G1, G2 and G18. Finally, we check whether these top three genres include the top preferred genre. The three genres G1, G2 and G18 include the top genre G2, and thus, the score is 1.

- Case-2: A user has selected m movies in total. The top preferred genres are G1 and G2. We randomly select three movies (Movie1, Movie4 and Movie5). The top three genres calculated from these selected movies are G2, G17 and G18. Finally, we check whether these top three genres include the top preferred genres. The three genres G2, G17 and G18 include only G2 among the top preferred genres, and thus, the score is 0.5.
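The scoring step can be sketched in Python. The form used here, the fraction of top preferred genres that appear among the sample's top three, |HPG ∩ RSG| / |HPG|, is an assumption chosen because it reproduces both worked cases (scores 1 and 0.5); the exact definition in Equation (4) may differ, for example when |HPG| exceeds three. Function names are hypothetical.

```python
from collections import Counter


def top_preferred_genres(rated_movies):
    """HPG: every genre tied for the highest frequency among all rated movies."""
    counts = Counter(g for movie in rated_movies for g in movie)
    if not counts:
        raise ValueError("no genres in the rated movies")
    best = max(counts.values())
    return {g for g, c in counts.items() if c == best}


def preference_score(hpg, rsg):
    """Assumed score: share of HPG genres found in the sample's top three (RSG)."""
    hpg, rsg = set(hpg), set(rsg)
    if not hpg:
        raise ValueError("HPG must contain at least one genre")
    return len(hpg & rsg) / len(hpg)


print(preference_score({"G2"}, ["G1", "G2", "G18"]))         # Case-1: 1.0
print(preference_score({"G1", "G2"}, ["G2", "G17", "G18"]))  # Case-2: 0.5
```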

4.4.2. Results and Analysis of the Verification Procedure

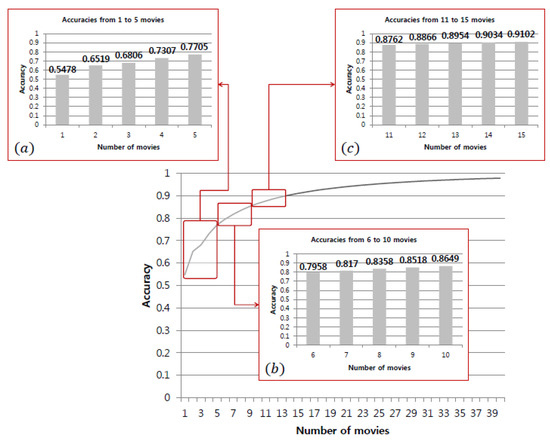

We apply this test to all users, with the number of randomly selected movies as the input size. The results therefore show whether a given number of selected movies reflects the user’s preferences as computed from all rated movies. Figure 33 shows the results of this test.

Figure 33.

Accuracy of different random sample sizes.

Figure 33 shows the accuracy for different numbers of randomly selected movies. Figure 33a–c depict the ranges from 1 to 5, from 6 to 10 and from 11 to 15, respectively. In each graph, the y-axis represents the accuracy and the x-axis represents the number of randomly selected movies. The sharpest increase occurs between one and five movies; after five, which is the magic number claimed herein, the increase is gradual. For a more detailed analysis, we also consider Equation (5):

$$\mathit{VR}_m = \left|\mathit{AC}_m - \mathit{AC}_{m-1}\right| \qquad (5)$$

where $\mathit{AC}_m$ denotes the accuracy obtained with $m$ randomly selected movies, $\mathit{VR}_m$ is the absolute difference between the accuracies calculated using $m$ and $m - 1$ movies, and $m$ ($2 \le m \le 40$) is the number of randomly selected movies. Table 14 lists the values plotted in Figure 33 and their differences as the number of selected movies increases.

Table 14.

Accuracy change and average difference for each range.

Table 14 shows that the accuracy increases steadily with the number of movies selected, but the increase becomes gradual after five. With one input movie, the accuracy is 55.78%; with five movies, it is 77.05%. This gain of 21.27 percentage points means that a user can obtain considerably better accuracy by providing just four additional movies. Obtaining a comparable gain beyond five movies would require more than thirty-five additional movies, since even forty movies reach only 97.82% accuracy. Accuracy does keep improving beyond five movies, but by progressively smaller amounts. Therefore, when a user selects five movies, the magic number, the recommendation system can already provide good recommendation results.
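Using only the accuracies quoted from Table 14, the per-movie gains make the diminishing return explicit:

$$
\begin{aligned}
1 \to 5 \text{ movies:}\quad & 77.05 - 55.78 = 21.27 \text{ points}, && 21.27 / 4 \approx 5.32 \text{ points per added movie}\\
5 \to 40 \text{ movies:}\quad & 97.82 - 77.05 = 20.77 \text{ points}, && 20.77 / 35 \approx 0.59 \text{ points per added movie}
\end{aligned}
$$

Each of the first four added movies is thus worth roughly nine times as much accuracy as each movie added after the fifth.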

5. Conclusions

Recommendation systems based on collaborative filtering must analyze user preferences to provide good suggestions. Many web and mobile applications that offer recommendation or curation services ask users for information such as demographic data, preferred categories and a certain number of item ratings. However, many users find it inconvenient to provide such initial inputs, and new users must enter their preferences before receiving any recommendations. If a system requires a large amount of input from new users, they may become discouraged before using the recommendation system.

The magic number proposed herein can provide technical guidance for addressing the user-side cold-start problem when a system analyzes user preferences. The magic number differs by filtering algorithm: user-, item- or content-based. Table 15 summarizes the magic numbers identified in our experiments: when a user provides fifteen, twelve or five movies as initial inputs to a system based on user-, item- or content-based filtering, respectively, the system can provide sufficiently reliable results. We have justified the reliability of the magic number through statistical experiments, analyzing the prediction scores based on the Pearson correlation coefficient and the cosine similarity, the MAE and the error rates for different numbers of movies.

Table 15.

The magic number of each filtering algorithm.

According to Table 15, the magic number for user-based collaborative filtering is 15, although it depends on the similarity measure: 15 for the Pearson correlation coefficient and 12 for the cosine similarity. That is, the minimum number of inputs needed for reliable recommendation results in user-based collaborative filtering is 15 with the Pearson correlation coefficient and 12 with the cosine similarity. For item-based collaborative filtering, the minimum number of inputs is 12 for both similarity measures.

Moreover, we have verified the magic number by applying it to a real recommendation system that requires ratings or genre combinations as initial inputs. From this series of experiments, we conclude that using the magic number can provide reliable recommendation results while easing the burden on users.

In future works, we will apply the magic number to other domains, such as music or e-commerce, and observe the stability of the accuracy by validating the MAE. In addition, based on the insight of the magic number, we will also utilize other inputs, such as category or tag information for the contents, to alleviate user-side cold-start problems in recommender systems.

Author Contributions

Conceptualization, S.-M.C., D.L. and C.P.; Formal analysis, S.-M.C.; Investigation, D.L.; Methodology, S.-M.C. and D.L.; Project administration, C.P.; Supervision, C.P.; Validation, S.-M.C. and D.L.; Writing—original draft, S.-M.C. and C.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2019R1I1A1A01058458). This study was supported by a 2020 Research Grant from Kangwon National University.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Han, Y.S.; Kim, L.; Cha, J.W. Computing user reputation in a social network of web 2.0. Comput. Inform. 2012, 31, 447–462.

- Wiyartanti, L.; Han, Y.S.; Kim, L. A ranking algorithm for user-generated video contents based on social activities. In Proceedings of the 3rd International Conference on Data Mining, London, UK, 13–16 November 2008.

- Eckhardt, A. Similarity of users’ (content-based) preference models for collaborative filtering in few ratings scenario. Expert Syst. Appl. 2012, 39, 11511–11516.

- Linden, G.; Smith, B.; York, J. Amazon.com recommendations: Item-to-item collaborative filtering. IEEE Internet Comput. 2003, 7, 76–80.

- Middleton, S.E.; Shadbolt, N.R.; De Roure, D.C. Ontological user profiling in recommender systems. ACM Trans. Inf. Syst. 2004, 22, 54–88.

- Sarwar, B.; Karypis, G.; Konstan, J.; Riedl, J. Item-based collaborative filtering recommendation algorithms. In Proceedings of the 10th International Conference on World Wide Web, Hong Kong, China, 1–5 May 2001.

- Choi, S.M.; Jang, K.; Lee, T.D.; Khreishah, A.; Noh, W. Alleviating Item-Side Cold-Start Problems in Recommender Systems. IEEE Access 2020, 8, 167747–167756.

- Bell, R.M.; Koren, Y. Lessons from the Netflix prize challenge. ACM SIGKDD Explor. Newsl. 2007, 9, 75–79.

- Billsus, D.; Pazzani, M.J. Learning collaborative information filters. In Proceedings of the 15th International Conference on Machine Learning, Madison, WI, USA, 24–27 July 1998.

- Sarwar, B.; Karypis, G.; Konstan, J.; Riedl, J. Analysis of recommendation algorithms for e-commerce. In Proceedings of the 2nd ACM Conference on Electronic Commerce, Minneapolis, MN, USA, 17–20 October 2000.

- Adomavicius, G.; Tuzhilin, A. Toward the next generation of recommender systems: A survey of the state-of-the-art and possible extensions. IEEE Trans. Knowl. Data Eng. 2005, 17, 734–749.

- Schein, A.I.; Popescul, A.; Ungar, L.H.; Pennock, D.M. Methods and metrics for cold-start recommendations. In Proceedings of the 25th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Tampere, Finland, 11–15 August 2002.

- Available online: http://www.jinni.com (accessed on 26 November 2020).

- Available online: https://www.criticker.com (accessed on 26 November 2020).

- Available online: https://www.rottentomatoes.com (accessed on 26 November 2020).

- Available online: https://movielens.org (accessed on 26 November 2020).

- Koren, Y.; Bell, R.M.; Volinsky, C. Matrix factorization techniques for recommender systems. Computer 2009, 42, 30–37.

- Bell, R.M.; Koren, Y.; Volinsky, C. Modeling relationships at multiple scales to improve accuracy of large recommender systems. In Proceedings of the 13th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Jose, CA, USA, 12–15 August 2007.

- Lam, X.N.; Vu, T.; Le, T.D.; Duong, A.D. Addressing cold-start problem in recommendation systems. In Proceedings of the 2nd International Conference on Ubiquitous Information Management and Communication, Suwon, Korea, 31 January–1 February 2008.

- Jung, J.J. Attribute selection-based recommendation framework for short-head user group: An empirical study by Movielens and IMDB. Expert Syst. Appl. 2012, 39, 4049–4054.

- Choi, S.M.; Han, Y.S. A content recommendation system based on category correlations. In Proceedings of the 5th International Multi-Conference on Computing in the Global Information Technology, Valencia, Spain, 20–25 September 2010.

- Choi, S.M.; Han, Y.S. Representative reviewers for internet social media. Expert Syst. Appl. 2013, 40, 1274–1282.

- Jung, J.J. Computational reputation model based on selecting consensus choices: An empirical study on semantic wiki platform. Expert Syst. Appl. 2012, 39, 9002–9007.

- Lika, B.; Kolomvatsos, K.; Hadjiefthymiades, S. Facing the cold start problem in recommender systems. Expert Syst. Appl. 2014, 41, 2065–2073.

- Zhang, Z.K.; Liu, C.; Zhang, Y.C.; Zhou, T. Solving the cold-start problem in recommender systems with social tags. EPL 2010, 92, 28002.

- Gunawardana, A.; Meek, C. Tied Boltzmann machines for cold start recommendations. In Proceedings of the ACM Conference on Recommender Systems, Lausanne, Switzerland, 23–25 October 2008.

- Gantner, Z.; Drumond, L.; Freudenthaler, C.; Rendle, S.; Schmidt-Thieme, L. Learning attribute-to-feature mappings for cold-start recommendations. In Proceedings of the 10th IEEE International Conference on Data Mining, Sydney, Australia, 13–17 December 2010.

- Sun, D.; Luo, Z.; Zhang, F. A novel approach for collaborative filtering to alleviate the new item cold-start problem. In Proceedings of the 11th International Symposium on Communications and Information Technologies, Hangzhou, China, 12–14 December 2011.

- Deldjoo, Y.; Dacrema, M.F.; Constantin, M.G.; Eghbal-zadeh, H.; Cereda, S.; Schedl, M.; Ionescu, B.; Cremonesi, P. Movie genome: Alleviating new item cold start in movie recommendation. User Model. User Adapt. Interact. 2019, 29, 291–343.

- Tu, Z.; Fan, Y.; Li, Y.; Chen, X.; Su, L.; Jin, D. From Fingerprint to Footprint: Cold-start Location Recommendation by Learning User Interest from App Data. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2019, 3, 1–22.

- Jazayeriy, H.; Mohammadi, S.; Shamshirband, S. A Fast Recommender System for Cold User Using Categorized Items. Math. Comput. Appl. 2018, 23, 1.

- Zhang, J.D.; Chow, C.Y.; Xu, J. Enabling Kernel-Based Attribute-Aware Matrix Factorization for Rating Prediction. IEEE Trans. Knowl. Data Eng. 2017, 29, 798–812.

- Zheng, X.; Luo, Y.; Xu, Z.; Yu, Q.; Lu, L. Tourism Destination Recommender System for the Cold Start Problem. KSII Trans. Internet Inf. Syst. 2016, 10, 3192–3212.

- Xu, J.; Yao, Y.; Tong, H.; Tao, X. RaPare: A Generic Strategy for Cold-Start Rating Prediction Problem. IEEE Trans. Knowl. Data Eng. 2016, 29, 1296–1309.

- Xu, J.; Yao, Y.; Tong, H.; Tao, X.; Lu, J. Ice-Breaking: Mitigating Cold-Start Recommendation Problem by Rating Comparison. In Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence, Buenos Aires, Argentina, 25–31 July 2015.

- Gogna, A.; Majumdar, A. A Comprehensive Recommender System Model: Improving Accuracy for Both Warm and Cold Start Users. IEEE Access 2015, 3, 2803–2813.

- Bobadilla, J.; Ortega, F.; Hernando, A.; Bernal, J. A collaborative filtering approach to mitigate the new user cold start problem. Knowl. Based Syst. 2012, 26, 225–238.

- Kim, T.H.; Yang, S.B. An effective recommendation algorithm for clustering-based recommender systems. In Proceedings of the 18th Australian Joint Conference on Advances in Artificial Intelligence, Sydney, Australia, 5–9 December 2005.

- Sarwar, B.M.; Konstan, J.A.; Borchers, A.; Herlocker, J.L.; Miller, B.N.; Riedl, J. Using filtering agents to improve prediction quality in the Grouplens research collaborative filtering system. In Proceedings of the ACM Conference on Computer Supported Cooperative Work, Seattle, WA, USA, 14–18 November 1998.

- Available online: https://grouplens.org/datasets/movielens (accessed on 26 November 2020).

- Bennett, J.; Lanning, S. The Netflix prize. In Proceedings of the KDD Cup Workshop, San Jose, CA, USA, 12–15 August 2007.

- Bulmer, M.G. Principles of Statistics, 3rd ed.; Dover Publications: Mineola, NY, USA, 1979.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).