Abstract

In this manuscript we provide an exact solution to the maxmin problem $\max \|Ax\|$ subject to $\|Bx\| \leq 1$, where $A$ and $B$ are real matrices. This problem comes from a remodeling of $\max \|Ax\|$ subject to $\min \|Bx\|$, because the latter problem has no solution. Our method comes from abstract operator theory, whose machinery allows us to reduce the first problem to $\max \|Cx\|$ subject to $\|x\| \leq 1$, which can be solved exactly by relying on supporting vectors. Finally, as appendices, we provide two applications of our solution: first, we construct a truly optimal minimum stored-energy Transcranial Magnetic Stimulation (TMS) coil, and second, we find an optimal geolocation involving statistical variables.

MSC:

47L05, 47L90, 49J30, 90B50

1. Introduction

1.1. Scope

Different scientific fields, such as Physics, Statistics, Economics, or Engineering, deal with real-life problems that are usually modelled by experts in those fields using matrices and their norms (see [1,2,3,4,5,6]). A typical model is the following original maxmin problem:

$$\begin{cases} \max \|Ax\| \\ \min \|Bx\| \end{cases}$$

One of the central results in this manuscript (Theorem 2) shows that the previous problem, regarded strictly as a multiobjective optimization problem, has no solution. To overcome this obstacle, we propose a different model, namely

$$\begin{cases} \max \|Ax\| \\ \|Bx\| \leq 1 \end{cases}$$

In this article we justify the remodelling of the original maxmin problem and solve the remodelled problem by means of supporting vectors, a concept that comes from the theory of Banach spaces and operator theory. Given a matrix $A$, a supporting vector is a unit vector $x$ at which $A$ attains its norm, that is, $x$ is a solution of the following single optimization problem:

$$\begin{cases} \max \|Ax\| \\ \|x\| = 1 \end{cases}$$

The geometric and topological structure of supporting vectors can be consulted in [7,8,9]. On the other hand, generalized supporting vectors are defined and studied in [7,8]. The generalized supporting vectors of a finite sequence of matrices $A_1,\dots,A_k$, for the Euclidean norm $\|\cdot\|_2$, are the solutions of

$$\begin{cases} \max \sum_{i=1}^{k} \|A_i x\|_2^2 \\ \|x\|_2 = 1 \end{cases}$$

This optimization problem clearly generalizes the previous one.
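As a small worked illustration of both notions, consider the following computation. The matrices are chosen here purely for convenience and do not appear in the cited works; the Euclidean norm and the constraint $x_1^2 + x_2^2 = 1$ are assumed throughout.

```latex
% Single matrix: A = diag(2, 1), with x_1^2 + x_2^2 = 1.
\|Ax\|_2^2 = 4x_1^2 + x_2^2 = 1 + 3x_1^2 \le 4,
\quad\text{with equality iff } x = \pm e_1 .
% Hence \|A\| = 2 and the supporting vectors of A are \pm e_1.

% Pair of matrices: A_1 = diag(2, 1), A_2 = diag(0, 2).
\|A_1 x\|_2^2 + \|A_2 x\|_2^2 = 4x_1^2 + 5x_2^2 = 4 + x_2^2 \le 5,
\quad\text{with equality iff } x = \pm e_2 .
% Hence the generalized supporting vectors of (A_1, A_2) are \pm e_2.
```

The second computation shows that the generalized supporting vectors of a family need not be supporting vectors of any single member: $\pm e_2$ is not a supporting vector of $A_1$.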

Supporting vectors were originally applied in [10] to truly optimally design a TMS coil, because until that moment TMS coils had only been designed by means of heuristic methods, which were never proved to be convergent. In [10] a three-component TMS coil problem is posed but only the one-component case was resolved. The three-component case was stated and solved by means of the generalized supporting vectors in [8]. In this manuscript, we model a TMS coil with a maxmin problem and solve it exactly with our method.

A second application of supporting vectors was given in [8], where an optimal location situation using Principal Component Analysis (PCA) was solved. In this manuscript, we model a more complex PCA problem as an optimal maxmin geolocation involving statistical variables.

For other perspectives on supporting vectors and generalized supporting vectors, we refer the reader to [9].

1.2. Background

In the first place, we refer the reader to [8] (Preliminaries) for a general review of multiobjective optimization problems and their reformulations to avoid the lack of solutions (generally caused by the existence of many objective functions).

The original maxmin optimization problem has the form

where are real-valued functions and X is a nonempty set. Notice that

Many real-life problems can be mathematically modelled as a maxmin problem. However, multiobjective optimization problems of this kind may lack a solution. If this occurs, then the real-life problem must be remodelled as another mathematical optimization problem that has a solution and still models the real-life problem accurately.

According to [10] (Theorem 5.1), one can realize that, in case , the following optimization problems are good alternatives to keep modeling the real-life problem accurately:

- .

- .

- .

- .

We will prove in the third section that all four previous reformulations are equivalent for the original maxmin . In the fourth section, we will solve the reformulation .

2. Characterizations of Operators with Null Kernel

Kernels will play a fundamental role towards solving the general reformulated maxmin (2) as shown in the next section. This is why we first study the operators with null kernel.

Throughout this section, all monoid actions considered will be left, all rings will be associative, all rings will be unitary rngs, all absolute semi-values and all semi-norms will be non-zero, all modules over rings will be unital, all normed spaces will be real or complex and all algebras will be unitary and complex.

Given a rng R and an element , we will denote by to the set of left divisors of s, that is,

Similarly, stands for the set of right divisors of s. If R is a ring, then the set of its invertibles is usually denoted by . Notice that () is precisely the subset of elements of R which are right-(left) invertible. As a consequence, . Observe also that . In general we have that and . Later on in Example 1 we will provide an example of a ring where .

Recall that an element p of a monoid is called involutive if . Given a rng R, an involution is an additive, antimultiplicative, composition-involutive map . A -rng is a rng endowed with an involution.

The categorical concept of monomorphism will play an important role in this manuscript. A morphism $f\in\hom(A,B)$ between objects $A$ and $B$ in a category is said to be a monomorphism provided that $f\circ g=f\circ h$ implies $g=h$ for all objects $C$ and all $g,h\in\hom(C,A)$. One can check that if $f\in\hom(A,B)$ and there exist an object $C$ and $g\in\hom(B,C)$ such that $g\circ f$ is a monomorphism, then $f$ is also a monomorphism. In particular, if $f\in\hom(A,B)$ is a section, that is, there exists $g\in\hom(B,A)$ such that $g\circ f=I_A$, then $f$ is a monomorphism. As a consequence, the elements of $\hom(A,A)$ that have a left inverse are monomorphisms. In some categories, the last condition suffices to characterize monomorphisms. This is the case, for instance, of the category of vector spaces over a division ring.

Recall that denotes the space of continuous linear operators from a topological vector space X to another topological vector space Y.

Proposition 1.

A continuous linear operator between locally convex Hausdorff topological vector spaces X and Y verifies that if and only if exists with . In particular, if , then if and only if in .

Proof.

Let such that . Fix any , then and so . Conversely, if , then fix and (the existence of is guaranteed by the Hahn-Banach Theorem on the Hausdorff locally convex topological vector space Y). Next, consider

Notice that and . □

Theorem 1.

Let $T\colon X\to Y$ be a continuous linear operator between locally convex Hausdorff topological vector spaces $X$ and $Y$. Then:

1. If $T$ is a section, then $\ker(T)=\{0\}$.

2. In case $X$ and $Y$ are Banach spaces, $T(X)$ is topologically complemented in $Y$ and $\ker(T)=\{0\}$, then $T$ is a section.

Proof.

1. Trivial since sections are monomorphisms.

2. Consider $T\colon X\to T(X)$. Since $T(X)$ is topologically complemented in $Y$, we have that $T(X)$ is closed in $Y$, thus it is a Banach space. Therefore, the Open Mapping Theorem assures that $T\colon X\to T(X)$ is an isomorphism. Let $T^{-1}\colon T(X)\to X$ be the inverse of $T\colon X\to T(X)$. Now consider $p\colon Y\to Y$ to be a continuous linear projection such that $p(Y)=T(X)$. Finally, it suffices to define $S:=T^{-1}\circ p$ since $S\circ T=I_X$.

□

We will finalize this section with a trivial example of a matrix such that .

Example 1.

Consider

It is not hard to check that thus A is left-invertible by Theorem 1(2) and so . In fact,

Finally,

3. Remodeling the Original Maxmin Problem $\max\|T(x)\|$ Subject to $\min\|S(x)\|$

3.1. The Original Maxmin Problem Has No Solutions

This subsection begins with the following theorem:

Theorem 2.

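Let $T,S\colon X\to Y$ be nonzero continuous linear operators between Banach spaces $X$ and $Y$. Then the original maxmin problem

$$\begin{cases} \max \|T(x)\| \\ \min \|S(x)\| \end{cases} \tag{1}$$

has trivially no solution.

Proof.

Observe that $\arg\min\|S(x)\|=\ker(S)$ and $\arg\max\|T(x)\|=\varnothing$ because $T\neq 0$. Then the set of solutions of Problem (1) is

$$\arg\min\|S(x)\|\cap\arg\max\|T(x)\|=\ker(S)\cap\varnothing=\varnothing.$$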

□

As a consequence, Problem (1) must be reformulated or remodeled.

3.2. Equivalent Reformulations for the Original Maxmin Problem

According to the Background section, we begin with the following reformulation:

Please note that is a -symmetric set, where , in other words, if and , then for every . The finite dimensional version of the previous reformulation is

where .

Recall that denotes the space of bounded operators from X to Y.

Lemma 1.

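Let $T,S\colon X\to Y$ be bounded operators, where $X$ and $Y$ are Banach spaces. If the general reformulated maxmin problem

$$\begin{cases} \max \|T(x)\| \\ \|S(x)\| \leq 1 \end{cases}$$

has a solution, then $\ker(S)\subseteq\ker(T)$.

Proof.

If $\ker(S)\setminus\ker(T)\neq\varnothing$, then it suffices to consider the sequence $(nx_0)_{n\in\mathbb{N}}$ for $x_0\in\ker(S)\setminus\ker(T)$, since $\|S(nx_0)\|=0\leq 1$ for all $n\in\mathbb{N}$ and $\|T(nx_0)\|=n\|T(x_0)\|\to\infty$ as $n\to\infty$. □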

The general maxmin (1) can also be reformulated as

Lemma 2.

Let where X and Y are Banach spaces. If the second general reformulated maxmin problem

has a solution, then .

Proof.

Suppose there exists . Then fix an arbitrary . Notice that

as . □

The next theorem shows that the previous two reformulations are in fact equivalent.

Theorem 3.

Let where X and Y are Banach spaces. Then

Proof.

Let and . Fix an arbitrary . Notice that in virtue of Theorem 1. Then

therefore

Conversely, let . Fix an arbitrary with . Then

which means that

and thus

□

The reformulation

is slightly different from the previous two reformulations. In fact, if , then . The previous reformulation is equivalent to the following one as shown in the next theorem:

Theorem 4.

Let where X and Y are Banach spaces. Then

We spare the reader the details of the proof of the previous theorem. Notice that if , then . However, if , then all four reformulations are equivalent, as shown in the next theorem, whose proof we again leave to the reader.

Theorem 5.

Let where X and Y are Banach spaces. If , then

4. Solving the Maxmin Problem $\max\|T(x)\|$ Subject to $\|S(x)\|\leq 1$

We will distinguish between two cases.

4.1. First Case: S Is an Isomorphism Over Its Image

By bearing in mind Theorem 5, we can focus on the first reformulation proposed at the beginning of the previous section:

The idea we propose to solve the previous reformulation is to make use of supporting vectors (see [7,8,9,10]). Recall that if is a continuous linear operator between Banach spaces, then the set of supporting vectors of R is defined by

The idea of using supporting vectors is that the optimization problem

whose solutions are by definition the supporting vectors of R, can be easily solved theoretically and computationally (see [8]).

Our first result towards this direction considers the case where S is an isomorphism over its image.

Theorem 6.

Let where X and Y are Banach spaces. Suppose that S is an isomorphism over its image and denotes its inverse. Suppose also that is complemented in Y, being a continuous linear projection onto . Then

If, in addition, , then

Proof.

We will show first that

Let . We will show that . Indeed, let with . Since , by assumption we obtain

Now assume that . We will show that

Let , we will show that . Indeed, let . Observe that

so by assumption

□

Notice that, in the settings of Theorem 6, is a left-inverse of S, in other words, S is a section, as in Theorem 1(2).

Taking into consideration that every closed subspace of a Hilbert space is 1-complemented (see [11,12] to realize that this fact characterizes Hilbert spaces of dimension ), we directly obtain the following corollary.

Corollary 1.

Let where X is a Banach space and Y a Hilbert space. Suppose that S is an isomorphism over its image and let be its inverse. Then

where is the orthogonal projection on .

4.2. The Moore–Penrose Inverse

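If $B\in\mathbb{R}^{m\times n}$, then the Moore–Penrose inverse of $B$, denoted by $B^{+}$, is the only matrix which verifies the following:

- $BB^{+}B=B$.

- $B^{+}BB^{+}=B^{+}$.

- $(BB^{+})^{T}=BB^{+}$.

- $(B^{+}B)^{T}=B^{+}B$.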
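For a matrix $B$ with null kernel, the Moore–Penrose inverse $B^{+}$ admits a standard closed form, which the following short computation recalls together with a concrete instance. The example matrix is an assumption made here for illustration and is not taken from the manuscript.

```latex
% Assumption: B has linearly independent columns, so B^T B is invertible.
B^{+} = (B^{T}B)^{-1}B^{T}, \qquad
B^{+}B = (B^{T}B)^{-1}(B^{T}B) = I .

% Example: B = (1, 1)^T, a 2 x 1 matrix with null kernel.
B^{T}B = 2, \qquad
B^{+} = \tfrac{1}{2}\begin{pmatrix} 1 & 1 \end{pmatrix}, \qquad
B^{+}B = 1, \qquad
BB^{+} = \tfrac{1}{2}\begin{pmatrix} 1 & 1 \\ 1 & 1 \end{pmatrix}.
```

In this example $BB^{+}$ is symmetric and idempotent, and its range is the line spanned by $(1,1)^{T}$, which is the range of $B$.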

If $\ker(B)=\{0\}$, then $B^{+}$ is a left-inverse of $B$. Even more, $BB^{+}$ is the orthogonal projection onto the range of $B$, thus we have the following result from Corollary 1.

Corollary 2.

Let such that . Then

According to the previous Corollary, in its settings, if and there exists such that , then and can be computed as

4.3. Second Case: S Is Not an Isomorphism Over Its Image

What happens if S is not an isomorphism over its image? The next theorem answers this question.

Theorem 7.

Let where X and Y are Banach spaces. Suppose that . If

denotes the quotient map, then

where

and

Proof.

Let . Fix an arbitrary with . Then therefore

This shows that . Conversely, let

Fix an arbitrary with . Then therefore

This shows that . □

Please note that in the settings of Theorem 7, if is closed in Y, then is an isomorphism over its image , and thus in this case Theorem 7 reduces the reformulated maxmin to Theorem 6.

4.4. Characterizing When the Finite Dimensional Reformulated Maxmin Has a Solution

The final part of this section is aimed at characterizing when the finite dimensional reformulated maxmin has a solution.

Lemma 3.

Let be a bounded operator between finite dimensional Banach spaces X and Y. If is a sequence in , then there is a sequence in so that is bounded.

Proof.

Consider the linear operator

Please note that

for all , therefore the sequence is bounded in because is finite dimensional and has null kernel so its inverse is continuous. Finally, choose such that for all . □

Lemma 4.

Let . If , then A is bounded on and attains its maximum on that set.

Proof.

Let be a sequence in . In accordance with Lemma 3, there exists a sequence in such that is bounded. Since by hypothesis (recall that ), we conclude that A is bounded on . Finally, let be a sequence in such that as . Please note that for all , so is bounded in and so is in . Fix such that for all . This means that is a bounded sequence in so we can extract a convergent subsequence to some . At this stage, notice that for all and converges to , so . Note also that, since , converges to , which implies that

□

Theorem 8.

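Let $A$ and $B$ be real matrices with the same number of columns. The reformulated maxmin problem

$$\begin{cases} \max \|Ax\|_2 \\ \|Bx\|_2 \leq 1 \end{cases}$$

has a solution if and only if $\ker(B)\subseteq\ker(A)$.

Proof.

If $\ker(B)\subseteq\ker(A)$, then we just need to call on Lemma 4. Conversely, if $\ker(B)\setminus\ker(A)\neq\varnothing$, then it suffices to consider the sequence $(nx_0)_{n\in\mathbb{N}}$ for $x_0\in\ker(B)\setminus\ker(A)$, since $\|B(nx_0)\|_2=0\leq 1$ for all $n\in\mathbb{N}$ and $\|A(nx_0)\|_2=n\|Ax_0\|_2\to\infty$ as $n\to\infty$. □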
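The scaling argument used in the converse direction can be checked numerically. The following Python sketch is an illustration only (the manuscript's own code is written in MATLAB); the matrices `A`, `B` and the vector `x0` are hypothetical choices for which the kernel of `B` is not contained in the kernel of `A`.

```python
import math


def matvec(M, x):
    """Product of a matrix (list of rows) with a vector."""
    return [sum(m * c for m, c in zip(row, x)) for row in M]


def norm2(v):
    """Euclidean norm of a vector."""
    return math.sqrt(sum(c * c for c in v))


# Hypothetical data: ker(B) = span{(0, 1)} while ker(A) = {0}.
A = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0, 0.0]]
x0 = [0.0, 1.0]  # x0 lies in ker(B) but not in ker(A)


def scaled_values(A, B, x0, ns):
    """Return (n, ||B(n x0)||, ||A(n x0)||) for each n in ns."""
    out = []
    for n in ns:
        x = [n * c for c in x0]
        out.append((n, norm2(matvec(B, x)), norm2(matvec(A, x))))
    return out


rows = scaled_values(A, B, x0, [1, 10, 100])
for n, b_norm, a_norm in rows:
    print(n, b_norm, a_norm)
```

Every multiple of `x0` stays feasible because its image under `B` is zero, while the objective grows linearly with `n`, so no maximizer can exist for this pair.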

4.5. Matrices on Quotient Spaces

Consider the maxmin

where X and Y are Banach spaces and with . Notice that if is a Hamel basis of X, then is a generating system of . By Zorn’s Lemma, it can be shown that contains a Hamel basis of . Observe that a subset C of is linearly independent if and only if is a linearly independent subset of Y.

In the finite dimensional case, we have

and

If denotes the canonical basis of , then is a generator system of . This generator system contains a basis of so let be a basis of . Please note that and for every . Therefore, the matrix associated with the linear map defined by can be obtained from the matrix B by removing the columns corresponding to the indices , in other words, the matrix associated with is . Similarly, the matrix associated with the linear map defined by is . As we mentioned above, recall that a subset C of is linearly independent if and only if is a linearly independent subset of . As a consequence, in order to obtain the basis , it suffices to look at the rank of B and consider the columns of B that allow such rank, which automatically gives us the matrix associated with , that is, .

Finally, let

denote the quotient map. Let . If , then . The vector defined by

verifies that

To simplify the notation, we can define the map

where z is the vector described right above.

5. Discussion

Here we compile all the results from the previous subsections and define the structure of the algorithm that solves the maxmin (3).

Let with . Then

- Case 1:

- . denotes the Moore–Penrose inverse of B.

- Case 2:

- . where and .

In case a real-life problem is modeled like a maxmin involving more operators, we proceed as the following remark establishes in accordance with the preliminaries of this manuscript (reducing the number of multiobjective functions to avoid the lack of solutions):

Remark 1.

Let and be sequences of continuous linear operators between Banach spaces X and Y. The maxmin

can be reformulated as (recall the second typical reformulation)

which can be transformed into a regular maxmin as in (1) by considering the operators

and

obtaining then

which is equivalent to

Observe that for the operators T and S to be well defined it is sufficient that and be in .

6. Materials and Methods

The initial methodology employed in this research work is the Mathematical Modelling of real-life problems. The subsequent methodology followed is given by the Axiomatic-Deductive Method framed in the First-Order Mathematical language. Inside this framework, we deal with the Category Theory (the main category involved is the Category of Banach spaces with the Bounded Operators). The final methodology used is the implementation of our mathematical results in the MATLAB programming language.

7. Conclusions

We finally enumerate the novelties provided in this work, which serve as conclusions for our research:

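- We prove that the original maxmin problem (6), namely $\max\|T(x)\|$ subject to $\min\|S(x)\|$, has no solution (Theorem 2).

- We then rewrite (6) as problem (7), namely $\max\|T(x)\|$ subject to $\|S(x)\|\leq 1$, which still models the real-life problem very accurately and has a solution if and only if $\ker(S)\subseteq\ker(T)$ (Theorem 8).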

- A MATLAB code is provided for computing the solution to the maxmin problem. See Appendix C.

- Our solution applies to the design of truly optimal minimum stored-energy TMS coils and to finding more complex optimal geolocations involving statistical variables. See Appendices A and B.

- This article represents an interdisciplinary work involving pure abstract nontrivial proven theorems and programming codes that can be directly applied to different situations in the real world.

Author Contributions

Conceptualization, S.M.-P., F.J.G.-P., C.C.-S. and A.S.-A.; methodology, S.M.-P., F.J.G.-P., C.C.-S. and A.S.-A.; software, S.M.-P., F.J.G.-P., C.C.-S. and A.S.-A.; validation, S.M.-P., F.J.G.-P., C.C.-S. and A.S.-A.; formal analysis, S.M.-P., F.J.G.-P., C.C.-S. and A.S.-A.; investigation, S.M.-P., F.J.G.-P., C.C.-S. and A.S.-A.; resources, S.M.-P., F.J.G.-P., C.C.-S. and A.S.-A.; data curation, S.M.-P., F.J.G.-P., C.C.-S. and A.S.-A.; writing—original draft preparation, S.M.-P., F.J.G.-P., C.C.-S. and A.S.-A.; writing—review and editing, S.M.-P., F.J.G.-P., C.C.-S. and A.S.-A.; visualization, S.M.-P., F.J.G.-P., C.C.-S. and A.S.-A.; supervision, S.M.-P., F.J.G.-P., C.C.-S. and A.S.-A.; project administration, S.M.-P., F.J.G.-P., C.C.-S. and A.S.-A.; funding acquisition, S.M.-P., F.J.G.-P., C.C.-S. and A.S.-A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Research Grant PGC-101514-B-100 awarded by the Spanish Ministry of Science, Innovation and Universities and partially funded by FEDER.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Appendix A. Applications to Optimal TMS Coils

Appendix A.1. Introduction to TMS Coils

Transcranial Magnetic Stimulation (TMS) is a non-invasive technique to stimulate the brain. We refer the reader to [8,10,13,14,15,16,17,18,19,20,21,22,23] for a description of the development of TMS coil design as an optimization problem.

An important safety issue in TMS is the minimization of the stimulation of non-target areas. Therefore, the development of TMS as a medical tool would benefit from the design of TMS stimulators capable of inducing a maximum electric field in the region of interest, while minimizing the undesired stimulation in other prescribed regions.

Appendix A.2. Minimum Stored-Energy TMS Coil

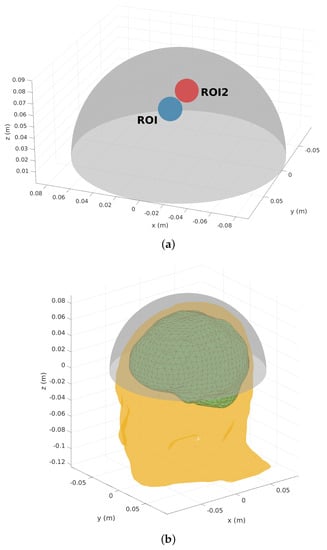

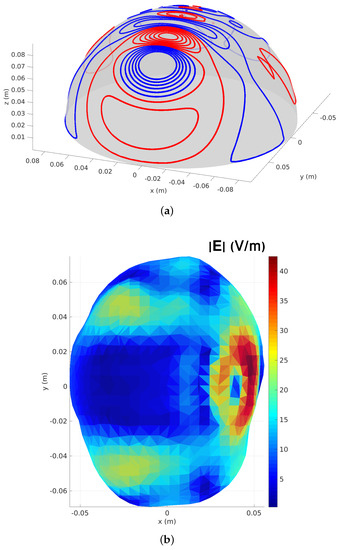

In the following section, in order to illustrate an application of the theoretical model developed in this manuscript, we are going to tackle the design of a minimum stored-energy hemispherical TMS coil of radius 9 cm, constructed to stimulate only one cerebral hemisphere. To this end, the coil must produce an E-field which is both maximum in a spherical region of interest (ROI) and minimum in a second region (ROI2). Both volumes of interest are of 1 cm radius and formed by 400 points, where ROI is shifted by 5 cm in the positive z-direction and by 2 cm in the positive y-direction; and ROI2 is shifted by 5 cm in the positive z-direction and by 2 cm in the negative y-direction, as shown in Figure A1a. In Figure A1b a simple human head made of two compartments, scalp and brain, used to evaluate the performance of the designed stimulator is shown.

Figure A1.

(a) Description of the hemispherical surface where the optimal stream function must be found, along with the spherical regions of interest ROI and ROI2 where the electric field must be maximized and minimized, respectively. (b) Description of the two-compartment scalp-brain model.


By using the formalism presented in [10] this TMS coil design problem can be posed as the following optimization problem:

where is the stream function (the optimization variable), are the numbers of points in the ROI and ROI2, the number of mesh nodes, is the inductance matrix, and and are the E-field matrices in the prescribed x-direction.

Figure A2.

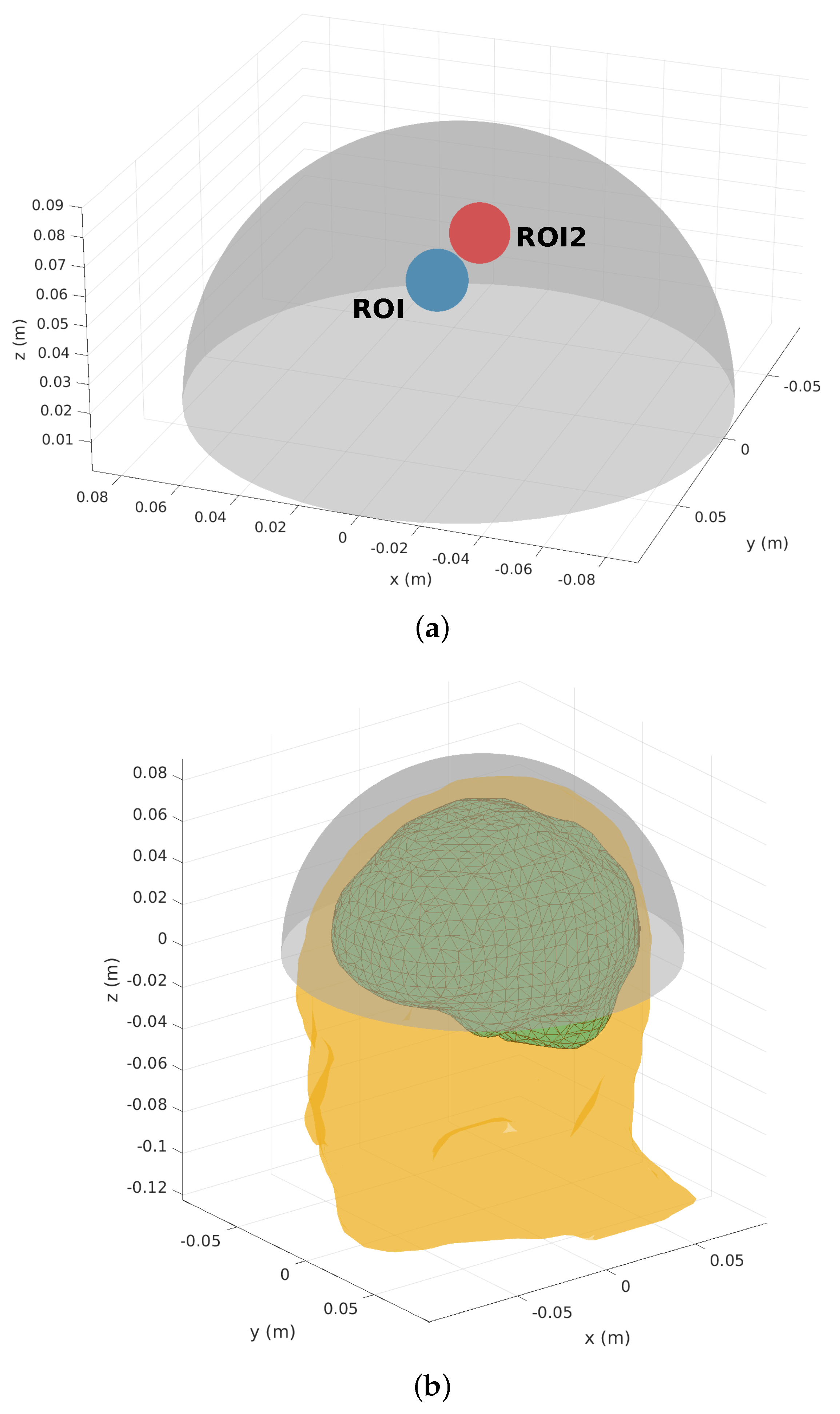

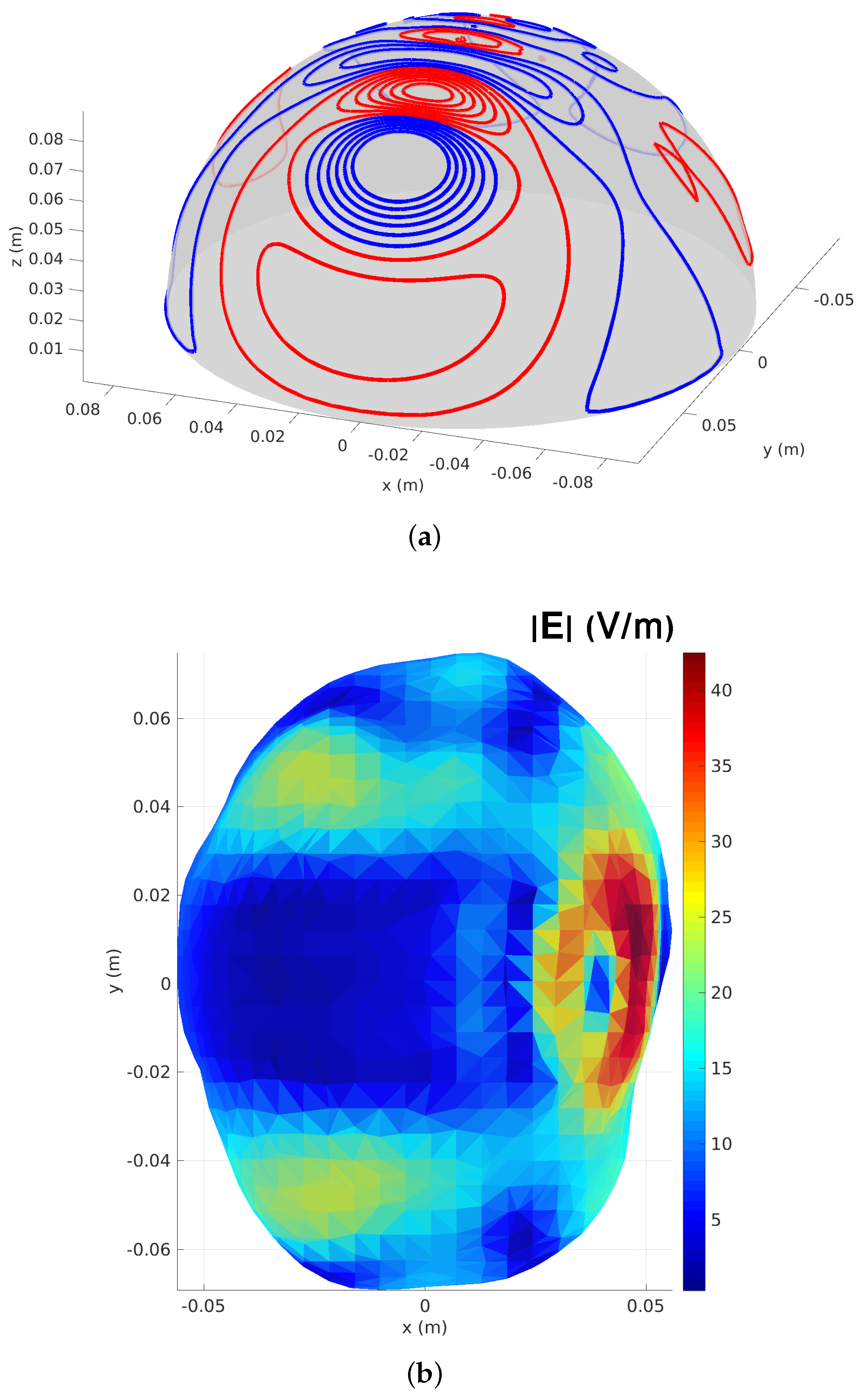

(a) Wirepaths with 18 turns of the TMS coil solution (red wires indicate reversed current flow with respect to blue). (b) E-field modulus induced at the surface of the brain by the designed TMS coil.


Figure A2a shows the coil solution of the problem in Equation (A1), computed by using the theoretical model proposed in this manuscript (see Section 5 and Appendix A.3). As expected, the wire arrangement is remarkably concentrated over the region of stimulation.

To evaluate the stimulation of the coil, we resort to the direct BEM [24], which permits the computation of the electric field induced by the coils in conducting systems. As can be seen in Figure A2b, the TMS coil fulfils the initial requirements of stimulating only one hemisphere of the brain (the one where ROI is found); whereas the electric field induced in the other cerebral hemisphere (where ROI2 can be found) is minimum.

Appendix A.3. Reformulation of Problem (A1) to Turn it into a Maxmin

Now it is time to reformulate the multiobjective optimization problem given in (A1), because it has no solution in virtue of Theorem 2. We will transform it into a maxmin problem as in (7) so that we can apply the theoretical model described in Section 5:

Since squaring is a strictly increasing function on $[0,\infty)$, the previous problem is trivially equivalent to the following one:

Next, we apply Cholesky decomposition to L to obtain so we have that so we obtain

Since C is an invertible square matrix, so the previous multiobjective optimization problem has no solution. Therefore it must be reformulated. We call then on Remark 1 to obtain:

which in essence is

where . The matrix D in this specific case has null kernel. In accordance with the previous sections, Problem (A5) is remodeled as

Finally, we can refer to Section 5 to solve the latter problem.

Appendix B. Applications to Optimal Geolocation

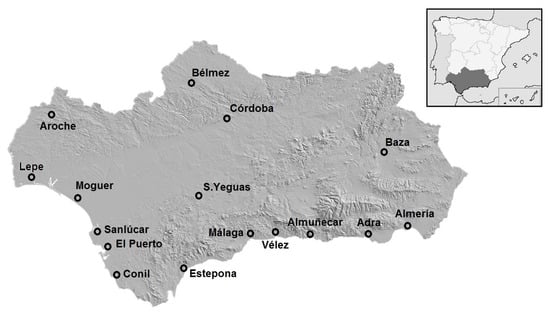

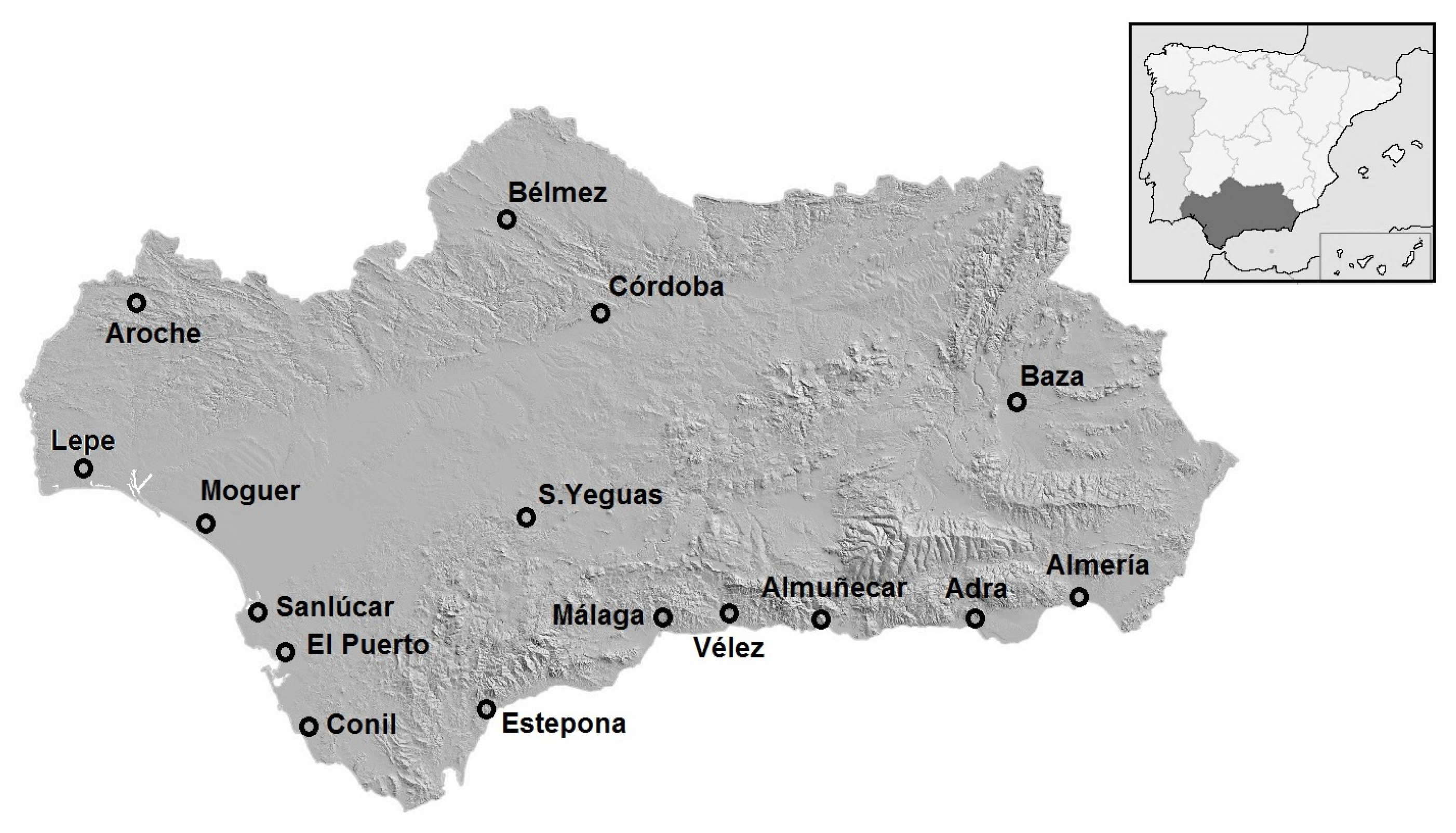

Several studies involving optimal geolocation [25], multivariate statistics [26,27] and multiobjective problems [28,29,30] have been carried out recently. To show another application of maxmin multiobjective problems, we consider in this work the best location for a rural tourism inn, taking into account several measured climate variables. Locations with low highest temperature, radiation and evapotranspiration in summer and high values in winter have climatic characteristics that are desirable for potential visitors. To solve this problem, we choose 11 locations on the Andalusian coastline and 5 inland, near the mountains. We have collected the data from the official Andalusian government webpage [31], evaluating the mean values of these variables over the period 2013–2018. The months considered in the study were January and July.

Table A1.

Mean values of highest temperature (T) in degrees Celsius, radiation (R) in , and evapotranspiration (E) in mm/day, measured in January (winter) and July (summer) between 2013 and 2018.


| Site | T-Winter | R-Winter | E-Winter | T-Summer | R-Summer | E-Summer |
|---|---|---|---|---|---|---|
| Sanlúcar | 15.959 | 9.572 | 1.520 | 30.086 | 27.758 | 6.103 |
| Moguer | 16.698 | 9.272 | 0.925 | 30.424 | 27.751 | 5.222 |
| Lepe | 16.659 | 9.503 | 1.242 | 30.610 | 28.297 | 6.836 |
| Conil | 16.322 | 9.940 | 1.331 | 28.913 | 26.669 | 5.596 |
| El Puerto | 16.504 | 9.767 | 1.625 | 31.052 | 28.216 | 6.829 |
| Estepona | 16.908 | 10.194 | 1.773 | 31.233 | 27.298 | 6.246 |
| Málaga | 17.663 | 9.968 | 1.606 | 32.358 | 27.528 | 6.378 |
| Vélez | 18.204 | 9.819 | 1.905 | 31.912 | 26.534 | 5.911 |
| Almuñécar | 17.733 | 10.247 | 1.404 | 29.684 | 25.370 | 4.952 |
| Adra | 17.784 | 10.198 | 1.637 | 28.929 | 26.463 | 5.143 |
| Almería | 17.468 | 10.068 | 1.561 | 30.342 | 27.335 | 5.793 |
| Aroche | 16.477 | 9.797 | 1.434 | 34.616 | 27.806 | 6.270 |
| Córdoba | 14.871 | 8.952 | 1.149 | 36.375 | 28.503 | 7.615 |
| Baza | 13.386 | 8.303 | 3.054 | 35.754 | 27.824 | 1.673 |
| Bélmez | 13.150 | 8.216 | 1.215 | 35.272 | 28.478 | 7.400 |
| S. Yeguas | 13.656 | 9.155 | 1.247 | 33.660 | 28.727 | 7.825 |

To find the optimal location, let us evaluate the site where the variables' mean values are maximum in January and minimum in July. Here we have a typical multiobjective problem with two data matrices that can be formulated as follows:

where A and B are real 16 × 3 matrices with the values of the three variables taken into account (highest temperature, radiation and evapotranspiration) in January and July respectively. To avoid unit effects, we standardized the variables (mean $0$ and standard deviation $1$). The vector x is the solution of the multiobjective problem.

Since (A7) lacks any solution in view of Theorem 2, we reformulate it as we showed in Remark 1 by the following:

with matrix , where is the identity matrix with . Notice that it also verifies that . Observe that, according to the previous sections, (A8) can be remodeled into

and solved accordingly.

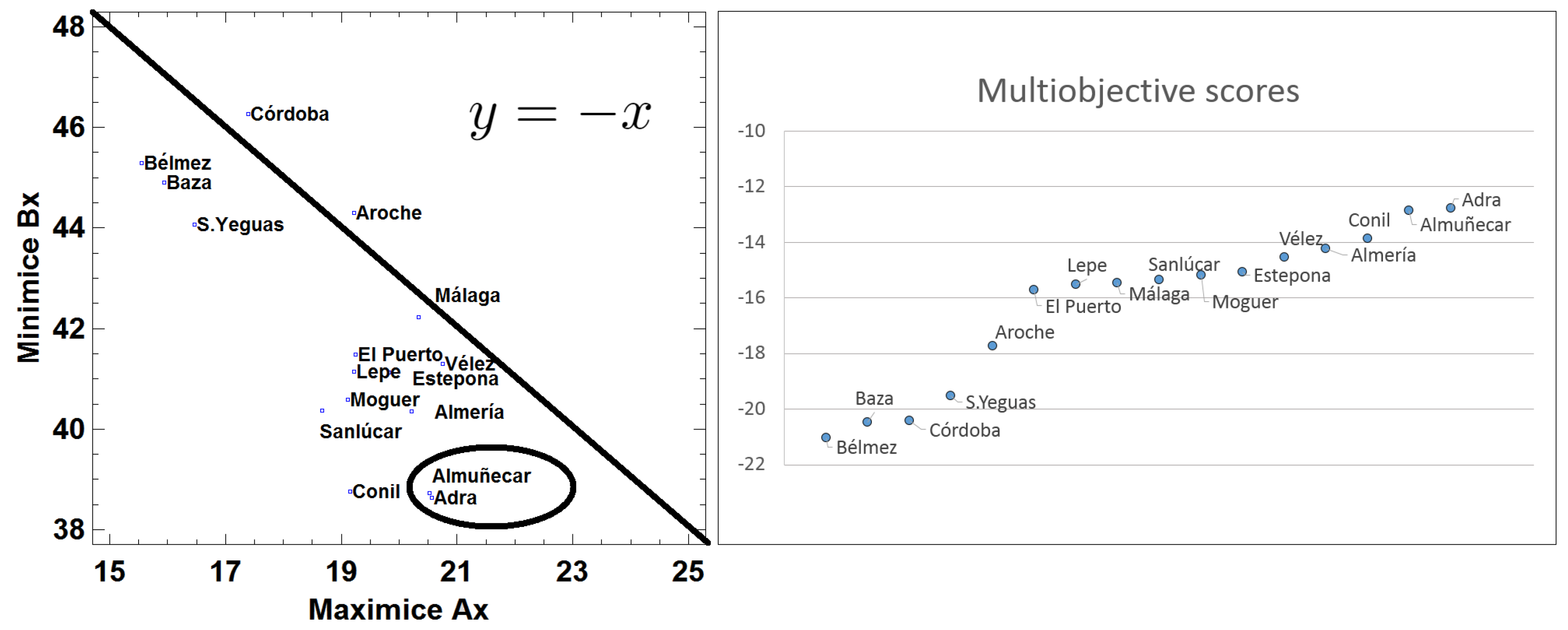

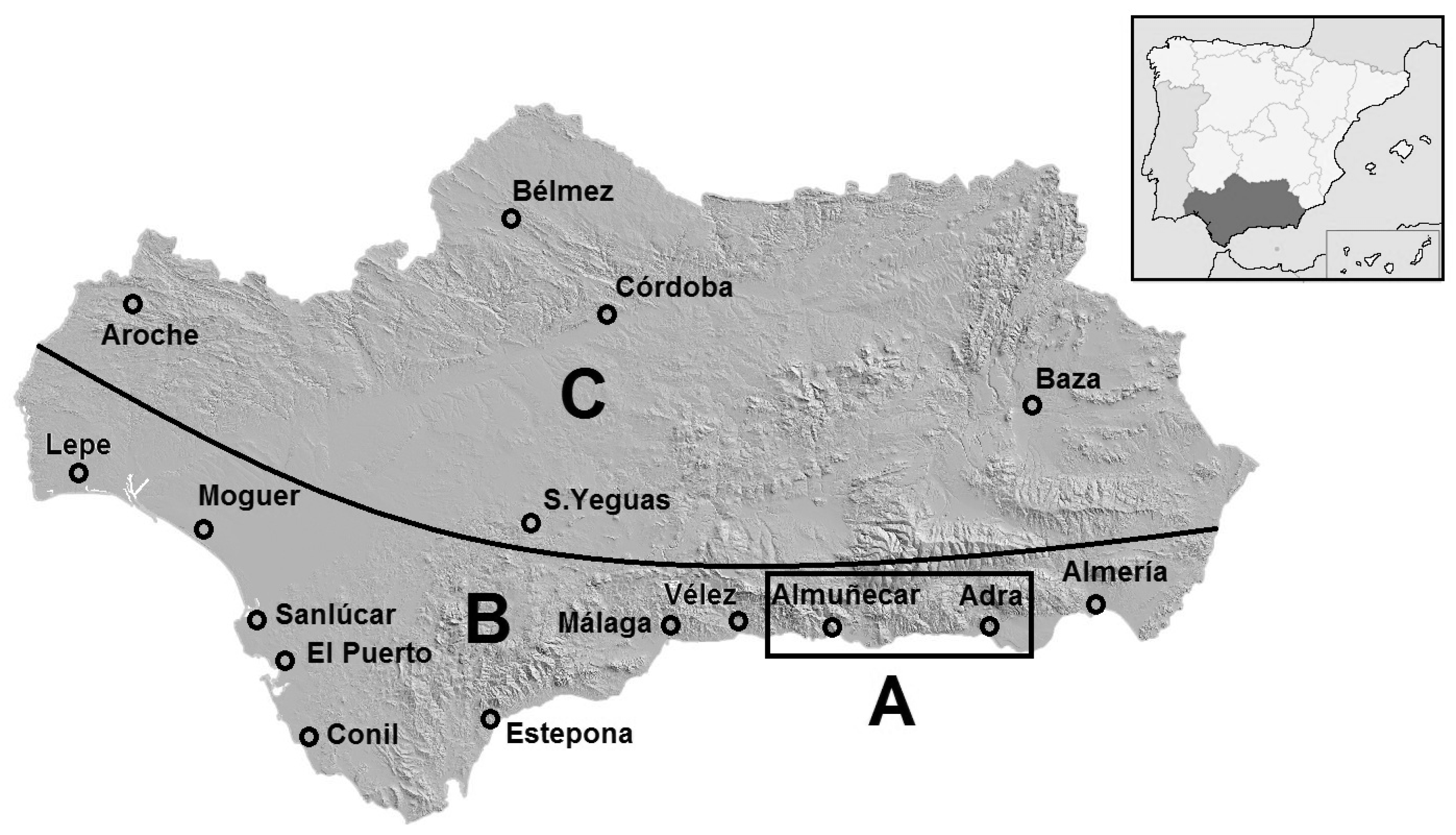

Figure A3.

Geographic distribution of the sites considered in the study. Eleven sites are on the coastline of the region and five are inland.


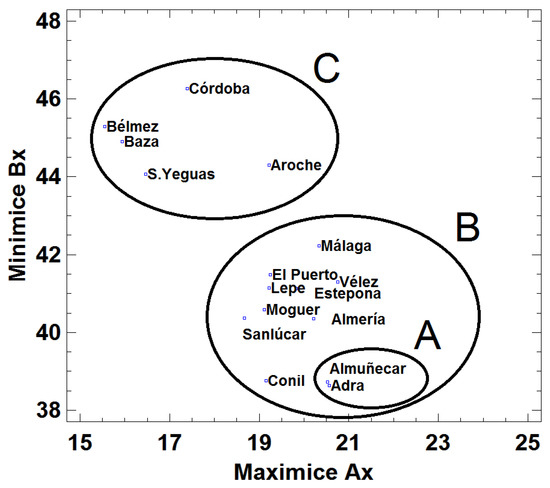

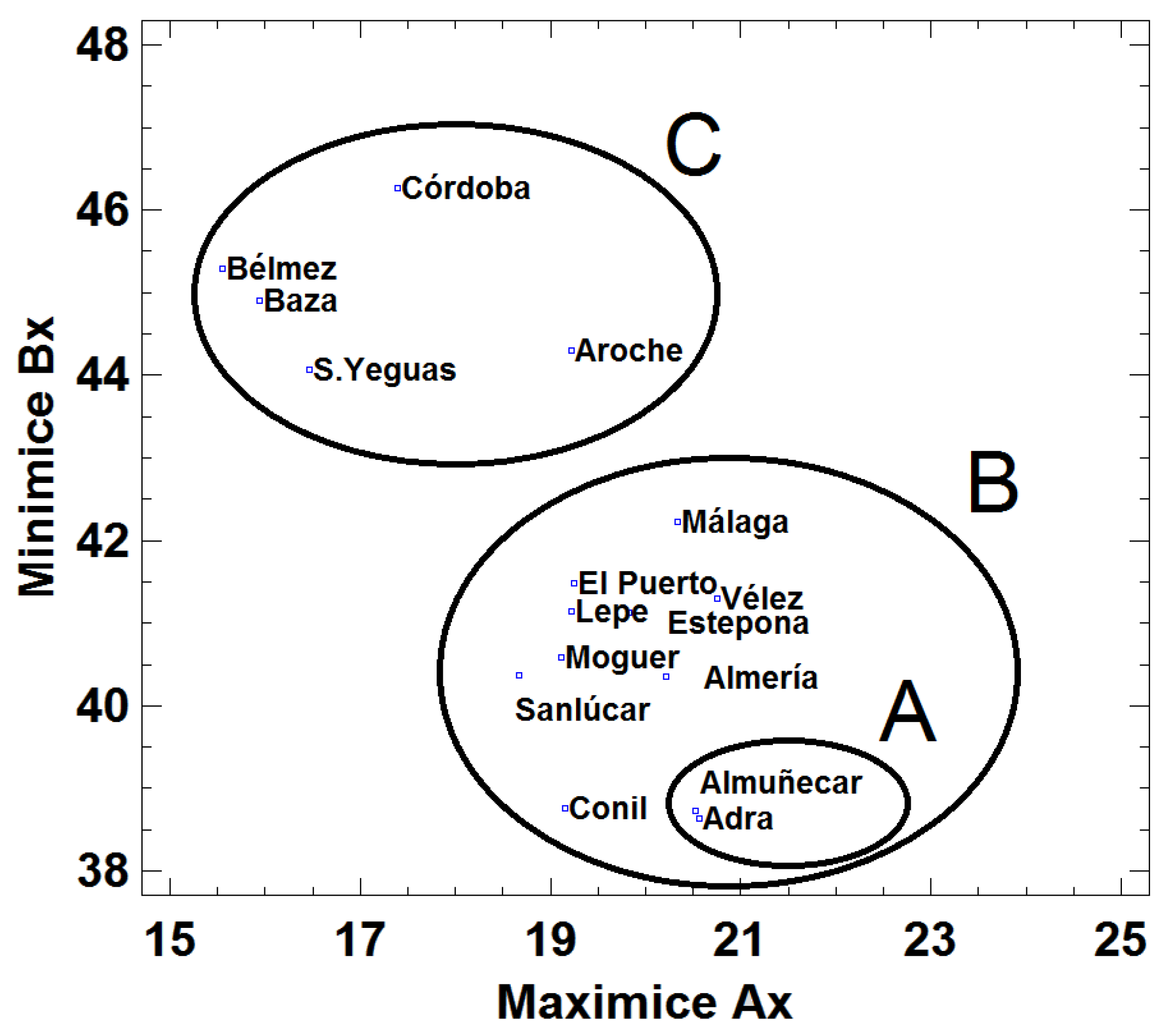

Figure A4.

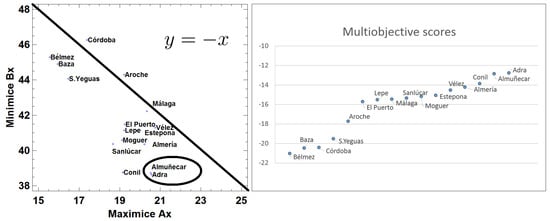

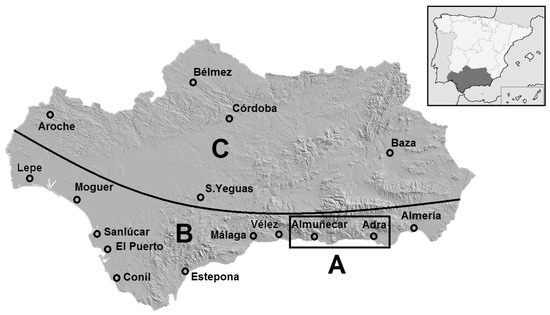

Locations considering Ax and Bx axes. Group named represents the best places for the tourism rural inn, near Costa Tropical (Granada province). Sites on are also in the coastline of the region. Sites on are the worst locations considering the multiobjective problem, they are situated inside the region.


Figure A5.

(left) Sites considering Ax and Bx and the function . The places with high values of Ax (max) and low values of Bx (min) are the best locations for the solution of the multiobjective problem (round). (right) Multiobjective scores values obtained for each site projecting the point in the function . High values of this score indicate better places to locate the tourism rural inn.


Figure A6.

Distribution of the three areas described in Figure A4. Areas A and B are on the coastline and area C is inland.


The solution of (A9) allows us to draw the sites in a plot with $Ax$ on the X axis and $Bx$ on the Y axis. We observe that better places have high values of $Ax$ and low values of $Bx$. Hence, we can sort the sites according to how well they achieve the objectives, in a similar way to factor analysis (with two factors, the maximum and the minimum, instead of m variables).

Appendix C. Algorithms

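To solve the real problems posed in this work, the algorithms were developed in MATLAB. As pointed out in Section 5, our method relies on finding the generalized supporting vectors. Thus, we refer the reader to [8] (Appendix A.1) for the MATLAB code “sol_1.m” to compute a basis of generalized supporting vectors of a finite number of real matrices $A_1,\dots,A_k$ with $n$ columns each, in other words, a solution of Problem (A10), which was originally posed and solved in [7]:

$$\begin{cases} \max \sum_{i=1}^{k}\|A_i x\|_2^2 \\ \|x\|_2=1 \end{cases} \tag{A10}$$

The solution of the previous problem (see [7] (Theorem 3.3)) is given by

$$\max_{\|x\|_2=1}\sum_{i=1}^{k}\|A_i x\|_2^2=\lambda_{\max}\left(\sum_{i=1}^{k}A_i^{T}A_i\right)$$

and

$$\arg\max_{\|x\|_2=1}\sum_{i=1}^{k}\|A_i x\|_2^2=V\left(\lambda_{\max}\left(\sum_{i=1}^{k}A_i^{T}A_i\right)\right)\cap \mathsf{S}_{\ell_2^n},$$

where $\lambda_{\max}$ denotes the greatest eigenvalue, $V$ denotes the associated eigenvector space, and $\mathsf{S}_{\ell_2^n}$ is the Euclidean unit sphere of $\mathbb{R}^n$. We refer the reader to [8] (Theorem 4.2) for a generalization of [7] (Theorem 3.3) to an infinite number of operators on an infinite dimensional Hilbert space.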
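The eigenvalue characterization of generalized supporting vectors can be sketched in a few lines. The following is not the “sol_1.m” code of [8]; it is a minimal Python illustration that replaces a full eigendecomposition with plain power iteration, and the names `gram_sum` and `power_iteration` are hypothetical. It assumes that the greatest eigenvalue of $\sum_i A_i^{T}A_i$ is simple and that the starting vector is not orthogonal to its eigenvector.

```python
import math


def gram_sum(mats):
    """Return G = sum_i A_i^T A_i for matrices given as lists of rows."""
    n = len(mats[0][0])
    G = [[0.0] * n for _ in range(n)]
    for A in mats:
        for row in A:
            for j in range(n):
                for k in range(n):
                    G[j][k] += row[j] * row[k]
    return G


def power_iteration(G, iters=1000):
    """Approximate the greatest eigenvalue of a symmetric PSD matrix G
    and a unit eigenvector, by repeated multiplication and normalization."""
    n = len(G)
    v = [1.0 / math.sqrt(n)] * n
    for _ in range(iters):
        w = [sum(G[j][k] * v[k] for k in range(n)) for j in range(n)]
        nw = math.sqrt(sum(c * c for c in w))
        if nw == 0.0:  # G v = 0: nothing to iterate on
            break
        v = [c / nw for c in w]
    # Rayleigh quotient v^T G v (v has unit norm).
    lam = sum(v[j] * sum(G[j][k] * v[k] for k in range(n)) for j in range(n))
    return lam, v


# Hypothetical example: A_1 = diag(2, 1), A_2 = diag(0, 2).
A1 = [[2.0, 0.0], [0.0, 1.0]]
A2 = [[0.0, 0.0], [0.0, 2.0]]
lam, v = power_iteration(gram_sum([A1, A2]))
print(lam, v)
```

Power iteration returns a single unit eigenvector; when the greatest eigenvalue is repeated, the whole eigenspace intersected with the unit sphere solves the problem, and an eigendecomposition routine would be the more appropriate tool.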

As we pointed out in Theorem 8, the solution of the problem

$$\begin{cases} \max \|Ax\|_2 \\ \|Bx\|_2 \leq 1 \end{cases}$$

exists if and only if $\ker(B)\subseteq\ker(A)$. Here is a simple code to check this.

|
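One way to test whether the kernel of $B$ is contained in the kernel of $A$ is to compare ranks: the inclusion holds exactly when stacking the rows of $A$ under those of $B$ does not increase the rank. The Python sketch below illustrates this idea; it is not the authors' MATLAB listing, the function names `rank` and `kernel_contained` are hypothetical, and the absolute tolerance `tol` is an assumption that may need adjusting for badly scaled data.

```python
def rank(M, tol=1e-10):
    """Rank of a matrix (list of rows) via Gaussian elimination
    with partial pivoting; entries below tol count as zero."""
    rows = [list(map(float, r)) for r in M]
    n_rows = len(rows)
    n_cols = len(rows[0]) if rows else 0
    r = 0
    for c in range(n_cols):
        if r == n_rows:
            break
        pivot = max(range(r, n_rows), key=lambda i: abs(rows[i][c]))
        if abs(rows[pivot][c]) <= tol:
            continue  # no usable pivot in this column
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, n_rows):
            f = rows[i][c] / rows[r][c]
            for j in range(c, n_cols):
                rows[i][j] -= f * rows[r][j]
        r += 1
    return r


def kernel_contained(B, A, tol=1e-10):
    """True when ker(B) is contained in ker(A),
    i.e. when rank([B; A]) equals rank(B)."""
    stacked = [list(r) for r in B] + [list(r) for r in A]
    return rank(stacked, tol) == rank(B, tol)


print(kernel_contained([[1, 0], [0, 1]], [[3, 4]]))   # ker(B) = {0}
print(kernel_contained([[1, 0]], [[1, 0], [0, 1]]))   # ker(B) not in ker(A)
```

The rank criterion follows from the rank-nullity theorem, since the kernel of the stacked matrix is the intersection of the two kernels.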

Now we present the code to solve the first case of the previous maxmin problem, that is, the case where . We refer the reader to Section 5 on which this code is based.

|
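For the case where $B$ has null kernel, a possible computation is sketched below in Python. It is not the authors' `case_1` MATLAB function. It assumes that the reduction takes the form $x=B^{+}y$, where $y$ is a supporting vector of $AB^{+}$ and $B^{+}=(B^{T}B)^{-1}B^{T}$; it also assumes that $A$ is nonzero, that $B^{T}B$ is well conditioned, and that the greatest eigenvalue of $(AB^{+})^{T}AB^{+}$ is simple. All function names are hypothetical.

```python
import math


def transpose(M):
    return [list(col) for col in zip(*M)]


def matmul(P, Q):
    Qt = transpose(Q)
    return [[sum(a * b for a, b in zip(row, col)) for col in Qt] for row in P]


def matvec(M, v):
    return [sum(a * b for a, b in zip(row, v)) for row in M]


def norm2(v):
    return math.sqrt(sum(c * c for c in v))


def inverse(M):
    """Gauss-Jordan inverse of a small square matrix."""
    n = len(M)
    aug = [list(map(float, row)) + [float(i == j) for j in range(n)]
           for i, row in enumerate(M)]
    for c in range(n):
        p = max(range(c, n), key=lambda i: abs(aug[i][c]))
        if abs(aug[p][c]) < 1e-12:
            raise ValueError("singular matrix: ker(B) is not null")
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [v / piv for v in aug[c]]
        for i in range(n):
            if i != c:
                f = aug[i][c]
                aug[i] = [a - f * b for a, b in zip(aug[i], aug[c])]
    return [row[n:] for row in aug]


def top_eigenvector(G, iters=500):
    """Power iteration on a symmetric positive semidefinite matrix."""
    v = [1.0] * len(G)
    for _ in range(iters):
        w = matvec(G, v)
        nw = norm2(w)
        if nw == 0.0:
            break
        v = [c / nw for c in w]
    nv = norm2(v)
    return [c / nv for c in v]


def solve_case_1_sketch(A, B):
    """Maximize ||Ax||_2 subject to ||Bx||_2 <= 1 when ker(B) = {0}."""
    Bt = transpose(B)
    B_pinv = matmul(inverse(matmul(Bt, B)), Bt)   # B^+ = (B^T B)^{-1} B^T
    C = matmul(A, B_pinv)                          # C = A B^+
    y = top_eigenvector(matmul(transpose(C), C))   # supporting vector of C
    x = matvec(B_pinv, y)                          # x = B^+ y
    return x, norm2(matvec(A, x))


A = [[1.0, 0.0], [0.0, 1.0]]
B = [[2.0, 0.0], [0.0, 1.0]]
x, value = solve_case_1_sketch(A, B)
print(x, value)
```

In the example, the feasible set is the ellipse $4x_1^2+x_2^2\leq 1$, so the farthest feasible points from the origin are $(0,\pm 1)$ and the optimal value is $1$.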

Next, we can compute the global solution of the maxmin problem by means of the following code. Again, we refer the reader to Section 5 on which this code is based.

|

Notice that we use the case_1 function described above and a new function named colsindep. We include the code to implement this new function below.

|
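Selecting columns of $B$ that realize its rank, as described in Section 4.5, can be sketched as follows. This Python code is an illustration and not the authors' `colsindep` MATLAB function; the names `independent_columns_sketch` and `select_columns` are hypothetical, the selection is greedy from left to right, and the absolute tolerance `tol` is an assumption.

```python
def independent_columns_sketch(B, tol=1e-10):
    """Indices of a maximal set of linearly independent columns of B,
    chosen greedily from left to right."""
    n_rows, n_cols = len(B), len(B[0])
    basis = []    # reduced copies of the selected columns
    pivots = []   # pivot row of each reduced column
    chosen = []
    for j in range(n_cols):
        col = [float(B[i][j]) for i in range(n_rows)]
        # Eliminate the components along the columns selected so far.
        for b, p in zip(basis, pivots):
            f = col[p] / b[p]
            col = [c - f * bc for c, bc in zip(col, b)]
        p = max(range(n_rows), key=lambda i: abs(col[i]))
        if abs(col[p]) > tol:  # something is left: the column is independent
            basis.append(col)
            pivots.append(p)
            chosen.append(j)
    return chosen


def select_columns(M, idx):
    """Submatrix of M formed by the columns listed in idx."""
    return [[row[j] for j in idx] for row in M]


B = [[1, 2, 0], [0, 0, 1]]
idx = independent_columns_sketch(B)
print(idx, select_columns(B, idx))
```

The same index list can then be applied to $A$ with `select_columns`, which mirrors the description of removing the same columns from both matrices.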

The MATLAB code to compute the solution of the TMS coil problem (A6):

with the matrix , where C is the Cholesky matrix of L, and in this case it verifies that . Recall that (A6) comes from (A1):

|

Finally, we provide the code to compute the solution of the optimal geolocation problem (A9):

with matrix . Notice that it also verifies that and A and B are composed by standardized variables. Recall that (A9) comes from (A7):

|

References

1. Huang, N.; Ma, C.F. Modified conjugate gradient method for obtaining the minimum-norm solution of the generalized coupled Sylvester-conjugate matrix equations. Appl. Math. Model. 2016, 40, 1260–1275.

2. Yassin, B.; Lahcen, A.; Zeriab, E.S.M. Hybrid optimization procedure applied to optimal location finding for piezoelectric actuators and sensors for active vibration control. Appl. Math. Model. 2018, 62, 701–716.

3. Bishop, E.; Phelps, R.R. A proof that every Banach space is subreflexive. Bull. Am. Math. Soc. 1961, 67, 97–98.

4. Bishop, E.; Phelps, R.R. The support functionals of a convex set. In Proceedings of Symposia in Pure Mathematics; American Mathematical Society: Providence, RI, USA, 1963; Volume VII, pp. 27–35.

5. Lindenstrauss, J. On operators which attain their norm. Israel J. Math. 1963, 1, 139–148.

6. James, R.C. Characterizations of reflexivity. Stud. Math. 1964, 23, 205–216.

7. Cobos-Sánchez, C.; García-Pacheco, F.J.; Moreno-Pulido, S.; Sáez-Martínez, S. Supporting vectors of continuous linear operators. Ann. Funct. Anal. 2017, 8, 520–530.

8. Garcia-Pacheco, F.J.; Cobos-Sanchez, C.; Moreno-Pulido, S.; Sanchez-Alzola, A. Exact solutions to $\max_{\|x\|=1}\sum_{i=1}^{\infty}\|T_i(x)\|^2$ with applications to Physics, Bioengineering and Statistics. Commun. Nonlinear Sci. Numer. Simul. 2020, 82, 105054.

9. García-Pacheco, F.J.; Naranjo-Guerra, E. Supporting vectors of continuous linear projections. Int. J. Funct. Anal. Oper. Theory Appl. 2017, 9, 85–95.

10. Cobos Sánchez, C.; Garcia-Pacheco, F.J.; Guerrero Rodriguez, J.M.; Hill, J.R. An inverse boundary element method computational framework for designing optimal TMS coils. Eng. Anal. Bound. Elem. 2018, 88, 156–169.

11. Bohnenblust, F. A characterization of complex Hilbert spaces. Portugal. Math. 1942, 3, 103–109.

12. Kakutani, S. Some characterizations of Euclidean space. Jpn. J. Math. 1939, 16, 93–97.

13. Sánchez, C.C.; Rodriguez, J.M.G.; Olozábal, Á.Q.; Blanco-Navarro, D. Novel TMS coils designed using an inverse boundary element method. Phys. Med. Biol. 2016, 62, 73–90.

14. Marin, L.; Power, H.; Bowtell, R.W.; Cobos Sanchez, C.; Becker, A.A.; Glover, P.; Jones, A. Boundary element method for an inverse problem in magnetic resonance imaging gradient coils. Comput. Model. Eng. Sci. 2008, 23, 149–173.

15. Marin, L.; Power, H.; Bowtell, R.W.; Cobos Sanchez, C.; Becker, A.A.; Glover, P.; Jones, I.A. Numerical solution of an inverse problem in magnetic resonance imaging using a regularized higher-order boundary element method. In Boundary Elements and Other Mesh Reduction Methods XXIX; WIT Press: Southampton, UK, 2007; Volume 44, pp. 323–332.

16. Wassermann, E.; Epstein, C.; Ziemann, U.; Walsh, V.; Paus, T.; Lisanby, S. Oxford Handbook of Transcranial Stimulation (Oxford Handbooks), 1st ed.; Oxford University Press: New York, NY, USA, 2008.

17. Romei, V.; Murray, M.M.; Merabet, L.B.; Thut, G. Occipital Transcranial Magnetic Stimulation Has Opposing Effects on Visual and Auditory Stimulus Detection: Implications for Multisensory Interactions. J. Neurosci. 2007, 27, 11465–11472.

18. Koponen, L.M.; Nieminen, J.O.; Ilmoniemi, R.J. Minimum-energy Coils for Transcranial Magnetic Stimulation: Application to Focal Stimulation. Brain Stimul. 2015, 8, 124–134.

19. Koponen, L.M.; Nieminen, J.O.; Mutanen, T.P.; Stenroos, M.; Ilmoniemi, R.J. Coil optimisation for transcranial magnetic stimulation in realistic head geometry. Brain Stimul. 2017, 10, 795–805.

20. Gomez, L.J.; Goetz, S.M.; Peterchev, A.V. Design of transcranial magnetic stimulation coils with optimal trade-off between depth, focality, and energy. J. Neural Eng. 2018, 15, 046033.

21. Wang, B.; Shen, M.R.; Deng, Z.D.; Smith, J.E.; Tharayil, J.J.; Gurrey, C.J.; Gomez, L.J.; Peterchev, A.V. Redesigning existing transcranial magnetic stimulation coils to reduce energy: application to low field magnetic stimulation. J. Neural Eng. 2018, 15, 036022.

22. Grandy, W.T. Time Evolution in Macroscopic Systems. I. Equations of Motion. Found. Phys. 2004, 34, 1–20.

23. Sakurai, J.J. Modern Quantum Mechanics; Addison-Wesley Publishing Company: Reading, MA, USA, 1993.

24. Sanchez, C.C.; Bowtell, R.W.; Power, H.; Glover, P.; Marin, L.; Becker, A.A.; Jones, A. Forward electric field calculation using BEM for time-varying magnetic field gradients and motion in strong static fields. Eng. Anal. Bound. Elem. 2009, 33, 1074–1088.

25. Jäntschi, L.; Bálint, D.; Bolboaca, S. Multiple Linear Regressions by Maximizing the Likelihood under Assumption of Generalized Gauss-Laplace Distribution of the Error. Comput. Math. Methods Med. 2016, 2016, 1–8.

26. Gil-García, I.C.; García-Cascales, M.S.; Fernández-Guillamón, A.; Molina-García, A. Categorization and Analysis of Relevant Factors for Optimal Locations in Onshore and Offshore Wind Power Plants: A Taxonomic Review. J. Mar. Sci. Eng. 2019, 7, 391.

27. Pérez Morales, A.; Castillo, F.; Pardo-Zaragoza, P. Vulnerability of Transport Networks to Multi-Scenario Flooding and Optimum Location of Emergency Management Centers. Water 2019, 11, 1197.

28. Choi, J.W.; Kim, M.K. Multi-Objective Optimization of Voltage-Stability Based on Congestion Management for Integrating Wind Power into the Electricity Market. Appl. Sci. 2017, 7, 573.

29. Zavala, G.R.; García-Nieto, J.; Nebro, A.J. Qom—A New Hydrologic Prediction Model Enhanced with Multi-Objective Optimization. Appl. Sci. 2019, 10, 251.

30. Susowake, Y.; Masrur, H.; Yabiku, T.; Senjyu, T.; Motin Howlader, A.; Abdel-Akher, M.; Hemeida, A.M. A Multi-Objective Optimization Approach towards a Proposed Smart Apartment with Demand-Response in Japan. Energies 2019, 13, 127.

31. ESTACIONES AGROCLIMÁTICAS. Available online: https://www.juntadeandalucia.es/agriculturaypesca/ifapa/ria/servlet/FrontController (accessed on 18 September 2019).

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).