1. Introduction

The trustworthiness of software is a hot topic of research, and software performance is one of the attributes that affect it. As software grows in scale and complexity, many software products encounter performance problems, and many of these problems undoubtedly lower software trustworthiness. In recent years, accidents caused by software performance problems have had a significant impact on society. For example, Softbank, the third largest mobile phone company in Japan, planned to attract users by reducing mobile fees. However, the influx of a large number of users in a short time paralyzed its computer system, and Softbank lost more than 100 million yen. Software in safety-critical areas, such as aerospace control, finance, transportation and communication, needs to achieve a higher degree of trustworthiness [1,2].

Performance measurement is often a challenging task [3,4,5,6]. Automated assistance for software performance improvement has been described before, based on measurements, performance models or both. To analyze measurements, the Paradyn tools [5] provide extensive facilities for automated instrumentation and bottleneck searches in parallel applications, and they were enhanced with historical data in [4]. IT flexibility has been posited as a critical enabler in attaining competitive performance gains [7]. Other tools for the causal analysis of measurements include Poirot [8] and those in [9,10].

Performance is a crucial attribute of software systems [11]. When performance is measured early in the development process, the aim is to derive appropriate architectural decisions that improve the performance of the system. Performance models are used to describe how system operations use resources and how resource contention affects operations [12]. Performance estimation at early stages of software development is difficult, as many aspects, such as design decisions, code and the execution environment, may be unknown or uncertain. In many application domains, such as heterogeneous distributed systems, enterprise applications and cloud computing, performance evaluation is also affected by external factors, such as increasingly fluctuating workloads and changing scenarios [13]. Wang and Casale used Bayesian inference and Gibbs sampling to model service demand from queue length data [14].

Software component technology is a mainstream technology of software development, and obtaining the performance of component systems efficiently and accurately is a challenging issue. This paper puts forward a performance quantification method for component systems. Component-Based Software Development (CBSD) has been an important area of research for almost three decades [15,16]. CBSD avoids duplication of effort, reduces development cost and improves productivity [17,18]. Reusing software components can reduce time and cost [19]. By reusing software components, CBSD also avoids the repeated introduction of errors and improves the trustworthiness of software [20]. The software component has several definitions. According to the CMU/SEI definition, a component is an opaque implementation of functionality, subject to third-party composition and conformant with a component model [21]. Szyperski defines a software component as a unit of composition with contractually specified interfaces and explicit context dependencies [22]. The authors of [23,24,25] adopted Szyperski's definition, and this paper adopts it as well.

One of the most critical processes in CBSD is the selection of a set of software components [26,27]. Obtaining the performance of component systems efficiently and accurately is a key challenge for component system development. In this paper, a performance quantification method for component systems is proposed. First, the performance specification is formally defined. Second, a refinement relation is introduced, and the performance quantification method of the component system is presented. Finally, a case study is given to illustrate the effectiveness of the method.

The remainder of this paper is organized as follows. In Section 2, we introduce performance and its metric elements. Section 3 proposes the refinement relationship. Section 4 presents the quantification method of the metric elements and the computation model of performance. In Section 5, the performance computing method of the component system is presented. Section 6 gives a case study. Section 7 concludes the paper.

2. Performance and Metric Elements

Performance is a non-functional feature of software [20]. It concerns the timeliness with which software completes its functions: the software system should provide proper performance relative to the amount of resources used under specified conditions. The object of performance measurement is called a metric element. The metric elements of performance include the CPU utilization ratio, memory utilization ratio, disk I/O, number of concurrent users, throughput and average response time.

The CPU utilization ratio is the percentage of CPU time consumed by user processes and system processes. Over extended periods of operation there is generally an acceptable upper limit, and many systems set this limit at 85%.

The memory utilization ratio is calculated as (1 − free memory/total memory size) × 100%. Because the system requires a minimum amount of free memory, the memory utilization ratio also has an acceptable upper limit. Disk I/O measures how heavily the disk is used for reading and writing; it is expressed as the percentage of unit time spent on read and write operations.
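The resource metric elements above reduce to simple ratios. The following Python sketch computes them. The function names, the byte-based inputs and the busy-time input for disk I/O are hypothetical choices made for illustration; only the memory formula and the 85% CPU limit come from the text.

```python
CPU_UPPER_LIMIT = 85.0  # percent; the upper limit many systems use (from the text)


def memory_utilization(free_bytes: float, total_bytes: float) -> float:
    """Memory utilization ratio in percent: (1 - free/total) * 100."""
    if total_bytes <= 0:
        raise ValueError("total memory size must be positive")
    return (1.0 - free_bytes / total_bytes) * 100.0


def disk_io_ratio(busy_seconds: float, interval_seconds: float) -> float:
    """Hypothetical helper: percentage of the interval spent reading and writing."""
    if interval_seconds <= 0:
        raise ValueError("interval must be positive")
    return busy_seconds / interval_seconds * 100.0


def cpu_within_limit(cpu_percent: float, limit: float = CPU_UPPER_LIMIT) -> bool:
    """True if the measured CPU utilization does not exceed the upper limit."""
    return cpu_percent <= limit


print(memory_utilization(free_bytes=2 * 2**30, total_bytes=8 * 2**30))  # 75.0
print(cpu_within_limit(80.0))  # True
```

How the raw inputs (free memory, disk busy time) are sampled is platform specific and is left open here.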

The maximum number of users is the number of users who submit requests to the system simultaneously.

Throughput is the number of user transactions that the system completes per unit time. It reflects the system's processing capability.

The average response time is the average time taken to process a transaction, where the response time of a transaction is the time from submitting the request to completing it.
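Throughput and average response time can both be derived from a log of transactions. The sketch below assumes, hypothetically, that each transaction is recorded as a pair of submission and completion timestamps in seconds and that every logged transaction completes inside the observation window.

```python
from typing import List, Tuple

# Hypothetical record format: (submit_time, completion_time), in seconds.
Transaction = Tuple[float, float]


def throughput(transactions: List[Transaction], window_seconds: float) -> float:
    """Completed transactions per second over the observation window."""
    if window_seconds <= 0:
        raise ValueError("window must be positive")
    return len(transactions) / window_seconds


def average_response_time(transactions: List[Transaction]) -> float:
    """Mean of (completion - submission) over all transactions."""
    if not transactions:
        raise ValueError("no transactions recorded")
    total = sum(done - submitted for submitted, done in transactions)
    return total / len(transactions)


log = [(0.0, 1.0), (0.5, 2.5), (1.0, 4.0)]  # response times 1 s, 2 s, 3 s
print(throughput(log, window_seconds=4.0))  # 0.75
print(average_response_time(log))           # 2.0
```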

A component specification is the window through which a component conveys information [28]. It not only provides an abstract definition of the internal structure of the component but also provides all the information a component user needs to understand and use the component. Performance comprises several metric elements, and the trustworthiness of performance is calculated directly from them.

3. The Refinement Relationship Based on the Specification

Performance is composed of metric elements, and the trustworthiness of performance is calculated directly from them. The specification of a performance metric element consists of four parts: name, checkpoint, flag and value.

Definition 1. (The specification of the metric element) A metric element consists of four parts: the name of the metric element, the checkpoint of the metric element, the flag of the metric element and the value of the metric element. It is denoted as a quadruple $m = (name, cp, flag, v)$.

The flag of a metric element takes the value 1 or −1 and represents the relation between the metric element and its value. When flag = 1, the larger the value of the metric element, the better; when flag = −1, the smaller the value of the metric element, the better. The flags of the metric elements are shown in Table 1.

Definition 2. (The specification of the Performance) A performance specification is a set consisting of n metric elements. It is denoted as a set $P = \{m_1, m_2, \ldots, m_n\}$, where $P$ is the performance specification and $m_i$ ($1 \le i \le n$) is the specification of the $i$th metric element.

For example, consider a performance consisting of throughput and response time. Its description is that the throughput of the payment function is no less than 200 users per second when 100 users use the payment function, and the response time of the payment function is no more than 5 s when 200 users use the payment function. We use $m_1$ = (“To”, “100 users”, 1, 20) to represent the throughput and $m_2$ = (“Rt”, “200 users”, −1, 5) to represent the response time. Then, the specification of the performance is $P$ = {(“To”, “100 users”, 1, 20), (“Rt”, “200 users”, −1, 5)}.
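Definitions 1 and 2 map directly onto a small data type. The following sketch encodes a metric element as an immutable quadruple and a performance specification as a set of them; the class name `MetricElement` and the flag validation are illustrative choices, not part of the original definitions.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class MetricElement:
    """Quadruple (name, checkpoint, flag, value) from Definition 1."""
    name: str        # e.g. "To" (throughput) or "Rt" (response time)
    checkpoint: str  # e.g. "100 users"
    flag: int        # 1: larger is better; -1: smaller is better
    value: float

    def __post_init__(self) -> None:
        if self.flag not in (1, -1):
            raise ValueError("flag must be 1 or -1")


# A performance specification (Definition 2) is a set of metric elements;
# frozen dataclasses are hashable, so they can be stored in a frozenset.
PerformanceSpec = FrozenSet[MetricElement]

m1 = MetricElement("To", "100 users", 1, 20)
m2 = MetricElement("Rt", "200 users", -1, 5)
P: PerformanceSpec = frozenset({m1, m2})
print(len(P))  # 2
```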

Having specified the metric elements and the performance, we now introduce a refinement relation based on these specifications.

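Definition 3. (Refinement relation) For two given metric elements $m_1 = (name_1, cp_1, flag_1, v_1)$ and $m_2 = (name_2, cp_2, flag_2, v_2)$, we call $m_1$ the refinement of $m_2$, expressed as $m_2 \sqsubseteq m_1$, if $name_1 = name_2$, $cp_1 = cp_2$, $flag_1 = flag_2 = flag$ and $flag \cdot v_1 \ge flag \cdot v_2$; that is, $v_1 \ge v_2$ when $flag = 1$ and $v_1 \le v_2$ when $flag = -1$.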

For example, if a metric element $m_1$ is (“To”, “100 users”, 1, 20) and a metric element $m_2$ is (“To”, “100 users”, 1, 18), then $m_2 \sqsubseteq m_1$. If a metric element $m_1$ is (“Rt”, “200 users”, −1, 3) and a metric element $m_2$ is (“Rt”, “200 users”, −1, 5), then $m_2 \sqsubseteq m_1$. The refinement relationship has the following propositions:

Proposition 1. (Reflexivity) The refinement relation of metric elements is reflexive: $m \sqsubseteq m$ for every metric element $m$.

That is, every metric element is a refinement of itself.

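Proposition 2. (Antisymmetry) If $m_1 \sqsubseteq m_2$ and $m_2 \sqsubseteq m_1$, then $m_1 = m_2$.

Proof. Let $m_1 = (name, cp, flag, v_1)$ and $m_2 = (name, cp, flag, v_2)$; both refinements require the two metric elements to have the same name, checkpoint and flag. Two cases of the flag are discussed:

Case 1: When $flag = 1$.

Because $m_1 \sqsubseteq m_2$ and $m_2 \sqsubseteq m_1$, we have $v_2 \ge v_1$ and $v_1 \ge v_2$.

As a result, $v_1 = v_2$.

Case 2: When $flag = -1$.

With the same proof as Case 1 (now $v_2 \le v_1$ and $v_1 \le v_2$), $v_1 = v_2$.

Therefore, $v_1 = v_2$ in both cases.

As a result, $m_1 = m_2$.

□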

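Proposition 3. (Transitivity) If $m_1 \sqsubseteq m_2$ and $m_2 \sqsubseteq m_3$, then $m_1 \sqsubseteq m_3$.

Proof. Because $m_1 \sqsubseteq m_2$ and $m_2 \sqsubseteq m_3$, the three metric elements have the same name, checkpoint and flag. Let their values be $v_1$, $v_2$ and $v_3$. Two cases of the flag are discussed:

Case 1: When $flag = 1$.

$m_1 \sqsubseteq m_2$ gives $v_2 \ge v_1$, and $m_2 \sqsubseteq m_3$ gives $v_3 \ge v_2$.

Thus, $v_3 \ge v_1$.

Case 2: When $flag = -1$.

This is similar to the proof of Case 1: $v_2 \le v_1$ and $v_3 \le v_2$.

Therefore, $v_3 \le v_1$.

Thus, $m_1 \sqsubseteq m_3$.

□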

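For two given performance specifications $P_1 = \{m_{11}, m_{12}, \ldots, m_{1n}\}$ and $P_2 = \{m_{21}, m_{22}, \ldots, m_{2n}\}$, we call $P_1$ the refinement of $P_2$, expressed as $P_2 \sqsubseteq P_1$, if $m_{2i} \sqsubseteq m_{1i}$ for every $i$ ($1 \le i \le n$).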

From these propositions, the refinement relation of metric elements is a partial order.
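The refinement check is mechanical, so a short sketch may help. It assumes the condition that agrees with both examples given after Definition 3: equal name, checkpoint and flag, and $flag \cdot v_1 \ge flag \cdot v_2$. It also assumes that the metric elements of two performance specifications are aligned by index; the function names `refines` and `spec_refines` are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class MetricElement:
    name: str
    checkpoint: str
    flag: int      # 1: larger is better; -1: smaller is better
    value: float


def refines(m1: MetricElement, m2: MetricElement) -> bool:
    """True if m1 is a refinement of m2: same name, checkpoint and flag,
    and m1's value is at least as good as m2's (flag * v1 >= flag * v2)."""
    if (m1.name, m1.checkpoint, m1.flag) != (m2.name, m2.checkpoint, m2.flag):
        return False
    return m1.flag * m1.value >= m2.flag * m2.value


def spec_refines(p1: Sequence[MetricElement], p2: Sequence[MetricElement]) -> bool:
    """True if every i-th element of p1 refines the i-th element of p2
    (elements are assumed to be aligned by position)."""
    return len(p1) == len(p2) and all(refines(a, b) for a, b in zip(p1, p2))


print(refines(MetricElement("To", "100 users", 1, 20),
              MetricElement("To", "100 users", 1, 18)))   # True
print(refines(MetricElement("Rt", "200 users", -1, 3),
              MetricElement("Rt", "200 users", -1, 5)))   # True
```

Multiplying by the flag folds the two cases of Definition 3 into a single comparison, which is also why reflexivity, antisymmetry and transitivity carry over from the ordering of real numbers.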

4. Quantification of Performance and the Metric Elements

The quantitative measurement method introduced below helps to choose components with better performance. After the performance's metric elements are quantified, the quantitative measurement of performance is computed.

Definition 4. (Quantitative measurement of the metric element) For the given specifications of two metric elements and , we say that is refined in a quantitative way, denoted by , which is calculated as follows. When ,

When ,

The quantitative method has the following properties.

Proposition 4. (Boundedness) Boundedness means that the quantitative measurement of a metric element lies within a fixed range.

Proof. Since the quantitative measurement is defined differently depending on the flag, the two cases are discussed separately.

Case 1: ($flag = 1$)

When , it is obtained that .

When , it is true that .

As a result, .

Case 2: ($flag = -1$)

When , .

When , it is true that .

When , .

As a result, .

In other words, the quantitative measurement is a bounded function.

□

This proposition shows that the degree to which a component's metric element satisfies the target metric element lies within a fixed range.

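Proposition 5. (Order preservation) For a given target metric element, if $m_2 \sqsubseteq m_1$, then the quantitative measurement of $m_1$ is no smaller than that of $m_2$.

Proof. Let $v_1$ and $v_2$ be the values of $m_1$ and $m_2$. Two cases of the flag are discussed.

Case 1: ($flag = 1$)

Since $flag = 1$, the quantitative measurement is an increasing function of the metric element's value.

Because $m_2 \sqsubseteq m_1$ implies $v_1 \ge v_2$, the quantitative measurement of $m_1$ is no smaller than that of $m_2$.

Case 2: ($flag = -1$)

Since $flag = -1$, the quantitative measurement is a decreasing function of the metric element's value.

Because $m_2 \sqsubseteq m_1$ implies $v_1 \le v_2$, the quantitative measurement of $m_1$ is again no smaller than that of $m_2$.

□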

Proposition 6. (Approximation) Approximation means that, as a metric element approaches the target metric element in the refinement sense, its quantitative measurement increases.

Proof. The proof considers the two cases of the flag.

Case 1: ($flag = 1$)

In this case, it is true that .

Suppose , i.e., x denotes the distance from to ; then , and we have

Case 2: ($flag = -1$)

This case implies that Suppose , i.e., x denotes the distance from to . Then, .

Since ,

Both cases show that the quantitative measurement is a decreasing function of x. Therefore, as the metric element approaches the target metric element in the refinement sense, its degree of satisfaction increases.

□

This proposition shows that the quantification is consistent with the refinement relation and that the quantitative model is order preserving.
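To make Propositions 4 to 6 concrete, the following sketch checks them numerically for one possible measure. The ratio formula used here, min(1, v/target) when flag = 1 and min(1, target/v) when flag = −1, is a hypothetical stand-in chosen only because it is bounded in (0, 1], equals 1 when the target is met, and is order preserving. It is not the measure defined in Definition 4, and it assumes strictly positive values.

```python
def quantify(value: float, target: float, flag: int) -> float:
    """HYPOTHETICAL ratio-based degree to which `value` satisfies `target`.
    This is an illustrative stand-in, not the measure of Definition 4.
    Values are assumed to be strictly positive."""
    if value <= 0 or target <= 0:
        raise ValueError("values are assumed to be positive")
    if flag == 1:        # larger is better
        return min(1.0, value / target)
    if flag == -1:       # smaller is better
        return min(1.0, target / value)
    raise ValueError("flag must be 1 or -1")


# Boundedness: every degree lies in (0, 1].
assert all(0 < quantify(v, 20, 1) <= 1 for v in (1, 10, 20, 40))
assert all(0 < quantify(v, 6, -1) <= 1 for v in (1, 6, 12, 60))

# Order preservation: a refining (better) value never gets a smaller degree.
assert quantify(18, 20, 1) <= quantify(19, 20, 1)
assert quantify(7, 6, -1) <= quantify(6.5, 6, -1)

# Approximation: shrinking the distance x to the target raises the degree.
print([quantify(20 - x, 20, 1) for x in (8, 4, 2)])  # [0.6, 0.8, 0.9]
```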

Definition 5. (Quantitative measurement of component performance) Assuming that the component’s performance specification and the target performance specification have the same metric elements, we say that the component’s performance specification satisfies the target performance specification in a quantitative way, and it is represented as , where and is the quantification of the ith metric element.

For example, let the component's performance specification be {(“Rt”, “300 users”, −1, 5)} and the target performance specification be {(“Rt”, “300 users”, −1, 6)}. Since the component's response time of 5 s does not exceed the target of 6 s, the component's performance specification refines the target performance specification, and its quantified value is 1.

For another example, let the component's performance specification be {(“Rt”, “200 users”, −1, 5), (“To”, “300 users”, 1, 15)} and the target performance specification be {(“Rt”, “200 users”, −1, 6), (“To”, “300 users”, 1, 20)}. The response time meets its target, but the throughput (15) falls short of its target (20), so the component's performance specification does not refine the target performance specification, and its degree of satisfaction is computed from the quantified values of the two metric elements.

The two target performance specifications are {(“Rt”, “1000 users”, 1, 6)} and {(“Rt”, “1000 users”, 1, 6), (“To”, “1000 users”, 1, 20)}. For each target performance specification, the performance specifications of several components are quantified against it, and the results are shown in Table 2. These examples illustrate the validity of the quantitative method.
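Definition 5 combines the per-element quantities into one value for the whole performance specification, and the illustrative example notes that the metric elements may carry equal or requirement-specific weights. The sketch below assumes, hypothetically, a weighted arithmetic mean with equal default weights, elements matched by position, and the same ratio-based stand-in for the per-element measure; neither choice should be read as the formula of Definition 4 or Definition 5.

```python
from typing import List, Optional, Tuple

# (name, checkpoint, flag, value), following the quadruple of Definition 1
Spec = List[Tuple[str, str, int, float]]


def quantify(value: float, target: float, flag: int) -> float:
    """HYPOTHETICAL ratio-based stand-in for the per-element measure (values > 0)."""
    if flag == 1:
        return min(1.0, value / target)
    if flag == -1:
        return min(1.0, target / value)
    raise ValueError("flag must be 1 or -1")


def performance_degree(component: Spec, target: Spec,
                       weights: Optional[List[float]] = None) -> float:
    """HYPOTHETICAL weighted arithmetic mean of per-element degrees.
    Elements are matched by position; equal weights are used by default."""
    if len(component) != len(target):
        raise ValueError("specifications must have the same metric elements")
    n = len(target)
    if weights is None:
        weights = [1.0 / n] * n
    total = 0.0
    for (c_name, c_cp, c_flag, c_val), (t_name, t_cp, t_flag, t_val), w in zip(
            component, target, weights):
        if (c_name, c_cp, c_flag) != (t_name, t_cp, t_flag):
            raise ValueError(f"mismatched metric element: {c_name} vs {t_name}")
        total += w * quantify(c_val, t_val, t_flag)
    return total


comp = [("Rt", "200 users", -1, 5), ("To", "300 users", 1, 15)]
targ = [("Rt", "200 users", -1, 6), ("To", "300 users", 1, 20)]
print(performance_degree(comp, targ))  # 0.875
```

Under these assumptions the first example above (a single response-time element meeting its target) yields 1.0, matching the value stated in the text; the second yields 0.875 only because of the hypothetical stand-in measure.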

6. An Illustrative Example

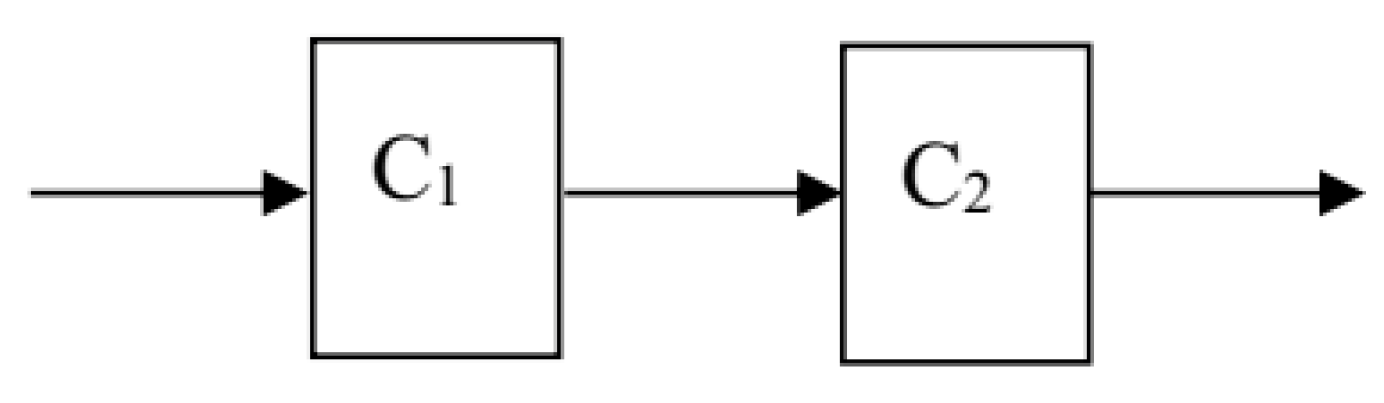

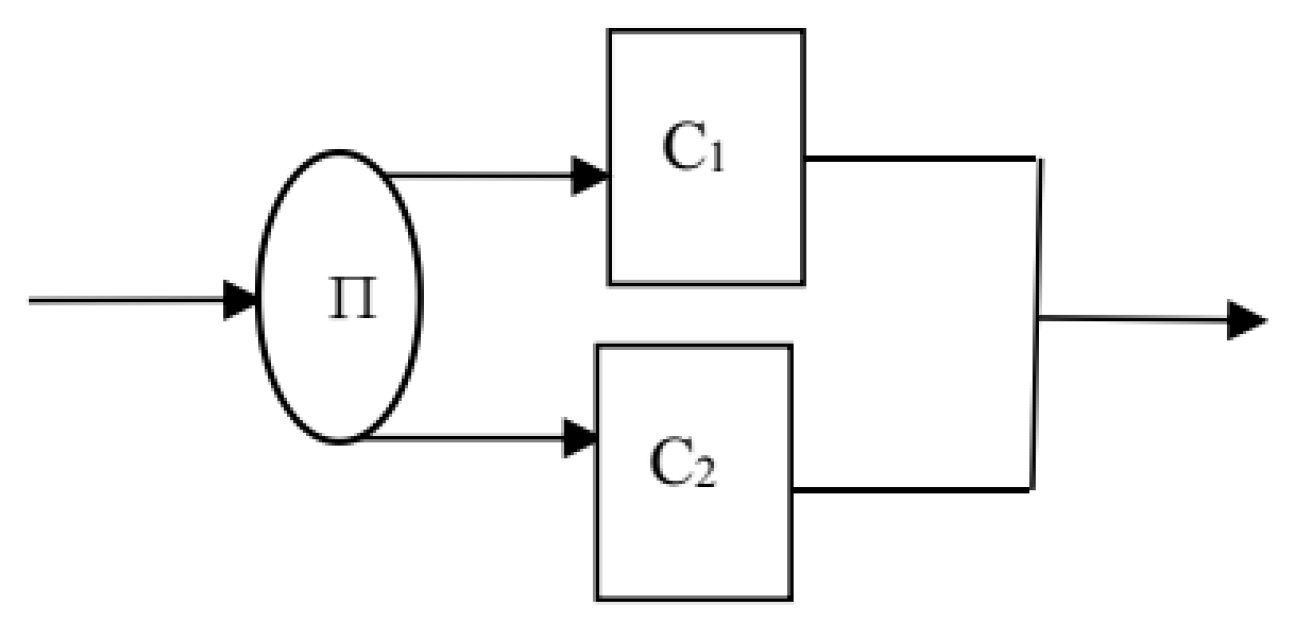

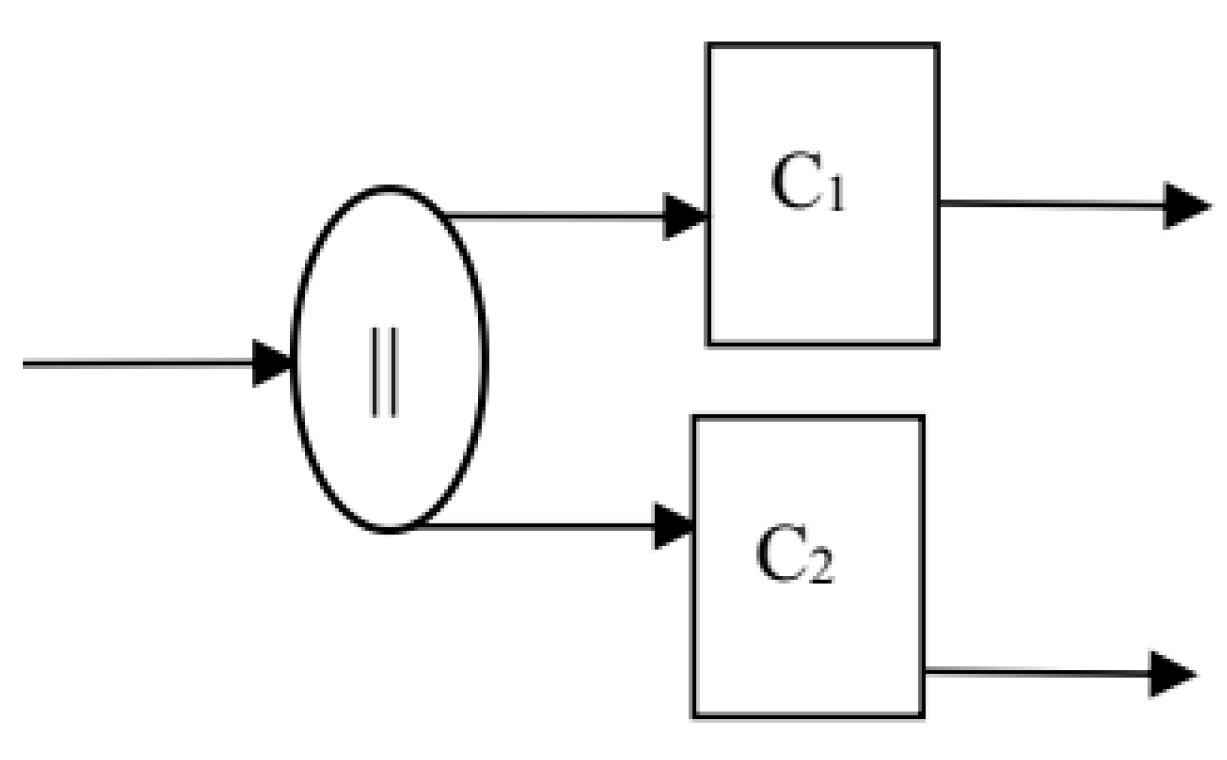

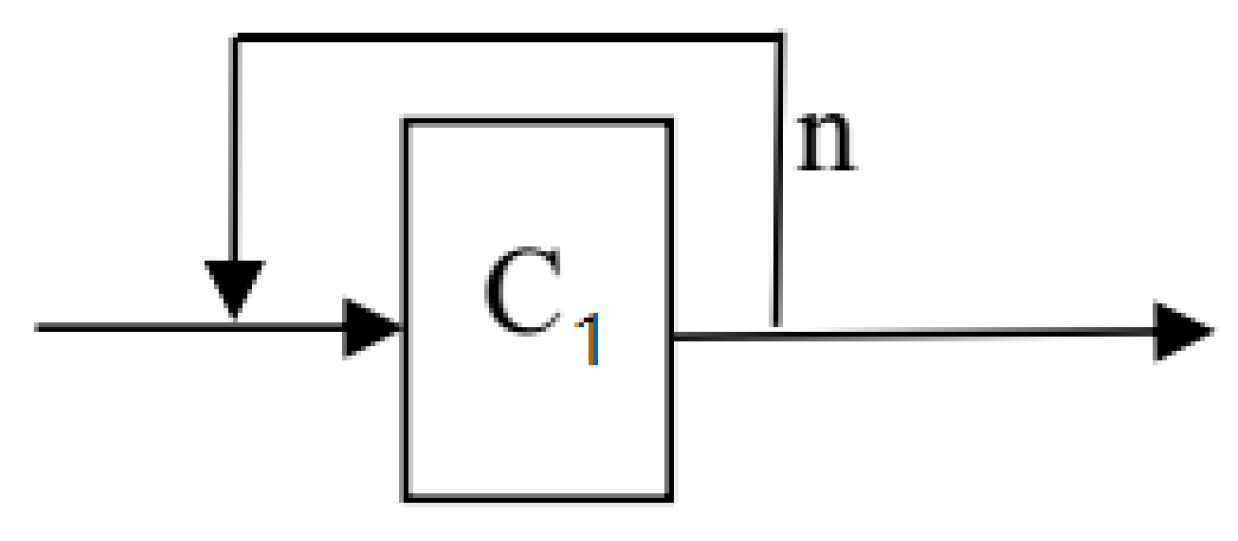

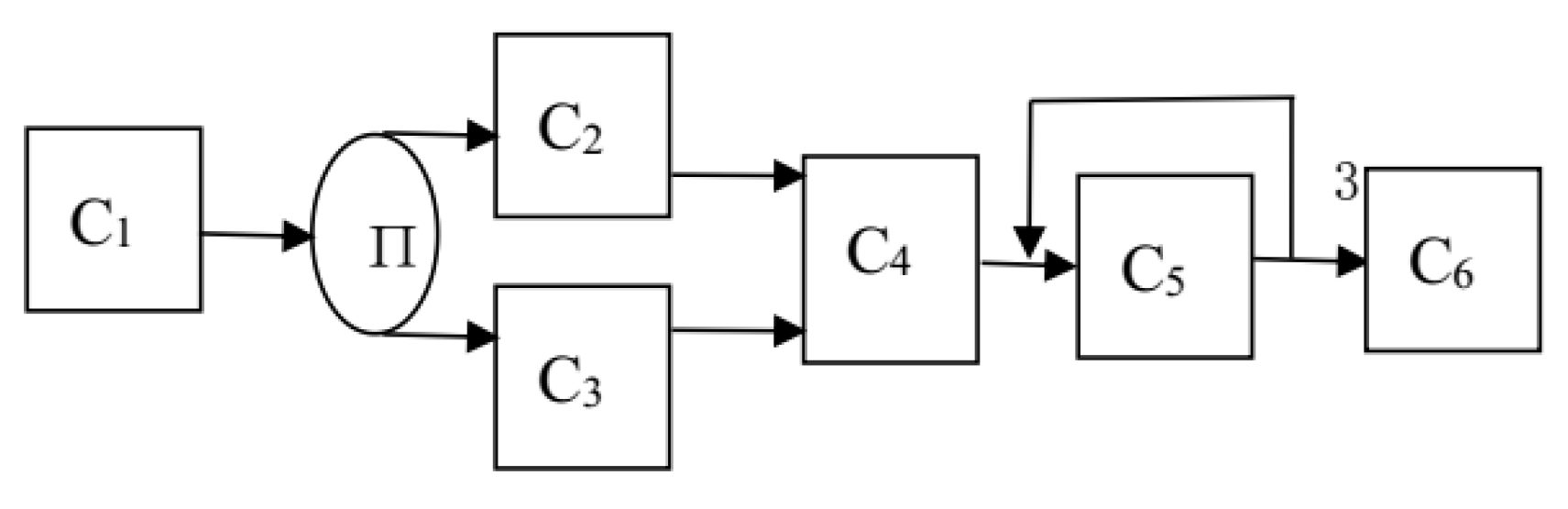

The following example shows the availability and effectiveness of the measurement model. First, we give the logical structure of a component system, as shown in Figure 6.

The CPU utilization, memory utilization, disk I/O, maximum number of users, throughput and average response time are shown in Table 8.

The metric elements are calculated according to the formulas given above, as follows.

Table 9 gives the computational results of the performance metrics for the component systems.

Table 10 shows the target values of the metric elements of the component system.

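According to the computation, the quantified values of CPU utilization, memory utilization, disk I/O, throughput and average response time are all 1, while the quantified value of the maximum number of users is lower. The trustworthiness of the component system's performance is then calculated from these values.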

According to the computation, the “maximum number of users” is the weak metric element of the component system. The component with the smallest “maximum number of users” should therefore be improved to obtain better system performance.
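Locating the weak metric element, and then the component responsible for it, amounts to two minimum searches. The following sketch uses made-up numbers: the degrees and the per-component user counts are hypothetical placeholders, not the values of Tables 8 to 10, and the component names "A", "B" and "C" are invented.

```python
from typing import Dict, Tuple


def weakest(scores: Dict[str, float]) -> Tuple[str, float]:
    """Return the (key, score) pair with the smallest score. Only meaningful
    for quantified degrees, or for raw values whose flag is 1."""
    key = min(scores, key=lambda k: scores[k])
    return key, scores[key]


# HYPOTHETICAL quantified degrees of the system's metric elements.
system_degrees = {"cpu": 1.0, "memory": 1.0, "disk_io": 1.0,
                  "max_users": 0.8, "throughput": 1.0, "response_time": 1.0}
print(weakest(system_degrees))  # ('max_users', 0.8)

# HYPOTHETICAL per-component maximum user numbers (flag = 1, larger is better).
max_users_by_component = {"A": 500, "B": 300, "C": 800}
print(weakest(max_users_by_component))  # ('B', 300)
```

For a metric element with flag = −1, the raw values would need to be negated (or quantified first) before taking the minimum.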

The illustrative example shows the computational process of performance quantification. The weights of the metric elements are equal in this calculation; however, the weights can differ and should be determined according to the requirements of the component system.