Abstract

We consider the extreme value problem of the minimum-maximum models for the independent and identically distributed random sequence and stationary random sequence, respectively. By invoking some probability formulas and Taylor’s expansions of the distribution functions, the limiting distributions for these two kinds of sequences are obtained. Moreover, convergence analysis is carried out for those extreme value distributions. Several numerical experiments are conducted to validate our theoretical results.

1. Introduction

Consider a collection of random variables for following a common distribution function F. We define the minimum-maximum model as:

where with A being a fixed positive constant.

The minimum-maximum model (1) provides a novel framework for a variety of applications. For instance, consider the risk management strategy of a risk-averse investor. Let i index the assets in the asset pool and j the time intervals. Assume that is the risk measurement of the i-th asset at time interval j. Suppose the risk-averse investor always chooses to buy an asset when its risk reaches its lowest level; then represents the largest risk the investor would bear, and the limiting distribution can be used to control the risk management process.
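The investor example can be made concrete with a small sketch. The order of the two operations below (minimum over time for each asset, then maximum over assets) follows the verbal description in the preceding paragraph and is an assumption, since the displayed definition of model (1) is not reproduced here. The function name `max_of_min_risk` and the risk values are hypothetical.

```python
import numpy as np

def max_of_min_risk(risk):
    """Largest of the per-asset minimum risks.

    risk[i, j] is a hypothetical risk measurement of asset i in time interval j.
    """
    risk = np.asarray(risk, dtype=float)
    per_asset_floor = risk.min(axis=1)  # lowest risk each asset reaches over time
    return per_asset_floor.max()        # largest of those entry-point risks

# Illustrative values only: 3 assets observed over 3 time intervals.
risk = np.array([
    [0.30, 0.10, 0.25],
    [0.40, 0.35, 0.50],
    [0.20, 0.15, 0.05],
])
print(max_of_min_risk(risk))
```

Here the per-asset minima are 0.10, 0.35 and 0.05, so the investor's largest entry-point risk comes from the second asset.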

Note that if we fix , then denotes the partial maximum of the random sequence . If we further assume that are independent and identically distributed (i.i.d.), then, according to classical extreme value theory [1,2,3,4,5], there exists a non-degenerate distribution with some normalized constants and such that

for every x. Here, depending on the domain of attraction of the distribution F, belongs to one of the three fundamental classes:

with parameter .
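For reference, the classical limit statement and the three types are commonly written as follows. The symbols $M_n$, $a_n$, $b_n$, $G$, $\Lambda$, $\Phi_\alpha$ and $\Psi_\alpha$ follow common textbook usage (for example [4]) and are assumed here; they may differ from the authors' notation.

```latex
\[
  \lim_{n\to\infty} P\bigl(a_n (M_n - b_n) \le x\bigr) = G(x), \qquad a_n > 0,
\]
\[
\begin{aligned}
  \text{Type I (Gumbel):}\quad
    & \Lambda(x) = \exp\bigl(-e^{-x}\bigr), \quad -\infty < x < \infty, \\
  \text{Type II (Fr\'echet):}\quad
    & \Phi_\alpha(x) =
      \begin{cases} 0, & x \le 0, \\ \exp\bigl(-x^{-\alpha}\bigr), & x > 0, \end{cases} \\
  \text{Type III (Weibull):}\quad
    & \Psi_\alpha(x) =
      \begin{cases} \exp\bigl(-(-x)^{\alpha}\bigr), & x \le 0, \\ 1, & x > 0, \end{cases}
\end{aligned}
\]
```

In Types II and III the parameter satisfies $\alpha > 0$.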

Methods for determining the normalized constants and are provided in [4,5]. Similar conclusions have been drawn for random sequences under strong or weak mixing conditions in [6,7,8]. Moreover, the convergence rate of with respect to each of the three types can be found in [9,10,11,12,13,14,15]. In particular, the authors of [10,12,13,14] obtained uniform convergence rates of extremes. Although extreme value theory has been studied extensively for a large variety of models, the existing literature gives little insight into the limiting distribution for the minimum-maximum model. To fill this gap, we present theoretical results on the limiting distributions for the minimum-maximum model (1), which may also offer some insight for applications.
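As a concrete instance of the classical convergence-rate results cited above, the following sketch evaluates the exact distribution of the centered maximum of n i.i.d. unit exponential variables (the setting of [12]) and compares it with the standard Gumbel limit on a grid. It concerns the classical partial maximum only, not model (1); the grid and the function name are illustrative assumptions.

```python
import numpy as np

def sup_error_exponential_max(n, grid):
    """Max over grid of |P(M_n - log n <= x) - exp(-exp(-x))| for Exp(1) samples."""
    x = np.asarray(grid, dtype=float)
    shifted = x + np.log(n)
    # Exp(1) distribution function: 1 - exp(-t) for t > 0, and 0 otherwise.
    cdf = 1.0 - np.exp(-np.clip(shifted, 0.0, None))
    exact = cdf ** n                   # P(max of n i.i.d. variables <= x + log n)
    gumbel = np.exp(-np.exp(-x))       # standard Gumbel distribution function
    return np.max(np.abs(exact - gumbel))

grid = np.linspace(-5.0, 15.0, 4001)
for n in (10, 100, 1000):
    err = sup_error_exponential_max(n, grid)
    print(n, err, n * err)
```

If the error is of order 1/n, the last printed column (n times the error) should stay roughly constant as n grows.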

In this paper, we focus on obtaining the limiting distributions for the minimum-maximum model (1), as well as the convergence rate of to its extreme value limit. Motivated by [1,4,5], we first provide methods for selecting the normalized constants , . Then, combining their properties with Taylor’s expansions of the distribution functions, we obtain the limiting distributions for i.i.d. and stationary random sequences, respectively. Our results show that converges to a non-standard Gumbel distribution as long as the distribution functions have continuous and bounded first derivatives. To obtain the convergence rate of extremes, we take advantage of an important inequality and a classical probability result adopted from [12]. A closer examination of the convergence results reveals a uniform convergence rate of for some probability distributions. Finally, numerical examples are provided to verify our theoretical results.

The rest of the paper is organized as follows. In Sections 2 and 3, we derive the extreme value distribution for model (1) with i.i.d. and stationary sequences, respectively. The convergence analysis for the limiting functions is presented in Section 4. Section 5 is devoted to numerical experiments illustrating the asymptotic behavior and uniform convergence for different distribution functions.

2. Extreme Value Distribution for i.i.d. Sequences

Suppose , , ⋯, is a sequence of i.i.d. random variables with common distribution function F. Note that model (1) can be rewritten as

The following theorem shows that the limiting distribution of (4) for an i.i.d. random sequence belongs to the Gumbel class.

Theorem 1.

Suppose are i.i.d. random variables with common distribution function F having a continuous and bounded first derivative . Then there exist some normalized constants , such that

Proof.

Since are i.i.d., we have

According to the expressions of and , it follows that

Combining Taylor’s expansion of with (7) yields

where .

The right-hand side of the first line in (9) is by the assumption . On the right-hand side of the third line in (9), we applied (7) to replace by .

Note that

thus we obtain the following limit:

Remark 1.

It can be easily seen that the probability density function of will converge to , which will be verified by numerical experiments in Section 5.

3. Extreme Value Distribution for Stationary Sequences

In this section, we turn to the case for the strictly stationary sequence for . Similarly, the minimum-maximum model of can be defined as

where with is a fixed positive constant.

In order to get asymptotic results, it is necessary to put some restrictions on the distribution functions. We further assume that the random sequence satisfies the following conditions:

For any fixed i, the joint distribution of the random sequence , denoted as , satisfies the following strong mixing condition:

for and . Here, is a sequence of real numbers and

Motivated by [16], under the conditions stated above, we can prove that can be approximated by . The result is stated in the following theorem.

Theorem 2.

Suppose is a strictly stationary sequence with joint distribution for satisfying the condition (13), then for any , there exists a sequence with and such that the following approximate results hold:

Proof.

Under the condition and by the definition of , it follows that

Note that, for any , we have . Therefore, for any sufficiently small , there exists an integer such that, if , then . Consequently, if , then where is a positive constant. We now proceed by dividing (17) into two parts:

To deal with the first term on the right-hand side of (18), we use Taylor’s expansion of to get

as N goes to infinity, where the parameter .

In the same manner, we bound the second term on the right-hand side of (18) by

With the help of Theorem 2, we can now proceed to obtain the limiting distribution of .

Theorem 3.

Suppose the strictly stationary sequence satisfies the conditions of Theorem 2. Moreover, assume that the joint distribution has continuous first derivative, then there exist normalized constants and as defined in Theorem 2 such that

Proof.

By the definitions of and , we have

According to asymptotic results implied by Theorem 2, it follows easily that

4. Rate of Convergence of the Minimum-Maximum Model

In this section, we will be concerned with the convergence rate of . Here, we present the proofs for the i.i.d. random sequence. It can be proved in much the same way for the strictly stationary sequence. Our convergence analysis needs the following result given by [12] in Proposition 1:

Theorem 4.

Suppose the random sequence satisfies the conditions of Theorem 1, and the normalized constants and are as defined in Theorem 1. Then the following convergence rate is achieved for and ,

where and .

Proof.

Turning to the first term, it is easy to check that

where we have used the following inequality:

Combining Theorem 1 with the monotonicity of in n, the first part on the right-hand side of the “≤” sign in (27) can be controlled by . Meanwhile, note that we assume in our model; the remaining part of the term can then be handled as

Remark 2.

Note that , according to (11), we have . When , it is sufficient to show that , hence for n large enough, we consider that is free of n.

Note that Theorem 4 shows the point-wise convergence of the distribution of (1) to the extreme value distribution . However, a closer examination of this theorem yields a uniform rate of convergence for some probability distributions. With the help of Taylor’s expansion, we are now in a position to show the uniform convergence rate.

Corollary 1.

Suppose the random sequence satisfies the conditions of Theorem 4 with distribution F having continuous and bounded first and second derivatives. Then there exists a constant K, independent of n, such that the following uniform convergence rate holds for ,

Proof.

According to the proof of Theorem 4, what is left is to verify . Combining Taylor’s expansion of in with (7) yields

Therefore,

Note that and are bounded on and , thus we can assert that

where K is a positive constant which does not depend on n and satisfies

Substituting (36) into (4), we obtain

It can be easily proved that the function is bounded by for , which implies the convergence is uniform on . □

5. Numerical Experiments

In this section, numerical experiments are conducted to validate the approximation capability of and the uniform convergence rate provided by Theorem 1 and Corollary 1, respectively.

We begin by generating random sequences following three types of distributions (i.e., the uniform, exponential and Cauchy distributions); Theorem 1 then yields explicit expressions for the corresponding normalized constants and , which are given in Table 1.

Table 1.

Normalized constants and for the corresponding distributions.

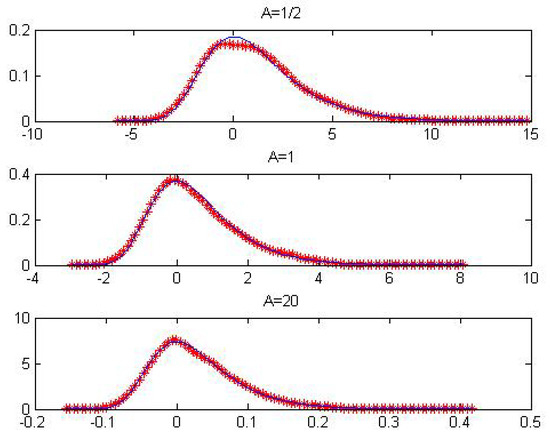

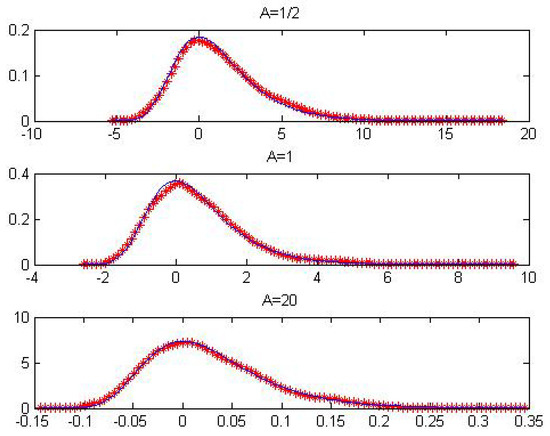

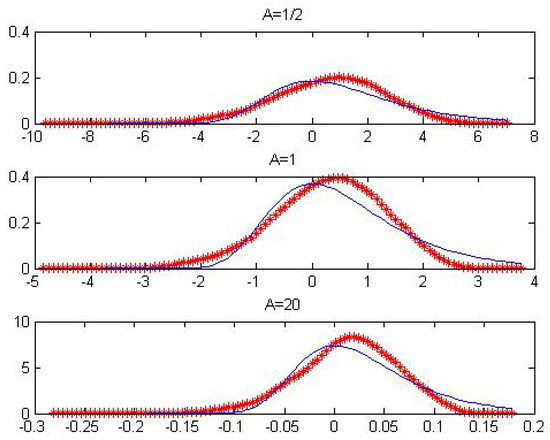

Here, we choose , respectively. The results are presented in Tables 2 and 3 and Figures 1, 2 and 3. Note that the second derivative of the Cauchy distribution function is unbounded on ; consequently, this kind of distribution could not converge to uniformly in this domain. For this reason, we only examine the asymptotic behavior of extremes graphically for this distribution. The results are presented in Figure 3. These results suggest that the uniform convergence rate is of order for different A, as predicted by Corollary 1. Figure 1, Figure 2 and Figure 3 show that the asymptotic density essentially coincides with the extreme value density, as stated in Remark 1.
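A convergence order of the kind reported alongside the uniform errors can be estimated from errors at successive sample sizes. The sketch below assumes the error behaves like C·n^(−p) and recovers p from consecutive pairs. The function name and the synthetic error values are illustrative assumptions, not the data or the procedure behind Tables 2 and 3.

```python
import numpy as np

def empirical_orders(ns, errors):
    """Observed order p between consecutive sample sizes, assuming error ~ C * n**(-p)."""
    ns = np.asarray(ns, dtype=float)
    errors = np.asarray(errors, dtype=float)
    # p = log(e_k / e_{k+1}) / log(n_{k+1} / n_k) for each consecutive pair
    return np.log(errors[:-1] / errors[1:]) / np.log(ns[1:] / ns[:-1])

# Synthetic errors decaying like 1/n, chosen only to exercise the function.
ns = [100, 200, 400, 800]
errors = [0.5 / n for n in ns]
print(empirical_orders(ns, errors))
```

With measured sup-norm errors in place of the synthetic list, values of p that settle near a constant indicate the empirical order.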

Table 2.

Uniform convergence rate and convergence order for uniform distribution.

Table 3.

Uniform convergence rate and convergence order for exponential distribution.

Figure 1.

The sketches above show the figures of (red star) and (blue line) for i.i.d. uniform sequence when , 1 and 20, respectively.

Figure 2.

The sketches above show the figures of (red star) and (blue line) for i.i.d. exponential sequence when , 1 and 20, respectively.

Figure 3.

The sketches above show the figures of (red star) and (blue line) for i.i.d. Cauchy sequence when , 1 and 20, respectively.

6. Conclusions

In this paper, we have derived the limiting distributions and convergence rates for the minimum-maximum model. Under the framework of this model, we considered the i.i.d. random sequence and the stationary random sequence satisfying the strong mixing condition, respectively. Without specifying the form of the distribution functions of the two sequences, our results show that the limiting distribution of converges to a non-standard Gumbel distribution. For distribution functions having continuous and bounded first derivatives, the limiting distribution has the convergence properties shown in Theorem 4. In particular, for distribution functions having continuous and bounded second derivatives, our result reveals a uniform convergence rate of .

Author Contributions

Conceptualization, L.P. and L.G.; methodology, L.P. and L.G.; software, L.P.; validation, L.P.; formal analysis, L.P.; investigation, L.P.; resources, L.P.; data curation, L.P.; writing—original draft preparation, L.P.; writing—review and editing, L.P.; visualization, L.P.; supervision, L.P. and L.G.; project administration, L.P.

Funding

This research is supported by the Major State Basic Research Development Program of China under Grant No. 2017YFA60700602.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fisher, R.A.; Tippett, L.H.C. Limiting forms of the frequency distribution of the largest or smallest member of a sample. Math. Proc. Camb. Philos. Soc. 1928, 24, 180–190.

- Gnedenko, B. Sur la distribution limite du terme maximum d’une serie aleatoire. Ann. Math. 1943, 44, 423–453.

- Haan, L.D. Sample extremes: An elementary introduction. Stat. Neerl. 1976, 30, 161–172.

- Leadbetter, M.R.; Lindgren, G.; Rootzén, H. Extremes and Related Properties of Random Sequences and Processes; Springer: Berlin/Heidelberg, Germany, 1983.

- Resnick, S.I. Extreme Values, Regular Variation and Point Processes; World Book Inc.: Chicago, IL, USA, 2011.

- Watson, G.S. Extreme Values in Samples from m-Dependent Stationary Stochastic Processes. Ann. Math. Stat. 1954, 25, 798–800.

- Loynes, R.M. Extreme Values in Uniformly Mixing Stationary Stochastic Processes. Ann. Math. Stat. 1965, 36, 993–999.

- Deo, C.M. A Note on Strong-Mixing Gaussian Sequences. Ann. Probab. 1973, 1, 186–187.

- Anderson, C. Contributions to the Asymptotic Theory of Extreme Values. Ph.D. Thesis, University of London, London, UK, 1971.

- Cohen, J.P. Convergence rates for the ultimate and pentultimate approximations in extreme-value theory. Adv. Appl. Probab. 1982, 14, 833–854.

- Hall, P. On the rate of convergence of normal extremes. J. Appl. Probab. 1979, 16, 433–439.

- Hall, W.; Wellner, J.A. The rate of convergence in law of the maximum of an exponential sample. Stat. Neerl. 1979, 33, 151–154.

- Peng, Z.; Nadarajah, S.; Lin, F. Convergence rate of extremes for the general error distribution. J. Appl. Probab. 2010, 47, 668–679.

- Smith, R.L. Uniform rates of convergence in extreme-value theory. Adv. Appl. Probab. 1982, 14, 600–622.

- Welsch, R.E. A Weak Convergence Theorem for Order Statistics From Strong-Mixing Processes. Ann. Math. Stat. 1971, 42, 1637–1646.

- Leadbetter, M.R. On extreme values in stationary sequences. Z. Wahrscheinlichkeitstheorie Verw. Geb. 1974, 28, 289–303.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).