Long-Time Asymptotics of a Three-Component Coupled mKdV System

Abstract

1. Introduction

2. An Integrable Three-Component Coupled mKdV Hierarchy

2.1. Zero Curvature Formulation

2.2. Three-Component mKdV Hierarchy

3. An Associated Oscillatory Riemann-Hilbert Problem

3.1. An Equivalent Matrix Spectral Problem

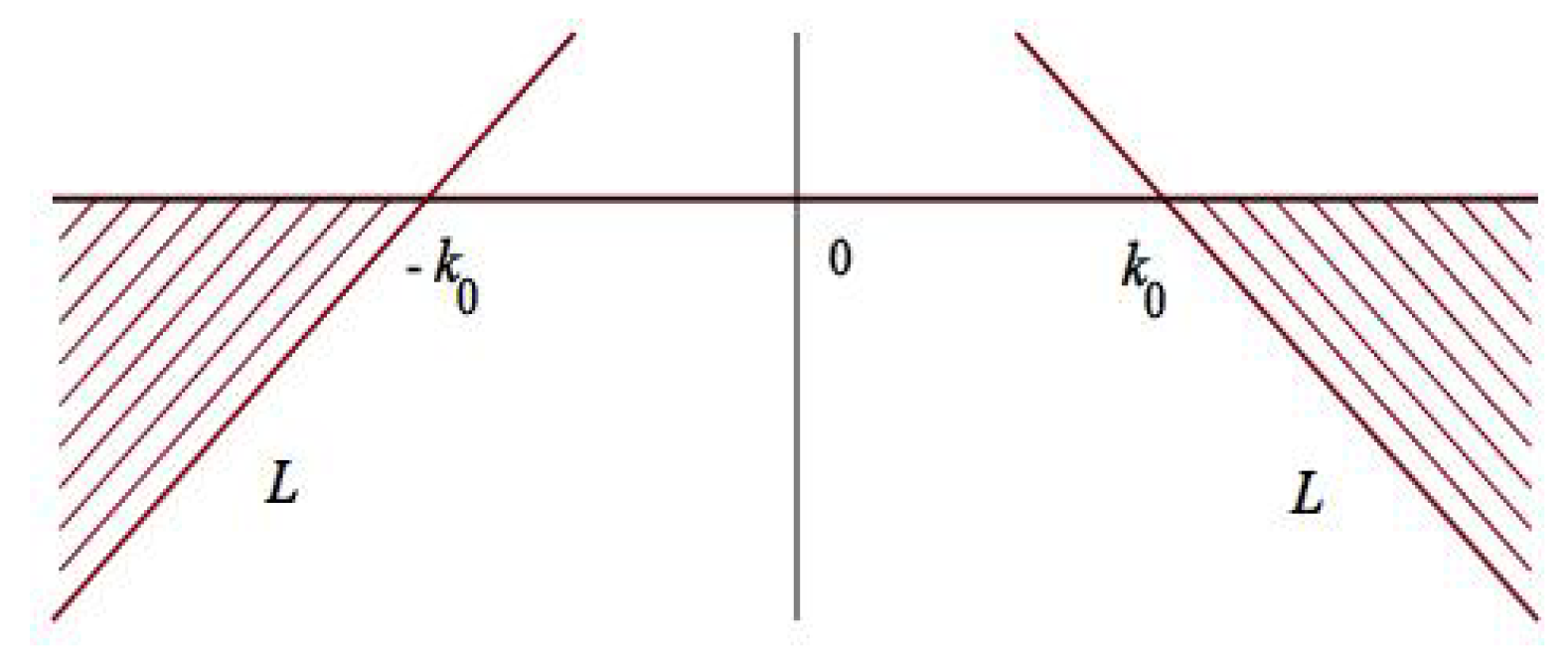

3.2. An Oscillatory Riemann-Hilbert Problem

4. Long-Time Asymptotic Behavior

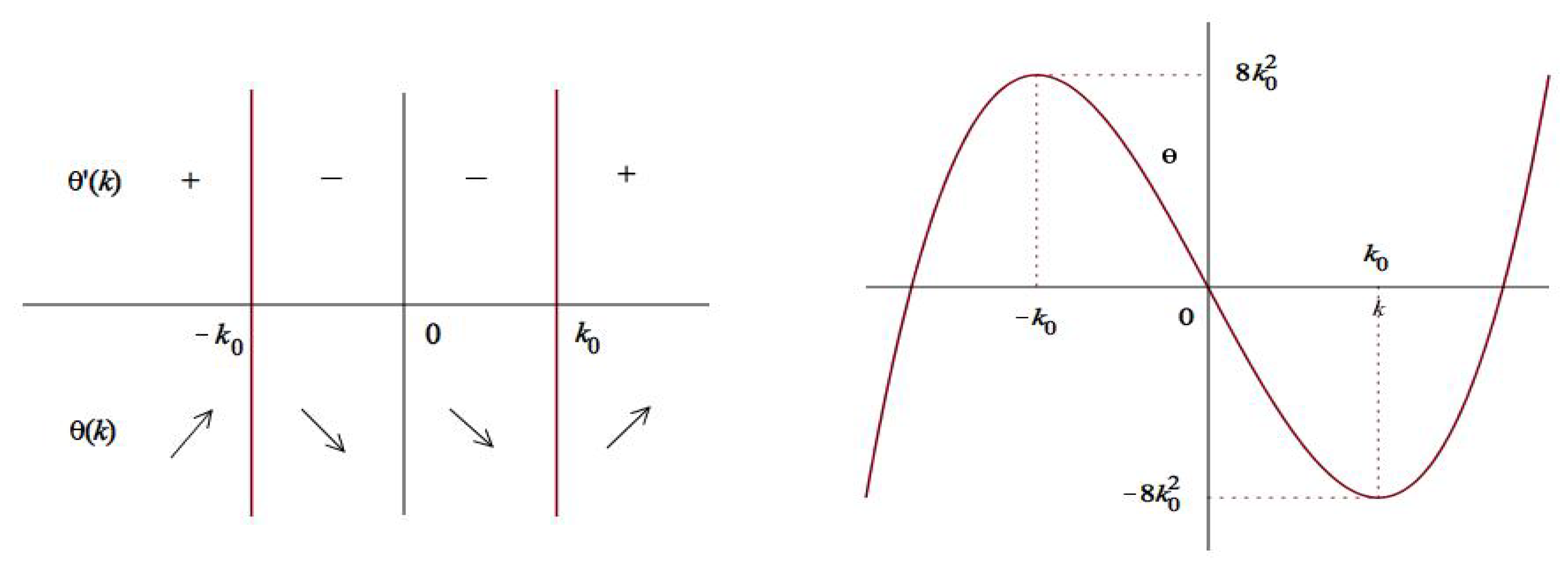

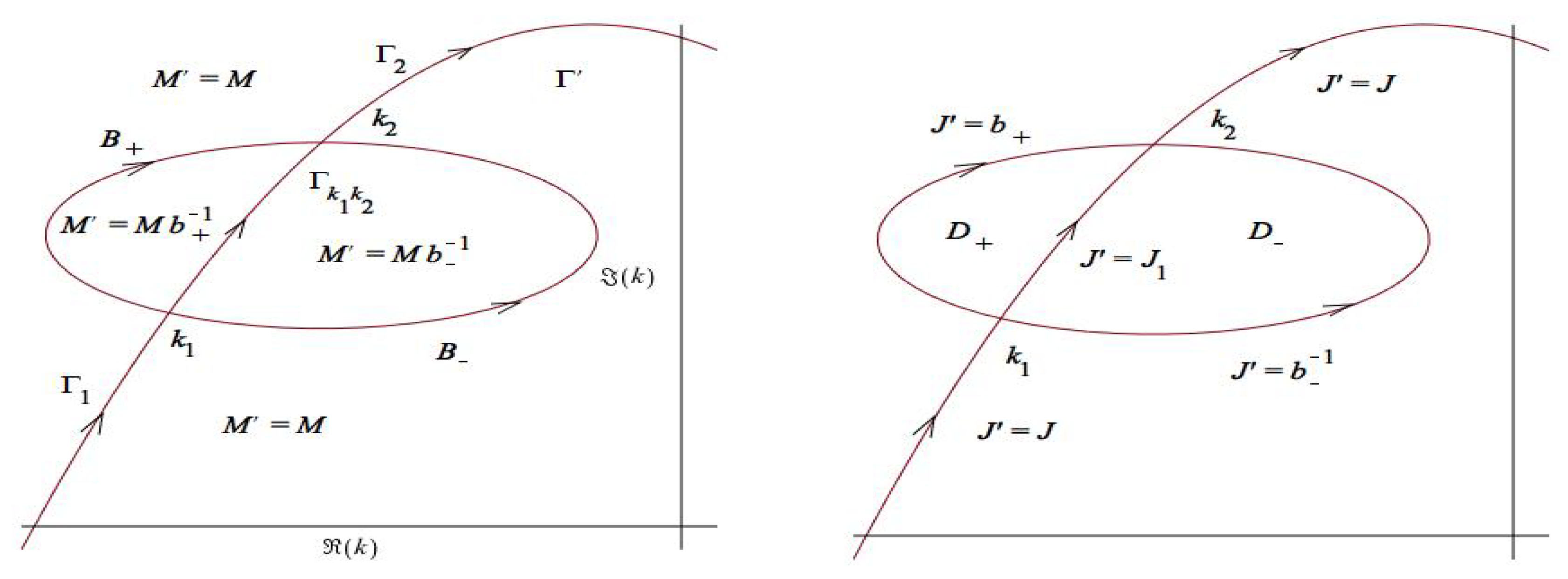

4.1. Transformation of the RH Problem

4.2. Decomposition of the Spectral Induced Function

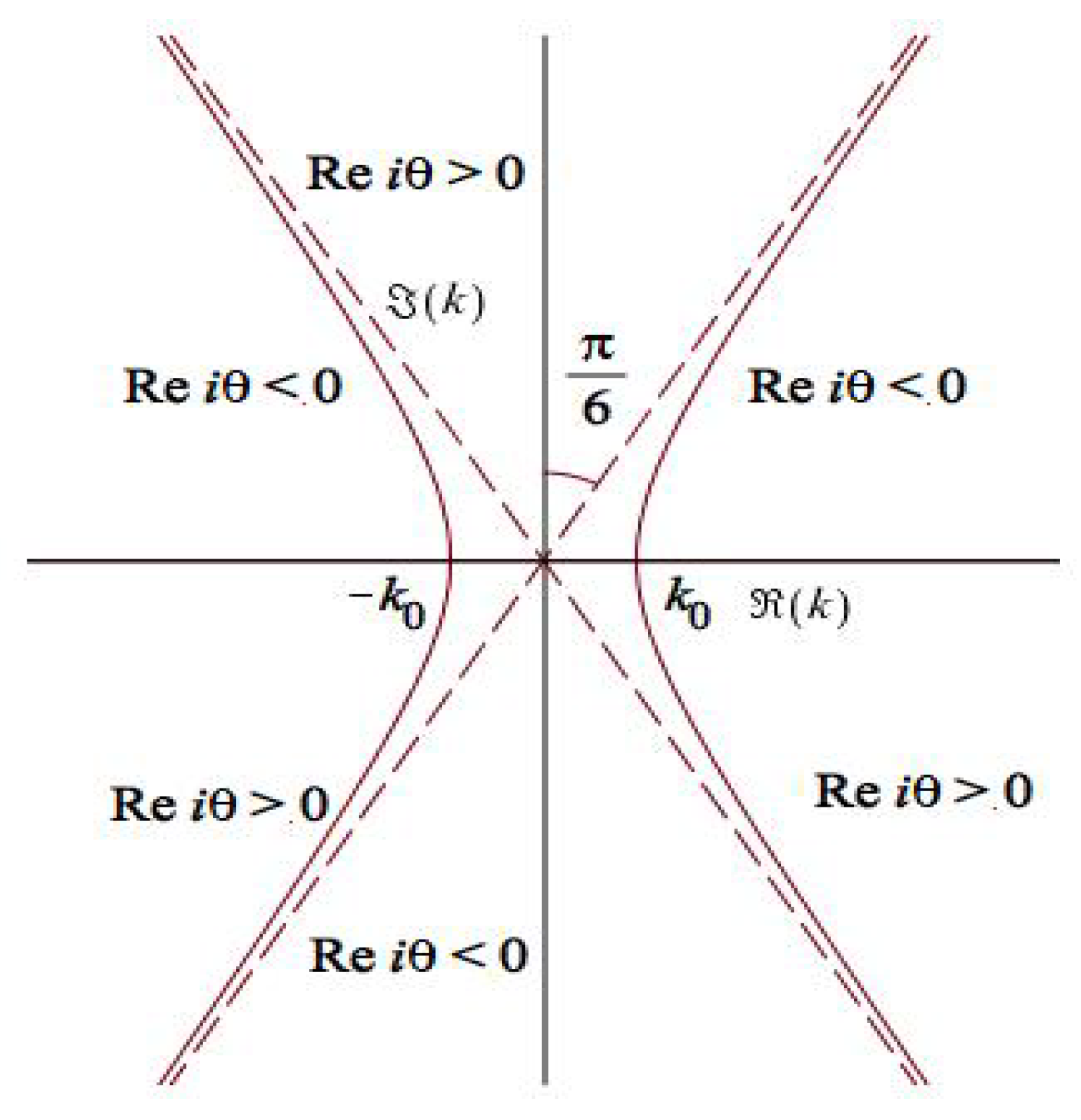

4.3. Deformation of the RH Problem

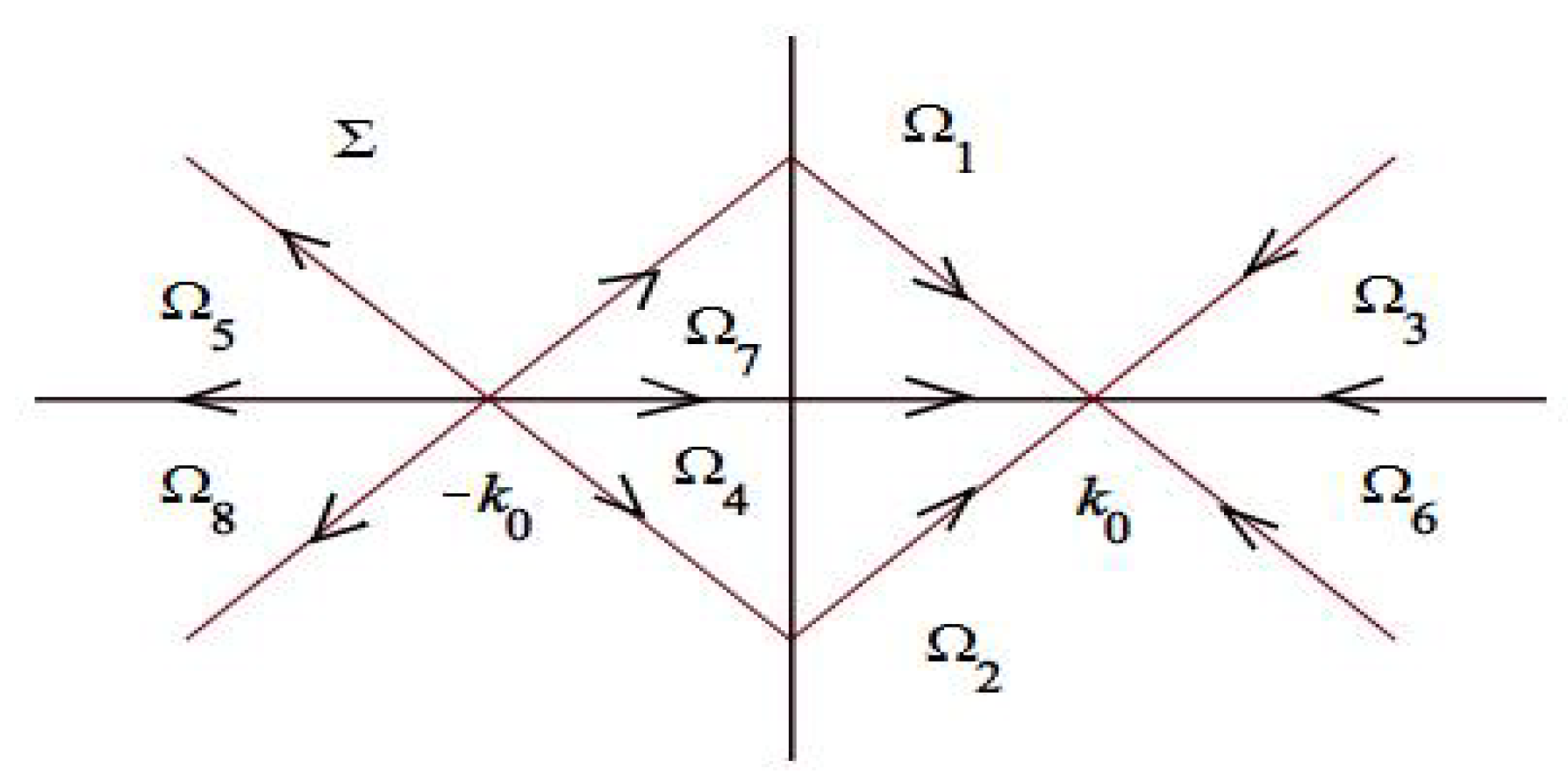

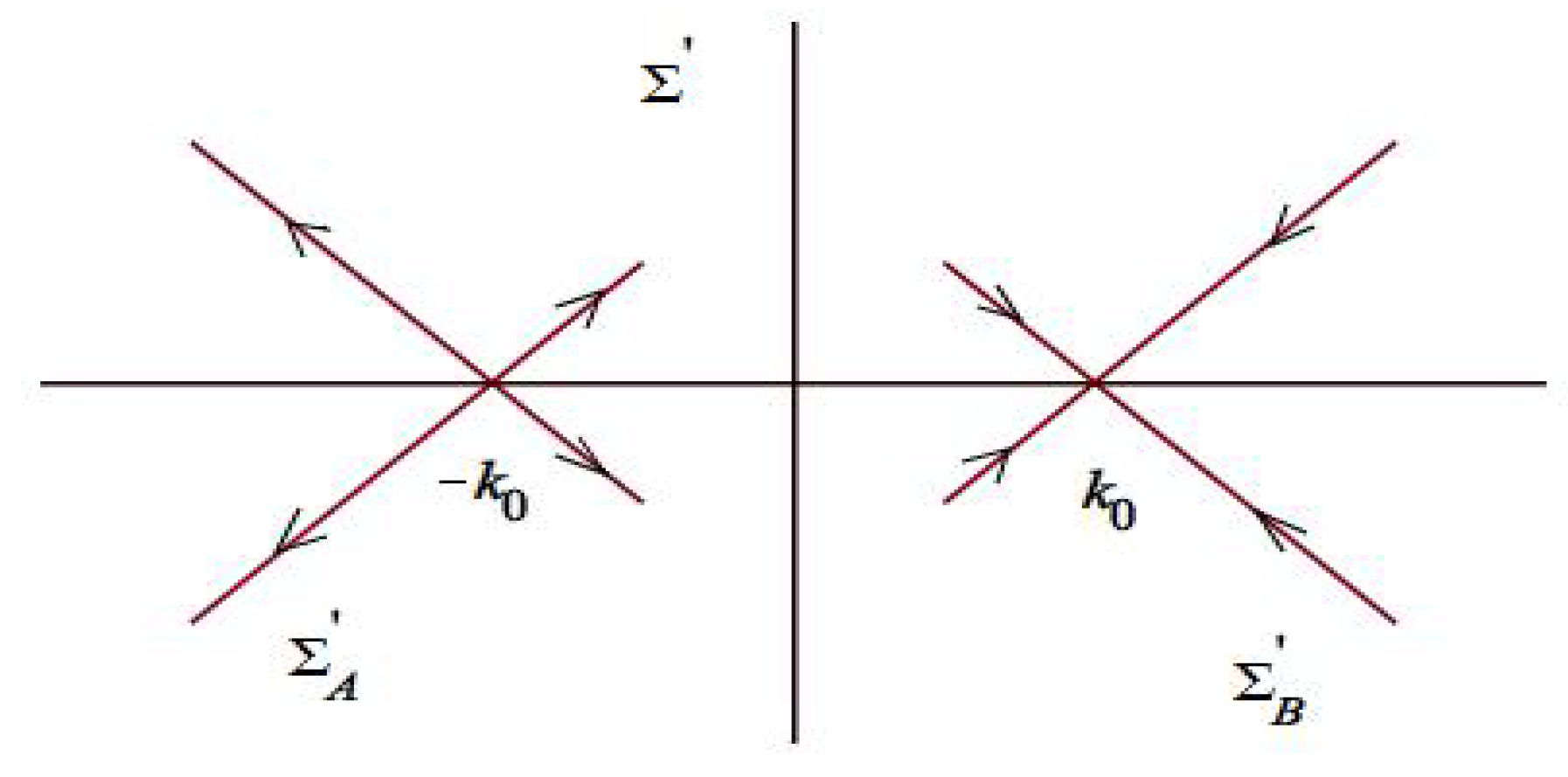

4.4. Contour Truncation

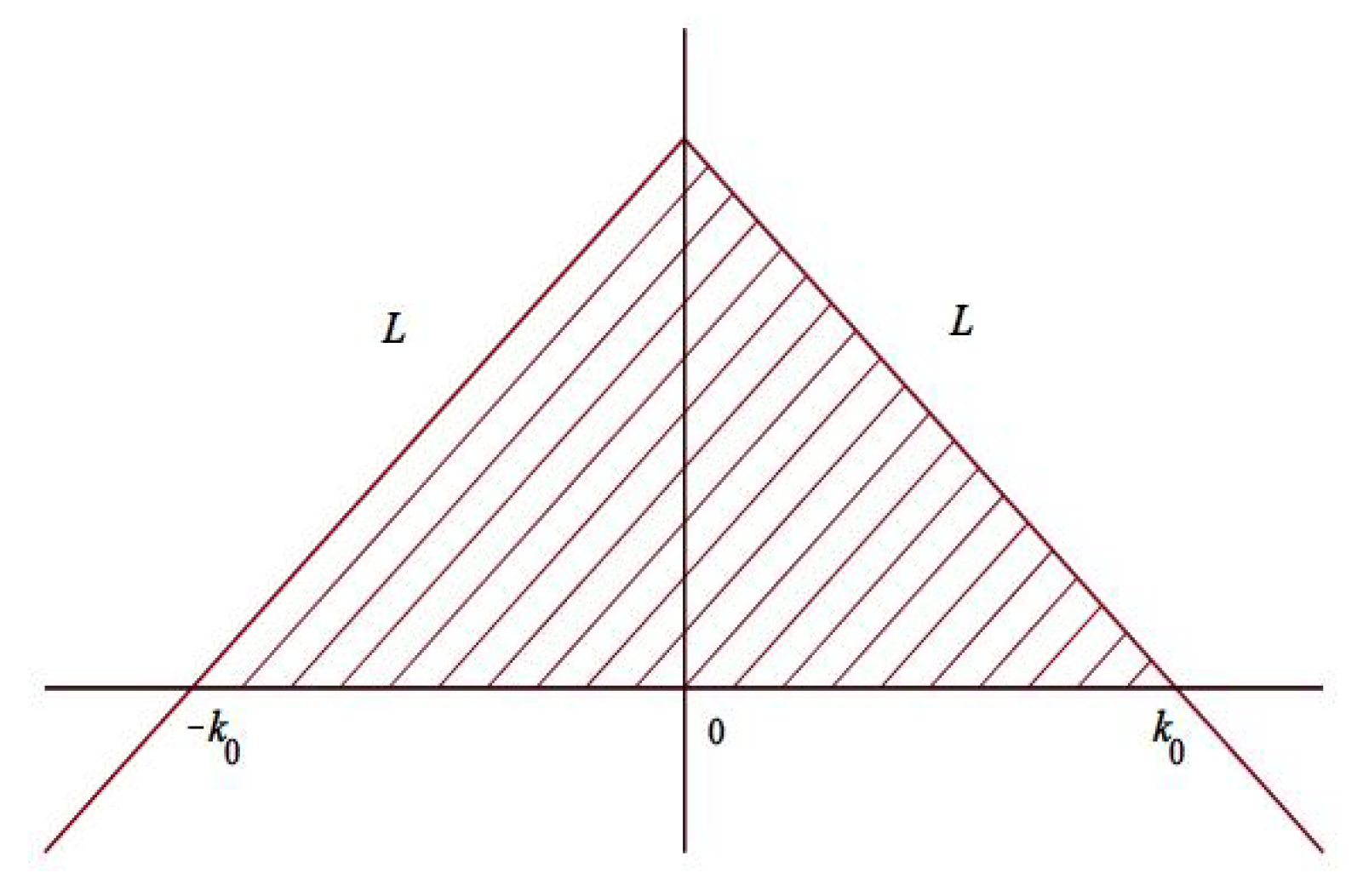

4.5. Disconnecting Contour Components

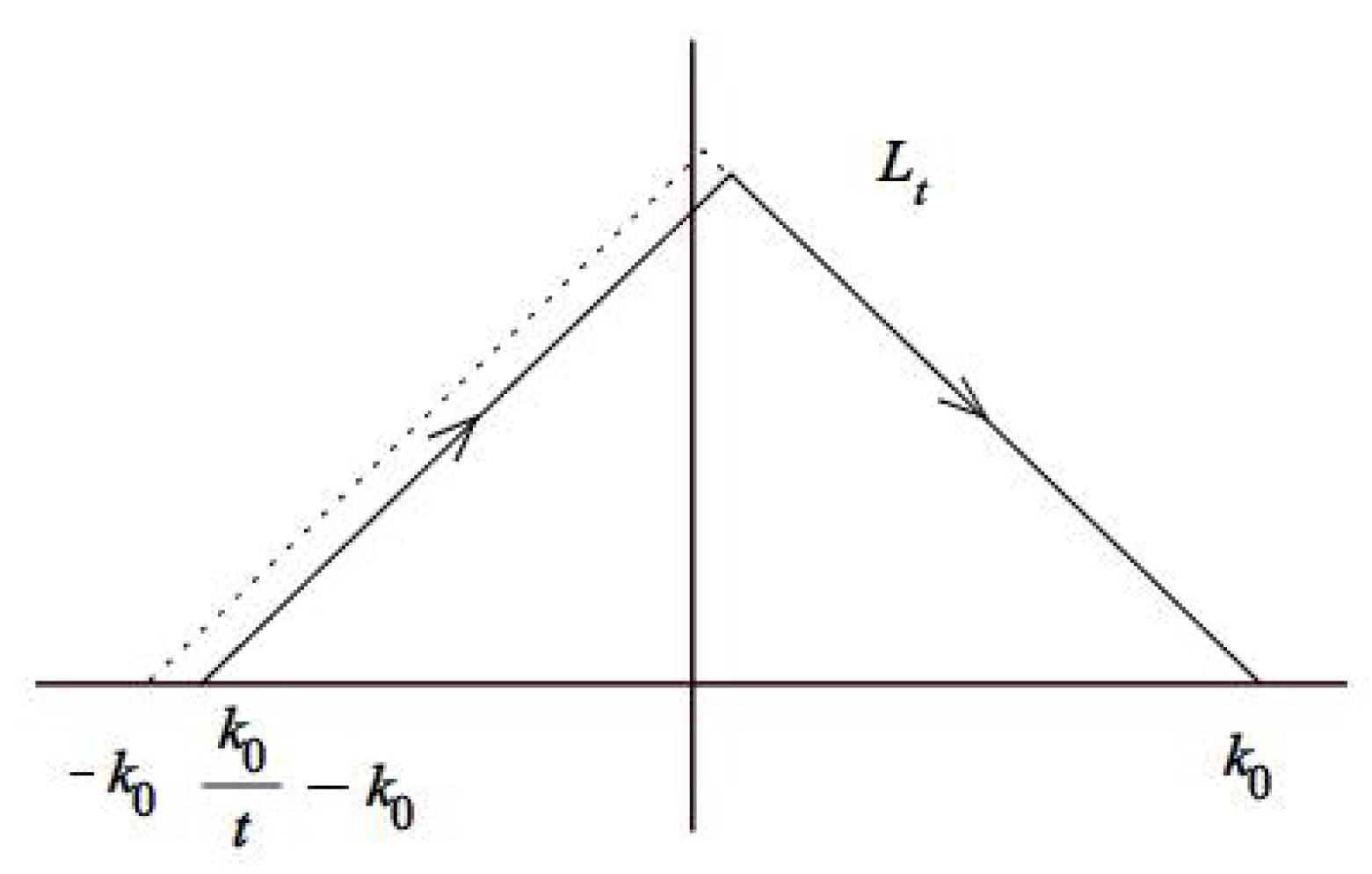

4.6. Rescaling and Reduction of the Disconnected RH Problem

4.7. The Model RH Problem and Its Solution

5. Concluding Remarks

Funding

Acknowledgments

Conflicts of Interest

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ma, W.-X. Long-Time Asymptotics of a Three-Component Coupled mKdV System. Mathematics 2019, 7, 573. https://doi.org/10.3390/math7070573