Abstract

In this paper, we consider several new applications of the recently introduced mathematical framework of the Theory of Connections (ToC). This framework transforms constrained problems into unconstrained problems by introducing constraint-free variables. Using this transformation, various ordinary differential equations (ODEs), partial differential equations (PDEs), and variational problems can be formulated so that the constraints are always satisfied. The resulting equations can then be solved by expanding the free function in a global basis (e.g., Chebyshev or Legendre polynomials) and minimizing the residual at pre-defined collocation points. We highlight the utility of ToC through five problems that can be solved within this framework: (1) analytical linear constraint optimization; (2) the brachistochrone problem; (3) over-constrained differential equations; (4) inequality constraints; and (5) triangular domains.

1. Introduction

The Theory of Connections (ToC), introduced by D. Mortari [1] (see also [2,3,4]), is a framework that transforms constrained problems into unconstrained problems by deriving “constrained expressions”. Using these expressions, the governing physical models are modified and solved in the space without constraints. Below, we present a brief overview. For univariate functions, Ref. [1] introduced the constrained expression using the form,

$$ y(t) = g(t) + \sum_{k=1}^{n} \eta_k \, s_k(t), \tag{1} $$

where the $s_k(t)$ are n assigned linearly independent functions, $g(t)$ is a free function, and the coefficients $\eta_k$ are derived by imposing the n constraints. The resulting constrained expression always satisfies the constraints, as long as $g(t)$ is defined where the constraints are defined.

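As an example, we consider a function $y(t)$ subject to the constraints $y(t_1) = y_1$ and $\dot{y}(t_2) = \dot{y}_2$. Following Equation (1), and selecting $s_1(t) = 1$ and $s_2(t) = t$, the following two expressions are obtained [3,4],

$$ y_1 = g_1 + \eta_1 + \eta_2\, t_1, \qquad \dot{y}_2 = \dot{g}_2 + \eta_2, $$

where $g_1 = g(t_1)$ and $\dot{g}_2 = \dot{g}(t_2)$ are the values of the free function and its derivative evaluated at the two constraint points $t_1$ and $t_2$. Solving for the unknowns $\eta_1$ and $\eta_2$ leads to,

$$ \eta_1 = y_1 - g_1 - t_1\,(\dot{y}_2 - \dot{g}_2), \qquad \eta_2 = \dot{y}_2 - \dot{g}_2. $$

Substituting the coefficients back into Equation (1) and simplifying produces the final constrained expression,

$$ y(t) = g(t) + (y_1 - g_1) + (t - t_1)\,(\dot{y}_2 - \dot{g}_2). $$

It can be seen that the constraints are always satisfied ($y(t_1) = y_1$ and $\dot{y}(t_2) = \dot{y}_2$), as long as $g(t_1)$ and $\dot{g}(t_2)$ are defined. This example demonstrates Equation (1); the theory is general and can be used for many other constraint types. Other examples are presented later in this paper (see also [1,3]).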
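The property that the constraints hold for every free function can be checked numerically. The minimal sketch below implements the two-point constrained expression y(t) = g(t) + (y_1 - g(t_1)) + (t - t_1)(ẏ_2 - ġ(t_2)) for three arbitrary free functions and made-up constraint data, then evaluates both constraints; the helper name `constrained_expression` is hypothetical.

```python
import numpy as np

def constrained_expression(g, dg, t1, y1, t2, yd2):
    """Return y(t) = g(t) + (y1 - g(t1)) + (t - t1)(yd2 - g'(t2)) and its derivative."""
    y = lambda t: g(t) + (y1 - g(t1)) + (t - t1) * (yd2 - dg(t2))
    dy = lambda t: dg(t) + (yd2 - dg(t2))
    return y, dy

# Hypothetical constraint data: y(-0.5) = 2 and y'(1.5) = -3.
t1, y1, t2, yd2 = -0.5, 2.0, 1.5, -3.0

# Three very different free functions g(t), each paired with its derivative g'(t).
free_functions = [
    (np.sin, np.cos),
    (lambda t: t**4 - 2.0 * t, lambda t: 4.0 * t**3 - 2.0),
    (np.exp, np.exp),
]

checks = []
for g, dg in free_functions:
    y, dy = constrained_expression(g, dg, t1, y1, t2, yd2)
    checks.append((y(t1), dy(t2)))
    print(y(t1), dy(t2))   # 2.0 and -3.0 up to rounding, whatever g is
```

Changing g changes y(t) everywhere except at the constrained points, which is exactly what makes the expression useful as a search space.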

In general, the coefficients $\eta_k$ are expressed in terms of the constraint points and of $g(t)$ evaluated where the constraints are defined. Equation (1) is called a constrained expression because, as $g(t)$ varies, it represents all possible functions satisfying the n constraints, and each of these functions satisfies the constraints regardless of the choice of $g(t)$. Please note that $g(t)$ can be discontinuous, partially defined, and even the Dirac delta function, as long as no constraint is specified where the delta function is infinite.

The constrained expression in Equation (1) is typically applied to ODEs or to variational problems. For example, consider an ODE for $y(t)$,

$$ F\big(t, y, \dot{y}, \ldots, y^{(n)}\big) = 0, $$

subject to constraints that can be of various types (e.g., constraints on the function or its derivatives, integral constraints, and so on). Here, F is an equation in t, y, and the derivatives of y. Using a ToC constrained expression (Equation (1)), this equation is transformed into an unconstrained problem in which the unknown is the free function $g(t)$. This transformation can be written as,

$$ \tilde{F}\big(t, g, \dot{g}, \ldots, g^{(n)}\big) = 0, \tag{2} $$

where $\tilde{F}$ is an equation completely independent of the problem constraints, because the constraints are already embedded in the constrained expression. Moreover, $\tilde{F}$ differs from F and represents modified constitutive relations. The major advantage of Equation (2) is that it can be solved as an unconstrained optimization problem. Past work has focused on expanding the free function $g(t)$ in a linear basis [5,6,7] such that

$$ g(t) = \boldsymbol{\xi}^{\mathsf T}\mathbf{h}(t), $$

where $\mathbf{h}(t)$ is a vector of global basis functions and $\boldsymbol{\xi}$ is a vector of unknown coefficients used in the optimization process. These coefficients are determined by minimizing the residual of Equation (2) at a set of collocation points [8,9]. Locally supported functions can also be used. Alternatively, one can avoid a linear representation of $g(t)$ and use a non-linear one, for example, neural networks or support vector machines [10].
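As an illustration of this procedure, the minimal sketch below (our own test problem, not one from the paper) solves ÿ + y = 0 on [0, 1] with y(0) = 1 and ẏ(1) = -sin(1), whose exact solution is cos(t). It uses the two-point constrained expression y(t) = g(t) + (y(t_1) - g(t_1)) + (t - t_1)(ẏ(t_2) - ġ(t_2)) with t_1 = 0 and t_2 = 1, writes g(t) = ξᵀh(t) with Chebyshev polynomials mapped to [0, 1], and finds ξ by linear least squares at Chebyshev-Gauss-Lobatto collocation points. The basis size (degrees 2 to 15) and the 30 collocation points are arbitrary choices.

```python
import numpy as np
from numpy.polynomial import Chebyshev

# Test problem (chosen for illustration): y'' + y = 0 on [0, 1],
# y(0) = 1 and y'(1) = -sin(1); the exact solution is y(t) = cos(t).
t1, y1 = 0.0, 1.0
t2, yd2 = 1.0, -np.sin(1.0)

# Free function g(t) = xi^T h(t): Chebyshev polynomials of degree 2..15 on [0, 1].
# Degrees 0 and 1 are skipped because the constrained expression cancels them.
basis = [Chebyshev.basis(deg, domain=[0.0, 1.0]) for deg in range(2, 16)]

def h(t, order=0):
    """Matrix whose columns are the order-th derivatives of the basis at points t."""
    t = np.atleast_1d(t)
    return np.column_stack([p.deriv(order)(t) for p in basis])

# Collocation points: Chebyshev-Gauss-Lobatto nodes mapped to [0, 1].
n_pts = 30
t = 0.5 * (1.0 - np.cos(np.pi * np.arange(n_pts) / (n_pts - 1)))

# Constrained expression y = g + (y1 - g(t1)) + (t - t1)(yd2 - g'(t2)),
# written as y = Phi @ xi + y_part; y_part is linear in t, so y'' = h''(t) @ xi.
Phi = h(t) - h(t1) - np.outer(t - t1, h(t2, 1)[0])
y_part = y1 + (t - t1) * yd2

# The residual of y'' + y = 0 at the collocation points is linear in xi.
A = h(t, 2) + Phi
xi, *_ = np.linalg.lstsq(A, -y_part, rcond=None)

y = Phi @ xi + y_part
max_err = np.max(np.abs(y - np.cos(t)))
print(f"max |y - cos t| at collocation points: {max_err:.2e}")
```

Because the constraints live in the constrained expression, the least-squares problem only has to drive the differential-equation residual to zero; no constraint rows are appended to the system.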

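The Multivariate Theory of Connections [2] extends the original univariate theory [1] to n dimensions and to boundary constraints of any derivative order. This extension can be summarized by the expression,

$$ f(\mathbf{x}) = A\big(\mathbf{x}, c(\mathbf{x})\big) + g(\mathbf{x}) - A\big(\mathbf{x}, g(\mathbf{x})\big), $$

where $\mathbf{x}$ is the vector of n orthogonal coordinates, $c(\mathbf{x})$ is a function specifying the boundary constraints, $A(\mathbf{x}, c(\mathbf{x}))$ is any interpolating function satisfying the boundary constraints, $A(\mathbf{x}, g(\mathbf{x}))$ is the same interpolating function built from the values of $g(\mathbf{x})$ where the constraints are specified, and $g(\mathbf{x})$ is the free function. Several examples of constrained expressions can be found in Refs. [1,2].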
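To make the multivariate form concrete, here is a minimal sketch for one simple case of our choosing: Dirichlet constraints on the unit square, with the interpolating function A taken as the bilinearly blended Coons patch, which reproduces any boundary data on the square. The helper names `coons` and `constrained` and the functions `c` and `g` are hypothetical. The check confirms that f = A(c) + g - A(g) matches c on all four edges, whatever g is.

```python
import numpy as np

def coons(x, y, u):
    """Bilinearly blended Coons patch on [0, 1]^2 built from the edge values of u(x, y)."""
    edges = (1 - x) * u(0.0, y) + x * u(1.0, y) + (1 - y) * u(x, 0.0) + y * u(x, 1.0)
    corners = ((1 - x) * (1 - y) * u(0.0, 0.0) + x * (1 - y) * u(1.0, 0.0)
               + (1 - x) * y * u(0.0, 1.0) + x * y * u(1.0, 1.0))
    return edges - corners

def constrained(x, y, c, g):
    """f = A(x, y; c) + g(x, y) - A(x, y; g): equals c on the boundary for any g."""
    return coons(x, y, c) + g(x, y) - coons(x, y, g)

c = lambda x, y: np.sin(np.pi * x) * np.exp(y) + x * y    # boundary data (hypothetical)
g = lambda x, y: np.cos(5.0 * x * y) + x**3 - y           # arbitrary free function

s = np.linspace(0.0, 1.0, 11)
zero, one = np.zeros_like(s), np.ones_like(s)
edge_err = max(
    np.max(np.abs(constrained(zero, s, c, g) - c(zero, s))),   # edge x = 0
    np.max(np.abs(constrained(one, s, c, g) - c(one, s))),     # edge x = 1
    np.max(np.abs(constrained(s, zero, c, g) - c(s, zero))),   # edge y = 0
    np.max(np.abs(constrained(s, one, c, g) - c(s, one))),     # edge y = 1
)
print(f"largest boundary mismatch: {edge_err:.1e}")
```

In the interior, f depends on g, so searching over g explores surfaces that all share the prescribed boundary values.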

Most of the ToC research effort to date has been applied to solving linear [4] and non-linear [3] ODEs. This has been done by expanding the free function, $g(t)$, in terms of a set of basis functions (e.g., orthogonal polynomials, Fourier series, etc.). Linear or iterative non-linear least-squares is then used to solve for the coefficients of this expansion. This approach to solving ODEs/PDEs has many advantages over traditional methods: (1) it provides a unified framework to solve IVPs, BVPs, and multi-value problems; (2) it provides an approximate solution expressed via analytical functions that can be used for subsequent algebraic manipulation; (3) high solution accuracy is usually obtained quickly for many application problems; (4) the procedure can be numerically robust (very low condition number); and (5) it can solve ODEs/PDEs subject to a variety of constraint types: absolute, relative, linear, non-linear, and integral. Additionally, this technique has recently been extended to solve 2-dimensional PDEs [10].

The purpose of this paper is to introduce five new applications of the Theory of Connections that are not covered in the previous references and for which ToC is found to be effective. The main motivation and a short description of each new application are:

- Analytic linear constraint optimization. In this application, the analytical solution to the problem of finding the extremum of a quadratic vector form subject to linear constraints is obtained using ToC instead of the classical Lagrange multipliers technique.

- Brachistochrone problem. This application is dedicated to one of the most famous problems in the calculus of variations: the brachistochrone problem [11]. This problem is solved numerically, to machine-level accuracy, via the two-point boundary-value problem of the Euler-Lagrange equation associated with the brachistochrone functional. In particular, the solution is obtained by deriving the differential equation in polar coordinates.

- Over-constrained differential equations. This application considers ODEs subject to more constraints than the order of the ODE, a situation that arises in many areas of science and engineering. For example, in satellite orbit determination, the number of measurements exceeds the order of the governing differential equation [12]. Furthermore, multi-objective optimization [13,14,15] deals specifically with optimizing multiple objective functions simultaneously, where trade-offs (weightings) between conflicting objectives must be taken into account. In Section 4, a weighted least-squares solution is provided for over-constrained differential equations by assigning relative weights to the constraints and then solving the weighted constrained ODE by ToC. Additionally, an example is provided showing a continuous sequence of solutions of an IVP morphing into a BVP.

- Inequality constraints. This application extends the ToC framework to include inequality constraints [16]. This is obtained using a combination of sigmoid functions that keeps the constrained expression within the inequality bounds. This allows the derivation of functions constrained by user-defined bounds that can be asymmetric, continuous, or symmetric discontinuous functions. The main motivation for this constraint type comes from optimal control problems (bounded control inputs).

- Triangular domains. Ref. [2] extended the original univariate theory [1] to the multivariate case, with mathematical proof. This extension is the multivariate formulation of ToC subject to arbitrary-order derivative constraints in rectangular domains. It provides an analytical procedure to obtain constrained expressions in any orthogonal/rectangular space, which can be used to transform constrained problems into unconstrained problems. In Ref. [2], particular emphasis and detail are given to the 2-dimensional case because of its important applications to surfaces (PDEs, topography, visualization, etc.). This application begins the extension of ToC to triangular domains by deriving surfaces that satisfy boundary constraints.

The complete solution derivations of each one of these new applications, validated by numerical examples, are detailed in the subsequent sections.

2. Analytic Linear Constraints Optimization

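This section shows an analytical application of the Theory of Connections. The problem, known as quadratic programming (QP) subject to equality constraints, consists of deriving a closed-form solution for the extremum (minimum or maximum) of a quadratic function subject to linear constraints,

$$ J(\mathbf{x}) = \frac{1}{2}\,\mathbf{x}^{\mathsf T} A\,\mathbf{x} + \mathbf{c}^{\mathsf T}\mathbf{x} \quad \text{subject to} \quad D\,\mathbf{x} = \mathbf{b}, \tag{3} $$

where $\mathbf{x} \in \mathbb{R}^{n}$ is the unknown vector, and the symmetric matrix $A \in \mathbb{R}^{n \times n}$, $\mathbf{c} \in \mathbb{R}^{n}$, $D \in \mathbb{R}^{m \times n}$, and $\mathbf{b} \in \mathbb{R}^{m}$ are all assigned, with D of rank m (all rows are independent). Let us search for a solution in the form,

$$ \mathbf{x} = \mathbf{g} + D^{\mathsf T}\boldsymbol{\eta}, $$

where $\mathbf{g} \in \mathbb{R}^{n}$ is a free vector. The constraint implies,

$$ D\,\mathbf{g} + D D^{\mathsf T}\boldsymbol{\eta} = \mathbf{b}, $$

and, since $D D^{\mathsf T}$ is invertible when D has rank m,

$$ \boldsymbol{\eta} = (D D^{\mathsf T})^{-1}(\mathbf{b} - D\,\mathbf{g}). $$

Therefore,

$$ \mathbf{x} = P\,\mathbf{g} + R\,\mathbf{b}, \qquad R = D^{\mathsf T}(D D^{\mathsf T})^{-1}, \qquad P = I_n - R\,D, $$

where P is the symmetric projector onto the null space of D, so that $D\,\mathbf{x} = \mathbf{b}$ holds for any $\mathbf{g}$. Substituting this expression of $\mathbf{x}$ in Equation (3), we obtain,

$$ J(\mathbf{g}) = \frac{1}{2}\,(P\mathbf{g} + R\mathbf{b})^{\mathsf T} A\,(P\mathbf{g} + R\mathbf{b}) + \mathbf{c}^{\mathsf T}(P\mathbf{g} + R\mathbf{b}). $$

The problem of finding the extremum of Equation (3) is now an unconstrained optimization problem in $\mathbf{g}$. The stationary condition $\partial J / \partial \mathbf{g} = \mathbf{0}$ implies

$$ E\,\mathbf{g} = \mathbf{f}, \tag{4} $$

where

$$ E = P A P, \qquad \mathbf{f} = -P\,(A R\,\mathbf{b} + \mathbf{c}). $$

In this example, we show how to transform a constrained optimization problem into an unconstrained one and find the unconstrained $\mathbf{g}$, which is the solution of Equation (4). This shows a simple application of the Theory of Connections. Unfortunately, the matrix E is singular, since $P D^{\mathsf T} = 0$ implies $E D^{\mathsf T} = 0$. However, Equation (4) is a consistent linear system when the constrained problem has a unique extremum, and components of $\mathbf{g}$ along the range of $D^{\mathsf T}$ do not affect $\mathbf{x}$. Therefore, a solution can be computed with the Moore-Penrose inverse (pseudo-inverse). Let $E = C \Lambda C^{\mathsf T}$ be the spectral decomposition of E, where the eigenvector matrix, C, is orthogonal because E is symmetric. Therefore, the solution of the problem given in Equation (3) is,

$$ \mathbf{x} = P\,C\,\Lambda^{+} C^{\mathsf T}\,\mathbf{f} + R\,\mathbf{b}, $$

where the diagonal matrix $\Lambda^{+}$ contains the inverse of the nonzero eigenvalues and zero for the zero eigenvalues.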
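The closed-form steps can be checked numerically. The minimal sketch below assumes J(x) = ½ xᵀAx + cᵀx with A symmetric positive definite (so the extremum is a minimum), the constrained expression x = g + Dᵀη, and random made-up problem data; `toc_qp` is a hypothetical helper name. It compares the ToC result with the classical Lagrange-multiplier (KKT) solution.

```python
import numpy as np

def toc_qp(A, c, D, b, rel_tol=1e-10):
    """Extremum of J(x) = 0.5 x^T A x + c^T x subject to D x = b (D full row rank),
    via x = P g + R b and a pseudo-inverse solve of the singular system E g = f."""
    n = A.shape[0]
    R = D.T @ np.linalg.inv(D @ D.T)         # R = D^T (D D^T)^{-1}
    P = np.eye(n) - R @ D                    # projector onto the null space of D
    E = P @ A @ P                            # singular, since E D^T = 0
    f = -P @ (A @ R @ b + c)
    lam, C = np.linalg.eigh(E)               # E symmetric -> orthogonal eigenvectors
    tol = rel_tol * max(1.0, np.abs(lam).max())
    lam_plus = np.array([1.0 / l if abs(l) > tol else 0.0 for l in lam])
    g = C @ np.diag(lam_plus) @ C.T @ f      # minimum-norm solution of E g = f
    return P @ g + R @ b

rng = np.random.default_rng(0)
n, m = 6, 2
Q = rng.standard_normal((n, n))
A = Q @ Q.T + n * np.eye(n)                  # symmetric positive definite
c = rng.standard_normal(n)
D = rng.standard_normal((m, n))
b = rng.standard_normal(m)
x = toc_qp(A, c, D, b)

# Reference: classical Lagrange-multiplier (KKT) system [A D^T; D 0][x; lam] = [-c; b].
K = np.block([[A, D.T], [D, np.zeros((m, m))]])
x_kkt = np.linalg.solve(K, np.concatenate([-c, b]))[:n]
print(np.allclose(x, x_kkt), np.allclose(D @ x, b))
```

The singularity of E is harmless here: shifting g by any Dᵀz leaves x unchanged because P Dᵀ = 0, so the pseudo-inverse simply picks one representative.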

3. Brachistochrone Problem

The brachistochrone problem is one of many problems arising from the calculus of variations. This field focuses on finding the extrema of functionals (mappings from functions to real numbers). Candidate functions that maximize or minimize a functional can be found using the Euler-Lagrange equation, which in general produces an ODE whose solution is the extremal function. The main application of ToC to the calculus of variations is solving these ODEs, which can be highly complex.

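Consider finding the function, $y(x)$, that minimizes the integral,

$$ J[y] = \int_{x_0}^{x_f} F(x, y, y')\,\mathrm{d}x, \tag{5} $$

where $x \in [x_0, x_f]$, $y(x)$ is an unknown function satisfying $y(x_0) = y_0$ and $y(x_f) = y_f$, and $F(x, y, y')$ is the integrand of the functional. An extremal, $y_e(x)$, of the integral given in Equation (5) is a solution of the Euler-Lagrange equation,

$$ \frac{\partial F}{\partial y} - \frac{\mathrm{d}}{\mathrm{d}x}\left(\frac{\partial F}{\partial y'}\right) = 0. \tag{6} $$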
The solution of this equation, , is an extremal solution (where the derivative is zero, which is a necessary condition). This solution, however, is not sufficient as may be associated with some local minima, maxima, or saddle points. In other word, after solving Equation (6), we can consider , if the condition,

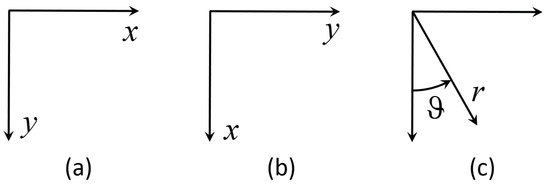

is verified. This can be done, for example, by evaluating the second derivative (Hessian) of . Figure 1 shows three different coordinate systems that may be used to solve the brachistochrone problem.

Figure 1.

Brachistochrone Coordinate Systems: (a) classic cartesian coordinate system, (b) modified cartesian coordinate system, and (c) polar coordinate system.

Typically, the brachistochrone problem is written using coordinate system (a) of Figure 1. In this coordinate system, the total travel time of the sliding bead is given by the functional [11],

subject to,

Applying the Euler-Lagrange equation, Equation (6), to the integrand of Equation (7) yields the following differential equation for the function $y(x)$,

However, in this coordinate system the slope $y'$ is infinite at the initial point, so the problem cannot be solved using ToC. If the axes are oriented as shown in coordinate system (b) of Figure 1, the functional used in the calculus of variations becomes,

which, after applying the Euler-Lagrange equation, leads to the following differential equation,

The problem with the differential equation given in Equation (9) is that the solution may take on two different values of y for a given value of x. Analytically, the issues with the DEs given in Equations (8) and (9) are circumvented by solving the problems parametrically. Unfortunately, when written in parametric form, the brachistochrone DE either produces not-a-number or infinite values when implemented numerically, or admits a trivial solution. For example, let x and y each be functions of a parameter p, with derivatives taken with respect to p. Then, Equation (9) can be rewritten using the chain rule as

Unfortunately, Equation (10) has infinite values whenever or . Therefore, it cannot be implemented numerically. Alternatively, one could write the DE as

However, Equation (11) admits a trivial solution. Attempts were made to avoid the trivial solution by starting the non-linear least-squares iterations far from it, but none of these attempts were successful.

Another option is to express the brachistochrone problem in polar coordinates (coordinate system (c) in Figure 1). If the coordinates are chosen appropriately, the problems of the Cartesian coordinate systems can be avoided, and the problem does not need to be parameterized. A downside of this approach is a significantly more complicated DE; however, with the ToC method, solving this DE is straightforward. Using this coordinate system, the energy of an object sliding without friction is given by,

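In addition, the differential path length in polar coordinates is given by,

$$ \mathrm{d}s = \sqrt{r^{2} + r'^{2}}\;\mathrm{d}\theta, \tag{13} $$

where $r' = \mathrm{d}r/\mathrm{d}\theta$. Furthermore, using Equation (12), the velocity, v, can be written as,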

Using Equations (13) and (14) one can express the total time to get from the start point to the end point as

Let the integrand of Equation (15) be denoted by F. The calculus of variations can then be used to minimize the travel time, which, after some simplification, leads to the DE given in Equation (16).

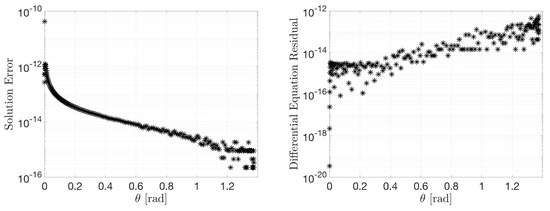

Using the differential equation given by Equation (16), the ToC method was used to solve the particular case with initial condition and final condition . Figure 2 shows information associated with solving the brachistochrone problem and highlights the error between the ToC solution and the exact solution. Additionally, when the free function is expanded in 60 Chebyshev polynomials, the residuals of the DE are between the order of and . These results show that this method can estimate the solution of the brachistochrone problem with high accuracy.

Figure 2.

Polar brachistochrone differential equation solution error and residuals.

4. Over-Constrained Differential Equations

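The seminal paper on ToC [1] presented Equation (1) as a way to incorporate n constraints to form a constrained expression; this equation is repeated below:

$$ y(t) = g(t) + \sum_{k=1}^{n} \eta_k \, s_k(t). $$

This process leads to an $n \times n$ matrix populated by the values of $s_k(t)$, or their derivatives, evaluated at the constraint conditions. The values of $\eta_k$ can then be solved for by simply inverting this matrix. However, this process does not require the number of coefficients to equal the number of applied constraints, and thus the constraint matrix can also be written for n values of $\eta_k$ and m constraints, which leads to,

$$ \underbrace{\begin{bmatrix} s_1^{(d_1)}(t_1) & \cdots & s_n^{(d_1)}(t_1) \\ \vdots & \ddots & \vdots \\ s_1^{(d_m)}(t_m) & \cdots & s_n^{(d_m)}(t_m) \end{bmatrix}}_{M} \begin{Bmatrix} \eta_1 \\ \vdots \\ \eta_n \end{Bmatrix} = \underbrace{\begin{Bmatrix} y^{(d_1)}(t_1) - g^{(d_1)}(t_1) \\ \vdots \\ y^{(d_m)}(t_m) - g^{(d_m)}(t_m) \end{Bmatrix}}_{\mathbf{v}}, $$

where $d_i$ denotes the derivative order of the constraint at point $t_i$, for $i = 1, \ldots, m$. When $m > n$, this becomes an over-determined system with the $m \times n$ constraint matrix M. The values of $\eta_k$ can still be solved for by incorporating a weighted least-squares technique, where we define W as a diagonal weighting matrix,

$$ W = \mathrm{diag}(w_1, w_2, \ldots, w_m), $$

leading to the solution,

$$ \boldsymbol{\eta} = (M^{\mathsf T} W M)^{-1} M^{\mathsf T} W\, \mathbf{v}. $$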
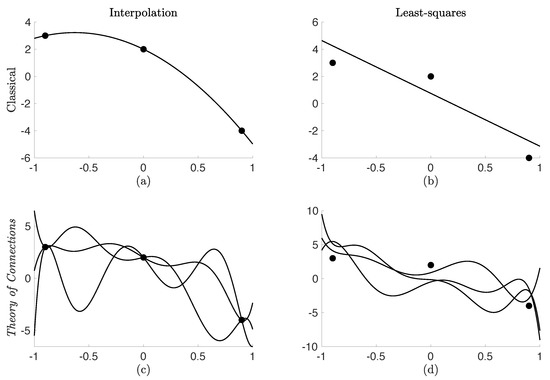

Unlike the traditional ToC approach, where the $\eta_k$ values produce an expression that always satisfies the given constraints, the weighted least-squares approach produces a weighted constrained expression that satisfies the constraints in a weighted (relative) sense. To exactly solve a Differential Equation, the number of constraints must equal the order of the Differential Equation. However, in the ToC method, the constrained expression is formed first and the differential equation is solved afterwards. This separation allows the incorporation of more constraints than the order of the Differential Equation. This concept is highlighted in Figure 3, which distinguishes the ToC approach from classical interpolation and least-squares methods. Part (c) of Figure 3 shows the original formulation of ToC, where the number of coefficients equals the number of constraints incorporated. Part (d) highlights the work of this section, which combines this general interpolation method with a weighted least-squares technique for the constraints.
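The weighted constrained expression can be sketched in a few lines. This minimal example assumes the support functions s_1 = 1 and s_2 = t (so n = 2), three made-up function-value "observations" (m = 3), and an arbitrary free function; η is computed as (MᵀWM)⁻¹MᵀWv, with row i of M holding s_1(t_i), s_2(t_i) and v_i equal to the observed value minus g(t_i). The helper name `weighted_expression` is hypothetical.

```python
import numpy as np

# Hypothetical setup: y(t) = g(t) + eta_1 * 1 + eta_2 * t (n = 2 coefficients)
# with m = 3 function-value "observations": more constraints than coefficients.
t_obs = np.array([0.0, 0.5, 1.0])
y_obs = np.array([1.0, 0.4, -0.2])       # made-up observation values

g = lambda t: np.sin(3.0 * t)            # arbitrary free function

M = np.column_stack([np.ones_like(t_obs), t_obs])   # rows: s_1(t_i), s_2(t_i)
v = y_obs - g(t_obs)                                 # observed value minus g(t_i)

def weighted_expression(w):
    """Return y(t) with eta = (M^T W M)^{-1} M^T W v (weighted least squares)."""
    W = np.diag(w)
    eta = np.linalg.solve(M.T @ W @ M, M.T @ W @ v)
    return lambda t: g(t) + eta[0] + eta[1] * t

y_equal = weighted_expression([1.0, 1.0, 1.0])   # ordinary least squares
y_ends = weighted_expression([1e6, 1.0, 1e6])    # trust the first and last points most

print(y_equal(t_obs) - y_obs)   # residuals spread over all three points
print(y_ends(t_obs) - y_obs)    # first and last residuals are nearly zero
```

Raising a weight pulls the expression toward that constraint; in the limit of two dominant weights, the classical two-constraint ToC expression is recovered.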

Figure 3.

The classical formulation of (a) interpolation and (b) least-squares provides a single function solution. The ToC method provides the numerical framework to describe (c) all possible functions satisfying the constraints and (d) all possible functions of a least-squares fitting.

4.1. Second Order Differential Equation with Three Point Constraints. A Numerical Example

As a numerical test, let us consider solving the Differential Equation,

For this example, let us assume that a dynamical system described by Equation (17) is “observed” at three points and these measurements are subject to normally distributed noise such that

where for this problem , , and . For this, we define the weight matrix based on the variances,

Using the development in the prior section, the solution of this Differential Equation can incorporate information from all three observations, even though the Differential Equation is only second-order. Since the Differential Equation is second-order, the solution is sought in the form of the following constrained expression,

Then the values of and are computed by weighted least-squares where we define and ,

where the M matrix is defined by imposing the constraints and the W matrix is populated by the scaled variances above. The solution leads to a constrained expression of the form,

such that

such that . Equation (18) represents all functions satisfying the constraints relative to the given variances. With the constraints completely captured in Equation (18), the free function $g(t)$ spans the solution space that satisfies the three constraints in the least-squares sense. Now let us move toward solving Equation (17). First, let the free function be expressed as a linear combination of a given basis set, $g(t) = \boldsymbol{\xi}^{\mathsf T}\mathbf{h}(t)$. Substituting this into Equation (18) leads to,

where the constrained expression can be reduced to the form,

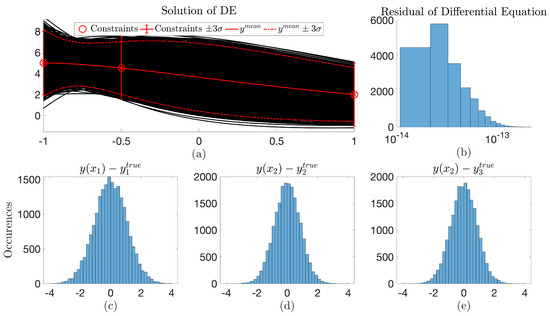

This form transforms the constrained optimization problem into an unconstrained optimization problem in $\boldsymbol{\xi}$, which can be solved using the techniques developed in Refs. [3,4]. In this specific problem, a Monte Carlo simulation of 10,000 trials was conducted to determine the space that the solution could occupy given the “observation” uncertainty and subject to the dynamics governed by the Differential Equation. Figure 4 highlights this solution. In this figure, plot (a) shows the Differential Equation solution space given the observation uncertainty, and plot (b) shows the residuals of the Differential Equation over the entire simulation. It can be seen that the residuals of all solutions are between and . Plots (c), (d), and (e) display the distribution of the constraint points around the true value. Note that these values are sampled from the solutions of the Differential Equation and not from the constraints specified in the constrained expression.

Figure 4.

Monte Carlo test for 10,000 trials. Part (a) represents the solutions of the differential equation over the varying observed “constraints” with part (b) quantifying the accuracy of the solution. Parts (c)–(e) represent the distribution of the solution values compared with the true value of the “constraints”.

Through this test, a probability bound for the Differential Equation solution can be produced, along with an estimated mean. For all solutions, the residual of the Differential Equation remained less than , verifying the accuracy of the method. Additionally, an interesting result of this test appears in the final estimated solutions. Most evident in the constraints at and , the standard deviation of the Differential Equation solution is smaller than that of the observation. This arises because the loss function in the ToC method minimizes the residuals of the Differential Equation, and in the over-constrained ToC method these residuals are minimized simultaneously with the weighted least-squares of the observations (or constraints).

4.2. Initial to Boundary-Value Problem Transformation. A Numerical Example

Another application made possible by this extension is a continuous transformation of an initial-value problem into a boundary-value problem. The following example numerically validates this approach. Consider solving,

subject to the three constraints

Since the Differential Equation is second-order, the constrained expression takes the form,

Since the highest order constraint is the first derivative, we can safely define and . Then the values of and are computed again by weighted least-squares,

where the M matrix is defined by imposing the constraints and the W matrix is a diagonal matrix of weights, i.e.,

and is a weight parameter such that , which corresponds to the transformation from the IVP to the BVP. From this derivation, V becomes

and solving for the coefficients again leads to Equation (18), of the form,

where the terms are defined by,

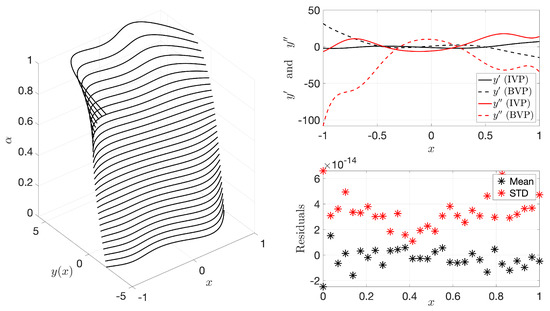

Again, the free function can be defined as $g(t) = \boldsymbol{\xi}^{\mathsf T}\mathbf{h}(t)$, and the unknown coefficient vector $\boldsymbol{\xi}$ can be solved for with least-squares. Figure 5 shows this transformation “surface” along with the residuals of the Differential Equation for validation of the method. It can be seen that the mean residual over all values is on the order of , with a standard deviation of the same order. This plot shows the solution of the Differential Equation continuously morphing from initial point and derivative constraints to initial and final point constraints.
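The morphing can be illustrated on the constrained expression alone; no differential equation is solved here. This minimal sketch assumes, for illustration only, s_1 = 1 and s_2 = t, the three constraints y(0) = y_0, ẏ(0) = v_0, and y(1) = y_1 with made-up values, an arbitrary free function, and the hypothetical weight matrix W = diag(1, 1 - α, α). At α = 0 the initial-value constraints hold exactly, and at α = 1 the boundary-value constraints hold exactly.

```python
import numpy as np

# Hypothetical constraints: y(0) = y0, y'(0) = v0 (IVP) and y(1) = y1 (BVP end).
y0, v0, y1 = 1.0, 0.0, 2.0

g = lambda t: np.cos(2.0 * t) + t**3            # arbitrary free function
dg = lambda t: -2.0 * np.sin(2.0 * t) + 3.0 * t**2

# Row i holds the d_i-th derivative of (s_1, s_2) = (1, t) at the constraint point.
M = np.array([[1.0, 0.0],    # y(0)
              [0.0, 1.0],    # y'(0)
              [1.0, 1.0]])   # y(1)
v = np.array([y0 - g(0.0), v0 - dg(0.0), y1 - g(1.0)])

def expression(alpha):
    """y(t) = g(t) + eta_1 + eta_2 t with assumed weights W = diag(1, 1 - alpha, alpha)."""
    W = np.diag([1.0, 1.0 - alpha, alpha])
    eta = np.linalg.solve(M.T @ W @ M, M.T @ W @ v)
    y = lambda t: g(t) + eta[0] + eta[1] * t
    dy = lambda t: dg(t) + eta[1]
    return y, dy

for alpha in np.linspace(0.0, 1.0, 5):
    y, dy = expression(alpha)
    print(f"alpha={alpha:.2f}  y(0)={y(0.0):.4f}  y'(0)={dy(0.0):.4f}  y(1)={y(1.0):.4f}")
```

For intermediate α, the three constraints are satisfied only in the weighted least-squares sense, which is what lets the solution pass continuously from the IVP to the BVP.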

Figure 5.

IVP to BVP Differential Equation parametric transformation.

5. Inequality Constraints

In some cases, it may be desired to find all possible trajectories between two functions, and , that represent upper and lower continuous bounds, respectively. This means that , . All trajectories between these two functions can be approximated by the following expression,

where represents the free function. In the cases that there are also equality constraints to be applied, the function can be replaced by Equation (1). Additionally,

is a sigmoid function with smoothing parameter k. The sigmoid function acts as a “switching function” that subtracts off the portion of that exceeds the bounds. In this equation, k is not infinite, and thus will experience some overshooting/undershooting when or that must be quantified. To analyze this, let us consider a simple case where the function is only constrained from above with and therefore only overshooting exists.

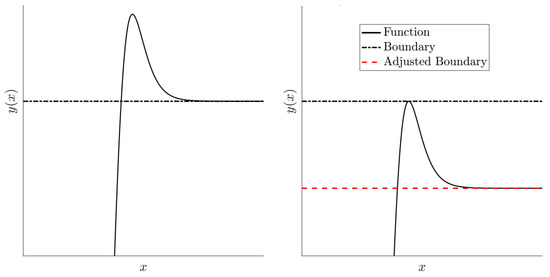

For this case, the overshooting phenomenon is shown in Figure 6.

Figure 6.

Overshoot of for the upper bound constraint. This phenomenon can be quantified and can be used to eliminate the overshooting.

Since the only free part of the equation is , the maximum value of the entire function, , will be when the derivative (. Performing this derivative, Equation (20) becomes,

which can be solved for . The resulting value, , is the value of that will cause the maximum overshoot in . This process yields,

where productlog is the product logarithm function (also-called the omega function or the Lambert W function). Substituting this result back into Equation (19), we can obtain the maximum value that can take.

Now, we use Equation (21) to solve for a modified upper bound, , such that the maximum value of is : is the adjusted boundary shown in Figure 6. This yields Equation (22).

A similar derivation can be made for the lower bound ; the solution is shown in Equation (23).

The functions and replace and in Equation (19), resulting in,

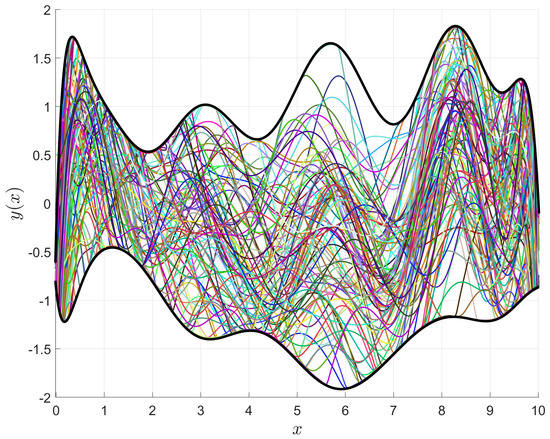

Equation (21) shows that the value of k is the only user-selected parameter that effects the difference between and , because is a constant. Increasing the value of k will decrease the difference between and , and also the difference between and . Decreasing the value of k will have the opposite effect. Equation (24) was used to generate Figure 7, which contains randomly generated inequality boundaries and functions. This figure shows that all the random functions stay within the randomly generated inequality boundaries, and thus demonstrates how this technique can be used to enforce inequality boundary constraints.
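Both the overshoot formula and the two-sided construction can be checked numerically. The sketch below is a minimal illustration, assuming the sigmoid h(a, b) = 1/(1 + e^(-k(a - b))), the expression y = g + (f_u - g) h(g, f_u) + (f_l - g) h(f_l, g), and bounds shifted inward by productlog(e^-1)/k. The helpers `lambert_w`, `h`, and `bounded`, the bound functions, and the random free functions are all hypothetical; `scipy.special.lambertw` could replace the small Newton iteration if SciPy is available. Part 1 compares the observed overshoot with productlog(e^-1)/k; part 2 checks that random free functions stay inside the original bounds when, as here, the gap between the bounds is large compared with 1/k.

```python
import numpy as np

def lambert_w(x, iters=50):
    """Principal-branch Lambert W (product logarithm) for x > 0, via Newton on w e^w = x."""
    w = np.log1p(x)
    for _ in range(iters):
        ew = np.exp(w)
        w = w - (w * ew - x) / (ew * (w + 1.0))
    return w

def h(a, b, k):
    """Sigmoid h(a, b) = 1 / (1 + exp(-k (a - b))), evaluated via tanh to avoid overflow."""
    return 0.5 * (1.0 + np.tanh(0.5 * k * (a - b)))

W1E = lambert_w(np.exp(-1.0))              # productlog(e^-1), about 0.2785

# Part 1: overshoot with an upper bound only, y = g + (f_u - g) h(g, f_u).
k1, f_u0 = 20.0, 1.0
g_sweep = np.linspace(-2.0, 4.0, 600_001)
y_sweep = g_sweep + (f_u0 - g_sweep) * h(g_sweep, f_u0, k1)
overshoot = y_sweep.max() - f_u0           # numerically observed overshoot
predicted = W1E / k1                       # productlog(e^-1) / k
g_star = g_sweep[np.argmax(y_sweep)]       # compare with f_u + (1 + W1E) / k

# Part 2: two-sided bounds, each shifted inward by W1E / k.
def bounded(g, f_u, f_l, k):
    shift = W1E / k
    fu_hat, fl_hat = f_u - shift, f_l + shift
    return g + (fu_hat - g) * h(g, fu_hat, k) + (fl_hat - g) * h(fl_hat, g, k)

x = np.linspace(0.0, 2.0 * np.pi, 2001)
f_u = 1.0 + 0.3 * np.sin(2.0 * x)          # hypothetical upper bound
f_l = -1.0 + 0.3 * np.cos(3.0 * x)         # hypothetical lower bound
rng = np.random.default_rng(0)
worst_above, worst_below = -np.inf, -np.inf
for _ in range(20):
    a = rng.normal(scale=3.0, size=4)      # random free function g(x)
    g = a[0] + a[1] * np.sin(x) + a[2] * np.cos(2.0 * x) + a[3] * x
    y = bounded(g, f_u, f_l, k=50.0)
    worst_above = max(worst_above, np.max(y - f_u))
    worst_below = max(worst_below, np.max(f_l - y))

print(f"overshoot {overshoot:.8f} vs predicted {predicted:.8f}")
print(f"max(y - f_u) = {worst_above:.3e}, max(f_l - y) = {worst_below:.3e}")
```

The tanh form is algebraically identical to the logistic sigmoid but never evaluates a huge exponential, which matters when g lies far outside the bounds.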

Figure 7.

Random constrained expressions with continuous bounds.

6. Triangular Domains

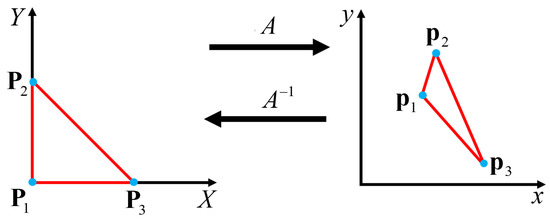

This section demonstrates how to construct a constrained expression with a free function that always meets Dirichlet boundary conditions on a triangular domain. The derivation proceeds in two steps. First, we prove that an affine transformation exists that maps the unit triangle onto any desired triangle, as shown in Figure 8. Second, we show how to build the constrained expression for the unit triangle and how to extend it, via this affine transformation, to any triangular domain.

Figure 8.

Affine Transformation from Unit Triangle to Desired Triangle.

6.1. Affine Transformation from the Unit Triangle to the Generic Triangle

Consider a unit triangle, shown on the left plot of Figure 8. This unit triangle has vertices defined by the vectors $\mathbf{P}_1 = [0, 0, 0]^{\mathsf T}$, $\mathbf{P}_2 = [1, 0, 0]^{\mathsf T}$, and $\mathbf{P}_3 = [0, 1, 0]^{\mathsf T}$.

This subsection presents an affine transformation that maps the coordinates of the unit triangle, defined in the capital frame, into the coordinates of the desired triangle in the regular frame.

Let the three vectors $\mathbf{P}_k$, where $k = 1, 2, 3$, be the position vectors of the vertices of the unit triangle in the capital frame. Similarly, let $\mathbf{p}_k$, where $k = 1, 2, 3$, be the position vectors of the vertices of the desired triangle in the regular frame. Then, the affine coordinate transformation given in Equation (25) maps the unit triangle in the capital frame onto the desired triangle in the regular frame,

$$ \mathbf{x} = A\,\mathbf{X} + \mathbf{p}_1, \qquad A = \big[\,\mathbf{p}_2 - \mathbf{p}_1, \;\; \mathbf{p}_3 - \mathbf{p}_1, \;\; (\mathbf{p}_2 - \mathbf{p}_1) \times (\mathbf{p}_3 - \mathbf{p}_1)\,\big]. \tag{25} $$

The following identities prove the mapping provided by Equation (25),

$$ A\,\mathbf{P}_1 + \mathbf{p}_1 = \mathbf{p}_1, \qquad A\,\mathbf{P}_2 + \mathbf{p}_1 = \mathbf{p}_2, \qquad A\,\mathbf{P}_3 + \mathbf{p}_1 = \mathbf{p}_3. $$

The inverse transformation is also of interest, and can be written as,

$$ \mathbf{X} = A^{-1}(\mathbf{x} - \mathbf{p}_1). \tag{26} $$

The inverse transformation always exists if the triangle is non-degenerate. The first column of A is the vector that points from $\mathbf{p}_1$ to $\mathbf{p}_2$, and the second column is the vector that points from $\mathbf{p}_1$ to $\mathbf{p}_3$. For a non-degenerate triangle, these two vectors are never parallel. The third column of A is defined as the cross product of the first two columns and is therefore orthogonal to both. Hence, the columns of A are linearly independent, A is invertible, and the inverse transformation always exists.
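A quick numerical check of the affine map, assuming it takes the form x = A X + p_1, with the columns of A as described above and the unit-triangle vertices (0, 0, 0), (1, 0, 0), and (0, 1, 0). The triangle vertices below are made-up values, and `affine_from_unit` is a hypothetical helper name.

```python
import numpy as np

def affine_from_unit(p1, p2, p3):
    """A = [p2 - p1, p3 - p1, (p2 - p1) x (p3 - p1)], so that x = A X + p1 maps
    the unit triangle (0,0,0), (1,0,0), (0,1,0) onto the triangle p1, p2, p3."""
    e1, e2 = p2 - p1, p3 - p1
    return np.column_stack([e1, e2, np.cross(e1, e2)])

# Hypothetical (non-degenerate) target triangle.
p1 = np.array([1.0, 2.0, 0.0])
p2 = np.array([4.0, 1.0, 0.5])
p3 = np.array([2.0, 5.0, -1.0])
A = affine_from_unit(p1, p2, p3)

unit = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
mapped = (A @ unit.T).T + p1                       # forward map x = A X + p1
back = np.linalg.solve(A, (mapped - p1).T).T       # inverse map X = A^{-1}(x - p1)

# The centroid of the unit triangle should land on the centroid of the target triangle.
centroid = A @ np.array([1.0 / 3.0, 1.0 / 3.0, 0.0]) + p1
print(mapped, np.linalg.det(A), centroid)
```

Because the map is affine, interior points and straight edges of the unit triangle map to interior points and straight edges of the target triangle, which is what allows a constrained expression built on the unit triangle to be transported to any triangle.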

6.2. Coons-Type Surface on the Unit Triangle

Consider unit triangle with boundary constraints provided by the boundary functions, , when , and . For this unit triangle, the following Coons-type surface can be constructed,

From Equation (27) one can show that

Let’s write Equation (27) in the following compact form,

where is a function of the boundary constraints, , , and .

6.3. ToC Surfaces on the Unit Triangle

Equation (28) can be specified for any surface, . This means that we can write the identity,

where , or , and . Therefore, ToC allows us to write the constrained expression representing all surfaces satisfying the boundary conditions on the unit triangle as,

where is a free function. The constrained expression given in Equation (29) can be written explicitly as,

6.4. ToC Surfaces on the Generic Triangle

The ToC surfaces on the generic triangle can be derived using Equation (29) and the affine mapping given in Equation (26). Setting, , Equation (29) can be rewritten as

Setting, , the affine transformation can be written as

and the ToC Surfaces on the Generic Triangle can be written as,

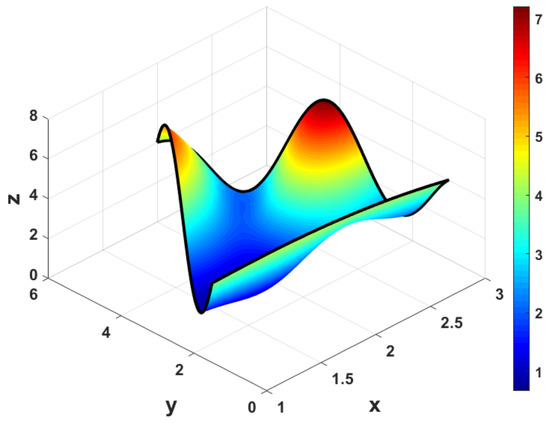

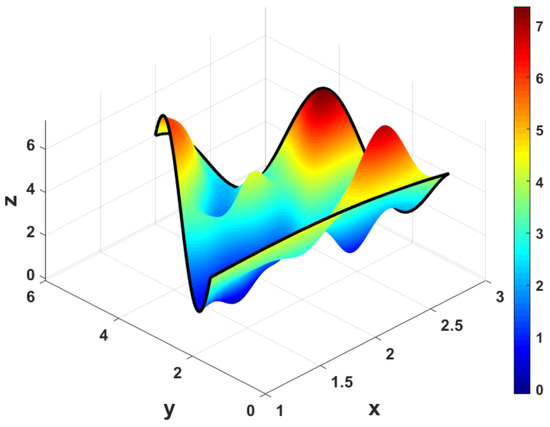

Figure 9 and Figure 10 show two ToC surfaces for a generic triangle with the same boundary conditions but different free functions, . The boundary conditions used to create these figures were,

and the vertices of the triangle were , , and .

Figure 9.

ToC Triangular Surface with .

Figure 10.

ToC Triangular Surface with .

7. Conclusions

This work demonstrated some of the applications of ToC as a unified framework. The first application showed how to solve QP problems subject to equality constraints without the use of Lagrange multipliers. Using ToC, the equality constraints are embedded into a constrained expression that transforms the problem into an unconstrained optimization problem. The second application showed how to solve one of the most famous calculus of variations problems, the brachistochrone problem, with ToC. The calculus of variations was used to derive a highly non-linear differential equation in polar coordinates, a formulation that avoids the singularities of the Cartesian formulations, and this equation was solved via ToC. The third application explored solving over-constrained differential equations, where there are more constraints than the order of the differential equation. Using ToC with weighted least-squares, we transform the problem into an unconstrained problem, which can easily be solved. The fourth application showed how to extend ToC to incorporate inequality constraints by using sigmoid functions, including a discussion on avoiding undershoot/overshoot. The fifth and final application showed how to write constrained expressions for triangular domains subject to Dirichlet boundary conditions.

Future work will investigate how some of these applications can be extended to solve optimal control problems. The QP problem subject to linear equality constraints and the brachistochrone problem represent the first research thrust in that direction, as these are classic problems in the field of optimal control. Moreover, future work will investigate how these applications can be combined with the inequality-constraint approach to solve optimal control problems subject to inequality constraints.

Furthermore, future work will investigate applications of over-constrained differential equations. One example is applications in orbit determination where measurements exceed the order of the differential equation governing the dynamics of the orbiting body. By using the approach developed for weighted least-squares solutions of differential equations, the dynamics of the body and variance of observational measurements can be combined into a single optimization problem. Another area of future work includes multi-objective optimization problems.

Additionally, future research will look to extend constrained expressions on triangular domains to include constraints on the derivatives normal to the sides of the triangle. The obvious application of this research extension will be in solving differential equations on triangular domains; however, this research extension also has applications in meshing, domain discretization, and visualization.

Author Contributions

Conceptualization, H.J., C.L., Y.E. and D.M.; Software, H.J. and C.L.; Supervision, Y.E. and D.M.; Writing original draft, H.J. and C.L.; Writing review & editing, H.J.

Funding

This work was supported by the NASA Space Technology Research Fellowship (NSTRF): Hunter Johnston (NSTRF 2019), Carl Leake (NSTRF 2019).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| BVP | boundary-value problem |
| DE | differential equation |
| IVP | initial value problem |
| ODE | ordinary differential equation |
| PDE | partial differential equation |
| QP | quadratic programming |
| ToC | Theory of Connections |

References

- Mortari, D. The Theory of Connections: Connecting Points. Mathematics 2017, 5, 57.

- Mortari, D.; Leake, C. The Multivariate Theory of Connections. Mathematics 2019, 7, 296.

- Mortari, D.; Johnston, H.; Smith, L. High accuracy least-squares solutions of nonlinear differential equations. J. Comput. Appl. Math. 2019, 352, 293–307.

- Mortari, D. Least-squares Solution of Linear Differential Equations. Mathematics 2017, 5, 48.

- Gottlieb, D.; Orszag, S.A. Numerical Analysis of Spectral Methods: Theory and Applications; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1977.

- Jain, M.K. Numerical Solution of Differential Equations; Wiley Eastern: New Delhi, India, 1979.

- Driscoll, T.A.; Hale, N. Rectangular spectral collocation. IMA J. Numer. Anal. 2016, 36, 108–132.

- Lanczos, C. Applied Analysis; Dover Publications, Inc.: New York, NY, USA, 1957; Chapter 7; p. 504.

- Wright, K. Chebyshev Collocation Methods for Ordinary Differential Equations. Comput. J. 1964, 6, 358–365.

- Leake, C.; Johnston, H.; Smith, L.; Mortari, D. Approximating Ordinary and Partial Differential Equations Using the Theory of Connections and Least Squares Support Vector Machines. Trans. Neural Netw. Learn. Syst. 2019, submitted.

- Hurtado, J.E. Paul Bunyan’s Brachistochrone and Tautochrone. J. Astronaut. Sci. 2000, 48, 207–224.

- Bate, R.R.; Mueller, D.D.; White, J.E. Fundamentals of Astrodynamics; Dover Publications, Inc.: New York, NY, USA, 1971; Chapter 2.

- Miettinen, K. Nonlinear Multiobjective Optimization. In International Series in Operations Research & Management Science; Springer: New York, NY, USA, 1999.

- Shirazi, A.; Najafi, B.; Aminyavari, M.; Rinaldi, F.; Taylor, R.A. Thermal economic environmental analysis and multi-objective optimization of an ice thermal energy storage system for gas turbine cycle inlet air cooling. Energy 2014, 69, 212–226.

- Ellefsen, K.; Lepikson, H.; Albiez, J. Multiobjective coverage path planning: Enabling automated inspection of complex, real-world structures. Appl. Soft Comput. 2017, 61, 264–282.

- Lewis, F.L.; Vrabie, D.L.; Syrmos, V.L. Optimal Control; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2012.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).