Abstract

An algorithm is introduced for finding a common solution of inclusion problems and fixed point problems. The algorithm is a modification of Tseng-type methods inspired by Mann-type iteration and viscosity approximation methods. Under certain conditions, the sequence generated by the algorithm converges strongly. Applications to the convex feasibility problem and to signal recovery in compressed sensing are considered. Numerical experiments with the algorithm are reported and compared with those of a previous algorithm.

1. Introduction

Inclusion problems and fixed point problems have attracted the attention of many researchers because they can be applied to several other problems. For instance, they are applicable to convex programming, the minimization problem, variational inequalities, and the split feasibility problem. As a result, applications such as machine learning, signal recovery, image restoration, sensor networks in computerized tomography and data compression, and intensity-modulated radiation therapy treatment planning can be taken into consideration; see [1,2,3,4,5,6,7,8,9].

First, we fix the notation used throughout the paper. Suppose that H is a real Hilbert space. Let S and A be self-maps on H, and let $B : H \to 2^H$ be a multi-valued operator. A point $x \in H$ is said to be a fixed point of S if $Sx = x$. Denote the set of all such x by $F(S)$. The fixed point problem for the operator S is the problem of seeking a fixed point of S, so its solution set is $F(S)$. Next, the inclusion problem for the operators A and B is the problem of searching for a point $x \in H$ with $0 \in (A + B)x$. Let $(A + B)^{-1}(0)$ denote the solution set of this problem. Finally, the inclusion and fixed point problem is the combination of these two problems, that is, the problem of looking for a point

$$x \in F(S) \cap (A + B)^{-1}(0).$$

Thus, the solution set for this problem is $F(S) \cap (A + B)^{-1}(0)$.

In the literature, a number of tools have been used to investigate inclusion problems; see [7,10,11,12,13]. One of the most popular methods, the forward–backward splitting method, was suggested by Lions and Mercier [14], and Passty [15]. In 1997, Chen and Rockafellar [16] studied this method and obtained a weak convergence theorem. To show that the forward–backward splitting method converges weakly, additional hypotheses on A and B have to be assumed. Later, Tseng [17] improved this method by weakening the assumptions on A and B needed for weak convergence. The resulting method is called the modified forward–backward splitting method, or Tseng’s splitting algorithm. Recently, Gibali and Thong [6] studied another modification in which the step size rule was changed according to Mann and viscosity ideas. This new method converges strongly under suitable assumptions and is convenient in practice.
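To make the difference between the two splitting schemes concrete, the following minimal sketch (an illustration, not taken from [14,15,17]) iterates a forward–backward step and a Tseng step for the rotation operator A(x1, x2) = (x2, −x1) on the plane, with B = 0 so that the resolvent is the identity. This A is monotone and 1-Lipschitz continuous but not cocoercive. The function names, the starting point, and the step size 0.5 are assumptions chosen for illustration.

```python
import math

def A(x):
    """Rotation operator: monotone and 1-Lipschitz, but not cocoercive."""
    return (x[1], -x[0])

def forward_backward_step(x, lam):
    # With B = 0 the resolvent is the identity, so the step is x - lam * A(x).
    ax = A(x)
    return (x[0] - lam * ax[0], x[1] - lam * ax[1])

def tseng_step(x, lam):
    # Forward-backward step followed by the correction y - lam * (A(y) - A(x)).
    ax = A(x)
    y = (x[0] - lam * ax[0], x[1] - lam * ax[1])
    ay = A(y)
    return (y[0] - lam * (ay[0] - ax[0]), y[1] - lam * (ay[1] - ax[1]))

def run(step, x0, lam, iterations):
    """Apply the given step repeatedly and return the norm of the last iterate."""
    x = x0
    for _ in range(iterations):
        x = step(x, lam)
    return math.hypot(x[0], x[1])

fb_norm = run(forward_backward_step, (1.0, 0.0), 0.5, 200)
tseng_norm = run(tseng_step, (1.0, 0.0), 0.5, 200)
```

In this example the forward–backward iterates grow in norm, while the Tseng iterates shrink toward the origin, which is the unique zero of A.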

In addition, there have been numerous studies on the inclusion and fixed point problems, and methods for approximating a solution have been developed. Zhang and Jiang [18] suggested a hybrid algorithm for the inclusion and fixed point problems for some operators. A few years later, Thong and Vinh [19] investigated another method relying on the inertial forward–backward splitting algorithm, the Mann algorithm, and the viscosity method. More recently, Cholamjiak, Kesornprom, and Pholasa [20] introduced a method for solving the inclusion problem of two monotone operators and the fixed point problem of nonexpansive mappings.

Inspired by the preceding research, this work suggests another algorithm for solving the inclusion and fixed point problems. The study focuses on the inclusion problem for A and B, where A is a Lipschitz continuous and monotone operator and B is a maximal monotone operator, and on the fixed point problem for S, where S is nonexpansive. The main result guarantees that the algorithm converges strongly under appropriate assumptions. Furthermore, numerical experiments with the proposed algorithm are provided to illustrate the results.

To begin, some definitions and known results needed to support our work are given in the next section. Then the new algorithm is given along with essential conditions in Section 3. The proof that the iteration constructed by this algorithm converges strongly is provided in detail. Next, applications and numerical results to the convex feasibility problem and the signal recovery in compressed sensing are discussed to show the efficiency of the algorithm in Section 4. Lastly, this work is summarized in the Conclusion Section.

2. Mathematical Preliminaries

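In what follows, H denotes a real Hilbert space. Let C be a nonempty, closed and convex subset of H. Define the metric projection $P_C$ from H onto C by

$$P_C x = \arg\min_{y \in C} \|x - y\|.$$

It is well known that $P_C x$ is characterized by

$$\langle x - P_C x,\, y - P_C x \rangle \le 0 \tag{1}$$

for any $x \in H$ and $y \in C$.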
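As a small illustration of the metric projection, the sketch below implements the closed-form projections onto a half-space {y : ⟨a, y⟩ ≤ b} and onto a closed ball in Euclidean space, two sets of the kind used later in Example 1, and evaluates the inner product that appears in the variational characterization of the projection. The helper names and the sample data are hypothetical.

```python
import math

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def project_halfspace(x, a, b):
    """Project x onto {y : <a, y> <= b}, assuming a is a nonzero vector."""
    violation = dot(a, x) - b
    if violation <= 0:
        return list(x)
    t = violation / dot(a, a)
    return [xi - t * ai for xi, ai in zip(x, a)]

def project_ball(x, center, radius):
    """Project x onto the closed ball with the given center and radius."""
    d = [xi - ci for xi, ci in zip(x, center)]
    dist = math.sqrt(dot(d, d))
    if dist <= radius:
        return list(x)
    return [ci + radius * di / dist for ci, di in zip(center, d)]

def characterization_gap(x, px, y):
    """Return <x - px, y - px>; it is <= 0 when px = P_C(x) and y lies in C."""
    return dot([xi - pi for xi, pi in zip(x, px)],
               [yi - pi for yi, pi in zip(y, px)])

x = [3.0, 4.0]
p_half = project_halfspace(x, a=[1.0, 1.0], b=2.0)
p_ball = project_ball(x, center=[0.0, 0.0], radius=1.0)
```

For these inputs the origin belongs to both sets, so `characterization_gap(x, p_half, [0.0, 0.0])` and `characterization_gap(x, p_ball, [0.0, 0.0])` are both nonpositive, as the characterization requires.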

Now assume that . Then the following equalities and inequality are valid for inner product spaces,

for any such that .

To obtain the desired results, the following definitions are needed.

Definition 1.

A self-map S on H is called

- (i) firmly nonexpansive if for each $x, y \in H$, $\|Sx - Sy\|^2 \le \langle Sx - Sy, x - y \rangle$;

- (ii) β-cocoercive (or β-inverse strongly monotone) if there is $\beta > 0$ satisfying, for each $x, y \in H$, $\langle Sx - Sy, x - y \rangle \ge \beta \|Sx - Sy\|^2$;

- (iii) L-Lipschitz continuous if there is $L > 0$ satisfying, for each $x, y \in H$, $\|Sx - Sy\| \le L \|x - y\|$;

- (iv) nonexpansive if S is L-Lipschitz continuous with $L = 1$;

- (v) L-contraction if S is L-Lipschitz continuous with $L < 1$.

According to these definitions, every β-cocoercive mapping is monotone and $\frac{1}{\beta}$-Lipschitz continuous.
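The last observation follows from a short argument, sketched here for a β-cocoercive self-map S with the defining inequality written in its standard form $\langle Sx - Sy, x - y \rangle \ge \beta \|Sx - Sy\|^2$ (this form is assumed here):

```latex
\begin{align*}
\langle Sx - Sy,\, x - y \rangle &\ge \beta \|Sx - Sy\|^2 \ge 0
  && \text{(monotonicity)},\\
\beta \|Sx - Sy\|^2 &\le \langle Sx - Sy,\, x - y \rangle
  \le \|Sx - Sy\| \, \|x - y\|
  && \text{(Cauchy--Schwarz)}.
\end{align*}
```

Dividing the second line by $\beta \|Sx - Sy\|$ when $Sx \ne Sy$ gives $\|Sx - Sy\| \le \frac{1}{\beta} \|x - y\|$, and the case $Sx = Sy$ is trivial.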

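Definition 2.

Assume that $S : H \to 2^H$ is a multi-valued operator. The set

$$G(S) = \{(x, u) \in H \times H : u \in Sx\}$$

is called the graph of S.

Definition 3.

An operator $S : H \to 2^H$ is called

- (i) monotone if for all $(x, u), (y, v) \in G(S)$, $\langle u - v, x - y \rangle \ge 0$;

- (ii) maximal monotone if it is monotone and there is no proper monotone extension of $G(S)$.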

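Definition 4.

Let $S : H \to 2^H$ be maximal monotone. For each $\lambda > 0$, the operator

$$J_\lambda^S = (I + \lambda S)^{-1}$$

is called the resolvent operator of S.

It is well known that if $S : H \to 2^H$ is a multi-valued maximal monotone operator and $\lambda > 0$, then $J_\lambda^S$ is a single-valued firmly nonexpansive mapping, and $S^{-1}(0)$ is a closed convex set.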
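For a concrete instance, consider the subdifferential of the absolute value on the real line, which is a maximal monotone operator. Its resolvent has the familiar closed form known as soft-thresholding. The sketch below (function names are illustrative) evaluates it and spot-checks the firm nonexpansiveness inequality $|Jx - Jy|^2 \le (Jx - Jy)(x - y)$ on a few sample points.

```python
def resolvent_abs(x, lam):
    """Resolvent (I + lam * d|.|)^{-1} on the real line: soft-thresholding."""
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0

def is_firmly_nonexpansive_on(points, lam, tol=1e-12):
    """Check |Jx - Jy|^2 <= (Jx - Jy) * (x - y) for all sample pairs."""
    for x in points:
        for y in points:
            jx, jy = resolvent_abs(x, lam), resolvent_abs(y, lam)
            if (jx - jy) ** 2 > (jx - jy) * (x - y) + tol:
                return False
    return True

samples = [-3.0, -1.0, -0.2, 0.0, 0.4, 1.0, 2.5]
firm = is_firmly_nonexpansive_on(samples, lam=1.0)
```

Here the value u = `resolvent_abs(x, lam)` satisfies x − u ∈ lam·∂|u|, and the zero set of the operator is {0}, which is closed and convex.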

Now, to accomplish the purpose of this work, some crucial lemmas are needed as follows. Here, the notations ⇀ and → represent weak and strong convergence, respectively.

Lemma 1.

[21] If $B : H \to 2^H$ is maximal monotone and $A : H \to H$ is Lipschitz continuous and monotone, then $A + B$ is maximal monotone.

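Lemma 2.

[22] Let C be a closed convex subset of H, and $S : C \to C$ a nonexpansive mapping with a nonempty fixed point set $F(S)$. If there exists a sequence $\{x_n\}$ in C satisfying $x_n \rightharpoonup z$ and $x_n - Sx_n \to 0$, then $z = Sz$.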

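Lemma 3.

[23] Assume that $\{a_n\}$ and $\{c_n\}$ are nonnegative sequences of real numbers such that $\sum_{n=1}^{\infty} c_n < \infty$, and $\{b_n\}$ is a sequence of real numbers with $\limsup_{n \to \infty} b_n \le 0$. Let $\{\delta_n\}$ be a sequence in $(0, 1)$ with $\sum_{n=1}^{\infty} \delta_n = \infty$. If there exists $n_0 \in \mathbb{N}$ such that for any $n \ge n_0$,

$$a_{n+1} \le (1 - \delta_n) a_n + \delta_n b_n + c_n,$$

then $\lim_{n \to \infty} a_n = 0$.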

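Lemma 4.

[24] Assume that $\{a_n\}$ is a sequence of real numbers. Suppose that there is a subsequence $\{a_{n_i}\}$ of $\{a_n\}$ satisfying $a_{n_i} < a_{n_i + 1}$ for each $i \in \mathbb{N}$. Let $\{m_k\}$ be a sequence of integers defined by

$$m_k = \max\{j \le k : a_j < a_{j+1}\}. \tag{5}$$

Then $\{m_k\}$ is a nondecreasing sequence with $\lim_{k \to \infty} m_k = \infty$. Moreover, for each sufficiently large k, we have $a_{m_k} \le a_{m_k + 1}$ and $a_k \le a_{m_k + 1}$.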
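The index sequence in Lemma 4 can be computed directly for a finite list of numbers. The following sketch assumes the definition $m_k = \max\{j \le k : a_j < a_{j+1}\}$ from [24] and uses 0-based indices; the function name and the sample data are made up for illustration.

```python
def last_increase_index(a, k):
    """Return max{j <= k : a[j] < a[j+1]}, or None if no such j exists."""
    best = None
    # j + 1 must be a valid index, so j ranges up to min(k, len(a) - 2).
    for j in range(min(k, len(a) - 2) + 1):
        if a[j] < a[j + 1]:
            best = j
    return best

a = [3, 1, 2, 5, 4, 4, 6, 2]
m = [last_increase_index(a, k) for k in range(len(a))]
```

For this list the index sequence is nondecreasing once it is defined, and every term `a[k]` with k ≥ 1 is bounded above by `a[m[k] + 1]`, which is the property the convergence proof relies on.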

3. Convergence Analysis

To find a solution to the inclusion and fixed point problems, a new algorithm is introduced. The convergence of the sequence obtained from the algorithm is proved in Theorem 1. First, some assumptions are required. In particular, the following Conditions 1 through 4 are assumed throughout this section. Here, denote the solution set by $\Omega = F(S) \cap (A + B)^{-1}(0)$.

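- Condition 1. The solution set Ω is nonempty.

- Condition 2. A is L-Lipschitz continuous and monotone, and B is maximal monotone.

- Condition 3. S is nonexpansive, and $f : H \to H$ is ρ-Lipschitz continuous, where $\rho \in [0, 1)$.

- Condition 4. $\{\alpha_n\}$ and $\{\beta_n\}$ are sequences in $(0, 1)$ such that $\lim_{n \to \infty} \alpha_n = 0$ and $\sum_{n=1}^{\infty} \alpha_n = \infty$, and there exist positive real numbers a and b with $a < \beta_n < b < 1 - \alpha_n$ for each $n \in \mathbb{N}$.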

Second, the following algorithm is constructed for solving the inclusion and fixed point problems. It is inspired by the algorithm of Tseng for monotone variational inclusion problems, the iterative method of Mann [25], and the viscosity approximation scheme of Moudafi [26] for fixed point problems.

Algorithm 1. An iterative algorithm for solving inclusion and fixed point problems.

Initialization: Let and . Assume .

Iterative Steps: Obtain the iteration as follows:

With this algorithm, the following lemmas can be achieved in the same manner as in [6].
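Since the algorithm combines a Tseng step with Mann and viscosity ideas, a rough sketch of one such scheme may help fix ideas. The update used below, $x_{n+1} = \alpha_n f(x_n) + (1 - \alpha_n - \beta_n) x_n + \beta_n S z_n$ with the self-adaptive step size $\lambda_{n+1} = \min\{\mu \|x_n - y_n\| / \|Ax_n - Ay_n\|, \lambda_n\}$, is an assumption modeled on the Tseng-type methods of [6]; it is not claimed to reproduce Algorithm 1 exactly. The test problem, the parameter sequences, and all function names are hypothetical.

```python
import math

def norm(v):
    return math.sqrt(sum(vi * vi for vi in v))

def tseng_viscosity(A, resolvent_B, S, f, x, lam=0.5, mu=0.5, beta=0.5,
                    iterations=2000):
    """Assumed Tseng-type iteration with a Mann/viscosity averaging step."""
    for n in range(1, iterations + 1):
        alpha = 1.0 / (n + 2)  # alpha_n -> 0 and sum of alpha_n diverges
        ax = A(x)
        # Forward-backward step: y = J_lam^B (x - lam * A x).
        y = resolvent_B([xi - lam * ai for xi, ai in zip(x, ax)], lam)
        ay = A(y)
        # Tseng correction: z = y - lam * (A y - A x).
        z = [yi - lam * (ayi - axi) for yi, ayi, axi in zip(y, ay, ax)]
        sz, fx = S(z), f(x)
        x_next = [alpha * fi + (1 - alpha - beta) * xi + beta * si
                  for fi, xi, si in zip(fx, x, sz)]
        # Self-adaptive step size; lam is kept when A x equals A y.
        diff_a = norm([u - v for u, v in zip(ax, ay)])
        if diff_a > 0:
            lam = min(mu * norm([u - v for u, v in zip(x, y)]) / diff_a, lam)
        x = x_next
    return x

# Hypothetical test problem on the plane: A(x) = x - c, B is the normal cone
# of the nonnegative orthant (its resolvent is the projection), S = identity.
c = [1.0, -2.0]
A = lambda x: [xi - ci for xi, ci in zip(x, c)]
resolvent_B = lambda x, lam: [max(xi, 0.0) for xi in x]
S = lambda x: list(x)
f = lambda x: [0.5 * xi for xi in x]  # a contraction with constant 0.5

solution = tseng_viscosity(A, resolvent_B, S, f, x=[5.0, 5.0])
```

For this toy problem the unique zero of A + B is the projection of c onto the nonnegative orthant, namely (1, 0). The iterates approach it slowly because the viscosity weight decays like 1/n.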

Lemma 5.

[6] As given in the algorithm together with all four conditions assumed, is a convergent sequence with a lower bound of .

Lemma 6.

[6] As given in the algorithm together with all four conditions assumed, the following inequalities are true. For any ,

and

We are almost ready to show the strong convergence theorem for our algorithm. The remaining fact needed for the theorem is stated and verified below.

Lemma 7.

As given in the algorithm together with all four conditions assumed, suppose that

If there is a weakly convergent subsequence of , then the limit of belongs to the solution set Ω.

Proof.

Let such that . From , by similar proof as Lemma 7 in [6], we have Since and , it follows that . This together with , by Lemma 2, . Therefore, . □

Finally, the main theorem is presented and proved as follows.

Theorem 1.

Assume that Conditions 1–4 are valid. Let be a sequence obtained from the algorithm with some initial point , and . Then , where .

Proof.

Since , one can find such that for each ,

Consequently, by Equation (7), for any ,

Next, we prove the following claims.

Claim 1. and are bounded sequences.

Since Condition 3 and the inequality Equation (10) hold, the following relation is obtained for each :

Therefore, for any . Consequently, is bounded, and so are and . In addition, is also bounded because f is a contraction. Thus, we have Claim 1.

Now for each , set .

Claim 2. For any ,

Using Equation (4), we get

Applying Equation (7), we have

Therefore, Claim 2 is obtained as follows:

Moreover, we show that the inequality Equation (12) below is true.

Claim 3. For each ,

Indeed, setting . By Condition 3, we get

for , and

Recall that our task is to show that , which is now equivalent to showing that .

Claim 4. .

Consider the following two cases.

Case a. We can find satisfying for each .

Since each term is nonnegative, it is convergent. Further, notice that the argument in Condition 4 implies that and . Due to the fact that , according to Claim 2,

From Equation (8), we immediately get

In addition, by using the triangle inequality, the following inequalities are obtained:

Obviously, . Note that for each ,

Consequently, by Equation (15) and Condition 4, . Next, for the reason that is bounded, there is so that for some subsequence of . Then Lemma 7 implies that As a result, by the definition of p and Equation (1), it is straightforward to show that

Consider that the following result is obtained because .

Applying Lemma 3 to the inequality from Claim 3 with , , , and , as a consequence, .

Case b. We can find satisfying and for all .

According to Lemma 4, the inequality is obtained, where is as in Equation (5), and for some . This implies, by Claim 2, for each , as follows.

As in Case a, since , we obtain

and furthermore,

Finally, by Claim 3, for all , the following inequalities hold.

Some simple calculations yield

It follows that . Thus, . In addition, by Lemma 4,

Hence, converges strongly to p. □

4. Applications and Numerical Results

The inclusion and fixed point problems are applicable to many other problems, so several applications can be considered. In particular, the algorithm constructed in Section 3 is used for the convex feasibility problem and for signal recovery in compressed sensing. For each problem, the numerical results of the algorithm are shown together with a comparison to those of Algorithm 3.1 of Gibali and Thong [6]. All numerical experiments were run in Matlab R2015b on the same laptop computer.

Example 1.

Assume that . For , let be an inner product on H, and, as a consequence, let be the induced norm on H. Define the half-space

where . Suppose that Q is the closed ball of radius 4 centered at . That is,

Next, given the mappings such that and for , define and , the subdifferential of indicator function on C. Then the convex feasibility problem is a problem of finding a point

Clearly, A is L-Lipschitz continuous, where , and B is maximal monotone, see [27]. For each , choose and , and assume that and . The stopping criterion is set to be when . To solve this problem, we apply our algorithm, and, additionally, Algorithm 3.1 [6] to the problem. Then the numerical experiments are presented in Table 1.

Table 1.

Numerical experiments of Example 1.

Accordingly, with an appropriate choice of the function f, the new algorithm yields better results than Algorithm 3.1 [6]: it requires less elapsed time and fewer iterations. This difference occurs because the new algorithm contains the mappings f and S, which allow more flexibility in the choice of f and S. In fact, Algorithm 3.1 [6] is a special case of our algorithm.

Example 2.

Recall that signal recovery in compressed sensing can be expressed by the following mathematical model.

where is the original signal, is the observed data, is a bounded linear operator, and is the Gaussian noise distributed as for such that . Solving the linear equation system of Equation (23) has been known to be equivalent to solving the convex unconstrained optimization problem:

where is a parameter. Next, let be the gradient of g and the subdifferential of h, where and . Then is -cocoercive, and is nonexpansive for any , see [28]. Moreover, is maximal monotone, see [13]. Additionally, by Proposition 3.1 (iii) of [4], x is a solution to Equation (24) ⇔ ⇔ for anywhere .
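The equivalence between minimizers and fixed points of the forward–backward map can be checked by hand in the simplest case. The sketch below assumes the usual LASSO choice $g(x) = \frac{1}{2}\|Tx - y\|_2^2$ and $h(x) = \lambda \|x\|_1$ (an assumption, since the displayed formulas are not reproduced here) with T equal to the identity, in which case the minimizer is the componentwise soft-thresholding of y. All names and data are illustrative.

```python
def soft_threshold(v, t):
    """Componentwise proximal map of t * ||.||_1 (resolvent of its subdifferential)."""
    return [max(abs(vi) - t, 0.0) * (1 if vi > 0 else -1) for vi in v]

def forward_backward_map(x, y, lam, c):
    """x -> J_c^B (x - c * A x), with A x = x - y (T = identity), B = d(lam * ||.||_1)."""
    forward = [xi - c * (xi - yi) for xi, yi in zip(x, y)]
    return soft_threshold(forward, c * lam)

y = [3.0, -0.5, 1.25, -2.0]
lam = 1.0
minimizer = soft_threshold(y, lam)  # closed-form solution when T is the identity

# Distance between the minimizer and its image under the map, for several c.
residuals = [
    max(abs(u - v)
        for u, v in zip(forward_backward_map(minimizer, y, lam, c), minimizer))
    for c in (0.25, 0.5, 1.0)
]
```

In this special case the residuals vanish for every tested value of c, which matches the statement that solutions of the optimization problem are exactly the fixed points of the forward–backward map.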

In this experiment, the size of signal is selected to be , and , where the original signal contains m nonzero elements. Let T be the Gaussian matrix generated by command . Choose and . Assume the same , , , and as in the preceding example. Given that the initial point is chosen to be , use the mean-squared error to measure the restoration accuracy. Precisely, , where x is the original signal. Then the results are displayed in Table 2. In other words, the number of iterations and elapsed time of each algorithm are provided for different numbers of nonzero elements. The numerical results show that our algorithm has a better elapsed time and number of iterations than Algorithm 3.1 [6].
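A setup of this kind can be sketched as follows. The dimensions, sparsity level, noise level, and seed below are placeholders rather than the values used in the experiments, and the error measure is written as $\frac{1}{N}\|x_n - x\|^2$, which is one common form of the mean-squared error and is assumed here. The sketch uses only the Python standard library and is not the Matlab code behind the reported results.

```python
import random

def make_sparse_signal(n, m, rng):
    """Length-n signal with m nonzero entries (+1 or -1) at random positions."""
    x = [0.0] * n
    for i in rng.sample(range(n), m):
        x[i] = rng.choice([-1.0, 1.0])
    return x

def gaussian_matrix(rows, cols, rng):
    """Matrix with independent standard normal entries."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

def observe(T, x, sigma, rng):
    """Noisy measurement y = T x + eps, with eps ~ N(0, sigma^2) per entry."""
    return [sum(t * xi for t, xi in zip(row, x)) + rng.gauss(0.0, sigma)
            for row in T]

def mse(estimate, original):
    """Mean-squared error (1/N) * ||estimate - original||^2."""
    return sum((e - o) ** 2 for e, o in zip(estimate, original)) / len(original)

rng = random.Random(0)    # fixed seed for reproducibility
N, M, m = 64, 32, 5       # placeholder sizes, not the values from the experiments
x_true = make_sparse_signal(N, m, rng)
T = gaussian_matrix(M, N, rng)
y = observe(T, x_true, sigma=0.01, rng=rng)
```

A recovery algorithm would then be run on `T` and `y`, stopping once `mse(x_n, x_true)` falls below a chosen tolerance.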

Table 2.

Numerical comparison between Algorithm 3.1 [6] and the new algorithm for .

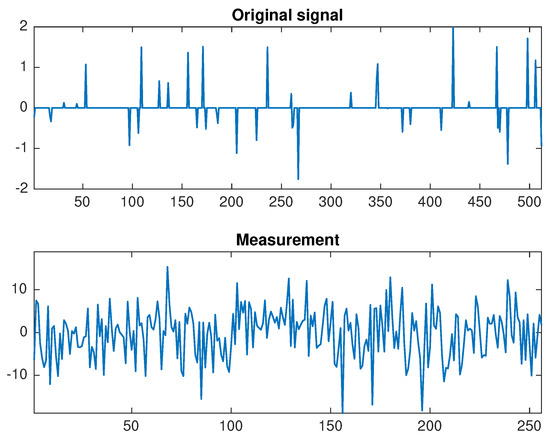

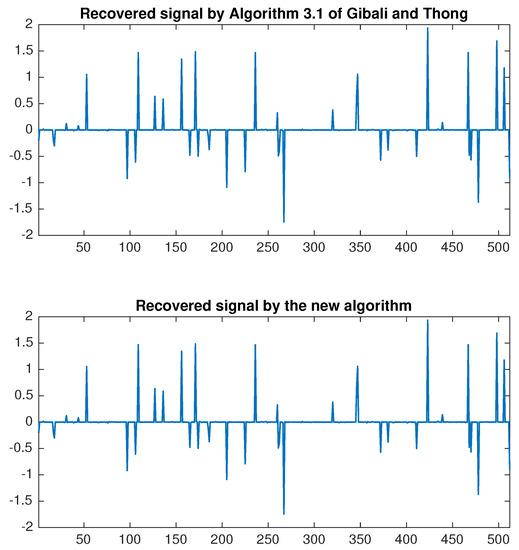

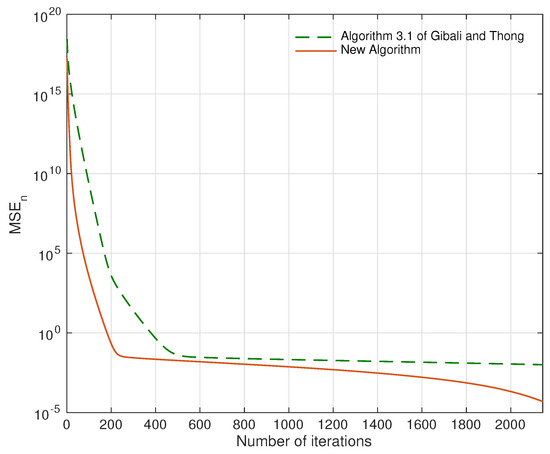

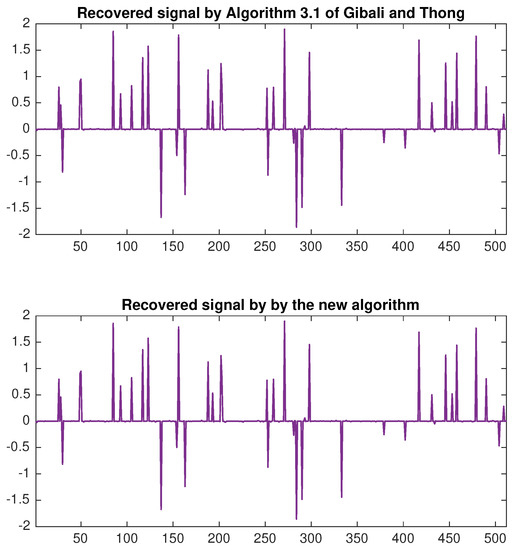

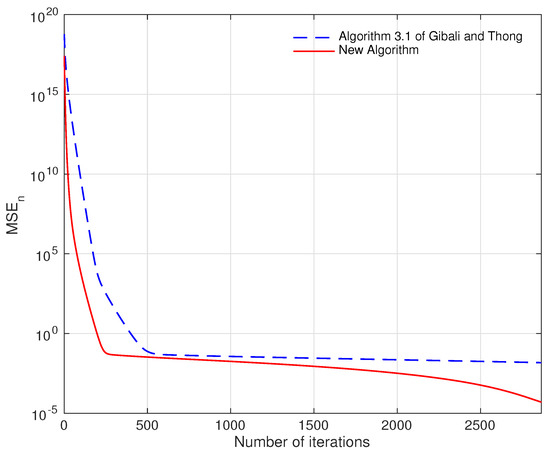

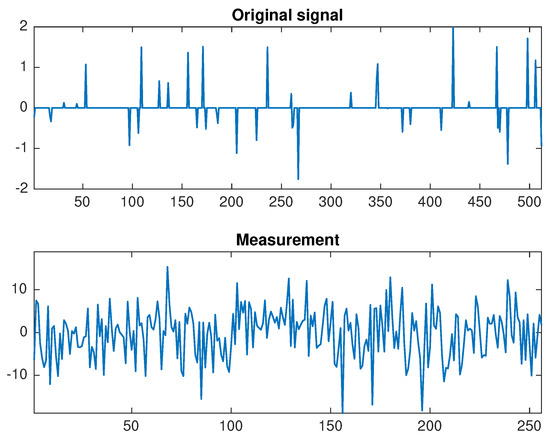

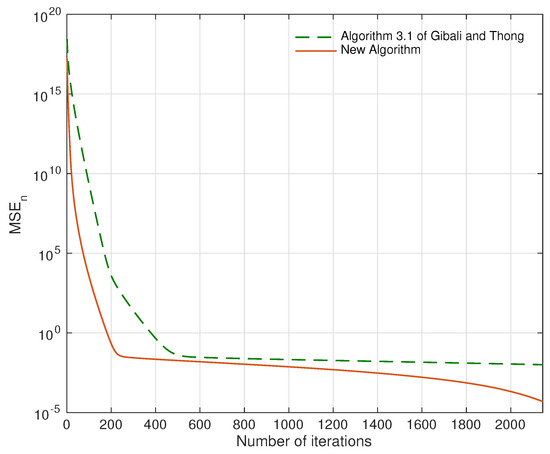

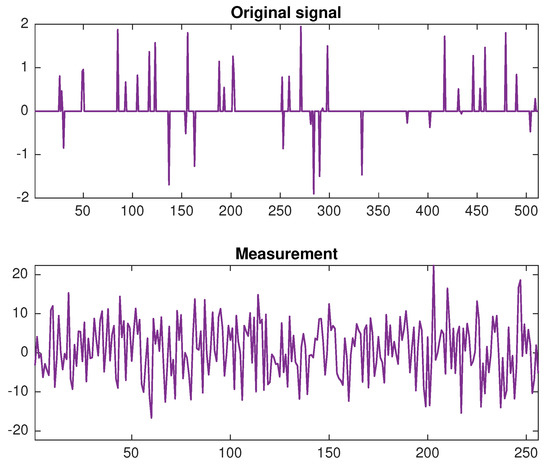

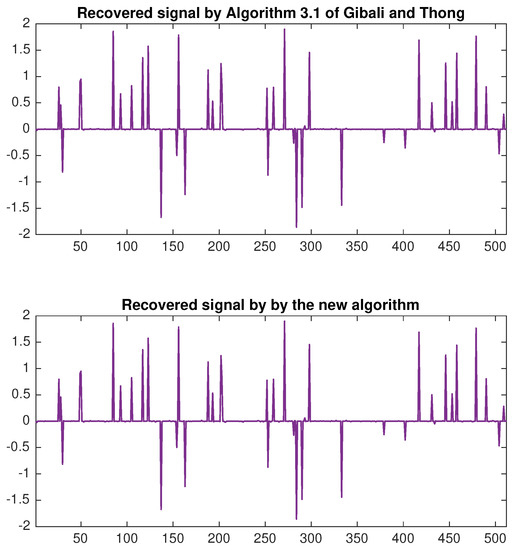

In addition, we compare the signals recovered by each algorithm. In Figure 1, the original signal and the measurement are shown in the case when and . The signals recovered by Algorithm 3.1 [6] and by the new algorithm are presented in Figure 2, and the errors of the two algorithms are compared in Figure 3. The outcome is that the signal recovered by our algorithm contains less error than that recovered by Algorithm 3.1 [6].

Figure 1.

The original signal and the measurement when and .

Figure 2.

The recovery signals by Algorithm 3.1 [6] and the new algorithm when and .

Figure 3.

Error plotting of Algorithm 3.1 [6] and the new algorithm when and .

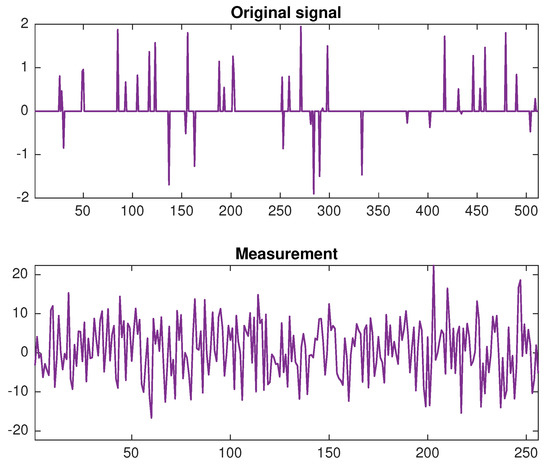

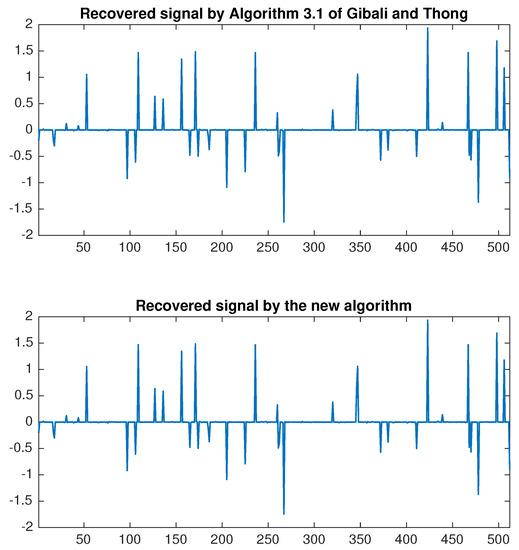

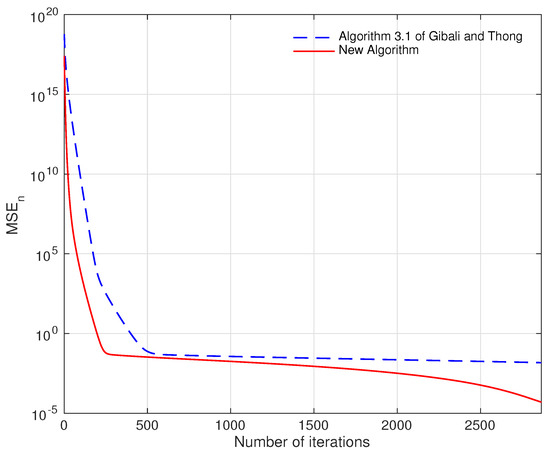

Next, another numerical result is given for in Table 3. Likewise, the original signal and the measurement are shown when in Figure 4. By using Algorithm 3.1 [6] and the new algorithm, the signal is recovered as in Figure 5. Then Figure 6 shows that the error of the result obtained from our algorithm is less than the result obtained from Algorithm 3.1 [6].

Table 3.

Numerical comparison between Algorithm 3.1 [6] and the new algorithm for .

Figure 4.

The original signal and the measurement when and .

Figure 5.

The recovery signals by Algorithm 3.1 [6] and the new algorithm when and .

Figure 6.

Error plotting of Algorithm 3.1 [6] and the new algorithm when and .

Overall, based on Tables 2 and 3, results similar to those in Example 1 are obtained. The new algorithm reduces both the elapsed time and the number of iterations compared to Algorithm 3.1 [6]. This means that the new algorithm displays better results than Algorithm 3.1 [6], mainly because of its more general setting.

5. Conclusions

To sum up, a modified Tseng type algorithm is created based on the methods of Mann iterations and viscosity approximation. The purpose is to find a common solution to the inclusion problem of an L-Lipschitz continuous and monotone single-valued operator and a maximal monotone multivalued operator, and the fixed point problem of a nonexpansive operator. With some extra conditions, the iteration defined by the algorithm converges strongly to the solution of the problem. In applications, this algorithm can be applied to the convex feasibility problem and the signal recovery in compressed sensing. The numerical experiments of our algorithm yield better results compared to the previous algorithm.

Author Contributions

Writing–original draft preparation, R.S.; writing–review and editing, A.K.

Funding

This research was funded by Centre of Excellence in Mathematics, The Commission on Higher Education, Thailand, and Chiang Mai University.

Acknowledgments

This research was supported by the Centre of Excellence in Mathematics, The Commission on Higher Education, Thailand, and was partially supported by Chiang Mai University.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Censor, Y.; Bortfeld, T.; Martin, B.; Trofimov, A. A unified approach for inversion problems in intensity-modulated radiation therapy. Phys. Med. Biol. 2006, 51, 2353–2365.

2. Censor, Y.; Elfving, T. A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 1994, 8, 221–239.

3. Cholamjiak, P.; Shehu, Y. Inertial forward–backward splitting method in Banach spaces with application to compressed sensing. Appl. Math. 2019, 64, 409–435.

4. Combettes, P.L.; Wajs, V.R. Signal recovery by proximal forward–backward splitting. Multiscale Model. Simul. 2005, 4, 1168–1200.

5. Duchi, J.; Shalev-Shwartz, S.; Singer, Y.; Chandra, T. Efficient projections onto the l1-ball for learning in high dimensions. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–8 July 2008.

6. Gibali, A.; Thong, D.V. Tseng type methods for solving inclusion problems and its applications. Calcolo 2018, 55, 49.

7. Kitkuan, D.; Kumam, P.; Martínez-Moreno, J.; Sitthithakerngkiet, K. Inertial viscosity forward–backward splitting algorithm for monotone inclusions and its application to image restoration problems. Int. J. Comput. Math. 2019, 1–19.

8. Suparatulatorn, R.; Khemphet, A.; Charoensawan, P.; Suantai, S.; Phudolsitthiphat, N. Generalized self-adaptive algorithm for solving split common fixed point problem and its application to image restoration problem. Int. J. Comput. Math. 2019, 1–15.

9. Suparatulatorn, R.; Charoensawan, P.; Poochinapan, K. Inertial self-adaptive algorithm for solving split feasible problems with applications to image restoration. Math. Methods Appl. Sci. 2019, 42, 7268–7284.

10. Attouch, H.; Peypouquet, J.; Redont, P. Backward-forward algorithms for structured monotone inclusions in Hilbert spaces. J. Math. Anal. Appl. 2018, 457, 1095–1117.

11. Dong, Y.D.; Fischer, A. A family of operator splitting methods revisited. Nonlinear Anal. 2010, 72, 4307–4315.

12. Huang, Y.Y.; Dong, Y.D. New properties of forward–backward splitting and a practical proximal-descent algorithm. Appl. Math. Comput. 2014, 237, 60–68.

13. Rockafellar, R.T. Monotone operators and the proximal point algorithm. SIAM J. Control Optim. 1976, 14, 877–898.

14. Lions, P.L.; Mercier, B. Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 1979, 16, 964–979.

15. Passty, G.B. Ergodic convergence to a zero of the sum of monotone operators in Hilbert space. J. Math. Anal. Appl. 1979, 72, 383–390.

16. Chen, H.G.; Rockafellar, R.T. Convergence rates in forward–backward splitting. SIAM J. Optim. 1997, 7, 421–444.

17. Tseng, P. A modified forward–backward splitting method for maximal monotone mappings. SIAM J. Control Optim. 2000, 38, 431–446.

18. Zhang, J.; Jiang, N. Hybrid algorithm for common solution of monotone inclusion problem and fixed point problem and application to variational inequalities. SpringerPlus 2016, 5, 803.

19. Thong, D.V.; Vinh, N.T. Inertial methods for fixed point problems and zero point problems of the sum of two monotone mappings. Optimization 2019, 68, 1037–1072.

20. Cholamjiak, P.; Kesornprom, S.; Pholasa, N. Weak and strong convergence theorems for the inclusion problem and the fixed-point problem of nonexpansive mappings. Mathematics 2019, 7, 167.

21. Brézis, H. Chapitre II: Opérateurs maximaux monotones. North-Holland Math. Stud. 1973, 5, 19–51.

22. Goebel, K.; Kirk, W.A. Topics in Metric Fixed Point Theory; Cambridge University Press: Cambridge, UK, 1990.

23. Xu, H.K. Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 2002, 66, 240–256.

24. Maingé, P.E. Strong convergence of projected subgradient methods for nonsmooth and non-strictly convex minimization. Set-Valued Anal. 2008, 16, 899–912.

25. Mann, W.R. Mean value methods in iteration. Proc. Am. Math. Soc. 1953, 4, 506–510.

26. Moudafi, A. Viscosity approximating methods for fixed point problems. J. Math. Anal. Appl. 2000, 241, 46–55.

27. Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; CMS Books in Mathematics; Springer: New York, NY, USA, 2011.

28. Iiduka, H.; Takahashi, W. Strong convergence theorems for nonexpansive nonself-mappings and inverse-strongly-monotone mappings. J. Convex Anal. 2004, 11, 69–79.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).