1. Introduction

With the growth of available computing resources, the field of computer vision has developed rapidly. Image segmentation is not only an important branch of computer vision but also one of the most difficult problems in image processing [1]. Image segmentation is widely used in autonomous driving [2,3], intelligent medicine [4], industrial inspection [5] and other fields. Currently, the most widely used image segmentation methods include threshold segmentation [6], edge-based segmentation [7], region-based segmentation [8], clustering-based segmentation [9,10] and deep-learning-based segmentation [11,12]. Threshold segmentation has become a research hotspot owing to its simplicity, low computational cost and ease of implementation [13].

Threshold segmentation can be divided into two types: single-threshold segmentation and multi-threshold segmentation [14]. Single-threshold segmentation divides an image into target and background, whereas multi-threshold segmentation divides an image into multiple regions. A single threshold often cannot separate all the regions of interest in a complex image, so multi-threshold segmentation is generally preferred. However, as the number of thresholds increases, the computational complexity of traditional segmentation methods grows exponentially, which leads to problems such as low accuracy and slow convergence. To address this problem, a growing number of researchers are using swarm intelligence optimization algorithms to improve the accuracy and speed of image segmentation [15]. Common multi-threshold segmentation criteria include Otsu's between-class variance [16,17], Kapur's entropy [18,19], fuzzy entropy [20] and cross-entropy [21,22]. The Kapur entropy method is insensitive to regions and preserves details well, which is why it has been widely applied in multi-level image segmentation.
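The following sketch shows how quickly exhaustive search becomes impractical. It counts the candidate threshold combinations for one 8-bit channel. It assumes 256 gray levels and strictly increasing thresholds chosen from levels 1 to 255. The function name is hypothetical. Each combination would need one evaluation of the objective function, for example Kapur's entropy.

```python
from math import comb


def exhaustive_combinations(num_thresholds: int, levels: int = 256) -> int:
    """Count strictly increasing threshold vectors drawn from gray levels 1..levels-1.

    Assumption: an 8-bit channel (levels = 256) and distinct, ordered thresholds.
    """
    return comb(levels - 1, num_thresholds)


if __name__ == "__main__":
    # Print the search-space size for the threshold levels discussed in the paper.
    for k in (2, 4, 6, 8, 12):
        print(k, exhaustive_combinations(k))
```

Two thresholds give 32,385 combinations and four thresholds give 172,061,505. The count keeps growing rapidly at 8 and 12 thresholds. A color image repeats the search for each of its three channels.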

However, multi-level threshold segmentation depends heavily on the choice of threshold combination and involves a large search space [23]. Improving segmentation performance therefore requires optimizing the multi-level threshold selection process. Traditional methods typically search for the optimal thresholds using operations research techniques or exhaustive enumeration. However, as the number of thresholds increases, their computational complexity grows exponentially. To address this issue, researchers have introduced heuristic algorithms, which alleviate the problem of low computational efficiency to a certain extent. Houssein, E. H. et al. [24] proposed an improved chimp optimization algorithm (ICHOA) and applied it to multi-level threshold image segmentation. Sabha, M. et al. [25] introduced a cooperative meta-heuristic algorithm called CPGH, which runs particle swarm optimization (PSO), gray wolf optimization (GWO) and Harris hawks optimization (HHO) in parallel, and applied it to coronavirus image segmentation. Hao, S. et al. [26] developed an improved salp swarm algorithm (ILSSA) that integrates chaotic mapping with local escape operators and applied it to dermoscopic skin cancer image segmentation. Chauhan, D. et al. [27] presented an artificial electric field algorithm (AEFA) with crossover optimization that uses Kapur's entropy as the objective function, and applied it to the Berkeley segmentation dataset. Qian, Y. et al. [28] proposed a continuous ant colony optimization algorithm based on salp foraging (SSACO) and applied it to remote sensing image segmentation. Dhal, K. G. et al. [29] introduced an improved slime mould algorithm (ISMA), which uses oppositional learning and differential evolution mutation strategies to enhance performance, and applied it to the segmentation of unlabeled white blood cell images. Shi, J. et al. [30] proposed an improved variant of the whale optimization algorithm (GTMWOA) and applied it to the segmentation of lupus nephritis images. Houssein, E. et al. [31] introduced an efficient search and rescue optimization algorithm (SAR) based on oppositional learning to solve multi-level thresholding problems in blood cell image segmentation.

Swarm intelligence optimization (SIO) algorithms are a powerful family of heuristic methods. They have shown remarkable capabilities in solving computationally intensive and complex problems, for which classical algorithms are often inefficient [32]. In most cases, an SIO algorithm first generates a random population. It then updates this population iteratively with specific formulas to explore the search space, and finally returns the best solution found. These update rules are inspired by natural phenomena, such as animal behaviors, physical laws, social interactions and evolutionary principles. Representative SIO methods include Particle Swarm Optimization (PSO) [33], Differential Evolution (DE) [34], Artificial Bee Colony (ABC) [35] and the Whale Optimization Algorithm (WOA) [36]. These methods have been applied successfully to fields such as path planning, power systems and image processing. Although SIO methods differ in form, their core idea is similar: they simulate natural behaviors to construct mathematical models for solving specific problems.
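The generic SIO loop (random initial population, formula-driven updates, tracking the best solution) can be seen in a minimal global-best PSO, one of the methods cited above. This is a textbook sketch and not code from this paper. The function name `pso_minimize` is hypothetical. The inertia and acceleration values are common defaults chosen as assumptions.

```python
import numpy as np


def pso_minimize(f, dim, bounds, n_particles=30, iters=200,
                 w=0.7298, c1=1.49618, c2=1.49618, seed=0):
    """Minimal global-best PSO (illustrative sketch; parameter values are assumptions)."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    # Step 1: random initial population inside the search box.
    x = rng.uniform(lo, hi, size=(n_particles, dim))
    v = np.zeros_like(x)
    pbest = x.copy()
    pbest_val = np.apply_along_axis(f, 1, x)
    gbest = pbest[np.argmin(pbest_val)].copy()
    for _ in range(iters):
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        # Step 2: update rule = inertia + pull toward personal best + pull toward global best.
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
        x = np.clip(x + v, lo, hi)
        vals = np.apply_along_axis(f, 1, x)
        # Step 3: keep the best solutions found so far.
        improved = vals < pbest_val
        pbest[improved] = x[improved]
        pbest_val[improved] = vals[improved]
        gbest = pbest[np.argmin(pbest_val)].copy()
    return gbest, float(pbest_val.min())


if __name__ == "__main__":
    sphere = lambda z: float(np.sum(z ** 2))
    best, value = pso_minimize(sphere, dim=5, bounds=(-5.0, 5.0))
    print(best, value)
```

Other SIO algorithms, including EEFO, keep this same skeleton and replace the velocity update with their own behavior-inspired formulas.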

EEFO, a novel SIO algorithm proposed in 2024, simulates the predatory behavior of electric eels [37]. It has been tested on various benchmark functions and engineering problems. It has also been compared with established algorithms such as the Whale Optimization Algorithm (WOA), the Arithmetic Optimization Algorithm (AOA) [38], Harris Hawks Optimization (HHO) [39] and Beluga Whale Optimization (BWO) [40]. The experimental results indicate that EEFO has significant potential and merits further research and application. However, there is still considerable room for improvement when EEFO is applied to complex problems such as image segmentation. According to the “No Free Lunch” theorem [41], no algorithm is universally optimal for all problems; in other words, every algorithm has limitations. Nevertheless, an algorithm's performance on a given class of problems can be improved by introducing new strategies and adjusting its parameter settings.

In this study, we further develop the EEFO method to enhance its effectiveness in addressing the challenges of color image segmentation.

The main contributions of this study are as follows:

i. This paper proposes an improved Electric Eel Foraging Optimization algorithm, named MIEEFO, which incorporates a differential evolution strategy, a quasi-oppositional learning strategy and a Cauchy mutation strategy.

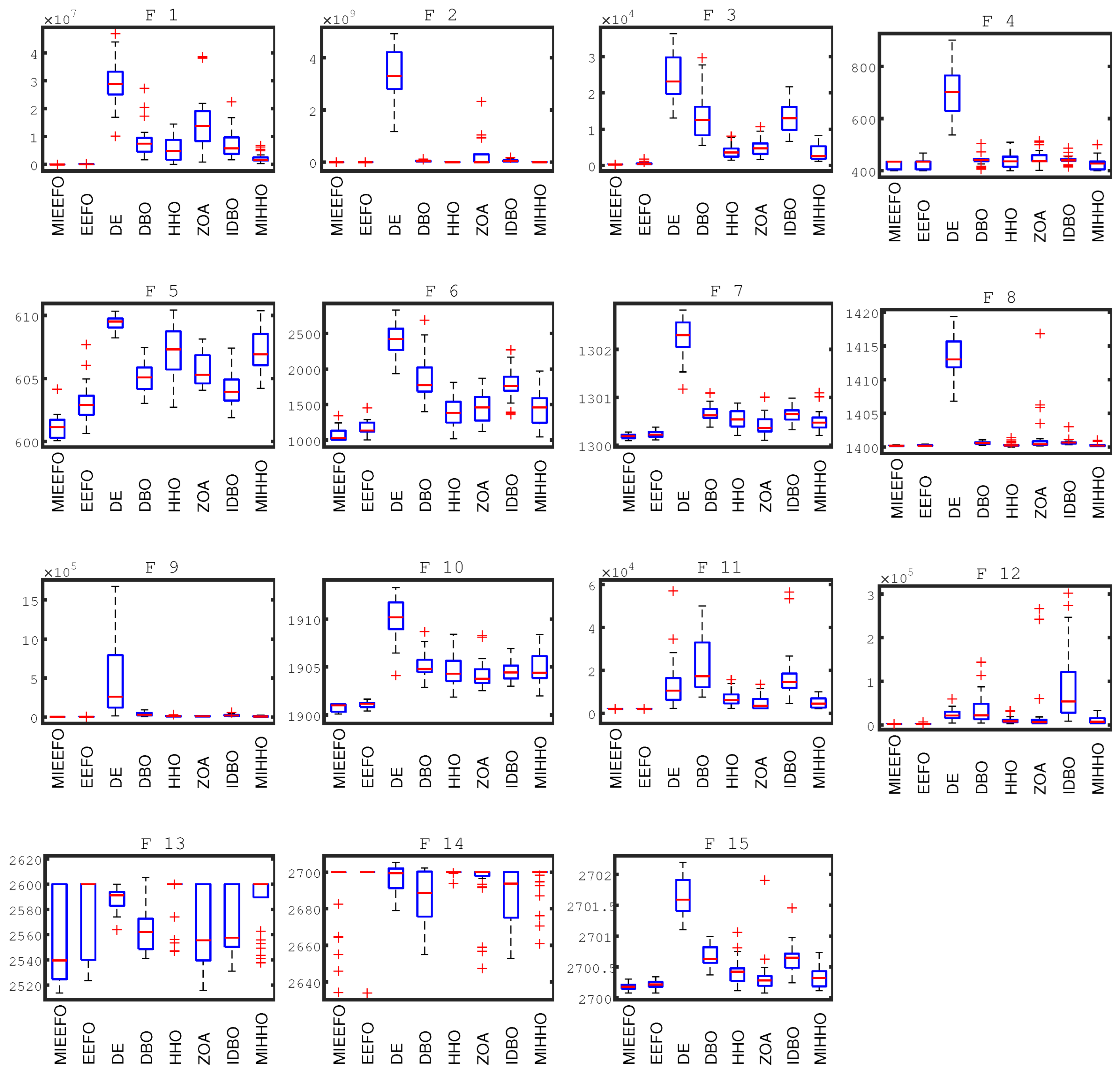

ii. A set of 15 benchmark test functions is used to evaluate the algorithm, and MIEEFO is compared with several state-of-the-art heuristic algorithms. Analyses using different evaluation methods show that MIEEFO achieves superior performance.

iii. We introduce an efficient MIEEFO-based color image segmentation technique. Experiments were conducted comparing MIEEFO with other similar algorithms at multiple threshold levels, using Kapur’s entropy as the fitness function. The results show that the segmentation method based on MIEEFO significantly improves the accuracy of image segmentation.

iv. The proposed image segmentation method was applied to forest fire images, providing an effective solution for segmenting forest fire imagery.
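Contribution (i) names three strategies that are standard operators in the optimization literature. The sketch below shows them in their common generic forms: DE/rand/1 mutation with binomial crossover, quasi-opposition-based learning and Cauchy mutation. These are not the exact MIEEFO update equations of Section 3. The function names and the default values of `F`, `CR` and `scale` are assumptions made for illustration.

```python
import numpy as np


def de_rand_1_bin(pop, i, rng, F=0.5, CR=0.9):
    """Generic DE/rand/1 mutation + binomial crossover for individual i (assumed F, CR)."""
    n, dim = pop.shape
    candidates = [k for k in range(n) if k != i]
    r1, r2, r3 = rng.choice(candidates, size=3, replace=False)
    mutant = pop[r1] + F * (pop[r2] - pop[r3])
    cross = rng.random(dim) < CR
    cross[rng.integers(dim)] = True  # guarantee at least one component comes from the mutant
    return np.where(cross, mutant, pop[i])


def quasi_opposite(x, lb, ub, rng):
    """Quasi-opposite point: uniform sample between the interval centre and the opposite point."""
    centre = (lb + ub) / 2.0
    opposite = lb + ub - x
    return rng.uniform(np.minimum(centre, opposite), np.maximum(centre, opposite))


def cauchy_mutation(x, rng, scale=1.0):
    """Add standard Cauchy noise; its heavy tails allow occasional long jumps (assumed scale)."""
    return x + scale * rng.standard_cauchy(size=np.shape(x))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    pop = rng.uniform(-1.0, 1.0, size=(10, 4))
    print(de_rand_1_bin(pop, 0, rng))
    print(quasi_opposite(pop[0], -1.0, 1.0, rng))
    print(cauchy_mutation(pop[0], rng))
```

DE recombines information from the population. Quasi-opposition samples candidates on the far side of the search interval. Cauchy mutation adds rare large steps that can help an algorithm escape local optima.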

The remainder of this paper is organized as follows:

Section 2 provides an overview of the EEFO algorithm.

Section 3 describes the proposed enhanced MIEEFO algorithm.

Section 4 presents the simulation results of MIEEFO for the test functions.

Section 5 outlines the image segmentation principles of MIEEFO and provides experimental results of the segmentation method.

Finally, Section 6 presents our conclusions and directions for future work.

5. Application of MIEEFO to Image Segmentation

In this section, MIEEFO is compared with five other algorithms, namely EEFO, LHHO, NHHGWO [50], HSMAWOA [51] and HVSFLA [52], on the multithreshold image segmentation (MTLIS) task. The experiments were conducted on images 37073, 42049, 94079, 118035, 189011 and 385028 from the Berkeley segmentation dataset. Segmentation was performed at four threshold levels (4, 6, 8 and 12): 4 and 6 represent low threshold levels, and 8 and 12 represent high threshold levels. To ensure a fair comparison, all algorithms were tested with the same parameters. The population size was set to 30, the number of iterations to 500, and the image resolution to 1980 × 1080. The experiments were run on a computer with an Intel(R) Core(TM) i7-8750H CPU (2.2 GHz) and 16 GB of RAM, running Windows 10 (64-bit), with MATLAB R2022b as the software platform. The segmentation results are evaluated using PSNR, FSIM and SSIM as performance metrics.

5.1. Kapur Entropy

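In this subsection, consider a given image with G gray levels, ranging from 0 to G − 1, and a total of N pixels. Let h(i) denote the frequency of the i-th intensity level. The probability of each intensity level is given by Equation (31):

$$P_i = \frac{h(i)}{N}, \qquad P_i \ge 0, \qquad \sum_{i=0}^{G-1} P_i = 1 \tag{31}$$

Assume that there are M thresholds $t_1 < t_2 < \dots < t_M$, with $t_j \in [1, G-1]$. These thresholds divide the image into M + 1 classes. Using the boundary conventions $t_0 = 0$ and $t_{M+1} = G$, the probability $\omega_j$ and entropy $H_j$ of class j (j = 0, 1, ..., M) are defined as follows:

$$\omega_j = \sum_{i=t_j}^{t_{j+1}-1} P_i, \qquad H_j = -\sum_{i=t_j}^{t_{j+1}-1} \frac{P_i}{\omega_j}\ln\frac{P_i}{\omega_j}$$

MIEEFO uses Kapur's entropy, given in Equation (32), as the objective function:

$$f(t_1, t_2, \dots, t_M) = \sum_{j=0}^{M} H_j \tag{32}$$

The threshold vector that maximizes Equation (32) in MIEEFO is taken as the optimal set of thresholds.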
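Below is a minimal NumPy sketch of the Kapur objective in Equations (31) and (32). It assumes an 8-bit channel (G = 256) and integer thresholds. The function names `gray_probabilities` and `kapur_entropy` are hypothetical. Truncating continuous optimizer positions to integers and skipping empty classes are assumptions, not rules stated in the paper. For a color image, this sketch would evaluate the objective separately on each channel, which is also an assumption.

```python
import numpy as np


def gray_probabilities(channel: np.ndarray, levels: int = 256) -> np.ndarray:
    """Equation (31): P_i = h(i) / N for an integer-valued channel in [0, levels - 1]."""
    hist = np.bincount(channel.ravel(), minlength=levels).astype(np.float64)
    return hist / hist.sum()


def kapur_entropy(thresholds, prob: np.ndarray) -> float:
    """Equation (32): sum of class entropies H_0 + ... + H_M for the given thresholds."""
    levels = prob.size
    # Class boundaries t_0 = 0, t_1..t_M (truncated to int, an assumption), t_{M+1} = G.
    edges = [0] + sorted(int(t) for t in thresholds) + [levels]
    total = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        p = prob[lo:hi]              # levels t_j .. t_{j+1} - 1
        omega = p.sum()
        if omega <= 0.0:
            continue                 # empty class contributes nothing (assumption)
        q = p[p > 0] / omega         # 0 * ln 0 is treated as 0
        total += float(-np.sum(q * np.log(q)))
    return total


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    channel = rng.integers(0, 256, size=(64, 64))
    prob = gray_probabilities(channel)
    print(kapur_entropy([64, 128, 192], prob))
```

An optimizer such as MIEEFO would treat the threshold vector as a candidate solution and search for the vector that maximizes `kapur_entropy`.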

5.2. Performance Metrics

To evaluate the quality of the image segmentation results, PSNR, SSIM and FSIM are used as evaluation metrics.

Figure 6 presents the original images and the corresponding histograms of each color channel.

The Peak Signal-to-Noise Ratio (PSNR) is one of the most commonly used objective metrics for evaluating image quality. It measures the error between corresponding pixel values of two images and is defined as follows:

$$PSNR = 10\log_{10}\left(\frac{255^2}{MSE}\right), \qquad MSE = \frac{1}{N}\sum_{i}\sum_{j}\left[I(i,j) - Seg(i,j)\right]^2$$

where I(i, j) and Seg(i, j) represent the pixel values of the original image and the segmented (distorted) image at position (i, j). N is the total number of pixels in the image, and 255 is the maximum pixel value of an 8-bit image.

PSNR is expressed in decibels (dB), and higher PSNR values generally indicate better image quality.
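Below is a minimal sketch of the PSNR definition above. It assumes 8-bit data (peak value 255), and for color images it averages the squared error over all channels. The function name is hypothetical. The `peak_signal_noise_ratio` function in scikit-image's `skimage.metrics` module provides a library equivalent.

```python
import numpy as np


def psnr(original, segmented, peak: float = 255.0) -> float:
    """PSNR in dB between an original image I and a segmented image Seg (8-bit assumed)."""
    original = np.asarray(original, dtype=np.float64)
    segmented = np.asarray(segmented, dtype=np.float64)
    # Mean squared error over every pixel (and every channel, for color images).
    mse = np.mean((original - segmented) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))


if __name__ == "__main__":
    a = np.zeros((4, 4))
    b = np.ones((4, 4))
    print(psnr(a, b))  # MSE = 1, so PSNR = 10 * log10(255 ** 2)
```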

The Structural Similarity Index (SSIM) measures the similarity between two images, typically before and after compression or processing. The SSIM formula is expressed as follows:

$$SSIM(I, Seg) = \frac{(2\mu_I\mu_{Seg} + c_1)(2\sigma_{I,Seg} + c_2)}{(\mu_I^2 + \mu_{Seg}^2 + c_1)(\sigma_I^2 + \sigma_{Seg}^2 + c_2)}$$

where $\mu_I$ and $\mu_{Seg}$ are the mean intensities of images I and Seg, respectively; $\sigma_I^2$ and $\sigma_{Seg}^2$ are the variances of images I and Seg; $\sigma_{I,Seg}$ is the covariance between I and Seg; and $c_1$ and $c_2$ are small constants that stabilize the division when the denominator is close to zero.

For images, SSIM values usually lie between 0 and 1 (negative values are possible in principle), and a value of 1 indicates identical images. A higher SSIM value indicates smaller differences between the two images, reflecting better segmentation quality. Conversely, a lower SSIM value indicates greater dissimilarity and often poorer segmentation quality.
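Below is a minimal sketch of the SSIM formula exactly as written above. It uses global means, variances and covariance over the whole image. The standard SSIM of Wang et al. instead computes these statistics in local windows and averages the results, which is what `skimage.metrics.structural_similarity` does. The constants $c_1 = (0.01 \cdot 255)^2$ and $c_2 = (0.03 \cdot 255)^2$ are common choices for 8-bit images and are assumed here. The function name is hypothetical.

```python
import numpy as np


def ssim_global(img1, img2, data_range: float = 255.0,
                k1: float = 0.01, k2: float = 0.03) -> float:
    """Single-window SSIM using whole-image statistics (simplified sketch)."""
    x = np.asarray(img1, dtype=np.float64).ravel()
    y = np.asarray(img2, dtype=np.float64).ravel()
    c1 = (k1 * data_range) ** 2  # assumed stabilizing constants
    c2 = (k2 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = rng.integers(0, 256, size=(32, 32))
    print(ssim_global(img, img), ssim_global(img, 255 - img))
```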

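The Feature Similarity Index (FSIM) compares two images using low-level features that are important to human perception, namely phase congruency (PC) and gradient magnitude (G).

The FSIM formula is typically expressed as follows:

$$FSIM = \frac{\sum_{X \in \Omega} S_L(X)\, PC_m(X)}{\sum_{X \in \Omega} PC_m(X)}$$

where $S_L(X)$ is the local similarity measure computed for pixel X and $PC_m(X)$ is the importance weight assigned to pixel X. This weight is $PC_m(X) = \max\left(PC_1(X), PC_2(X)\right)$, where $PC_1$ and $PC_2$ are the phase congruency maps of the two images. $\Omega$ represents the set of all pixels in the image. The local similarity is computed as

$$S_L(X) = \left[S_{PC}(X)\right]^{\alpha}\left[S_G(X)\right]^{\beta}$$

$$S_{PC}(X) = \frac{2PC_1(X)PC_2(X) + T_1}{PC_1^2(X) + PC_2^2(X) + T_1}, \qquad S_G(X) = \frac{2G_1(X)G_2(X) + T_2}{G_1^2(X) + G_2^2(X) + T_2}$$

where $S_{PC}$ represents the feature (phase congruency) similarity of the image and $S_G$ represents the gradient similarity. $G_1(X)$ and $G_2(X)$ denote the gradient magnitudes of the reference image and the segmented image at pixel X, respectively, and $T_1$ and $T_2$ are positive stabilizing constants. The parameters α and β are generally set to 1.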

The FSIM metric focuses on perceptually relevant features, making it more aligned with human visual perception compared to general pixel-wise metrics. A higher FSIM value (close to 1) indicates higher similarity between the two images, suggesting better image quality or segmentation accuracy.
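The sketch below shows the FSIM pooling step described above. It takes phase congruency maps as precomputed inputs, because computing phase congruency requires a log-Gabor filter bank that is not shown here. Gradient magnitude uses `np.gradient` central differences as a simplification; the original FSIM uses the Scharr operator. The defaults `t1=0.85` and `t2=160` are the values commonly quoted for 8-bit images and are treated as assumptions. The function names are hypothetical.

```python
import numpy as np


def gradient_magnitude(img) -> np.ndarray:
    """Gradient magnitude via central differences (simplification of the Scharr operator)."""
    gy, gx = np.gradient(np.asarray(img, dtype=np.float64))
    return np.hypot(gx, gy)


def fsim_from_maps(pc1, pc2, g1, g2, t1=0.85, t2=160.0, alpha=1.0, beta=1.0) -> float:
    """Pool per-pixel similarity S_L, weighted by PC_m = max(PC_1, PC_2)."""
    s_pc = (2 * pc1 * pc2 + t1) / (pc1 ** 2 + pc2 ** 2 + t1)  # feature similarity
    s_g = (2 * g1 * g2 + t2) / (g1 ** 2 + g2 ** 2 + t2)       # gradient similarity
    s_l = (s_pc ** alpha) * (s_g ** beta)                     # local similarity
    pc_m = np.maximum(pc1, pc2)                               # importance weight
    return float(np.sum(s_l * pc_m) / np.sum(pc_m))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    img = rng.integers(0, 256, size=(16, 16))
    pc = rng.random((16, 16))  # placeholder for a real phase congruency map
    g = gradient_magnitude(img)
    print(fsim_from_maps(pc, pc, g, g))
```

Each similarity term equals 1 only where the two feature values match, so FSIM reaches 1 for identical inputs and decreases as the images diverge.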

5.3. Image Segmentation Results

This section compares the Kapur-entropy multithreshold image segmentation algorithm based on MIEEFO with those based on EEFO, LHHO, NHHGWO, HSMAWOA and HVSFLA. Figures 7, 8 and 9 present bar charts of the PSNR, SSIM and FSIM results across multiple threshold levels, giving a clearer view of the evaluation results.

Figures 10 and 11 show the segmentation results of the different algorithms with 4 and 12 thresholds, respectively. These figures show that the segmented images become clearer as the number of thresholds increases, indicating that more thresholds capture more image information.
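The text does not state how pixels are assigned values once the thresholds are known. A common choice, assumed in this sketch, is to replace every pixel in a class with that class's mean intensity, processing each color channel independently. Under that assumption, k thresholds leave at most k + 1 distinct values per channel, which is why results with more thresholds preserve more detail. The function names are hypothetical. Class membership follows the convention of Section 5.1: a value at or above $t_j$ belongs to class j or higher.

```python
import numpy as np


def apply_thresholds(channel, thresholds) -> np.ndarray:
    """Map each pixel to the mean intensity of its class (assumed reconstruction rule)."""
    channel = np.asarray(channel)
    t = np.sort(np.asarray(thresholds, dtype=np.float64))
    # np.digitize (right=False): label j means t[j-1] <= value < t[j].
    labels = np.digitize(channel, t)
    out = np.empty(channel.shape, dtype=np.float64)
    for j in np.unique(labels):
        mask = labels == j
        out[mask] = channel[mask].mean()
    return out


def segment_rgb(image, thresholds_per_channel) -> np.ndarray:
    """Apply a separate threshold vector to each color channel (assumption)."""
    image = np.asarray(image)
    return np.stack(
        [apply_thresholds(image[..., c], thresholds_per_channel[c])
         for c in range(image.shape[-1])],
        axis=-1,
    )


if __name__ == "__main__":
    ch = np.array([[10, 20], [200, 210]], dtype=np.uint8)
    print(apply_thresholds(ch, [128]))  # two classes, replaced by their means 15 and 205
```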

Tables 5, 6 and 7 report the numerical PSNR, SSIM and FSIM results for the image segmentation task. The data show that MIEEFO generally outperforms the other algorithms. In addition, as the number of thresholds increases, the PSNR, SSIM and FSIM values of the segmented images also increase. These results indicate that MIEEFO has a clear advantage in Kapur-entropy-based threshold segmentation.

5.4. Forest Fire Image Segmentation

In forest ecosystems, wildfires are characterized by their sudden onset and uncontrollable nature. Once a wildfire exceeds human control, it can develop into a forest fire that causes severe damage to human lives and property, as well as significant harm to the ecological environment. Image-segmentation-based forest fire detection techniques have been proposed to minimize the damage caused by forest fires. The goal of forest fire image segmentation is to separate the fire-affected areas from the forest images for subsequent fire identification [53]. To verify the effectiveness of the proposed algorithm in practical engineering applications, MIEEFO is used to segment forest fire images. Images captured with digital cameras were used as experimental samples.

Figure 12 shows two forest fire images. All algorithms were tested under the same experimental conditions to ensure objectivity and fairness, and the algorithm parameters were set as described in the previous section. To verify the effectiveness of the algorithm in different dimensions, the number of thresholds (i.e., the problem dimension) was set to 2, 4, 8, 10 and 12. The segmentation results of the MIEEFO-based multilevel thresholding algorithm for the different dimensions are shown in Figure 13. In these forest fire images, there is no obvious contrast between the fire-affected areas and the background, which makes it harder for the algorithm to find good thresholds.

The segmentation results show that the MIEEFO-based algorithm is effective at identifying fire regions on forest surfaces. In the forest fire segmentation experiments, MIEEFO was compared with EEFO, LHHO, NHHGWO, HSMAWOA and HVSFLA, and the results were evaluated using three metrics: FSIM, SSIM and PSNR. The performance data of each algorithm are shown in Tables 8, 9 and 10. MIEEFO achieved the best SSIM, FSIM and PSNR values and provided stable results across the different threshold levels. These results indicate that the MIEEFO-based algorithm achieves good segmentation quality and can efficiently segment forest fire regions. They also suggest that it can handle optimization problems of various dimensions as well as practical engineering tasks.

6. Conclusions and Future Works

This paper proposes an improved Electric Eel Foraging Optimization algorithm (MIEEFO) and tests it on 15 benchmark functions. MIEEFO is compared with EEFO, DE, DBO, HHO, ZOA, IDBO and MIHHO. The experimental results show that MIEEFO achieves superior exploration, exploitation, robustness and convergence accuracy. MIEEFO is also applied to color image segmentation. The test images include benchmark color images from the Berkeley database and forest fire images from real-world engineering problems. Using Kapur's entropy as the fitness function, MIEEFO is compared with EEFO, LHHO, NHHGWO, HSMAWOA and HVSFLA at different threshold levels. The experimental results show that the segmentation algorithm based on MIEEFO outperforms the other algorithms across the threshold levels tested, demonstrating the effectiveness of the model.

Future work will focus on two aspects. First, although the algorithm already produces reliable results, additional strategies could be incorporated to better balance exploration and exploitation and thereby further increase convergence speed. Second, MIEEFO could be applied to more complex domains, such as power systems, feature selection, data mining and artificial intelligence, and evaluated on additional benchmark problems.