1. Introduction

The increasing frequency and intensity of wildfires, exacerbated by climate change, highlight the urgent need for effective detection and management strategies to protect ecosystems, human lives, and economic assets [1]. As wildfires become more unpredictable and widespread, early detection is crucial for minimizing damage and improving response times. However, traditional fire detection methods, such as satellite imaging and ground-based sensors, often suffer from delayed detection, limited coverage, and high operational costs [2].

Recent advancements in image processing, particularly in object detection [3] and image segmentation [4], have significantly improved fire detection accuracy and efficiency. In this context, Uncrewed Aircraft Systems (UASs) have emerged as a promising tool for real-time smoke detection, providing critical support for wildfire prevention and management [5,6]. Unlike conventional methods, UASs can rapidly navigate hazardous environments, offering a flexible and cost-effective solution for monitoring vast and inaccessible areas. By leveraging aerial imagery and sophisticated computer vision algorithms, UASs can detect smoke at its early stages, enabling authorities to respond proactively before fires escalate.

Moreover, by integrating advanced vision-based techniques with artificial intelligence, UASs enable automated smoke detection and tracking in dynamic environments [7]. With their vision-based capabilities, UASs offer significant potential for wildfire surveillance, identification, and management [8]. This approach mitigates both the environmental and social impacts of wildfires, highlighting the critical role of UASs in modern fire management strategies.

A key challenge in vision-based fire detection using video data is the limitation of object detection algorithms. One widely used real-time object detection algorithm is You Only Look Once (YOLO) [9]. Leveraging a Convolutional Neural Network (CNN) architecture, YOLO quickly detects and classifies objects in an image by dividing it into a grid of cells. YOLOv7 [10] improves upon the original YOLO algorithm by enhancing accuracy and speed through the introduction of Extended Efficient Layer Aggregation Networks (E-ELAN).

While YOLOv7 performs well on static images, its effectiveness declines in video applications. This is because the algorithm processes each video frame independently, disregarding temporal relationships between frames. For instance, because the temporal dependency between adjacent frames is ignored, YOLOv7’s bounding boxes may flicker, leading to inconsistent detections. This can cause errors such as misclassifying clouds as smoke or missing smoke in some frames, even when it was correctly identified in previous ones. To address these limitations, we propose a time-series analysis approach to enhance YOLOv7’s performance by incorporating temporal information, thereby improving the robustness and reliability of UAS-based wildfire detection.

In simple terms, a time series refers to a sequence of variable values recorded at different points in time, such as daily temperature measurements at a weather station [11]. Time series data often exhibit long-term trends, and identifying and extracting these underlying trends can be crucial for better understanding and analysis. Trend extraction is widely used across various fields. For example, the power sector relies on it to forecast daily electricity production [12], while the Department of Transportation uses it to predict state traffic patterns, helping individuals plan their travel more effectively [13].

Trend extraction methods are generally classified into parametric and non-parametric approaches. Classical parametric trend estimation methods include Ordinary Least Squares (OLS), median-of-pairwise-slopes regression [14], and segmented regression [15]. The advantage of parametric methods lies in their strong interpretability; when the chosen model accurately represents the underlying data, these methods can achieve high accuracy. However, real-world data often contain unpredictable factors, making it difficult to ensure that a parametric model fits the data well. In such cases, non-parametric trend estimators, such as Hodrick-Prescott (HP) filtering [16] and $\ell_1$-trend filtering [17], are often preferred.

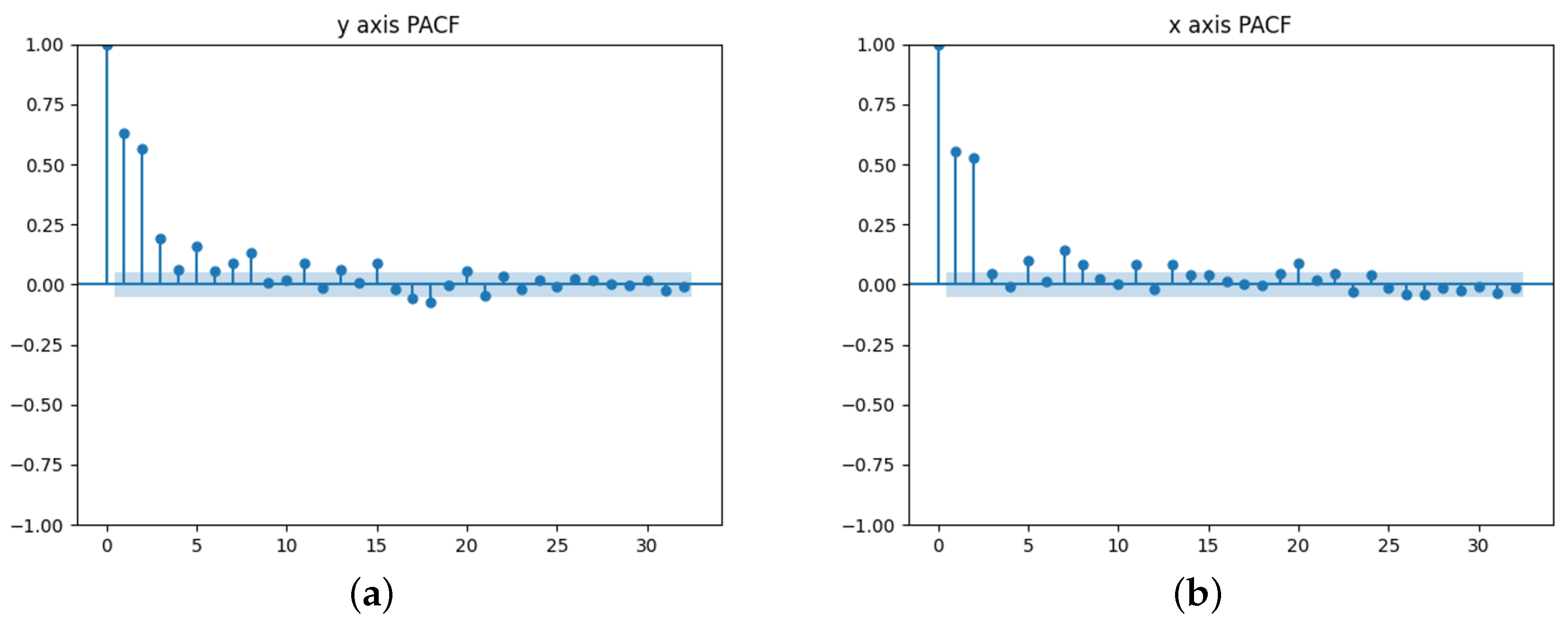

In this paper, the proposed method falls under the category of parametric trend estimation, as the prescribed fire environment during UAS deployment remains highly consistent. Compared to OLS, the proposed method incorporates additional constraints in the dataset, making it useful not only for stabilizing the YOLOv7 algorithm by predicting smoke instances missed by YOLOv7, but also for identifying key factors influencing smoke trends, such as wind speed, forest type, and UAS movement. The following are the key contributions of this paper:

Development of a Parametric Trend Estimation Method for Non-Stationary Time Series: We introduce a novel approach to accurately decompose time-series data into its long-term and short-term components, assuming the short-term trend follows an autoregressive (AR($p$)) model. This method leverages the inherent autocorrelation in time-series data to identify patterns and similarities between past and present data points, as is commonly observed in such datasets [18].

Theoretical Justification of the Model: We provide a rigorous theoretical foundation for the proposed parametric estimation method. Under two key assumptions, we demonstrate that the method can be optimized using a Markov Chain Monte Carlo (MCMC) algorithm and that the algorithm converges over successive iterations.

Application to Wildfire Smoke Detection in Video: The proposed trend estimation method is applied to real-world smoke video data under the Hidden Markov Model (HMM) structure. Experimental results demonstrate that the method effectively enhances smoke detection by correcting both missed and erroneous detections.

This paper is organized as follows:

Section 2 presents some popular trend estimation methods currently in use.

Section 3 describes the overall model, assumptions, theoretical support for applying MCMC estimation, and the MCMC algorithm.

Section 4 provides two simulation studies to visualize the algorithm’s efficiency.

Section 5 compares our model's estimates with those of YOLOv7 [10] using a real-world wildfire video.

Section 7 shows the future research direction based on the model, and

Section 8 summarizes our contribution.

2. Related Works

As mentioned, because data collection involves unpredictable factors, popular trend estimation methods in the time series field are HP filtering and $\ell_1$-trend filtering. Compared to HP filtering, $\ell_1$-trend filtering estimates the trend using a simple linear regression. The benefit of this approach is its interpretability. However, optimizing $\ell_1$-trend filtering is slightly more complicated because of the $\ell_1$ penalty. A modern solution to this optimization problem is to apply a Markov Chain Monte Carlo (MCMC) process [19]. HP filtering, which does not face this optimization difficulty, is popular in economics [20]. However, since this method is nonparametric, the model lacks interpretability [21].
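To make the remark about HP filtering avoiding a difficult optimization concrete, the following is a minimal sketch of the standard HP filter, which has a closed-form solution because its penalty is quadratic. The function name `hp_filter`, the synthetic series, and the smoothing value `lam=1600.0` (a conventional choice for quarterly economic data) are illustrative assumptions and are not taken from this paper.

```python
import numpy as np

def hp_filter(y, lam=1600.0):
    """HP trend: argmin_tau ||y - tau||^2 + lam * ||D tau||^2 (illustrative)."""
    y = np.asarray(y, dtype=float)
    n = y.size
    # Second-difference operator D with shape (n - 2, n); each row is [1, -2, 1].
    D = np.diff(np.eye(n), n=2, axis=0)
    # The objective is quadratic, so the minimizer solves a linear system.
    return np.linalg.solve(np.eye(n) + lam * D.T @ D, y)

# Hypothetical noisy series with a linear drift.
t = np.arange(200)
rng = np.random.default_rng(0)
y = 0.05 * t + rng.normal(scale=1.0, size=t.size)
trend = hp_filter(y, lam=1600.0)
```

Replacing the squared penalty with an $\ell_1$ penalty removes this closed form, which is why $\ell_1$-trend filtering needs a dedicated optimizer or a sampling scheme.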

Another issue is that both HP filtering and $\ell_1$-trend filtering assume that the error terms are independent of time, meaning the time series consists of a single trend function and an error term with constant variance over time (i.e., the error is stationary). However, this stationarity assumption may not be appropriate for analyzing wildfire detection videos, as the motion of wildfire smoke in these videos is influenced by two key time-dependent factors. The first factor is the long-term trend, which is primarily determined by the motion of the UAS capturing the video. The second factor is the short-term trend, which depends on dynamic environmental conditions such as current wind speed, wind direction, wildfire spread, and other contextual variables. This short-term trend introduces a non-stationary error component into the model, making the stationary error assumption inadequate.

In addition to classical HP filtering and $\ell_1$-trend filtering, a newer trend estimation method is Singular Spectrum Analysis (SSA) [22,23,24,25]. SSA transforms the time series into a trajectory matrix and applies Principal Component Analysis (PCA) to decompose the matrix into a linear combination of elementary matrices, effectively extracting the latent function. However, similar to HP filtering and $\ell_1$-trend filtering, SSA also assumes a stationary error term.
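The SSA steps described above can be sketched as follows. This is a basic textbook variant under stated assumptions: the decomposition is done with a singular value decomposition of the trajectory matrix, and the function name `ssa_trend`, the window length, and the number of retained components are hypothetical choices, not settings reported in the cited works.

```python
import numpy as np

def ssa_trend(y, window, rank=1):
    """Basic SSA reconstruction from the leading `rank` components (illustrative)."""
    y = np.asarray(y, dtype=float)
    n, L = y.size, window
    K = n - L + 1
    # Trajectory (Hankel) matrix: column k is the lagged window y[k : k + L].
    X = np.column_stack([y[k : k + L] for k in range(K)])
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    # Sum of the leading elementary matrices.
    X_r = (U[:, :rank] * s[:rank]) @ Vt[:rank]
    # Diagonal averaging: entries X_r[i, k] with the same i + k map to one time index.
    total = np.zeros(n)
    counts = np.zeros(n)
    for i in range(L):
        total[i : i + K] += X_r[i]
        counts[i : i + K] += 1
    return total / counts

# Hypothetical noisy series with a linear drift.
rng = np.random.default_rng(0)
t = np.arange(120)
y = 1.0 + 0.1 * t + rng.normal(scale=0.5, size=t.size)
smooth = ssa_trend(y, window=20, rank=2)
```

A noise-free linear series has a rank-2 trajectory matrix, so two components reproduce it exactly; noisy data spread energy across further components.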

To address the issue of non-stationarity, a non-stationary Gaussian process regression model has been proposed [26], which is a non-parametric approach capable of handling time-varying errors. While non-parametric and semi-parametric models excel at making accurate predictions, they face significant limitations in terms of interpretability and facilitating further analysis. These challenges make them less suitable for applications that require a deeper understanding of the underlying processes, such as the dynamics of wildfire smoke or the movement of UAS.

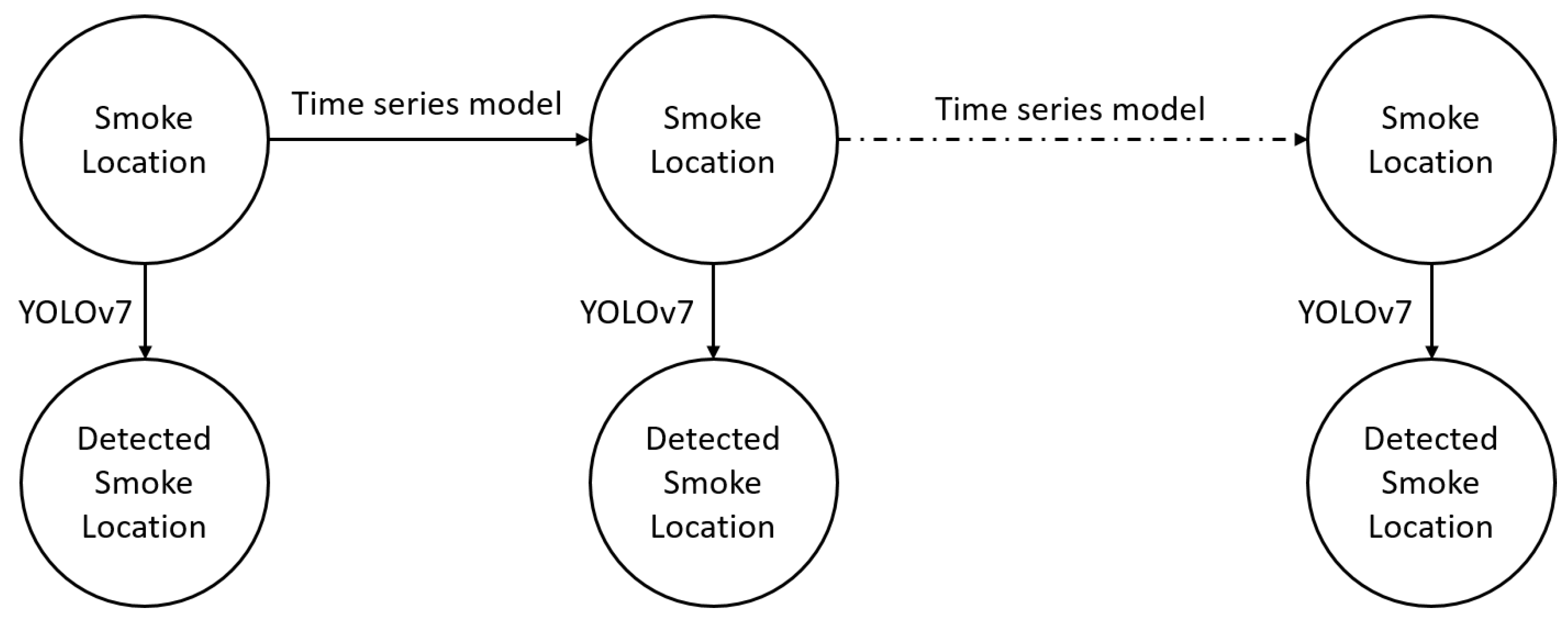

Therefore, we propose a parametric model and combine it with YOLOv7 based on the Hidden Markov Model (HMM) framework, which overcomes these limitations. The proposed method estimates transition probabilities within the HMM using time series data, where the long-term trend is estimated using Ordinary Least Squares (OLS), and the short-term trend is modeled by an AR($p$) process. The emission states correspond to smoke locations identified by the YOLOv7 object detection model (see Figure 1).

This approach allows for the prediction of smoke in frames where YOLOv7 detected it in the previous frame but fails to identify it in the current one. It also corrects false detections and integrates these refined, newly identified frames into the training set, enhancing YOLOv7’s accuracy. Additionally, the trend estimation facilitates further analysis of the factors driving changes in the trend function, providing valuable insights into the dynamics of wildfire smoke.

3. Methodology

In this article, we assume that the location and motion of smoke in a video are governed by two main factors: the long-term trend and the short-term trend. The long-term trend is primarily influenced by the motion of the UAS, while the short-term trend consists of two key components. The first component is the previous smoke location in the video, which ensures temporal continuity and can be predicted using an autoregressive function [27]. The second component includes random factors affecting the smoke, such as wind direction, wind speed, and other environmental variables. Additionally, we assume that these factors are additive, leading to the formulation of the following time-series model:

$$y_t = f(t) + x_t, \tag{1}$$

where $y_t$ is the observed smoke location at time $t$, $f(t)$, a deterministic function, represents the long-term trend, and the short-term trend $x_t$ is modeled by an autoregressive function of order $p$:

$$x_t = \sum_{i=1}^{p} \phi_i x_{t-i} + \varepsilon_t. \tag{2}$$

Here, $x_t$ represents the deviation of $y_t$ from the trend $f(t)$, and the error term $\varepsilon_t$ is assumed to be independently and identically distributed (IID), following a distribution with mean 0 and finite variance $\sigma^2$ (i.e., $\varepsilon_t \overset{\text{IID}}{\sim} (0, \sigma^2)$). In addition, by the definition of the autoregressive function, we have the following assumption.

Assumption 1. The coefficients in Equation (2) satisfy $0 < \phi_i < 1$ for $i = 1, \dots, p$ and $\sum_{i=1}^{p} \phi_i < 1$.

In this setup, we treat

as the random error, while the trending function

is conditionally independent of

given

Furthermore, by Assumption 1, and leveraging the properties of simple linear regression, we constrain the covariance structure of

. That is,

This ensures that the covariance of is bounded, reflecting the stability of the short-term trend within the time series model.

3.1. Model Assumptions and the Stationary Property

Based on the model assumptions outlined above, we further introduce the following assumption.

Assumption 2. The initial value of the short-term trend given byand for , follows the autoregressive processThis implies that is a non-stationary time series with variance changing over time. With both Assumptions 1 and 2, and given

and

, the conditional expectation of

is

Thus, the long-term trend

can be expressed as

and the autoregressive component is given by

which is the classical detrending process.
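As a point of reference, classical detrending can be sketched in two steps: fit the long-term trend by OLS, then fit an autoregressive model to the residuals. The sketch below assumes a linear trend and a least-squares AR fit without intercept; the helper names `fit_linear_trend` and `fit_ar`, the simulated data, and all parameter values are hypothetical and chosen only for illustration.

```python
import numpy as np

def fit_linear_trend(t, y):
    """OLS fit of y = b0 + b1 * t; returns (b0, b1)."""
    b1, b0 = np.polyfit(t, y, deg=1)
    return b0, b1

def fit_ar(x, p):
    """Least-squares AR(p) fit without intercept; returns (phi, residual RMSE)."""
    x = np.asarray(x, dtype=float)
    # The row for time s holds the lags x[s-1], ..., x[s-p].
    lags = np.column_stack([x[p - i : len(x) - i] for i in range(1, p + 1)])
    target = x[p:]
    phi, *_ = np.linalg.lstsq(lags, target, rcond=None)
    resid = target - lags @ phi
    return phi, float(np.sqrt(np.mean(resid ** 2)))

# Simulate a linear trend plus an AR(3) short-term component (assumed values).
rng = np.random.default_rng(1)
n, phi_true = 500, np.array([0.5, 0.2, 0.1])
x = np.zeros(n)
for s in range(3, n):
    x[s] = phi_true @ x[s - 3 : s][::-1] + rng.normal(scale=0.5)
t = np.arange(n, dtype=float)
y = 10.0 + 0.3 * t + x

b0, b1 = fit_linear_trend(t, y)           # step 1: long-term trend
x_hat = y - (b0 + b1 * t)                 # step 2: detrended series
phi_hat, sigma_hat = fit_ar(x_hat, p=3)   # step 3: AR fit on the residuals
```

Because the trend is fitted once and never revisited, any outliers in the observations feed directly into both the trend and the AR estimates.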

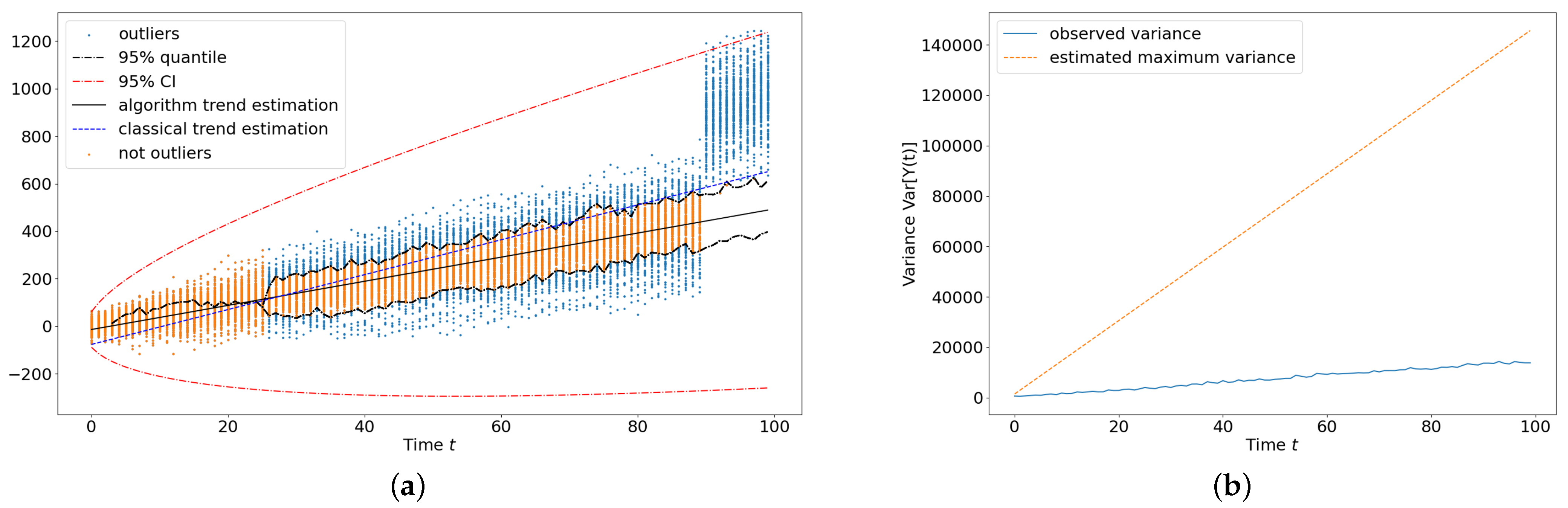

To estimate the trend, we propose the following iterative procedure. Let represent the observed time series. The iteration steps are as follows:

In this case, the parametric function

is a stationary function that does not change over time, while the error term

is non-stationary, with variance changing over time. Additionally, by taking the variance of both sides of Equation (

8), we obtain

where

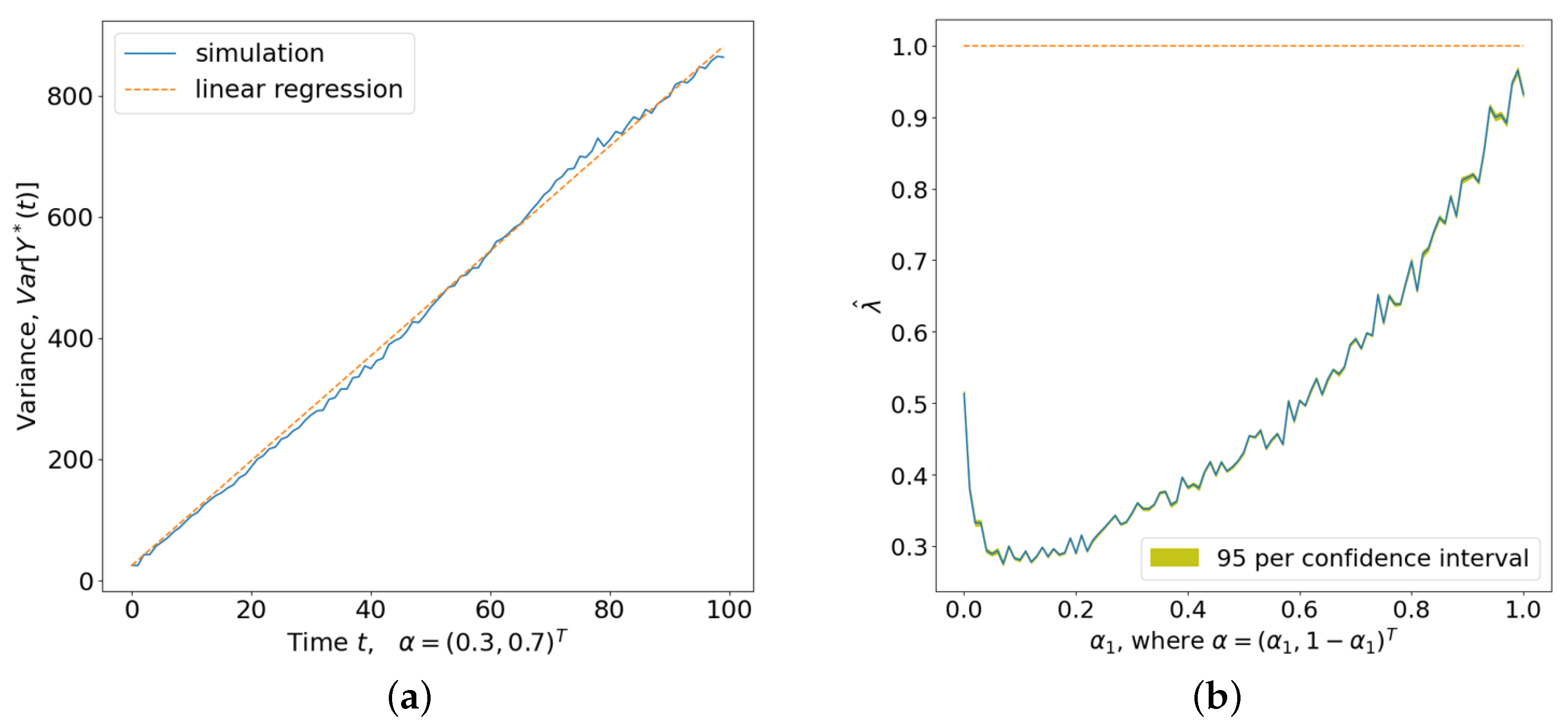

. Under these assumptions, we can now state the following theorem.

Theorem 1. Let Assumptions 1 and 2 hold. The variance of is given bywhere . With Theorem 1, the proposed method can be applied to non-stationary time series scenarios where variance changes over time. The proof of Theorem 1 is provided in

Appendix A, and the empirical conclusions are discussed in the simulation study section. The following inequality follows from Theorem 1.

This inequality implies that the variance of

is finite for a fixed

t. Hence, as the iteration of

progresses, it becomes stationary over extended prediction times

t, filtering the non-stationary error terms whose variance changes over time. Therefore, given the form of

, the observed data

, and the prediction horizon

t, we can construct an MCMC algorithm to estimate the trending function

.
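To illustrate how a variance can change over time yet remain bounded, consider the simplest special case: an AR(1) short-term trend started from a fixed value. This is an illustrative assumption, not the general statement of Theorem 1; the symbols $\phi$, $\sigma^2$, and the starting value $x_0 = 0$ are chosen only for this example.

$$
x_t = \phi x_{t-1} + \varepsilon_t,\quad x_0 = 0,\quad 0 < \phi < 1
\;\Longrightarrow\;
x_t = \sum_{k=0}^{t-1} \phi^{k} \varepsilon_{t-k},
$$

$$
\operatorname{Var}(x_t) = \sigma^2 \sum_{k=0}^{t-1} \phi^{2k}
= \sigma^2 \, \frac{1 - \phi^{2t}}{1 - \phi^{2}}
\le \frac{\sigma^2}{1 - \phi^{2}} .
$$

In this special case the variance depends on $t$, so the series is not stationary, but it increases monotonically toward a finite limit.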

3.2. The MCMC Algorithm

Algorithm 1 presents the pseudo-code. In our experiment, we set

as a linear trend since the data collected from the UAS is obtained under consistent motion. However, in theory, this method can also be applied to non-linear cases.

| Algorithm 1 MCMC iteration algorithm |

- 1: Initialize the trending function .
- 2: for $i = 1, \dots, I$ do
- 3: Compute .
- 4: Estimate given .
- 5: Compute , the root mean squared error of , as the estimate of .
- 6: for $j = 1, \dots, J$ do
- 7: Simulate
- 8: Estimate
- 9: Compute the 95% quantile for the estimation.
- 10: if then
- 11: set as the outlier and remove it from the training data.
- 12: Determine , where .
- 13: After $I$ iterations, and when the $k$th iteration becomes stationary, where , compute the final prediction and the quantile intervals.

|

This algorithm performs two main tasks. First, it estimates the trending function. Second, it identifies outliers and non-stationary errors, removes them from the training data, and corrects the trending function.
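The two tasks can be sketched as a loop that detrends, fits the AR model, simulates, flags outliers, and refits the trend. The sketch below is a simplified interpretation, not the authors' implementation: it assumes a linear trend, a least-squares AR fit, normally distributed simulations, a pointwise 2.5%–97.5% percentile band as the outlier rule, and a trend refit on the observations minus each simulated short-term path. The function name `iterate_trend`, its parameters, and the test data are hypothetical.

```python
import numpy as np

def iterate_trend(t, y, p=1, n_iter=20, n_sim=200, seed=0):
    """Illustrative detrend / AR-fit / simulate / flag / refit loop (linear trend)."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    slope, intercept = np.polyfit(t, y, deg=1)           # initial trend: plain OLS
    for _ in range(n_iter):
        x = y - (intercept + slope * t)                  # detrended series
        # Lag matrix: the row for frame s holds x[s-1], ..., x[s-p].
        lags = np.column_stack([x[p - i : len(x) - i] for i in range(1, p + 1)])
        phi, *_ = np.linalg.lstsq(lags, x[p:], rcond=None)   # AR(p) coefficients
        ar_pred = lags @ phi
        sigma = np.sqrt(np.mean((x[p:] - ar_pred) ** 2))     # RMSE of the AR fit
        # Simulate the short-term component around its AR prediction.
        x_sim = ar_pred + rng.normal(scale=sigma, size=(n_sim, ar_pred.size))
        lo, hi = np.percentile(x_sim, [2.5, 97.5], axis=0)   # 95% percentile band
        keep = (x[p:] >= lo) & (x[p:] <= hi)                 # frames inside the band
        # Refit the line to y minus each simulated path, using kept frames only.
        coefs = np.polyfit(t[p:][keep], (y[p:] - x_sim)[:, keep].T, deg=1)
        slope, intercept = np.median(coefs, axis=1)          # median over simulations
    outlier = np.zeros(y.size, dtype=bool)
    outlier[p:] = ~keep                                      # first p frames never flagged
    return float(intercept), float(slope), phi, float(sigma), outlier

# Hypothetical data: linear trend, AR(1) noise, and three spurious detections.
rng = np.random.default_rng(42)
n = 400
t = np.arange(n, dtype=float)
x = np.zeros(n)
for s in range(1, n):
    x[s] = 0.5 * x[s - 1] + rng.normal()
y = 5.0 + 0.3 * t + x
spikes = [50, 150, 250]
y[spikes] += 30.0
intercept, slope, phi, sigma, outlier = iterate_trend(t, y, p=1, n_iter=20)
```

In this simplified version the AR coefficients and the RMSE are computed from all frames, so large outliers still inflate them; only the trend refit excludes flagged frames.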

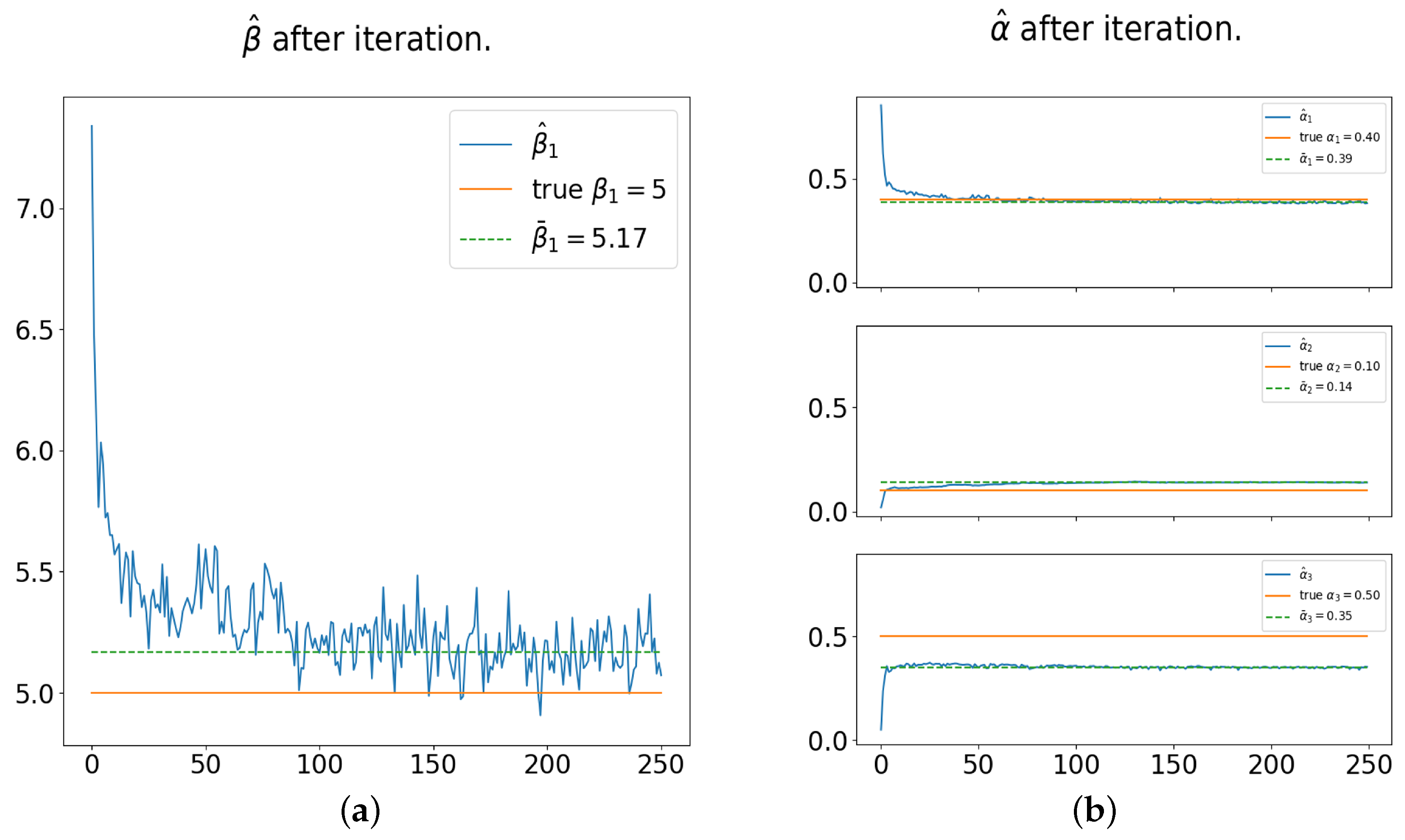

In Line 2 of Algorithm 1, the total number of iterations, $I$, should be set large enough to ensure that the estimated trend function has converged. In our simulation study, the estimates became stationary after 100 iterations. Therefore, empirically, we recommend setting $I$ between 150 and 250 iterations. In Line 6 of Algorithm 1, we set the for-loop parameter

since, according to our empirical (i.e., simulation) study, there is no significant difference when

. In Line 7, we assume

follows a normal distribution with mean

and standard deviation

, where

is the root mean squared error (RMSE) of

(see Line 5). In Line 12, we use the median to compute

, but it can also be determined by averaging over

J iterations of

. In this paper, the final model is

where the 95% Percentile Interval is given by the following formula:

6. Results

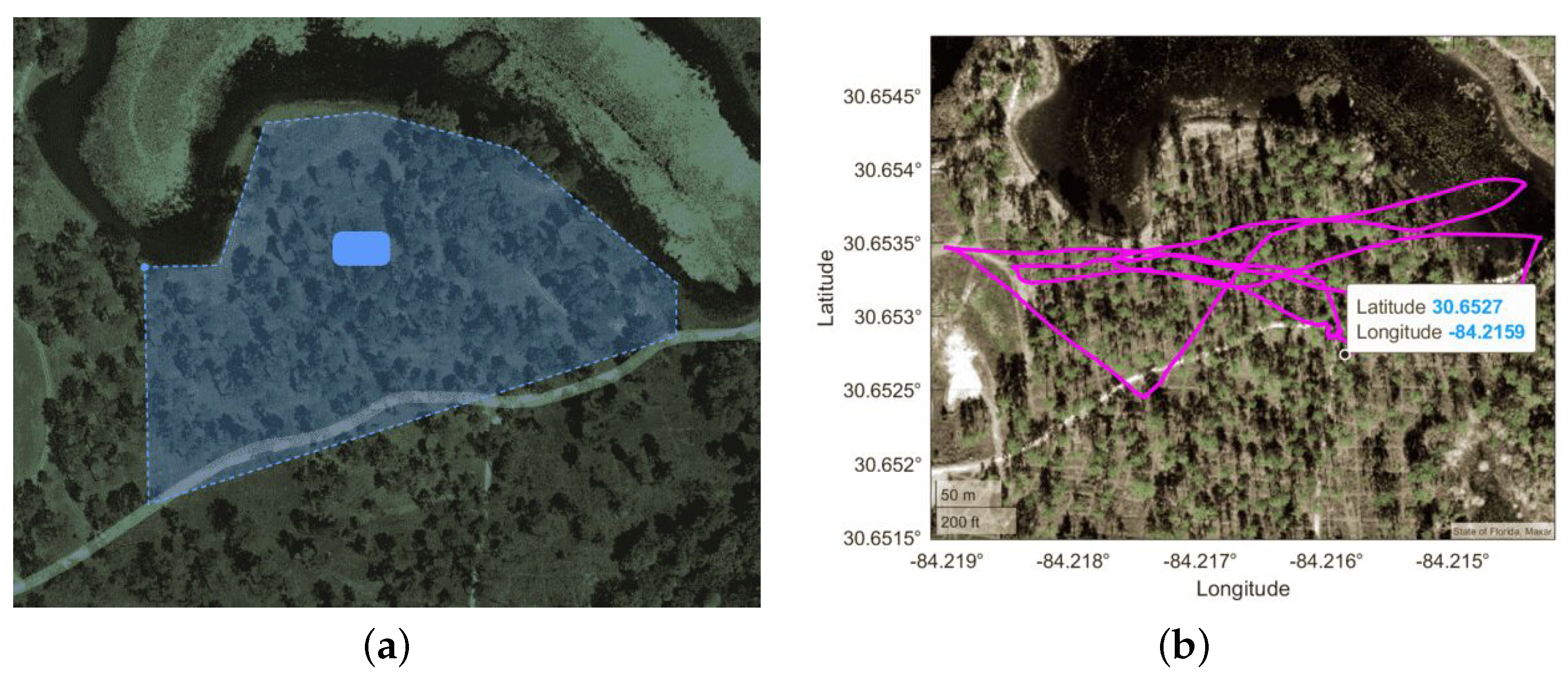

Based on the flight path of the UAS (see Figure 5b), we divide the video frames into three sections. The first section, Frames 29–500, corresponds to the UAS moving from the forest to the lake, during which YOLOv7 captures most of the smoke and generates the most consistent data. The second section, Frames 687–825, focuses on the lake, resulting in fewer instances of smoke and less data generated by YOLOv7. In the third section, Frames 3150–3600, the UAS returns from the lake to the forest. This section includes both the sky and smoke, causing YOLOv7 to occasionally misidentify clouds as smoke.

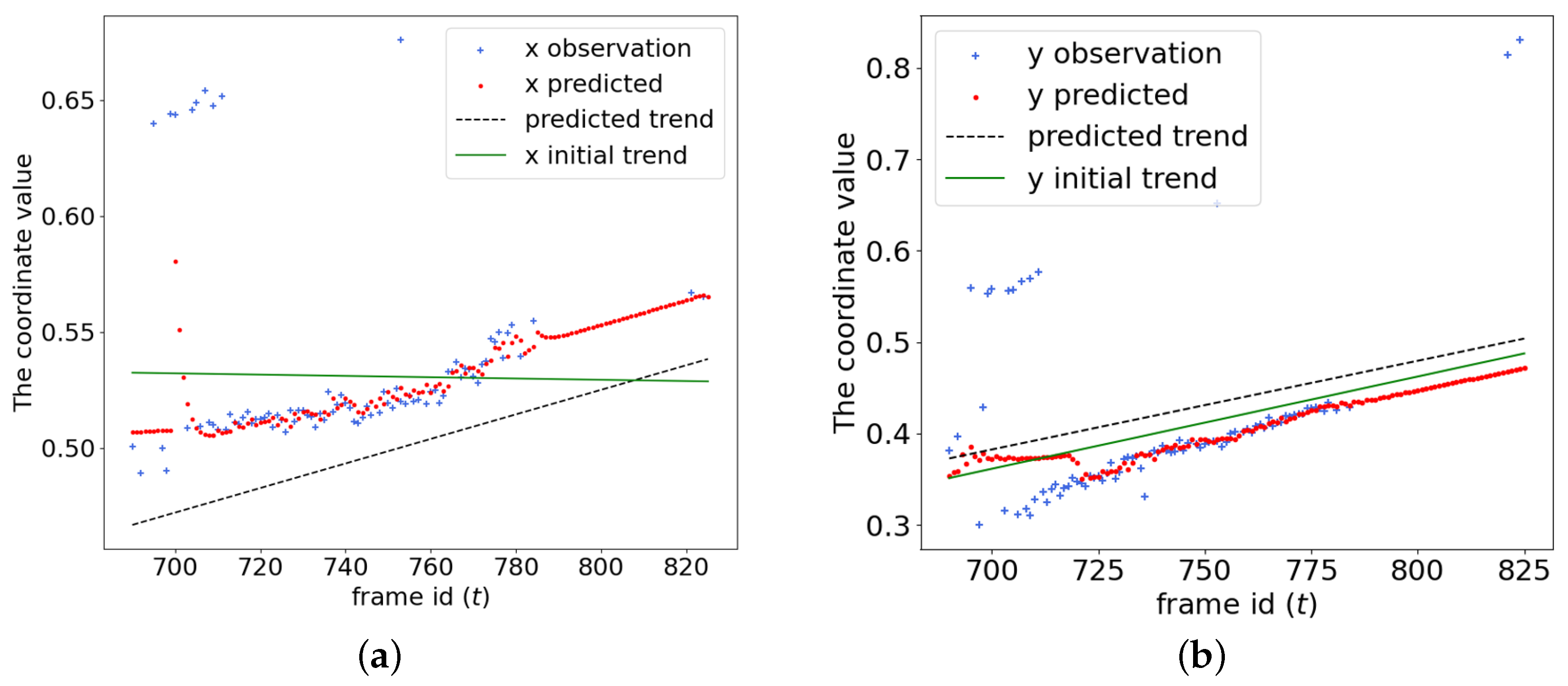

Table 4 presents the numerical results of the trend estimation. For the $x$-coordinate, there are significant differences between the classical detrending method and Algorithm 1, with our proposed method slightly outperforming the classical approach.

For the $y$-coordinate, no statistically significant differences were observed, as the 95% CIs of the classical method and the proposed method overlap. The outcome is also illustrated in Figure 7b, where the classical method is represented by the green solid line (initial $y$-trend), and the proposed method is represented by the black dashed line (predicted trend). Additionally, since the classical method treats the points from Frame 687 to 710 as outliers, it adheres more closely to the observed data and, consequently, predicts a different intercept, resulting in a slightly smaller root mean squared error (RMSE).

However, since both methods use only the time-related coefficient to predict the trend, and the proposed method is ultimately combined with the AR(3) model, discarding the predicted intercepts, the difference between the intercept estimates from the two methods is not significant.

Table 5 implies that the

estimated by both methods has no significant difference except

in the

y-coordinate.

Finally, we apply Algorithm 1 and identify three distinct trending patterns in three frame sections (29–500, 687–825, 3150–3600). The summary result is shown in Table 6. The inconsistent RMSE is due to changes in the motion of the observed data. The RMSE between Frames 687 and 825 is the smallest because, during this section, the UAS captured a constant wildfire while maintaining a constant speed. However, between Frames 3150 and 3600, the UAS captured the wildfire but also mistakenly identified some clouds as wildfire, causing the observations to become unstable and resulting in a higher RMSE.

7. Discussion and Future Works

There are two directions to further refine the algorithm in this paper. One is related to segmented regression in the time series. The main problem of segmented regression is determining the number of time segments (the non-identified case) [28]. With Algorithm 1, we can determine whether to start a new segment at time $t$ by the maximum confidence interval.

Another direction is to expand the applicability of the algorithm. As mentioned, the current constraint requires the parameters of the autoregressive function to be between 0 and 1, with their sum less than 1. Extending this constraint to the L1 norm, however, may not guarantee a stationary result. Moreover, since the data collected from the UAS is obtained under consistent motion, the algorithm is applied with a linear trend. While the trend function could, in theory, take any form, further studies are needed to explore this possibility. Additionally, in this paper, we employ the HMM-based framework solely to integrate the proposed trend estimation method with YOLOv7, without altering the HMM method itself. Therefore, the proposed approach can be further enhanced by incorporating Bayesian updates to fully leverage the capabilities of the HMM.