Abstract

Object detection is a fundamental task in computer vision, aiming to identify and localize objects of interest within an image. Over the past two decades, the domain has changed profoundly, evolving into an active and fast-moving field while simultaneously becoming the foundation for a wide range of modern applications. This survey provides a comprehensive review of the evolution of 2D generic object detection, tracing its development from traditional methods relying on handcrafted features to modern approaches driven by deep learning. The review systematically categorizes contemporary object detection methods into three key paradigms: one-stage, two-stage, and transformer-based, highlighting their development milestones and core contributions. The paper provides an in-depth analysis of each paradigm, detailing landmark methods and their impact on the progression of the field. Additionally, the survey examines some fundamental components of 2D object detection such as loss functions, datasets, evaluation metrics, and future trends.

Keywords:

object detection; deep learning; convolutional neural networks; transformer; computer vision MSC:

68T45; 68U05; 68U10; 65D18; 68T07

1. Introduction

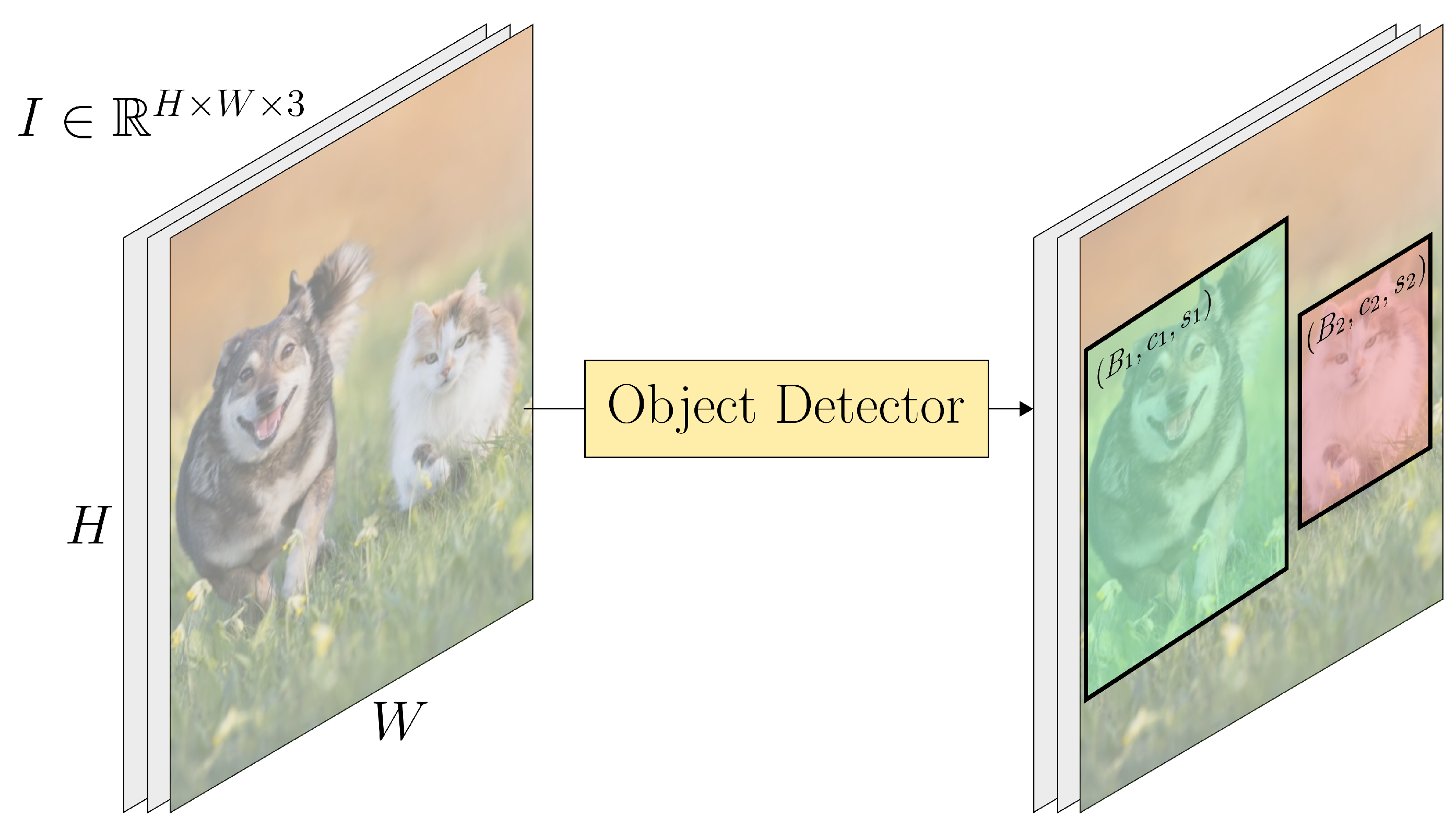

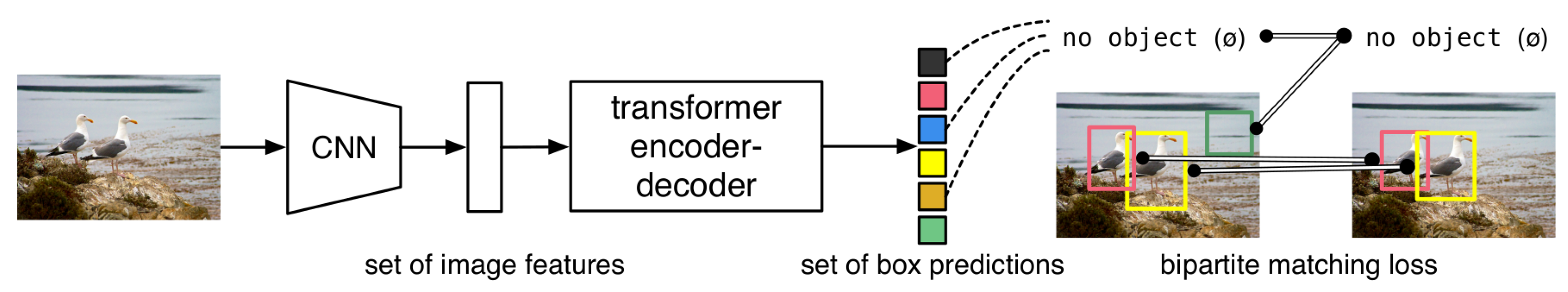

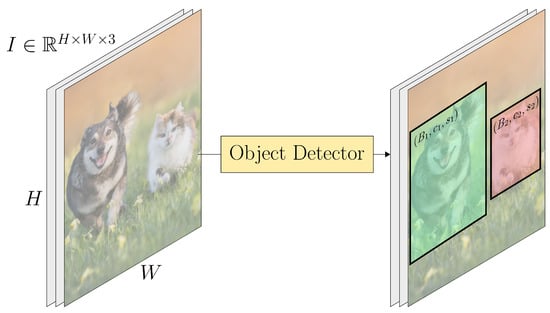

Object detection is a fundamental task in computer vision that involves two sub-tasks: the localization and classification of objects belonging to predefined categories of interest. Object detection can be formally described as follows. Let be an RGB image of height H and width W, and let C denote the total number of classes of interest. The goal is to identify all instances of these classes in I by predicting a set of bounding boxes, each paired with a class label and a confidence score. Specifically, the detector outputs a set where are the coordinates of the bounding box, is the predicted class and is the model’s confidence in the prediction (see Figure 1).

Figure 1.

Object detection pipeline. The model processes an input image and detects instances of predefined object classes (here, dog and cat), predicting bounding boxes, class labels, and confidence scores.

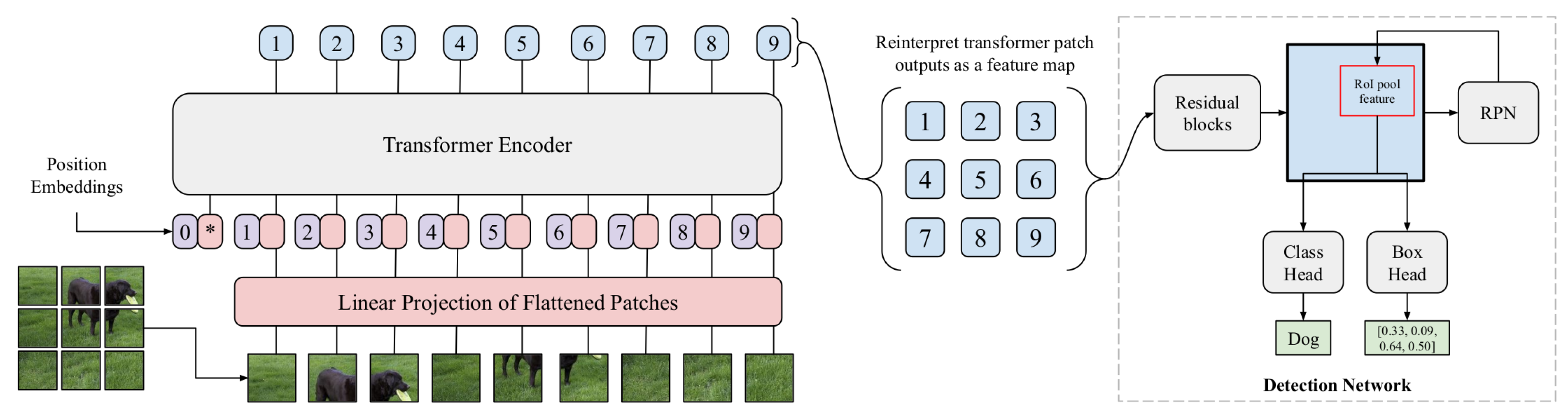

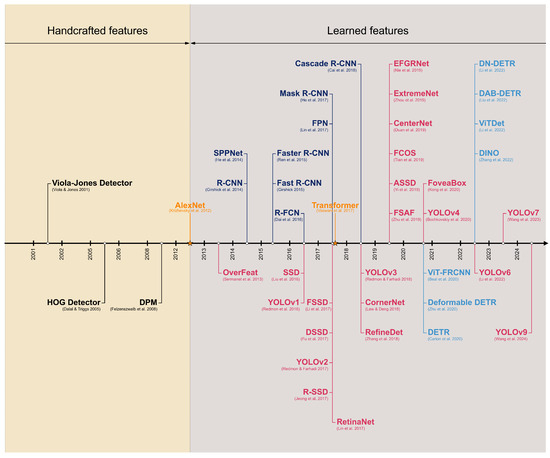

Over the last twenty-five years, object detection has undergone significant transformations following the broader advancements in computer vision as a whole. In its early stages, object detection relied on manually crafted features, such as Histogram of Oriented Gradients (HOG) [1], Scale-Invariant Feature Transform (SIFT) [2,3], and others. Early detectors, such as Viola–Jones [4,5], though impressive for their time, faced challenges like high computational costs and limited generalization capabilities. The success of convolutional neural networks (CNNs) with the introduction of AlexNet [6] in 2012 marked a definitive shift toward deep learning-based methods for object detection and, more broadly, for computer vision. The introduction of R-CNN (Regions with CNN features) [7] in 2014, and the subsequent R-CNN series, exemplified this shift. The deep learning era has been marked by an unprecedented emergence and growth of various object detection methods, driven by increasing computational capabilities, the availability of large-scale datasets, and advancements in other vision tasks. Various detector paradigms and families have since established themselves, as outlined in the milestone timeline shown in Figure 2. Modern object detection frameworks are predominantly categorized into three paradigms: two-stage, one-stage, and transformer-based detectors.

- Two-stage: these detectors, epitomized by the R-CNN [7] series, decouple the region proposal generation from classification and location refinement.

- One-stage: prioritizing inference speed and efficiency, detectors such as YOLO [8] and SSD [9] streamline the detection pipeline by eliminating the proposal generation stage.

- Transformer-based: exemplified by DETR [10], these detectors rely on the self-attention mechanism introduced in transformer architecture [11] to capture global context across the entire image, and they currently define the dominant trend in state-of-the-art 2D object detection.

In the following sections, this survey reviews the evolution of 2D generic object detection, analyzing its main paradigms, tracing their historical development, and systematically structuring key advancements in the field.

In summary, this survey presents the following key contributions: (1) It provides a comprehensive historical perspective on 2D object detection, tracing its evolution from handcrafted feature-based methods to modern deep learning-based approaches. (2) It presents a systematic categorization of deep learning-based object detection methods into three major paradigms—two-stage, one-stage, and transformer-based detectors—highlighting their milestones and technical advancements. (3) It provides an in-depth analysis of core components of object detection, including loss functions, datasets, and evaluation metrics. (4) It identifies open research challenges, including generalization across domains, scalability to novel object categories without extensive labeled data, and computational efficiency, which are further explored in Section 3.4. These contributions aim to provide a structured reference for researchers, consolidating the historical development and technical evolution in 2D object detection.

Figure 2.

Milestones of 2D generic object detection. AlexNet [6] marks the transition from traditional methods, based on handcrafted features, to deep learning-based approaches, based on learned features. Among the latter, three distinct colors identify the paradigms examined in this survey: blue for two-stage detectors, red for one-stage detectors, and light blue for transformer-based detectors, based on the transformer architecture [11], represented on the timeline. Milestone detectors in this figure: Viola–Jones Detector [4,5], HOG Detector [1], DPM [12], R-CNN [7], SPPNet [13], Fast R-CNN [14], Faster R-CNN [15,16], R-FCN [17], FPN [18], Mask R-CNN [19], Cascade R-CNN [20,21], OverFeat [22], SSD [9], DSSD [23], R-SSD [24], FSSD [25], RefineDet [26], EFGRNet [27], ASSD [28], RetinaNet [29], CornerNet [30], CenterNet [31], ExtremeNet [32], FCOS [33], FoveaBox [34], FSAF [35], YOLOv1 [8], YOLOv2 [36], YOLOv3 [37], YOLOv4 [38], YOLOv6 [39], YOLOv7 [40], YOLOv9 [41], DETR [10], Deformable DETR [42], DAB-DETR [43], DN-DETR [44], DINO [45], ViT-FRCNN [46], ViTDet [47].

Figure 2.

Milestones of 2D generic object detection. AlexNet [6] marks the transition from traditional methods, based on handcrafted features, to deep learning-based approaches, based on learned features. Among the latter, three distinct colors identify the paradigms examined in this survey: blue for two-stage detectors, red for one-stage detectors, and light blue for transformer-based detectors, based on the transformer architecture [11], represented on the timeline. Milestone detectors in this figure: Viola–Jones Detector [4,5], HOG Detector [1], DPM [12], R-CNN [7], SPPNet [13], Fast R-CNN [14], Faster R-CNN [15,16], R-FCN [17], FPN [18], Mask R-CNN [19], Cascade R-CNN [20,21], OverFeat [22], SSD [9], DSSD [23], R-SSD [24], FSSD [25], RefineDet [26], EFGRNet [27], ASSD [28], RetinaNet [29], CornerNet [30], CenterNet [31], ExtremeNet [32], FCOS [33], FoveaBox [34], FSAF [35], YOLOv1 [8], YOLOv2 [36], YOLOv3 [37], YOLOv4 [38], YOLOv6 [39], YOLOv7 [40], YOLOv9 [41], DETR [10], Deformable DETR [42], DAB-DETR [43], DN-DETR [44], DINO [45], ViT-FRCNN [46], ViTDet [47].

2. 2D Object Detectors

2.1. Handcrafted Features

Early pioneering works in the field of object detection, dating back to the early 2000s, relied heavily on handcrafted features, i.e., manually designed characteristics used to extract discriminative information from objects. Examples of feature extraction methods include Integral Image [4,5], Histogram of Oriented Gradients (HOG) [1], Scale-Invariant Feature Transform (SIFT) [2,3], and others. These features were combined with traditional classification models like the Support Vector Machine (SVM) (and its generalizations such as LSVM [12], SO-SVM [48,49]), or AdaBoost [50]. Notable examples of that time include the Viola–Jones detector [4,5], HOG-based detectors [1], and the deformable part-based model (DPM) [12].

- Viola–Jones Detector

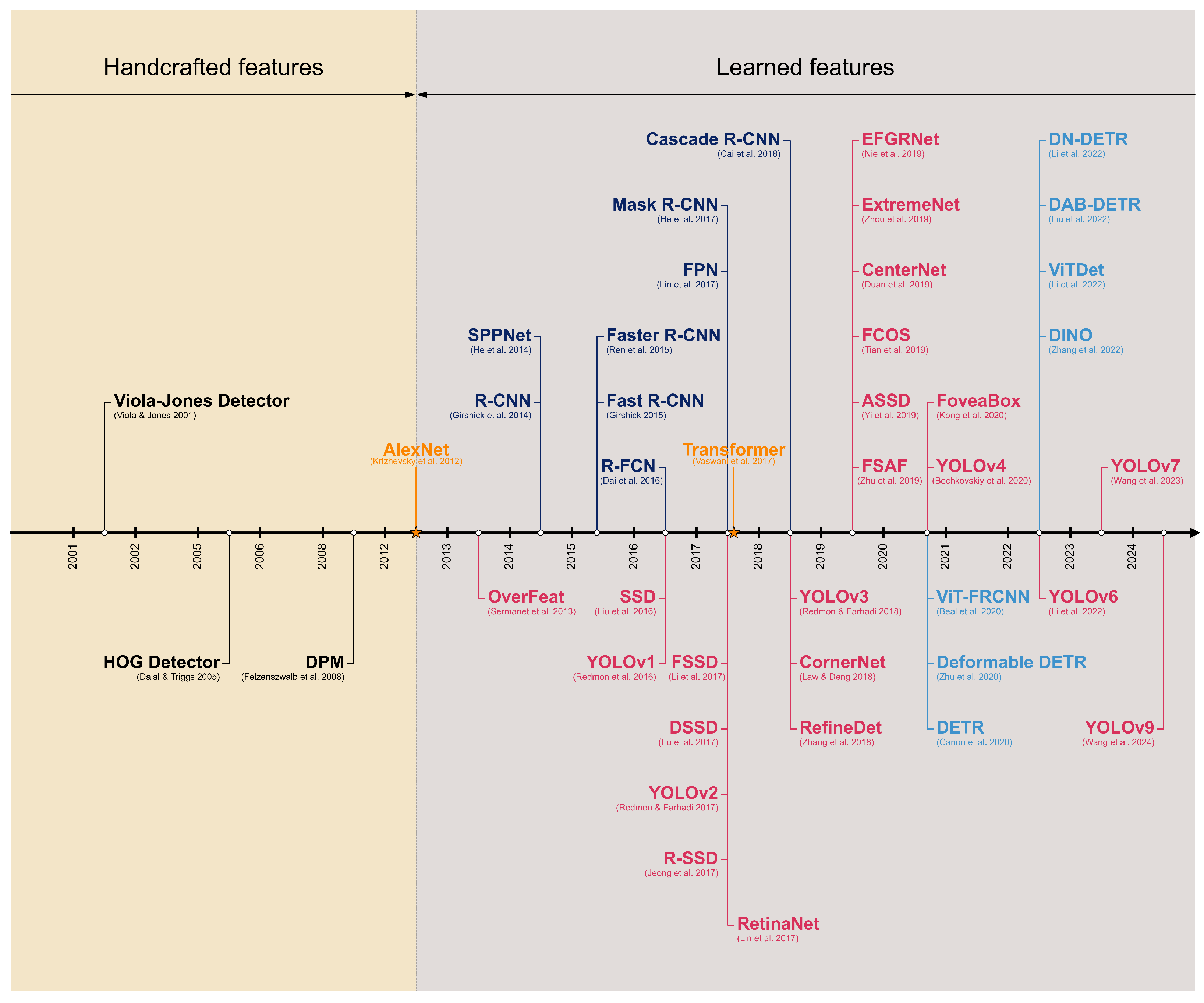

In 2001, P. Viola and M. J. Jones achieved the real-time detection of human faces for the first time without any auxiliary information (e.g., skin color segmentation, image differencing) [4,5]. The detector was at least 15 times faster than the best algorithms of its time ([51,52,53,54,55]) under comparable detection accuracy: operating on pixel single grayscale images, frontal upright faces were detected at 15 FPS on a conventional 700 MHz Intel Pentium III. The Viola–Jones detector employs a direct approach to detection, specifically, a sliding window technique: scanning all potential positions and scales within an image to identify whether any sub-window contains a human face. Three main advancements characterize their work. The first is the introduction of a new image representation called Integral Image, which allows the rectangular features (see Figure 3) used by the detector to be computed in a very efficient way; each pixel sub-window of the image has associated over 180,000 rectangular features. The second is a learning algorithm based on AdaBoost [50], which selects a small set of previous features and yields extremely efficient classifiers. The third contribution is a method for combining increasingly more complex classifiers in a 38-stage cascade, which allows background regions of the image to be quickly discarded while spending more computation on promising object-like regions. The authors trained their detection system on 4916 hand-labeled faces, randomly crawled on the web, and tested it on the MIT+CMU frontal face test set [52]. Subsequently, the authors successfully adapted their method to pedestrian detection, as detailed in their later work [56].

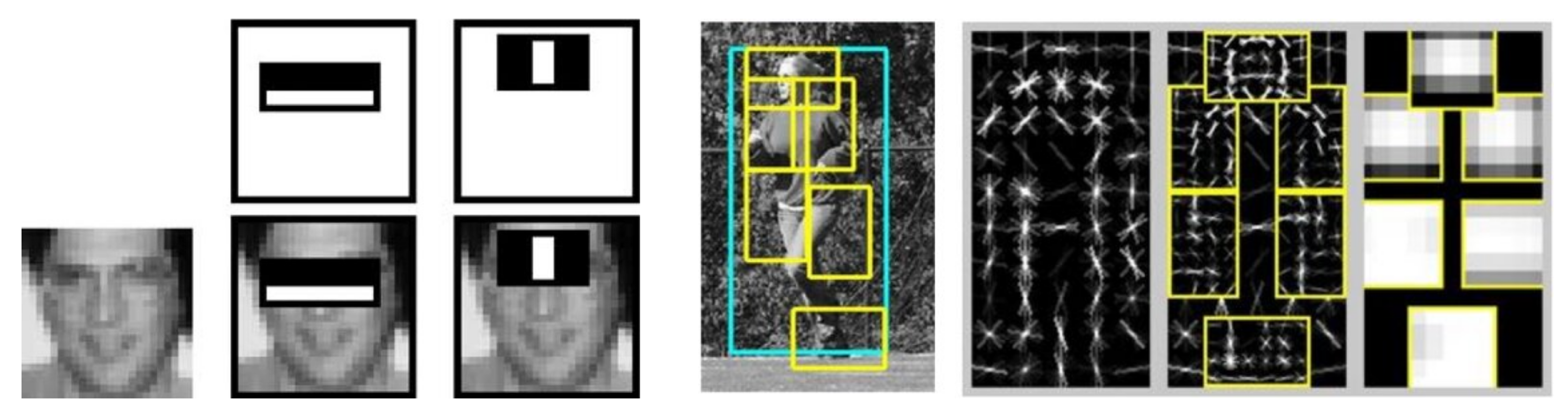

Figure 3.

On the left: the first and second rectangular features selected by AdaBoost [50] in Viola–Jones detector [4,5]. The two features are shown in the top row and then overlaid on a typical training face in the bottom row. On the right: an example detection obtained using the deformable part-based model (DPM) [12]. The model consists of a coarse template (blue rectangle), several higher-resolution part templates (yellow rectangles), and a spatial model that defines the relative location of each part.

- HOG

In 2005, N. Dalal and B. Triggs presented the Histogram of Oriented Gradients (HOG) feature descriptor [1]. HOG descriptors are reminiscent of edge orientation histograms [57,58], SIFT descriptors [3], and shape contexts [59]. The approach consists of dividing the image into a grid of uniform spatial regions, each being pixels and called cells, for each cell accumulating a local 1D histogram (nine orientation bins of 0°–180°) of unsigned gradient ( kernels) directions or edge orientations over the pixels of the cell; the combined histogram entries constitute the feature representation. Moreover, to enhance the illumination invariance, local contrast L2-Hys normalization is applied to each cell using larger spatial regions, each being pixel and called blocks; these normalized descriptor blocks are the HOG descriptors. The detection system is completed by covering a pixels detection window with a dense and overlapping grid of HOG descriptors and feeding the combined feature vector to a soft linear SVM. In terms of efficiency, the detector was able to process a pixel image in less than a second. The authors’ interests were mainly focused on human detection and, in particular, on pedestrian detection, though the descriptor performs equally well for other shape-based object classes: they tested their detection system on the MIT pedestrian test set [60,61] and on INRIA, a new pedestrian dataset introduced by the authors.

The HOG feature descriptor has been the basis of numerous subsequent detectors such as DPM [12,62], as well as other approaches that combine HOG with LBP [63,64,65], detectors based on related descriptors such as HSC [66], and others [67,68].

- Deformable Part-Based Model (DPM)

In 2008, P. Felzenszwalb et al. presented DPM [12]. The basic concept of part-based models is that objects can be modeled by parts in a deformable configuration [69,70,71,72,73,74,75,76,77,78]. In particular, in the DPM context, a model for an object consists of a global root filter, covering an entire detection window, and several part models: each part model specifies a spatial model and a part filter (see Figure 3). The spatial model defines a set of allowed placements for a part relative to a detection window and a deformation cost for each placement. The score of a detection window is the score of the root filter on the window plus the sum over parts of the maximum, over placements of that part, of the part filter score on the resulting sub-window minus the deformation cost. Both root and part filters are scored by computing the dot product between a filter and a sub-window of the HOG-based [1] feature pyramid. The features for the part filters are computed at twice the spatial resolution of the root filter. The model is defined at a fixed scale, and the objects are detected by searching over an image pyramid [79]. The training process makes use of a generalization of SVMs, called latent variable SVM (LSVM), a reformulation of MI-SVM [80] in terms of latent variables. When DPM was introduced, it became the new state of the art in object detection: teams led by Felzenszwalb were joint winners of the VOC-2008 [81] and VOC-2009 [82] challenges, and it will be the reference method until VOC-2012 [83].

Later, the star-structured deformable part models in [12] were further improved in numerous subsequent papers: in [84], a mixture of deformable part-based models, built on the pictorial structures framework (see [73,75]), was introduced to overcome the limits of a single deformable model: the score of a mixture model at a particular position and scale is the maximum over components of the score of that component model at the given location. In [62], the authors built a cascade for deformable part-based models using a hierarchy of models defined by an ordering of the original model’s parts; the algorithm prunes partial hypotheses using PAA (Probably Approximately Admissible) thresholds on their scores. This approach achieved a significant increase in efficiency, yielding a speedup of approximately 22 times. The cascade algorithm applies to star-structured and grammar models (see [85]). R. Girshick further developed object detection with grammar models in [86,87]. DPM has inspired numerous works in later years, such as [88,89,90,91,92] and UDS [93].

Other pioneering detectors of that period were based on SIFT [2,3] descriptors, such as [94] and ESS [95], or on their generalizations, such as the color-SIFT descriptors [96] used in Selective Search [97], or PCA-SIFT descriptors [98]. Still, other detectors used additional feature extractors such as Integral Channel Features (ICFs) [99,100,101] or Haar-like wavelets [60,61,102,103], shape contexts descriptors [59], Hough transform [104,105,106] or Regionlets representation [107], or extensive combinations of different features, as in Oxford-MKL [108]. However, while these traditional detectors effectively addressed early challenges in object detection and yielded impressive results for their time, they also encountered significant limitations, including high computational complexity and restricted generalization capabilities.

2.2. Learned Features

Pioneering works in neural network-based detection date back to the early 1990s and were primarily focused on face detection. In 1994, R. Vaillant et al. [109] presented a face detection system based on a convolutional neural network. In subsequent years, 1995, 1996, and 1998, H. A. Rowley et al. [52,110,111] used neural networks to detect upright frontal faces in an image pyramid. The true turning point occurred in 2012 with the introduction of AlexNet by A. Krizhevsky et al. [6]; their deep convolutional neural network won the ILSVRC-2012 [112], significantly outperforming traditional approaches. AlexNet’s success demonstrated the potential of deep learning for image classification, marking the beginning of its application to other vision tasks, including object detection, where traditional methods were simultaneously reaching their performance limits. In the following sections, deep learning-based detectors have been divided into three main categories: one-stage, two-stage, and transformer-based detectors.

2.2.1. Two-Stage Detectors

Two-stage detectors are a class of object detectors that separate the detection process into two distinct stages. In the first stage, the model generates region proposals, i.e., regions in the image likely to contain objects. These proposals are then passed to the second stage, where they are refined for both classification and localization, determining the object class and bounding box coordinates.

R-CNN Series

Among two-stage detectors, the most influential family is the R-CNN series; this series began in 2014 with R-CNN by R. Girshick et al. [7] which was among the earliest applications of deep learning to object detection and, of these early works, stands out as the most significant. In the following sections, the milestones of this family will be discussed in detail.

- R-CNN

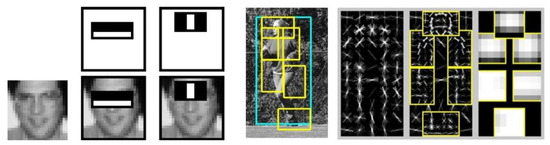

In 2014, R. Girshick et al. proposed R-CNN (Regions with CNN features) [7]. R-CNN consists of three modules, as shown in Figure 4. The first step is to generate around 2000 class-independent bottom-up region proposals using Selective Search [97,113]: these proposals, which are of arbitrary scale and size, define the set of candidate detections available to the detector. The second step is feature extraction: AlexNet [6], pre-trained on ILSVRC-2012 [112] and then fine-tuned on specific proposals, is used to extract a feature vector from each region proposal, cropped and warped to the network input size. The fine tuning is a -way classification problem, with C object classes, plus one for background. The third step classifies each region using class-specific linear SVMs: the presence or absence of an object within each region is predicted. At this stage, at test time, a class-specific greedy NMS (Non-maximum Suppression) is applied to all scored regions. Finally, to improve the localization performance, additional class-specific bounding-box regressors are applied to refine the bounding boxes of detected objects, using CNN proposal features.

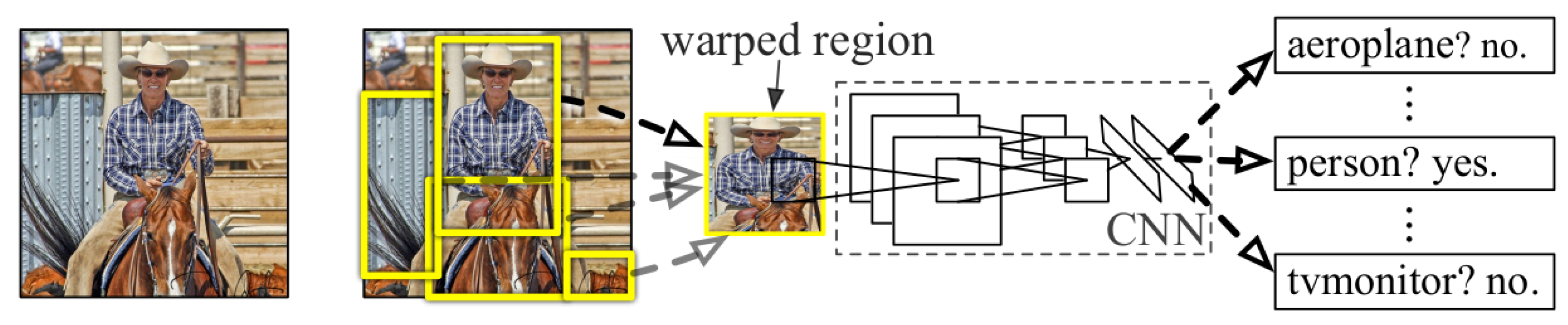

Figure 4.

The architecture of R-CNN [7], which, in order, takes an input image, extracts around 2000 bottom-up region proposals, computes features for each proposal using a backbone, and then classifies each region using class-specific linear SVMs.

R-CNN can be considered a significant milestone in the object detection field: it proves that pre-training CNN on a large classification dataset and then fine-tuning it on domain-specific data can greatly enhance performance on downstream tasks such as detection. Despite that, the main drawback is efficiency, affecting both the training phase, which involves a lengthy multi-stage procedure, and especially the inference phase.

- SPPNet

In 2014, in the same year as R-CNN [7], K. He et al. presented the Spatial Pyramid Pooling Network (SPPNet) [13]. The authors were the first to introduce an SPP layer in the context of CNNs in order to remove the fixed-size constraint of the network. Spatial pyramid pooling (SPP) [114,115], which can be considered an extension of the Bag-of-Words (BoW) model [116], partitions the image into divisions from finer to coarser levels, and aggregates local features in them. SPP is able to generate a fixed-length output regardless of the input size. In the context of object detection, SPPNet [13] can be used to extract feature maps from the entire image only once, possibly at multiple scales. The SPP layer is then applied to each candidate window of the feature maps to pool a fixed-length representation of this window. In other words, the proposed method extracts window-wise features from regions of the feature maps, while R-CNN [7] extracts directly from image regions. Because time-consuming convolutions are applied only once, rather than for each region proposal, this approach can run orders of magnitude faster than R-CNN [7].

Although SPPNet [13] has improved detection speed, it still requires multi-stage training; moreover, to speed up and simplify the training, it fine-tunes only the fully connected layers while fixing the convolutional layers, which may limit the overall accuracy.

- Fast R-CNN

In 2015, R. Girshick proposed Fast R-CNN [14]. The idea is to fix the disadvantages of R-CNN [7] and SPPNet [13], while improving their speed and accuracy. Fast R-CNN takes as input an image and multiple regions of interest (RoIs). The network first processes the whole image with several convolutional and max pooling layers to produce a convolutional feature map. Then, for each object proposal, a RoI pooling layer extracts a fixed-length feature vector from the feature map. Each feature vector is fed into a sequence of fully connected layers that finally branch into two output layers: one that produces softmax probability estimates over C object classes plus a catch-all background class, and another layer that outputs four real-valued numbers for each of the C object classes. Each set of four values encodes refined bounding-box positions for one of the C classes. The RoI pooling layer converts features inside any valid region of interest into a small feature map with a fixed spatial extent by dividing the RoI window into a grid of sub-windows and applying max pooling to each sub-window. Pooling is applied independently to each feature map channel, as in standard max pooling.

Fast R-CNN has several advantages, including faster single-stage training through feature sharing and a multi-task loss, along with a faster inference. However, its detection speed is still limited by the object proposal process, motivating the development of methods such as Faster R-CNN [15,16] to integrate proposal generation within the network.

- Faster R-CNN

In 2015, shortly after Fast R-CNN [14], S. Ren et al. presented Faster R-CNN [15,16] to overcome the dependency on region proposal algorithms. At that time, region proposal algorithms had become the real bottleneck of object detection pipelines at the test stage: methods like Selective Search [97,113], based on grouping super-pixels, or more recent ones, such as EdgeBoxes [117], based on sliding windows, still consume as much time as the detection network and both rely on CPU computation, not taking advantage of GPUs. Faster R-CNN is composed of two modules: the first module is a deep fully convolutional network (FCN) [118], called region proposal network (RPN), which proposes regions, and the second module is Fast R-CNN [14] that uses the proposed regions. The idea is that the convolutional feature maps used by region-based detectors can also be used for generating region proposals. To generate regions, the RPN slides over the convolutional feature map output by the last shared convolutional layer, taking as input an spatial window. Each sliding window is mapped to a lower-dimensional feature and then fed into two sibling fully connected layers, a box regression layer and a box-classification layer. The RPN can be trained end to end specifically for the task of generating detection proposals. Furthermore, the RPNs introduced a novel scheme for addressing multiple scales and aspect ratios: anchor boxes. Specifically, the k proposals predicted by RPN, where k is the number of maximum possible proposals, are parameterized relative to k reference boxes, called anchors. An anchor is centered at the sliding window in question and is associated with a scale and aspect ratio. This approach is translation invariant, both in terms of the anchors and in terms of the functions that compute proposals relative to the anchors. Because of this multi-scale design based on anchors, convolutional features are computed on a single-scale image.

Faster R-CNN has profoundly influenced subsequent advancements in object detection, serving as the foundation for numerous extensions and refinements. For instance, by replacing the default VGG-16 [119] with ResNet-101 [120] and making other improvements, such as iterative box regression, context, and multi-scale testing, K. He et al. [120] obtained an ensemble model, which won the MS-COCO [121] 2015 and ILSVRC-2015 [112] object detection competitions. Subsequent works and improvements include Faster R-CNN by G-RMI [122], Faster R-CNN with TDM (top-down modulation) [123], Faster R-CNN with FPN [18], and Faster R-CNN with DCR (Decoupled Classification Refinement) [124]. Additionally, A. Shrivastava et al. [125] proposed to enhance the Faster R-CNN framework by integrating semantic segmentation for top-down contextual priming and iterative feedback.

- R-FCN

In 2016, J. Dai et al. presented R-FCN (Region-based Fully Convolutional Network) [17], a framework designed to improve the speed and efficiency of Faster R-CNN [15,16] by making all the learnable layers convolutional. Specifically, following Faster R-CNN [15,16], R-FCN extracts candidate regions using the region proposal network (RPN), which is a fully convolutional architecture in itself. The last convolutional layer produces a bank of position-sensitive score maps for each category: these score maps correspond to a spatial grid describing relative spatial positions and, partially inspired by [126], allows translation variance to be incorporated into FCN [118]. R-FCN ends with a position-sensitive RoI pooling layer which aggregates the score maps and generates scores for each RoI. With end-to-end training, the RoI layer shepherds the last convolutional layer to learn specialized position-sensitive score maps, with no convolutional/fully connected layers following, enabling nearly cost-free region-wise computation and speeding up both training and inference. At training time, OHEM (Online Hard Example Mining) [127] is adopted.

Subsequent works based on R-FCN include D-RFCN (Deformable R-FCN) [128], an enhancement of R-FCN based on Deformable Convolutional Network (DCNv1) [128] and deformable PSROI, D-RFCN + SNIP (Scale Normalization for Image Pyramids) [129], and LH R-CNN (Light Head R-CNN) [130] which reduces the cost in R-FCN by applying separable convolution to reduce the number of channels in the feature maps before RoI pooling.

- FPN

In 2017, T.-Y. Lin et al. proposed FPN (Feature Pyramid Network) [18], a framework designed to improve multi-scale object detection in a computationally efficient manner. Before FPN, feature pyramids built upon image pyramids [79] were the basic and standard solution in recognition systems to detect objects on different scales [79]. Computing features for each level of an image pyramid has obvious limitations in terms of memory and inference time; for these reasons, detectors like Fast R-CNN [14] and Faster R-CNN [15,16] avoid image pyramids by default. FPN addresses this challenge and computes multi-scale feature representations by leveraging the inherent pyramidal feature hierarchy of convolutional neural networks. FPN takes a single-scale image of an arbitrary size as input, and outputs proportionally sized feature maps at multiple levels, in a fully convolutional fashion. The strategy is general-purpose, and it is independent of the backbone architecture. The FPN builds the pyramid using three components: a bottom-up pathway, a top-down pathway, and lateral connections. The bottom-up pathway is the feed-forward computation of the backbone, which computes a feature hierarchy consisting of feature maps at several scales. The top-down pathway up-samples spatially coarser, but semantically stronger, feature maps from higher pyramid levels. These features are then enhanced with features from the bottom-up pathway via lateral connections: each lateral connection merges by element-wise addition feature maps of the same spatial size from the bottom-up pathway (after a convolutional layer to reduce channel dimensions) and the top-down pathway.

Over the years, numerous generalizations of the Feature Pyramid Network (FPN) have been proposed, including PFPNet [131], NAS-FPN [132], Auto-FPN [133], -FPN [134], and AugFPN [135].

- Mask R-CNN

In 2017, K. He et al. presented Mask R-CNN [19]. Mask R-CNN is an intuitive extension of Faster R-CNN [15,16] to handle instance segmentation [126,136,137,138,139,140,141,142]. Mask R-CNN adopts the same two-stage procedure, with an identical first stage (RPN). In the second stage, Mask R-CNN adds a third branch to predict segmentation masks (see Figure 5) on each region of interest (RoI), in parallel with the two branches for classification and bounding box regression. The additional mask output is distinct from the class and box outputs, requiring the extraction of much finer spatial layout of an object. Specifically, the mask branch has a -dimensional output for each RoI, which encodes C binary masks of resolution , one for each of the C classes. This pixel-to-pixel behavior requires the RoI features to be precisely aligned to preserve the explicit per-pixel spatial correspondence; the RoIPool (RoI pooling) layer, introduced in Fast R-CNN [14], or RoIWarp, introduced in MNC [141], overlooked this alignment issue. To address this, the authors proposed a novel RoIAlign layer that removes the harsh quantization of RoIPool, properly aligning the extracted features with the input. At test time, the mask branch is applied to the top 100 RoIs after NMS, adding a overhead compared to Faster R-CNN [15,16].

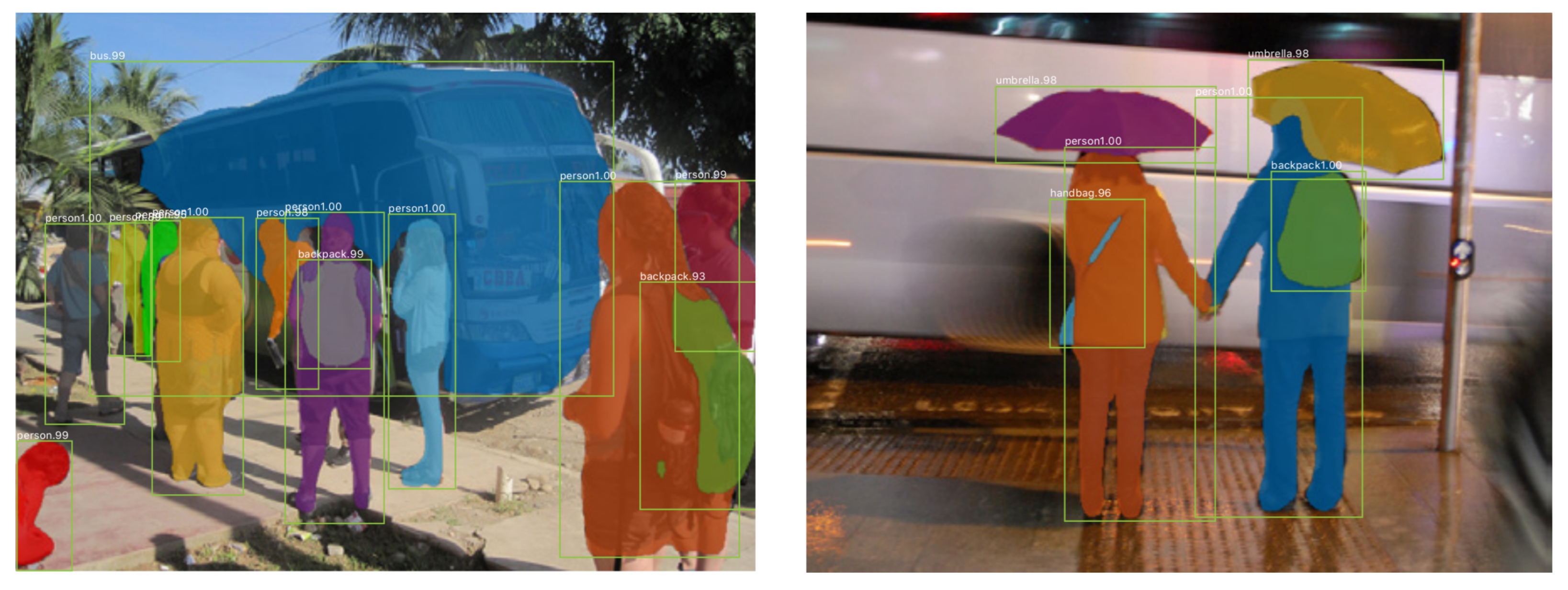

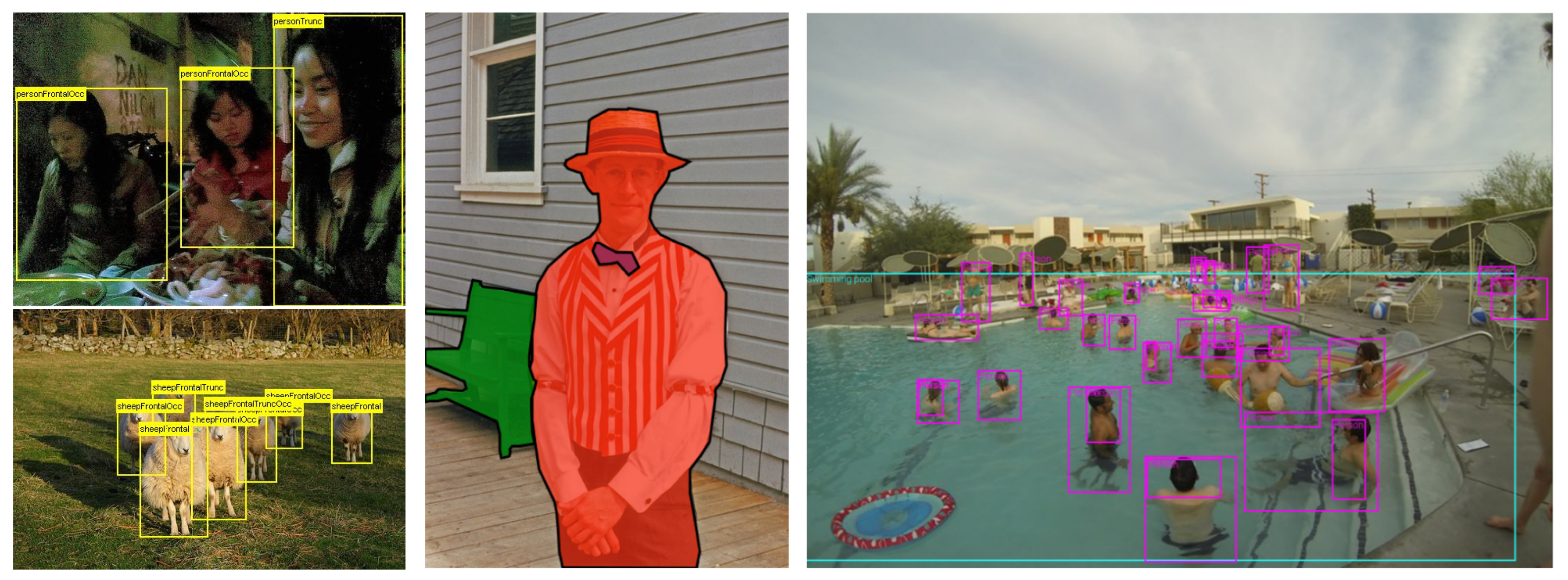

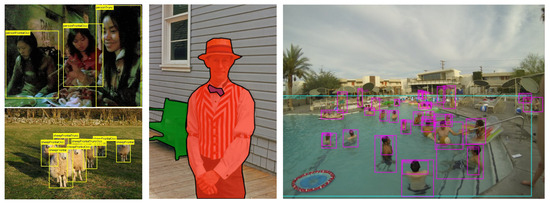

Figure 5.

Mask R-CNN results on the MS-COCO [121] dataset. For each image, masks are shown in color, along with the corresponding bounding boxes, category labels, and confidence scores.

- Cascade R-CNN

In 2018, Z. Cai et al. presented Cascade R-CNN [20,21], a multi-stage extension of R-CNN [7] that frames the bounding box regression task as a cascade regression problem, inspired by the works of cascade pose regression [143] and face alignment [144,145]. Cascade R-CNN uses a sequence of detectors trained with increasing IoU thresholds, to be sequentially more selective against close false positives. The cascade of R-CNN stages are trained sequentially, using the output of one stage to train the next; this is motivated by the observation that a bounding box regressor trained with a particular IoU tends to produce bounding boxes of higher IoU. Unlike earlier bootstrapping methods used in detectors such as Viola–Jones [4,5] or DPM [84], the resampling procedure does not aim to mine hard negatives. Instead, starting from proposals generated by the RPN in the first stage, it seeks to find a good set of close false positives for training the next stage, by adjusting bounding boxes. When operating in this manner, the number of positive examples remains roughly constant across successive stages, mitigating the overfitting, since positive examples are plentiful at all levels; additionally, the detectors of the deeper stages are optimized for higher IoU thresholds. At inference, the same cascade procedure is applied, enabling a closer match between the object proposals and the detector quality of each stage.

Other Two-Stage Detectors and Enhancements

The R-CNN series has been highly influential, inspiring a wide range of additional contributions that extend and specialize the milestones presented above. Notable examples include MR-CNN (Multi-Region CNN) [146], which captures object features across multiple regions and integrates semantic segmentation-aware features, and DeepID-Net [147], which introduces a deformation-constrained pooling layer and a specialized pre-training scheme. MS-CNN [148] combines multi-scale proposal generation with detection, HyperNet [149] introduces a Hyper Feature representation, and ION (Inside-Outside Net) [150] leverages context and multi-scale knowledge. A-Fast-RCNN (Adversarial Fast R-CNN) [151] enhances Fast R-CNN [14] by using an adversarial network to generate challenging examples with occlusions and deformations, IoU-Net [152] learns to predict the IoU between each detected bounding box and the matched ground truth, and TridentNet (Trident Network) [153] introduces a parallel multi-branch architecture with shared transformation parameters but with different receptive fields. Introduced in 2019, Libra R-CNN [154] addresses sample, feature, and objective imbalances, and, in the same year, X. Zhu et al. applied DCNv2 (Deformable ConvNets v2) [155], an improvement over DCNv1 [128], to Faster R-CNN [15,16] and Mask R-CNN [19]. In 2021, P. Sun et al. presented Sparse R-CNN [156] which replaces the traditional dense set of proposals with a fixed-length sparse set. Further contributions in the R-CNN series, or methods enhancing its framework, include SDS [136], R-CNN minus r [157], GBD-Net [158], NoC [159], MultiPath [160], AC-CNN [161], CoupleNet [162], MegDet [163], Feature Selective [164], PANet [165], OR-CNN [166], RelationNet [167], ME R-CNN [168], AutoFocus [169], DATNet [170], TSD [171], DetectoRS [172], and others [173].

Moreover, the R-CNN series includes several cascade-based approaches, in addition to Cascade R-CNN [20,21]. HTC (Hybrid Task Cascade) [174] improves Cascade Mask R-CNN [20,21] by proposing a new cascade architecture which combines box and mask branches for a joint multi-stage processing, while Cascade RCNN-RS [175] proposes a rescaled family of Cascade R-CNN [20,21] with improved speed–accuracy trade-offs. Other cascade-based approaches include CRAFT [176] and CC-Net [177].

Efforts to improve the performance of region proposal networks (RPNs) and the quality of region proposals include Cascade RPN [178], GA-RPN [179], LocNet [180], Attend Refine Repeat in AttractioNet [181], Subcategory-aware RPN [182], SharpMask [140], and others [183].

Other works following the two-stage paradigm, but not directly tied to the R-CNN series, include MultiBox [184] and its improvement MSC-MultiBox [185], FeatureEdit [186], and Deep Regionlets [187]. Beyond these, some two-stage detectors have also explored anchor-free paradigms, as detailed below.

- Anchor-Free Two-Stage Detectors

Although anchor-free methods are predominantly associated with one-stage detectors, some two-stage approaches have also adopted anchor-free paradigms. A detailed introduction to anchor-free methods, along with their categorization into keypoint-based and center-based, can be found in Section Anchor-Free Detectors.

Among keypoint-based detectors, DeNet, introduced by L. Tychsen-Smith et al. in 2017 [188], generates RoIs without using anchor boxes. Specifically, it first determines how likely it is each location belongs to either the top-left, top-right, bottom-left, or bottom-right corner of a bounding box and subsequently generates RoIs by enumerating all possible corner combinations, following the standard two-stage approach to classify each RoI. Other notable keypoint-based methods include Grid R-CNN [189], which replaces the standard regression branch with a grid-guided localization mechanism, and RPDet [190], which introduces RepPoints (Representative Points), a finer representation of objects as a set of sample points useful for both localization and recognition. In 2020, K. Duan et al. proposed CPN (Corner Proposal Network) [191] which first extracts a number of object proposals by finding potential corner keypoint combinations and then assigns a class label to each proposal by a standalone classification stage.

Among center-based detectors, in 2019, Z. Zhong et al. [192] proposed a text detection framework in which the anchor-based RPN in Faster R-CNN [15,16] is replaced with an anchor-free region proposal network (AF-RPN). In 2021, X. Zhou et al. introduced CenterNet2 [193], a probabilistic two-stage extension of the one-stage framework CenterNet [194].

We conclude this section on anchor-free methods by highlighting one additional approach. In 2021, H. Qiu et al. presented CrossDet [195], later extended to CrossDet++ [196], an anchor-free multi-stage object detection framework that diverges from traditional keypoint-based or center-based approaches by using a novel cross line representation: objects are represented as a set of horizontal and vertical cross lines, designed to capture continuous object information.

2.2.2. One-Stage Detectors

One-stage detectors are a class of object detection methods that streamline the detection pipeline by predicting both object locations and categories in a single step, bypassing the need for region proposal generation typical of two-stage detectors. Their single-step design makes them faster and more efficient in most cases. Among the earliest implementations of the one-stage paradigm are DetectorNet [197] and OverFeat [22], both introduced in 2013. DetectorNet [197] splits the input image into a coarse grid and frames the detection task as a regression problem to object bounding box masks; it adopts AlexNet [6] as backbone. We now discuss OverFeat [22] in more detail.

- OverFeat

In 2013, P. Sermanet et al. presented OverFeat [22], a unified framework for classification, localization, and detection. OverFeat can be considered one of the first one-stage object detectors and among the earliest attempts to integrate CNNs into an object detection pipeline. The backbone architecture is similar to AlexNet [6] but with differences, including non-overlapping pooling regions and the removal of contrast normalization. At each spatial location, the detection pipeline predicts bounding boxes, class labels, and confidence scores using a combination of a classifier and a regressor, which share the same feature extraction layers. At test time, OverFeat adopts a multi-scale and sliding window approach: by passing up to six scales of the original image through the network, it evaluates multiple contextual views. Bounding box predictions at any location and scale are combined via a greedy merge strategy, designed to be more robust to false positives than traditional NMS. Additionally, the authors perform negative training on the fly by selecting a few interesting negative examples per image, such as random or most offending ones. The architecture is available in two variants, fast and accurate, differing mainly in the stride of the first convolution, the number of stages, and the number of feature maps. OverFeat was the winner of the ILSVRC-2013 [112] localization competition and ranked third in the detection competition; in post-competition work, it achieved a new state of the art in this category.

OverFeat anticipates more successful one-stage architectures, such as SSD [9] and YOLO [8], but its reliance on sequential training for the classifier and regressor presents a significant drawback compared to later models.

SSD Series

Among one-stage detectors, one of the most influential families is the SSD series; this series was introduced in 2016 with the development of SSD (Single Shot MultiBox Detector) by W. Liu et al. [9], marking the first major success of the one-stage approach in deep learning-based object detection. SSD stood out as a landmark in efficiency and speed, paving the way for subsequent advancements in the one-stage detection paradigm. In the following sections, the milestones of this family will be discussed in detail.

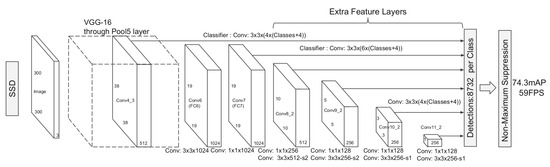

- SSD

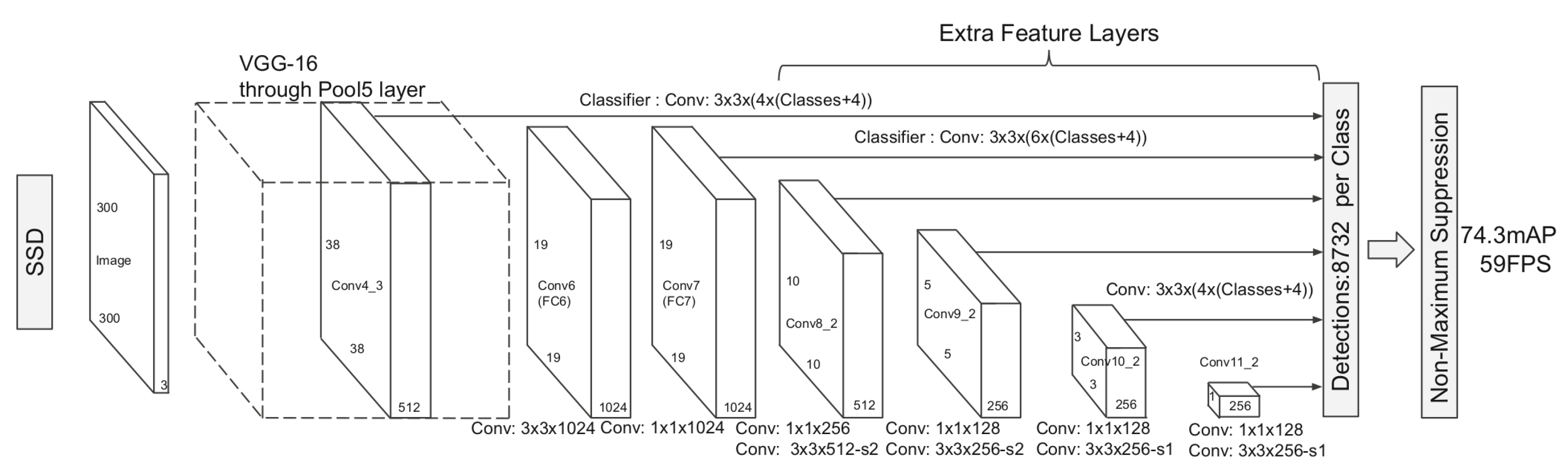

In 2016, W. Liu et al. presented SSD (Single Shot MultiBox Detector) [9]. SSD is based on a single feed-forward convolutional network that produces a fixed-size collection of bounding boxes and scores for the presence of object class instances in those boxes, followed by NMS. The SSD architecture, shown in Figure 6, extends a backbone network by appending additional convolutional layers; these layers progressively decrease in size and enable predictions at multiple scales. Each added feature layer uses its own set of convolutional filters to produce a fixed set of detections. The authors associate a set, typically six, of default bounding boxes with each feature map cell for multiple feature maps at the network’s top. At each feature map cell, the network predicts the offsets relative to the default box shapes in the cell and the per-class scores that indicate the presence of a class instance in each of those boxes. These default boxes are similar to the anchor boxes introduced in Faster R-CNN [15,16], but they are applied to feature maps of different resolutions. This multi-reference and multi-resolution approach efficiently discretizes the space of possible output box shapes. For a input, SSD can achieve 59 FPS on an NVIDIA Titan X.

Figure 6.

SSD architecture: SSD adds several feature layers to the end of a backbone, which predict the offsets to default boxes of different scales and aspect ratios, along with their associated confidence scores.

The primary innovation of SSD lies in its ability to detect objects at multiple scales across different network layers, unlike earlier detectors that perform detection exclusively on their top layers. Several extensions and improvements have been proposed to address specific challenges of SSD, which will be detailed in the following methods.

- DSSD

In 2017, C.-Y. Fu et al. presented DSSD (Deconvolutional Single Shot Detector) [23], an extension of SSD [9], designed to introduce additional context. DSSD draws inspiration from encoder–decoder architectures, often referred to as hourglass models for their wide–narrow–wide structure, which have proven effective in tasks such as semantic segmentation [198] and human pose estimation [199]. Building on SSD, DSSD introduces several key modifications. First, it replaces the VGG-16 [119] backbone with ResNet-101 [120], in order to improve accuracy. Second, the prediction module incorporates an additional residual block in each prediction layer, inspired by insights from MS-CNN [148]. Third, to integrate more high-level context, DSSD adds deconvolution layers to the original SSD architecture, effectively forming an asymmetric hourglass structure; these extra deconvolution layers increase the resolution of feature map layers. To strengthen the features, the authors adopt the skip connections from the hourglass model [199]. Unlike a standard hourglass network, DSSD employs a shallow decoder stage to maintain inference efficiency and because decoder layers must be trained from scratch, since no pre-trained models that include a decoder stage were available. Finally, a deconvolution module, inspired by SharpMask [140], is added to integrate information from earlier feature maps and the deconvolution layers. DSSD demonstrates significant improvements over SSD, particularly in detecting small objects, but this comes at the cost of reduced speed.

- R-SSD

In 2017, J. Jeong et al. presented R-SSD (Rainbow Single Shot Detector) [24], an extension of SSD [9], designed to address two key limitations. First, SSD treats each layer in the feature pyramid independently, considering only one layer for each scale, which neglects the relationships between different scales and, thus, the same object can be detected in multiple scales. Second, SSD is suboptimal in detecting small objects, a challenge also targeted by DSSD [23]. R-SSD introduces a novel feature map concatenation scheme, called rainbow concatenation, to address these issues. This is achieved by simultaneously performing lower-layer pooling and upper-layer deconvolution to create feature maps with an explicit relationship between different layers; batch normalization [200,201] is applied before concatenation to normalize feature values and mitigate scale differences across layers. By using the concatenated features, detection is performed considering all the cases where the object’s size is smaller or larger than the specific scale and it is expected that an object of a specific size is detected only in the most appropriate layer in the feature pyramid. In addition, the low-layer features, with limited representation power, are enriched by higher-layer features, resulting in good representation power for small object detection as in DSSD [23] but without much computational overhead: by rainbow concatenation, each layer in the feature pyramid has the same number of feature maps, and thus weights can be shared for different classifier networks in different layers. Furthermore, the number of channels in the feature pyramid is increased to enhance small object detection.

- FSSD

In 2017, Z. Li et al. presented FSSD (Feature Fusion Single Shot Multibox Detector) [25], an extension of SSD [9], which incorporates a lightweight and efficient feature fusion module. The idea is to appropriately fuse the different-level features at once and generate a feature pyramid from the fused features. Specifically, starting from SSD300 with a VGG-16 [119] backbone, FSSD excludes feature maps whose spatial size is smaller than , as they contain limited information for fusion. The remaining source layers are first reduced in feature dimension using a convolutional layer and then resized to match the spatial size of the feature map from conv (), using max pooling for down-sampling and bilinear interpolation for up-sampling. After resizing, concatenation, preferred over element-wise summation, is performed: batch normalization [200] is then applied to normalize the feature values. The resulting fused feature map is used to generate a feature pyramid, used as in SSD [9] to generate object detection results.

FSSD demonstrates several advantages over SSD [9]. Firstly, FSSD reduces the probability of repeatedly detecting parts of an object or merging multiple objects into one. Secondly, FSSD performs better on small objects by retaining their location information and context (shown to be fundamental [173]), which SSD struggles with due to its reliance on shallow layers whose receptive field is too small to observe the larger context information. In terms of inference time, since FSSD adds additional layers to the SSD model, it consumes approximately extra time.

- RefineDet

In 2018, S. Zhang et al. proposed RefineDet [26], an extension of SSD [9] that combines the advantages of one-stage and two-stage object detectors. Specifically, RefineDet introduces three key components: the ARM (Anchor Refinement Module), the ODM (Object Detection Module), and the TCB (Transfer Connection Block), which links the ARM and the ODM. The ARM filters out easy negative anchor boxes, reducing the search space for the classifier and mitigating the foreground–background class imbalance. It also coarsely adjusts the locations and sizes of anchors to provide a better initialization for the subsequent regressor in the ODM. The ODM is composed of the outputs of TCBs followed by the prediction layers, and takes the refined anchors from the ARM to regress accurate object locations and predict multi-class labels. The TCBs convert features of different layers from the ARM into the form required by the ODM, so that the ODM can share features from the ARM. TCBs are applied only to feature maps associated with anchors. Moreover, TCBs integrate large-scale context by adding high-level features to the transferred features to improve detection accuracy. RefineDet employs a two-step cascaded regression strategy through the ARM and ODM, mimicking the refinement process of two-stage detectors while maintaining the efficiency of the one-stage approach. This design allows RefineDet to achieve significant improvements in localization accuracy, especially for small objects.

- EFGRNet

In 2019, J. Nie et al. presented EFGRNet [27], a framework designed to enhance SSD [9] by jointly addressing the challenges of multi-scale detection and class imbalance without compromising its characteristic speed. EFGRNet introduces two key components: a feature enrichment (FE) scheme and a cascaded refinement scheme. The FE scheme captures multi-scale contextual information using a Multi-Scale Contextual Feature (MSCF) module. Inspired by ResNeXt [202] and HRGAN [203], the MSCF module employs a split–transform–aggregate strategy with dilated convolutions [204]. The output of MSCF is passed to the cascaded refinement scheme. The cascaded refinement scheme comprises two cascaded modules. First, the objectness module (OM) enriches the SSD features, performs class-agnostic binary classification, and generates an initial box regression. Then, the Feature Guided Refinement Module (FGRM) takes the outputs of the OM, generates an objectness map, refines the features and uses them to predict the final multi-class classification and bounding-box regression.

- ASSD

In 2019, J. Yi et al. presented ASSD (Attentive Single Shot Multibox Detector) [28], an extension of SSD [9] that enhances detection accuracy by incorporating an attention unit, inspired by the self-attention mechanism introduced for sequence transduction problems in the transformer [11]. In sequence transduction, the self-attention mechanism draws global dependencies between the input and output sequences by an attention function, which maps a query and a set of key–value pairs to an output. The ASSD’s approach can be viewed as a similar query problem that estimates the relevant information from the input features in order to build global pixel-level feature correlations. In the attention unit, the feature map at a given scale s is linearly transformed into three different feature spaces: , , and . An attention map , computed as the softmax-normalized matrix multiplication of and , captures the long-range dependencies of features at all positions and highlights the relevant parts of the feature map. The attention unit is completed with a matrix multiplication between and the attention map , obtaining an update feature map as the weighted sums of individual features at each location; finally, the result is added back to the input feature map . ASSD places the attention units between each feature map and the prediction module, where the box regression and object classification are performed.

- Other works in the SSD series

The SSD series has inspired a variety of extensions and improvements. In 2017 [205] and in a subsequent version in 2019 [206], Z. Shen et al. presented DSOD (Deeply Supervised Object Detector), an object detection framework based on SSD that can be trained from scratch, by adopting key principles such as deep supervision [207]. In 2017, in addition, they introduced GRP-DSOD [208], which enhances DSOD by integrating Gated Recurrent Feature Pyramids. ESSD (Extended Single Shot Detector) [209] enhances SSD by extending the semantic information of its shallow layers through an extension module. RFBNet (Receptive Field Block Net) [210] incorporates a Receptive Field Block to generate more discriminative and robust features. DES (Detection with Enriched Semantics) [211] enriches the features within the SSD framework by incorporating a semantic segmentation branch and a global activation module, while Features-Fused SSD [212] and FFE-SSD (Feature Fusion and Enhancement for SSD) [213] both enhance SSD by introducing multi-level feature fusion to improve small object detection. Further contributions enhancing the SSD framework include RRC [214], PFPNet [131], LFIP-SSD [215], M2Det [216], and PSSD [217].

- RetinaNet

In 2017, T.-Y. Lin et al. presented RetinaNet [29], a milestone among one-stage detectors for its innovative approach to addressing class imbalance, a key challenge preventing one-stage detectors from achieving state-of-the-art accuracy. Two-stage detectors, such as R-CNN-based detectors, address class imbalance through a two-stage cascade and sampling heuristics such as a fixed foreground-to-background ratio (1:3) or OHEM (Online Hard Example Mining) [127]. In contrast, one-stage detectors must process a much larger set of candidate object locations, usually –, that densely cover spatial positions, scales, and aspect ratios. Traditional solutions like bootstrapping [110,218] or hard example mining [4,62,127] proved ineffective as easily classified background examples still dominate training and can degrade the model. The authors proposed a new loss function, called focal loss, a dynamically scaled cross-entropy loss. The scaling factor decays to zero as confidence in the correct class increases and automatically down-weights the contribution of easy examples during training, enabling the model to focus on hard examples. By integrating this loss function into the training process, the authors introduced their architecture, RetinaNet. RetinaNet is composed of a backbone network, a modified ResNet-FPN [18,120], and two task-specific fully convolutional sub-networks: one predicts the probability of object presence at each spatial position for each of the A anchors and C object classes while the second regresses offsets from each anchor box.

In 2019, RetinaMask [219] enhanced RetinaNet with various improvements, including the integration of instance mask prediction, a self-adjusting Smooth L1 loss, and the addition of extra hard examples during training. In the same year, Retina U-Net [220] extended RetinaNet by integrating with U-Net [221] and adopting segmentation supervision to enhance medical object detection. In 2021, RetinaNet-RS [175] proposed a rescaled family of RetinaNet, with improved speed–accuracy trade-offs.

Anchor-Free Detectors

SSD-based detectors, RetinaNet [29], and the previously introduced R-CNN-based detectors are collectively referred to as anchor-based detectors, as they rely on predefined boxes with fixed sizes and aspect ratios. In SSD [9], these are called default boxes while Faster R-CNN [15,16] refers to them as anchor boxes. These predefined boxes serve as initial references, and the detector predicts the offsets relative to these anchors to refine the object locations. Despite their effectiveness, anchor-based detectors exhibit several drawbacks.

- Positive/negative imbalance: since this class of detectors is trained to classify whether each anchor box sufficiently overlaps with a ground truth box, achieving a high recall rate requires dense placement of anchor boxes on the input image. As a result, only a tiny fraction of anchor boxes will overlap with the ground truth, creating a significant imbalance between positive and negative samples, which further slows down training.

- Hyperparameters: the use of anchor boxes introduces many hyperparameters, such as the number of anchors, and the sizes and aspect ratios. As shown in [29] or [15,16], the detection performance is highly sensitive to these design choices and therefore needs to be properly tuned. The tuning of these hyperparameters typically involves ad hoc heuristics and statistics computed from a training/validation set [29,36], and becomes particularly challenging when combined with multi-scale architectures. However, design choices optimized for a particular dataset may not always generalize well to other applications, thus limiting generality [222].

- Shape variation: because the scales and aspect ratios of anchor boxes are kept fixed, anchor-based detectors face difficulties in handling object candidates with large shape variations, particularly for small objects.

- IoU: at training phase, anchor-methods rely on the IoU to define positive/negative samples, which introduces additional computation and hyperparameters for an object detection system [179].

To overcome these limitations, anchor-free detectors have been proposed as an alternative approach. Unlike anchor-based methods, anchor-free detectors eliminate the need for predefined anchor boxes. Anchor-free detectors can be categorized into keypoint-based detectors and center-based approaches, presented below.

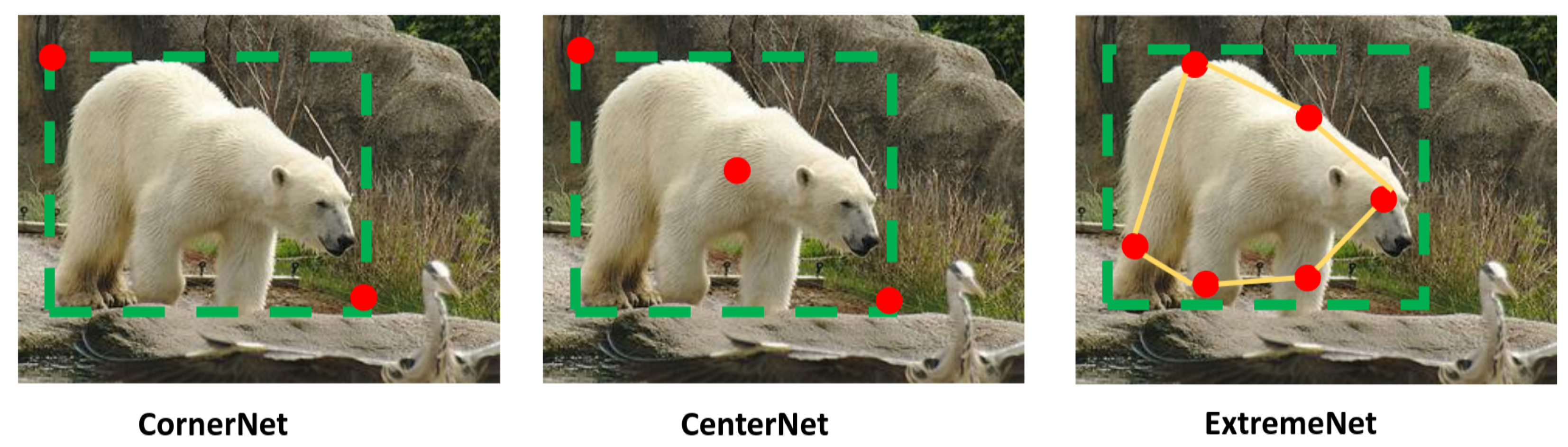

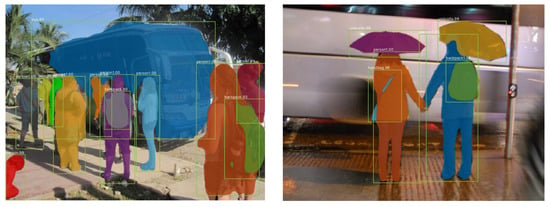

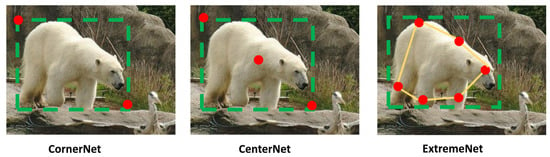

Keypoint-Based Detectors

Anchor-free keypoint-based detectors represent objects using multiple predefined or self-learned keypoints, such as the center, corners, or extreme points, which are then grouped to predict bounding boxes, as shown in Figure 7. A representative example of this approach is CornerNet [30], which detects object bounding boxes by identifying their top-left and bottom-right corners.

Figure 7.

The three images show anchor-free keypoint-based methods which use different combinations of keypoints (red circles) and then group them for bounding box prediction. A pair of corners, a triplet of keypoints, and extreme points on the object are respectively used in CornerNet [30], CenterNet [31], and ExtremeNet [32].

- CornerNet

In 2018, H. Law and J. Deng presented CornerNet [30], an anchor-free detector that represents objects using pairs of keypoints: the top-left and bottom-right corners of the bounding box (see Figure 7). CornerNet introduces corner pooling, a novel pooling layer that helps a convolutional network to better localize the corners of bounding boxes. Corner pooling takes in two feature maps: for the top-left corner, at each pixel location it max-pools all feature vectors to the right from the first feature map, max-pools all feature vectors directly below from the second feature map, and then adds the two pooled results together. A similar procedure is performed for the bottom-right corner. This approach addresses cases where a corner of a bounding box is outside the object and cannot be localized based on local evidence. CornerNet adopts a one-stage paradigm: a modified version of an hourglass network [199] is used as the backbone, followed by two prediction modules, one for the top-left corners, and the other for the bottom-right corners. Each module, whose first part is a modified version of the residual block [120], has its own corner pooling module to pool features from the hourglass network before predicting the heatmaps, embeddings, and offsets. The heatmaps represent the locations of corners of different object categories, while the embedding vectors, inspired by A. Newell et al. [223], serve to group a pair of corners that belong to the same object. For training, CornerNet uses a variant of focal loss [29] that reduces the penalty given to negative locations within a radius determined by the size of the object, using an unnormalized 2D Gaussian.

One year later, CornerNet-Lite [224] improved the inference efficiency of CornerNet with two variants: CornerNet-Saccade and CornerNet-Squeeze. CornerNet-Saccade reduces the number of pixels processed via an attention mechanism inspired by saccades in human vision [225,226], while CornerNet-Squeeze minimizes computation per pixel by introducing a compact hourglass backbone, inspired by SqueezeNet [227] and MobileNets [228].

- CenterNet

In 2019, K. Duan et al. presented CenterNet [31]. While CornerNet [30] represents objects using pairs of corners, it often generates incorrect bounding boxes due to the lack of information about the inner of the cropped regions. CenterNet resolves this limitation by introducing a third keypoint at the geometric center of the bounding box, forming a triplet, instead of a pair, to represent objects (see Figure 7). This extra keypoint allows the model to capture the visual patterns within the proposed region and to verify the correctness of each bounding box. CenterNet, therefore, extends CornerNet [30] by embedding a heatmap for the center keypoints and predicting their offsets. For each bounding box, a central region is defined using a scale-aware schema to adaptively fit the size of the bounding box. A bounding box is preserved only if a center keypoint is detected in its central region; the class label of the center keypoint must match the class label of the bounding box. Moreover, in order to improve the detection of center keypoints and corners, the authors proposed two pooling strategies, respectively: center pooling, which takes the maximum values in both the horizontal and vertical directions, and cascade corner pooling, which takes the maximum values in both the boundary and internal directions of objects.

- ExtremeNet

In 2019, X. Zhou et al. presented ExtremeNet [32], a bottom-up object detection framework that detects objects by identifying five keypoints: four extreme points and one center (see Figure 7). It is related to previous works, including DPM [84], key-point estimation, e.g., human joint estimation [19,223,229,230,231], and the extreme clicking annotation strategy by D.P. Papadopoulos et al. [232]. Specifically, using an hourglass network [199], ExtremeNet predicts heatmaps (one per class) and offset maps, since, as in CornerNet [30], offset prediction is category-agnostic, but extreme-point specific. After predicting the five heatmaps per class, extreme points are extracted as peaks, i.e., pixels that are local maxima, and grouped in a purely geometric manner using center grouping: given four extreme points, their geometric center is computed and, if the center is predicted with a high response in the center map, the extreme points are considered as a valid detection. Furthermore, the authors introduced a form of Soft-NMS [233] to tackle the problem of ghost boxes, i.e., false positive detections that can occur with three equally spaced collinear objects of the same size and edge aggregation to address the case of multiple points being the extreme point on one edge, leading the model to predict a segment of low-confidence responses instead of a single strong peak.

- Other keypoint-based works

In addition to the methods discussed above, several other anchor-free keypoint-based detectors have been proposed. In 2015, D. Yoo et al. introduced AttentionNet [234], which iteratively refines bounding boxes by predicting directional shifts for the top-left and bottom-right keypoints of an object. PLN (Point Linking Network) [235] represents objects using corners, center points, and their links, KP-xNet [236] detects corners for objects of different sizes and aspect ratios using Matrix Networks (xNets), while CentripetalNet [237] improves corner matching by introducing centripetal shift, a technique to pair corner keypoints from the same instance.

Center-Based Detectors

Center-based detectors represent objects using the center (the center point or part) and usually regress bounding box dimensions and other properties directly from this point. One of the first anchor-free center-based detection frameworks is DenseBox [238], introduced in 2015 with a primary focus on face detection. DenseBox defines the output ground truth as a five-channel map; the positive labeled region in the first channel is a filled circle, centered on a face bounding box and with a radius proportional to the bounding box size. For localization, it predicts the distance from each positive pixel to the bounding box boundaries. After DenseBox [238], several methods have been proposed, among which we can identify the following milestones.

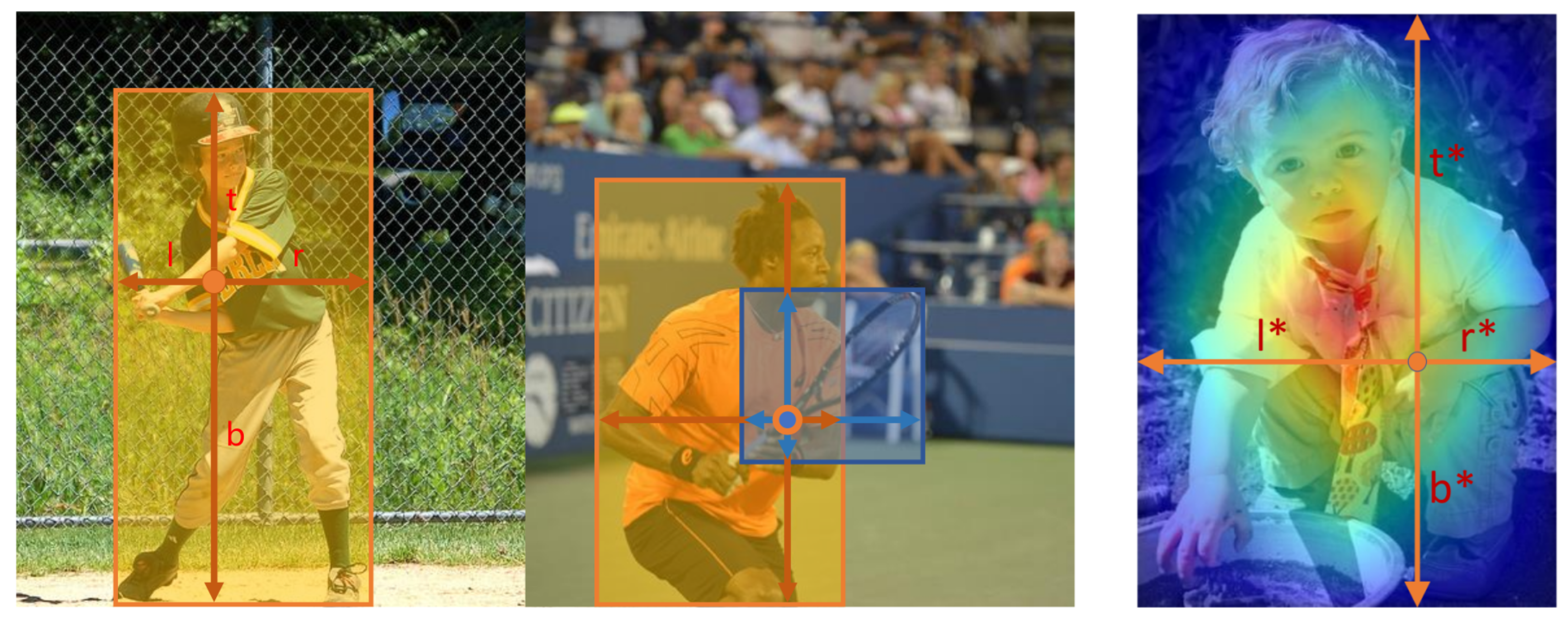

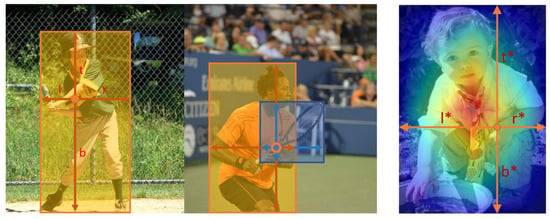

- FCOS and FoveaBox

In 2019, Z. Tian et al. presented FCOS (Fully Convolutional One-Stage) [33], a fully convolutional anchor-free detector. FCOS tackles object detection in a per-pixel prediction fashion, analogous to FCN for semantic segmentation [118]: unlike anchor-based detectors, FCOS directly regresses bounding boxes at pixel locations, treating them directly as training samples. Specifically, the location is considered a positive sample if it falls into any ground truth box, and a negative sample otherwise. For the classification task, the associated class label corresponds to the class label of the bounding box for positive samples, and to the background class for negatives. For the regression task, a four-dimensional real vector, encoding the distances from the location to the four sides of the bounding box, defines the regression targets. FCOS adopts multi-level prediction with FPN [18]: the heads are shared between different feature levels. Additionally, in order to suppress low-quality detected bounding boxes, produced by locations far away from the center of an object, FCOS introduces a center-ness branch, a single-layer network parallel to the classification branch. This branch predicts the center-ness of the location (see Figure 8), defined as the normalized distance from the location to the center of the object that the location is responsible for.

Figure 8.

On the left: FCOS [33] works by predicting a 4D vector encoding the location of a bounding box at each foreground pixel. The second plot on the left illustrates the ambiguity that arises when a location resides in multiple bounding boxes. On the right: the center-ness of FCOS [33] is shown, where red, blue, and other colors denote , and the values between them, respectively. Center-ness decays from 1 to 0 as the location deviates from the center of the object.

In 2020, T. Kong et al. proposed FoveaBox [34], a framework inspired by the fovea in human eyes [239] and similar to FCOS [33] but with notable differences: it eliminates the center-ness branch, employs a shrunk positive area for defining training samples, modifies the mapping of ground truth boxes to FPN [18] levels by presetting a size acceptance range for each level, and normalizes and regularizes the distances from the boundaries for the regression targets. Moreover, an enhanced version of FoveaBox employs feature alignment using deformable convolutions [128], refining the classification branch based on the predicted box offsets.

- FSAF

In 2019, C. Zhu et al. proposed FSAF (Feature Selective Anchor-Free) [35], an anchor-free module designed to select the optimal feature level for each object instance, removing the constraints imposed by anchor boxes. Specifically, the FSAF module can be plugged into one-stage detectors with a feature pyramid structure, such as RetinaNet [29]. For each pyramid level, an anchor-free branch is attached and comprises two additional convolutional layers, for the classification and regression, respectively. During training, a single object instance can be dynamically assigned to an arbitrary feature level within the feature pyramid. The authors defined as the projection of the object bounding box b onto the feature pyramid , and and as the effective and ignoring boxes, respectively, both proportional regions of . The ground truth for classification output consists of one map per class; the positive region corresponds to the effective box , while the ignoring region, i.e., where gradients are not propagated back to the network, is defined as . The remaining region of the ground truth map is the negative area. The ground truth for the regression output are four offset maps, agnostic to classes: for each pixel inside the effective box, the projected box is represented as a four-dimensional vector, whose components are the distances between the current pixel location and the boundaries of . During training, the FSAF module performs online feature selection to dynamically identify the optimal feature level based on the instance content, selecting the level that minimizes the focal loss [29] for classification and the IoU loss [240] for regression.

- Other center-based works

The one-stage center-based paradigm includes several additional detectors beyond those discussed above.

Some are directly based on FCOS [33], expanding its framework and introducing novel features. In 2020, Z. Tian et al. presented an improved version of FCOS [241], further refining the framework, while H. Qiu et al. presented BorderDet [242], which extends FCOS by introducing BorderAlign, a feature extractor that captures border features to refine the original center-based representation. In the same year, CenterMask [243] extended FCOS by adding a spatial attention-guided mask (SAG-Mask) branch for instance segmentation. [244] proposed an NMS-free version of FCOS by incorporating a PSS head for automatic selection of the single positive sample for each instance. FCOS has also inspired lightweight detectors for mobile devices, such as NanoDet [245] and PP-PicoDet [246].

Other approaches have explored different directions. UnitBox [240], presented in 2016, introduced a novel IoU loss function for bounding box prediction that regresses the four bounds of a predicted box as a whole unit. In 2018, J. Wang et al. incorporated UnitBox into the anchor-free branch of SFace [247], a face detection framework adopting a hybrid architecture (anchor-based and anchor-free) to tackle large-scale variations. CSP (Center and Scale Prediction) [248] simplified pedestrian detection into a straightforward task of center and scale prediction using a single Fully Convolutional Network (FCN). CenterNet [194], proposed in 2019, used keypoint estimation to identify the center of the object and regressed all other properties from this point. Two years later, CenterNet2 [193] was introduced as a probabilistic two-stage extension. SAPD (Soft Anchor-Point Detector) [249] adopted a training strategy with soft-weighted points and soft-selected pyramid levels. VarifocalNet [250], based on FCOS [33] and ATSS (Adaptive Training Sample Selection) [251], a training technique that automatically selects positive and negative samples according to the statistical characteristics of objects, adopted an IoU-Aware Classification Score (IACS) as a joint representation of object presence confidence and localization accuracy, and introduced a Varifocal Loss to train the detector to predict the IACS. In 2022, M. Zand et al. presented ObjectBox [252], which only used two corners of the central cell location for bounding box regression and introduced SDIoU loss to deal with boxes with different sizes. Another notable contribution is RTMDet (Real-Time Models for object Detection) [253], introduced in 2022.

YOLO Series

The YOLO (You Only Look Once) series is probably the most prominent and widely recognized family of object detectors in use today. Renowned for achieving an effective balance between inference speed and accuracy, the YOLO series exemplifies the greater efficiency of one-stage architectures compared to two-stage detectors. It includes both anchor-based and anchor-free approaches, reflecting the diverse methodologies encompassed within this category. This series began in 2016 with the work of J. Redmon et al., which introduced YOLOv1 [8], and has since evolved into a multitude of versions and sub-versions: as of this writing, the main line of development has reached YOLOv11 [254]. Versions YOLOv1 [8], YOLOv6 [39], YOLOv8 [255], YOLOv9 [41], YOLOv10 [256], and YOLOv11 [254] adopt an anchor-free, center-based approach, while YOLOv2 [36], YOLOv3 [37], YOLOv4 [38], YOLOv5 [257], and YOLOv7 [40] follow an anchor-based paradigm. In the following, we will focus on the core versions within the main line that have shaped its progression.

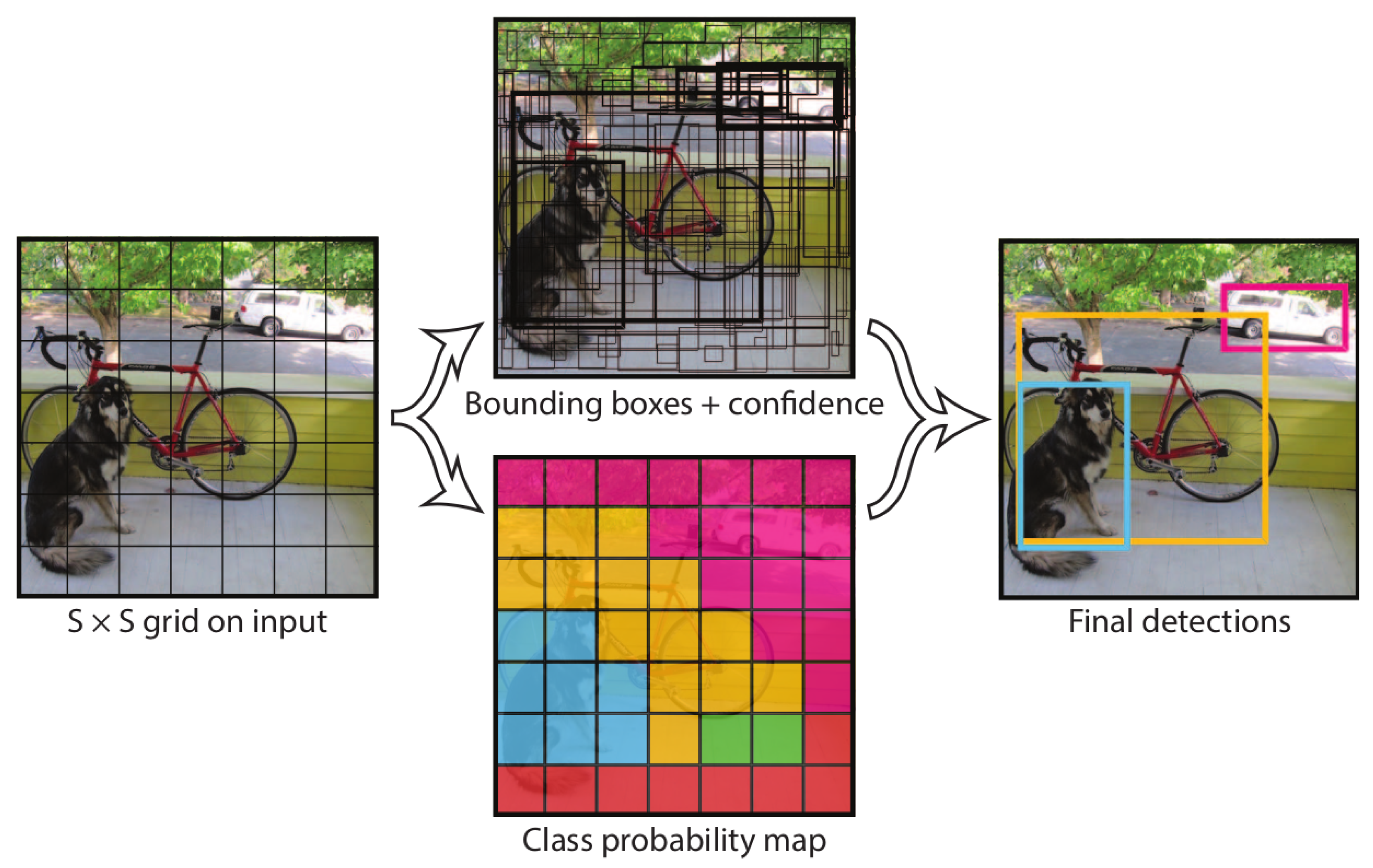

- YOLOv1

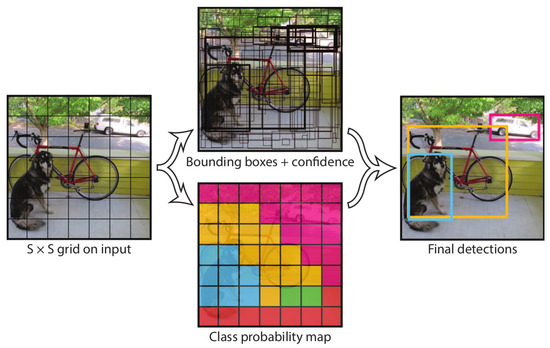

In 2016, J. Redmon et al. proposed YOLOv1 [8], an anchor-free detection framework that reframes detection as a single regression problem, directly predicting bounding box coordinates and class probabilities from the entire input image. The network architecture, inspired by GoogLeNet [258], includes 24 convolutional layers followed by 2 fully connected layers. Inspired by MultiGrasp [259], YOLOv1 divides the input image into an grid, as shown in Figure 9; if the center of an object falls into a grid cell, that grid cell is responsible for detecting that object. Each grid cell predicts B bounding boxes and C class probabilities. A bounding box prediction consists of five values: the coordinates of the box center, relative to the grid cell, width w, height h, both relative to the image size, and a confidence score representing the IoU with ground truth. In conclusion, the output is an tensor, with and by default, while the input resolution is set to . At test time, the conditional class probabilities and the individual box confidence predictions are multiplied, obtaining class-specific confidence scores for each box which encode both the probability of that class appearing in the box and how well the predicted box fits the object.

Figure 9.

YOLOv1 [8] detection pipeline: it divides the image into an grid and for each grid cell predicts B bounding boxes, their associated confidence scores, and C class probabilities. These outputs are encoded as an tensor.

This unified architecture makes YOLOv1 extremely fast, reaching 45 FPS on an NVIDIA Titan X and thus enabling real-time detection. However, YOLOv1 has some important limitations. It imposes strong spatial constraints on bounding box predictions since each grid cell only predicts two boxes (by default) and can only have one class, limiting the number of nearby objects that the model can predict. Moreover, it struggles to generalize to objects in new or unusual aspect ratios or configurations. Finally, YOLOv1 faces difficulties in localizing objects correctly, mainly due to its loss function, which treats errors the same in small and large bounding boxes.

- YOLOv2

In 2017, J. Redmon and A. Farhadi presented YOLOv2 [36], which builds on YOLOv1 [8] with several improvements, focusing mainly on improving recall and localization. YOLOv2 introduced anchor boxes, with dimensions optimized using k-means clustering. The class prediction mechanism is decoupled from the spatial location, enabling the model to predict class and objectness for each anchor box; the objectness still predicts the IoU of the ground truth and the proposed box and the class predictions predict the conditional probability of that class given that there is an object. A passthrough layer is added to improve the localization of small objects, by concatenating high and low resolution features. The model also adopts multi-scale training, to help the network to generalize across a variety of input dimensions. YOLOv2 adopts a custom backbone network, called Darknet-19, inspired by VGG models [119] and NIN (Network in Network) [260]. YOLOv2 is implemented using the Darknet neural network framework [261], written in C and NVIDIA CUDA [262]. Furthermore, the authors introduced YOLO9000, a modified version of YOLOv2 that integrates WordTree, a hierarchical model of visual concepts built using WordNet [263], to jointly train on classification and detection data.

- YOLOv3

In 2018, J. Redmon and A. Farhadi presented YOLOv3 [37], introducing several refinements over YOLOv2 [36]. YOLOv3 predicts an objectness score for each bounding box using logistic regression: this score should be 1 if the bounding box prior overlaps a ground truth object by more than any other prior. If the bounding box prior is not the best but does overlap a ground truth object by more than some threshold (by default ), the prediction is ignored as in Faster R-CNN [14]. Class predictions are performed using multi-label classification with independent logistic classifiers instead of softmax; this formulation helps in more complex domains, with many overlapping labels. Moreover, YOLOv3 adopts multi-scale prediction similar to FPN [18] and a new backbone network, called Darknet-53, much deeper than Darknet-19 [36]. YOLOv3, like YOLOv2, is implemented using the Darknet neural network framework [261].

In 2019, J. Choi et al. presented Gaussian YOLOv3 [264], which models the bounding box of YOLOv3, with a Gaussian parameter, and redesigns the loss function.

- YOLOv4

In 2020, A. Bochkovskiy et al. presented YOLOv4 [38], incorporating several improvements over YOLOv3 [37]. The backbone CSPDarknet53 [265] extends Darknet53 [37] with cross-stage partial connections (CSPNet) [265]. An SPP block [13] and a modified version of SAM [266] are attached to the backbone as additional modules, while a modified version of PANet [165] replaces the FPN [18] used in YOLOv3 [37]. CutMix [267], along with two new techniques introduced by the authors, Mosaic and Self-Adversarial Training (SAT), is used for data augmentation. YOLOv4 adopts several additional optimizations, categorized by the authors as Bag of Freebies (BoF), which improves training efficiency without increasing inference cost, and Bag of Specials (BoS), which enhances accuracy with minimal additional inference cost. Among the additional optimizations, BoF includes the elimination of grid sensitivity, using multiple anchors for a single ground truth, cosine annealing scheduler [268], random training shapes, genetic algorithms for selecting the optimal hyperparameters, DropBlock [269] regularization, Cross mini-Batch Normalization (CmBN), inspired by CBN [270], CIoU loss [271], and class label smoothing [272]. BoS includes DIoU-NMS [271], Mish [273] activation function, and MiWRC [274].

One year later, the same authors presented Scaled-YOLOv4 [275], whose main contribution is the introduction of new model-scaling techniques.

- YOLOv5

In 2020, G. Jocher, founder and CEO of Ultralytics, released YOLOv5, available only via a GitHub repository [257] without a paper published by the original authors; at the time of writing, the current version is v7.0. YOLOv5 adopts a modified CSPDarknet53 [265] as a backbone, a modified CSP-PAN [165,265], and SPPF (Spatial Pyramid Pooling Fast), an optimized version of SPP [13]. YOLOv5 employs various data augmentation techniques: Mosaic [38], Copy-Paste [276], random affine transformations, MixUp [277], HSV augmentation, random horizontal flip, and other augmentations from the Albumentations [278] library. The AutoAnchor strategy optimizes the prior anchor boxes to match the statistical characteristics of the ground truth boxes: it first applies a k-means function to dataset labels, and uses the k-means centroids as initial conditions for a Genetic Evolution (GE) algorithm, using CIoU loss [271] combined with BPR (Best Possible Recall) as a fitness function. Additionally, the formula for predicting the box coordinates is updated in order to reduce the grid sensitivity and prevent the model from predicting unbounded box dimensions. YOLOv5 marked the transition of the series from Darknet [261] to the PyTorch framework [279].

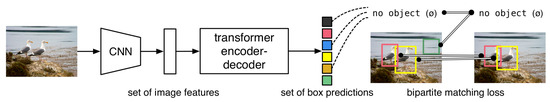

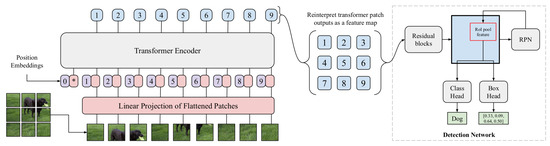

- YOLOv6