1. Introduction

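In this paper, we introduce a fractional Adams method with a modified graded mesh for solving the following nonlinear fractional differential equation with $\alpha > 0$:

$$
{}^{C}_{0}D^{\alpha}_{t}\, y(t) = f(t, y(t)), \quad 0 \le t \le T, \qquad y^{(k)}(0) = y_0^{(k)}, \quad k = 0, 1, \dots, \lceil \alpha \rceil - 1,
$$

where $y_0^{(k)}$, $k = 0, 1, \dots, \lceil \alpha \rceil - 1$, are arbitrary real numbers and ${}^{C}_{0}D^{\alpha}_{t}$ represents the Caputo fractional derivative, defined by

$$
{}^{C}_{0}D^{\alpha}_{t}\, y(t) = \frac{1}{\Gamma(\lceil \alpha \rceil - \alpha)} \int_0^t (t-\tau)^{\lceil \alpha \rceil - \alpha - 1}\, y^{(\lceil \alpha \rceil)}(\tau)\, d\tau,
$$

with $\Gamma$ denoting the Gamma function and $\lceil \alpha \rceil$ representing the smallest integer greater than or equal to $\alpha$. The function $f(t, y)$ satisfies the Lipschitz condition with respect to the second variable, i.e.,

$$
|f(t, y_1) - f(t, y_2)| \le L\, |y_1 - y_2|,
$$

where $L$ is a positive constant.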
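The following standard computation illustrates the definition. Let $0<\alpha<1$, so that $\lceil\alpha\rceil = 1$. Write ${}^{C}_{0}D^{\alpha}_{t}$ for the Caputo derivative and take $y(t) = t^{\beta}$ with $\beta > 0$. The substitution $\tau = ts$ turns the integral into a Beta function $B(\cdot,\cdot)$:

$$
{}^{C}_{0}D^{\alpha}_{t}\, t^{\beta}
= \frac{\beta}{\Gamma(1-\alpha)} \int_0^t (t-\tau)^{-\alpha}\, \tau^{\beta-1}\, d\tau
= \frac{\beta\, t^{\beta-\alpha}}{\Gamma(1-\alpha)}\, B(1-\alpha, \beta)
= \frac{\Gamma(\beta+1)}{\Gamma(\beta+1-\alpha)}\, t^{\beta-\alpha}.
$$

The last step uses $B(1-\alpha,\beta) = \Gamma(1-\alpha)\Gamma(\beta)/\Gamma(\beta+1-\alpha)$ and $\beta\Gamma(\beta) = \Gamma(\beta+1)$. Closed forms of this kind are convenient for building test problems with known exact solutions.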

We shall focus only on the case

, as

does not appear to be of significant practical interest ([1], lines 4–5 on page 46). The error estimates for the case

can be derived in a similar manner.

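It is well known that Equation (1) is equivalent to the following integral representation:

$$
y(t) = \sum_{k=0}^{\lceil \alpha \rceil - 1} y_0^{(k)} \frac{t^{k}}{k!} + \frac{1}{\Gamma(\alpha)} \int_0^t (t-\tau)^{\alpha-1} f(\tau, y(\tau))\, d\tau.
$$

The existence and uniqueness of the solution to Equation (1) have been thoroughly discussed in [1].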

The numerical solution of fractional differential equations (FDEs) has attracted considerable research interest in recent decades because of their applications in fields such as physics, biology, and engineering [2, 3]. Exact solutions of FDEs are often difficult to obtain, so efficient numerical methods are needed.

In addition to Adams methods, many other numerical techniques for FDEs have been explored. One approach approximates the fractional derivative directly [4, 5, 6]. Another transforms the FDE into an equivalent integral equation, which is then solved by quadrature-based schemes [7, 8, 9, 10, 11, 12, 13]. Further strategies, such as variational iteration [14], Adomian decomposition [15], finite-element [16], and spectral methods [17], have been developed for specific classes of FDEs.

The Adams methods, particularly the predictor–corrector variants, have received significant attention for their efficiency in solving FDEs. For instance, Deng [18] enhanced the Adams-type predictor–corrector method by incorporating the short memory principle of fractional calculus, thereby reducing computational complexity. Nguyen and Jang [19] introduced a new prediction stage with the same order of accuracy as the correction stage. Zhou et al. [20] developed a fast second-order Adams method on graded meshes for nonlinear time-fractional equations such as the Benjamin–Bona–Mahony–Burgers equation. Moreover, Lee et al. [21] proposed an efficient predictor–corrector method based on the Caputo–Fabrizio derivative, and Mokhtarnezhadazar [22] proposed a high-order method on non-uniform meshes. These advances reduce computational effort while maintaining high accuracy.

Among the many numerical methods available for FDEs, the work of Diethelm et al. [1, 23, 24, 25] laid the theoretical foundation of the fractional Adams method. They proposed an Adams-type predictor–corrector scheme on uniform meshes. They also provided rigorous error estimates under the assumption that ${}^{C}_{0}D^{\alpha}_{t} y \in C^2[0,T]$. The method achieves convergence rates of $O(N^{-2})$ for $\alpha \ge 1$ and $O(N^{-1-\alpha})$ for $0 < \alpha < 1$, where $N$ is the number of subintervals of the uniform partition of $[0,T]$. These results have since inspired various extensions and refinements. Liu et al. [26] introduced graded meshes to better handle the singular behavior of solutions near $t = 0$. Their refined error analysis showed that graded meshes significantly improve accuracy for FDEs with initial singularities, making them a practical choice for such problems. Furthermore, fractional calculus offers more modeling flexibility than classical calculus, and several new definitions of fractional derivatives have recently been developed (see [27]). These developments provide new perspectives and tools for the numerical solution of fractional differential equations.

In this paper, we propose a modified Adams-type predictor–corrector method on a modified graded mesh, a type of mesh first introduced in [28]. The modified graded mesh is non-uniform near the initial point, where it captures the weak singularity, and uniform away from it, which effectively reduces numerical errors. This approach preserves the advantages of traditional graded meshes while further optimizing the grid distribution, thereby improving the accuracy of the numerical solutions.

Let $0 = t_0 < t_1 < \cdots < t_N = T$ be a partition of $[0,T]$; we consider the following modified graded mesh [28]. Define

as a positive monitor function:

where

is a constant and

. The mesh is constructed such that

is evenly distributed, i.e.,

Define

and choose a suitable

such that

for some

. The modified graded mesh

is defined as follows:

where

. The grid points

constitute a non-uniform grid, whereas the grid points

form a uniform grid [28].
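The equidistribution idea behind this construction can be sketched as follows. The monitor function used below is a hypothetical choice made only for illustration; it is not the monitor function of [28]. It is $M(t) = \max\{1, \kappa t^{\beta-1}\}$ with $\kappa > 0$ and $0 < \beta < 1$, and the helper name `equidistributed_mesh` is likewise hypothetical. Because $M$ is singular near $t = 0$ and constant elsewhere, equidistributing $\int_0^t M(s)\,ds$ produces nodes that behave like $j^{1/\beta}$ near the origin and have a constant step size afterwards. This mirrors the non-uniform/uniform split described above.

```python
def equidistributed_mesh(T: float, N: int, kappa: float, beta: float) -> list[float]:
    """Mesh 0 = t_0 < ... < t_N = T equidistributing the integral of a
    HYPOTHETICAL monitor function M(t) = max(1, kappa * t**(beta - 1)),
    kappa > 0, 0 < beta < 1 (illustration only, not the monitor function of [28])."""
    # M(t) = kappa * t**(beta - 1) for t <= t_star and M(t) = 1 for t > t_star.
    t_star = min(kappa ** (1.0 / (1.0 - beta)), T)
    phi_star = kappa * t_star**beta / beta   # integral of M over [0, t_star]
    total = phi_star + (T - t_star)          # integral of M over [0, T]
    mesh = []
    for j in range(N + 1):
        s = total * j / N                    # every cell receives the share total / N
        if s <= phi_star:
            # invert kappa * t**beta / beta = s: graded part, t_j ~ j**(1/beta)
            mesh.append((beta * s / kappa) ** (1.0 / beta))
        else:
            # M = 1 here, so equal shares give equal step sizes: uniform part
            mesh.append(t_star + (s - phi_star))
    mesh[-1] = T                             # remove round-off at the right endpoint
    return mesh
```

For example, `equidistributed_mesh(1.0, 40, 0.5, 0.5)` places 16 graded cells on $[0, 0.25]$ and 24 uniform cells of width $0.03125$ on $[0.25, 1]$.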

Let

for

, with

being the approximation of

. Suppose we know the approximate values

,

from other methods. For

, we define the following predictor–corrector Adams method to solve Equation (3) for

:

The predictor term

in (5) is derived by approximating the integral

,

with the following approximation,

,

where

is a piecewise constant function defined on

as,

,

The corrector term

in (6) is derived by approximating the same integral,

,

with the following approximation,

,

where

is a piecewise linear function defined on

as,

,
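The piecewise constant and piecewise linear approximations above are integrated exactly against the kernel $(t_n-\tau)^{\alpha-1}$, so the resulting weights have closed forms on any mesh. The sketch below computes the standard product-rectangle (predictor) and product-trapezoidal (corrector) weights on an arbitrary partition. It omits the factor $1/\Gamma(\alpha)$, and the function name `product_weights` is hypothetical. It is meant as an illustration and may differ in normalization from the weights in (5)–(8) and Appendix A.

```python
def product_weights(t: list[float], n: int, alpha: float) -> tuple[list[float], list[float]]:
    """Weights for int_0^{t_n} (t_n - tau)**(alpha - 1) g(tau) dtau on the mesh t.

    b[j], j = 0..n-1: g replaced by the constant g(t_j) on [t_j, t_{j+1}]
    a[j], j = 0..n  : g replaced by its piecewise linear interpolant
    The factor 1/Gamma(alpha) is not included.
    """
    b = [0.0] * n
    a = [0.0] * (n + 1)
    for j in range(n):
        h = t[j + 1] - t[j]
        A = t[n] - t[j]        # distance from t_n to the left end of the cell
        B = t[n] - t[j + 1]    # distance from t_n to the right end (zero when j = n - 1)
        # I0 = integral of the kernel over the cell
        I0 = (A**alpha - B**alpha) / alpha
        # I1 = integral of kernel * (tau - t_j) over the cell
        I1 = A * I0 - (A**(alpha + 1) - B**(alpha + 1)) / (alpha + 1)
        b[j] = I0
        a[j] += I0 - I1 / h    # hat function of t_j restricted to this cell
        a[j + 1] += I1 / h     # hat function of t_{j+1} restricted to this cell
    return b, a


if __name__ == "__main__":
    mesh = [(k / 8) ** 2 for k in range(9)]   # graded mesh with T = 1, r = 2
    b, a = product_weights(mesh, 8, 0.5)
    # Both weight sets integrate g = 1 exactly: t_n**alpha / alpha = 2, up to round-off
    print(sum(b), sum(a))
```

On a uniform mesh these weights reduce to the familiar closed forms of the fractional Adams method on uniform meshes. For instance, the last corrector weight equals $h^{\alpha}/(\alpha(\alpha+1))$.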

Here, the weights

in (5) for

are given in Appendix A.

The weights

in (6) for

, satisfy

Assumption 1 ([26]). Let and satisfy for . There exists a constant such that:

Remark 1. Assumption 1 characterizes the local behavior of near $t = 0$ and indicates that exhibits a singularity at this point. It is evident that . A simple example is , where .

Our main results of this work are summarized in the following two theorems.

Theorem 1. Suppose and satisfies Assumption 1. Assume that and are the solutions of Equations (3) and (6), respectively. Then, the following error estimates hold, with .

- 1. If , then we have
- 2. If , then we have
- 3. If , then we have

Theorem 2. Suppose and satisfies Assumption 1. Assume that and are the solutions of Equations (3) and (6), respectively. Then, the following error estimates hold, with .

- 1. If , then we have
- 2. If , then we have
- 3. If , then we have

The structure of this paper is as follows. In Section 1, we introduce the predictor–corrector method on modified graded meshes for solving Equation (1). Section 2 presents several lemmas for the case

, and Section 3 discusses lemmas for the case

. In Section 4, we prove the main theorems. Section 5 presents numerical examples demonstrating that the numerical results are consistent with the theoretical predictions.

Throughout the paper, the symbol C denotes a generic constant, which may vary across different occurrences but remains independent of the mesh size.

2. Some Lemmas for

Denote

where P, K,

are defined in (4). Then,

in (4) can be rewritten as follows:

where

,

,

and J is defined in (4).

Lemma 1. There exists a positive constant , such that

where J is defined in (4).

Proof. Choose

such that, since

,

Note that

which implies that when

, we have

Choose

and we see that when

,

Further, we have

. In fact,

implies that

. Hence, with

,

Thus, for

, we obtain

The proof of Lemma 1 is complete. □

In the rest of the paper, we assume .

Lemma 2. Suppose and satisfies Assumption 1. Let .

- 1. If , then we have
- 2. If , then we have
- 3. If , then we have

In the above, denotes a piecewise linear approximation of defined on each interval for ,

Proof. For

, we decompose the integral into three parts,

Using Assumption 1, we have

If

, since

, we have

If

, since

and

, we obtain

Thus, there exists a constant

such that

When

, by Lemma 1, we obtain

When

, we get

For

, we have, with

and

, by Assumption 1,

There exist

, such that

For

, when

, we have

Case 1. . There holds

For

, there exists

, such that

For

, we obtain

For

, by Lemma 1, we get

If

, we have

If

, we have

If

, we have

Hence, we obtain, with

,

For

, by (13), (14), and Lemma 1, we arrive at

Case 2. . For

, there exists

, such that

and

Thus, by (15) and (16), we get

Case 3. . For

, there exists

, such that

and

Thus, by (17) and (18), we arrive at

Next, we consider with .

Case 1. . For

, we have

By (19), Lemma 1, and noting that

we obtain

Case 2. . We have

We first consider

. For

, we have

and

By (21)–(23), we arrive at

Now we turn to

. For

, we have

and, by Lemma 1,

and

Case 3. . For

, we have

and

By (27)–(29), we arrive at

For

, there exist

,

, such that

Using Assumption 1, we have, with

,

When

, by (24) and Lemma 1, we obtain

When

, by (17), we arrive at

Thus, for , noting and , we have the following cases.

If

, we have

If

, we obtain

The remaining cases can be considered similarly. □

The following Lemmas 3 and 4 hold for .

Lemma 3. Let and . The weights and defined in (7) and (8), respectively, satisfy the following properties:

- 1. For all , we have
- 2. For all , we have

Proof. For

, it holds that

For

, it follows that

Hence, we show .

Note that, with

,

Since the is positive over the integration interval, it follows that . □

Lemma 4. Let . For , we have

where is defined in (6).

Proof. By (7), we consider two cases:

When

, we have

When

, we have

The proof of Lemma 4 is complete. □

Lemma 5. Suppose and satisfies Assumption 1. Let .

- 1. If , then we have
- 2. If , then we have
- 3. If , then we have

Here, denotes a piecewise constant approximation of defined on for ,

Proof. The proof is similar to that of Lemma 2. Let

For

, by Lemma 3 and Assumption 1, we obtain

For

, by Lemma 4 and (10), we have

For

, by Lemma 4 and (11), we have

For

, with

, where

, we have

For , we consider the following three cases:

Case 1. . We have

By Lemma 4, (12), (14), and

, we have

By Lemma 4, (13), (14), and Lemma 1, we have

Case 2. . By Lemma 4, (15), (16), and

, we have

Case 3. . By Lemma 4, (17) and (18), we have

Case 1. . By Lemma 4, (19), and for

(with

), and noting that

we have

Thus, with

and

, by (20), we get

Case 2. . We have

For

(with

), we have

Thus, by Lemma 4, (21), (31), and (23), with

and

, we get

For

(with

), by Lemma 1, we have

Thus, by Lemma 4, (24), (32), and (26), with

and

, we get

Case 3. . For

(with

), we have

By Lemma 4, (27), (29), and (33), with

and

, we get

For

, for

, by Assumption 1, there exists

, such that

When

, by Lemma 4, Lemma 1, and

, we have

When

, by Lemma 4, we have

Thus, when

, for

, if

, we have

The remaining cases can be proven similarly. This completes the proof of Lemma 5. □

We remark that, in the proof of Lemma 5, some inequalities hold for . The following Lemma 6 holds for .

Lemma 6. Let . Then there exists a constant such that the following inequalities hold,

where and are weights defined by (5) and (6), for .

Proof. We prove inequality (35); the proof of (34) is analogous.

where

denotes the remainder term. By setting

in the integral, we have

From Lemma 3, . Therefore, inequality (35) holds. □

5. Numerical Simulations

In this section, we will consider some numerical examples to illustrate the convergence orders of the proposed numerical method (6) under different smoothness conditions of . We focus on the case for . Similarly, we can consider the case for .

Let N be a positive integer and let $0 = t_0 < t_1 < \cdots < t_N = T$ be a partition of $[0,T]$. For the graded mesh, we choose $t_j = T(j/N)^r$, $j = 0, 1, \dots, N$, with $r \ge 1$. When $r = 1$, this mesh is the uniform mesh. For the modified mesh, we have for and for .
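The graded mesh $t_j = T(j/N)^r$, $j = 0, 1, \dots, N$, is straightforward to generate; the helper name `graded_mesh` below is hypothetical. The sketch also shows that, for $r > 1$, the first step is only $T N^{-r}$. By contrast, the last step, $T\,(1-(1-1/N)^r) \approx rT/N$, is about $r$ times the uniform step.

```python
def graded_mesh(T: float, N: int, r: float) -> list[float]:
    """Nodes t_j = T * (j / N)**r, j = 0..N; r = 1 gives the uniform mesh."""
    return [T * (j / N) ** r for j in range(N + 1)]


if __name__ == "__main__":
    t = graded_mesh(1.0, 20, 2.0)
    steps = [b - a for a, b in zip(t, t[1:])]
    # Tiny steps near t = 0, steps close to r * T / N near t = T
    print(f"first step {steps[0]:.2e}, last step {steps[-1]:.2e}")
```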

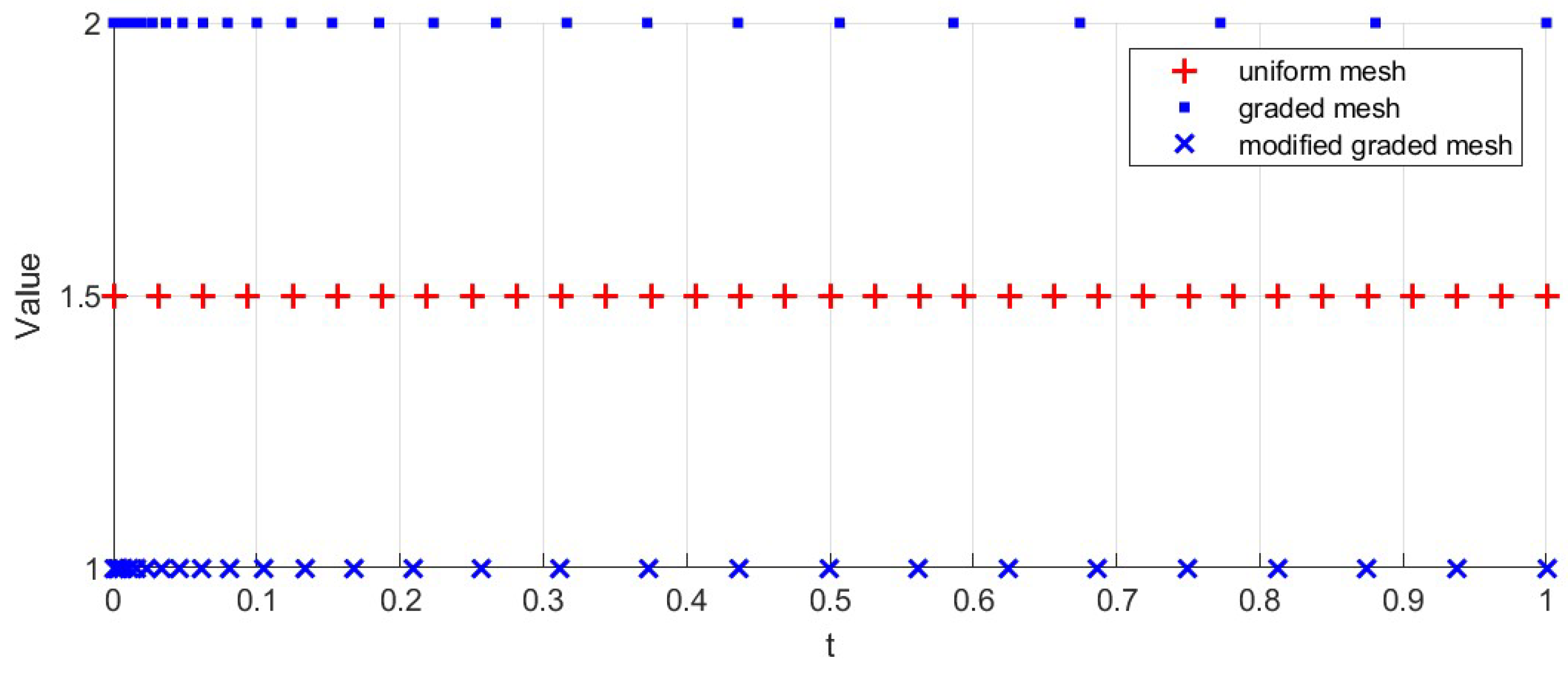

In Figure 1, we choose

and

and plot the graded mesh with

and uniform mesh with

and the modified mesh with

, and

with

. The modified graded mesh is non-uniform from

to

, and uniform from

to

.

Example 1. Consider the following fractional differential equation,

subject to the initial condition

where , , and , , and the exact solution is . Here, , which implies that the regularity of behaves as , so Assumption 1 is satisfied. Assume that

and

,

are the solutions of (3) and (6), respectively. By Theorem 1 with

, we have the following error estimate (note that

):

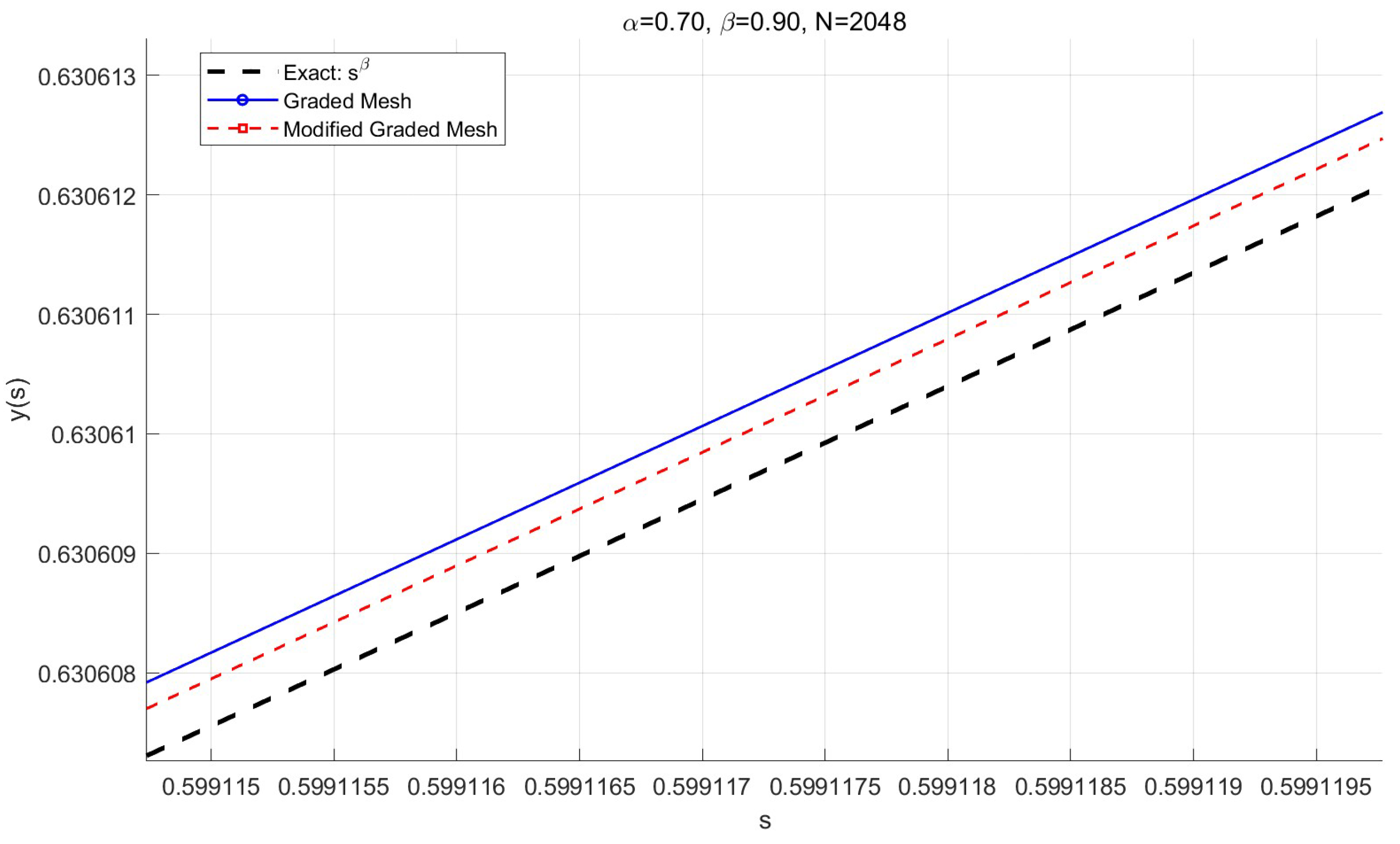

When

,

,

, and

, we compare the exact solution and the numerical solutions for the graded mesh

and the modified graded mesh

.

Figure 2 shows the exact solution together with the numerical solutions obtained on the graded mesh and on the modified graded mesh. Both approximate the exact solution well, but the modified graded mesh yields a smaller error than the graded mesh. In our numerical tests, the errors on the modified graded mesh depend on the value of K.
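The following is a minimal sketch of a fractional Adams predictor–corrector for $0<\alpha<1$ on an arbitrary mesh, for experimenting with mesh comparisons of this kind. The predictor replaces $f$ by a piecewise constant function and the corrector replaces it by its piecewise linear interpolant, with the weights integrated exactly. It is not the authors' code, and its predictor weights may differ from those in Appendix A. The function name `fractional_adams` is hypothetical. The test problem is a hypothetical choice, not Example 1: $y(t)=t^2$ with $f(t,y) = \frac{2}{\Gamma(3-\alpha)}t^{2-\alpha} + t^2 - y$. The cost is $O(N^2)$ because the full history is summed at every step.

```python
import math
from typing import Callable


def fractional_adams(f: Callable[[float, float], float], y0: float,
                     alpha: float, t: list[float]) -> list[float]:
    """Predictor-corrector sketch for D^alpha y = f(t, y), y(0) = y0, 0 < alpha < 1.

    t is any increasing mesh with t[0] = 0 (uniform, graded, or modified graded).
    Returns approximations y_0, ..., y_N at the mesh points.
    """
    inv_gamma = 1.0 / math.gamma(alpha)
    y = [y0]
    fv = [f(t[0], y0)]  # stored values f(t_j, y_j) for the memory sums
    for n in range(1, len(t)):
        pred = 0.0      # sum_j b_j f_j (piecewise constant f)
        corr = 0.0      # sum_{j<n} a_j f_j (piecewise linear f)
        w_new = 0.0     # corrector weight a_n of the new node t_n
        for j in range(n):
            h = t[j + 1] - t[j]
            A, B = t[n] - t[j], t[n] - t[j + 1]
            # Exact kernel integrals over [t_j, t_{j+1}]
            I0 = (A**alpha - B**alpha) / alpha
            I1 = A * I0 - (A**(alpha + 1) - B**(alpha + 1)) / (alpha + 1)
            pred += I0 * fv[j]
            corr += (I0 - I1 / h) * fv[j]
            if j + 1 < n:
                corr += (I1 / h) * fv[j + 1]
            else:
                w_new = I1 / h
        y_pred = y0 + inv_gamma * pred
        y_new = y0 + inv_gamma * (corr + w_new * f(t[n], y_pred))
        y.append(y_new)
        fv.append(f(t[n], y_new))
    return y


if __name__ == "__main__":
    alpha = 0.5

    def rhs(s: float, v: float) -> float:
        # Chosen so that the exact solution is y(t) = t**2 with y(0) = 0.
        return 2.0 / math.gamma(3.0 - alpha) * s ** (2.0 - alpha) + s * s - v

    for N in (32, 64, 128):
        for name, mesh in (("uniform", [j / N for j in range(N + 1)]),
                           ("graded r=2", [(j / N) ** 2 for j in range(N + 1)])):
            ys = fractional_adams(rhs, 0.0, alpha, mesh)
            err = max(abs(yj - tj * tj) for yj, tj in zip(ys, mesh))
            print(f"N={N:4d} {name:10s} max nodal error {err:.3e}")
```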

For the different values of

, we select appropriate values of r and set

, where

Then, we compute the maximum nodal error

(as previously defined) for various N and determine the experimental order of convergence (EOC) using the following formula:

In Table 1, Table 2 and Table 3, we set

and present the experimental orders of convergence (EOC) together with the maximum nodal errors for different values of N. The numerical results indicate that the errors on the modified mesh are smaller than those on the graded mesh.
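This sketch assumes a common way to compute the EOC from consecutive rows of such tables. For errors $e_1, e_2$ measured with $N_1 < N_2$, $\mathrm{EOC} = \log(e_1/e_2)/\log(N_2/N_1)$, which reduces to $\log_2(e_1/e_2)$ when $N$ is doubled. The helper name `eoc` is hypothetical.

```python
import math


def eoc(sizes: list[int], errors: list[float]) -> list[float]:
    """EOC between consecutive runs: log(e_i / e_{i+1}) / log(N_{i+1} / N_i)."""
    return [
        math.log(e0 / e1) / math.log(n1 / n0)
        for n0, n1, e0, e1 in zip(sizes, sizes[1:], errors, errors[1:])
    ]


if __name__ == "__main__":
    # Hypothetical errors decaying like N**(-1.5), only to show the call
    sizes = [16, 32, 64, 128]
    errors = [N ** -1.5 for N in sizes]
    print(eoc(sizes, errors))   # each entry is 1.5 up to round-off
```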

Example 2. Consider the following equation,

where and . The exact solution is , where is the Mittag-Leffler function defined by

Hence,

which suggests that the regularity of

behaves as

, where

.
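Evaluating the exact solution in this example requires the Mittag-Leffler function. The sketch below assumes the series $E_{\alpha,\beta}(z) = \sum_{k=0}^{\infty} z^{k}/\Gamma(\alpha k + \beta)$, where $\beta = 1$ gives the one-parameter function $E_{\alpha}(z)$, truncated after a fixed number of terms. The helper name `mittag_leffler` is hypothetical. Plain summation is adequate only for moderate $|z|$. For large negative arguments, cancellation between terms makes it inaccurate, and a dedicated algorithm should be used instead.

```python
import math


def mittag_leffler(z: float, alpha: float, beta: float = 1.0, terms: int = 200) -> float:
    """Truncated series E_{alpha,beta}(z) = sum_k z**k / Gamma(alpha*k + beta).

    beta = 1 gives the one-parameter function E_alpha(z). Suitable for
    moderate |z| only: for large negative z the alternating terms cancel badly.
    """
    if z == 0.0:
        return 1.0 / math.gamma(beta)
    log_abs_z = math.log(abs(z))
    total = 0.0
    for k in range(terms):
        # |z|**k / Gamma(alpha*k + beta), computed in log space to avoid overflow
        magnitude = math.exp(k * log_abs_z - math.lgamma(alpha * k + beta))
        sign = -1.0 if (z < 0.0 and k % 2 == 1) else 1.0
        total += sign * magnitude
    return total
```

As sanity checks, $E_{1}(z) = e^{z}$ and $E_{2}(-x^2) = \cos x$.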

According to Theorem 1, when

, the error estimate is given by

Table 4, Table 5 and Table 6 summarize the experimental orders of convergence (EOC) along with the maximum nodal errors for different values of N. The observed EOC agrees closely with the theoretical prediction:

.

Both the analysis and the numerical experiments show that the modified graded mesh achieves smaller errors than the graded mesh. The traditional graded mesh, with its non-uniform step sizes, is effective at resolving the weak singularity near the initial time $t = 0$. However, its step sizes grow as the nodes move away from the initial point, and this coarseness can lead to significant errors. In contrast, the modified graded mesh uses a graded mesh near $t = 0$ to handle the singularity and switches to a uniform mesh in later regions, effectively reducing the overall error.