1. Introduction

In the realm of mathematical modeling, initial value problems (IVPs) play a pivotal role in representing a wide array of physical, biological, and engineering phenomena. The numerical solutions (NUMSs) of IVPs are essential for obtaining approximate solutions (APPSs) when analytical methods prove intractable. Different numerical methods have been developed to obtain APPSs for ODEs. One common approach is to convert the differential equation (DE) into a system of algebraic equations. Foundational texts such as Shen et al. [1] give a comprehensive picture of the spectral methods paradigm. The literature presents a wide array of basis functions for this purpose, encompassing both non-orthogonal and orthogonal polynomials.

Among the non-orthogonal polynomials, Bernstein polynomials have been effectively employed for high-order ordinary differential equations (ODEs) [2,3], whereas alternative methods have utilized bases such as Bernoulli polynomials [4] and cubic Hermite splines [5]. Other studies have examined additional non-classical polynomials, including generalized Fibonacci and Vieta–Fibonacci polynomials, to address fractional and singular problems [6,7].

However, orthogonal polynomials are the more common choice because of their ability to produce accurate APPSs. The Jacobi polynomials (JPs) family is a flexible and unifying basis for many spectral methods. JPs and Romanovski–Jacobi polynomials have been employed to develop robust Galerkin and matrix methods for a range of complex DEs [8,9,10]. The strength of this family also comes from its important special cases. For example, Gegenbauer polynomials have been effectively utilized in time-fractional cable problems [11]. Chebyshev polynomials are used in many numerical methods, such as solving singular Lane–Emden equations [12], constructing Petrov–Galerkin schemes for beam equations [13], and building hybrid methods for nonlinear PDEs [14]. These ideas have been extended to specialized bases, such as airfoil polynomials for complex fluid models [15]. Beyond the Jacobi family, similar methods have used Chebyshev wavelets [16] and radial basis functions [17]. These various methods have made it possible to accurately solve other types of DEs, such as the Lane–Emden type [18,19] and the first Painlevé equation [20].

Orthogonal JPs possess numerous advantageous properties that render them highly effective for the NUMSs of various types of ODEs, particularly through spectral methods. The primary attributes of JPs include orthogonality, exponential accuracy, and the presence of two parameters that provide flexibility in constructing the APPSs. The usefulness of these characteristics is evident in the development of various computational techniques. The orthogonality of JPs is essential for the development of Jacobi–Galerkin methods, which provide a strong framework for solving complex differential equations [21]. Another useful technique exploits the properties of JPs to construct operational matrices (OMs) for derivatives. This approach streamlines the conversion of a DE into an algebraic system, as demonstrated in [22,23].
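As a rough illustration of how a derivative operational matrix turns a DE into an algebraic system, the following minimal sketch uses the Chebyshev basis on [-1, 1] rather than the Jacobi-based functions discussed in this paper. The toy problem y' + y = 0 with y(-1) = 1 and the helper names `derivative_operational_matrix` and `solve_test_ivp` are assumptions chosen for illustration only; they do not come from the paper.

```python
import numpy as np
from numpy.polynomial import chebyshev as C


def derivative_operational_matrix(n):
    """Return D of shape (n+1, n+1) with coeffs(y') = D @ coeffs(y) in the Chebyshev basis."""
    D = np.zeros((n + 1, n + 1))
    for k in range(1, n + 1):
        e_k = np.zeros(n + 1)
        e_k[k] = 1.0
        # chebder returns the n Chebyshev coefficients of the derivative of T_k
        D[:n, k] = C.chebder(e_k)
    return D


def solve_test_ivp(n):
    """Collocation for the toy IVP y' + y = 0 on [-1, 1] with y(-1) = 1 (assumed example)."""
    D = derivative_operational_matrix(n)
    nodes = C.chebpts1(n)                    # n collocation points: zeros of T_n
    V = C.chebvander(nodes, n)               # V[i, k] = T_k(nodes[i])
    ic_row = C.chebvander(np.array([-1.0]), n)
    A = np.vstack([V @ D + V, ic_row])       # residual rows, then initial-condition row
    b = np.zeros(n + 1)
    b[-1] = 1.0                              # y(-1) = 1
    return np.linalg.solve(A, b)


coeffs = solve_test_ivp(12)
x = np.linspace(-1.0, 1.0, 201)
max_error = np.max(np.abs(C.chebval(x, coeffs) - np.exp(-(x + 1.0))))
print(f"max error for n = 12: {max_error:.2e}")
```

The unknowns are the expansion coefficients, so the differential problem reduces to one square linear system. A different basis would change only the matrices `D` and `V`, not the overall structure.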

Orthogonal functions (OFs) have garnered significant interest in addressing different types of DEs. The primary attribute of this strategy is its ability to reduce these problems to a system of algebraic equations, which significantly simplifies them. For example, researchers have created fractional-order Legendre functions [24] and shifted fractional-order Jacobi functions to effectively address systems of fractional differential equations (FDEs) [25]. Another significant advancement was the proposal of Exponential Jacobi Polynomials (EJPs) for solving high-order ODEs on semi-infinite intervals [26]. These were later extended to develop new algorithms for partial DEs in unbounded domains [27,28].

This work extends the use of novel OFs derived from EJPs, named GEJFs, which satisfy the given initial conditions (ICs), to develop an SCM capable of addressing the following IVPs:

subject to the ICs

In this work, we introduce a novel methodology to solve Equations (1) and (2) by establishing new Galerkin OMs for ODEs based on the basis vectors of GEJFs. To the best of our knowledge, this strategy, which relies on the OM of the proposed basis vector, has not previously been used in the literature for ODEs. This novel technique enables the effective treatment of this class of ODEs and the attainment of their NUMSs.

This article is structured as follows: Section 2 presents particular properties of the JPs and EJFs. Section 3 focuses on the development of new OMs for the ODs of GEJFs. In Section 4, we analyze the application of the newly generated OMs in conjunction with the SCM as a numerical approach to solve (1) and (2). The error estimate for the NUMS obtained from this method is developed in Section 5. Section 6 provides numerical examples and comparisons with alternative approaches from the literature to illustrate the effectiveness of the proposed strategy. In Section 7, we summarize the key findings and draw implications from our research.

5. Convergence and Error Analysis (CEA)

In this section, we present the CEA of GEJFCOPMM. To this end, we consider the space

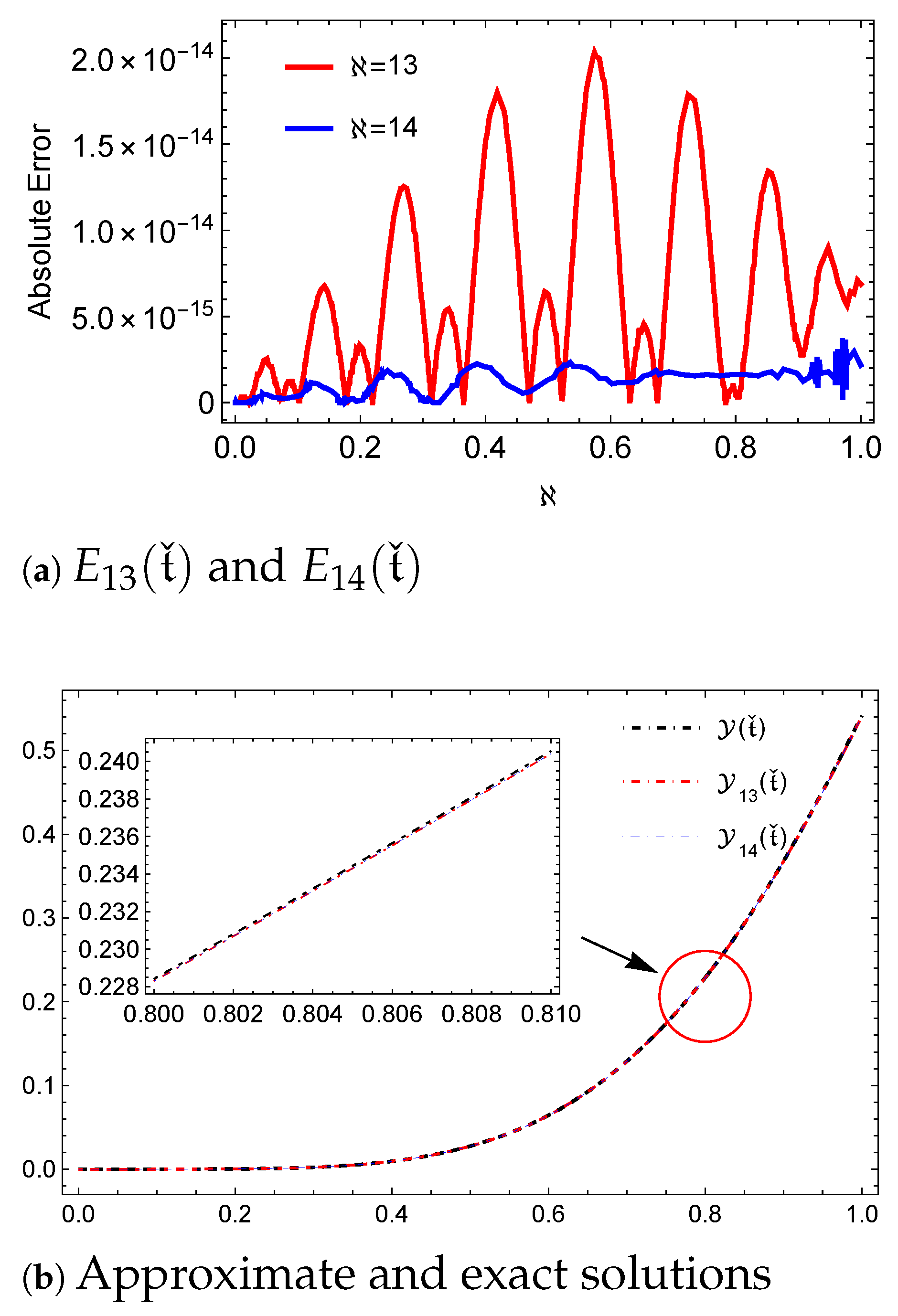

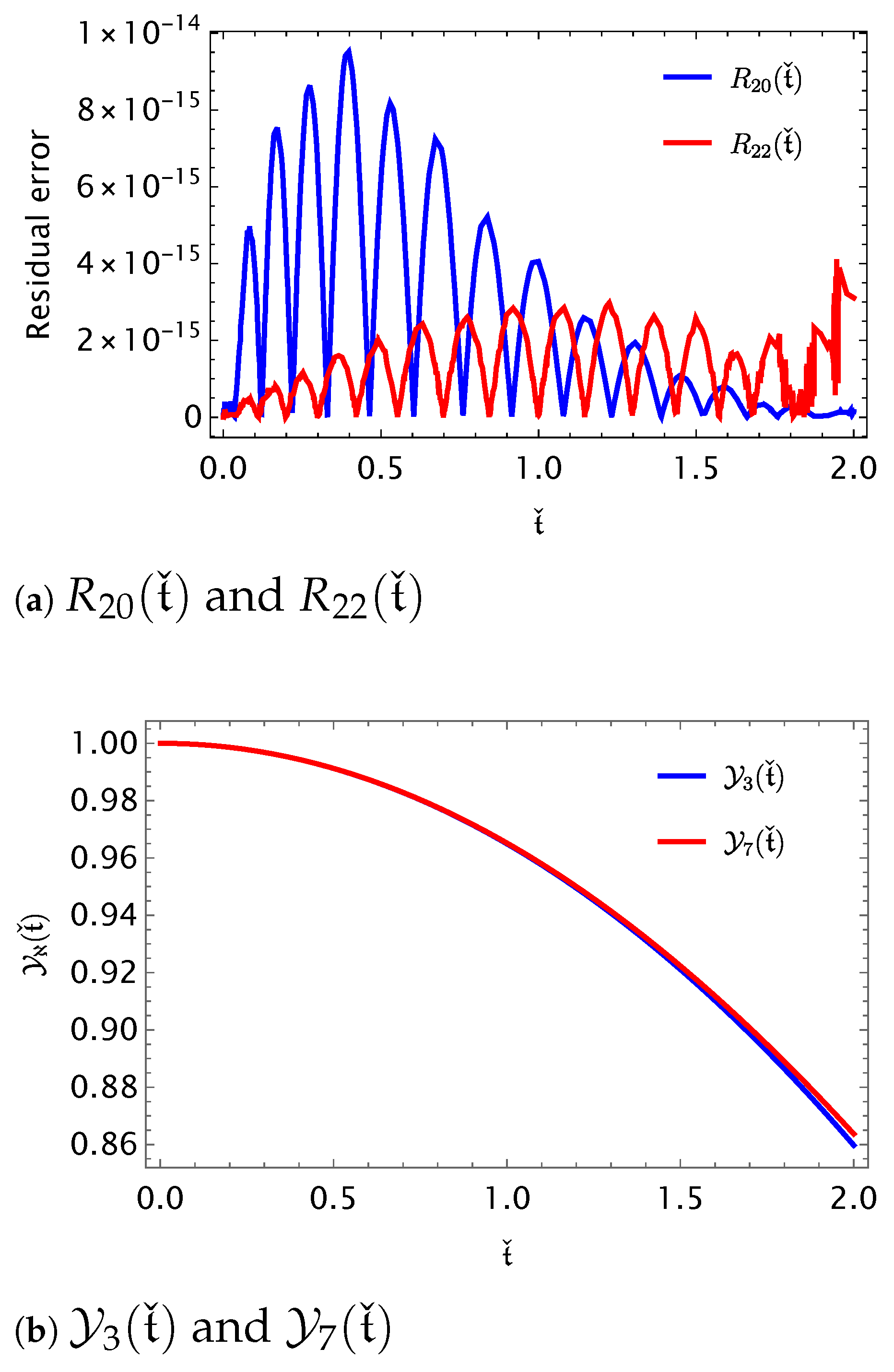

Additionally, the absolute error (AE) between

and its approximation

can be defined by

In this paper, the error obtained by GEJFCOPMM is analyzed using the weighted

norm

and the

(MAE) norm
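The two error measures mentioned above can be approximated numerically along the following lines. This is a minimal sketch under stated assumptions: the interval, the weight function, and the helper names `max_abs_error`, `weighted_l2_error`, and `unit_weight` are placeholders, because the paper's own definitions are not reproduced in this text. The maximum is taken over a finite grid, so it only approximates the true supremum.

```python
import numpy as np
from numpy.polynomial.legendre import leggauss


def unit_weight(x):
    """Placeholder weight w(x) = 1 (assumption; substitute the weight of the chosen basis)."""
    return np.ones_like(x)


def max_abs_error(u, u_approx, a, b, n_grid=1001):
    """Grid approximation of max |u(x) - u_approx(x)| on [a, b]."""
    x = np.linspace(a, b, n_grid)
    return np.max(np.abs(u(x) - u_approx(x)))


def weighted_l2_error(u, u_approx, a, b, weight=unit_weight, n_quad=64):
    """Approximate sqrt( integral_a^b w(x) (u - u_approx)^2 dx ) by Gauss-Legendre quadrature."""
    t, w = leggauss(n_quad)                  # nodes and weights on [-1, 1]
    x = 0.5 * (b - a) * t + 0.5 * (b + a)    # map the nodes to [a, b]
    integrand = weight(x) * (u(x) - u_approx(x)) ** 2
    return np.sqrt(0.5 * (b - a) * np.sum(w * integrand))


# Usage on an assumed example: exp(-x) against its quadratic Taylor polynomial on [0, 1].
u = lambda x: np.exp(-x)
u2 = lambda x: 1.0 - x + 0.5 * x**2
print("MAE        :", max_abs_error(u, u2, 0.0, 1.0))
print("weighted L2:", weighted_l2_error(u, u2, 0.0, 1.0))
```

Gauss-Legendre quadrature is used here only because it is simple and accurate for smooth integrands on a finite interval. A singular or exponentially decaying weight would call for a quadrature rule matched to that weight.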

The following lemma is needed to prove Theorem 2:

Lemma 3.

Consider the zeros ; then, there exists a constant M such that

Proof. In view of (30), we have

Consider the function

. Using the mean value theorem, we get

then,

Applying the inequality (42) to (40), we obtain

which completes the proof of the lemma. □

Theorem 2.

Assume that , and has the form (27) and represents the best possible approximation (BPA) for out of . Then, there is a constant K such that and where , and M is the constant given by Lemma 3.

Proof. Using Theorem 3.3 in ([31], p. 109),

takes the form

where

(has the form of Equation (3.1) in ([31], p. 108)) is the interpolating polynomial for

at the points

that satisfy

, such that

. In view of Lemma 3, we have

Then, we get

where

is the leading coefficient of

. In view of ([29], Formula (7.32.2)),

we get

By using [32],

the inequality (50) takes the form

Now, consider

; so,

Since the APPS

represents the BPA to

, we have

and

So,

and

Using inequality (52) leads to

□
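The proof above invokes an interpolation result from [31] together with the leading coefficient of the polynomial whose zeros serve as nodes. For orientation, the classical remainder formula for polynomial interpolation, on which arguments of this kind typically rest, is recalled below in generic notation. The symbols f, p_N, x_i, ξ_x, q_{N+1}, and k_{N+1} are illustrative assumptions and are not necessarily the paper's notation.

```latex
% Classical interpolation remainder (generic notation, assumed for illustration):
% f is (N+1)-times continuously differentiable on [a,b], and p_N interpolates f
% at the distinct nodes x_0, ..., x_N in [a,b].
\[
  f(x) - p_N(x) = \frac{f^{(N+1)}(\xi_x)}{(N+1)!}\,\prod_{i=0}^{N} (x - x_i),
  \qquad \xi_x \in (a,b).
\]
% If the nodes are the zeros of a polynomial q_{N+1} of degree N+1 with
% leading coefficient k_{N+1}, the node product equals q_{N+1} in monic form:
\[
  \prod_{i=0}^{N} (x - x_i) = \frac{q_{N+1}(x)}{k_{N+1}},
  \qquad\text{so}\qquad
  \max_{x\in[a,b]} |f(x) - p_N(x)|
  \le \frac{\max_{[a,b]} |f^{(N+1)}|}{(N+1)!\,|k_{N+1}|}\,
      \max_{x\in[a,b]} |q_{N+1}(x)|.
\]
```

Under these assumptions, a bound on the derivative of the interpolated function, combined with a bound on the node polynomial and its leading coefficient, gives an error estimate that decays factorially in N.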

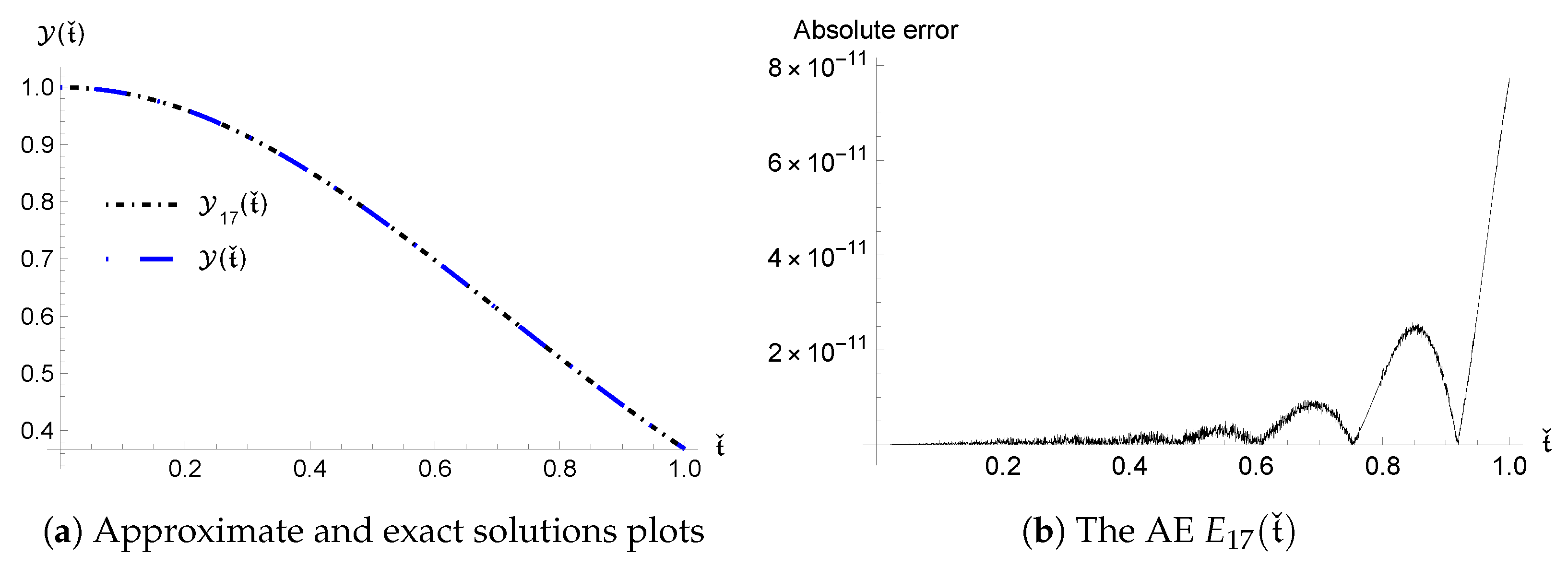

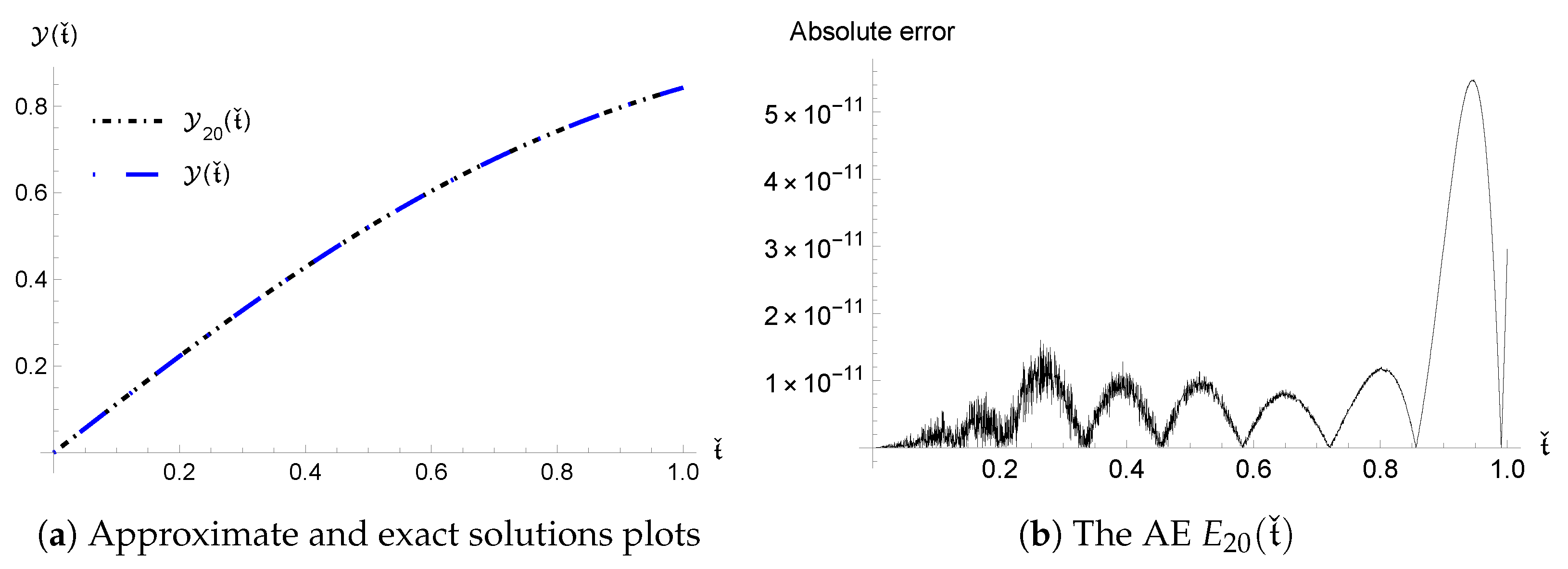

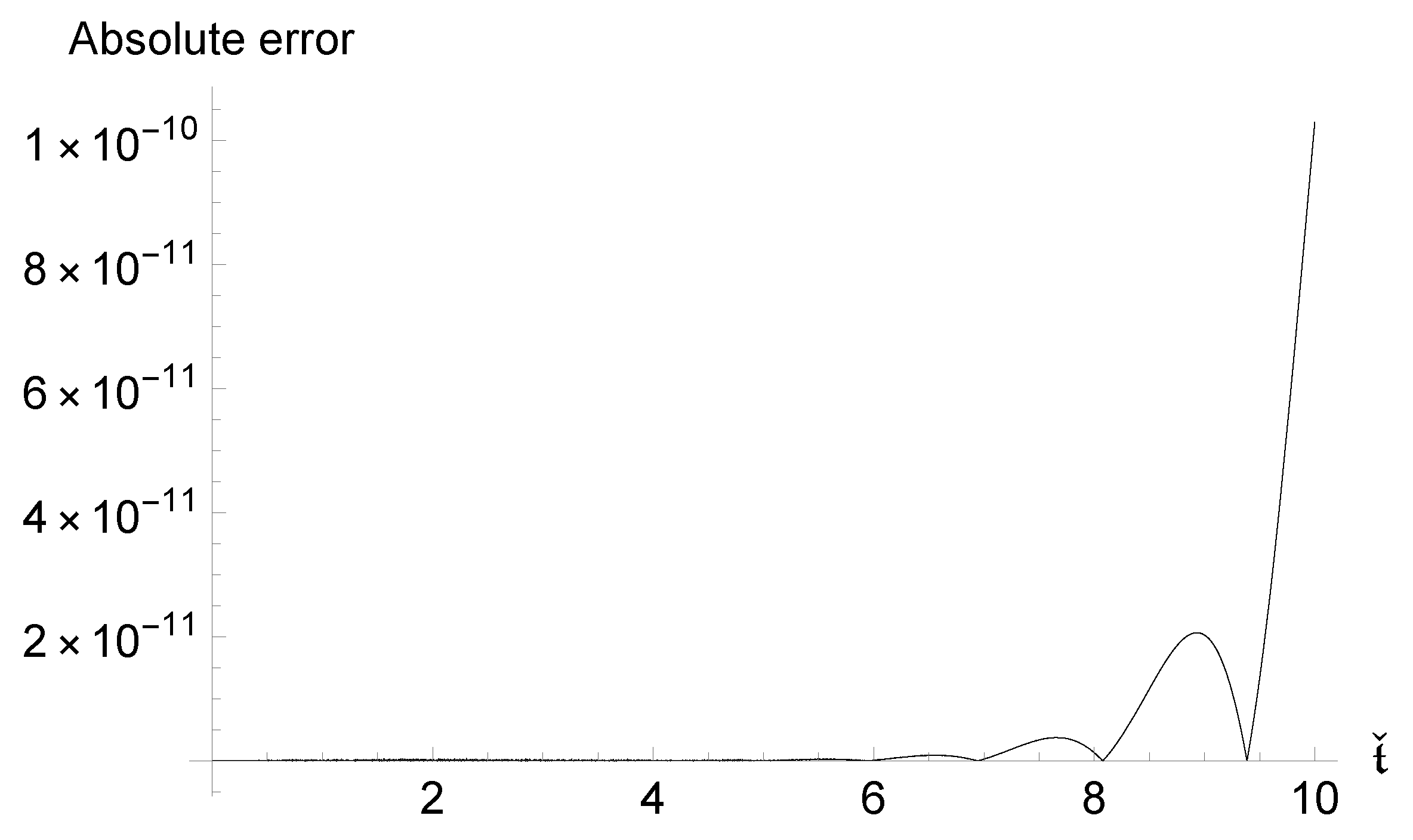

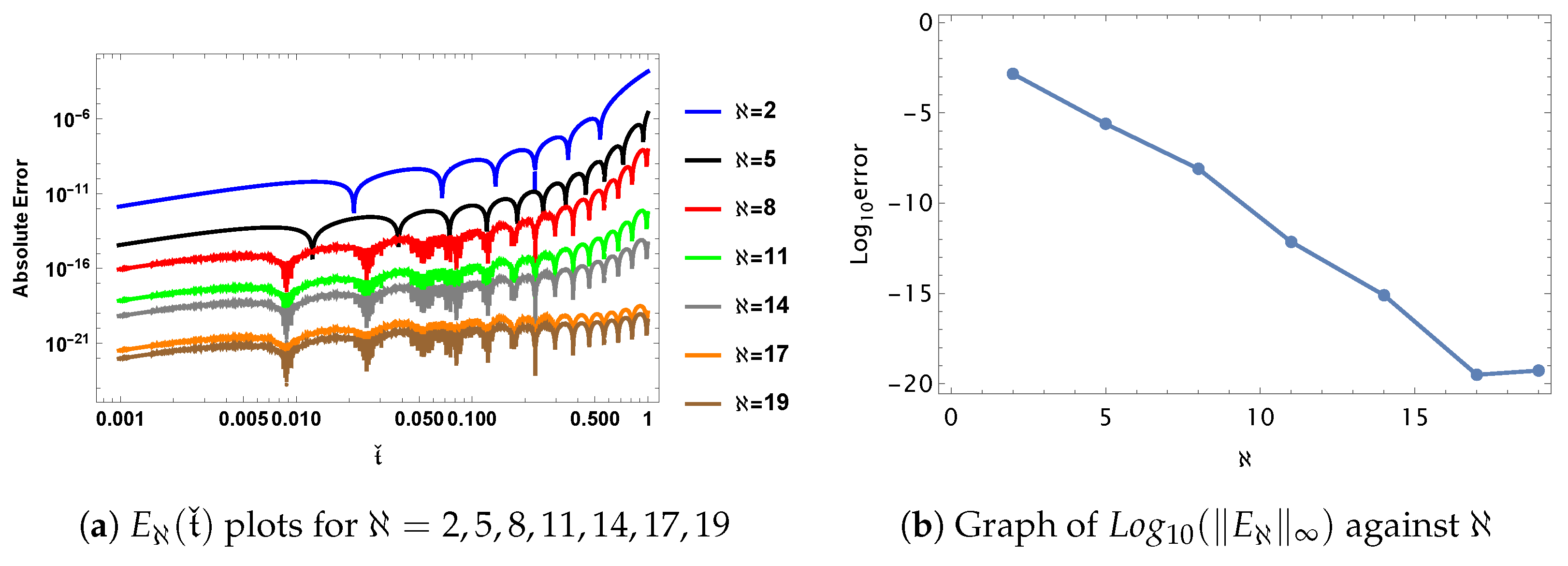

The following corollary shows that the resulting errors converge rapidly.

Corollary 3.

For all , we have and

The next theorem establishes the stability of the error, i.e., it provides an estimate of error propagation.

Theorem 3.

For any two successive approximations of , we get where ≲ means that a generic constant d exists, such that .

Proof. We have

By considering (59), we can obtain (61). □
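The qualitative behavior described by Corollary 3 and Theorem 3 (rapid error decay, and small differences between successive approximations) can be observed on a simple stand-in. The sketch below uses Chebyshev interpolation of the assumed test function exp(-x) on [0, 1]; it is not GEJFCOPMM, and the function, interval, and degrees are chosen only for illustration.

```python
import numpy as np
from numpy.polynomial import Chebyshev


def u_exact(x):
    """Assumed smooth test function."""
    return np.exp(-x)


x = np.linspace(0.0, 1.0, 501)
degrees = [2, 4, 6, 8]

# Polynomial approximations of increasing degree on the same interval
approxs = [Chebyshev.interpolate(u_exact, d, domain=[0.0, 1.0]) for d in degrees]

# Maximum absolute error of each approximation on the grid
errors = [np.max(np.abs(u_exact(x) - p(x))) for p in approxs]

# Maximum difference between consecutive approximations in the list
successive = [np.max(np.abs(q(x) - p(x))) for p, q in zip(approxs, approxs[1:])]

for d, e in zip(degrees, errors):
    print(f"degree {d}: max error {e:.2e}")
for d, s in zip(degrees, successive):
    print(f"max |u_{d + 2} - u_{d}|: {s:.2e}")
```

By the triangle inequality, each successive difference is bounded by the sum of the two corresponding errors, so it shrinks at the same rate as the errors themselves.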

7. Conclusions

In this work, we have introduced GEJFs that satisfy HC. Moreover, by combining the computed OMs with the SCM, GEJFCOPMM is established. GEJFCOPMM yields highly accurate NUMSs in an efficient manner. For future work, we have identified several potential research avenues. First, we intend to extend the GEJFCOPMM framework to boundary value problems, which bring their own difficulties and opportunities for further development. Additionally, we believe that the theoretical findings presented in this paper can be adapted to handle other types of DEs, including PDEs and systems of DEs. Furthermore, exploring the application of GEJFs in real-world scenarios, such as in engineering and physics, could provide valuable insights and validate the robustness of our approach. We also encourage further investigation into the enhancement of OMs for various function classes to broaden the applicability of our method.