Joint Power Allocation Algorithm Based on Multi-Agent DQN in Cognitive Satellite–Terrestrial Mixed 6G Networks

Abstract

1. Introduction

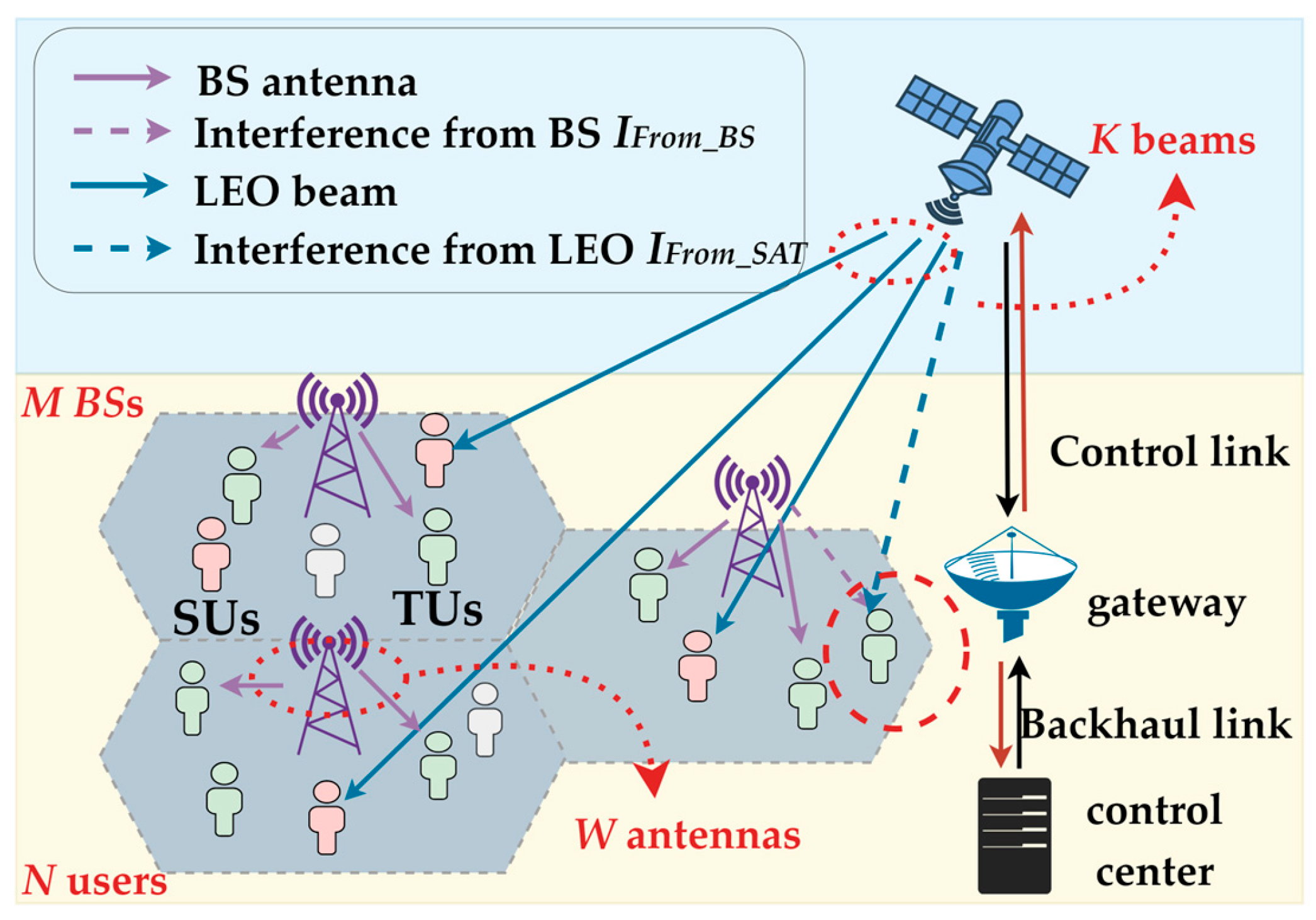

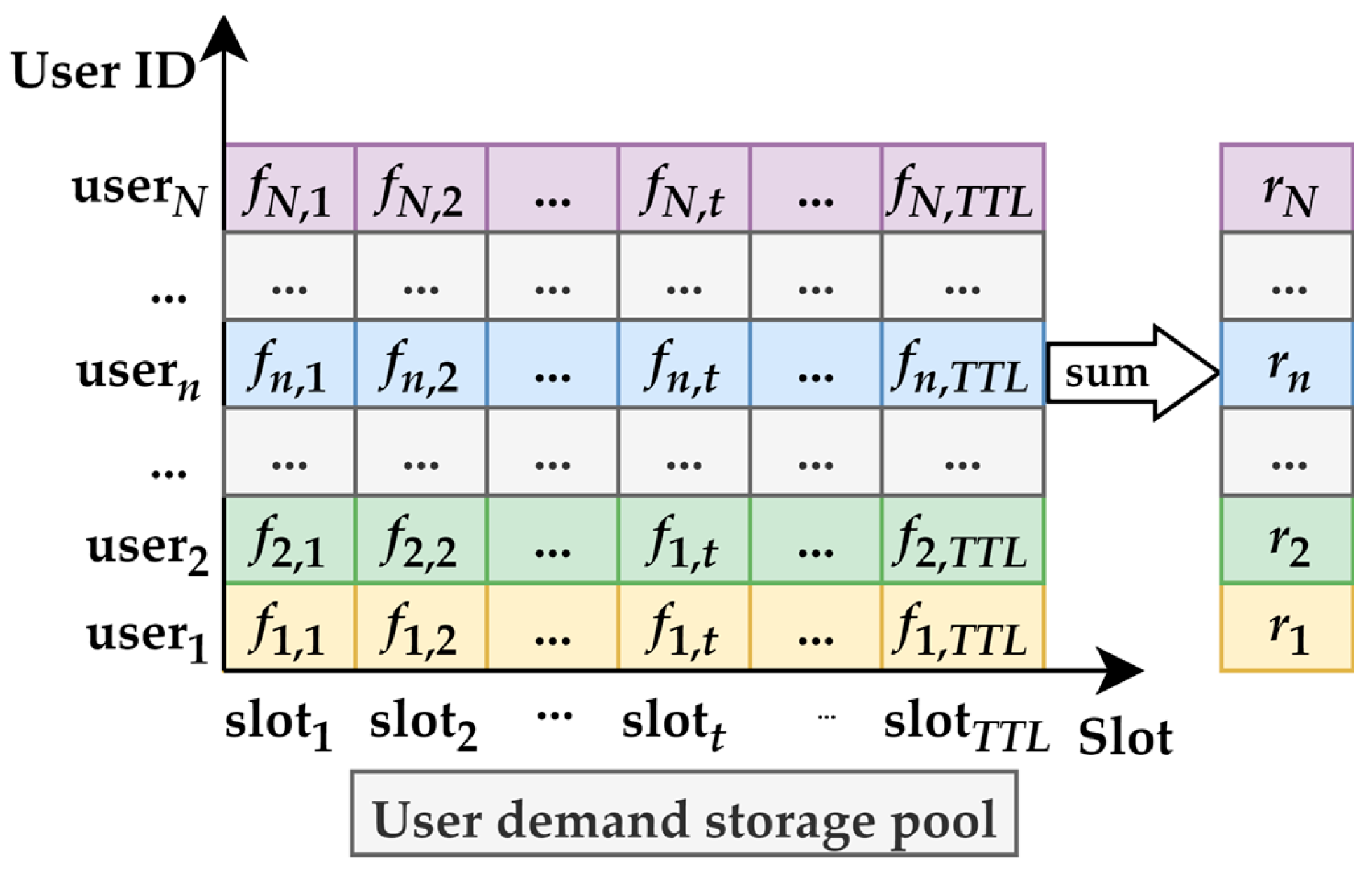

2. System Model

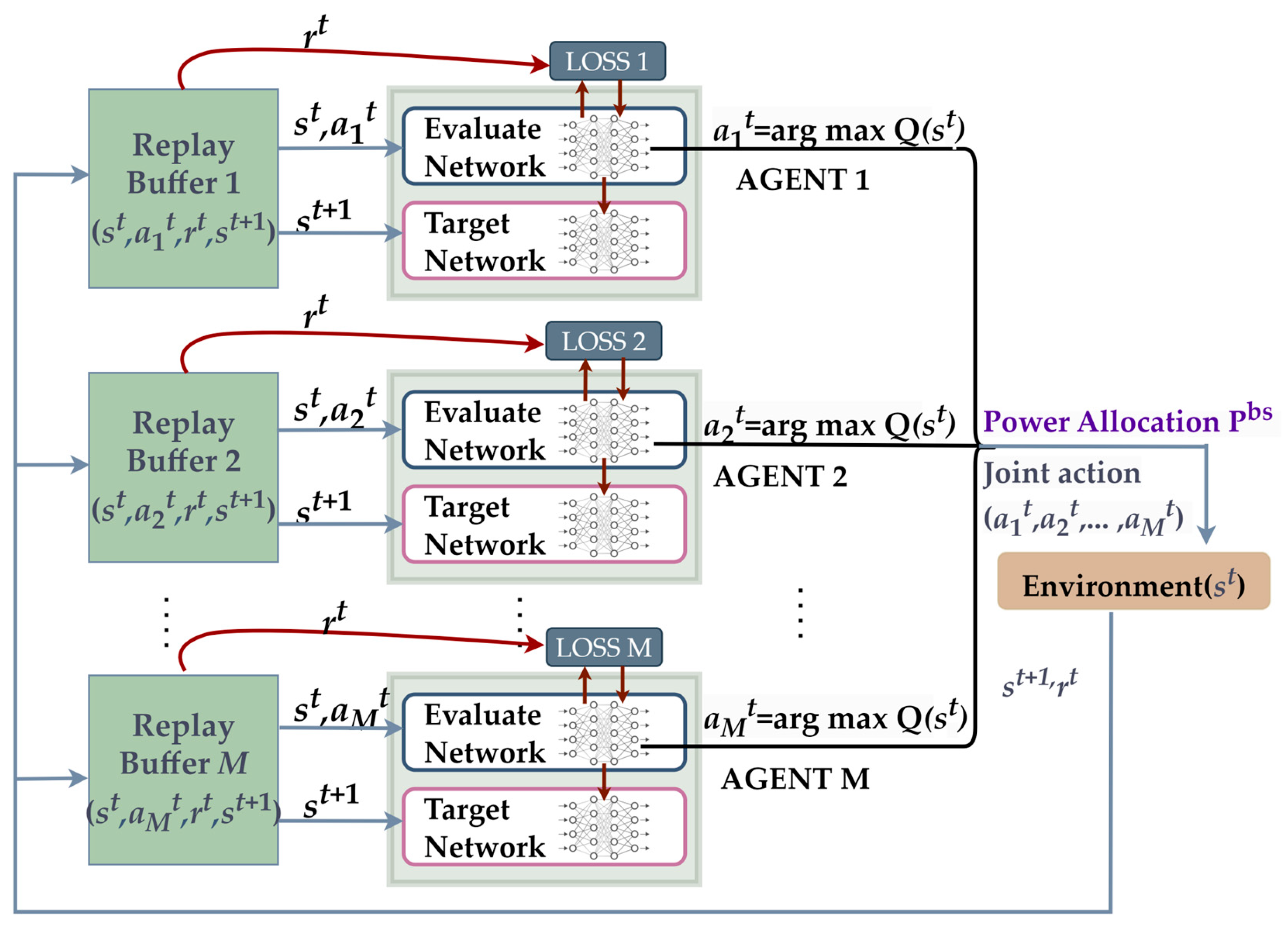

3. MADQN-IL Scheme

Algorithm 1. Ground base station terminal association algorithm

1. Input: BS and terminal positions {posBS, posUser}, BS radius {RBS}, satellite association status {ASAT}, and user requests {F}.
2. Determine the terminals already served by the satellite from the satellite beam pattern {nSat}; the BSs serve the remaining terminals {nBS}.
3. Calculate the distance from each terminal to each BS and determine the terminals within the coverage of BS m, m = 1, 2, …, M.
4. Associate terminals with each BS in descending order of user demand, and obtain the BS-user association matrix ABS.
5. for m = 1 to M do
6. Calculate the offset angle between each terminal and each antenna of BS m, and match terminals to antennas within the BS; the resulting matrix satisfies size(X) = N × M × W.
7. end for
8. Output: BS-terminal association matrix X.
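The association steps of Algorithm 1 can be sketched in Python as follows. This is an illustrative reading under stated assumptions, not the authors' implementation: the `Terminal` record, the evenly spaced antenna boresights, the per-BS admission limit `max_users_per_bs`, the rule that a terminal joins only the first BS that admits it, and the index order X[m][n][w] (BS m, antenna n, terminal w) are all hypothetical choices.

```python
import math
from dataclasses import dataclass


@dataclass
class Terminal:
    """Hypothetical terminal record (not defined in the paper)."""
    pos: tuple            # (x, y) position in km
    demand: float         # pending request volume taken from the request set F
    served_by_sat: bool   # True if a satellite beam already serves it (step 2)


def offset_angle_deg(bs_pos, boresight_deg, term_pos):
    """Absolute angle in [0, 180] between an antenna boresight and the BS-to-terminal direction."""
    bearing = math.degrees(math.atan2(term_pos[1] - bs_pos[1], term_pos[0] - bs_pos[0]))
    return abs((bearing - boresight_deg + 180.0) % 360.0 - 180.0)


def associate(bs_positions, radius_km, n_antennas, terminals, max_users_per_bs):
    """Return X[m][n][w] = 1 if antenna n of BS m serves terminal w (hypothetical index order)."""
    M, W = len(bs_positions), len(terminals)
    # Hypothetical: antenna boresights evenly spaced around each BS.
    boresights = [360.0 * n / n_antennas for n in range(n_antennas)]
    X = [[[0] * W for _ in range(n_antennas)] for _ in range(M)]
    admitted = set()  # hypothetical rule: each terminal joins at most one BS
    for m, bs in enumerate(bs_positions):
        # Steps 2-3: terrestrial (non-satellite) terminals inside this BS radius.
        candidates = [w for w, t in enumerate(terminals)
                      if not t.served_by_sat and w not in admitted
                      and math.dist(bs, t.pos) <= radius_km]
        # Step 4: admit terminals from highest to lowest demand.
        candidates.sort(key=lambda w: terminals[w].demand, reverse=True)
        for w in candidates[:max_users_per_bs]:
            admitted.add(w)
            # Step 6: match the terminal to the antenna with the smallest offset angle.
            best = min(range(n_antennas),
                       key=lambda n: offset_angle_deg(bs, boresights[n], terminals[w].pos))
            X[m][best][w] = 1
    return X
```

Each BS contributes one antenna-by-terminal slice, so `len(X)` equals the number of BSs; a different index order or a joint (rather than greedy, first-come) assignment across overlapping BSs would be equally valid readings of the algorithm.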

Algorithm 2. MADQN-IL algorithm for power allocation of base station antennas

1. Input: parameters of the M neural networks and M replay memories.
2. for episode = 1 to Ep do
3. Initialize the environment.
4. for step = 1 to Max_step do
5. Obtain the observation state s = {F}.
6. Obtain the association matrix X between the BS antennas and the terminals through Algorithm 1.
7. for m = 1 to M do
8. Agent m selects an antenna power allocation combination for BS m.
9. end for
10. The BS network executes the joint action a = {P1, P2, …, PM} and obtains the reward r and the next state s′:
11. Calculate the channel capacity that each BS provides to its terminals, based on X and the BS power allocation decisions.
12. Calculate the maximum interference IBS caused to satellite users by the terrestrial network, compare it with the threshold Ith, and determine the reward accordingly.
13. Update the user request pool to obtain the next state s′.
14. for m = 1 to M do
15. Store the quadruple (s, a, r, s′) in Memory m.
16. end for
17. If the number of quadruples stored in memory exceeds the number required to start training, start training:
18. for m = 1 to M do
19. Sample a random batch from Memory m.
20. Calculate the loss function.
21. Agent m updates its current Q-network.
22. Update the parameters of target network m at a fixed frequency.
23. end for
24. end for
25. end for
26. Output: trained neural network parameters and BS power allocation strategies.
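Steps 11 and 12 of Algorithm 2 can be illustrated with the sketch below. The reward formula is not reproduced in this section, so the Shannon-capacity sum, the aggregation of interference at each satellite user, and the linear penalty on the excess interference (scaled by a hypothetical `penalty_factor`) are assumptions for illustration only. The interference-penalty factor examined in the results might play the role of `penalty_factor`, but that mapping is also an assumption.

```python
import math


def sum_capacity_mbps(bandwidth_mhz, sinrs_linear):
    """Step 11 (sketch): Shannon capacity B*log2(1 + SINR), summed over the BS terminals."""
    return sum(bandwidth_mhz * math.log2(1.0 + s) for s in sinrs_linear)


def max_interference_dbw(tx_powers_w, gains):
    """Step 12 (sketch): I_BS as the largest aggregate interference over satellite users.

    gains[u][k] is a hypothetical linear gain from BS antenna k to satellite user u;
    at least one satellite user must receive nonzero interference.
    """
    per_user_w = [sum(p * g for p, g in zip(tx_powers_w, row)) for row in gains]
    return 10.0 * math.log10(max(per_user_w))


def reward(capacity_mbps, i_bs_dbw, i_th_dbw, penalty_factor):
    """Step 12 (sketch): keep the full capacity when I_BS <= I_th; otherwise subtract a
    hypothetical penalty proportional to the excess interference in dB."""
    excess_db = max(0.0, i_bs_dbw - i_th_dbw)
    return capacity_mbps - penalty_factor * excess_db
```

A soft penalty of this kind lets an agent trade a small constraint violation against throughput during training; a hard rule (for example, zero reward whenever I_BS exceeds I_th) is an equally plausible alternative.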

4. Results and Discussion

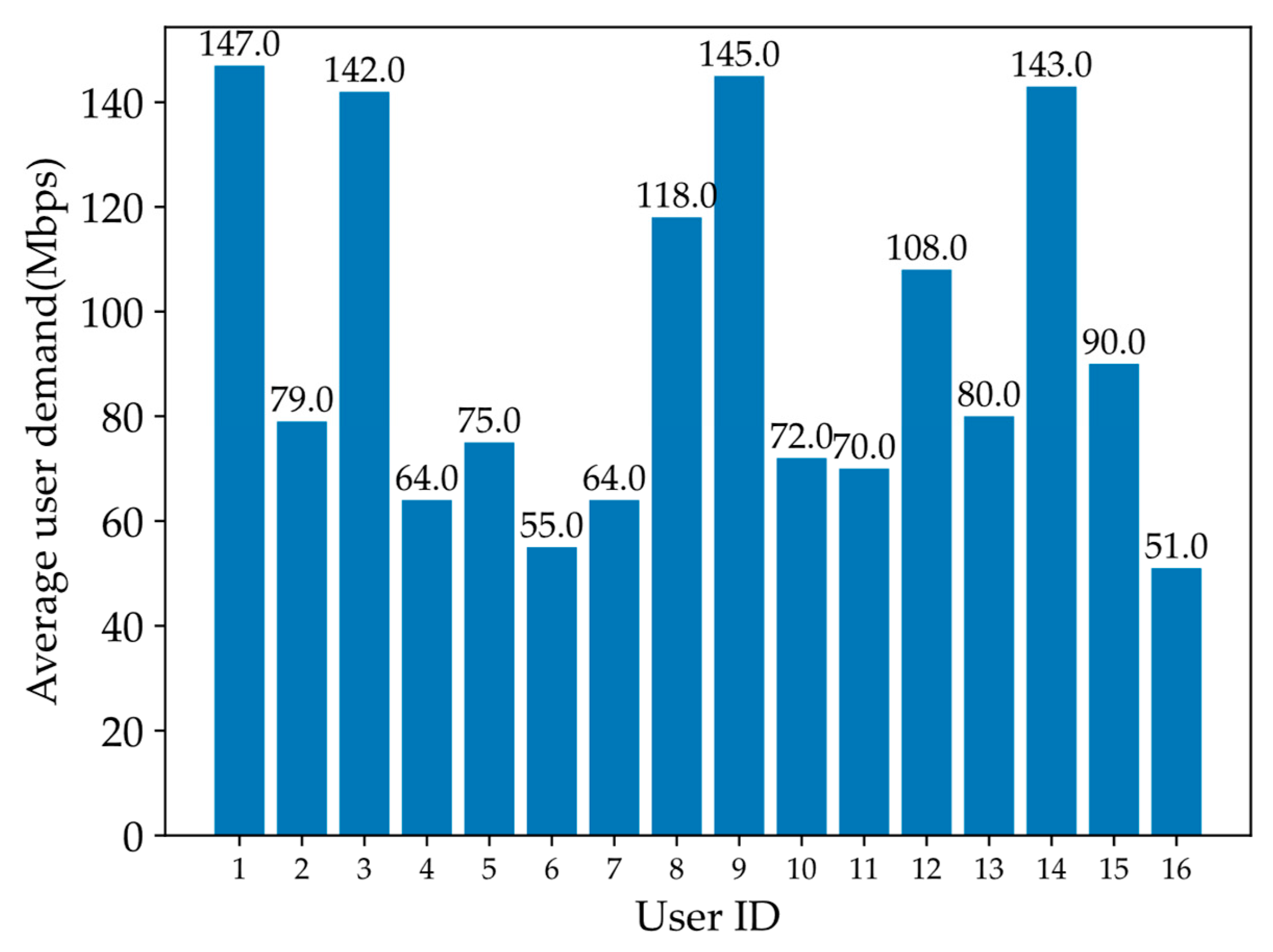

4.1. Simulation Environment Settings

| Parameters | Value |
|---|---|
| Training steps | 250 |
| Learning rate α | 0.00001 |
| Greedy factor ε | 0.9 |
| Discount factor γ | 0.95 |
| Update frequency | 200 |
| Memory size | 10,000 |
| Training episodes | 4000 |
| Batch size | 512 |
| Sampling interval | 20 |
| Number of networks | 3 |
| Number of inputs | 640 |
| Neurons per layer | 128/128/128/125 |
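The per-agent replay and learning steps of Algorithm 2 (steps 8, 15, and 17 to 22) can be sketched with the hyperparameters listed above. This is a framework-free illustration, not the authors' code: the Q-functions are passed in as hypothetical callables so the sketch runs without a deep learning library, and reading ε = 0.9 as the probability of acting greedily, omitting a terminal flag, and counting the update frequency in learning steps are all assumptions.

```python
import random
from collections import deque

# Values from the simulation table; their exact semantics are assumptions.
MEMORY_SIZE = 10_000
BATCH_SIZE = 512
GAMMA = 0.95
GREEDY_PROB = 0.9      # assumption: epsilon = 0.9 is the probability of acting greedily
TARGET_UPDATE = 200    # assumption: target sync period, counted in learning steps


class ReplayMemory:
    """Per-agent FIFO replay memory of (s, a, r, s_next) quadruples (step 15)."""

    def __init__(self, capacity=MEMORY_SIZE):
        self.buffer = deque(maxlen=capacity)  # oldest quadruples are evicted first

    def push(self, s, a, r, s_next):
        self.buffer.append((s, a, r, s_next))

    def sample(self, rng, batch_size=BATCH_SIZE):
        # Step 19: uniform random batch; call only once len(self) >= batch_size (step 17).
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def select_action(q_values, rng, greedy_prob=GREEDY_PROB):
    """Step 8: epsilon-greedy choice of a power-allocation combination index."""
    if rng.random() < greedy_prob:
        return max(range(len(q_values)), key=q_values.__getitem__)
    return rng.randrange(len(q_values))


def dqn_loss(batch, q_fn, target_q_fn, gamma=GAMMA):
    """Step 20: mean squared TD error with target y = r + gamma * max_a' Q_target(s', a').

    Assumption: no terminal flag, since each episode simply stops after Max_step steps.
    q_fn and target_q_fn are hypothetical callables mapping a state to a list of Q-values.
    """
    errors = []
    for s, a, r, s_next in batch:
        y = r + gamma * max(target_q_fn(s_next))
        errors.append((y - q_fn(s)[a]) ** 2)
    return sum(errors) / len(errors)


def should_sync_target(learn_step, period=TARGET_UPDATE):
    """Step 22: copy the current Q-network into the target network every `period` steps."""
    return learn_step % period == 0
```

Because every agent owns its memory and networks, each of the M agents runs these functions independently on its own quadruples; only the reward couples them.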

4.2. Simulation Results

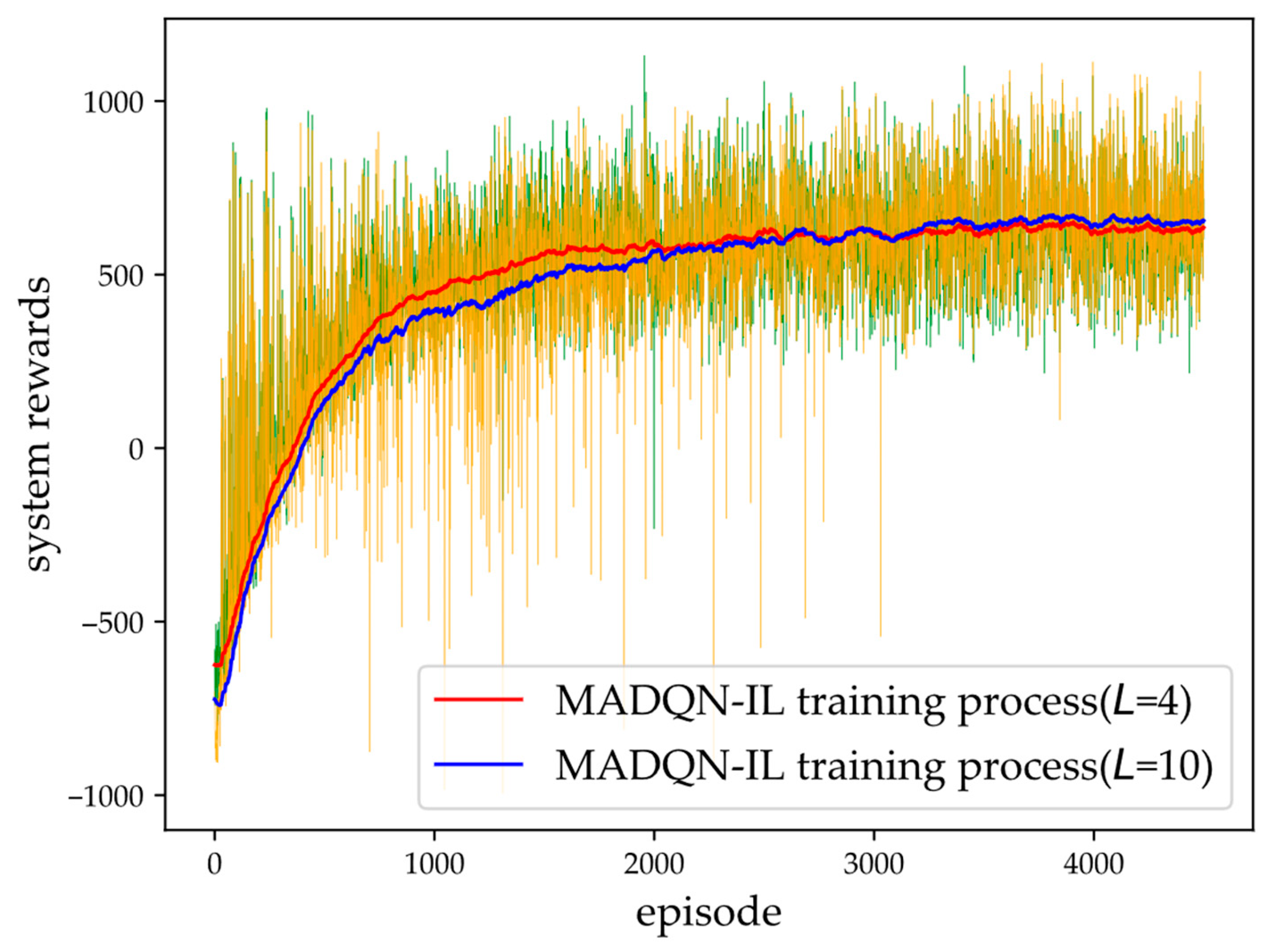

4.2.1. Network Training Convergence Result

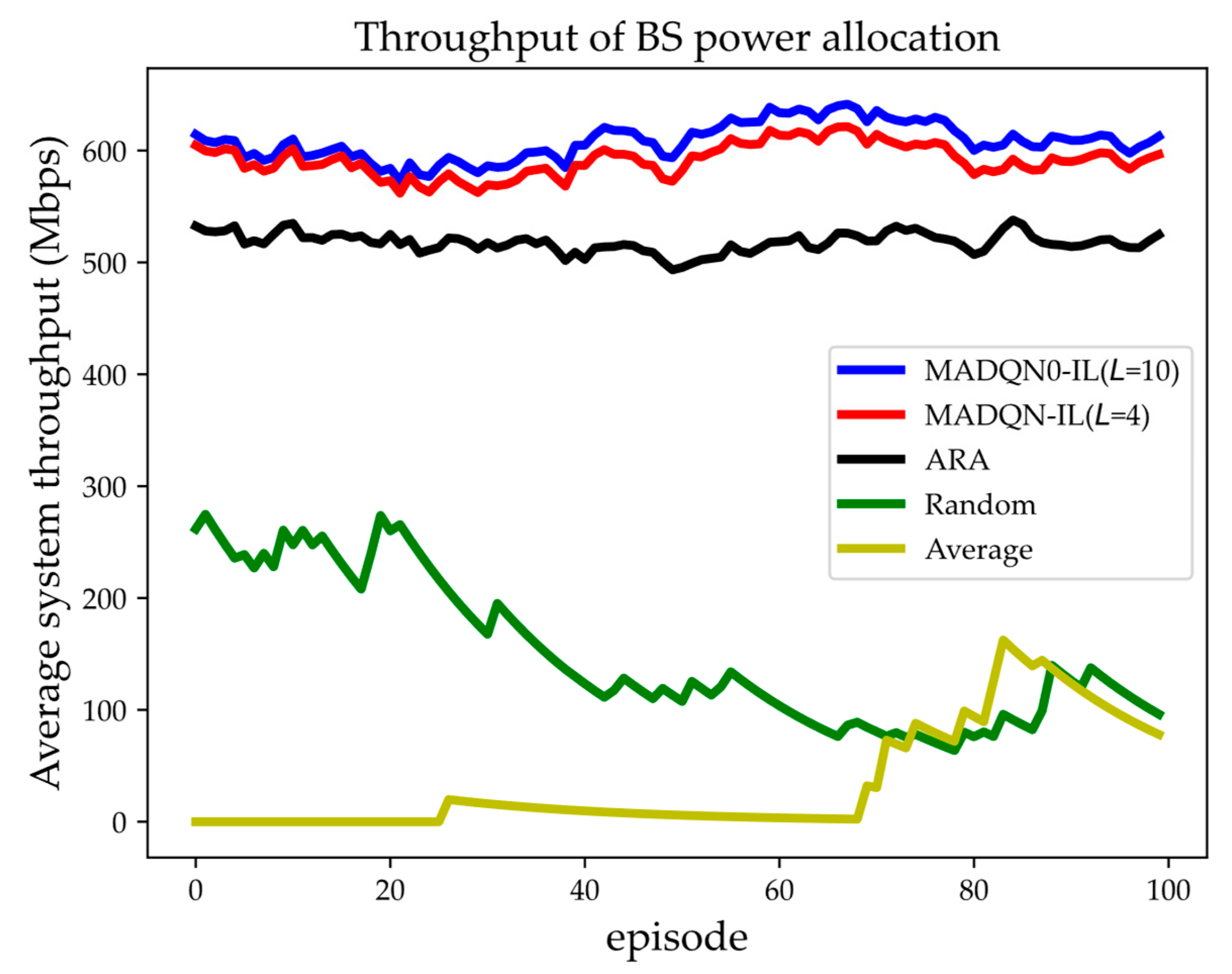

4.2.2. Test Results

- (1) Comparison of Throughput of Different Algorithms with Fixed BS Power

  - (i) ARA [22]: This algorithm iteratively reduces the antenna transmit power by a fixed step until the interference constraint is satisfied.

  - (ii) Random power allocation: The transmit power of each BS antenna is selected at random.

  - (iii) Average power allocation: The BS power is divided equally among the BS antennas.
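The three baselines listed above can be sketched as follows. The step size, the simultaneous reduction of all antenna powers in the ARA sketch, the discrete power levels in the random scheme, and the `interference_fn` callback are hypothetical; the actual ARA procedure is described in [22].

```python
import random


def ara_power(p_init_w, step_w, interference_fn, i_th, p_min_w=0.0):
    """ARA-style baseline (sketch): lower every antenna power by a fixed step until
    interference_fn(powers) <= i_th, or until all powers reach p_min_w."""
    powers = list(p_init_w)
    while interference_fn(powers) > i_th and any(p > p_min_w for p in powers):
        powers = [max(p_min_w, p - step_w) for p in powers]
    return powers


def random_power(levels_w, n_antennas, rng):
    """Random baseline: each antenna draws its power from a hypothetical discrete level set."""
    return [rng.choice(levels_w) for _ in range(n_antennas)]


def average_power(p_bs_w, n_antennas):
    """Average baseline: the BS power budget is split equally across its antennas."""
    return [p_bs_w / n_antennas] * n_antennas
```

For instance, `ara_power([8.0, 8.0], 1.0, sum, 10.0)` stops at `[5.0, 5.0]`, the first step at which the toy interference measure (the power sum) no longer exceeds the threshold.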

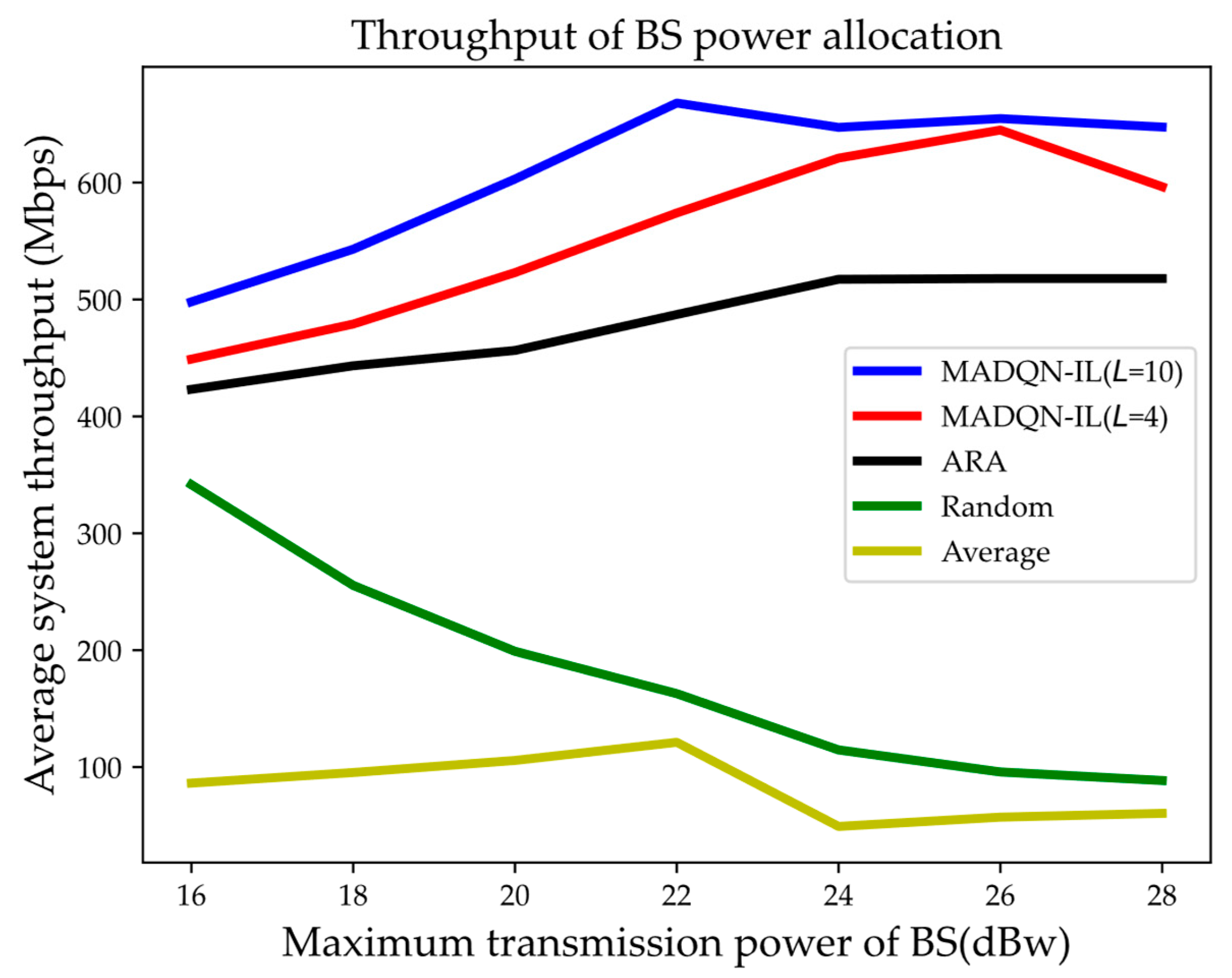

- (2) Comparison of Throughput of Different Algorithms when the BS Power Changes

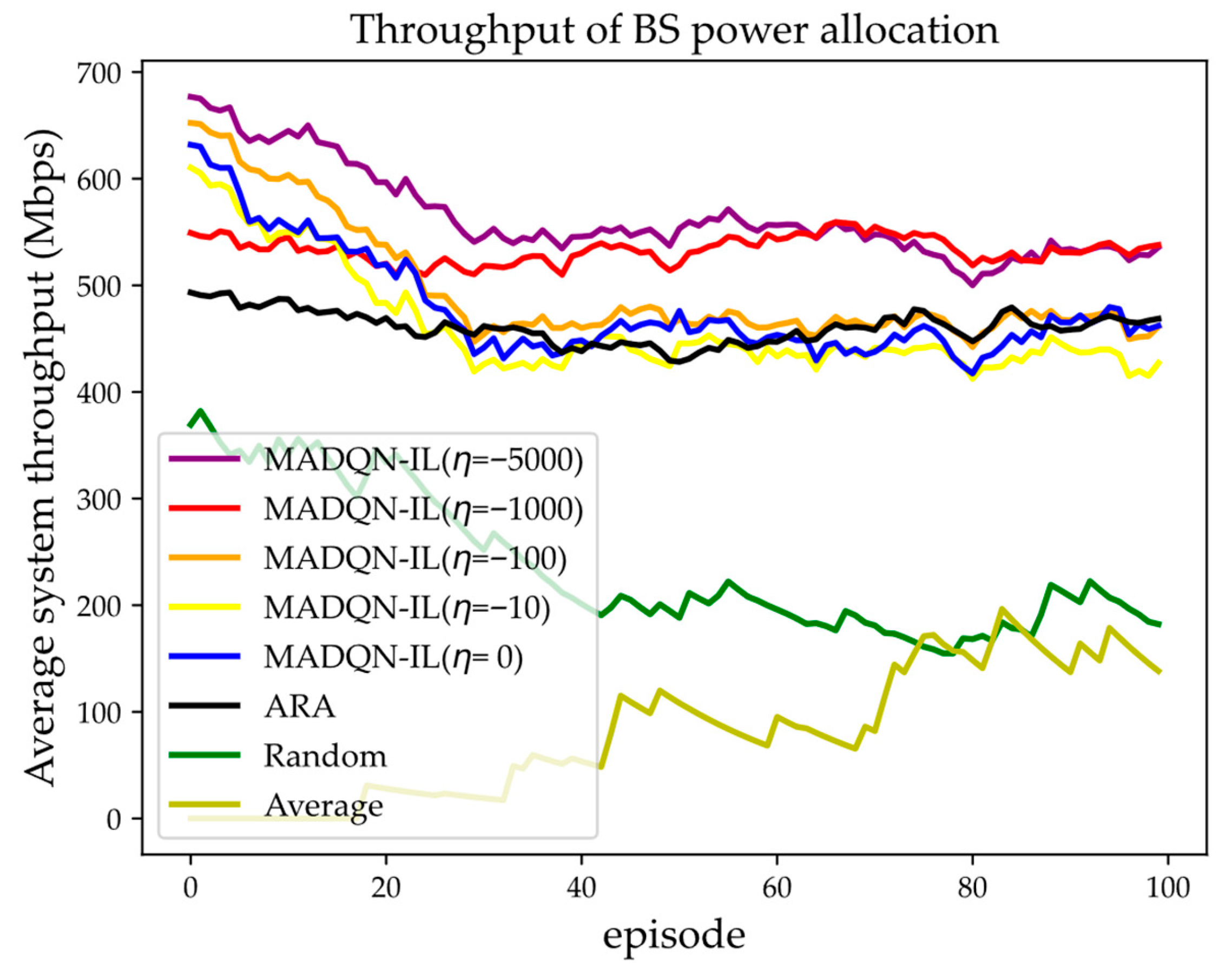

- (3) Comparison of Throughput of Different Algorithms with Different Interference-Penalty Factors

5. Conclusions

- (1) Building on the current state of research on satellite–terrestrial integrated networks and the related literature, we established a cognitive satellite–terrestrial integrated network architecture and its downlink communication process. The resource optimization problem accounts for the unbalanced and time-varying service demands of terrestrial users, which better reflects the diverse and variable services in satellite–terrestrial integrated networks.

- (2) To meet the cognitive radio requirements of satellite–terrestrial integrated networks, we propose the MADQN-IL algorithm for BS power allocation. Through collaborative decision making, multiple agents optimize system performance while keeping each neural network simple, which improves throughput under interference constraints. Simulations of this DRL-based terrestrial resource allocation algorithm show that MADQN-IL achieves higher system throughput under the interference limitation than the ARA and fixed power allocation schemes (for example, an average throughput of 609.01 Mbps for MADQN-IL with L = 10, versus 517.27 Mbps for ARA).

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CSTN | Cognitive Satellite–Terrestrial Network |
| SUs | Satellite Users |
| TUs | Terrestrial Base Station Users |
| MADQN-IL | Multi-Agent Deep Q-Network algorithm under Interference Limitation |
| SAGIN | Space–Air–Ground Integrated Network |
| ITSN | Integrated Terrestrial–Satellite Network |
| LPWAN | Low-Power Wide-Area Network |
| LLM | Large Language Model |
| LEO | Low Earth Orbit |
| BS | Base Station |
| IoT | Internet of Things |
| ST | Secondary Transmitter |
| SR | Secondary Receiver |
| NOMA | Non-Orthogonal Multiple Access |
| PU | Primary User |
| QoS | Quality of Service |
| DRL | Deep Reinforcement Learning |
| DDQN | Double Deep Q-Network |
| TTL | Time to Live |
| ARA | Adaptive Resource Adjustment |
| PPP | Poisson Point Process |
| MMSE | Minimum Mean Square Error |

References

- Bakambekova, A.; Kouzayha, N.; Al-Naffouri, T. On the Interplay of Artificial Intelligence and Space-Air-Ground Integrated Networks: A Survey. IEEE Open J. Commun. Soc. 2024, 5, 4613–4673.

- Heydarishahreza, N.; Han, T.; Ansari, N. Spectrum Sharing and Interference Management for 6G LEO Satellite-Terrestrial Network Integration. IEEE Commun. Surv. Tutor. 2024, 5, 1–32.

- Xiao, Y.; Ye, Z.; Wu, M.; Li, H.; Xiao, M.; Alouini, M.-S.; Al-Hourani, A.; Cioni, S. Space-Air-Ground Integrated Wireless Networks for 6G: Basics, Key Technologies, and Future Trends. IEEE J. Sel. Areas Commun. 2024, 42, 3327–3354.

- Xu, Y.; Xu, T.; Zhou, T.; Zhang, H.; Hu, H. Elastic Spectrum Sensing for Satellite-Terrestrial Communication under Highly Dynamic Channels. In Proceedings of the GLOBECOM 2023–2023 IEEE Global Communications Conference, Kuala Lumpur, Malaysia, 4–8 December 2023; pp. 2433–2438.

- Chen, B.; Xu, D. Outage Performance of Overlay Cognitive Satellite-Terrestrial Networks with Cooperative NOMA. IEEE Syst. J. 2024, 18, 222–233.

- Kwon, G.; Shin, W.; Conti, A.; Lindsey, W.C.; Win, M.Z. Access-Backhaul Strategy via gNB Cooperation for Integrated Terrestrial-Satellite Networks. IEEE J. Sel. Areas Commun. 2024, 42, 1403–1419.

- Javaid, S.; Khalil, R.A.; Saeed, N.; He, B.; Alouini, M.-S. Leveraging Large Language Models for Integrated Satellite-Aerial-Terrestrial Networks: Recent Advances and Future Directions. IEEE Open J. Commun. Soc. 2025, 6, 399–432.

- Ati, S.B.; Dahrouj, H.; Alouini, M.-S. An Overview of Performance Analysis and Optimization in Coexisting Satellites and Future Terrestrial Networks. IEEE Open J. Commun. Soc. 2025, 6, 3834–3852.

- Zhao, F.; Hao, W.; Guo, H.; Sun, G.; Wang, Y.; Zhang, H. Secure Energy Efficiency for mmWave-NOMA Cognitive Satellite Terrestrial Network. IEEE Commun. Lett. 2023, 27, 283–287.

- Ruan, Y.; Li, Y.; Wang, C.-X.; Zhang, R.; Zhang, H. Energy Efficient Power Allocation for Delay Constrained Cognitive Satellite Terrestrial Networks Under Interference Constraints. IEEE Trans. Wirel. Commun. 2019, 18, 4957–4969.

- Sharma, P.K.; Yogesh, B.; Gupta, D.; Kim, D.I. Performance Analysis of IoT-Based Overlay Satellite-Terrestrial Networks Under Interference. IEEE Trans. Cogn. Commun. Netw. 2021, 7, 985–1001.

- Peng, D.; Bandi, A.; Li, Y.; Chatzinotas, S.; Ottersten, B. Hybrid Beamforming, User Scheduling, and Resource Allocation for Integrated Terrestrial-Satellite Communication. IEEE Trans. Veh. Technol. 2021, 70, 8868–8882.

- Sariningrum, R.; Adi, P.D.P.; Maulana, Y.Y.; Adiprabowo, T.; Wibowo, S.H.; Andriana; Fitria, N.; Novita, H.; Kaffah, F.M.; Sopandi, A. Non-Terrestrial Networks LPWAN IoT Satellite Communication for Medical Application. In Proceedings of the 2025 International Conference on Smart Computing, IoT and Machine Learning (SIML), Surakarta, Indonesia, 3–4 June 2025; pp. 1–6.

- Fu, S.; Gao, J.; Zhao, L. Integrated Resource Management for Terrestrial-Satellite Systems. IEEE Trans. Veh. Technol. 2020, 69, 3256–3266.

- Wang, J.; Guo, D.; Zhang, B.; Jia, L.; Tong, X. Spectrum Access and Power Control for Cognitive Satellite Communications: A Game-Theoretical Learning Approach. IEEE Access 2019, 7, 164216–164228.

- Wen, X.; Ruan, Y.; Li, Y.; Pan, C.; Elkashlan, M.; Zhang, R.; Li, T. A Hierarchical Game Framework for Win-Win Resource Trading in Cognitive Satellite Terrestrial Networks. IEEE Trans. Wirel. Commun. 2024, 23, 13530–13544.

- Chen, Z.; Guo, D.; Ding, G.; Tong, X.; Wang, H.; Zhang, X. Optimized Power Control Scheme for Global Throughput of Cognitive Satellite-Terrestrial Networks Based on Non-Cooperative Game. IEEE Access 2019, 7, 81652–81663.

- Yuan, Y.; Lei, L.; Vu, T.X.; Chang, Z.; Chatzinotas, S.; Sun, S. Adapting to Dynamic LEO-B5G Systems: Meta-Critic Learning Based Efficient Resource Scheduling. IEEE Trans. Wirel. Commun. 2022, 21, 9582–9595.

- Li, X.; Zhang, H.; Zhou, H.; Wang, N.; Long, K.; Al-Rubaye, S.; Karagiannidis, G.K. Multi-Agent DRL for Resource Allocation and Cache Design in Terrestrial-Satellite Networks. IEEE Trans. Wirel. Commun. 2023, 22, 5031–5042.

- Lin, Z.; Ni, Z.; Kuang, L.; Jiang, C.; Huang, Z. Dynamic Beam Pattern and Bandwidth Allocation Based on Multi-Agent Deep Reinforcement Learning for Beam Hopping Satellite Systems. IEEE Trans. Veh. Technol. 2022, 71, 3917–3930.

- Wang, X.; Li, H.; Jia, M.; Zhang, W.; Guo, Q.; Zhu, H. Cooperative-NOMA Assisted by Relay with Sensing and Transmission Capabilities in Underlay Cognitive Hybrid Satellite-Terrestrial Networks. In Proceedings of the 10th International Conference on Intelligent Computing and Signal Processing (ICSP), Xi’an, China, 16–18 May 2025; pp. 38–45.

- Li, T.; Yao, R.; Fan, Y.; Zuo, X.; Miridakis, N.I.; Tsiftsis, T.A. Pattern Design and Power Management for Cognitive LEO Beaming Hopping Satellite-Terrestrial Networks. IEEE Trans. Cogn. Commun. Netw. 2023, 9, 1531–1545.

- 3rd Generation Partnership Project; Technical Specification Group Radio Access Network. Radio Frequency (RF) Requirements for Multicarrier and Multiple Radio Access Technology (Multi-RAT) Base Station (BS) (Release 11); 3GPP: Sophia Antipolis, France, 2013.

| Parameters | Values |
|---|---|
| BS radius R | 10 km |
| Maximum transmission power | 22 dBW |
| Maximum transmit antenna gain | 20 dBi |
| 3 dB beamwidth | 30° |
| Noise power ( MHz) | −117 dBW |
| Noise interference threshold | −123 dBW |
| Time slot length | 2 ms |
| Data packet time to live (TTL) | 40 |
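To make the parameter values in the table above easier to work with, the following sketch converts them to linear units and evaluates an antenna pattern. The parabolic pattern G(θ) = G_max − min(12(θ/θ_3dB)², A_m), of the kind used in 3GPP system-level studies, and the maximum attenuation A_m = 20 dB are assumptions; the paper's own antenna model is not reproduced in this section.

```python
import math


def dbw_to_w(x_dbw):
    """Convert a power level in dBW to watts."""
    return 10.0 ** (x_dbw / 10.0)


def w_to_dbw(x_w):
    """Convert a power level in watts to dBW."""
    return 10.0 * math.log10(x_w)


def antenna_gain_dbi(offset_deg, g_max_dbi=20.0, theta_3db_deg=30.0, a_m_db=20.0):
    """Assumed parabolic pattern; theta_3db_deg is the full 3 dB beamwidth,
    and a_m_db is a hypothetical maximum attenuation."""
    return g_max_dbi - min(12.0 * (offset_deg / theta_3db_deg) ** 2, a_m_db)


def noise_rise_db(interference_dbw, noise_dbw):
    """Increase of the effective noise floor, in dB, when interference adds to noise."""
    return 10.0 * math.log10(1.0 + dbw_to_w(interference_dbw) / dbw_to_w(noise_dbw))
```

With the table values, 22 dBW is about 158.5 W, and the assumed pattern is 3 dB down at a 15° offset (half the beamwidth). If the −123 dBW threshold and the −117 dBW noise power refer to the same receiver (an assumption), the threshold sits 6 dB below the noise floor and raises it by just under 1 dB.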

| Algorithms | Average Throughput |
|---|---|
| MADQN-IL (L = 10) | 609.01 Mbps |
| MADQN-IL (L = 4) | 590.89 Mbps |
| ARA power allocation | 517.27 Mbps |
| Random power allocation | 112.63 Mbps |
| Average power allocation | 49.19 Mbps |
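The relative differences between the schemes can be computed directly from the average throughput values in the table above. The short script below expresses each entry as a percentage change with respect to ARA; it adds no new results, only arithmetic on the listed values.

```python
# Average throughput values copied from the table above (Mbps).
RESULTS_MBPS = {
    "MADQN-IL (L = 10)": 609.01,
    "MADQN-IL (L = 4)": 590.89,
    "ARA power allocation": 517.27,
    "Random power allocation": 112.63,
    "Average power allocation": 49.19,
}


def gain_vs_baseline_pct(results, baseline):
    """Percentage change of every entry relative to the chosen baseline entry."""
    ref = results[baseline]
    return {name: 100.0 * (value / ref - 1.0) for name, value in results.items()}


for name, gain in gain_vs_baseline_pct(RESULTS_MBPS, "ARA power allocation").items():
    print(f"{name}: {gain:+.1f}% relative to ARA")
```

With these values, MADQN-IL is about 17.7% (L = 10) and 14.2% (L = 4) above ARA.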

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhai, Y.; Ma, Z.; He, B.; Xu, W.; Li, Z.; Wang, J.; Miao, H.; Gao, A.; Cao, Y. Joint Power Allocation Algorithm Based on Multi-Agent DQN in Cognitive Satellite–Terrestrial Mixed 6G Networks. Mathematics 2025, 13, 3133. https://doi.org/10.3390/math13193133

Zhai Y, Ma Z, He B, Xu W, Li Z, Wang J, Miao H, Gao A, Cao Y. Joint Power Allocation Algorithm Based on Multi-Agent DQN in Cognitive Satellite–Terrestrial Mixed 6G Networks. Mathematics. 2025; 13(19):3133. https://doi.org/10.3390/math13193133

Chicago/Turabian Style: Zhai, Yifan, Zhongjun Ma, Bo He, Wenhui Xu, Zhenxing Li, Jie Wang, Hongyi Miao, Aobo Gao, and Yewen Cao. 2025. "Joint Power Allocation Algorithm Based on Multi-Agent DQN in Cognitive Satellite–Terrestrial Mixed 6G Networks" Mathematics 13, no. 19: 3133. https://doi.org/10.3390/math13193133

APA Style: Zhai, Y., Ma, Z., He, B., Xu, W., Li, Z., Wang, J., Miao, H., Gao, A., & Cao, Y. (2025). Joint Power Allocation Algorithm Based on Multi-Agent DQN in Cognitive Satellite–Terrestrial Mixed 6G Networks. Mathematics, 13(19), 3133. https://doi.org/10.3390/math13193133