1. Introduction

Efficient sampling methods are essential in statistical analysis, especially when acquiring data is costly or time-consuming. In such contexts, alternative sampling designs that leverage auxiliary information or partial measurements can offer significant advantages. One such approach is Ranked Set Sampling (RSS), first introduced by McIntyre [1] in the early 1950s to estimate pasture yields in Australia. Since its inception, RSS has gained considerable attention due to its ability to increase estimation efficiency with minimal additional cost or effort, particularly in environments where precise measurement is costly, but ranking units is relatively easy [2]. RSS has been successfully applied in a variety of fields, including agriculture, environmental science, and economics, where it has outperformed Simple Random Sampling (SRS) in estimation efficiency.

To implement RSS, a total of $p^2$ units are randomly selected from the population and divided into p sets, each containing p units. Within each set, the units are ranked based on visual inspection, expert judgment, concomitant variables, or other means that do not involve precise quantification. The process continues as follows: the smallest unit from the first set is measured, the second smallest unit from the second set is measured, and so forth, until the largest unit from the p-th set is measured. This procedure constitutes a single cycle of RSS, which can be repeated for m independent cycles to obtain a balanced RSS of size $n = mp$: $\{Y_{[k]l} : k = 1, \dots, p;\ l = 1, \dots, m\}$, where $Y_{[k]l}$ denotes the k-th judgment order statistic of the units in the k-th set of the l-th cycle. The use of square brackets instead of parentheses signifies that ranking may be subject to error or imperfection. If ranking is perfect, the notation $Y_{(k)l}$ may be used instead.
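The procedure above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `ranked_set_sample`, the synthetic population, and the choice of p = 3 and m = 4 are assumptions made here. Ranking is done on the auxiliary value x, so the ranks of y may be imperfect, and units are drawn without replacement within a cycle but independently across cycles.

```python
import random


def ranked_set_sample(population, p, m, rng, rank_key=lambda unit: unit[0]):
    """Draw a balanced RSS of size n = m * p from a list of (x, y) units.

    Units are ranked with rank_key (by default the auxiliary value x),
    so only one unit per set is ever "measured" on y.
    """
    sample = []
    for _ in range(m):                          # m independent cycles
        drawn = rng.sample(population, p * p)   # p^2 units per cycle
        for k in range(p):                      # k-th set of this cycle
            one_set = drawn[k * p:(k + 1) * p]
            ranked = sorted(one_set, key=rank_key)
            sample.append(ranked[k])            # keep the unit ranked (k+1)-th
    return sample


rng = random.Random(1)

# Hypothetical population: y is a noisy linear function of x, so ranking
# on x is informative about y without being perfect.
population = []
for _ in range(500):
    x = rng.gauss(10.0, 2.0)
    population.append((x, 3.0 * x + rng.gauss(0.0, 1.0)))

rss = ranked_set_sample(population, p=3, m=4, rng=rng)
print(len(rss))  # 12 measured units, selected from 36 ranked units
```

Passing `rank_key=lambda unit: unit[1]` instead ranks on y itself, which corresponds to the perfect-ranking case.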

To illustrate the technique, in a balanced RSS with and , with ranking based on an auxiliary (or concomitant) variable X, the selection proceeds as follows:

Cycle 2:

producing a balanced RSS:

There is a vast body of literature on statistical inference using RSS, including parametric estimation of population parameters such as the mean (e.g., [3]), variance (e.g., [4]), and quantiles (e.g., [5]), and nonparametric estimation and hypothesis testing (e.g., [6,7,8,9,10]). For a comprehensive review, see [11].

In this paper, we consider the problem of estimating a finite population mean using RSS. Let $U = \{u_1, \dots, u_N\}$ denote a finite population of size N, where each unit $u_i$ is associated with a value $y_i$ of a study variable Y, and a value $x_i$ of an auxiliary variable X. The parameter of interest is the finite population mean:

$$\bar{Y} = \frac{1}{N} \sum_{i=1}^{N} y_i.$$

The goal is to estimate $\bar{Y}$ based on an RSS of size n. Numerous estimators have been proposed for this purpose, including the simple mean of the RSS [12], the ratio estimator [13,14], and the regression estimator [15].
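To make the three estimator families concrete, the sketch below writes each one as a small Python function acting on a list of measured (x, y) pairs. The function names are hypothetical, and the formulas are the usual textbook forms (sample mean, ratio of sample means scaled by the known population mean of X, and a least-squares slope adjustment); the RSS versions in the cited references may differ in detail.

```python
from statistics import mean


def simple_mean(sample):
    """Sample mean of the measured y values."""
    return mean(y for _, y in sample)


def ratio_estimator(sample, x_bar_pop):
    """Ratio of sample means, scaled by the known population mean of X."""
    x_bar = mean(x for x, _ in sample)
    y_bar = mean(y for _, y in sample)
    return y_bar / x_bar * x_bar_pop


def regression_estimator(sample, x_bar_pop):
    """Sample mean of y, adjusted by the least-squares slope of y on x."""
    x_bar = mean(x for x, _ in sample)
    y_bar = mean(y for _, y in sample)
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in sample)
    s_xx = sum((x - x_bar) ** 2 for x, _ in sample)
    slope = s_xy / s_xx
    return y_bar + slope * (x_bar_pop - x_bar)


# Toy data in which y = 2x exactly and the population mean of X is taken as 3.
sample = [(1.0, 2.0), (2.0, 4.0), (4.0, 8.0)]
print(simple_mean(sample))
print(ratio_estimator(sample, x_bar_pop=3.0))
print(regression_estimator(sample, x_bar_pop=3.0))
```

In this toy case the sample mean of x (7/3) falls below the assumed population mean, so the simple mean underestimates, while the ratio and regression estimators both use the auxiliary information to correct for it.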

In this study, we propose a shrinkage-type (composite) estimator for estimating the finite population mean in the context of RSS. This estimator builds upon the idea of combining two or more estimators using shrinkage weights, offering a compromise that can outperform the individual components under certain conditions. Specifically, we extend the work of Lui [16], who proposed a composite estimator that blends the simple mean and the ratio estimator under SRS. Lui [16] showed that the composite approach can offer improved performance when certain conditions are met. The simulation study in [16] suggested that the SRS composite estimator could outperform the simple and ratio estimators individually, especially when the auxiliary variable is moderately to strongly correlated with the study variable. We extend and study this idea under RSS. We provide theoretical results that characterize the performance of the proposed estimator and explore its properties through simulation and real data analysis.

The remainder of this paper is organized as follows.

Section 2 reviews the idea of composite estimators under SRS.

Section 3 introduces the proposed composite estimator under RSS and derives its theoretical properties.

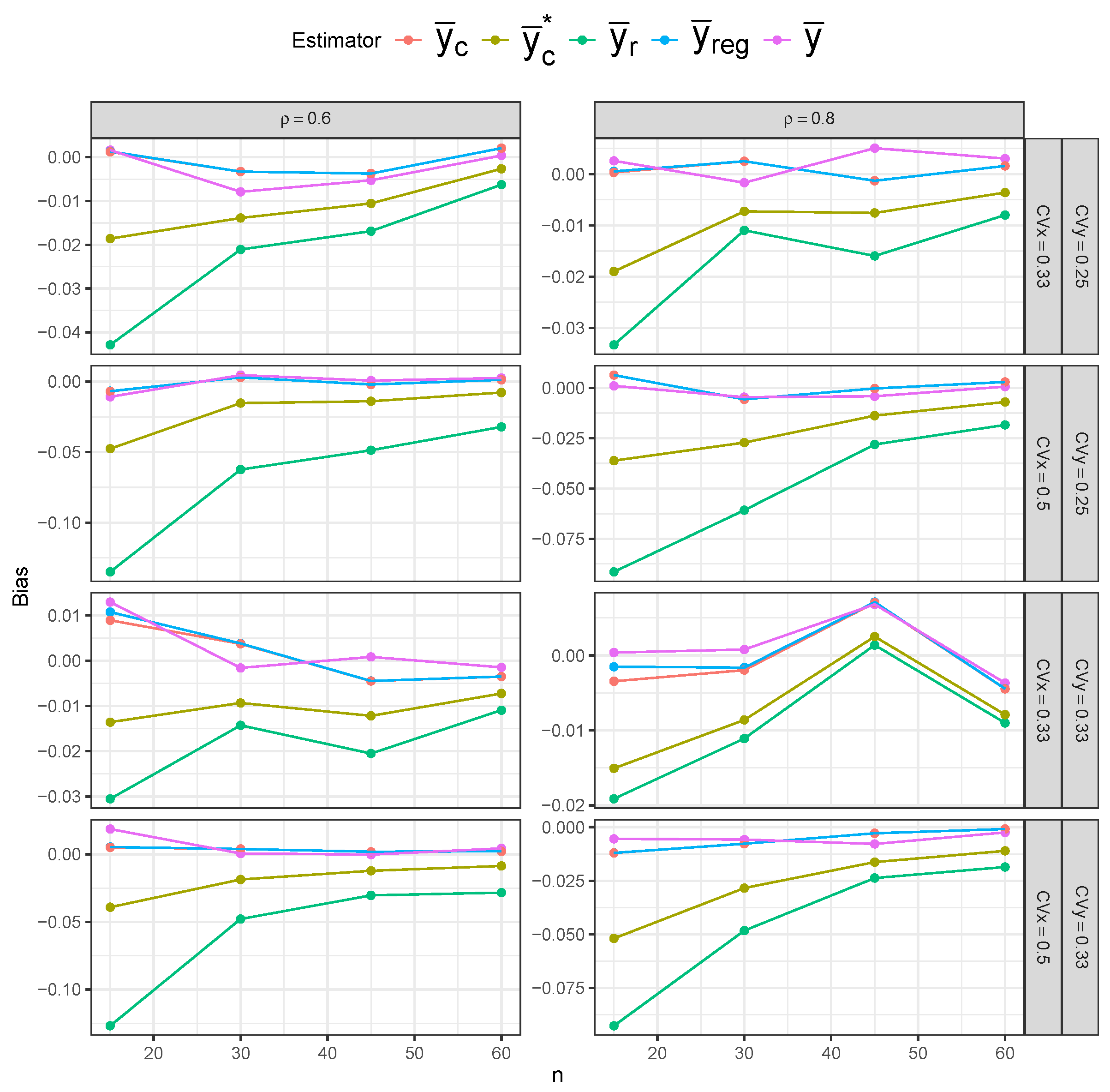

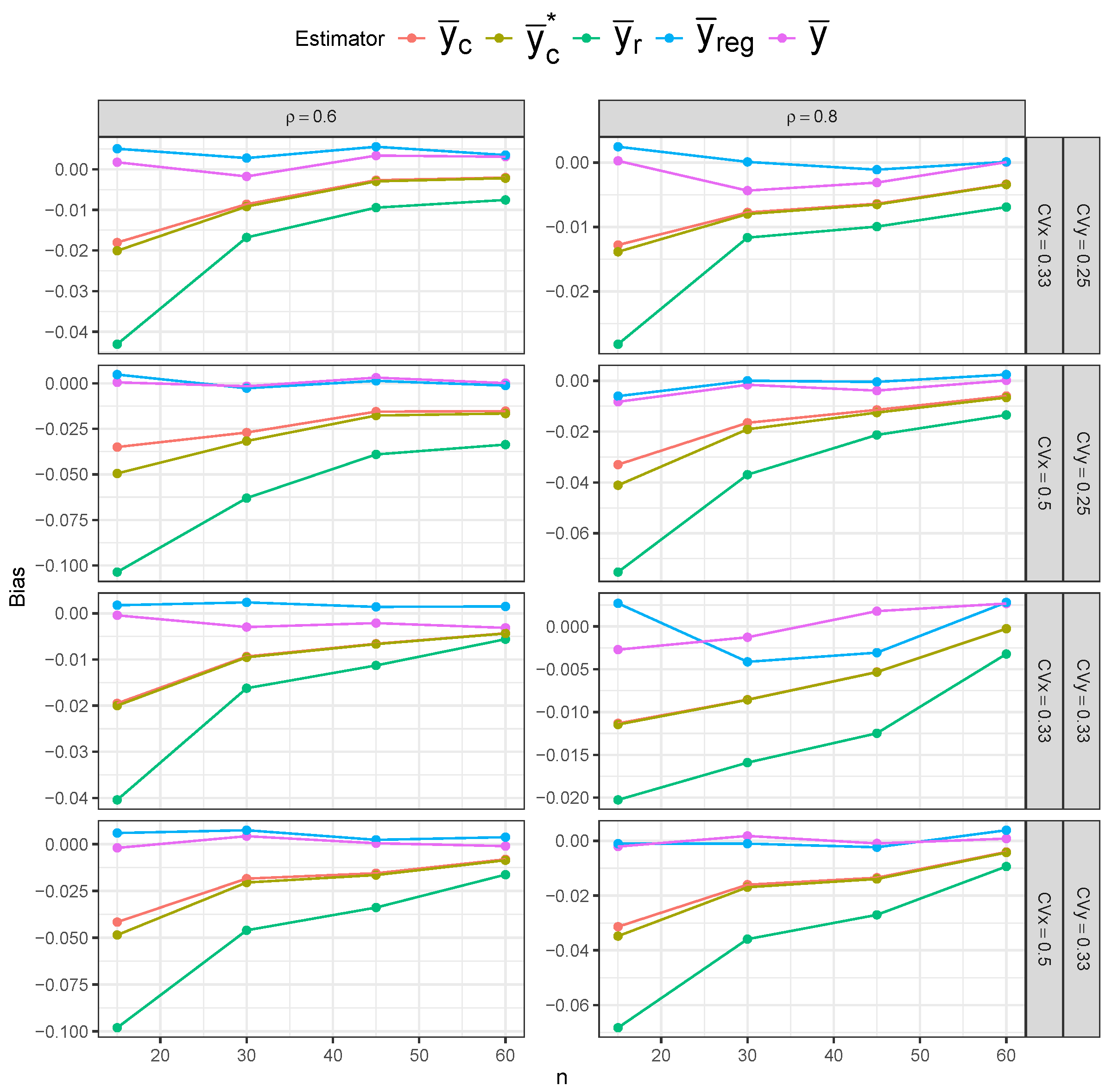

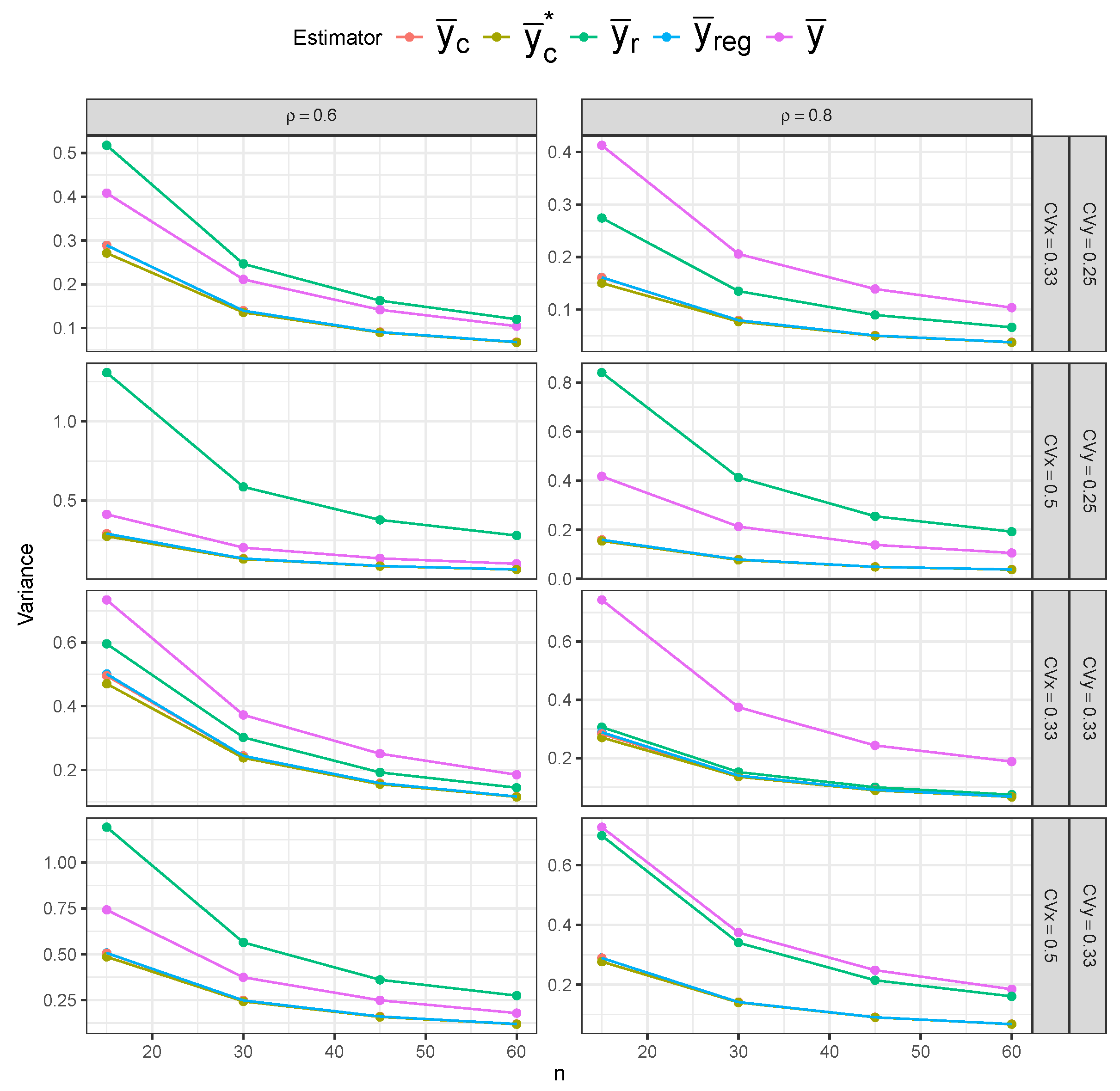

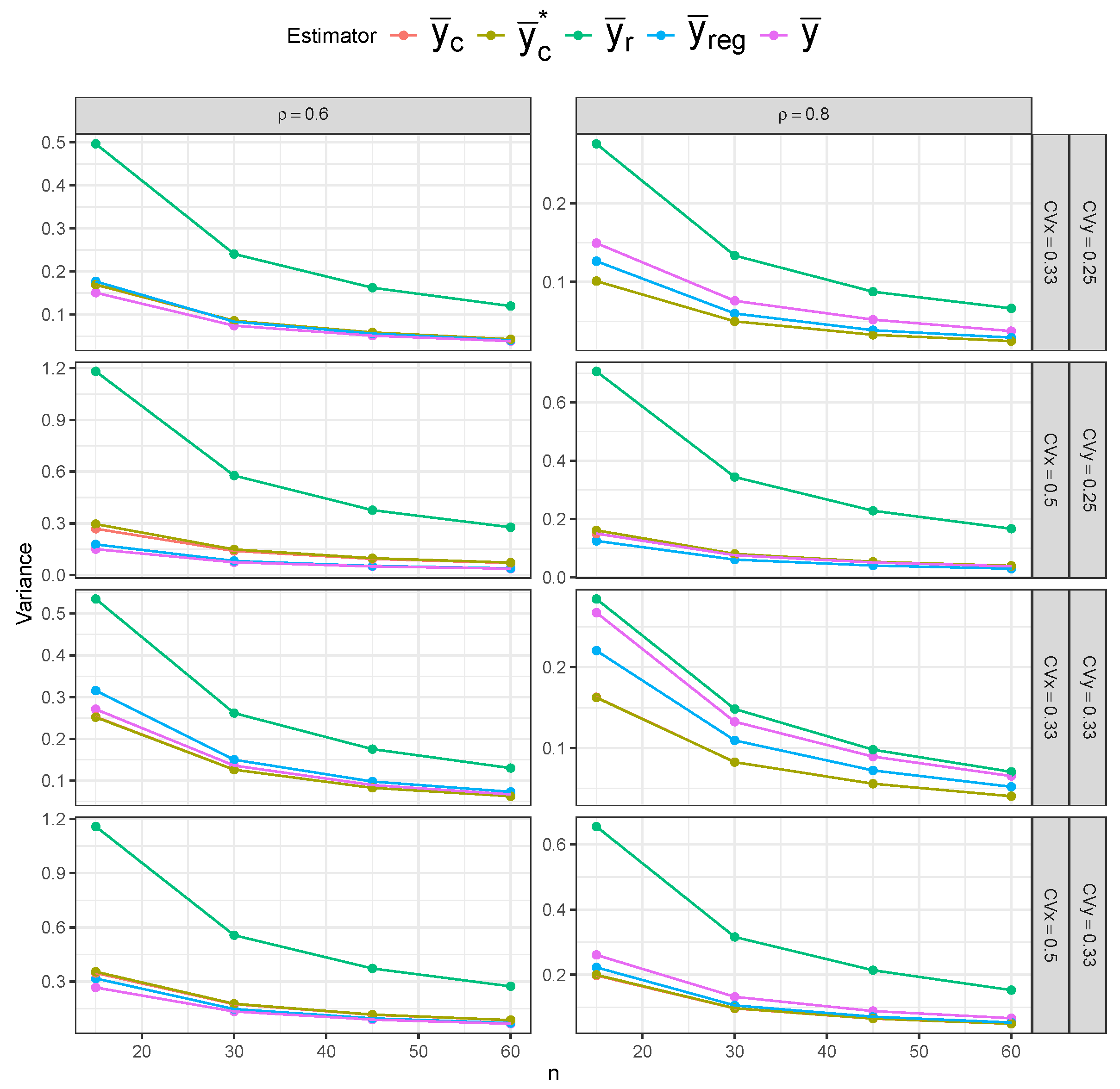

Section 4 presents a simulation study investigating the finite sample performance of the proposed estimator relative to several other estimators.

Section 5 illustrates the performance of the proposed estimator on a real dataset.

Section 6 concludes the paper with a discussion of key findings and future research directions.

2. Composite Estimators Under SRS

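In this section, we review the idea of composite estimators under SRS. The simplest estimator for the finite population mean under SRS is the simple mean estimator defined by [12]:

$$\bar{y}_{SRS} = \frac{1}{n} \sum_{i=1}^{n} y_i.$$

The simple mean estimator is known to be unbiased and its variance, or, equivalently, its mean squared error (MSE), is given by [12], ch. 2:

$$\mathrm{Var}(\bar{y}_{SRS}) = \left(\frac{1}{n} - \frac{1}{N}\right) S_Y^2,$$

where $S_Y^2 = \frac{1}{N-1} \sum_{i=1}^{N} (y_i - \bar{Y})^2$ is the variance of the study variable Y about its population mean $\bar{Y}$.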

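An alternative estimator that is commonly used to take advantage of available auxiliary information is the ratio estimator, which is defined as follows under SRS [12]:

$$\bar{y}_{R} = \frac{\bar{y}_{SRS}}{\bar{x}_{SRS}} \, \bar{X}.$$

The approximate variance of the ratio estimator under SRS is given by [12], ch. 6:

$$\mathrm{Var}(\bar{y}_{R}) \approx \left(\frac{1}{n} - \frac{1}{N}\right) \bar{Y}^2 \left(C_Y^2 + C_X^2 - 2 \rho\, C_X C_Y\right),$$

where $\bar{y}_{SRS}$ and $\bar{x}_{SRS}$ are the sample means of Y and X, $\bar{Y}$ and $\bar{X}$ are the corresponding population means, $C_Z^2 = S_Z^2 / \bar{Z}^2$ is the squared population coefficient of variation of a generic variable Z, and $\rho$ is the population Pearson's correlation coefficient between X and Y. Under SRS, Lui [16] suggested combining the simple mean estimator and the ratio estimator into a composite estimator defined by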

where

is an unknown weight parameter. Observing the fact that, since

is unbiased,

and the fact that the ratio estimator under SRS is asymptotically unbiased, Lui [16] derived the optimal weight by minimizing the variance of the composite estimator:

where

. According to Lui [16], there are specific circumstances in which the composite estimator surpasses the ratio estimator under SRS. These conditions are related to the correlation between X and Y, as well as their coefficients of variation. In addition, Lui [16] carried out a simulation study to showcase the efficiency of the composite estimator compared to the simple mean estimator and the ratio estimator under SRS. In this study, we build on the work of Lui [16] and extend it to the context of RSS. In the following section, we review the existing estimators for the population mean under RSS and introduce our proposed composite estimator.
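The variance-minimization step described in this section can be illustrated with a short first-order derivation. It is a sketch under stated assumptions and is not claimed to match the exact expression or notation of [16]. It assumes that the composite is written as $w\,\bar{y}_{SRS} + (1-w)\,\bar{y}_{R}$ (attaching $w$ to the simple mean is an assumption), that $\theta = 1/n - 1/N$, and that the ratio estimator is linearized around the population means.

```latex
\begin{aligned}
V_1 &= \mathrm{Var}(\bar{y}_{SRS}) = \theta\,\bar{Y}^2 C_Y^2, \\
V_2 &= \mathrm{Var}(\bar{y}_{R}) \approx \theta\,\bar{Y}^2
       \left(C_Y^2 + C_X^2 - 2\rho\,C_X C_Y\right), \\
C_{12} &= \mathrm{Cov}(\bar{y}_{SRS}, \bar{y}_{R}) \approx \theta\,\bar{Y}^2
       \left(C_Y^2 - \rho\,C_X C_Y\right), \\[4pt]
\mathrm{Var}(\bar{y}_{C}) &\approx w^2 V_1 + (1-w)^2 V_2 + 2w(1-w)\,C_{12}, \\[4pt]
\frac{\partial}{\partial w}\mathrm{Var}(\bar{y}_{C}) = 0
  \;&\Longrightarrow\;
  w^{*} = \frac{V_2 - C_{12}}{V_1 + V_2 - 2C_{12}}
        = \frac{C_X^2 - \rho\,C_X C_Y}{C_X^2}
        = 1 - \rho\,\frac{C_Y}{C_X}.
\end{aligned}
```

Under these assumptions the weight depends only on the correlation and the two coefficients of variation, which is consistent with the remark above that the conditions favoring the composite estimator involve exactly those quantities.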

5. Real Data Application

For the real data application, we use a modified version of the longleaf pine dataset comprising

trees, as analyzed by Jafari Jozani and Johnson [20]. The original dataset can be found in Chen et al. [21] and Platt et al. [22]. In this dataset, the variable X denotes tree diameter at breast height, while Y represents tree height [20]. To remove ties when ranking based on the X variable, a small amount of random noise was added to the X variable:

, recentered at zero. The Pearson correlation between the transformed X variable and the Y variable was

. Considering the

observations as the finite population, we drew 10,000 samples using each of three sampling designs (SRS, RSS with imperfect ranking based on X, and RSS with perfect ranking based on Y) and computed each of the five estimators considered in the simulation study in the previous section. Goodness-of-fit tests, run with the Shapiro-Wilk ('sw') option in the 'gofTest()' function of the EnvStats package ver. 3.1.0 in R [23], confirmed that both X and Y can be modeled by the lognormal distribution, justifying the application of the lognormal approximation of the moments of sample order statistics (see Equation (23)) under this dataset.
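The resampling scheme described above can be sketched as follows. This is an illustration under loud assumptions, not the study's code: the population is synthetic (a hypothetical bivariate lognormal, not the longleaf pine data), only the simple mean is evaluated, the set size and number of cycles (p = 3, m = 2) are arbitrary, and 2000 replicates are used instead of 10,000 to keep the run short.

```python
import random
from statistics import mean


def draw_srs(pop, n, rng):
    """Simple random sample of n units without replacement."""
    return rng.sample(pop, n)


def draw_rss(pop, p, m, rng, key):
    """Balanced RSS of size m * p; units in each set are ranked with `key`."""
    out = []
    for _ in range(m):
        drawn = rng.sample(pop, p * p)
        for k in range(p):
            one_set = sorted(drawn[k * p:(k + 1) * p], key=key)
            out.append(one_set[k])
    return out


def mc_mse(draw, true_mean, reps):
    """Monte Carlo MSE of the simple mean of y under the design `draw`."""
    errors = [mean(y for _, y in draw()) - true_mean for _ in range(reps)]
    return mean(e * e for e in errors)


rng = random.Random(2024)

# Hypothetical finite population with positively correlated lognormal x and y.
pop = []
for _ in range(300):
    x = rng.lognormvariate(0.0, 0.5)
    y = x ** 0.8 * rng.lognormvariate(0.0, 0.2)
    pop.append((x, y))

true_mean = mean(y for _, y in pop)
p, m, reps = 3, 2, 2000

mse_srs = mc_mse(lambda: draw_srs(pop, p * m, rng), true_mean, reps)
mse_rss_x = mc_mse(lambda: draw_rss(pop, p, m, rng, key=lambda u: u[0]),
                   true_mean, reps)   # imperfect ranking (on x)
mse_rss_y = mc_mse(lambda: draw_rss(pop, p, m, rng, key=lambda u: u[1]),
                   true_mean, reps)   # perfect ranking (on y)

print(f"SRS: {mse_srs:.5f}  RSS on X: {mse_rss_x:.5f}  RSS on Y: {mse_rss_y:.5f}")
```

Replacing the simple mean inside `mc_mse` with any other estimator function gives the corresponding comparison for that estimator.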

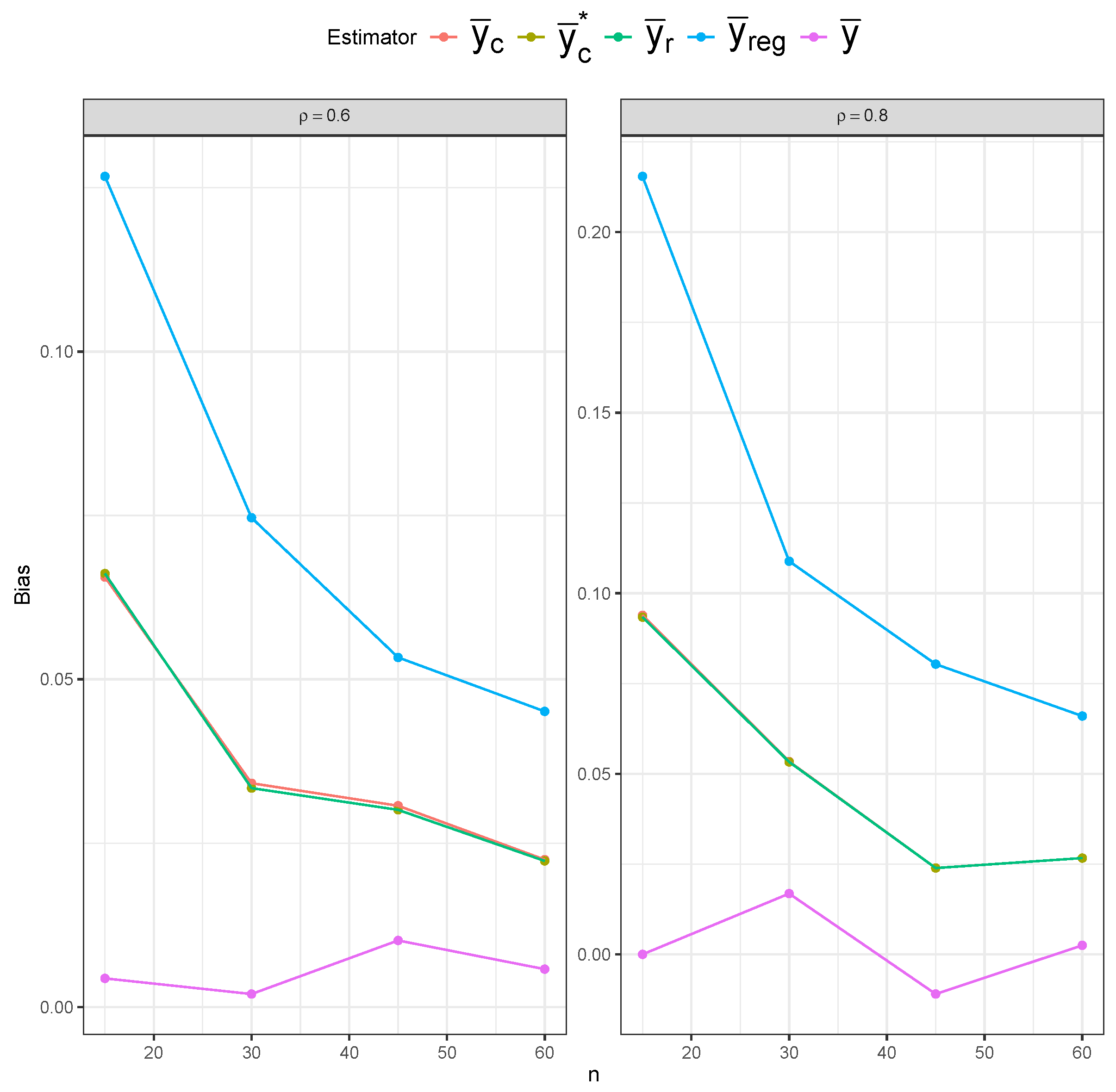

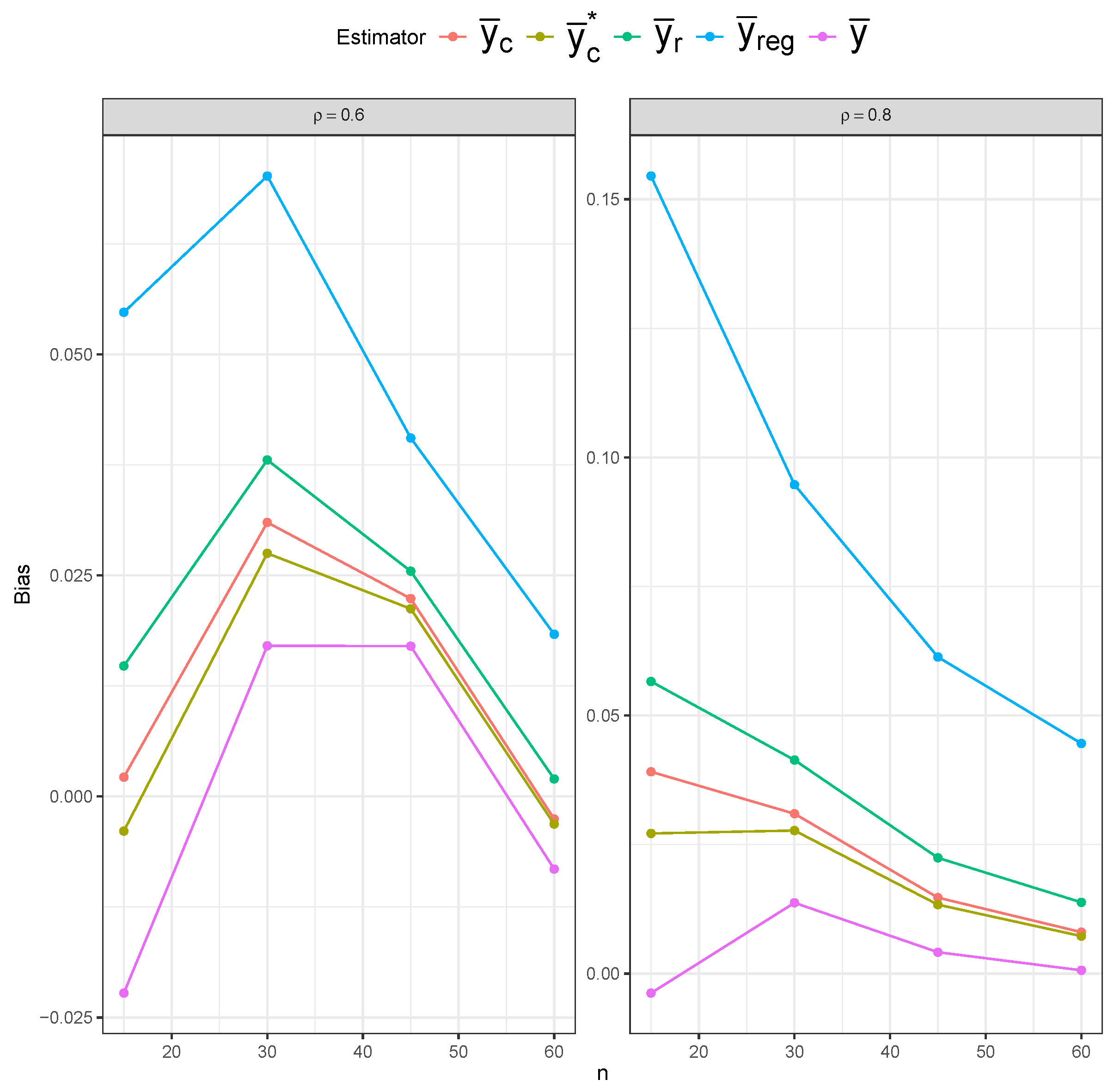

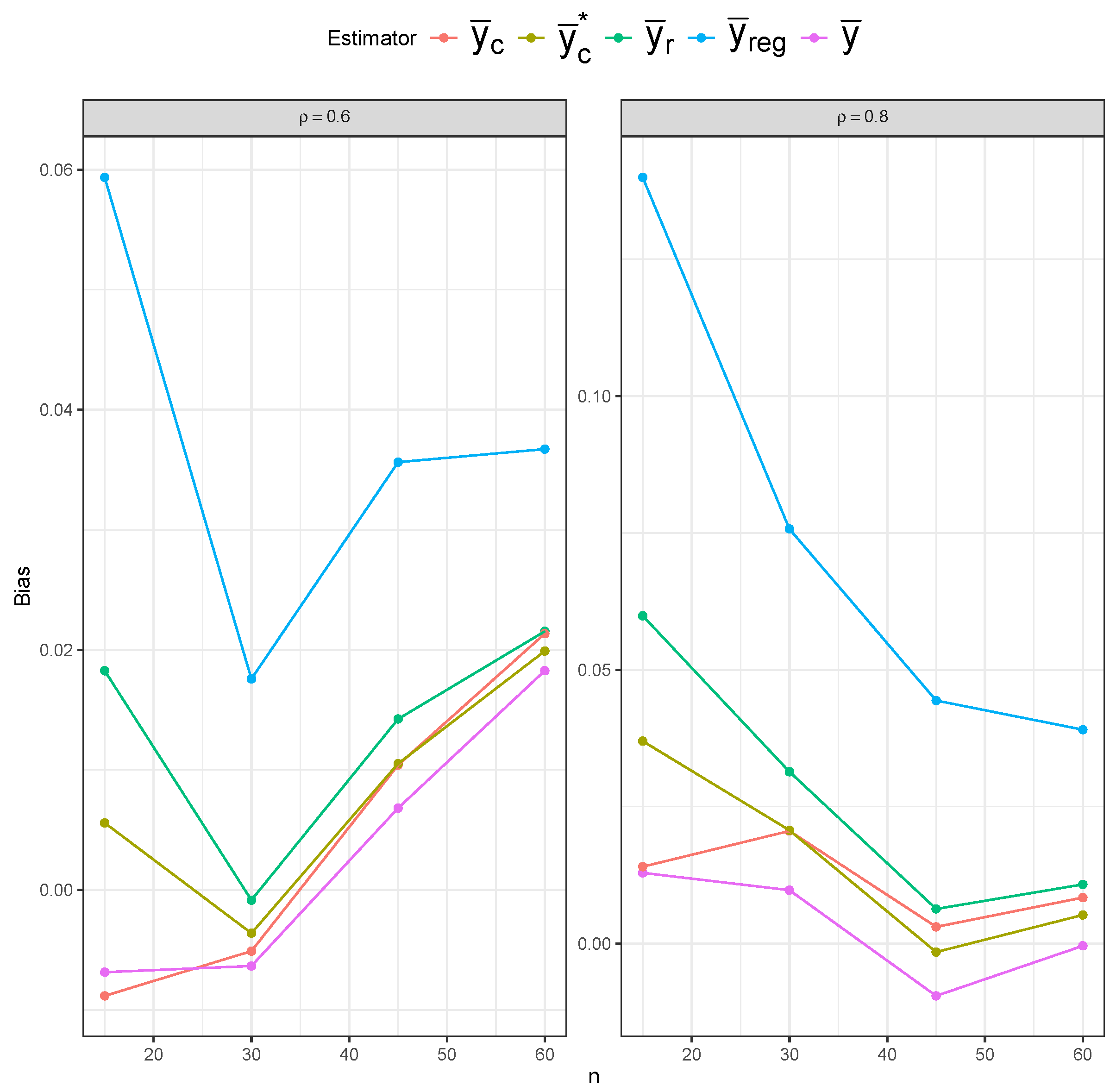

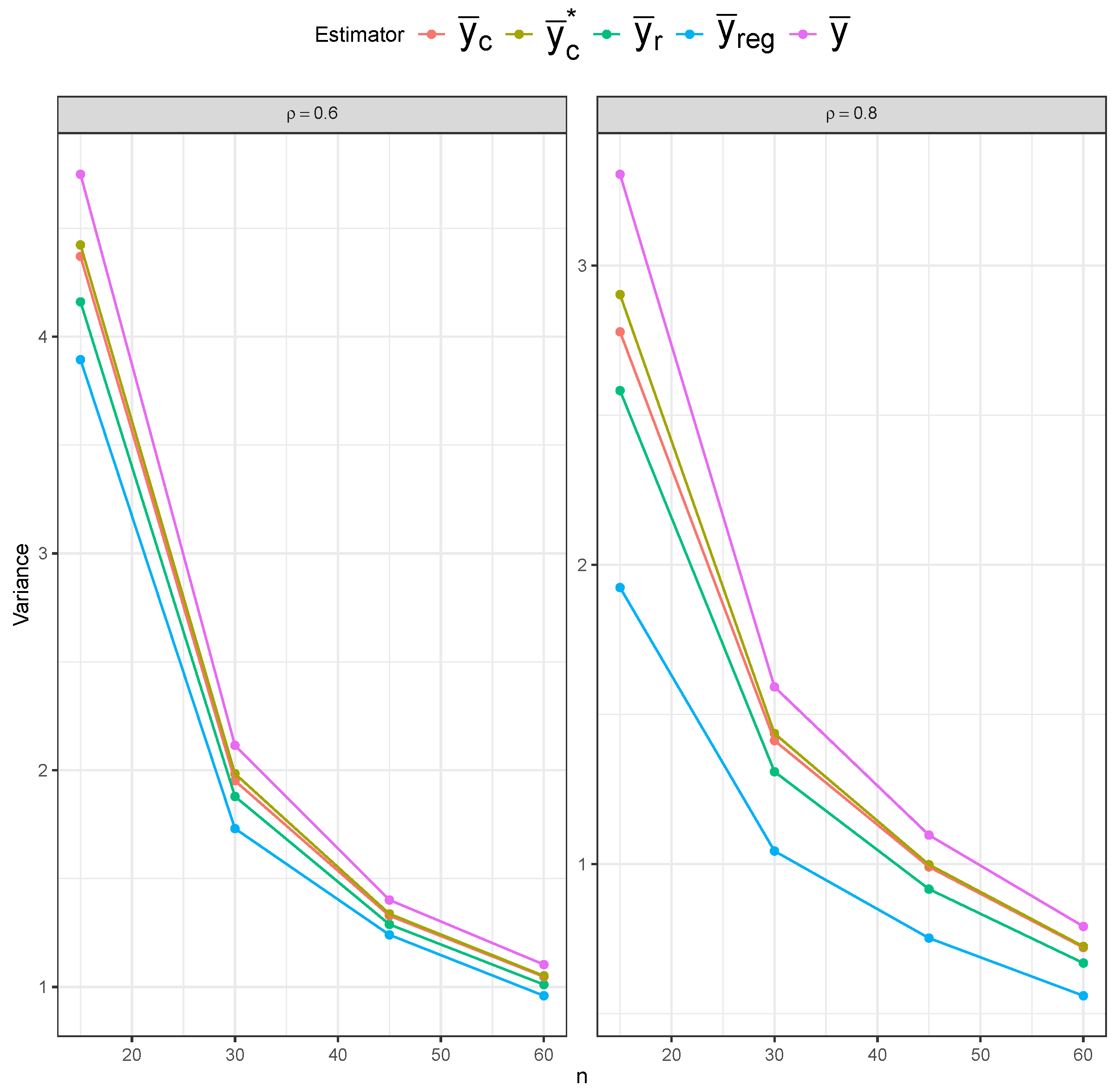

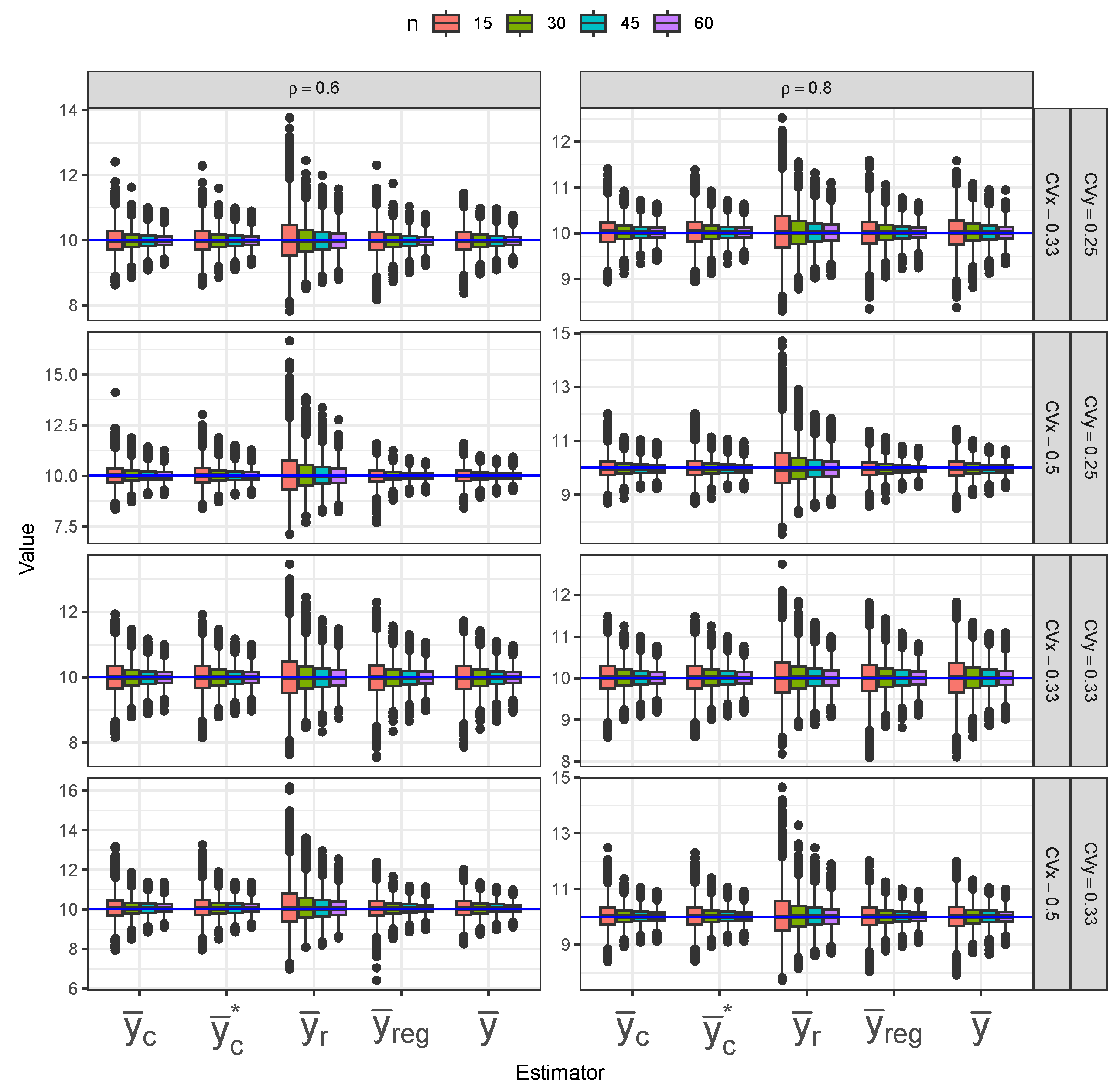

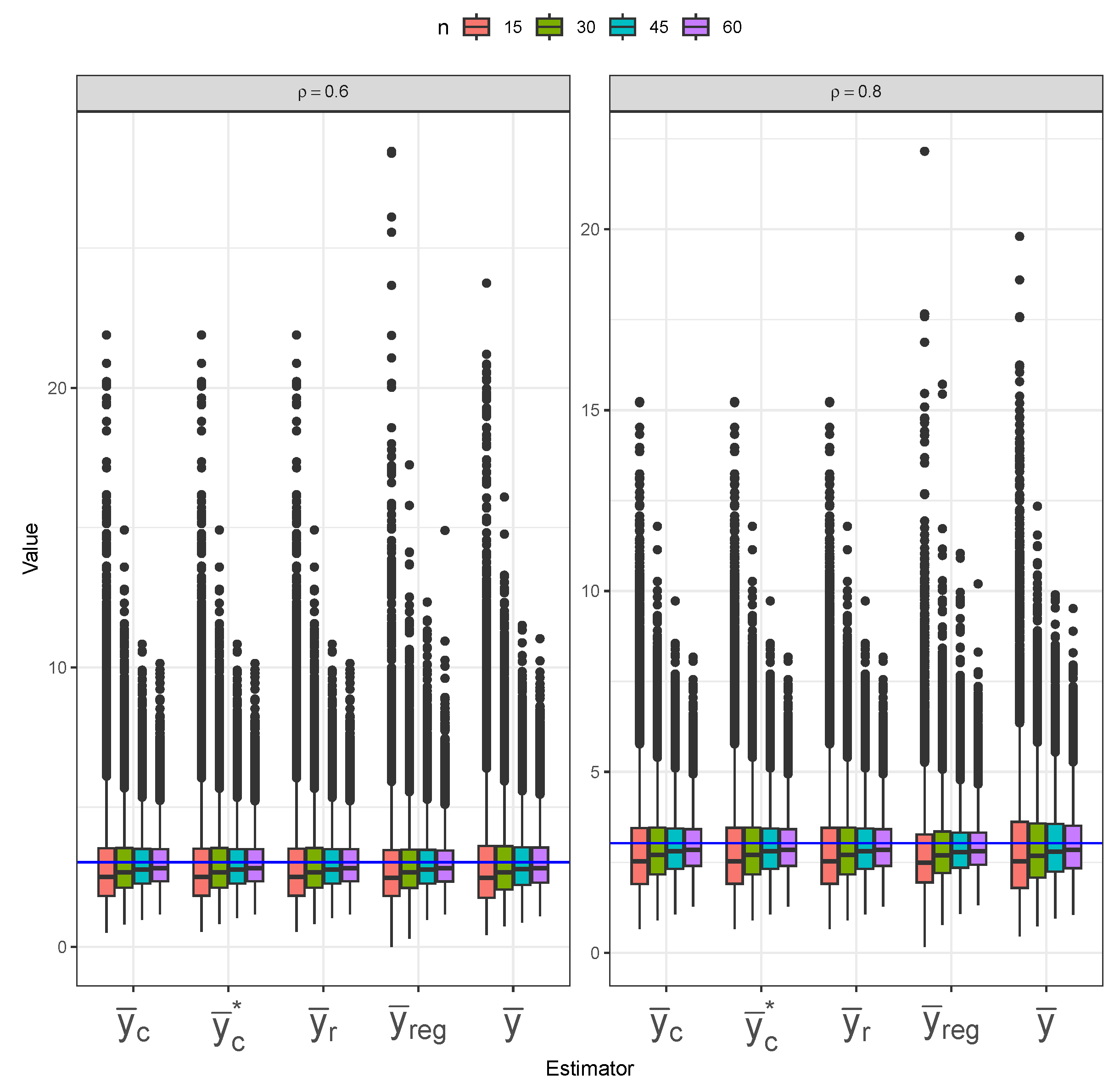

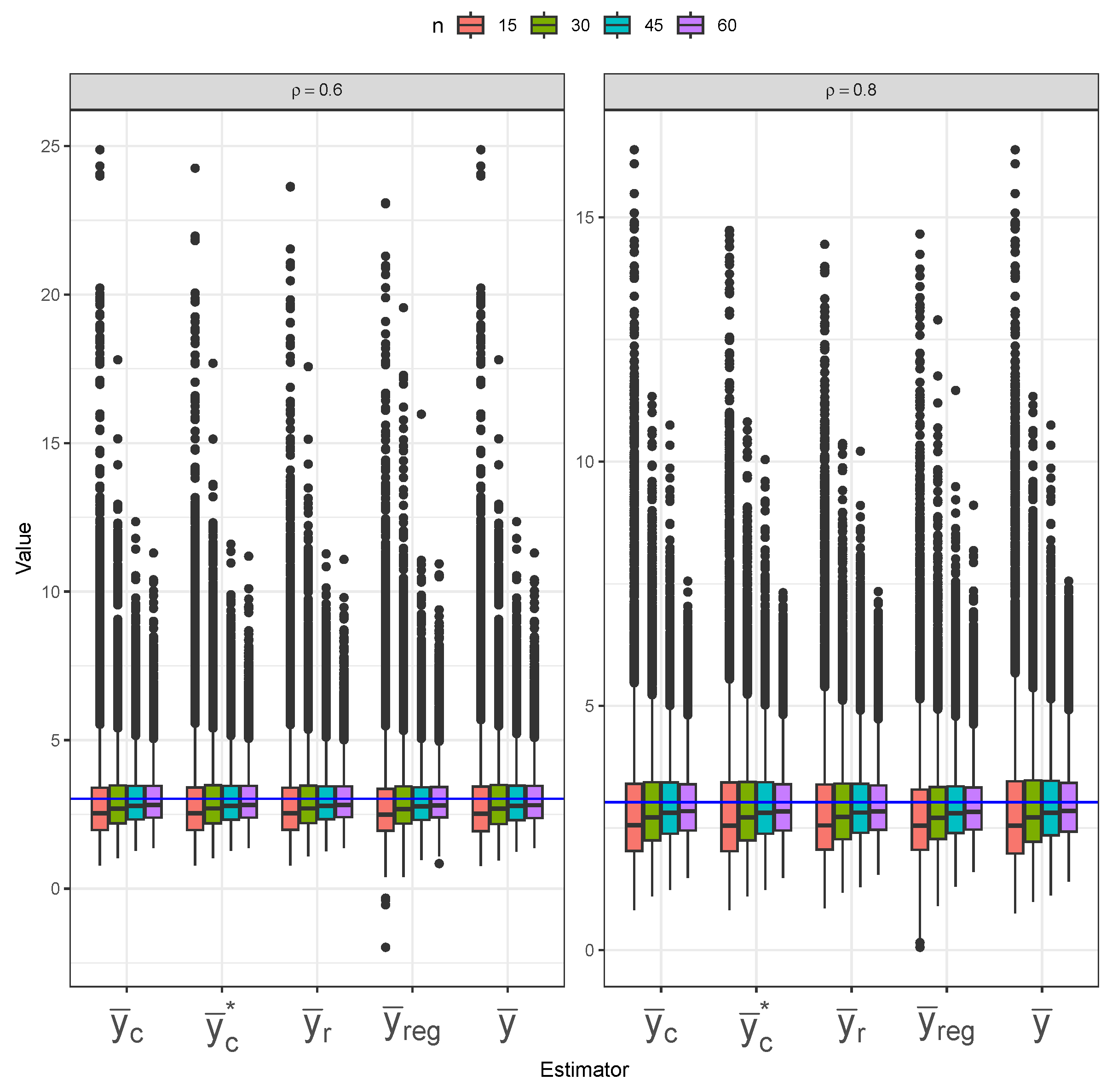

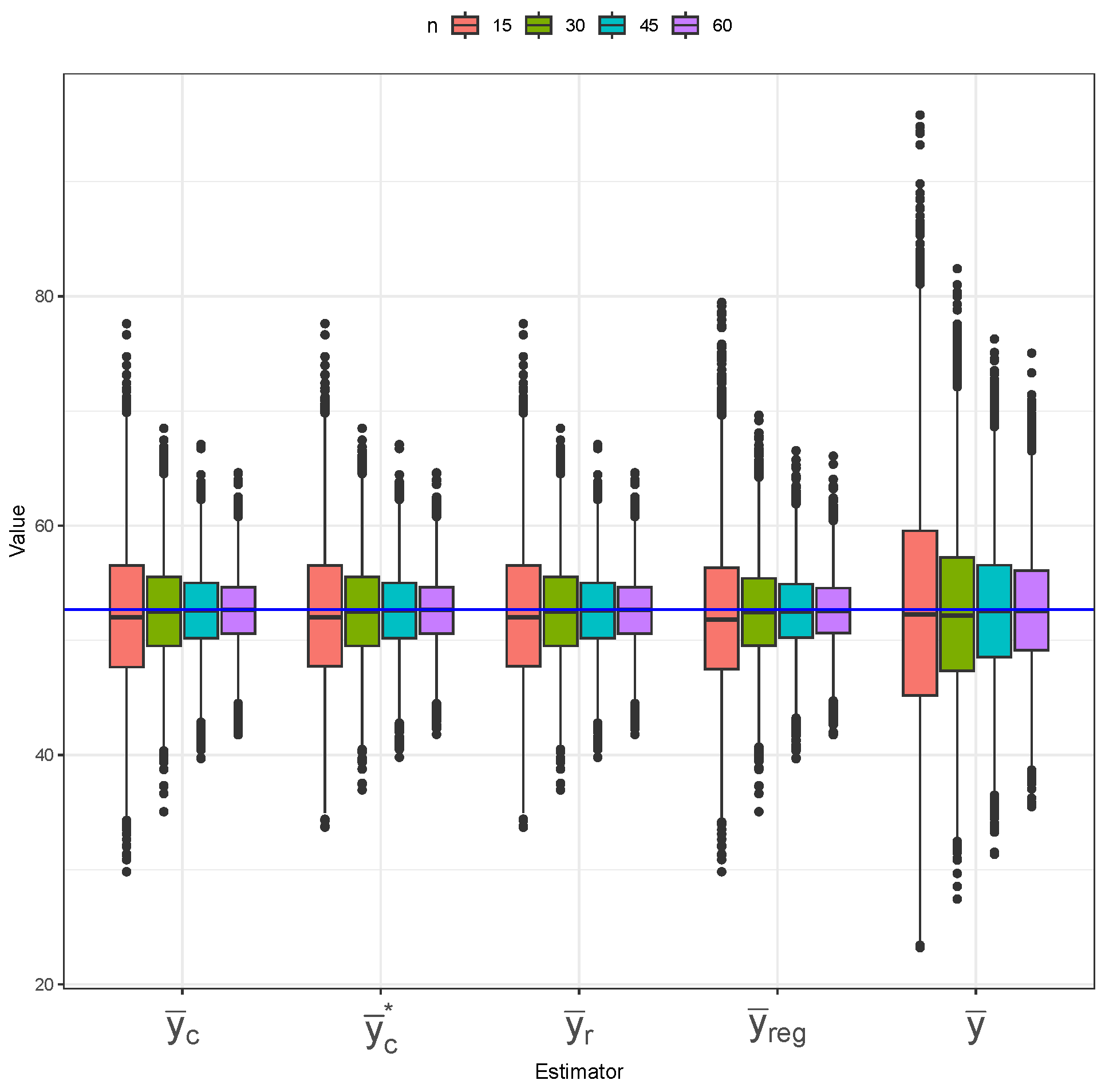

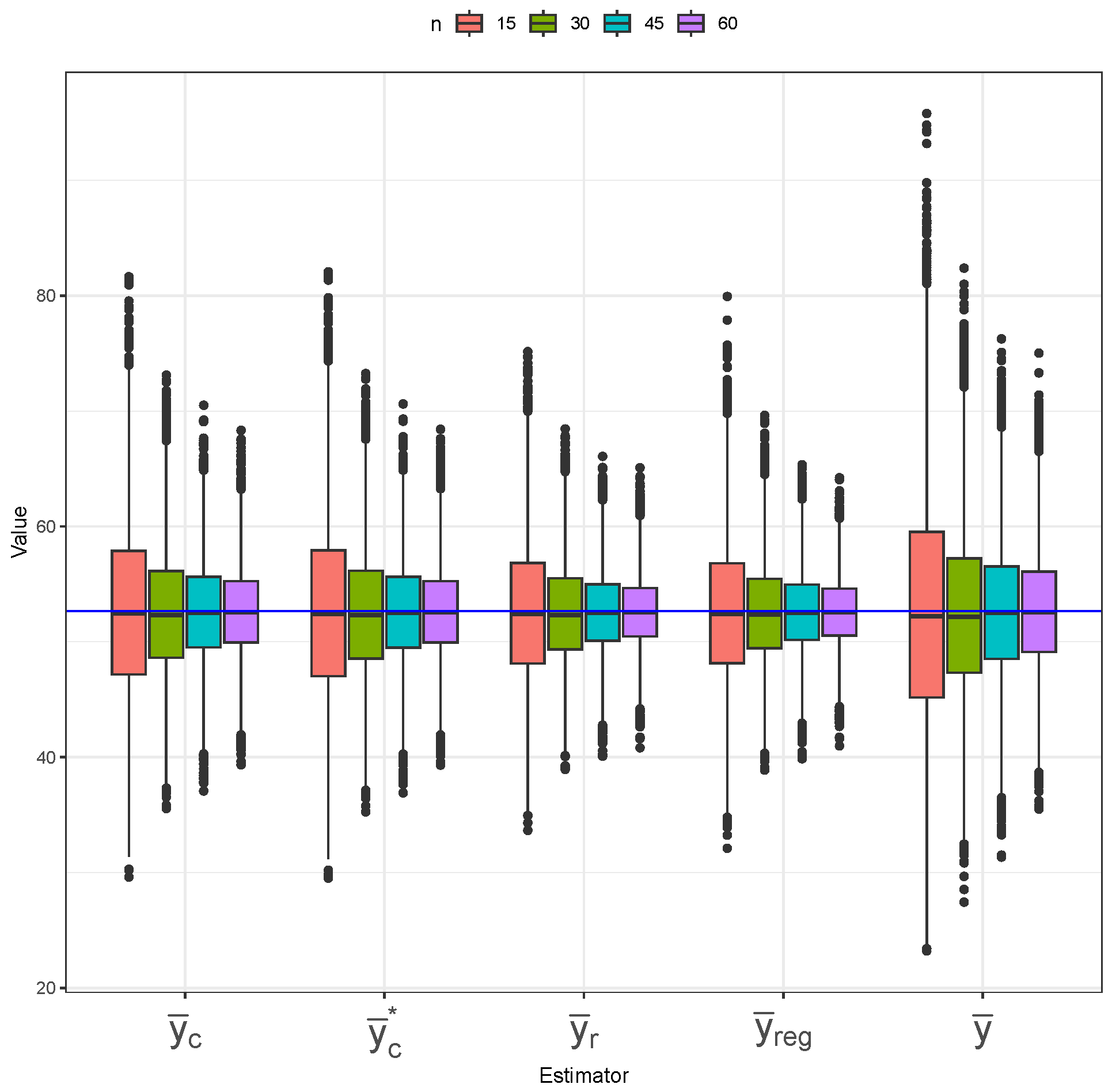

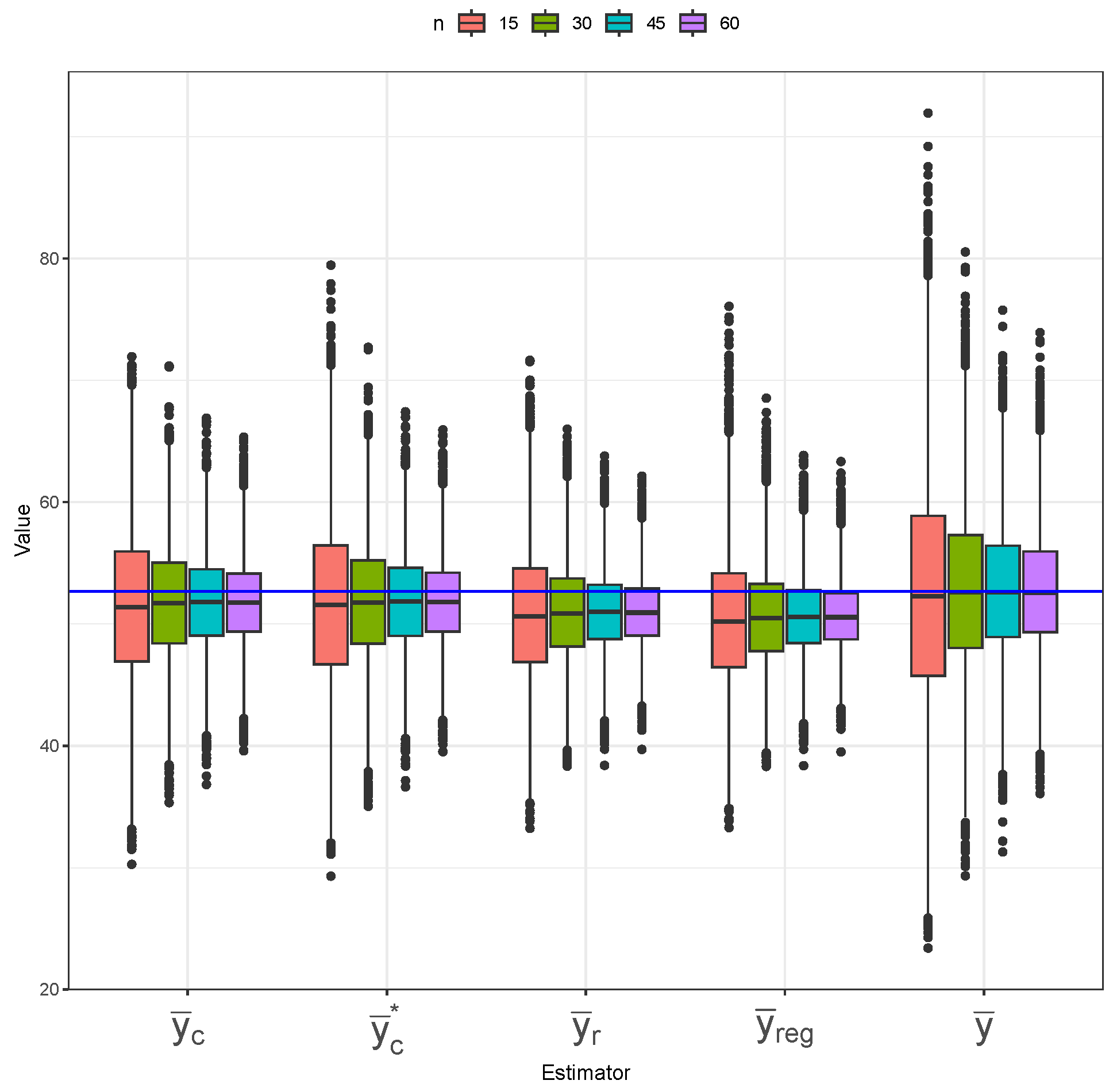

Figures 13, 14, and 15 present the distributions of the five mean estimators under the different sampling designs applied to the longleaf pine dataset, with the blue horizontal line indicating the true population mean. In Figure 13 (SRS), the simple mean estimator exhibits the greatest variance, as its boxplots are the most widely spread. The ratio estimator and the two composite estimators (optimal weight and plug-in weight) perform best under SRS, showing fewer outliers and relatively low variance. The regression estimator follows, with slightly more outliers, especially at small sample sizes. All estimators show little to no bias, as the boxplots are mostly centered at the true population mean. Similar patterns are observed under imperfect-ranking RSS (Figure 14), but with the composite estimators switching places with the regression estimator. Under perfect-ranking RSS (Figure 15), the simple mean estimator still shows the largest variability across sample sizes, but it has the least bias overall. The ratio and regression estimators again show slightly lower variability than the composite estimators. However, the composite estimators are more closely centered on the true mean, indicating lower bias than the ratio and regression estimators.

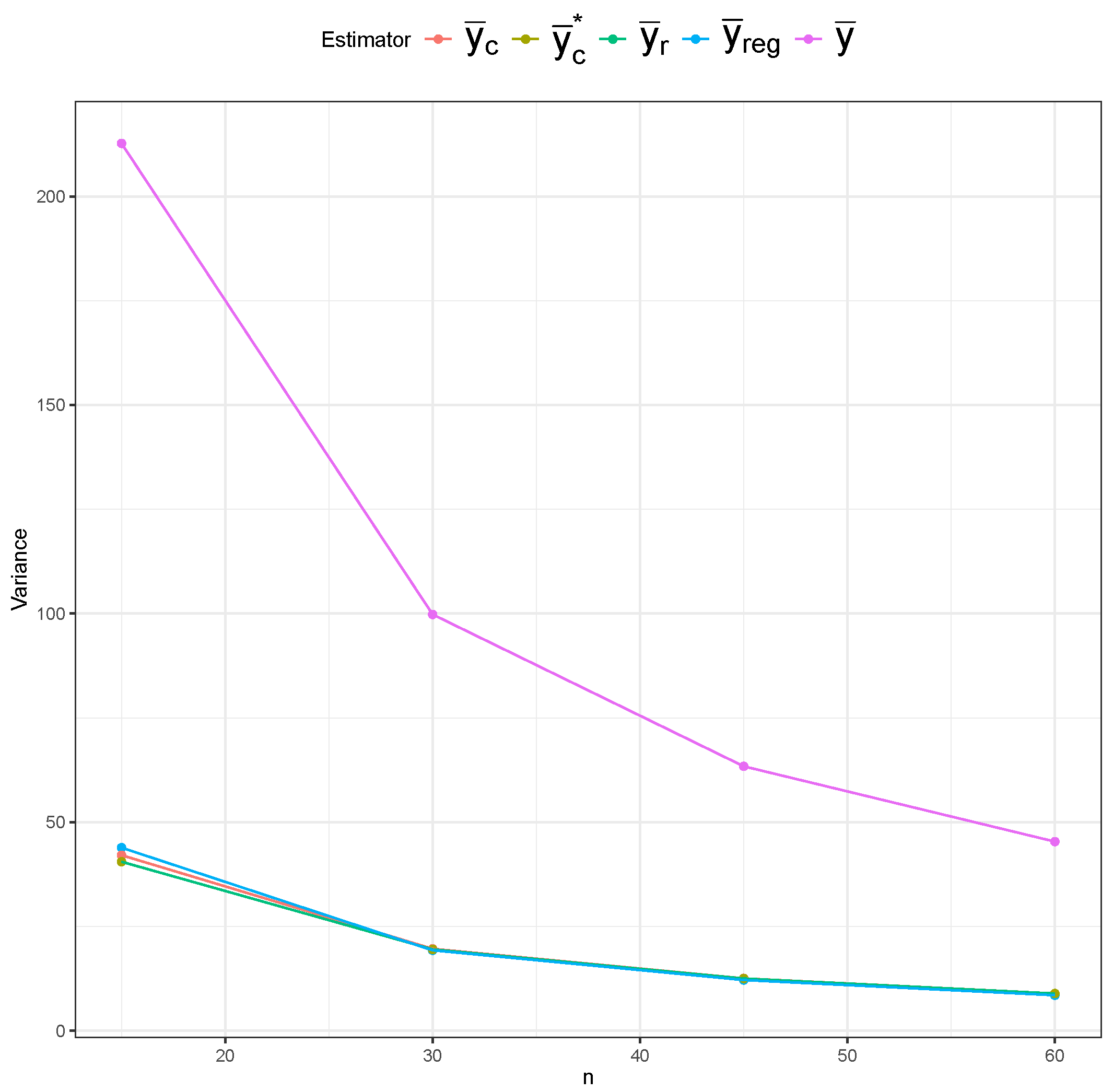

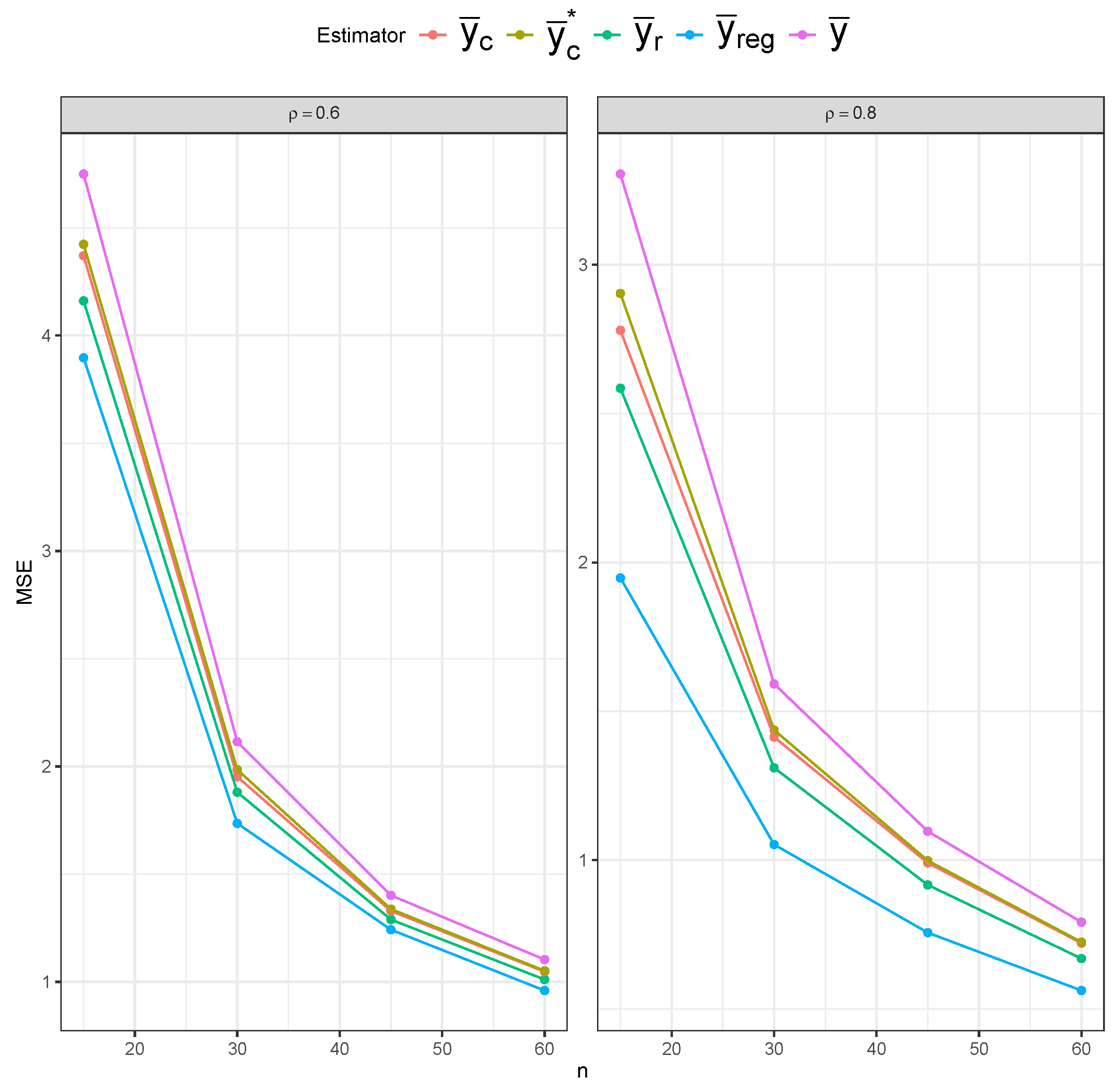

Table 1 displays the MSE of the five estimators under the SRS and RSS designs. The bias and variance results are included in Appendix A.3. Across all three sampling designs, the simple mean estimator exhibits notably higher MSE values than the other estimators at all sample sizes, confirming its inefficiency. The sampling design affects the simple mean estimator most visibly: its MSE is lower under RSS than under SRS, and lower under perfect-ranking RSS than under imperfect-ranking RSS. The remaining four estimators have similar MSE values under SRS. The ratio and regression estimators show superior performance under imperfect-ranking RSS across all sample sizes. Their superiority persists under perfect-ranking RSS, especially for the smaller sample sizes, with the two composite estimators (optimal weight and plug-in weight) approaching them for the larger sample sizes.
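Because the MSE is reported here and the bias and variance separately in the appendix, it may help to recall how the three quantities are tied together over Monte Carlo replicates. The helper below is a minimal sketch with a hypothetical name (`summarize`) and made-up replicate values; it uses the population-style variance (divisor equal to the number of replicates), for which the identity MSE = bias² + variance holds exactly.

```python
from statistics import mean, pvariance


def summarize(estimates, true_value):
    """Return (bias, variance, mse) of replicate estimates of true_value."""
    bias = mean(estimates) - true_value
    variance = pvariance(estimates)              # divisor = number of replicates
    mse = mean((e - true_value) ** 2 for e in estimates)
    return bias, variance, mse


# Made-up replicate estimates of a quantity whose true value is 10.
bias, variance, mse = summarize([9.0, 10.0, 11.0, 12.0], true_value=10.0)
print(bias, variance, mse)  # 0.5 1.25 1.5
```

An estimator with a small bias can therefore still have the lowest MSE if its variance is sufficiently small, which is the trade-off the comparison above is describing.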

6. Discussion

In this study, we introduced a composite estimator for estimating the finite population mean under RSS. The estimator is defined as a weighted average of the simple mean and the ratio estimator. We derived its MSE and obtained the optimal weight that minimizes the MSE, along with a plug-in estimator for this optimal weight. We evaluated the performance of the composite estimator, with both the known optimal weight and the estimated (plug-in) weight, via Monte Carlo simulations using bivariate normal and lognormal populations, as well as a real finite population of longleaf pine trees. Comparisons were made against the simple mean, ratio, and regression estimators under SRS, imperfect-ranking RSS, and perfect-ranking RSS.

The results indicate that the proposed composite estimator is highly efficient, particularly for moderate-to-large sample sizes, even in cases where the simple mean and ratio estimators perform poorly. The regression estimator consistently emerged as a strong competitor across all settings. For all three designs, the performance of the composite, ratio, and regression estimators improved as the correlation between the study and auxiliary variables increased. This trend also benefited the simple mean estimator under imperfect-ranking RSS, as higher correlation improved ranking accuracy. These findings were consistent across normal, lognormal, and real data scenarios.

Although the plug-in version of the composite estimator performed well, its efficiency may be further enhanced through refinements in estimating the optimal weight. A key step in this process involves estimating the moments of the order statistics, which we approximated using known formulas for normal and lognormal distributions. Similar approximations exist for other distributions and could be explored in future work.

Another promising direction is extending composite estimators to settings with multivariate auxiliary variables, where one or more variables could be used for ranking, estimation, or both. Such generalizations could further enhance the utility and flexibility of composite estimators for survey data.