Unsupervised Segmentation and Alignment of Multi-Demonstration Trajectories via Multi-Feature Saliency and Duration-Explicit HSMMs

Abstract

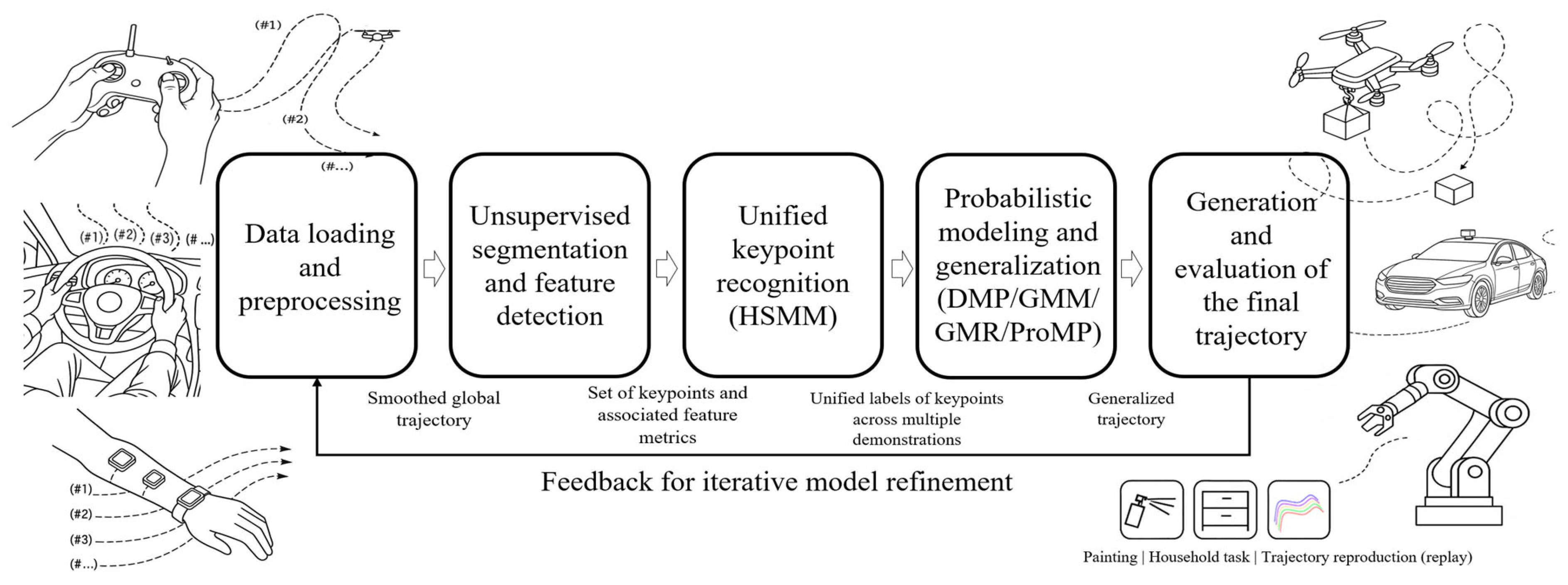

1. Introduction

- Scale-/time-warp-robust saliency. We develop a topology-aware, multi-feature saliency (persistence + non-maximum suppression) that stabilizes keyframes under noise and tempo variations, yielding sparser yet more stable anchors than signal-level detectors. Its stability is formalized in Proposition 1 (Section 2.3.5).

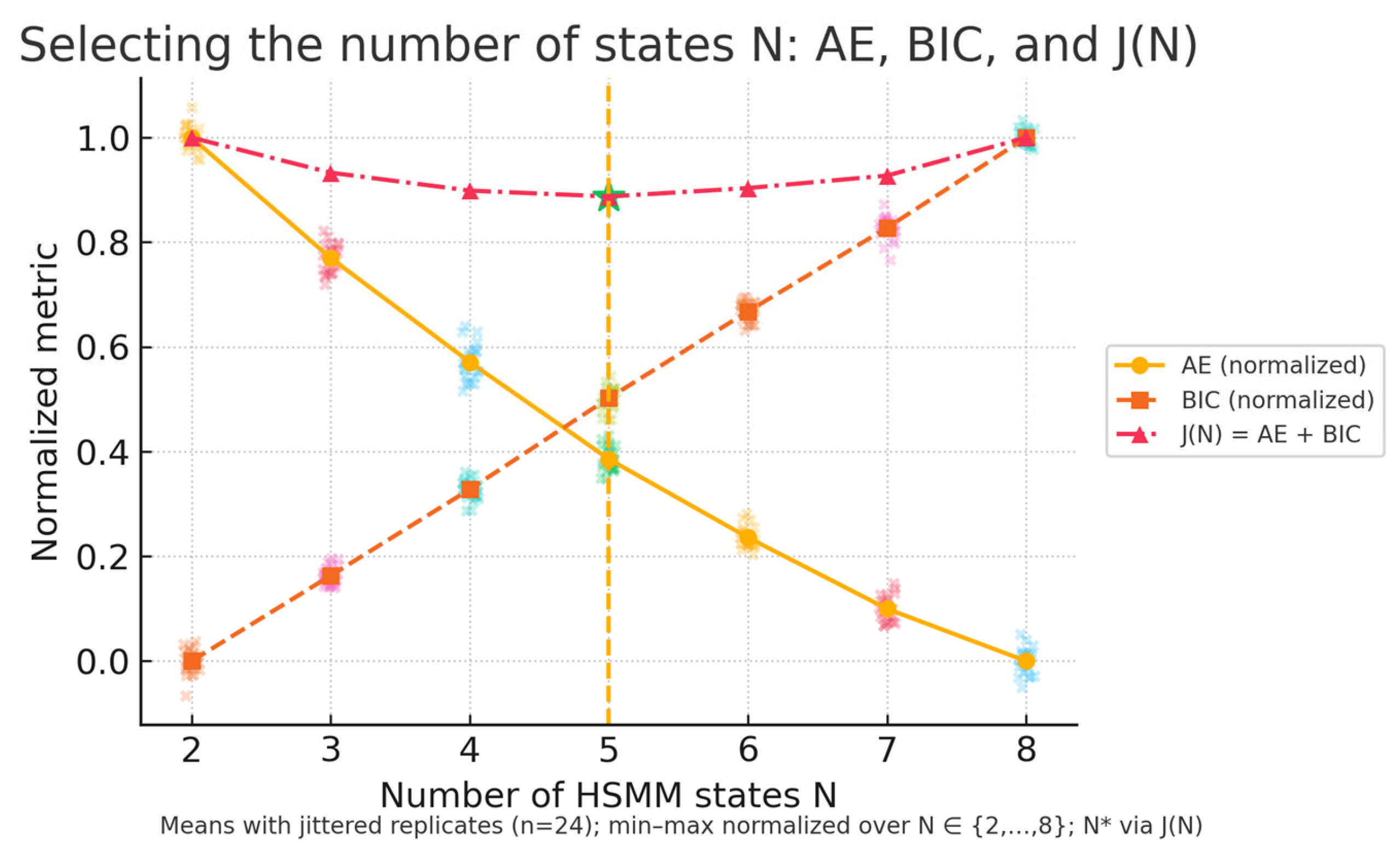

- Joint HSMM alignment with explicit durations. The HSMM is trained across demonstrations with extended forward–backward/Viterbi recursions; model order is selected by a joint criterion that combines BIC and alignment error (AE) to balance fit and parsimony.

- Label-free feature-weight self-calibration. CMA-ES on the weight simplex minimizes cross-demonstration structural dispersion, which eliminates hand-tuned fusion and improves phase consistency.

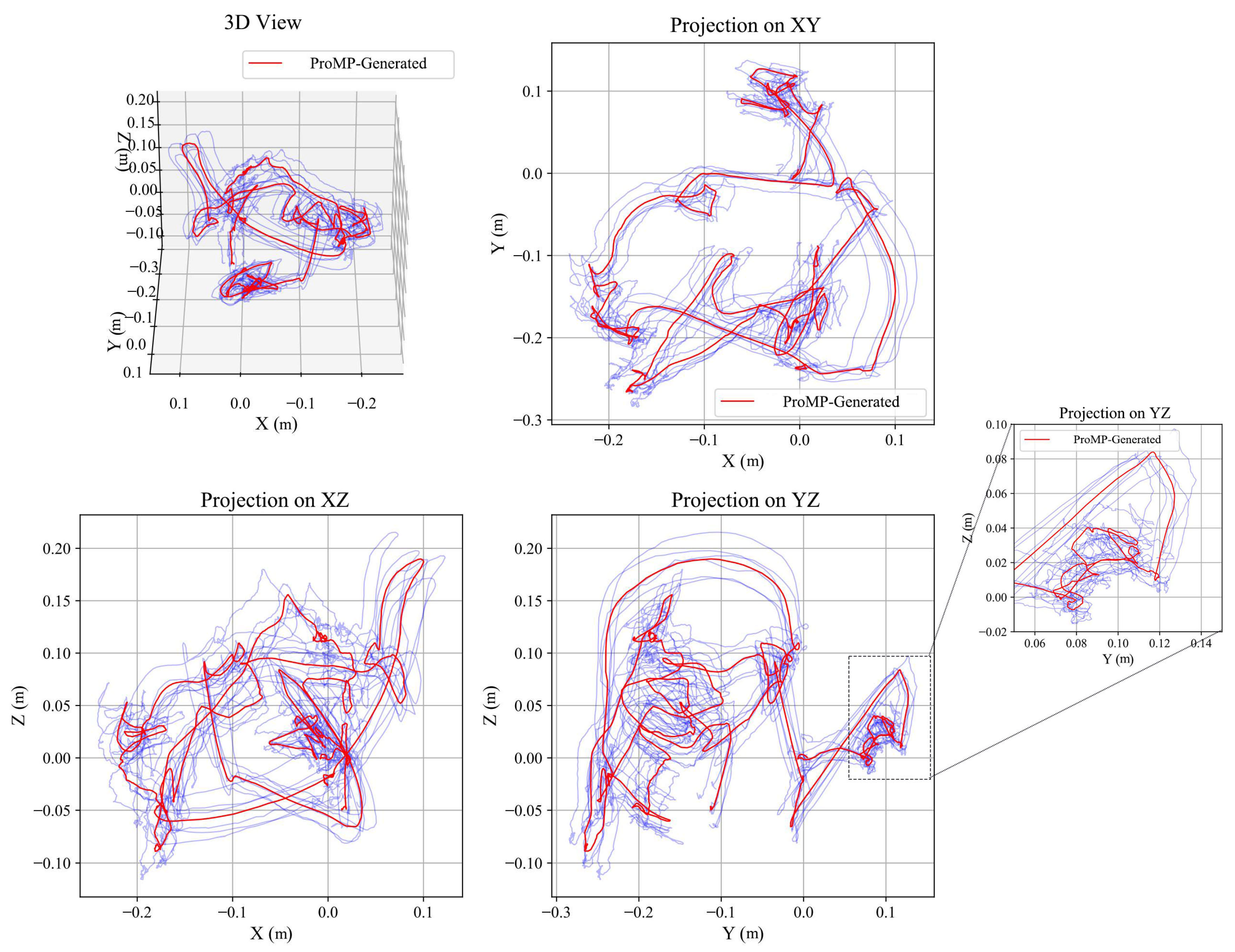

- Calibrated probabilistic encoding for planning. Segment-wise GMR/ProMP models (optionally fused with DMP) return means and covariances that integrate directly with OMPL/MPC for risk-aware execution.

2. Materials and Methods

2.1. Inputs, Outputs, and Assumptions

- A shared set of semantic phases and per-demo boundaries ;

- For each phase, a segment-wise generative model—DMP, GMM/GMR, or ProMP, alone or in combination—returning mean trajectories and covariance estimates;

- Under constraints (terminal/waypoints, velocity/acceleration limits, etc.), an executable trajectory and associated risk measures computed from covariances.

- Demonstrations consist of alternating quasi-stationary and transition segments;

- Time deformation is order-preserving (the semantic phase order does not change);

- Observation noise is moderate and can be mitigated by local smoothing and statistical filtering.

2.2. Multi-Feature Analysis and Automatic Segmentation

2.2.1. Data Ingestion and Pre-Processing

2.2.2. Feature Computation and Saliency Fusion

1. Velocity. Let

2. Acceleration.

3. Curvature. With ,

4. Direction-Change Rate (DCR). Define the unit direction vector . To avoid numerical issues at very low speed, introduce a threshold and set

5. Dimensionless fusion. Apply min–max normalization to each feature to obtain . For a weight vector with and , define the fused saliency
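The five steps above can be illustrated with a minimal sketch. The feature formulas did not survive in this copy, so the code assumes standard definitions: speed and acceleration magnitudes from finite differences, curvature as |v × a| / |v|³ (written via the Lagrange identity so it works in 2-D and 3-D), and DCR as the backward difference of the unit direction vector. The function names, the `v_min` threshold, and the feature order are illustrative assumptions, not the authors' implementation.

```python
import math

def norm(v):
    return math.sqrt(sum(c * c for c in v))

def finite_diff(xs, dt):
    """Central differences inside, one-sided differences at both ends."""
    n = len(xs)
    out = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        out.append([(a - b) / ((hi - lo) * dt) for a, b in zip(xs[hi], xs[lo])])
    return out

def min_max(values):
    """Min-max normalization to [0, 1]; a constant channel maps to 0."""
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]

def fused_saliency(positions, dt, weights, v_min=1e-3):
    """Weighted sum of normalized speed, acceleration, curvature, and DCR."""
    if min(weights) < 0 or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must lie on the probability simplex")
    vel = finite_diff(positions, dt)
    acc = finite_diff(vel, dt)
    speed = [norm(v) for v in vel]
    acc_mag = [norm(a) for a in acc]
    curv, unit = [], []
    for v, a, s in zip(vel, acc, speed):
        if s < v_min:  # below the speed threshold: direction is undefined
            curv.append(0.0)
            unit.append(None)
            continue
        dot = sum(p * q for p, q in zip(v, a))
        cross = math.sqrt(max(s * s * norm(a) ** 2 - dot * dot, 0.0))
        curv.append(cross / s ** 3)  # assumed curvature: |v x a| / |v|^3
        unit.append([c / s for c in v])
    dcr = [0.0]
    for prev, cur in zip(unit, unit[1:]):
        if prev is None or cur is None:
            dcr.append(0.0)
        else:
            dcr.append(norm([c - p for c, p in zip(cur, prev)]) / dt)
    feats = [min_max(f) for f in (speed, acc_mag, curv, dcr)]
    return [sum(w * f[t] for w, f in zip(weights, feats))
            for t in range(len(positions))]
```

Because every channel is normalized to [0, 1] and the weights sum to one, the fused saliency is itself bounded in [0, 1], which keeps later thresholds comparable across demonstrations.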

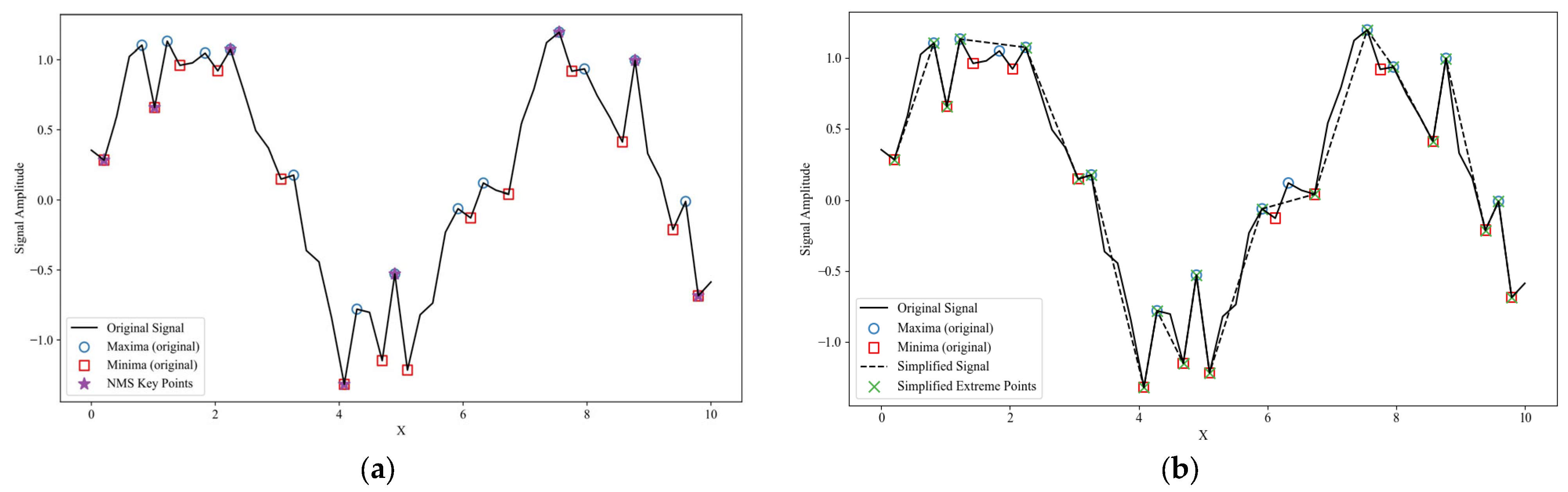

2.2.3. Keyframe Extraction with Topological Simplification

1. Candidate extrema via quantile thresholds. Let be a grid of quantiles (e.g., uniformly in [0.60, 0.95]). For each :

   - Set ;

   - Collect indices and snap each to the nearest local extremum within a radius-3 neighborhood.

2. Persistence thresholding (scale-aware importance).

3. Non-maximum suppression (NMS).
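Steps 2 and 3 can be sketched as follows, under stated assumptions. The persistence of each local maximum is computed with the standard superlevel-set sweep (union-find over samples in decreasing order), and NMS keeps the highest-scoring peak within a fixed window. Here the persistence threshold is passed in as `min_persistence`, whereas the text selects it with an elbow rule; the paper's NMS is also polarity-aware, which this simplified version is not. All names are illustrative.

```python
def peak_persistence(signal):
    """0-dimensional persistence of local maxima of a 1-D signal.

    Returns {peak_index: persistence}. The global maximum is assigned
    max(signal) - min(signal).
    """
    n = len(signal)
    parent = [None] * n   # None marks samples not yet swept
    peak = {}             # component root -> index of its highest sample
    pers = {}

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in sorted(range(n), key=lambda k: -signal[k]):
        parent[i] = i
        peak[i] = i
        for j in (i - 1, i + 1):
            if 0 <= j < n and parent[j] is not None:
                low, high = find(i), find(j)
                if low == high:
                    continue
                if signal[peak[low]] > signal[peak[high]]:
                    low, high = high, low
                # The component with the lower peak dies at the current level.
                if peak[low] != i:
                    pers[peak[low]] = signal[peak[low]] - signal[i]
                parent[low] = high
    top = find(max(range(n), key=lambda k: signal[k]))
    pers[peak[top]] = signal[peak[top]] - min(signal)
    return pers

def nms(indices, scores, window):
    """Keep the best-scoring index; drop any other within `window` samples."""
    kept = []
    for i in sorted(indices, key=lambda k: -scores[k]):
        if all(abs(i - k) > window for k in kept):
            kept.append(i)
    return sorted(kept)

def keyframes(signal, min_persistence, window):
    """Peaks that survive persistence thresholding, then NMS."""
    pers = peak_persistence(signal)
    strong = [i for i, p in pers.items() if p >= min_persistence]
    return nms(strong, signal, window)
```

Persistence depends only on amplitude differences, so multiplying the saliency by a positive constant rescales all persistence values by the same factor and leaves their ranking unchanged.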

2.2.4. Adaptive Feature-Weight Learning

1. Consistency functional.

2. Objective and constraints.

3. Solver and feasibility.

4. Computational profile.
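To make the feasibility idea concrete, the sketch below keeps weights on the simplex through a softmax reparameterization, so the search itself is unconstrained. For brevity it swaps in a simple elitist evolution strategy with isotropic Gaussian steps in place of CMA-ES (no covariance adaptation), and the `dispersion` callable is a stand-in for the cross-demonstration consistency objective, whose exact form is not reproduced here. Every name and default is an assumption for illustration.

```python
import math
import random

def softmax(z):
    """Map an unconstrained vector to the probability simplex."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    total = sum(e)
    return [v / total for v in e]

def calibrate_weights(dispersion, dim, iters=200, pop=8, sigma=0.5, seed=0):
    """Minimize dispersion(w) over simplex weights w = softmax(z).

    Simplified stand-in for CMA-ES: elitist selection, isotropic steps,
    and a step size that shrinks when a generation brings no improvement.
    """
    rng = random.Random(seed)
    z = [0.0] * dim                      # softmax(0) gives equal weights
    best = dispersion(softmax(z))
    for _ in range(iters):
        candidates = [[v + sigma * rng.gauss(0.0, 1.0) for v in z]
                      for _ in range(pop)]
        scored = [(dispersion(softmax(c)), c) for c in candidates]
        value, cand = min(scored, key=lambda pair: pair[0])
        if value < best:
            best, z = value, cand
        else:
            sigma *= 0.9
    return softmax(z), best
```

Starting from equal weights means the search can only improve on the equal-weight baseline as measured by the supplied objective; whether that transfers to held-out demonstrations has to be checked separately.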

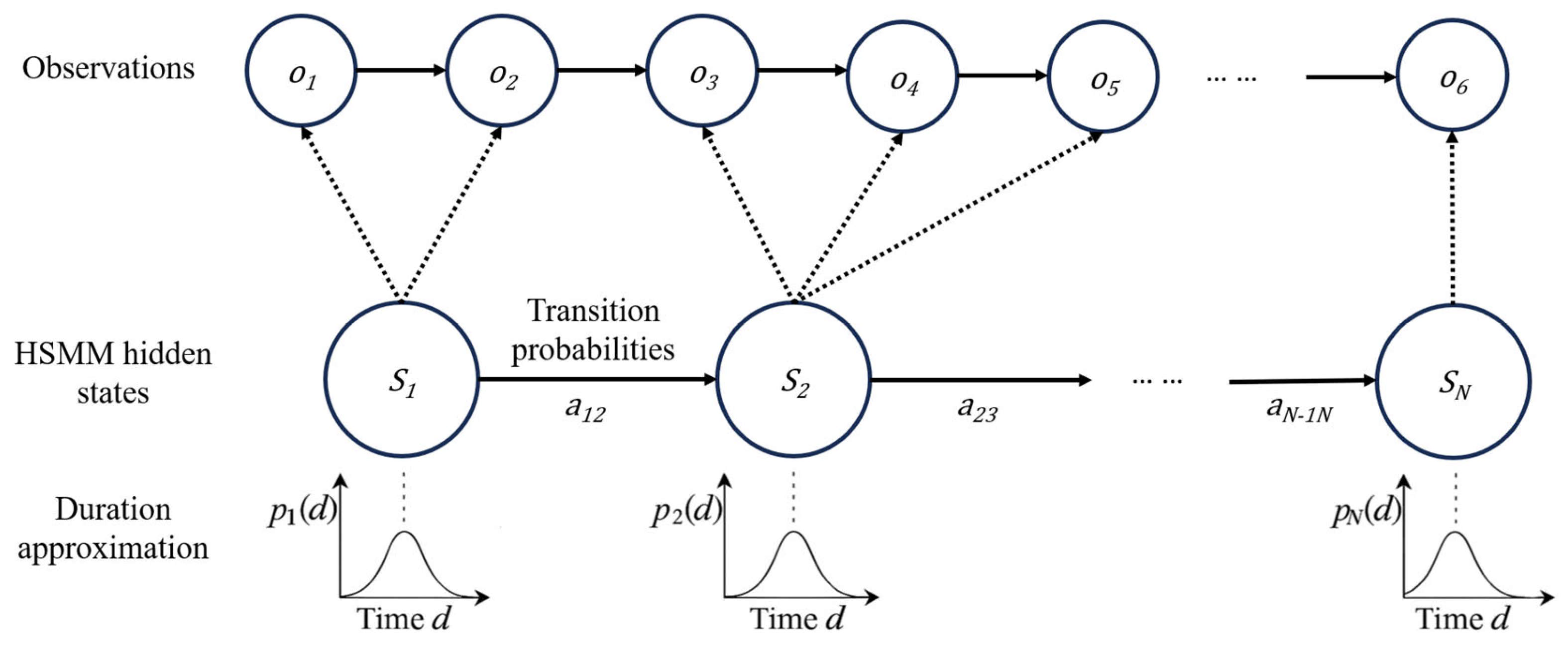

2.3. Multi-Demo Alignment and Segmentation via a Duration-Explicit HSMM

2.3.1. Model and Generative Mechanism

- (a) Initial phase: .

- (b) Duration: for the current phase , sample dwell length .

- (c) Observations: for with ,

- (d) Transition: ; terminal transitions end the sequence.
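The generative mechanism (a) to (d) can be sketched as a sampler. This is a toy version under explicit assumptions: scalar Gaussian emissions, categorical dwell-length tables, and a state whose transition row is all zeros treated as terminal. The argument layout (`init`, `trans`, `dur`, `emit`) is hypothetical.

```python
import random

def sample_hsmm(init, trans, dur, emit, seed=0, max_len=10_000):
    """Draw (labels, observations) from an explicit-duration HSMM.

    init:  initial phase probabilities
    trans: transition rows; an all-zero row marks a terminal phase
    dur:   per-phase dict {dwell_length: probability}
    emit:  per-phase (mean, std) of a scalar Gaussian (assumed form)
    """
    rng = random.Random(seed)
    n_states = len(init)
    z = rng.choices(range(n_states), weights=init)[0]        # (a) initial phase
    labels, obs = [], []
    while len(obs) < max_len:
        lengths, probs = zip(*dur[z].items())
        d = rng.choices(lengths, weights=probs)[0]           # (b) dwell length
        mean, std = emit[z]
        for _ in range(d):                                   # (c) observations
            labels.append(z)
            obs.append(rng.gauss(mean, std))
        if sum(trans[z]) == 0:                               # terminal phase
            break
        z = rng.choices(range(n_states), weights=trans[z])[0]  # (d) transition
    return labels, obs
```

Unlike an HMM, the dwell length here is drawn from an arbitrary table rather than implied by repeated self-transitions, which is what allows long hover or wait phases to be modeled without a geometric bias.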

2.3.2. Parameter Estimation: Extended Baum–Welch

1. Forward variable (leaving ).

2. Backward variable.

3. Posteriors (E-step).

4. M-step (closed forms).

5. Numerical stability.
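As an illustration of the forward variable and of log-domain stabilization, the sketch below computes the sequence log-likelihood of an explicit-duration HSMM. It assumes the textbook "segment ends at t" form of the forward variable, no self-transitions, a maximum dwell D given by the length of the duration tables, and a final segment that ends exactly at the last sample (no right-censoring). The paper's own recursion may differ in these details, and the names are illustrative.

```python
import math

NEG_INF = float("-inf")

def safe_log(p):
    return math.log(p) if p > 0 else NEG_INF

def logsumexp(xs):
    """Stable log(sum(exp(x))) that tolerates -inf entries."""
    m = max(xs, default=NEG_INF)
    if m == NEG_INF:
        return NEG_INF
    return m + math.log(sum(math.exp(x - m) for x in xs))

def hsmm_log_likelihood(log_b, init, trans, dur):
    """Forward recursion in the log domain.

    log_b[t][j]: finite log emission density of sample t under state j
    dur[j][d-1]: probability that state j lasts exactly d steps
    """
    T, K = len(log_b), len(init)
    D = len(dur[0])
    # Prefix sums give each segment's emission score in O(1).
    cum = [[0.0] * (T + 1) for _ in range(K)]
    for j in range(K):
        for t in range(T):
            cum[j][t + 1] = cum[j][t] + log_b[t][j]
    # alpha[t][j]: log prob of the first t samples with state j ending at t.
    alpha = [[NEG_INF] * K for _ in range(T + 1)]
    for t in range(1, T + 1):
        for j in range(K):
            terms = []
            for d in range(1, min(D, t) + 1):
                start = t - d
                if start == 0:
                    enter = safe_log(init[j])
                else:
                    enter = logsumexp([alpha[start][i] + safe_log(trans[i][j])
                                       for i in range(K)])
                terms.append(enter + safe_log(dur[j][d - 1])
                             + cum[j][t] - cum[j][start])
            alpha[t][j] = logsumexp(terms)
    return logsumexp(alpha[T])
```

The cost is O(T · K · (K + D)) with the prefix sums, so capping the maximum dwell D is the main lever for keeping training tractable on long demonstrations.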

2.3.3. Semantic Time Axis: Decoding and Outputs

2.3.4. Alignment Quality, Model Selection, and Robustness

2.3.5. Stability Properties of Topology-Aware Saliency and Duration-Explicit Alignment

- Scale invariance. since persistence depends on amplitude differences and the elbow index is preserved.

- Weak time-warp stability (physical time). Under (b), extrema order is preserved; locations shift by at most samples due to the NMS window.

- Semantic-time invariance with HSMM. After duration-explicit decoding (Section 2.3), the phase order and boundaries induced by are invariant; order-preserving warps manifest primarily as duration redistribution across states (Section 2.3.4).

2.4. Statistical Motion Primitives and Probabilistic Generation

2.4.1. Dynamic Movement Primitives (DMP)

1. Single-segment dynamics.

2. Segment coupling and smoothness.
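For the single-segment dynamics, a minimal sketch of a one-dimensional discrete DMP is given below. Since the paper's equations are not reproduced in this copy, it assumes the widely used form τ·ż = α(β(g − y) − z) + f(s), τ·ẏ = z, τ·ṡ = −α_s·s, with critically damped gains (β = α/4), Gaussian basis functions, and explicit Euler integration. The basis-width heuristic and all defaults are assumptions for illustration.

```python
import math

def dmp_rollout(y0, goal, weights, tau=1.0, dt=0.001, alpha=25.0, alpha_s=4.0):
    """Integrate a 1-D discrete DMP and return the position path."""
    beta = alpha / 4.0                       # critical damping
    n = len(weights)
    # Basis centers spaced evenly in time, expressed in the phase variable s.
    centers = [math.exp(-alpha_s * i / max(n - 1, 1)) for i in range(n)]
    widths = [n ** 1.5 / c for c in centers]  # heuristic widths (assumption)
    y, z, s = y0, 0.0, 1.0
    path = [y]
    for _ in range(round(tau / dt)):
        psi = [math.exp(-h * (s - c) ** 2) for h, c in zip(widths, centers)]
        shape = sum(w * p for w, p in zip(weights, psi)) / (sum(psi) + 1e-12)
        forcing = s * (goal - y0) * shape    # vanishes as the phase s decays
        dz = (alpha * (beta * (goal - y) - z) + forcing) / tau
        dy = z / tau
        ds = -alpha_s * s / tau
        z += dz * dt
        y += dy * dt
        s += ds * dt
        path.append(y)
    return path
```

With all weights set to zero the system reduces to a critically damped spring that converges to the goal, which is why endpoint constraints are easy to impose; changing `tau` rescales the duration without changing the shape.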

2.4.2. Gaussian Mixture Modeling and Regression (GMM/GMR)

2.4.3. Probabilistic Movement Primitives (ProMP)

2.4.4. Model Choice and Complementarity

- DMP excels at real-time execution and low jerk with simple time scaling;

- GMM/GMR offers closed-form means and covariances over phase and is convenient for planners needing analytic gradients;

- ProMP provides a distribution over shapes with exact linear-Gaussian conditioning, ideal for multi-goal tasks and collaboration.
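The closed-form mean and covariance that GMR provides over phase can be illustrated with a scalar sketch: a GMM over the pair (s, x) is conditioned on the phase s. The component layout and function names are assumptions chosen for readability, and real trajectories would use vector-valued x with matrix blocks in place of the scalars.

```python
import math

def gaussian_pdf(x, mean, var):
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)

def gmr(s, components):
    """Conditional mean and variance of x given phase s.

    components: list of (prior, mu_s, mu_x, var_s, cov_sx, var_x).
    """
    resp = [p * gaussian_pdf(s, mu_s, var_s)
            for p, mu_s, _, var_s, _, _ in components]
    total = sum(resp)
    resp = [r / total for r in resp]         # responsibilities h_k(s)
    mean = second = 0.0
    for h, (_, mu_s, mu_x, var_s, cov_sx, var_x) in zip(resp, components):
        m_k = mu_x + cov_sx / var_s * (s - mu_s)   # per-component regression
        v_k = var_x - cov_sx ** 2 / var_s          # per-component residual
        mean += h * m_k
        second += h * (v_k + m_k ** 2)
    return mean, second - mean ** 2          # law of total variance
```

A band of the form mean ± 2·sqrt(variance) from this output is the kind of interval whose empirical coverage a calibration check would compare against the nominal level.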

3. Experiments and Results

3.1. Objectives and Evaluation Protocol

- Segmentation robustness. Do multi-feature saliency and topological persistence yield sparse yet structurally stable keyframes under heterogeneous noise and tempo variations?

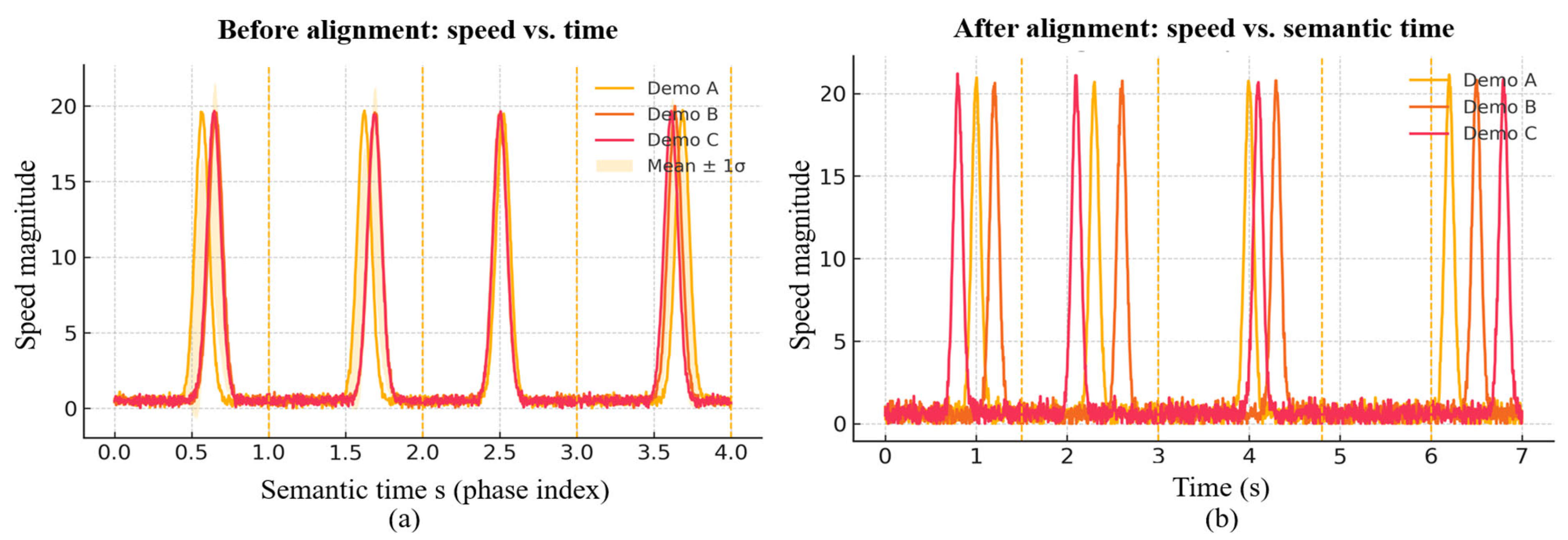

- Semantic alignment quality. Does duration-explicit HSMM reduce cross-demonstration time dispersion when non-geometric dwelling is present (e.g., hover, wait)?

- Generator calibration. On the shared semantic time base, do segment-wise probabilistic models achieve low reconstruction error, nominal uncertainty coverage, and dynamically schedulable executions?

3.2. Tasks, Datasets, and Testable Hypotheses

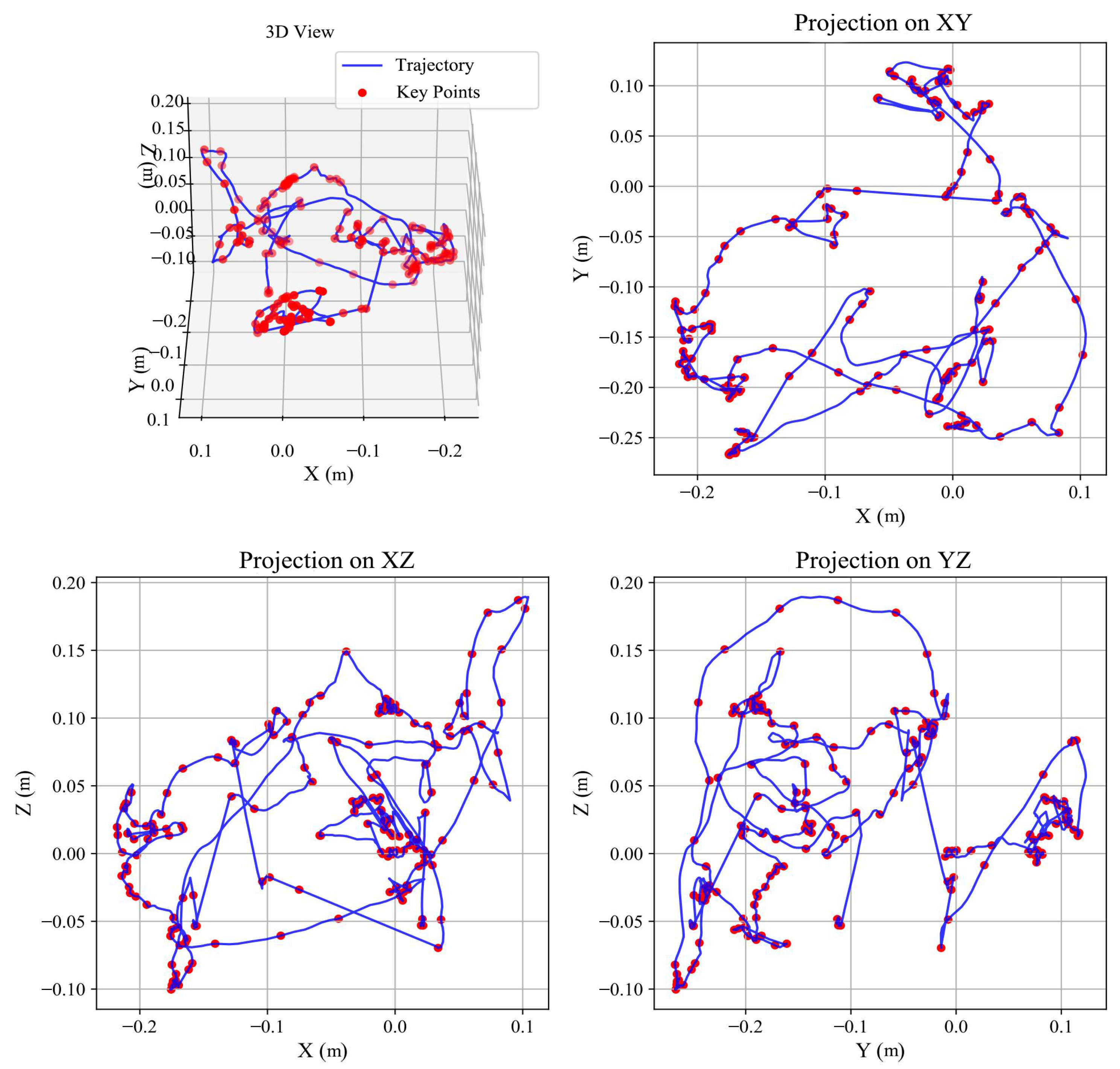

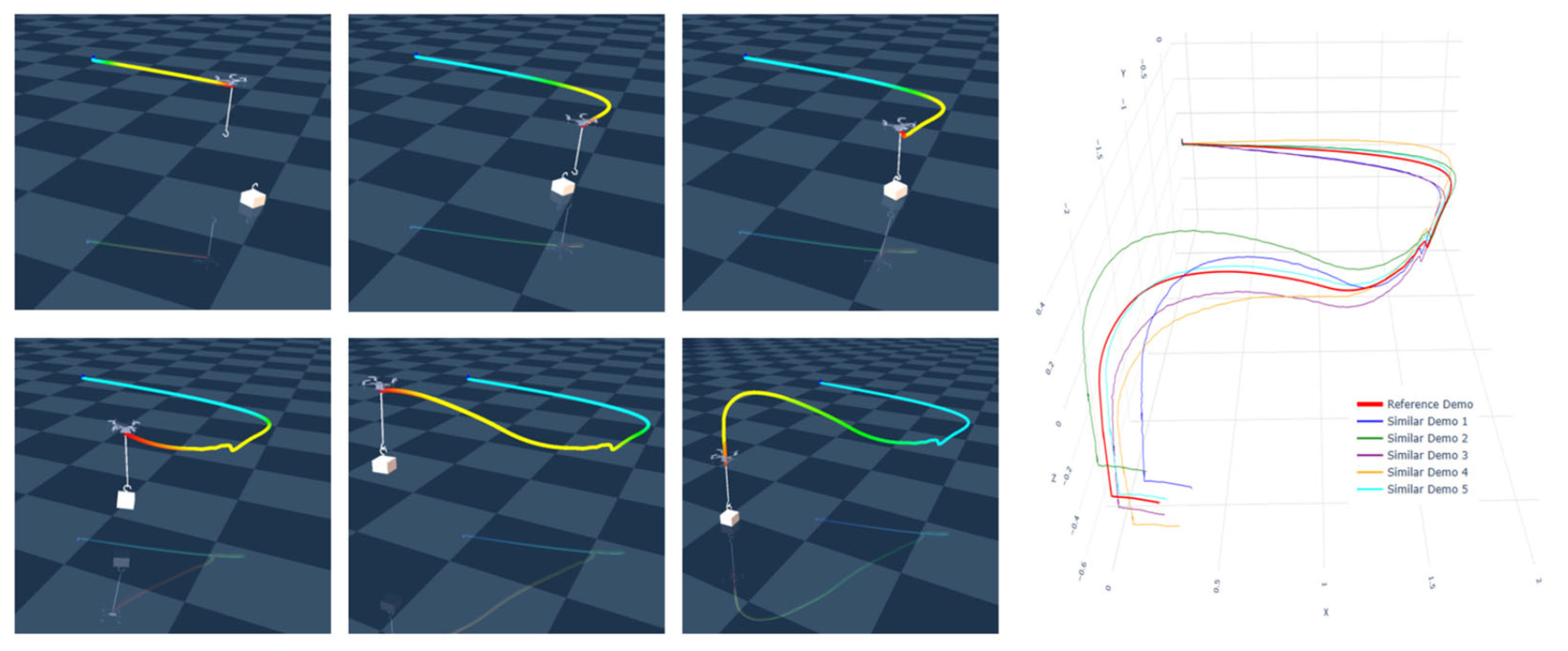

- Domain A—UAV-Sim (multi-scene flight). Sampling is at 100 Hz. Subtasks include take-off–lift–cruise–drop and gate-pass–loiter–gate-pass. Six subjects are used, with 20–30 segments per task. Observations: tool-center position (optional yaw). Figure 7 shows the environment and demonstrations.

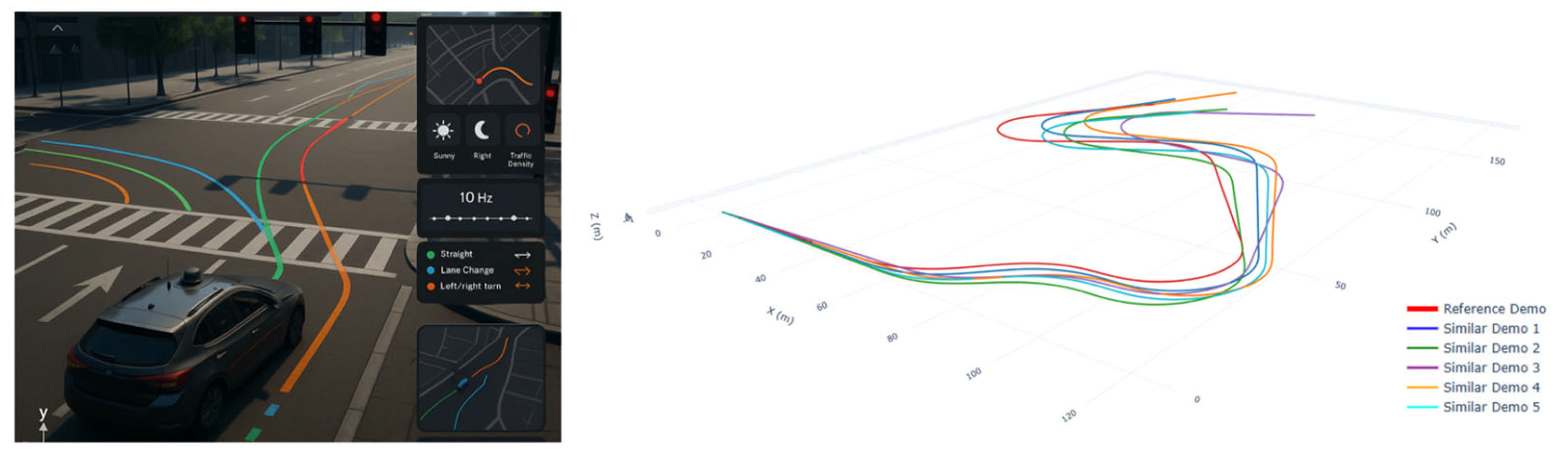

- Domain B—AV-Sim (CARLA/MetaDrive urban). Sampling is at 10 Hz across Town01–05, with varied weather/lighting and traffic control. Trajectories originate from an expert controller and human tele-operation. Observations: . See Figure 8.

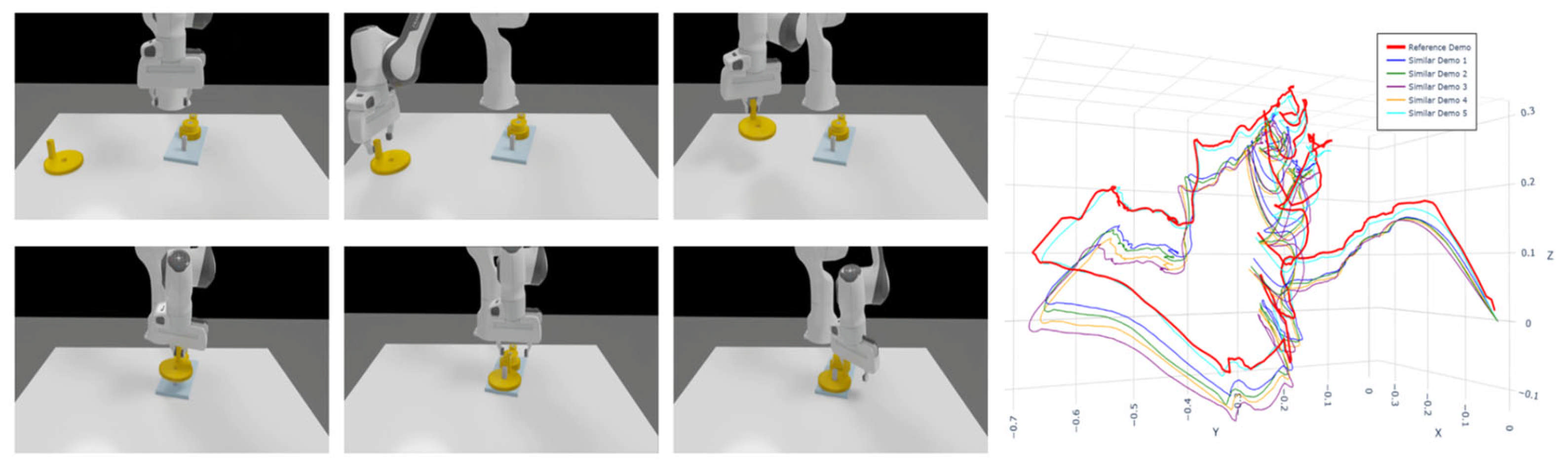

- Domain C—Manip-Sim (robomimic/RLBench assembly). Sampling is at 50–100 Hz. Tasks are akin to RoboTurk “square-nut”: grasp–align–insert with pronounced dwell segments. Observations: end-effector position. See Figure 9.

3.3. Metrics and Statistical Inference

- SOD (Equation (10), min): structural dispersion—mean point-to-point divergence on the shared time base.

- AE (Equation (19), min): Euclidean dispersion of phase end times across demonstrations.

- AAR (max): action acquisition rate. Given reference key actions (expert consensus/common boundaries) and detected , we count a hit if , with (i.e., 50 ms at 100 Hz; 0.5 s at 10 Hz).

- GRE (min): geometric reconstruction error (RMSE).

- TCR (max): time compression rate.

- Jerk (min): (normalized).

- CR (target ≈ 95%): nominal coverage. For each semantic segment, we sample uniformly in time; if , it is counted as covered; segment-level coverage is averaged and then length-weighted globally.

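- Boundary precision/recall/F1. Let B be the set of reference boundaries (manual anchors or common-boundary clusters) and D the detected boundaries. Build a bipartite graph with an edge between b ∈ B and d ∈ D whenever |b − d| is within the matching tolerance. Compute a one-to-one assignment via the Hungarian algorithm; let TP be the number of matched pairs, FP = |D| − TP, and FN = |B| − TP. Then Precision = TP/(TP + FP), Recall = TP/(TP + FN), and F1 = 2 · Precision · Recall/(Precision + Recall). We continue to report AAR for comparability with prior tables (interpreted as recall for key actions).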
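The boundary metrics can be sketched in a few lines. Instead of the Hungarian algorithm, this version uses a greedy two-pointer pass over the sorted boundaries; for one-dimensional boundaries with a single tolerance this yields the same number of matched pairs (a maximum-cardinality matching), although it does not minimize total distance. The function names and the tolerance argument `tol` are illustrative.

```python
def count_matches(reference, detected, tol):
    """Number of one-to-one matches with |ref - det| <= tol."""
    ref, det = sorted(reference), sorted(detected)
    i = j = matched = 0
    while i < len(ref) and j < len(det):
        if abs(ref[i] - det[j]) <= tol:
            matched += 1
            i += 1
            j += 1
        elif det[j] < ref[i]:
            j += 1          # detection too early to match any later reference
        else:
            i += 1          # reference too early to match any later detection
    return matched

def boundary_prf(reference, detected, tol):
    """Precision, recall, and F1 for detected boundaries."""
    tp = count_matches(reference, detected, tol)
    precision = tp / len(detected) if detected else 0.0
    recall = tp / len(reference) if reference else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom > 0 else 0.0
    return precision, recall, f1
```

For example, with reference boundaries at samples 100, 200, and 300, detections at 98, 205, 260, and 301, and a tolerance of 5 samples, three pairs match, giving precision 0.75 and recall 1.0.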

3.4. Baselines and Fairness Controls

3.5. Runtime and Scaling

3.6. Overall Results

3.7. Contribution Attribution: Ablation Study (UAV-Sim)

- Remove TDA (keep NMS): SOD +12.3%, AE +9.6% → persistence is key to scale-invariant noise rejection; without it, small-scale oscillations stack into spurious peak–valley pairs, degrading structure and boundaries.

- Remove NMS (keep TDA): AE +21.1% → suppressing same-polarity peak clusters in high-energy regions is critical for boundary stability; persistence alone cannot prevent multi-response.

- Fix equal weights (no ): SOD +18.6%, AAR −7.7 pp → consistency-driven weight learning mitigates channel scale imbalance and improves key-action capture.

- Replace HSMM with HMM: AE +31.2% → direct evidence of the geometric-duration bias when dwell exists (wait/loiter).

3.8. Robustness Evaluation Protocol

- (i) Monotone time-warps: (speed-up) and (slow-down);

- (ii) Additive white noise: m on positions;

- (iii) Random missing data: 20% uniform drops.
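The three perturbations can be sketched as small helpers. The warp here is a uniform speed change with linear interpolation, which is only one member of the family of monotone warps; the warp factors and noise level used in the experiments are not reproduced in this copy, so the parameters below are placeholders.

```python
import random

def time_warp(traj, speed):
    """Resample so the trajectory plays `speed` times faster (speed > 0).

    Linear interpolation between samples; endpoints are preserved.
    Requires at least two samples.
    """
    n = len(traj)
    m = max(2, round((n - 1) / speed) + 1)
    out = []
    for k in range(m):
        u = k * (n - 1) / (m - 1)
        i = min(int(u), n - 2)
        a = u - i
        out.append([(1 - a) * p + a * q for p, q in zip(traj[i], traj[i + 1])])
    return out

def add_noise(traj, sigma, rng):
    """Additive white Gaussian noise on every coordinate."""
    return [[c + rng.gauss(0.0, sigma) for c in point] for point in traj]

def drop_samples(traj, rate, rng):
    """Drop each sample independently with probability `rate`."""
    return [point for point in traj if rng.random() >= rate]
```

A seeded `random.Random` instance passed as `rng` keeps the perturbed datasets reproducible across methods, so every baseline sees exactly the same corrupted inputs.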

3.9. Robustness: Time-Warping, Noise, and Missing Data (AV-Sim)

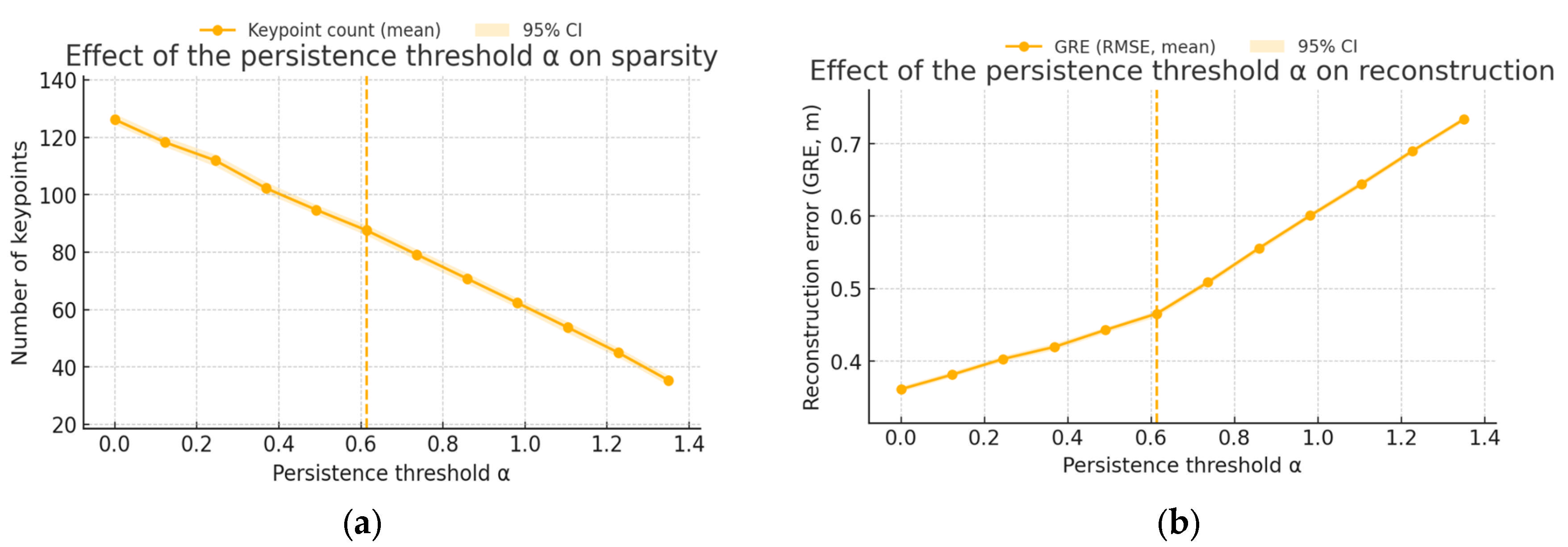

3.10. Model Selection and the Sparsity–Fidelity Trade-Off

- Alignment error (Equation (19)), which decreases and then plateaus as grows;

- Bayesian Information Criterion (Equation (20)), which increases monotonically due to the penalty .

- UAV-Sim. test-AE: Joint vs. AE-only vs. BIC-only ; after the Holm–Bonferroni correction; GRE unchanged within BCa 95% CIs; CR remains at 94–96%.

- AV-Sim. test-AE: Joint vs. AE-only vs. BIC-only ; ; GRE within CIs; CR 94–96%.

- Manip-Sim. test-AE: Joint vs. AE-only vs. BIC-only ; ; GRE within CIs; CR 94–96%.

- Front-end: Use (Kneedle elbow) and keep the NMS policy fixed across methods to isolate latent effects.

- Capacity: Use with . The solution is robust to replacing the equal-weight sum by for .

- Reproducibility: Always report the curves with BCa 95% CIs (cf. Figure 13), and state the chosen and their sensitivity.
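A joint selector of this kind can be sketched as follows. The exact criterion is not reproduced in this copy, so the code assumes that AE and BIC are each min-max normalized over the candidate model orders and then combined by a weighted sum, with `weight=0.5` corresponding to the equal-weight case. The function names and the normalization choice are assumptions.

```python
def min_max(values):
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]

def select_order(orders, ae, bic, weight=0.5):
    """Pick the model order minimizing weight*AE_norm + (1-weight)*BIC_norm.

    weight=1.0 reproduces an AE-only selector and weight=0.0 a BIC-only
    selector; ties go to the smallest candidate order.
    """
    ae_n, bic_n = min_max(ae), min_max(bic)
    score = [weight * a + (1 - weight) * b for a, b in zip(ae_n, bic_n)]
    best = min(range(len(orders)), key=score.__getitem__)
    return orders[best], score
```

Because AE plateaus while the BIC penalty keeps growing, the combined score tends to bottom out near the start of the AE plateau, between the smaller order favored by BIC alone and the larger order favored by AE alone.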

3.11. In-Segment Generation: Accuracy, Smoothness, and Calibration

- Geometry: ProMP ≈ GMR < DMP < linear in GRE.

- Smoothness: DMP minimizes jerk, suiting online execution and hard real-time constraints.

- Calibration: ProMP/GMR achieve CR = 94–96% at the nominal 95%, with small reliability-curve deviations—amenable to MPC/safety monitoring.

3.12. Summary of Findings

4. Discussion

- (H1) Segmentation. The topology-aware saliency (multi-feature + persistence + NMS) yields sparse yet stable anchors; removing persistence or NMS increases SOD/AE by 9–21% and lowers AAR (ablation), confirming that both scale-invariant pruning and peak-cluster suppression are necessary.

- (H2) Alignment. Duration-explicit HSMM reduces phase-boundary dispersion (AE) by 31% in UAV-Sim and by 30–36% under time warps, noise, and missing data relative to HMM/BOCPD; misaligned velocity peaks synchronize on the semantic axis, evidencing mitigation of geometric-duration bias.

- (H3) Generation. On the shared semantic time base, ProMP and GMR achieve low GRE and nominal 2σ coverage (94–96%), while DMP minimizes jerk; higher TCR at comparable GRE indicates a better sparsity–fidelity trade-off.

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Generalization Under Model-Selection Criteria

Appendix A.1. Leave-One-Subject/Scene Generalization (UAV-Sim/AV-Sim/Manip-Sim)

| Domain | Selector | N | Test-AE (s) | Test-GRE | CR (%) |
|---|---|---|---|---|---|
| UAV-Sim (M = 24) | AE-only | 7 | 0.33 ± 0.08 [0.30, 0.36] | 0.091 ± 0.020 m [0.087, 0.096] | 93.8 ± 2.7 |
| | BIC-only | 4 | 0.36 ± 0.09 [0.33, 0.39] | 0.088 ± 0.019 m [0.084, 0.092] | 94.6 ± 2.4 |
| | Joint (ours) | 6 | 0.30 ± 0.07 [0.28, 0.32] | 0.086 ± 0.019 m [0.083, 0.089] | 95.1 ± 2.2 |
| AV-Sim (M = 32) | AE-only | 7 | 0.43 ± 0.09 [0.40, 0.46] | 0.202 ± 0.036 m [0.196, 0.209] | 93.9 ± 2.8 |
| | BIC-only | 4 | 0.46 ± 0.09 [0.43, 0.49] | 0.199 ± 0.035 m [0.193, 0.206] | 94.7 ± 2.6 |
| | Joint (ours) | 6 | 0.39 ± 0.08 [0.36, 0.42] | 0.196 ± 0.034 m [0.190, 0.203] | 95.0 ± 2.5 |
| Manip-Sim (M = 20) | AE-only | 6 | 0.27 ± 0.08 [0.24, 0.29] | 0.86 ± 0.17 mm [0.82, 0.90] | 94.1 ± 2.6 |
| | BIC-only | 4 | 0.29 ± 0.08 [0.26, 0.31] | 0.84 ± 0.16 mm [0.80, 0.88] | 94.8 ± 2.4 |
| | Joint (ours) | 6 | 0.25 ± 0.07 [0.23, 0.27] | 0.83 ± 0.16 mm [0.79, 0.86] | 95.2 ± 2.6 |

Appendix A.2. Synthetic Stress Test (Non-Geometric Dwell, 2–3 Segments)

| Set | Selector | N | Test-AE (s) |
|---|---|---|---|
| GT-2seg | AE-only | 3 | 0.070 ± 0.014 [0.067, 0.074] |
| | BIC-only | 2 | 0.062 ± 0.012 [0.059, 0.065] |
| | Joint (ours) | 2 | 0.051 ± 0.011 [0.049, 0.054] |
| GT-3seg | AE-only | 4 | 0.084 ± 0.017 [0.080, 0.088] |
| | BIC-only | 2 | 0.091 ± 0.018 [0.087, 0.095] |
| | Joint (ours) | 3 | 0.063 ± 0.013 [0.060, 0.066] |


| Property | DMP | GMM/GMR | ProMP |
|---|---|---|---|
| Shape representation | Basis functions + 2nd-order stable system | | Gaussian over weights w |
| Duration adaptation | time scaling | Requires resampling in phase | Basis-phase re-timing |
| Uncertainty | No closed-form (MC if needed) | | Analytic posterior over w |
| Online constraints | Endpoints/velocities easy | Refit or constrained regression | Exact linear-Gaussian conditioning |
| Execution smoothness | Low jerk (native dynamics) | Depends on mixture fit | Depends on basis and priors |

| Domain | M | | | | | | | | CMA-ES | End-to-End | Peak RSS |
|---|---|---|---|---|---|---|---|---|---|---|---|
| UAV-Sim (100 Hz) | 24 | 2900 | 6 | 200 | 34 ms [29, 41] | 3.2 s/0.9 s [2.9–3.6/0.8–1.0] | 14 [13, 16] | 120 ms [105, 140] | 0.68 s [0.61, 0.75] × 24 | 75 s [67, 84] | 610 MB [560, 670] |
| AV-Sim (10 Hz) | 32 | 1800 | 6 | 120 | 19 ms [16, 23] | 1.8 s/0.5 s [1.6–2.0/0.45–0.58] | 12 [11, 13] | 80 ms [70, 92] | 0.42 s [0.37, 0.47] × 22 | 38 s [34, 43] | 520 MB [480, 560] |
| Manip-Sim (50–100 Hz) | 20 | 2500 | 5 | 150 | 27 ms [22, 32] | 2.4 s/0.6 s [2.1–2.7/0.54–0.68] | 15 [14, 16] | 95 ms [83, 110] | 0.55 s [0.49, 0.62] × 24 | 59 s [53, 66] | 580 MB [540, 620] |

| Method | SOD (m) | AE (s) | GRE (m) | TCR (%) | AAR (%) | Jerk | CR (%) |
|---|---|---|---|---|---|---|---|
| Curvature + quantile | 0.081 ± 0.019 | 0.55 ± 0.11 | 0.124 ± 0.027 | 34.9 ± 4.8 | 68.1 ± 6.0 | 1.22 ± 0.10 | – |
| Multi-feat (equal), no TDA/NMS | 0.071 ± 0.017 | 0.47 ± 0.10 | 0.110 ± 0.023 | 41.8 ± 5.1 | 73.8 ± 5.7 | 1.16 ± 0.08 | – |
| Multi-feat + TDA/NMS + HMM | 0.060 ± 0.014 | 0.41 ± 0.09 | 0.098 ± 0.019 | 54.6 ± 4.3 | 81.0 ± 4.8 | 1.08 ± 0.07 | – |
| BOCPD | 0.064 ± 0.016 | 0.46 ± 0.12 | 0.105 ± 0.022 | 51.0 ± 4.6 | 78.3 ± 5.4 | 1.14 ± 0.08 | – |
| + TDA/NMS + HSMM + ProMP | 0.045 ± 0.012 | 0.28 ± 0.07 | 0.082 ± 0.018 | 55.0 ± 4.0 | 88.7 ± 4.2 | 1.00 ± 0.06 | 94.9 ± 2.6 |

| Method | SOD (m) | AE (s) | GRE (m) | TCR (%) | AAR (%) | Jerk | CR (%) |
|---|---|---|---|---|---|---|---|
| Curvature + quantile | 0.172 ± 0.030 | 0.70 ± 0.14 | 0.247 ± 0.041 | 32.7 ± 5.2 | 66.0 ± 6.7 | 1.18 ± 0.09 | – |
| Multi-feat (equal), no TDA/NMS | 0.160 ± 0.029 | 0.63 ± 0.12 | 0.231 ± 0.038 | 39.5 ± 5.0 | 71.6 ± 6.1 | 1.14 ± 0.08 | – |
| Multi-feat + TDA/NMS + HMM | 0.148 ± 0.027 | 0.55 ± 0.11 | 0.214 ± 0.035 | 47.4 ± 4.7 | 78.8 ± 5.4 | 1.08 ± 0.07 | – |
| + TDA/NMS + HSMM + ProMP | 0.112 ± 0.022 | 0.37 ± 0.08 | 0.191 ± 0.033 | 47.5 ± 4.6 | 86.3 ± 5.0 | 1.00 ± 0.06 | 95.1 ± 2.5 |

| Method | SOD (mm) | AE (s) | GRE (mm) | TCR (%) | AAR (%) |
|---|---|---|---|---|---|
| Curvature + quantile | 1.12 ± 0.27 | 0.33 ± 0.09 | 1.02 ± 0.19 | 30.0 ± 4.8 | 65.3 ± 6.3 |
| Multi-feat (equal), no TDA/NMS | 0.94 ± 0.24 | 0.28 ± 0.08 | 0.86 ± 0.16 | 33.0 ± 5.0 | 69.1 ± 5.8 |
| Multi-feat + TDA/NMS + HMM | 0.87 ± 0.22 | 0.29 ± 0.08 | 0.91 ± 0.17 | 35.1 ± 5.1 | 70.0 ± 5.7 |
| BOCPD | 0.98 ± 0.25 | 0.31 ± 0.09 | 1.05 ± 0.20 | 45.0 ± 5.6 | 71.9 ± 5.4 |
| + TDA/NMS + HSMM + ProMP | 0.72 ± 0.18 | 0.24 ± 0.07 | 0.79 ± 0.15 | 49.2 ± 4.8 | 83.4 ± 5.1 |

| Variant | ΔSOD | ΔAE | ΔGRE | ΔTCR | ΔAAR |
|---|---|---|---|---|---|
| No TDA (NMS only) | +12.3% | +9.6% | +6.7% | −9.4% | −5.8% |
| No NMS (TDA only) | +9.2% | +21.1% | +7.3% | −3.7% | −6.1% |
| Fixed equal weights | +18.6% | +14.4% | +9.1% | −1.1% | −7.7% |
| HMM in place of HSMM | +23.5% | +31.2% | +17.5% | ≈ 0 | −8.5% |

| Perturbation | Setting | HMM (Baseline) | HSMM (Ours) | Δ |
|---|---|---|---|---|
| Time-warp | | 0.61 | 0.39 | −36% |
| Time-warp | | 0.58 | 0.38 | −34% |
| Gaussian noise | | 0.57 | 0.40 | −30% |
| Missing data | 20% random drop | 0.63 | 0.41 | −35% |

| Contribution | Failure Mode | Trigger | Mitigation |
|---|---|---|---|
| Topology-aware saliency (persistence + NMS) | Missed/duplicate keyframes in weak-signal regions | Long constant-speed stretches; low curvature/DCR; micro-jitter | Energy-proportional NMS window; minimal peak height with persistence elbow; add force/tactile/yaw channels |
| Topology-aware saliency (persistence + NMS) | Over-pruning near dense peaks | Local oscillations at sharp turns | Two-stage NMS (coarse-to-fine); top-k per <polarity, neighborhood>; relax elbow by one step when peak density is high |
| Weight self-calibration (CMA-ES on simplex) | Weight collapse; slow/noisy convergence | Heterogeneous demos; rugged SOD surface | Entropy/L2 regularization; multi-start and covariance restarts; early-stop on held-out SOD; feature-stream caching |
| Duration-explicit HSMM | Under-fitting multi-modal/heavy-tailed dwells | Bi-modal loiter times; operator-dependent pauses | Truncated mixture/quantile dwell distributions; hazard-based HSMM; optional skip transitions |
| Segment-level generators (DMP/GMR/ProMP) | DMP lacks closed-form uncertainty; GMR/ProMP boundary-sensitive | Risk analysis needs calibrated covariances; segmentation residuals | Use GMR/ProMP for uncertainty; init DMP from GMR mean for low jerk; condition ProMP on soft endpoint priors; boundary-neighborhood reweighting |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gao, T.; Neusypin, K.A.; Dmitriev, D.D.; Yang, B.; Rao, S. Unsupervised Segmentation and Alignment of Multi-Demonstration Trajectories via Multi-Feature Saliency and Duration-Explicit HSMMs. Mathematics 2025, 13, 3057. https://doi.org/10.3390/math13193057