1. Introduction

Even though the class of Runge–Kutta (RK) methods has been well established and studied for decades, a complete determination of all their coefficient values based on their performance remains unresolved to this day, except for RK methods with a small number of stages. This is due to the large number and the strong nonlinearity of the conditions that must be satisfied to achieve high-order accuracy. The main approach to addressing this complexity is to predetermine a set of coefficients, often by setting them to zero, so that the resulting system becomes simple enough to solve [1], and, to the same end, to impose additional simplifying assumptions [2].

Traditionally, once the order conditions have been satisfied, the remaining free coefficients are used to satisfy other local properties, such as increased phase-lag and/or amplification error order, phase fitting, amplification error fitting, exponential/trigonometric fitting, and minimisation of the local truncation error, among others. The problems considered here, namely the (2+1)-dimensional nonlinear Schrödinger (NLS) equation, the Two-Body (TB) problem and its generalisation, the N-Body (NB) problem, all exhibit oscillatory and periodic behaviour. As such, methodologies targeting oscillatory and/or periodic systems have been widely applied. For example, a phase- and amplification-fitted Runge–Kutta–Nyström pair was derived in [3] for the numerical solution of oscillatory systems. For the same type of problems, Abdulsalam et al. [4] developed a multistep Runge–Kutta–Nyström method with frequency-dependent coefficients. More problem-specific approaches have been adopted as well: trigonometrically fitted methods have been developed for the Schrödinger equation in [5] and for orbital problems in [6]. The minimisation of local truncation error coefficients or of other quantities, e.g., the Lagrangian of the system, has also been investigated [7], and invariant-domain-preserving and mass-conservative explicit Runge–Kutta methods have been developed [8]. Finally, explicit integrators that obey local multisymplectic conservation laws, based on partitioned Runge–Kutta methods, have been investigated in [9].

However, a key limitation of these classical approaches is their reliance on local properties. That is, they ensure that the method performs well locally, perhaps when integrating simple problems, on the assumption that acquiring certain local features implies good global behaviour. This assumption, although common, does not always hold for complex or long-time integration problems. The global error on the actual problem remains the ultimate metric for assessing a method’s performance, and this is the approach we adopt here: rather than analysing local accuracy or stability conditions, we directly target the global error of the problem.

For this reason, we consider a parametric explicit RK method with eight stages and sixth-order accuracy, which has four free coefficients and can be optimised to efficiently solve the NLS, TB, and NB problems. In a previous work [10], we attempted to optimise this method for the NLS equation only. However, several significant barriers were encountered: the highly nonlinear behaviour of the optimisation landscape, the presence of local minima in the free coefficient space, the expensive computation of the global error via time integration, and the black-box nature of the objective function, due to the absence of derivative information. These factors make a systematic investigation of the optimisation problem particularly difficult and suggest the need for non-traditional approaches.

Utilising artificial intelligence methodologies to solve scientific and engineering problems is increasingly common; see [11] and references therein. Computational intelligence techniques [12], and in particular evolutionary computation (EC) methods [13], are well suited to optimising parametric numerical methods. Particle swarm optimisation (PSO) is among the most prominent of these methods [14], and differential evolution techniques are also gaining popularity [15]. Some research combines optimisation and RK methods, but not with the ultimate goal of developing an optimised RK method; for example, in [16], an RK method is used to define the update rule of a metaheuristic optimiser. Notwithstanding, there have been limited applications of EC techniques to optimising finite difference schemes and even fewer to Runge–Kutta methods, even though the complex nature and size of the system of nonlinear equations would benefit from such techniques. Babaei [17] develops new RK methods for the solution of initial value problems; however, the process does not involve any order conditions. Neelan and Nair [18] developed hyperbolic Runge–Kutta methods with an optimised stability region using evolutionary algorithms. Optimisation of parametric RK methods has also been achieved using differential evolution by Kovalnogov et al. [19] and by Jerbi et al. [20].

In this article, we use the particle swarm optimisation algorithm [21] to determine the optimal values of the four free coefficients in our parametric RK method [10]. PSO, which continues to evolve, is well suited to overcoming the aforementioned obstacles [22], as it does not require derivative information and allows for efficient global exploration of the coefficient space. It also provides a systematic way to experiment with otherwise impractical constraints, such as relaxing the node bounds $c_i \in [0, 1]$ to a more general interval. The use of PSO not only enhances the ability to identify effective RK coefficients but also enables the discovery of optimal methods tailored to real-world problems in quantum physics, astronomy, and related fields.

In summary, the main contributions of this article are the formulation of an evolutionary optimisation framework for Runge–Kutta methods that targets global accuracy rather than local properties, the introduction of a benchmark-based objective function, the relaxation of conventional coefficient bounds to enhance stability and error properties, and the identification of structural patterns in optimal coefficients for highly oscillatory regimes.

The structure of this paper is as follows:

- In Section 2, we introduce the Runge–Kutta framework, formulate the parametric optimisation problem, and describe the implementation of the evolutionary computation methods;
- In Section 3, we present the numerical problems used for training and testing the methods;
- In Section 4, we report the optimal Runge–Kutta coefficients obtained for each training problem;
- In Section 5, we analyse the error and stability properties of the resulting methods;
- In Section 6, we present numerical results evaluating the performance of the optimised methods;
- In Section 7, we discuss key trends and implications of the results;
- In Section 8, we summarise the main contributions.

2. Evolutionary Optimisation of Parametric RK Methods

2.1. Overview of Runge–Kutta Methods

The problems under investigation fall under the same category of initial value problems, presented in the autonomous form:

$$u' = f(u), \qquad u(t_0) = u_0. \qquad (1)$$

where u implicitly contains t and, potentially, x and y, together with all derivatives with respect to variables other than t, if they exist. These derivatives can be semi-discretised via finite differences or replaced by a theoretical solution, if available, in which case the resulting problem can be solved using the method of lines.

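An $s$-stage explicit Runge–Kutta method for the solution of Equation (1) is presented below:

$$k_i = f\Big(u_n + h \sum_{j=1}^{i-1} a_{ij}\, k_j\Big), \quad i = 1, \dots, s, \qquad u_{n+1} = u_n + h \sum_{i=1}^{s} b_i\, k_i, \qquad (2)$$

where $A = [a_{ij}]$ is strictly lower triangular, $b = [b_i]$ (weights), $c = [c_i]$ (nodes, with $c_i = \sum_{j=1}^{i-1} a_{ij}$), and all coefficients $a_{ij}$, $b_i$, $c_i$, for $i = 1, \dots, s$ and $j = 1, \dots, s$, define the method [2]. Here, $h$ is the step length in time, and $f$ denotes the right-hand side of Equation (1).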
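To make the stage structure of (2) concrete, a single step can be sketched in Python with NumPy as follows. This is a minimal sketch that assumes the autonomous form (1). The helper name `explicit_rk_step` is hypothetical. The classical fourth-order tableau is only a stand-in, because the coefficients of the eight-stage parametric method are not reproduced in this section.

```python
import numpy as np

# Classical fourth-order tableau, used here only as a placeholder.
A_RK4 = [[0.0, 0.0, 0.0, 0.0],
         [0.5, 0.0, 0.0, 0.0],
         [0.0, 0.5, 0.0, 0.0],
         [0.0, 0.0, 1.0, 0.0]]
B_RK4 = [1 / 6, 1 / 3, 1 / 3, 1 / 6]


def explicit_rk_step(f, u, h, A, b):
    """Advance u' = f(u) by one step of an explicit RK method with tableau (A, b)."""
    s = len(b)
    k = []
    for i in range(s):
        # Only the strictly lower-triangular entries a_ij, j < i, are used.
        stage = u + h * sum(A[i][j] * k[j] for j in range(i))
        k.append(f(stage))
    # Weighted combination of the stage derivatives.
    return u + h * sum(b[i] * k[i] for i in range(s))
```

Any tableau with a strictly lower-triangular $A$ can be passed in the same way. For the parametric method, $A$ and $b$ would be evaluated from the four free coefficients before each integration.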

2.2. Parametric Runge–Kutta Method

We consider a general parametric method, which was developed in [10] for the solution of Equation (1). It is an eight-stage, sixth-order explicit RK method with 10 fixed coefficients, while 29 coefficients depend on one or more of four free coefficients, which serve as the four degrees of freedom in the optimisation.

In [10], we attempted to optimise the method using a standard optimisation technique, with a progressively decreasing search step length for all four free coefficients. The values obtained as optimal are those of the Original method listed in Table 2. Using evolutionary optimisation, we identify improved variants of this method, which illustrates the limitations of conventional optimisation in finding globally optimal values.

2.3. The Optimisation Problem

The objective of the optimisation is to explore the free coefficient space thoroughly and efficiently across a range of step lengths, so that the optimal RK method performs well for all step lengths. Many setups were successful, with varying efficiencies. For example, when a method exists that performs reliably at all accuracy levels and can therefore act as a benchmark, it is useful to minimise the mean ratio of the errors of the method under development to those of the benchmark method.

We define the objective function C as the mean ratio of the maximum norm of the global error produced by the new method to that of the benchmark method, averaged over several runs with different step lengths. Formally, the objective function is defined as

$$C = \frac{1}{N} \sum_{i=1}^{N} \frac{E_i^{\mathrm{new}}}{E_i^{\mathrm{bench}}}, \qquad (3)$$

where:

C: The objective function to be minimised. It represents the average relative error of the new method compared with the benchmark method with the same number of stages and order.

N: The total number of runs, each corresponding to a specific step length $h_i$.

$E_i^{\mathrm{new}}$: The maximum norm of the global error of the new method in the i-th run.

$E_i^{\mathrm{bench}}$: The maximum norm of the global error of the benchmark method in the i-th run.

The benchmark errors do not depend on the candidate coefficients, so the benchmark method only needs to be run once for each step length. We chose the optimal method developed in [10], also presented in Section 4, as the benchmark method because of its consistent performance across all step lengths.

To ensure uniformly good performance at all accuracy levels, we select a range of different step lengths that produce results with varying accuracies, avoiding both excessively large (unstable) and excessively small (round-off-dominated) values. The best results were obtained from the geometric sequence:

Several variations were also examined, but the above combinations provided the most reliable results over a set of individual runs.

Each run involves solving a problem with both the new and the benchmark methods under identical conditions (e.g., same problem, same step length). The global error is measured as the maximum absolute difference between the numerical and the exact (or reference) solution over time. By averaging the ratio across all N runs, we obtain a scalar performance measure C that reflects the overall accuracy of the new method relative to the benchmark. A value of $C < 1$ indicates that the new method, on average, outperforms the benchmark in terms of maximum global error.
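The evaluation of C in (3) can be sketched as follows. This is an illustrative sketch under stated assumptions. `candidate_error` is a hypothetical callable that integrates the training problem with the candidate coefficients at step length `h` and returns the maximum global error. The geometric step lengths generated here are placeholders, not the values of sequence (4). Treating a diverged integration as an infinitely bad candidate is also our assumption.

```python
import numpy as np


def geometric_step_lengths(h_max, ratio, n):
    """Return the n step lengths h_max, h_max*ratio, ..., h_max*ratio**(n-1)."""
    return [h_max * ratio**k for k in range(n)]


def objective_C(candidate_error, benchmark_errors, step_lengths):
    """Mean ratio of candidate to benchmark maximum global errors, as in (3).

    benchmark_errors[i] belongs to step_lengths[i]. These errors are computed
    once beforehand, because they do not depend on the candidate coefficients.
    """
    ratios = []
    for h, e_bench in zip(step_lengths, benchmark_errors):
        e_new = candidate_error(h)
        if not np.isfinite(e_new):
            # A diverged integration is treated as the worst possible candidate.
            return np.inf
        ratios.append(e_new / e_bench)
    return float(np.mean(ratios))
```

In an optimisation loop, the benchmark errors would be precomputed once per step length. Each candidate quadruple is then wrapped in its own `candidate_error` closure before `objective_C` is called.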

To optimise the four coefficients for the NLS Equation (10), described in Section 3.1, we use the same equation as a training problem, but over a shorter time interval than that of the test problem.

To improve the performance of the methods on the N-Body problem (19), described in Section 3.3, we do not use it as both the training and the test problem. Instead, we tune the coefficients on the Two-Body problem (17), described in Section 3.2, which is computationally cheaper yet shares similar dynamics. We experimented with three different eccentricities. The best results were obtained with the eccentricity that dominates in our N-Body problem, as explained in Section 3.3.

Alternatively, we tested the following objective function:

where the parameter is the experimental order of accuracy. It can also be tuned empirically, because its purpose in the above formula is to balance the scale of the ratios within the objective function (an equivalent objective function was proposed in [20]). However, despite offering independence from a benchmark method, this function was less consistent and required fine-tuning of its parameter to achieve comparable results, so it was not adopted in our main optimisation.

The only boundary constraint we consider is $c_i \in [0, 1]$ for all nodes, which is the typical constraint when developing Runge–Kutta methods. However, this constraint offers limited practical benefit for the problems studied. As we will show, removing this bound allows for a smaller maximum global error, lower local error constants, and larger stability intervals, all simultaneously. The efficient implementation of PSO allows this type of experimentation with different setups, enabling the removal of traditional constraints that are not essential. Even when the bound is enforced, PSO yields better results than classical optimisation techniques.

To conclude, the optimisation problem under investigation is as follows:

Minimise the objective function C, defined in (3),
with respect to the four free coefficients,
in combination with the step lengths defined in (4),
subject to $c_i \in [0, 1]$ (optional).

2.4. Implementation of Particle Swarm Optimisation

The goal is to minimise the global error, and the objective function is a black box that provides no derivative information. Derivative-based methods such as gradient descent are therefore impractical. Together with the strong nonlinearity of the problem, this makes derivative-free approaches more appropriate and motivates the use of derivative-free global optimisation strategies, such as evolutionary computation methods.

Particle Swarm Optimisation (PSO), inspired by the social behaviour of bird flocks and fish schools, is a population-based stochastic method that iteratively improves candidate solutions (in our case, quadruples of free coefficients) through individual experience and social learning. PSO is a well-established and widely used optimisation technique, known for its robustness, simplicity, and flexibility across diverse problem domains. We selected PSO for its balance between exploration and exploitation and its relatively few control parameters [21, 22, 23]. Its straightforward tuning requirements and fast convergence behaviour further support its practical appeal. Additionally, its structure naturally supports parallel evaluation of candidates, which is beneficial given the computational cost of calculating the global error via time integration. While other evolutionary methods, such as differential evolution, have been applied to similar optimisation problems, PSO best meets our requirements for efficiency, robustness, and ease of integration into the optimisation framework.

We used a standard PSO algorithm described by the update rules below (Algorithm 1).

Algorithm 1. Neighbourhood-Best PSO with Adaptive Parameters

The position of particle $i$ at iteration $t+1$ is updated as

$$x_i^{t+1} = x_i^{t} + v_i^{t+1}.$$

The velocity is updated according to

$$v_i^{t+1} = w^{t}\, v_i^{t} + \phi_1 r_1 \odot \big(p_i - x_i^{t}\big) + \phi_2 r_2 \odot \big(g_i^{t} - x_i^{t}\big),$$

where $w^{t}$ is the inertia weight at iteration $t$; $\phi_1$ and $\phi_2$ are the cognitive and social acceleration coefficients; $r_1, r_2 \sim U(0, 1)$ are independent uniform random numbers drawn for each particle and dimension at every iteration; $p_i$ is the best position found by particle $i$; and $g_i^{t}$ is the best position found in the neighbourhood of particle $i$, whose size at iteration $t$ is $n^{t}$.

The inertia weight is adapted at each iteration as

where $w_{\min}$ and $w_{\max}$ bound the inertia range, and stallCounter counts consecutive iterations without improvement. The neighbourhood size is adapted as

where $n_{\min}$ and $n_{\max}$ are the minimum and maximum neighbourhood sizes, respectively.

In all experiments, the acceleration coefficients, the inertia bounds, and the minimum neighbourhood size were held fixed, and the maximum neighbourhood size was set equal to the swarm size S. These choices follow commonly recommended guidelines for parameter values, such as those for the cognitive and social acceleration coefficients [23, 24], and for update rules, specifically the inertia and neighbourhood update rules [25], while accommodating the specific requirements of our problems. For example, numerous articles and surveys advocate the use of neighbourhoods in the PSO algorithm [26, 27]. Other authors propose even more advanced variants [28, 29]; however, we prefer the technique described above, which is easy to integrate without compromising efficiency or robustness.
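The following Python sketch shows a neighbourhood-best PSO with adaptive inertia and neighbourhood size, in the spirit of Algorithm 1. It is not the implementation used in this study. The adaptation rules are assumptions modelled on the scheme documented for MATLAB's `particleswarm`: the inertia is doubled or halved according to a stall counter, and the neighbourhood grows when no improvement occurs. The default values of `phi1`, `phi2`, `w_range` and `min_frac` are placeholders, not necessarily the values used in the experiments. The function name `pso_minimise` is hypothetical.

```python
import numpy as np


def pso_minimise(objective, lb, ub, swarm_size=40, max_iter=1000, max_stall=50,
                 phi1=1.49, phi2=1.49, w_range=(0.1, 1.1), min_frac=0.25,
                 tol=np.finfo(float).eps, clip=True, seed=0):
    """Neighbourhood-best PSO sketch; returns (best position, best value)."""
    rng = np.random.default_rng(seed)
    lb, ub = np.asarray(lb, dtype=float), np.asarray(ub, dtype=float)
    dim = lb.size
    # Initialise positions in [lb, ub] and velocities scaled to the box width.
    x = rng.uniform(lb, ub, size=(swarm_size, dim))
    v = rng.uniform(-(ub - lb), ub - lb, size=(swarm_size, dim))
    p_best = x.copy()                                  # personal best positions
    f_pbest = np.array([objective(p) for p in x])      # personal best values
    g = int(np.argmin(f_pbest))
    best_x, best_f = p_best[g].copy(), f_pbest[g]
    w = w_range[1]                                     # inertia weight
    n_min = max(2, int(min_frac * swarm_size))         # minimum neighbourhood size
    n_size = n_min
    stall_counter = 0   # drives the inertia adaptation; decreases after improvements
    no_improve = 0      # consecutive iterations without improvement (stopping test)
    for _ in range(max_iter):
        for i in range(swarm_size):
            # Random neighbourhood of n_size particles that always contains particle i.
            others = rng.choice(np.delete(np.arange(swarm_size), i), n_size - 1,
                                replace=False)
            hood = np.append(others, i)
            l_best = p_best[hood[np.argmin(f_pbest[hood])]]
            r1, r2 = rng.random(dim), rng.random(dim)
            v[i] = (w * v[i] + phi1 * r1 * (p_best[i] - x[i])
                    + phi2 * r2 * (l_best - x[i]))
            x[i] = x[i] + v[i]
            if clip:
                # Project onto the box and stop the motion in clipped components.
                outside = (x[i] < lb) | (x[i] > ub)
                x[i] = np.clip(x[i], lb, ub)
                v[i, outside] = 0.0
            fx = objective(x[i])
            if fx < f_pbest[i]:
                p_best[i], f_pbest[i] = x[i].copy(), fx
        g = int(np.argmin(f_pbest))
        if best_f - f_pbest[g] > tol:                  # global best improved
            best_x, best_f = p_best[g].copy(), f_pbest[g]
            no_improve = 0
            stall_counter = max(0, stall_counter - 1)
            n_size = n_min
            if stall_counter < 2:
                w *= 2.0
            elif stall_counter > 5:
                w /= 2.0
            w = min(max(w, w_range[0]), w_range[1])
        else:
            no_improve += 1
            stall_counter += 1
            n_size = min(n_size + n_min, swarm_size)
        if no_improve >= max_stall:
            break
    return best_x, float(best_f)
```

For the optimisation problem above, `objective` would evaluate C for a quadruple of free coefficients. `clip=False` would correspond to the unbounded case, in which `lb` and `ub` serve only to initialise the swarm.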

The population size is now generally expected to be much larger than the 20–50 particles suggested in early PSO studies, as observed in many applications [30]. In our study, the criteria for selecting a population size S are, in order of priority:

- (i) achieving the lowest possible objective function values among the best solutions;
- (ii) maintaining solution diversity;
- (iii) if these two are comparable, maximising the computational efficiency of the optimisation process.

We analysed the sensitivity of these criteria with respect to S when training for the Two-Body problem (e = 0.05) with the step lengths in (4a) and the objective function (5). The analysis used nine independent runs per population size in this representative test configuration. It revealed equivalent objective function values, with comparable means and standard deviations, for the three population sizes tested (including S = 2000 and S = 4000). The deciding factor in selecting the population size was therefore the diversity of the solutions, measured by their average pairwise Euclidean distance. The selected population size yielded solutions that were more spread out and less prone to clustering in a narrow region. Furthermore, one of the tested population sizes caused system instability due to high memory usage from parallelisation. Thus, the selected population size provided the most robust and diverse results. For completeness, the average number of function evaluations was also recorded for each population size.
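The diversity measure is the average pairwise Euclidean distance between the best coefficient quadruples returned by independent runs. It can be computed as follows. This is a short sketch: the function name is hypothetical, and we assume that one solution vector per run is compared.

```python
from itertools import combinations

import numpy as np


def mean_pairwise_distance(solutions):
    """Average Euclidean distance over all pairs of solution vectors (rows)."""
    pts = np.asarray(solutions, dtype=float)
    dists = [np.linalg.norm(p - q) for p, q in combinations(pts, 2)]
    return float(np.mean(dists))
```

Larger values indicate that the runs ended in more widely separated regions of the coefficient space.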

Even with this population size, it was necessary to reinitialise the population between runs, as this alone could redirect the swarm to better regions of the search space. Each run converged within approximately 100–300 iterations without any hard iteration limit being imposed. A run was stopped after 50 stall iterations, i.e., consecutive iterations in which the best objective value did not change by more than the function tolerance. This tolerance was set to machine epsilon (approximately $2.2 \times 10^{-16}$ in double precision).

During the exploration phase of the optimisation, we observed that the optimal solution exhibited high mobility across the search space, overcoming local barriers and valleys. Different settings and initial populations led to distinct optimal solutions, and this behaviour further indicates the complex nature of the optimisation problem.

More than 20 runs were performed for each of the four training problems: the NLS Equation (10) and the Two-Body problem (17) with three different eccentricities. The solution derived from each of these runs was tested over the full interval on either the NLS Equation (10) or the N-Body problem (19), depending on the problem for which it was optimised. All experiments were conducted on a computer with an Intel i9 processor (14 cores) and 32 GB of RAM. MATLAB R2024b was used for numerical computations, and Maple 2024 was used for symbolic computations.

Before presenting the optimised coefficients obtained from the training process, we first introduce the numerical problems used in both the optimisation and testing stages.

3. Training and Test Problems

We now describe the numerical problems used to train and test the methods, namely the (2+1)-dimensional nonlinear Schrödinger equation, the Two-Body problem and the N-Body problem.

3.1. (2+1)-Dimensional Nonlinear Schrödinger (NLS) Equation

We consider the (2+1)-dimensional nonlinear Schrödinger (NLS) equation of the form:

where the unknown is a complex function of the spatial variables x and y and the temporal variable t. The first-order time-derivative term governs temporal evolution. The second-order derivative terms describe spatial dispersion in the x and y directions, respectively. The nonlinear term arises in physical applications such as Bose–Einstein condensates and optical beam propagation [31].

Equation (10) has a dark soliton solution given by

where the parameter values of the solution are selected following [32].

The analytical solution of the above special case is

and the density is given by

Furthermore, the solution of Equation (10) satisfies the mass conservation law [33]:

For the numerical solution of Equation (10), we use the method of lines over the spatial region D, with a shorter time interval for the training problem than for the test problem. Even shorter training intervals could yield good results, but not consistently, and the resulting RK methods may lack sufficient stability. As we are interested in the performance of the RK method itself, we use Equation (12) to evaluate the initial and boundary conditions as well as the second spatial derivatives with respect to x and y. This way, the measured error reflects the time integration alone. The maximum global solution error and the maximum relative mass error of the numerical solution are defined by Equations (15) and (16), respectively.

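For the NLS equation, given the numerical approximation of the solution at the spatial grid points and time levels, we define the maximum global solution error as

and the maximum relative mass error over the region D as

where the discrete mass computed from the numerical solution at each time level is compared with a reference mass approximated over the same region D.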
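A sketch of the two error measures is given below. It assumes that the numerical and exact solutions are stored on the same spatial grid at each time level. The discrete mass is approximated here by a simple rectangle rule, $\sum |\psi|^2 \Delta x \Delta y$, and the relative error is taken with respect to a reference mass `m_ref`. Both choices are assumptions, since the quadrature used in (16) is not specified in this section. All function names are hypothetical.

```python
import numpy as np


def max_global_error(psi_num, psi_exact):
    """Maximum absolute difference over all grid points and time levels."""
    return float(np.max(np.abs(psi_num - psi_exact)))


def discrete_mass(psi, dx, dy):
    """Rectangle-rule approximation of the integral of |psi|^2 over the grid."""
    return float(np.sum(np.abs(psi) ** 2) * dx * dy)


def max_relative_mass_error(psi_history, dx, dy, m_ref):
    """Largest relative deviation of the discrete mass from m_ref over time."""
    return max(abs(discrete_mass(p, dx, dy) - m_ref) / abs(m_ref)
               for p in psi_history)
```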

3.2. Two-Body Problem

The classical planar Newtonian Two-Body problem is used as a training problem to tune the four free coefficients before the resulting methods are tested on the N-Body problem.

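In Cartesian form:

$$x'' = -\frac{x}{r^{3}}, \qquad y'' = -\frac{y}{r^{3}}, \qquad x(0) = 1 - e, \quad x'(0) = 0, \quad y(0) = 0, \quad y'(0) = \sqrt{\frac{1 + e}{1 - e}}, \qquad (17)$$

where $r = \sqrt{x^{2} + y^{2}}$ and $e$ is the orbital eccentricity.

The theoretical solution is provided by:

$$x(t) = \cos E - e, \qquad y(t) = \sqrt{1 - e^{2}}\, \sin E,$$

where $E$ can be found by solving Kepler's equation $E - e \sin E = t$ [34].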
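The exact solution can be evaluated by solving Kepler's equation with Newton's method, as in the following sketch. It assumes the standard formulation with $x(0) = 1 - e$, $x'(0) = 0$, $y(0) = 0$, $y'(0) = \sqrt{(1+e)/(1-e)}$, $x = \cos E - e$, $y = \sqrt{1 - e^2}\sin E$ and $E - e\sin E = t$. The function names are hypothetical. The initial guess $E_0 = t + e \sin t$ is one common choice. The velocity components follow from differentiating the position formulas, using $\mathrm{d}E/\mathrm{d}t = 1/(1 - e\cos E)$.

```python
import numpy as np


def solve_kepler(t, e, tol=1e-15, max_iter=50):
    """Solve Kepler's equation E - e*sin(E) = t for E by Newton's method."""
    E = t + e * np.sin(t)  # starting guess
    for _ in range(max_iter):
        dE = (E - e * np.sin(E) - t) / (1.0 - e * np.cos(E))
        E -= dE
        if abs(dE) < tol:
            break
    return E


def two_body_exact(t, e):
    """Exact state (x, y, x', y') of the Two-Body problem at time t."""
    E = solve_kepler(t, e)
    denom = 1.0 - e * np.cos(E)      # equals 1 / (dE/dt)
    root = np.sqrt(1.0 - e**2)
    return np.array([np.cos(E) - e, root * np.sin(E),
                     -np.sin(E) / denom, root * np.cos(E) / denom])


def two_body_rhs(state):
    """First-order right-hand side for the state (x, y, x', y')."""
    x, y, vx, vy = state
    r3 = (x * x + y * y) ** 1.5
    return np.array([vx, vy, -x / r3, -y / r3])
```

The maximum global error of a trained method would then be measured against `two_body_exact` at each output time.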

Since the Two-Body problem serves as a training problem, we select a relatively short integration interval. We then tested the trained methods on the Two-Body problem over a longer interval and on the N-Body problem, described in the next section.

3.3. N-Body Problem

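The N-Body problem describes the motion of N bodies under Newton's laws of motion and gravitation. It is expressed by the system

$$\ddot{\mathbf{q}}_i = G \sum_{\substack{j=1 \\ j \neq i}}^{N} m_j \frac{\mathbf{q}_j - \mathbf{q}_i}{\lVert \mathbf{q}_j - \mathbf{q}_i \rVert^{3}}, \qquad i = 1, \dots, N, \qquad (19)$$

where G is the gravitational constant, $m_j$ is the mass of body j, and $\mathbf{q}_i$ and $\ddot{\mathbf{q}}_i$ denote the position and acceleration vectors of body i, respectively.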
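The right-hand side of the N-Body system can be vectorised with NumPy broadcasting, as sketched below. This is a minimal illustration that assumes three-dimensional positions stored as an (N, 3) array. The function names and the first-order state layout (all positions followed by all velocities) are our assumptions.

```python
import numpy as np


def nbody_accelerations(q, m, G):
    """Accelerations G * sum_{j != i} m_j (q_j - q_i) / |q_j - q_i|^3.

    q has shape (N, 3) and m has shape (N,).
    """
    diff = q[np.newaxis, :, :] - q[:, np.newaxis, :]   # diff[i, j] = q_j - q_i
    dist3 = np.sum(diff ** 2, axis=-1) ** 1.5          # |q_j - q_i|^3
    np.fill_diagonal(dist3, np.inf)                    # exclude the j == i terms
    return G * np.sum(m[np.newaxis, :, np.newaxis] * diff / dist3[:, :, np.newaxis],
                      axis=1)


def nbody_rhs(state, m, G):
    """First-order form: state = [positions.ravel(), velocities.ravel()]."""
    n = m.size
    q = state[: 3 * n].reshape(n, 3)
    qdot = state[3 * n:]
    return np.concatenate([qdot, nbody_accelerations(q, m, G).ravel()])
```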

We solve the five outer body problem, a specific configuration of the N-Body problem. This system consists of the Sun together with the four inner planets (treated as a single body and denoted as Sun+4), the four outer planets, and Pluto.

Table 1 shows the masses (relative to the solar mass), the orbital eccentricities and revolution periods (in Earth years), and the initial position and velocity components of the six bodies. The distances are in astronomical units, and the gravitational constant is

.

As analytical solutions are unavailable, we compare against high-precision reference numerical results. These are produced by applying the 10-stage implicit Gauss Runge–Kutta method of algebraic order 20 over the full integration interval (about 2738 years), following the procedure described in [35].

Table 1 shows that the bodies with the highest frequencies, i.e., the shortest revolution periods, namely Jupiter, Saturn, and Uranus, have eccentricities of similar magnitude. Together, these define the dominant eccentricity of the system. This observation motivates our choice of training eccentricity, as further explained in Section 6. Additional methodologies for investigating the performance and behaviour of methods in astronomical problems can be found in [36].

4. Optimal RK Coefficients

Table 2 lists the optimal coefficient values obtained for each training problem: the nonlinear Schrödinger equation and the Two-Body problem with three different eccentricities, for both bounded ($c_i \in [0, 1]$) and unbounded cases. For comparison, the Original method from [10] is also included.

In total, seven new optimised RK methods were constructed:

- NLS (unbounded) and NLS in [0, 1] (bounded);
- TB I, TB II, and TB III (unbounded, each trained for a different eccentricity);
- TB Ib and TB IIb (bounded counterparts of TB I and TB II).

Notably, the coefficients of the bounded NLS method are relatively close to those of the Original method, while the unbounded version differs significantly. The unbounded Two-Body methods (TB I and TB II) also show substantial deviations from their bounded counterparts (TB Ib and TB IIb). In contrast, TB III, trained on a problem with high eccentricity, naturally satisfies $c_i \in [0, 1]$ and therefore has no bounded variant.

These variations in coefficient values reflect the complex, nonlinear relationship between the free parameters and the global behaviour of the method. They also illustrate the influence of the training problem, particularly the solution’s eccentricity and oscillatory character, on the structure of the optimal coefficients.

5. Error and Stability Analysis

In this section, we present the local truncation error and stability analysis.

5.1. Error Analysis

The local truncation error analysis of the parametric method defined by Equation (2) and Section 2.2 is based on the Taylor series expansion of the difference $u(t_n + h) - u_{n+1}$ between the exact and the numerical solution after one step. It yields the principal term of the local truncation error, which is given by

This principal term is proportional to $h^{7}$, indicating global accuracy of order six. The values of its coefficient for the newly developed methods are presented in Table 3.

5.2. Stability Analysis

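For the analysis of the (absolute) stability of method (2), we consider the test problem

$$u' = \lambda u, \qquad \lambda \in \mathbb{C}.$$

Applying method (2) to the above equation, we obtain the numerical solution $u_{n+1} = R(v)\, u_n$, where $v = \lambda h$, and $R(v)$ is called the stability polynomial [2]. We have the following definitions:

Definition 1. For the method of Equation (2), we define the stability interval along the real axis as $(\alpha, 0)$, where $\alpha = \inf\{x \le 0 : |R(\xi)| \le 1 \text{ for all } \xi \in [x, 0]\}$.

Definition 2. For the method of Equation (2), we define the stability interval along the imaginary axis as $(0, \beta)$, where $\beta = \sup\{y \ge 0 : |R(i\eta)| \le 1 \text{ for all } \eta \in [0, y]\}$.

Definition 3. The region of absolute stability is defined as the set $\{v \in \mathbb{C} : |R(v)| \le 1\}$.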

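Following the above procedure, we compute the stability polynomial of the parametric method, which is given by

$$R(v) = \sum_{k=0}^{6} \frac{v^{k}}{k!} + \gamma_7 v^{7} + \gamma_8 v^{8},$$

where $\gamma_7 = b^{\mathsf T} A^{6} \mathbf{e}$ and $\gamma_8 = b^{\mathsf T} A^{7} \mathbf{e}$, with $\mathbf{e} = (1, \dots, 1)^{\mathsf T}$, depend on the free coefficients. The first seven terms coincide with the Taylor expansion of $e^{v}$ because the method is of order six.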

Using this polynomial and the coefficients of all methods in Table 2, we numerically compute the real and imaginary stability intervals, according to Definitions 1 and 2, respectively, by sampling the corresponding axis with a fixed step. The resulting intervals are presented in Table 3. Similarly, Figure 1 shows the stability regions of all methods as the set of grid points that satisfy Definition 3. More specifically, we evaluated $|R(x + iy)|$ over a rectangular grid in the complex plane with a fixed grid spacing. Here, $x$ and $y$ represent the real and imaginary parts of $v$, respectively. For clarity, only the boundary of each stability region is shown, although each region comprises all points for which $|R(v)| \le 1$.
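The computation of the stability polynomial and of the intervals in Definitions 1 and 2 can be sketched as follows. The sketch uses the identity $R(v) = 1 + \sum_{k=1}^{s} (b^{\mathsf T} A^{k-1}\mathbf{e})\, v^{k}$, which holds for any explicit RK method. It scans each axis outward from the origin with a fixed step until $|R| > 1$. The following are assumptions: the step of $10^{-3}$, the tolerance of $10^{-12}$ that guards against round-off where $|R| \approx 1$, and the classical fourth-order tableau used for illustration. The function names are hypothetical.

```python
import numpy as np

# Classical fourth-order tableau, used here only for illustration.
A_RK4 = [[0.0, 0.0, 0.0, 0.0],
         [0.5, 0.0, 0.0, 0.0],
         [0.0, 0.5, 0.0, 0.0],
         [0.0, 0.0, 1.0, 0.0]]
B_RK4 = [1 / 6, 1 / 3, 1 / 3, 1 / 6]


def stability_poly_coeffs(A, b):
    """Coefficients gamma_0..gamma_s of R(v), with gamma_k = b^T A^(k-1) e."""
    A, b = np.asarray(A, dtype=float), np.asarray(b, dtype=float)
    coeffs, w = [1.0], np.ones(b.size)
    for _ in range(b.size):
        coeffs.append(float(b @ w))
        w = A @ w
    return np.array(coeffs)


def R(v, coeffs):
    """Evaluate the stability polynomial (np.polyval wants highest degree first)."""
    return np.polyval(coeffs[::-1], v)


def real_interval(coeffs, step=1e-3, limit=50.0, tol=1e-12):
    """Left endpoint alpha of the real stability interval (alpha, 0)."""
    k = 0
    while (k + 1) * step <= limit and abs(R(-(k + 1) * step, coeffs)) <= 1 + tol:
        k += 1
    return -k * step


def imag_interval(coeffs, step=1e-3, limit=50.0, tol=1e-12):
    """Right endpoint beta of the imaginary stability interval (0, beta)."""
    k = 0
    while (k + 1) * step <= limit and abs(R(1j * (k + 1) * step, coeffs)) <= 1 + tol:
        k += 1
    return k * step


def stability_region_mask(coeffs, xs, ys):
    """Boolean grid marking the points x + iy where |R| <= 1."""
    X, Y = np.meshgrid(xs, ys)
    return np.abs(R(X + 1j * Y, coeffs)) <= 1.0
```

For the classical fourth-order tableau, this recovers the well-known bounds of approximately $-2.785$ on the real axis and $2\sqrt{2} \approx 2.828$ on the imaginary axis.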

Table 3. Properties of developed methods. Here, $\alpha$ and $\beta$ define the stability interval along the real axis, $(\alpha, 0)$, and along the imaginary axis, $(0, \beta)$, whereas the last column gives the coefficient of the principal term of the local truncation error, shown in (20).

Figure 1 provides a graphical representation of the stability intervals.


| Method | $\alpha$ (real axis) | $\beta$ (imaginary axis) | LTE coefficient |
|---|---|---|---|
| Original [10] | −3.986 | 0.001 | |
| NLS | −4.442 | 0.002 | |
| NLS in [0, 1] | −4.057 | 0.001 | |
| TB I | −5.926 | 0.001 | |
| TB Ib in [0, 1] | −3.912 | 0.000 | |
| TB II | −3.709 | 0.311 | |
| TB IIb in [0, 1] | −3.807 | 0.000 | |
| TB III | −4.180 | 0.001 | |

5.3. Error and Stability Characteristics and Interpretation

In Table 3, we note that the new methods show improved stability and local truncation error characteristics. For example, compared with the Original method, the new NLS method exhibits larger real and imaginary stability intervals (indicated by the larger absolute values of the corresponding interval bounds). It also has a smaller absolute coefficient for the principal term of the local truncation error. A similar improvement is evident when comparing the unbounded TB I with its bounded counterpart, TB Ib (developed under the condition $c_i \in [0, 1]$). The unbounded method exhibits a real stability interval approximately two units larger and a truncation error coefficient approximately half an order of magnitude smaller. Furthermore, TB II has a non-vanishing imaginary stability interval, in contrast to its bounded counterpart TB IIb, while retaining a similar real stability interval and error coefficient. We observe that, even though the methods were developed for optimal global error, they also exhibit good, albeit not optimal, local properties. Whether the local properties are optimal depends solely on whether they contribute to the minimisation of the global error.

6. Numerical Results

6.1. Overview of Compared Methods

We measure the efficiency of the new methods against existing methods from the literature. We refer to methods 2–8 as new methods, meaning Runge–Kutta methods constructed via PSO in this study. Methods 3, 5, and 7 have bounded nodes such that $c_i \in [0, 1]$ for all $i$. Methods 2, 4, 6, and 8 were optimised without this constraint, although method 8 ultimately satisfies it.

The compared methods are presented below:

1. Original: Method developed in [10] for the NLS equation (Table 2, row 1)
2. NLS: New method optimised by PSO for the NLS equation, without boundary constraints (Table 2, row 2)
3. NLS in [0, 1]: New method optimised for the NLS equation with bounded nodes (Table 2, row 3)
4. TB I: New method optimised for the Two-Body problem, unbounded (Table 2, row 4)
5. TB Ib: New method optimised for the Two-Body problem with the same eccentricity as TB I, with bounded nodes (Table 2, row 5)
6. TB II: New method optimised for the Two-Body problem with the eccentricity targeting the N-Body problem, unbounded (Table 2, row 6)
7. TB IIb: New method optimised for the Two-Body problem with the same eccentricity as TB II, targeting the N-Body problem, with bounded nodes (Table 2, row 7)
8. TB III: New method optimised for the Two-Body problem with high eccentricity, unbounded (Table 2, row 8)
9. Papageorgiou: The method of Papageorgiou et al., with phase-lag and amplification error orders of 10 and 11, respectively [37]
10. Kosti I: The method of Kosti et al. (I), with phase-lag and amplification error orders of 8 and 9, respectively [38]
11. Dormand: The classic method of Dormand et al. [2]
12. Kosti II: The method of Kosti et al. (II), with phase-lag and amplification error orders of 8 and 5, respectively [39]
13. Fehlberg: The classic method of Fehlberg et al. [2]
14. Triantafyllidis: The method of Triantafyllidis et al., with phase-lag and amplification error orders of 10 and 7, respectively [40]
15. Kovalnogov: The parametric method of Kovalnogov et al., optimised with differential evolution [19]
16. Jerbi: The parametric method of Jerbi et al., optimised with differential evolution [20]

6.2. Performance on the NLS Equation

Table 4 presents the maximum global solution error and the maximum relative mass error of the selected methods when solving the NLS Equation (10) over the test interval for six different step lengths. We exclude methods developed specifically for the Two-Body and N-Body problems. The table indicates that the PSO-optimised NLS method outperforms all other methods, including the Original method developed specifically for the NLS equation, for both small and large step lengths. At the largest step length, all other methods either diverged or produced a maximum global error exceeding one, indicating instability. In contrast, the NLS method remained stable and accurate.

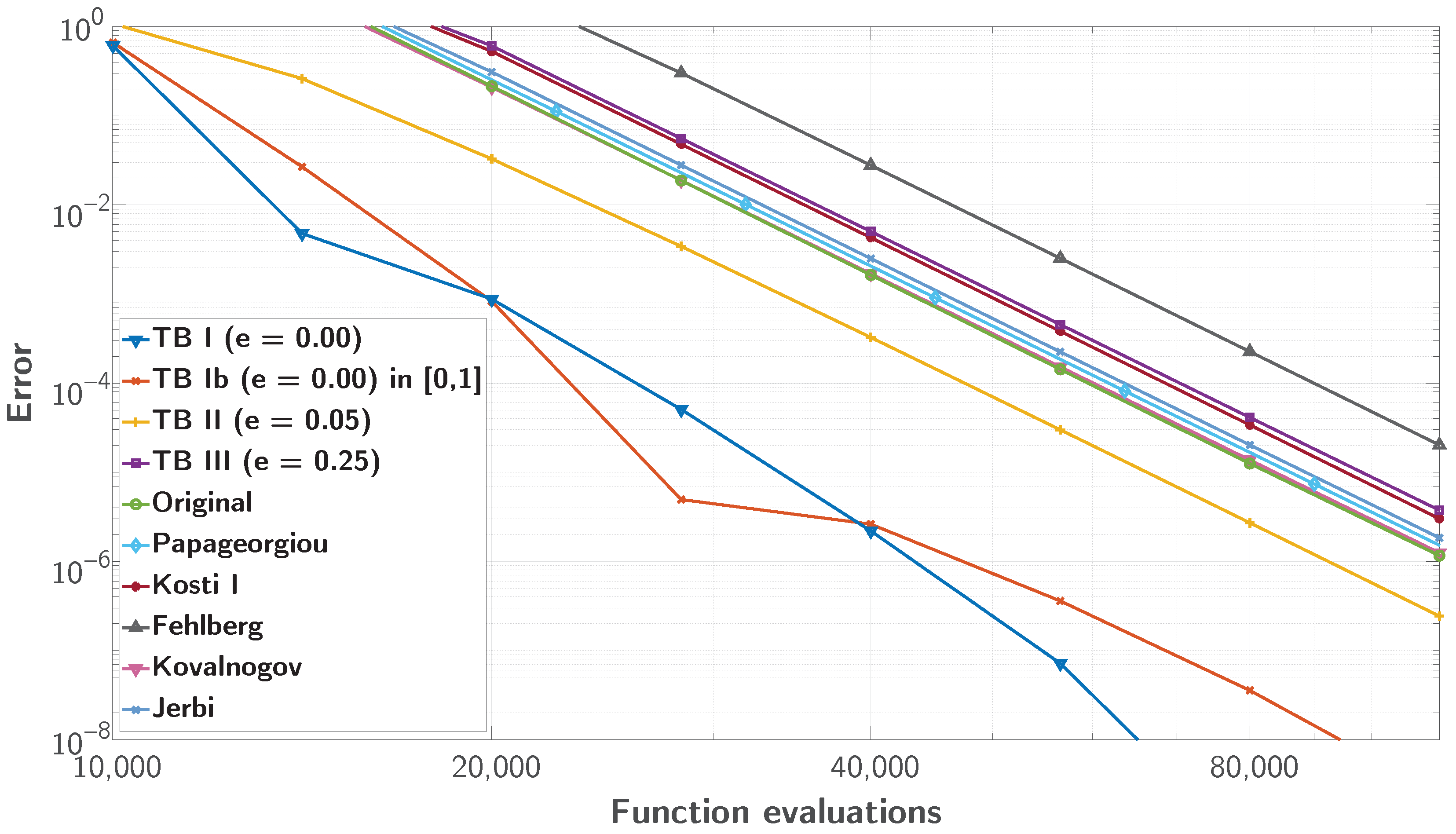

As shown in Table 4, the NLS method performs best overall. Figure 2 illustrates this by plotting the maximum global solution error against the number of function evaluations for the best seven methods solving the NLS Equation (10), using the setup described in Section 3.1. Both newly constructed methods, NLS and NLS in [0, 1], outperform the Original method across all tested step lengths. The unbounded NLS method also exhibits superior stability, achieving better accuracy at larger step lengths and faster convergence in that regime than the bounded variant.

6.3. Performance on the Two-Body Problem

Although the Two-Body problem was used for training before testing on the

N-Body problem, we also evaluate the methods on the former problem over a longer integration interval, as described in

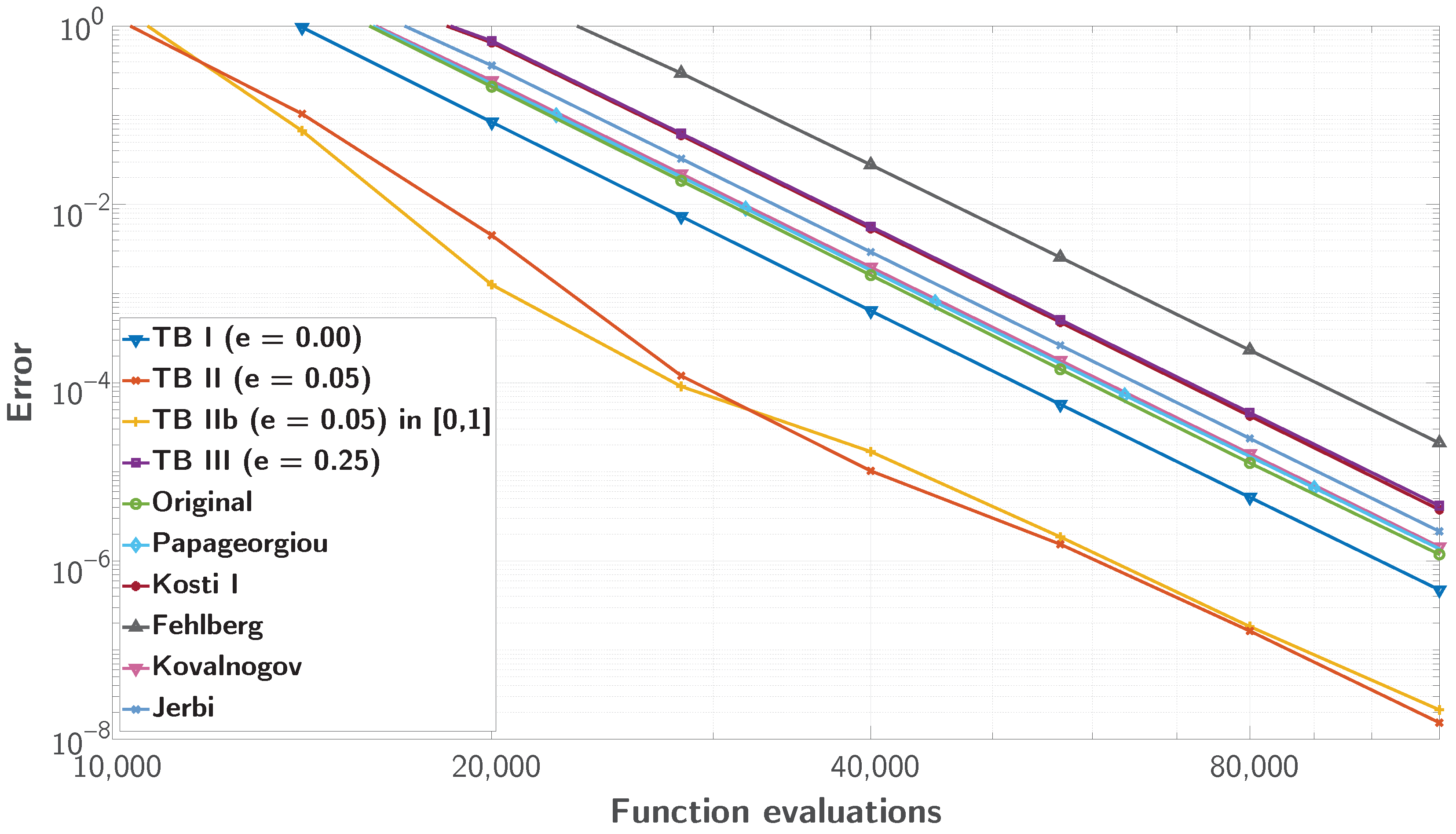

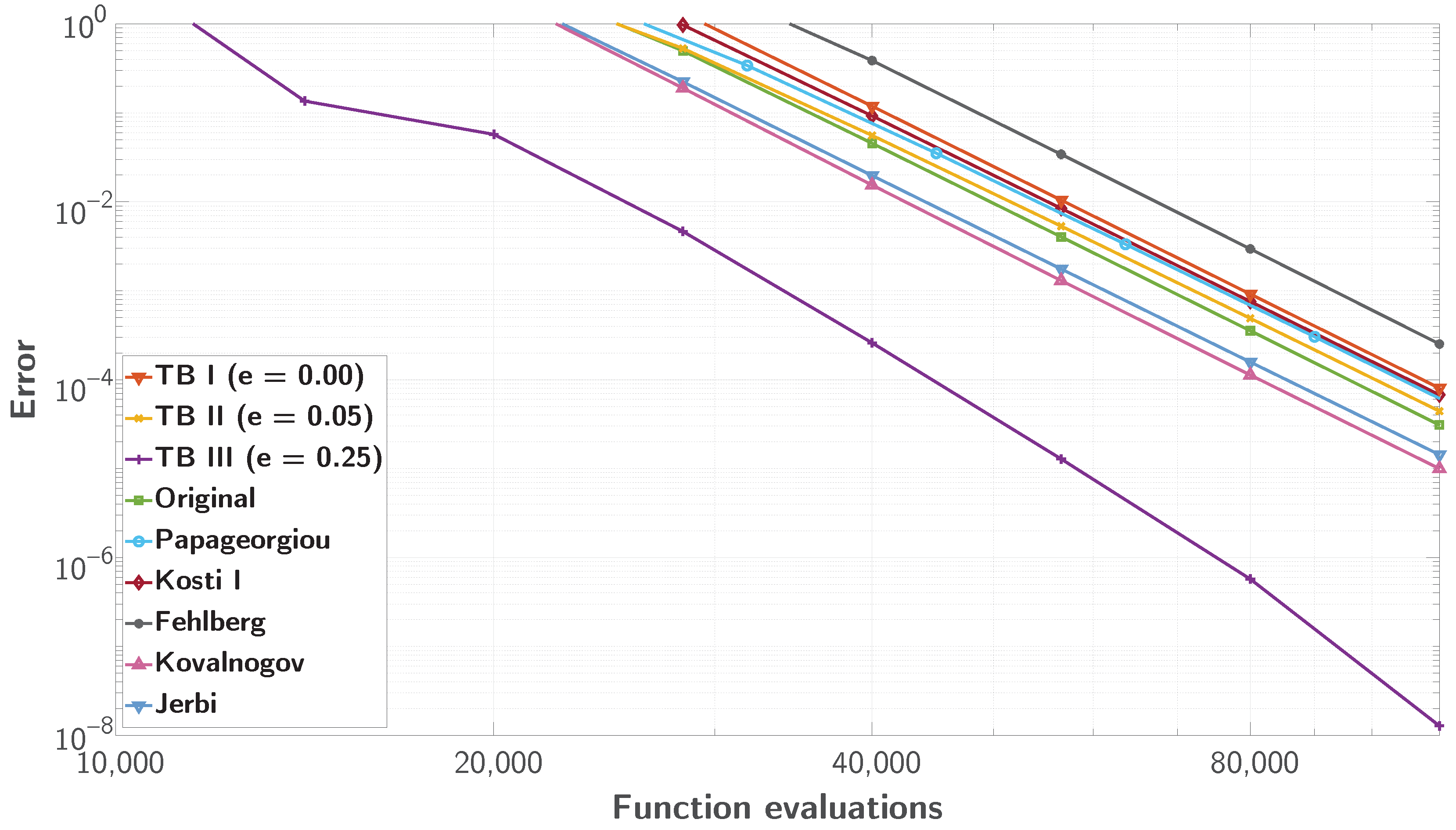

Section 3.2.

Figure 3, Figure 4 and Figure 5 show the efficiency results for the Two-Body problem (17) for eccentricities

,

, and

, respectively. In each case, we present the three best TB methods, i.e., those trained without the constraint

, along with the corresponding bounded variant trained for the same eccentricity, where available. For example, Figure 5 includes no bounded variant, because the coefficients of the best method for

, TB III, already satisfy the constraint, as shown in the last row of Table 2.

The plots show that the most efficient methods achieve lower global error with fewer function evaluations across the tested values of e. For smaller e, the orbit is closer to circular and the problem is less demanding, so several methods maintain accuracy at relatively low computational cost. As e increases and the solution becomes more complex, the methods trained specifically for the corresponding eccentricity require fewer function evaluations than the other methods to reach a given accuracy and are therefore more efficient. Method efficiency thus depends on the problem regime defined by e and on whether the method was trained for that regime. Overall, the methods trained on the corresponding eccentricity outperform all others, including those trained for different eccentricities, by up to three orders of magnitude in global error.
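The dependence on e can be made concrete with the normalised Two-Body problem that is standard in the RK literature. The sketch below assumes, without confirming it from this section, that (17) takes this form: q'' = -q/|q|^3 with q(0) = (1 - e, 0) and q'(0) = (0, sqrt((1 + e)/(1 - e))), an orbit of period 2π whose exact solution follows from Kepler's equation E - e sin E = t. Classical RK4 is a stand-in for the TB methods, and all function names are hypothetical. At a fixed step length, the error grows with e because the motion speeds up sharply near pericentre.

```python
import math


def kepler_exact(t, e):
    """Exact (q1, q2, q1', q2') of the assumed normalised Two-Body problem.

    Kepler's equation E - e*sin(E) = t is solved by bisection: the left-hand
    side is strictly increasing in E and its root lies in [t - e, t + e].
    """
    lo, hi = t - e, t + e
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if mid - e * math.sin(mid) < t:
            lo = mid
        else:
            hi = mid
    E = 0.5 * (lo + hi)
    d = 1.0 - e * math.cos(E)
    s = math.sqrt(1.0 - e * e)
    return [math.cos(E) - e, s * math.sin(E), -math.sin(E) / d, s * math.cos(E) / d]


def two_body(t, y):
    """First-order form of q'' = -q / |q|^3 with y = (q1, q2, q1', q2')."""
    q1, q2, p1, p2 = y
    r3 = (q1 * q1 + q2 * q2) ** 1.5
    return [p1, p2, -q1 / r3, -q2 / r3]


def rk4_step(f, t, y, h):
    """One step of the classical fourth-order RK method (stand-in integrator)."""
    k1 = f(t, y)
    k2 = f(t + h / 2, [a + h / 2 * b for a, b in zip(y, k1)])
    k3 = f(t + h / 2, [a + h / 2 * b for a, b in zip(y, k2)])
    k4 = f(t + h, [a + h * b for a, b in zip(y, k3)])
    return [a + h / 6 * (b1 + 2 * b2 + 2 * b3 + b4)
            for a, b1, b2, b3, b4 in zip(y, k1, k2, k3, k4)]


def max_global_error(e, steps_per_period, periods=2):
    """Maximum componentwise global error over the given number of periods."""
    h = 2 * math.pi / steps_per_period
    t, y, err = 0.0, kepler_exact(0.0, e), 0.0
    for n in range(steps_per_period * periods):
        y = rk4_step(two_body, t, y, h)
        t = (n + 1) * h
        err = max(err, max(abs(a - b) for a, b in zip(y, kepler_exact(t, e))))
    return err


for e in (0.0, 0.5, 0.9):
    print(f"e = {e}: max global error {max_global_error(e, 1000):.2e}")
```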

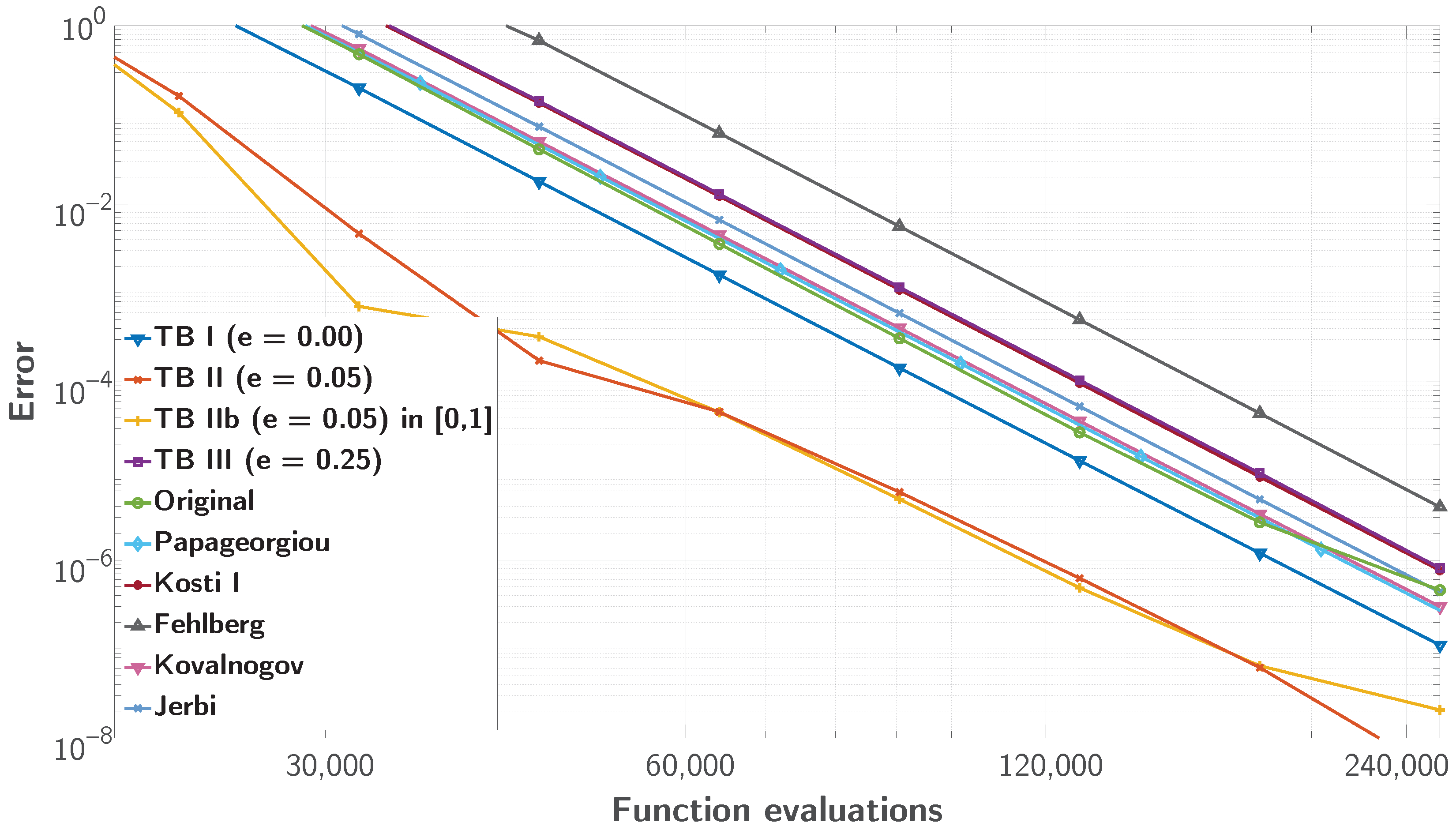

6.4. Performance on the N-Body Problem

To assess generalisability to more complex systems, we test the methods trained for the Two-Body problem on the N-Body problem.

Table 5 presents the maximum global error of each method applied to the N-Body problem (19) for eight step lengths forming a geometric sequence. We include all methods except the two developed for the NLS equation. As shown in Table 1 (Section 3.3), the dominant eccentricity of the bodies with the highest frequencies, which are the main contributors to the maximum global error [34], is approximately

. Indeed, the best performance on the five outer planet problem is achieved by the method whose coefficients were trained on the simpler Two-Body problem with

. Methods whose coefficients were trained on the Two-Body problem with orbital eccentricities

or

yielded much poorer results.
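For reference, the right-hand side of a Newtonian N-Body problem such as the five outer planet problem can be sketched as below. We assume the usual pairwise gravitational form q_i'' = G Σ_{j≠i} m_j (q_j - q_i)/|q_j - q_i|^3 and treat the gravitational constant, masses and positions as inputs; the function name is hypothetical, and the actual data behind (19) are not reproduced here.

```python
import math


def nbody_accelerations(positions, masses, G):
    """Newtonian accelerations of N point masses in 3D.

    positions: list of [x, y, z]; masses: list of floats; G: gravitational constant.
    Each pair is visited once and Newton's third law supplies the reaction term.
    """
    n = len(masses)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            d = [positions[j][k] - positions[i][k] for k in range(3)]
            r3 = math.sqrt(d[0] ** 2 + d[1] ** 2 + d[2] ** 2) ** 3
            for k in range(3):
                acc[i][k] += G * masses[j] * d[k] / r3  # pull of body j on body i
                acc[j][k] -= G * masses[i] * d[k] / r3  # equal and opposite pull
    return acc


# Consistency check on toy data: total momentum is conserved, so sum_i m_i a_i ~ 0.
pos = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 2.0, 0.5]]
m = [1.0, 1e-3, 3e-4]
a = nbody_accelerations(pos, m, G=1.0)
print([sum(m[i] * a[i][k] for i in range(3)) for k in range(3)])
```

Visiting each pair once halves the number of distance computations, which matters because the right-hand side dominates the cost of every RK stage.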

A desirable property of a numerical method is that it maintains its theoretical order of accuracy over a wide range of step lengths, excluding the unstable region (very large step lengths) and the region where round-off error becomes significant (very small step lengths).

We estimate the experimental order of accuracy

using

Table 6 shows that all new methods exhibit consistent convergence behaviour and retain their order on average. Some fluctuations exist, but a drop in the estimated order is typically followed by an increase, so the achieved accuracy often exceeds that predicted by the average order, as also confirmed in Figure 6.
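A common way to obtain such estimates, assumed here for illustration rather than taken from the definition used for Table 6, is the pairwise formula p_k = log(E_k/E_{k+1}) / log(h_k/h_{k+1}) for consecutive step lengths, together with the least-squares slope of log E against log h, which corresponds to the slope read off a log-log plot. The error data below are synthetic and the function names are hypothetical.

```python
import math


def pairwise_orders(hs, errs):
    """Experimental order between consecutive step lengths:
    p_k = log(E_k / E_{k+1}) / log(h_k / h_{k+1})."""
    return [
        math.log(errs[k] / errs[k + 1]) / math.log(hs[k] / hs[k + 1])
        for k in range(len(hs) - 1)
    ]


def fitted_order(hs, errs):
    """Least-squares slope of log(E) against log(h)."""
    xs = [math.log(h) for h in hs]
    ys = [math.log(e) for e in errs]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


# Synthetic data: eight step lengths in a geometric sequence (ratio 1/2) and
# errors E = 3 h^8 with an alternating perturbation, so the pairwise orders
# alternate below and above 8 while the fitted slope stays close to 8.
hs = [0.5 * 0.5 ** k for k in range(8)]
errs = [3.0 * h ** 8 * (1.5 if k % 2 else 1.0) for k, h in enumerate(hs)]
print([round(p, 2) for p in pairwise_orders(hs, errs)])
print(round(fitted_order(hs, errs), 2))
```

For a geometric sequence, the mean of the pairwise orders telescopes to log(E_first/E_last) / log(h_first/h_last), so it depends only on the end points, whereas the least-squares slope uses every point.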

As seen in Figure 6, the slope of each curve, which represents the experimental order of accuracy of the corresponding method, agrees with the results presented in Table 6 for the N-Body problem. Notably, the behaviour of all methods for the N-Body problem (19) closely mirrors that of the Two-Body problem for eccentricity

. Even though TB II and TB II in

were trained on a much simpler problem over a significantly shorter integration interval, they retain their performance characteristics when applied to the more complex system. It is noteworthy that the training TB problem (17) spans about 16 full oscillations, whereas in the N-Body problem (19) the fastest revolving body, Jupiter, completes about 230 revolutions.

7. Discussion

Several factors contributed to the improved performance of the evolutionarily optimised methods compared with methods optimised by classical techniques or designed to possess specific local properties. First, the objective function under minimisation measures the efficiency of the new method relative to an established benchmark method, embedding the performance comparison in the optimisation itself. Second, the objective function covers efficiency across a range of accuracies, including the low accuracies obtained at large step lengths, which improves the stability characteristics. Third, to reduce optimisation time and improve results, training was performed on simpler versions of the problems, e.g., shorter integration intervals or alternative but related problems. Moreover, other integration parameters, such as the step lengths, were tuned individually for each problem to maximise efficiency. This setup also allowed experimentation with relaxed conditions, such as removing the typical bounds on RK coefficients.
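To illustrate what "efficiency relative to a benchmark across a range of accuracies" can mean in practice, the sketch below shows one hypothetical objective. It is not the objective function defined in this study. It averages, over matched runs, the difference in log10 of the maximum global error between a candidate and a benchmark method (negative values mean the candidate gains digits of accuracy), and it assigns a fixed penalty to diverged runs so that large step lengths, where stability matters, still influence the result. The matching of runs, the penalty value and the function name are all assumptions.

```python
import math


def relative_efficiency_objective(cand_errors, bench_errors, penalty=10.0):
    """Hypothetical objective: mean log10 error ratio, candidate vs. benchmark.

    cand_errors[k] and bench_errors[k] are maximum global errors obtained with
    the same number of function evaluations (assumed matching). Lower is better.
    """
    terms = []
    for ec, eb in zip(cand_errors, bench_errors):
        if not math.isfinite(ec) or ec > 1.0:
            # Diverged or meaningless candidate run: fixed penalty.
            terms.append(penalty)
            continue
        # Cap a failed benchmark run at error 1 so the ratio stays finite.
        eb = min(eb, 1.0) if math.isfinite(eb) else 1.0
        # Floor both errors to avoid log10(0) for (near-)exact results.
        terms.append(math.log10(max(ec, 1e-300)) - math.log10(max(eb, 1e-300)))
    return sum(terms) / len(terms)


# One extra digit at both step lengths gives -1; a diverged first run is penalised.
print(relative_efficiency_objective([1e-3, 1e-5], [1e-2, 1e-4]))
print(relative_efficiency_objective([float("inf"), 1e-5], [1e-2, 1e-4]))
```

Because the objective only needs error values, it is derivative-free and can be minimised directly by a population-based optimiser such as PSO.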

The evolutionary optimisation yielded methods that outperform their classically optimised counterparts in both local truncation error coefficients and stability intervals. The newly developed methods also outperformed existing methods from the literature tailored to periodic or oscillatory problems. Notably, this was achieved without explicitly enforcing local properties such as phase lag or amplification error.

A particularly striking result is the training efficiency: the Two-Body problem required significantly less computational time and a shorter integration interval than the more complex N-Body problem. Comparing function evaluations and CPU time across different accuracies, we found that integrating the training problem required approximately 70 times fewer function evaluations, and less CPU time, than the testing problem. Moreover, for the Two-Body and N-Body problems, the evolutionary algorithm successfully optimised methods for high eccentricities, where solutions deviate significantly from sinusoidal form, thereby overcoming the limitations of classical methodologies that rely on local properties such as phase-lag minimisation, amplification-error control, and trigonometric fitting.

With the selected PSO parameters, the evolutionary optimisation process is robust enough to require only minimal adjustment when applied to a different problem, apart from possible changes to the step-length range.

The PSO-based approach nevertheless has some limitations. The computational cost is dominated by evaluating the global error through time integration, and this cost may increase substantially when scaling to higher-order methods with more free parameters. Additionally, PSO can be prone to premature convergence in complex or multimodal search landscapes, potentially resulting in suboptimal solutions. Hybrid optimisation strategies or adaptive parameter tuning could address these issues in future work. Despite these limitations, PSO remains an effective and practical choice for the current problem, balancing exploration and exploitation while allowing candidate solutions to be evaluated in parallel.
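The exploration/exploitation balance and the parallel evaluation mentioned above can be seen in a minimal global-best PSO. The inertia weight w = 0.7 and acceleration coefficients c1 = c2 = 1.5 below are common textbook values, assumed for illustration; they are not the PSO parameters selected in this study, and the function name is hypothetical. Positions are not clipped, mirroring the unbounded setting; clipping would be added to enforce coefficient bounds.

```python
import random


def pso_minimize(objective, dim, n_particles=30, iters=200, w=0.7, c1=1.5, c2=1.5,
                 init_range=(-1.0, 1.0), seed=0):
    """Minimal global-best particle swarm optimiser (illustrative parameters)."""
    rng = random.Random(seed)
    lo, hi = init_range
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    # The evaluations within one iteration are independent, so this map could be
    # replaced by a parallel map (e.g. over a process pool).
    fs = list(map(objective, xs))
    pbest = [x[:] for x in xs]
    pbest_f = fs[:]
    g = min(range(n_particles), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia keeps exploring; the two attraction terms exploit the
                # particle's own best and the swarm's best positions.
                vs[i][d] = (w * vs[i][d]
                            + c1 * r1 * (pbest[i][d] - xs[i][d])
                            + c2 * r2 * (gbest[d] - xs[i][d]))
                xs[i][d] += vs[i][d]
        fs = list(map(objective, xs))
        for i in range(n_particles):
            if fs[i] < pbest_f[i]:
                pbest[i], pbest_f[i] = xs[i][:], fs[i]
                if fs[i] < gbest_f:
                    gbest, gbest_f = xs[i][:], fs[i]
    return gbest, gbest_f


# Toy usage: a shifted quadratic stands in for the expensive global-error objective.
best_x, best_f = pso_minimize(lambda x: sum((xi - 0.3) ** 2 for xi in x), dim=3)
print(best_x, best_f)
```

In the setting described here, each objective evaluation would involve full time integrations, which is why the per-iteration map is the natural place to parallelise.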

Ideally, a parametric RK method would be fully optimised without any predetermined coefficients: after the system of order conditions is solved, all coefficients would depend on a set of free parameters acting as degrees of freedom. However, this approach remains infeasible with current hardware because of its high memory and computational demands. Alternative strategies, such as applying simplifying assumptions, make the process more tractable but introduce additional constraints that limit the extent of optimisation. In practice, therefore, predetermined coefficients or simplifying assumptions cannot yet be eliminated from the development of parametric RK methods. Nevertheless, reducing their use remains a future goal, as doing so would better highlight the potential of evolutionary optimisation.
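A small textbook case shows what it means for all coefficients to depend on free parameters. For a two-stage explicit RK method with c_1 = 0 and c_2 = a_{21}, the order-one and order-two conditions can be solved in closed form, leaving c_2 as the single degree of freedom. This is a standard illustration, not one of the methods of this study:

$$
b_1 + b_2 = 1, \qquad b_2 c_2 = \tfrac{1}{2}
\quad\Longrightarrow\quad
b_2 = \frac{1}{2c_2}, \qquad b_1 = 1 - \frac{1}{2c_2}, \qquad a_{21} = c_2, \qquad c_2 \neq 0.
$$

Choosing c_2 = 1/2 recovers the explicit midpoint method and c_2 = 1 recovers Heun's method. For the higher orders and stage counts considered here, the number of order conditions and their nonlinearity grow rapidly, which is why such a full parametrisation becomes so costly.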

Beyond the problems studied here, the methodology could be applied to a wider range of differential problems. Because it operates directly in the parameter space of Runge–Kutta coefficients, different problem classes could be addressed by selecting appropriate training problems and parameter constraints. Although we have not yet tested these extensions, the general framework is flexible enough for them to be explored in future work.

8. Conclusions

In this article, we proposed a new optimisation strategy for selecting RK coefficients based on evolutionary computation techniques. This strategy optimises the global behaviour over a training problem, rather than the local properties targeted by classical approaches. In some cases, the training problem requires nearly two orders of magnitude less computational effort than the testing problem. We also introduced a new objective function designed to improve the method's performance relative to a benchmark. The strategy covers a wide range of accuracies systematically and efficiently, overcoming challenges such as local minima in the space of free coefficients and the lack of derivative information for the objective function. We removed typical coefficient bounds that govern RK methods, such as , and achieved excellent results in local stability, error characteristics, and global performance. Finally, the methodology uncovers structural patterns in the coefficient values when optimising for high eccentricities, where solutions deviate from sinusoidal behaviour, offering insights that can guide coefficient predetermination in classical method design.

The methodology is general and can, in principle, be applied to other types of problems. Here, we used the NLS equation and the N-Body problem (the latter over a long integration interval), which are important problems in quantum physics and astronomy, respectively. Future work will focus on applying the methodology to additional problem classes, incorporating a broader set of representative training problems to further enhance the generalisability of the resulting methods.