1. Introduction

Deep learning has shown remarkable promise in computer-aided diagnosis (CAD) for medical image analysis (MIA), powering applications such as lesion classification, segmentation, and disease screening [1]. However, the real-world deployment of such systems remains limited due to two core challenges: (1) the high cost and expertise required for collecting large-scale annotated datasets, and (2) substantial domain shifts introduced by variations in acquisition devices, protocols, and patient populations across clinical sites [2,3].

Semi-supervised learning (SSL) methods [4,5] attempt to mitigate the annotation bottleneck by leveraging unlabeled data alongside a small labeled subset, often using consistency regularization or pseudo-labeling. Yet most SSL approaches assume that labeled and unlabeled data come from a shared distribution, an assumption that often breaks in real-world medical settings. For instance, in the ISIC2019 dataset, images come from multiple hospitals and devices, leading to differences in lighting, skin tone, and lesion framing, all of which can degrade model performance when unlabeled data are drawn from a different distribution [6]. Moreover, ISIC2019 is particularly well-suited for domain generalization tasks due to its scale (25,000+ images) and its diverse imaging sources. This inherent heterogeneity in acquisition protocols, devices, and demographics provides a natural testbed for evaluating cross-domain robustness under limited supervision. In this work, we treat medical imaging as a multi-domain scenario due to the inherent diversity in image acquisition across clinical environments. A “domain” in this context refers to a distinct distribution of image features shaped by scanner type, imaging protocol, patient demographics, and institution-specific practices [7,8]. For instance, dermoscopic images from the ISIC2019 dataset vary significantly in resolution, color calibration, and lighting due to differences across hospitals and acquisition devices. These domain-specific variations introduce substantial shifts in data distributions, making medical image classification a naturally multi-domain problem.

In contrast, domain generalization (DG) techniques [7,9,10] seek to learn domain-invariant features that generalize to unseen target domains. However, DG often requires fully labeled source domains, which is infeasible in medical settings where expert labels are limited. This limitation has sparked growing interest in semi-supervised domain generalization (SSDG) [11,12,13,14], which jointly addresses data scarcity and cross-domain robustness.

In this work, we propose a unified SSDG framework tailored for medical image classification. Our method integrates ideas from uncertainty-aware pseudo-labeling, domain-adaptive classification, and style-driven feature regularization. Unlike previous methods that rely on Monte Carlo dropout for uncertainty estimation [11] or use fixed shared heads across domains [15], our model introduces a novel combination of style-based augmentation and attention-guided multi-head classification. This allows the model to learn diverse yet consistent feature representations across domains under limited supervision [8,16].

Existing SSDG methods often face two practical limitations that hinder their scalability and effectiveness in medical imaging. First, uncertainty-aware pseudo-labeling techniques such as Monte Carlo dropout [11] introduce significant computational overhead, which is impractical in clinical settings where inference time and resource constraints are critical. Second, frameworks like MultiMatch [13] adopt shared classifier heads across domains, which limits adaptability and reduces performance when imaging styles vary significantly, a common issue in multi-institutional or device-heterogeneous medical datasets. These challenges motivate a modular, efficient, and style-adaptive approach to SSDG that better suits the demands of medical image analysis.

Our contributions are as follows:

We propose a multi-style ensemble pseudo-labeling strategy, where each unlabeled sample is augmented with multiple style-transferred versions (medically relevant and artistically irrelevant). Predictions are aggregated and filtered based on entropy thresholds, improving pseudo-label reliability without Monte Carlo dropout.

We incorporate feature-based conformity (FBC) and semantic alignment (SA) losses to promote intra-class compactness and inter-domain consistency in the feature space. Only samples close to class prototypes are retained, reducing pseudo-label noise under shift.

We design a multi-task domain-specific classification scheme with separate heads for each stylized domain and an attention-based fusion module. This allows the model to adaptively weight predictions from different domains, extending MultiMatch-style training to medical style augmentations.

We introduce a dual-level neural-style augmentation pipeline that applies both clinically relevant transformations (e.g., MRI, dermoscopy artifacts) and stylistic textures (e.g., artistic styles) to simulate domain variability while preserving medical semantics.

Experiments on the ISIC2019 skin lesion classification benchmark demonstrate that our framework achieves superior performance under low-label conditions (5–10%), outperforming strong baselines such as FixMatch [4], StyleMatch [14], and UPLM [11]. The proposed architecture achieves improved accuracy and robustness under distribution shifts, as confirmed by both quantitative metrics and t-SNE-based visualizations of the learned feature space.

2. Related Work

2.1. Semi-Supervised Learning in Medical Imaging

Semi-supervised learning (SSL) has gained popularity in medical imaging due to its ability to leverage unlabeled data, reducing dependence on costly expert annotations. Classic SSL methods include entropy minimization [17], temporal ensembling [18], pseudo-labeling [19], and consistency-based approaches like MixMatch [20], FixMatch [4], and Mean Teacher [5]. However, most of these approaches assume that labeled and unlabeled samples come from the same domain, which is rarely the case in clinical practice. Recent medical imaging studies have extended SSL to domain-adaptive or few-shot settings [21,22], yet generalization under domain shift remains a key limitation. A detailed comparison of existing SSDG methods is described in Table 1.

2.2. Domain Generalization and Style-Based Augmentation

Domain generalization (DG) approaches aim to train models on multiple source domains that generalize well to unseen domains [7]. DG methods in computer vision include feature alignment [23,24], data augmentation techniques like MixStyle [9] and RandConv [25], and meta-learning schemes [26]. In medical imaging, style randomization has proven effective [8,16], as styles encode domain-specific biases. Yet few works combine clinically grounded augmentations with stylistic diversity in a structured manner.

2.3. Semi-Supervised Domain Generalization (SSDG)

SSDG has recently emerged as a promising research direction combining the goals of SSL and DG. Notable works include UPLM [11], which introduces Monte Carlo dropout-based uncertainty filtering, and MultiMatch [13], which employs multi-task learning across domains with shared classifier heads. StyleMatch [14] applies stochastic style perturbations to enhance generalization but lacks uncertainty-aware filtering and modular classification. Few-label generalization methods [12] tackle low-label regimes but do not address domain-specific variability explicitly. Our work builds upon these by combining entropy-guided pseudo-label filtering, style-based augmentation, feature-space regularization, and domain-aware classification in a unified architecture.

2.4. Classifier Design and Domain-Specific Heads

Classifier modularity is critical when domains exhibit distinct feature distributions. Prior work often assumed a shared classifier across all domains [10,14], which can lead to underfitting domain-specific signals. MultiMatch [13] addresses this with task-specific heads but lacks adaptive weighting of their predictions. Our approach introduces domain-specific heads with attention-weighted fusion, allowing for a dynamic integration of stylized features while preserving generalizability.

2.5. Feature-Space Regularization and Pseudo-Label Filtering

Regularizing the feature space using class prototypes and supervised contrastive learning has proven effective in semi-supervised and cross-domain settings [27,28]. We adopt a similar strategy by combining feature-based conformity and semantic alignment to promote intra-class compactness and cross-domain consistency. Unlike UPLM [11], which uses heavy sampling-based uncertainty estimation, we implement lightweight entropy-based filtering, making our framework more scalable and training-efficient.

Recent advances have also explored fine-grained uncertainty modeling to enhance pseudo-label reliability in medical imaging. For example, Zhou et al. [29] propose a bidirectional uncertainty-aware region learning strategy that focuses on high-uncertainty regions for labeled data and low-uncertainty regions for unlabeled data, mitigating noise in pseudo-labels. Similarly, the Dual Uncertainty-Guided Multi-Model (DUMM) framework [30] leverages both sample-level and pixel-level uncertainty to produce high-fidelity pseudo-labels, yielding notable improvements on the ACDC2017 and ISIC2018 segmentation benchmarks. These works complement our entropy-based approach by demonstrating the benefit of combining uncertainty guidance with multi-source feature regularization.

3. Materials and Methods

3.1. Overview of the Proposed Method

We propose a unified framework for semi-supervised domain generalization (SSDG) tailored to medical image analysis. The method integrates uncertainty-aware pseudo-labeling, feature-space regularization, domain-adaptive classification, and style-based augmentation to enhance generalization to unseen domains under limited label availability. Inspired by recent works such as UPLM [11], Few-Labels [12], and MultiMatch [13], our framework incorporates the following:

Multi-style ensemble pseudo-labeling with entropy-based filtering;

FBC and SA losses;

Supervised contrastive learning across labeled and pseudo-labeled samples to align features across domains;

Multi-task classification via domain-specific heads and attention-based fusion;

Dual-level neural-style transfer to simulate realistic domain shifts.

In addition, a supervised contrastive loss encourages the alignment of semantically similar samples across domains. In parallel, domain-specific heads are adaptively fused using an attention MLP, whose learned weights determine each head's contribution to the final prediction. The overall training loss combines the classification and regularization terms. An overview of our framework is illustrated in Figure 1, where color-coded modules and dashed vs. solid arrows indicate function-specific flows, loss paths, and attention-based fusion.

3.2. Dataset Description

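The ISIC2019 dataset includes 25,331 dermoscopic images, each annotated with one of eight diagnostic categories: melanoma (MEL), melanocytic nevus (NV), basal cell carcinoma (BCC), actinic keratosis (AK), benign keratosis (BKL), dermatofibroma (DF), vascular lesion (VASC), and squamous cell carcinoma (SCC).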

Table 2 shows the distribution of the ISIC2019 dataset.

The dataset exhibits substantial class imbalance, with the largest class (NV) containing over fifty times more samples than the smallest (DF). Such imbalance can bias the model toward over-represented classes and degrade performance on rare but clinically significant categories. This motivated our integration of feature-based conformity and entropy-guided pseudo-label filtering, which help stabilize training and improve generalization across all classes.

Access requires user registration and acceptance of the data use agreement on the official ISIC Archive platform.

For preprocessing, all images were resized to 224 × 224 pixels and normalized using ImageNet (ILSVRC-2012) statistics. We used the official training/test split provided by the challenge. Metadata was used to simulate background domain shifts for validation (e.g., via skin tone, lighting conditions). Only RGB images were used for training and inference.

3.3. Neural-Style Transfer for Domain Variability

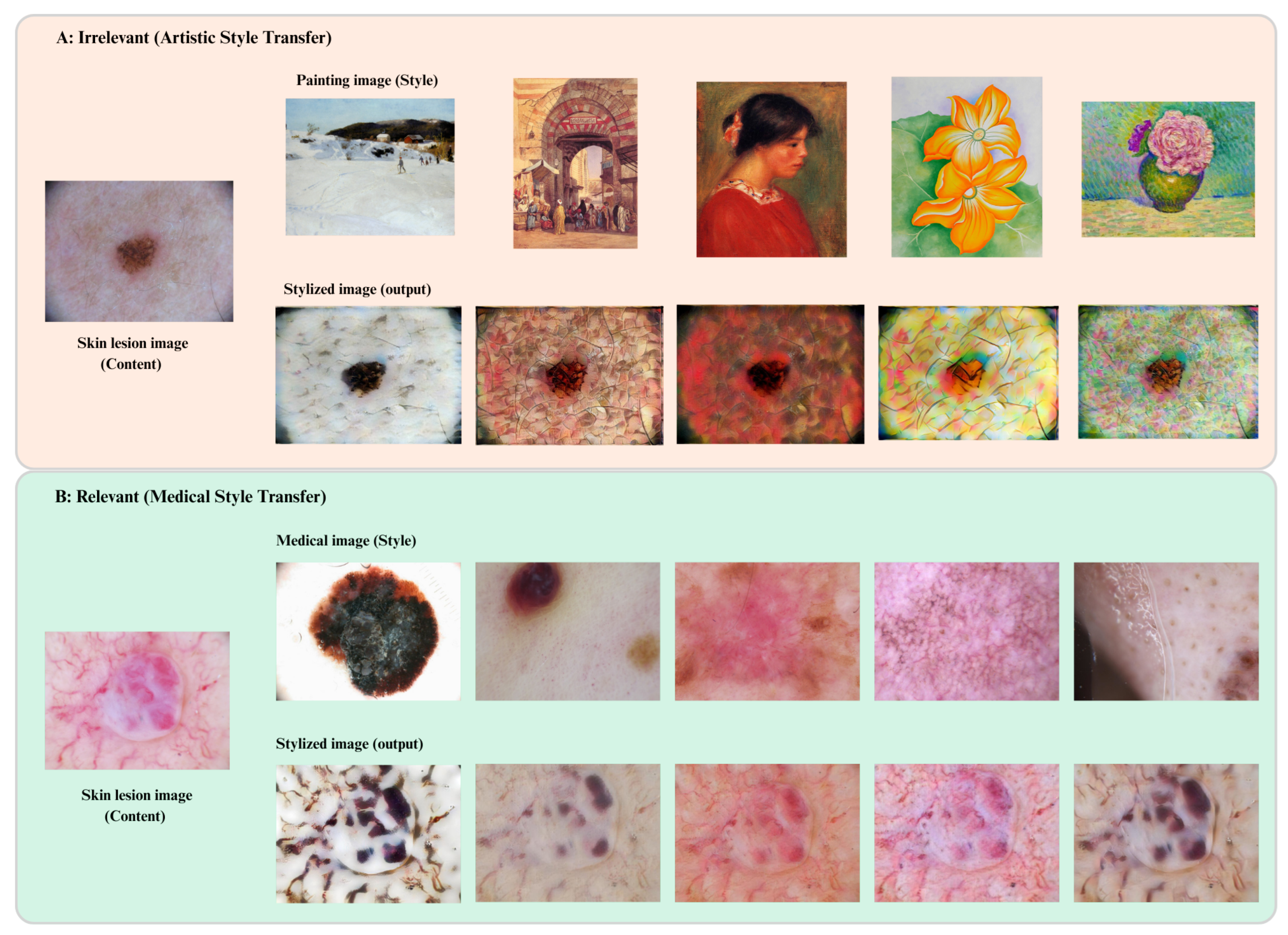

As illustrated in Figure 2, our multi-style transfer framework is designed to enhance domain generalization. To simulate domain shifts common in clinical imaging (e.g., scanner type, acquisition protocol), we apply two levels of neural-style augmentation:

Medical-style augmentation: We apply Adaptive Instance Normalization (AdaIN) [32] to mimic clinical texture artifacts (e.g., MRI or dermoscopy-specific styles).

Artistic-style augmentation: Styles are sampled from the WikiArt dataset to introduce high-frequency texture variations without affecting lesion semantics, following the MixStyle strategy [9].

These augmentations expose the model to both intra- and inter-domain variability, enhancing its robustness to domain shifts.
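As a rough illustration of the medical-style branch, the AdaIN operation [32] replaces the channel-wise mean and standard deviation of content features with those of a style input. The NumPy sketch below shows only this statistic-matching step on random stand-in feature maps; in a full style-transfer pipeline the features would come from a pretrained encoder and be decoded back into an image. The `alpha` blending parameter is an assumption borrowed from common AdaIN practice, not a setting reported in this work.

```python
import numpy as np


def adain(content: np.ndarray, style: np.ndarray,
          alpha: float = 1.0, eps: float = 1e-5) -> np.ndarray:
    """Adaptive Instance Normalization on (C, H, W) feature maps.

    Each channel of `content` is normalized to zero mean and unit std over its
    spatial positions, then rescaled with the per-channel std and shifted by the
    per-channel mean of `style`. `alpha` blends stylized and original features.
    """
    c_mean = content.mean(axis=(1, 2), keepdims=True)
    c_std = content.std(axis=(1, 2), keepdims=True) + eps
    s_mean = style.mean(axis=(1, 2), keepdims=True)
    s_std = style.std(axis=(1, 2), keepdims=True) + eps
    stylized = s_std * (content - c_mean) / c_std + s_mean
    return alpha * stylized + (1.0 - alpha) * content


rng = np.random.default_rng(0)
content = rng.normal(0.0, 1.0, size=(64, 28, 28))  # stand-in for encoder features of a lesion image
style = rng.normal(2.0, 0.5, size=(64, 28, 28))    # stand-in for encoder features of a style image
out = adain(content, style)
```

After the call, each channel of `out` carries the style features' mean and (up to `eps`) standard deviation, while its spatial pattern still comes from the content features.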

3.4. Multi-Style Ensemble Pseudo-Labeling

Given an unlabeled image $x_u$, we generate $M$ augmented views $\{\tilde{x}_u^{(m)}\}_{m=1}^{M}$ using both medical and artistic style transformations. Each view is passed through the model $f_\theta$, producing a class-probability vector $p^{(m)} = f_\theta(\tilde{x}_u^{(m)})$. We compute the entropy $H(p^{(m)}) = -\sum_{c} p^{(m)}_c \log p^{(m)}_c$ of each prediction and apply a batch-wise entropy threshold $\tau_H$ (90%) to discard low-confidence outputs. The predictions that pass the filter are then aggregated into the filtered pseudo-label for $x_u$.

This approach improves pseudo-label reliability under domain shifts without incurring the computational cost of Monte Carlo dropout [11].
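The filtering step can be sketched in NumPy as follows, under two stated assumptions: the batch-wise 90% threshold is interpreted as the 90th percentile of per-view entropies in the batch, and retained views are aggregated by averaging their softmax outputs. Neither detail is fixed by the text above, so the function names and choices here are illustrative only.

```python
import numpy as np


def entropy(probs: np.ndarray) -> np.ndarray:
    """Shannon entropy (in nats) along the last axis of a probability array."""
    return -np.sum(probs * np.log(probs + 1e-12), axis=-1)


def ensemble_pseudo_labels(view_probs: np.ndarray, percentile: float = 90.0):
    """Entropy-filtered ensemble pseudo-labels.

    view_probs: (N, M, K) softmax outputs for N unlabeled images,
                M style-transferred views each, and K classes.
    Returns:
        labels: (N,) argmax of the mean over retained views
        mask:   (N,) True if at least one view of the image was retained
        keep:   (N, M) True for views whose entropy passed the threshold
    """
    ent = entropy(view_probs)                        # (N, M) per-view entropy
    # ASSUMPTION: the batch-wise "90%" threshold is read as the 90th
    # percentile of all view entropies in the current batch.
    tau = np.percentile(ent, percentile)
    keep = ent <= tau                                # drop high-entropy views
    counts = keep.sum(axis=1)                        # retained views per image
    summed = (view_probs * keep[..., None]).sum(axis=1)
    mean_probs = summed / np.maximum(counts, 1)[:, None]
    return mean_probs.argmax(axis=1), counts > 0, keep


confident_0 = [0.85, 0.05, 0.05, 0.05]
confident_2 = [0.05, 0.05, 0.85, 0.05]
uniform = [0.25, 0.25, 0.25, 0.25]
# Image 0: five confident views; image 1: four confident views plus one uninformative view.
view_probs = np.array([[confident_0] * 5, [confident_2] * 4 + [uniform]])
labels, mask, keep = ensemble_pseudo_labels(view_probs)
```

In this toy batch the uniform view of the second image has the highest entropy and is dropped, so its pseudo-label is formed only from the four confident views. Images whose views are all rejected would simply be excluded from the unsupervised loss via `mask`.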

3.5. FBC and SA

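We regularize feature-space learning with two mechanisms. Feature-Based Conformity (FBC) retains only pseudo-labeled samples whose features lie close to their class prototype, discarding outliers whose pseudo-labels are likely to be noisy. Semantic Alignment (SA) encourages features of the same class to remain consistent across styles and domains. Together, these constraints ensure that representations of the same class remain close in the feature space across different styles and domains [12].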
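A minimal NumPy sketch of the prototype-proximity idea behind FBC is shown below. It assumes cosine distance to a class prototype (the re-normalized mean of normalized labeled features) as the proximity measure and uses a hypothetical default threshold of 0.7, since the selected value is not stated here. Under this distance reading, lower thresholds are stricter, which matches the sensitivity behavior described in Section 4.4. The returned compactness term is one possible intra-class loss, not the exact FBC or SA objective of this work.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), 1e-12)


def class_prototypes(feats, labels, num_classes):
    """Mean of L2-normalized features per class, re-normalized to unit length."""
    feats = l2_normalize(feats)
    protos = np.zeros((num_classes, feats.shape[1]))
    for c in range(num_classes):
        members = feats[labels == c]
        if len(members):
            protos[c] = members.mean(axis=0)
    return l2_normalize(protos)


def fbc_filter(feats, pseudo_labels, protos, threshold=0.7):
    """Keep samples whose cosine distance to their pseudo-class prototype is <= threshold.

    Returns the boolean keep-mask and a compactness term: the mean cosine
    distance of the retained samples to their prototypes.
    """
    cos_sim = np.sum(l2_normalize(feats) * protos[pseudo_labels], axis=1)
    dist = 1.0 - cos_sim
    keep = dist <= threshold
    compactness = float(dist[keep].mean()) if keep.any() else 0.0
    return keep, compactness


labeled_feats = np.array([[2.0, 0.0], [1.0, 0.0], [0.0, 3.0], [0.0, 1.0]])
labeled_y = np.array([0, 0, 1, 1])
protos = class_prototypes(labeled_feats, labeled_y, num_classes=2)

unlabeled_feats = np.array([[1.0, 0.1], [0.1, 1.0], [0.0, 1.0]])
pseudo = np.array([0, 1, 0])  # the last pseudo-label disagrees with the feature geometry
keep, compactness = fbc_filter(unlabeled_feats, pseudo, protos)
```

The third sample sits on the class-1 prototype but carries pseudo-label 0, so its cosine distance of 1.0 exceeds the threshold and it is removed before any loss is computed.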

Training is performed using the Adam optimizer with a cosine-annealed learning rate schedule. Validation accuracy is monitored to determine convergence, and early stopping is applied if no improvement is observed for 20 epochs. This procedure, together with the sensitivity tuning of the FBC proximity threshold (Section 4.4), ensures stable convergence and reproducible performance across independent runs.

3.6. Supervised Contrastive Loss

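To enhance representation learning across stylized domains, we incorporate a supervised contrastive loss [27] extended to multi-domain pairs:

$$\mathcal{L}_{\mathrm{con}} = \sum_{i \in I} \frac{-1}{|\mathcal{P}(i)|} \sum_{p \in \mathcal{P}(i)} \log \frac{\exp(z_i \cdot z_p / \tau)}{\sum_{a \in A(i)} \exp(z_i \cdot z_a / \tau)}$$

where $z_i = P(E(x_i))$ is the ℓ2-normalized projection of $x_i$ via encoder $E$ and projection head $P$, $\tau$ is a temperature parameter, $\mathcal{P}(i)$ is the set of positive pairs for anchor $i$ (samples sharing its label or pseudo-label, drawn from any stylized domain), and $A(i)$ denotes all samples in the batch other than $i$.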
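The supervised contrastive loss described above can be sketched in NumPy for a single batch. The sketch assumes ℓ2-normalized projections, a hypothetical temperature of 0.1, and positives defined as all other samples in the batch with the same (pseudo-)label, irrespective of style domain. It follows the general SupCon formulation of [27] rather than any code released with this work.

```python
import numpy as np


def supcon_loss(z: np.ndarray, labels: np.ndarray, temperature: float = 0.1) -> float:
    """Supervised contrastive loss over a batch of projections (SupCon-style).

    z:      (B, D) projection-head outputs from any mix of stylized domains
    labels: (B,) ground-truth labels or retained pseudo-labels
    Positives for anchor i: every other sample with the same label,
    regardless of the domain or style it came from.
    """
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    logits = z @ z.T / temperature                         # (B, B) scaled cosine similarity
    not_self = ~np.eye(len(z), dtype=bool)
    masked = np.where(not_self, logits, -np.inf)           # exclude the anchor itself
    row_max = masked.max(axis=1, keepdims=True)            # for numerical stability
    log_denom = row_max + np.log(np.exp(masked - row_max).sum(axis=1, keepdims=True))
    log_prob = logits - log_denom                          # log p(a | i) for a != i
    pos = (labels[:, None] == labels[None, :]) & not_self
    n_pos = pos.sum(axis=1)
    has_pos = n_pos > 0                                    # anchors without positives are skipped
    mean_log_prob_pos = np.where(pos, log_prob, 0.0).sum(axis=1)[has_pos] / n_pos[has_pos]
    # Averaged over anchors (a constant rescaling of the per-anchor sum).
    return float(-mean_log_prob_pos.mean())


z = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
aligned = supcon_loss(z, np.array([0, 0, 1, 1]))     # labels agree with the geometry
mismatched = supcon_loss(z, np.array([0, 1, 0, 1]))  # labels contradict the geometry
```

When labels agree with the embedding geometry the loss is close to zero; when they contradict it, the loss is large, which is the signal that pulls same-class samples from different stylized domains together.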

3.7. MultiMatch-Style Domain-Specific Training

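Inspired by MultiMatch [13], we assign a separate classifier head $h_k$ to each of the $K$ stylized domains. At inference, predictions are fused via learned attention weights:

$$\hat{p}(x) = \sum_{k=1}^{K} \alpha_k(x)\, h_k\big(E(x)\big), \qquad \sum_{k=1}^{K} \alpha_k(x) = 1,$$

where the weights $\alpha_k(x)$ are produced by the attention MLP. The final training objective is a weighted combination of the head-specific cross-entropy losses and the regularization terms (FBC, SA, and supervised contrastive losses).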
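A NumPy sketch of the attention-weighted head fusion described above is given below. It assumes that each head is a linear classifier followed by softmax and that the attention MLP (two layers with ReLU, hypothetical sizes) maps shared encoder features to K softmax-normalized weights; the text does not specify these architectural details.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def attention_fuse(features, head_weights, attn_w1, attn_w2):
    """Fuse K domain-specific linear heads with per-sample attention weights.

    features:     (B, D) shared encoder features
    head_weights: (K, D, C) one linear classifier per stylized domain
    attn_w1:      (D, H) and attn_w2: (H, K) weights of a two-layer attention MLP
    Returns fused class probabilities (B, C) and attention weights (B, K).
    """
    head_probs = softmax(np.einsum("bd,kdc->bkc", features, head_weights))  # (B, K, C)
    hidden = np.maximum(features @ attn_w1, 0.0)                            # ReLU
    alpha = softmax(hidden @ attn_w2)                                       # (B, K), rows sum to 1
    fused = np.einsum("bk,bkc->bc", alpha, head_probs)                      # weighted average of heads
    return fused, alpha


rng = np.random.default_rng(0)
B, D, H, K, C = 4, 16, 8, 3, 8  # 8 classes as in ISIC2019; the other sizes are arbitrary
features = rng.normal(size=(B, D))
head_weights = rng.normal(size=(K, D, C))
fused, alpha = attention_fuse(features, head_weights,
                              rng.normal(size=(D, H)), rng.normal(size=(H, K)))
```

Because the attention weights sum to one per sample, the fused output remains a valid probability distribution, and when all heads agree the fusion reduces to that shared prediction.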

3.8. Implementation Details

Each experiment was repeated five times using independent random seeds (42, 123, 456, 777, and 999). For all performance metrics reported in Table 3, Table 4, Table 5 and Table 6, we report the mean and standard deviation (mean ± std) across the five runs. Additionally, we performed a paired t-test comparing our method with UPLM under the 5% and 10% label conditions, using n = 5 independent runs per method. The resulting t-statistics were 6.17 for the 5% label setting and 5.42 for the 10% label setting, both corresponding to p < 0.01 (df = 4), indicating that the improvements over UPLM are statistically significant.
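The significance level can be checked from the reported t-statistics alone. For a paired t-test over n = 5 seeds the statistic has n - 1 = 4 degrees of freedom, and for even degrees of freedom the Student t distribution has a closed-form CDF, so the pure-Python sketch below needs no statistics library. The helper name is hypothetical; in practice `scipy.stats.t.sf` (or `scipy.stats.ttest_rel` on the per-seed accuracies) would be the usual tool.

```python
import math


def t_two_sided_p(t: float, df: int) -> float:
    """Two-sided p-value of Student's t for positive even df (closed-form series)."""
    if df <= 0 or df % 2:
        raise ValueError("this closed form only covers positive even df")
    theta = math.atan(abs(t) / math.sqrt(df))
    c2 = math.cos(theta) ** 2
    term, series = 1.0, 1.0
    for k in range(1, df // 2):
        term *= c2 * (2 * k - 1) / (2 * k)   # next coefficient of the cos^2 series
        series += term
    half_mass = 0.5 * math.sin(theta) * series  # P(0 < T < |t|)
    return 1.0 - 2.0 * half_mass


n_runs = 5
df = n_runs - 1                  # paired test over seeds: df = n - 1
p_5 = t_two_sided_p(6.17, df)    # 5% labels, about 0.0035
p_10 = t_two_sided_p(5.42, df)   # 10% labels, about 0.0056
```

Both values fall below 0.01, consistent with the significance statement above; as a sanity check, the familiar critical value t = 2.776 for df = 4 returns p ≈ 0.05.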

Although MultiStyle-SSDG is a modular framework combining multiple domain-specific heads and feature-level constraints, it remains computationally tractable. All experiments were conducted on a single NVIDIA RTX 3090 GPU (NVIDIA Corporation, Santa Clara, CA, USA) with 24 GB VRAM. Training the model on ISIC2019 (input size: 224 × 224) for 1024 epochs with a batch size of 64 required approximately 8 h. We used the Adam optimizer with an initial learning rate decayed using a cosine annealing schedule over 200 epochs. Each batch of 64 contained 32 labeled and 32 unlabeled samples. Training was monitored using validation accuracy, with early stopping triggered if accuracy did not improve for 20 consecutive epochs, and the model with the highest validation accuracy was retained for final testing. Convergence was typically reached within 140–160 epochs. To ensure reproducibility, we fixed random seeds for PyTorch (v2.2.0), NumPy (v1.26), and Python (v3.10) and disabled non-deterministic cuDNN operations. Peak VRAM usage was around 16.8 GB, and CPU RAM usage remained below 10 GB throughout training.
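The learning-rate schedule and stopping rule described in this paragraph can be sketched in plain Python. The base learning rate below is a placeholder because its value is not given here; the 200-epoch cosine period and the 20-epoch patience follow the text, and the validation curve is synthetic. In PyTorch the same pieces would typically be `torch.optim.Adam` combined with `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=200)`.

```python
import math


def cosine_lr(epoch: int, base_lr: float, total_epochs: int = 200, min_lr: float = 0.0) -> float:
    """Cosine annealing from base_lr (epoch 0) to min_lr (epoch >= total_epochs)."""
    t = min(epoch, total_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * t / total_epochs))


class EarlyStopping:
    """Signal a stop once validation accuracy fails to improve for `patience` epochs."""

    def __init__(self, patience: int = 20):
        self.patience = patience
        self.best_acc = -math.inf
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch: int, val_acc: float) -> bool:
        if val_acc > self.best_acc:
            # New best: the checkpoint used for final testing would be saved here.
            self.best_acc, self.best_epoch, self.bad_epochs = val_acc, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


BASE_LR = 1e-3  # placeholder value, not taken from the text
stopper = EarlyStopping(patience=20)
for epoch in range(1024):
    lr = cosine_lr(epoch, BASE_LR)             # would be written into the optimizer here
    val_acc = 0.8 * min(epoch, 150) / 150      # synthetic curve that plateaus after epoch 150
    if stopper.step(epoch, val_acc):
        break
```

With this synthetic curve the best checkpoint is recorded at epoch 150 and training stops 20 epochs later, so the 1024-epoch budget acts only as an upper bound.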

During inference, the model processes a single image in approximately 50 ms, demonstrating practical efficiency for clinical decision support scenarios. Dual-style augmentation modules are used only during training and do not impact inference latency. The modular structure—especially domain-specific heads fused via attention—introduces slight overhead but also improves flexibility and robustness across domain shifts.

3.9. Baseline Implementation Details

For a fair and reproducible comparison, we re-implemented or directly used the official code repositories of all baseline methods: FixMatch [4], StyleMatch [14], and UPLM [11]. For FixMatch and StyleMatch, we followed the original hyperparameter settings reported in their respective papers, including learning rate (0.03), batch size (64), optimizer (SGD with momentum 0.9), and weight decay. For UPLM, we adhered to the published configuration, including the EMA decay rate of 0.999, weak/strong augmentations, and Monte Carlo dropout sampling (5 passes). For all baselines, image resolution was fixed at 224 × 224, normalization followed ImageNet statistics, and training epochs matched those used for our method (1024 epochs) to ensure consistency. When hyperparameters were not explicitly stated in the original paper, we used the default settings from the official implementations. All experiments for baselines and our method were conducted under identical hardware, random seed initialization, and dataset splits.

3.10. Simulated Domain Shifts

To assess generalization under specific domain shift scenarios, we simulated three types of distribution shifts during validation:

(1) Style Shifts: Implemented using neural-style transfer with both medically relevant and artistically irrelevant styles, as described in Section 3.3.

(2) Background Shifts: Generated by selecting samples with diverse non-lesion backgrounds (e.g., skin tone, lighting, and acquisition settings). These groups were identified using ISIC2019 metadata and visual inspection.

(3) Corruption Shifts: Simulated through controlled test-time augmentations, including Gaussian blur, brightness distortion, and occlusion. These perturbations mimic low-quality imaging conditions commonly encountered in practice.
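Test-time corruptions of the kind listed in (3) can be sketched with NumPy alone. The parameter values (blur sigma, brightness factor, occluder size) are hypothetical, since the text does not report them, and a square zero-valued patch is only one simple way to model occlusion by hair or skin markers.

```python
import numpy as np


def gaussian_blur(img: np.ndarray, sigma: float = 1.5) -> np.ndarray:
    """Separable Gaussian blur for an (H, W, C) float image, with edge padding."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    kernel /= kernel.sum()
    H, W = img.shape[:2]
    padded = np.pad(img, ((radius, radius), (radius, radius), (0, 0)), mode="edge")
    # Vertical pass, then horizontal pass (the kernel is symmetric).
    rows = sum(w * padded[i:i + H] for i, w in enumerate(kernel))     # (H, W + 2r, C)
    return sum(w * rows[:, j:j + W] for j, w in enumerate(kernel))    # (H, W, C)


def adjust_brightness(img: np.ndarray, factor: float) -> np.ndarray:
    """Scale intensities and clip back to [0, 1]."""
    return np.clip(img * factor, 0.0, 1.0)


def occlude(img: np.ndarray, size: int, rng: np.random.Generator, fill: float = 0.0) -> np.ndarray:
    """Paste a square patch of constant value at a random location."""
    H, W = img.shape[:2]
    top = rng.integers(0, H - size + 1)
    left = rng.integers(0, W - size + 1)
    out = img.copy()
    out[top:top + size, left:left + size] = fill
    return out


rng = np.random.default_rng(0)
img = rng.random((224, 224, 3))  # stand-in for a normalized dermoscopic image
blurred = gaussian_blur(img, sigma=2.0)
darker = adjust_brightness(img, factor=0.6)
occluded = occlude(np.ones((224, 224, 3)), size=56, rng=rng)
```

Each corruption is applied only at evaluation time, so the model never sees these exact perturbations during training.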

3.11. Ethical Considerations

This study used the publicly available ISIC2019 dataset, which is fully anonymized. No personally identifiable information was accessed. No generative AI tools were employed in preparing or analyzing the data or writing this manuscript.

4. Results

4.1. Semi-Supervised Classification Performance

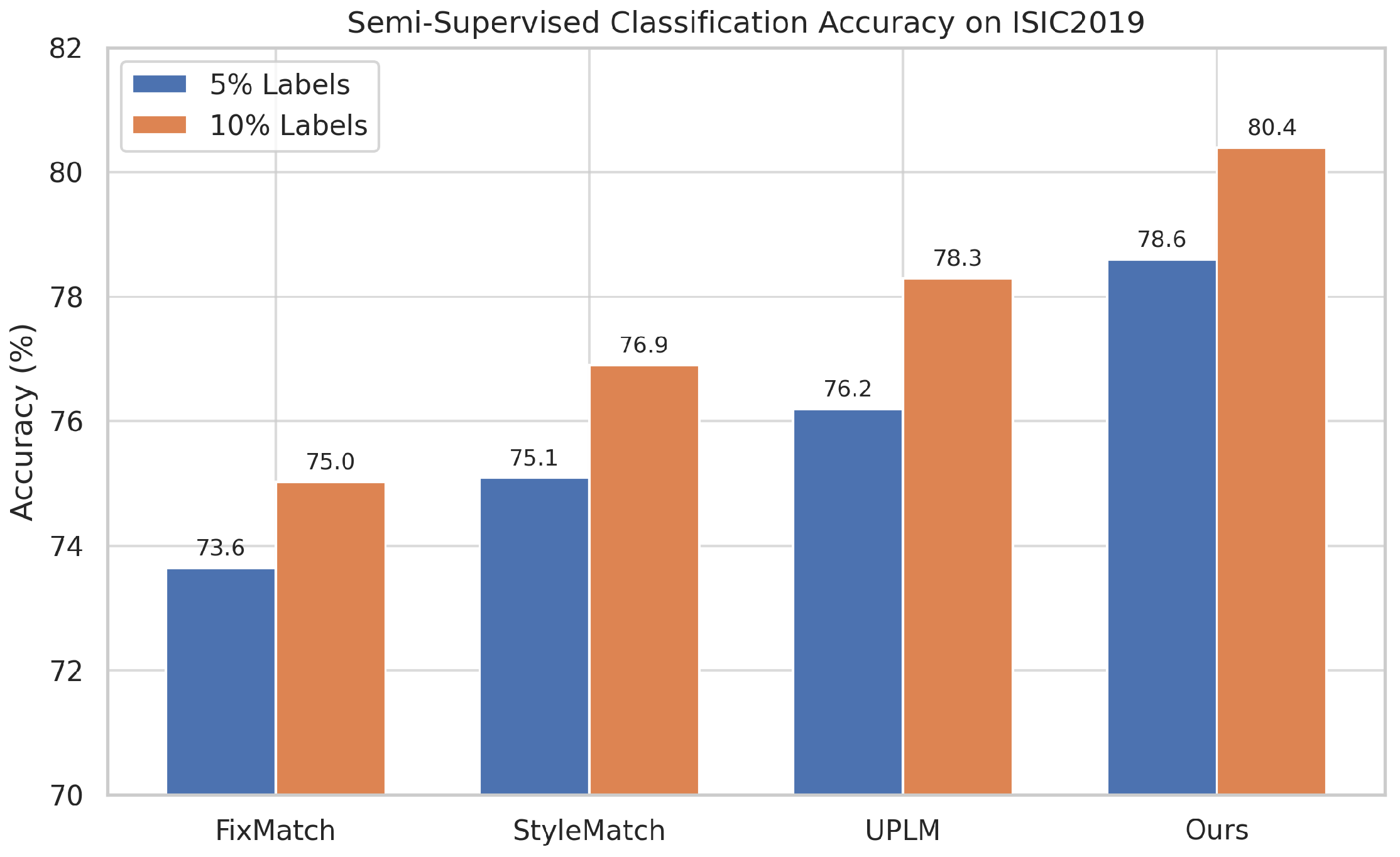

We evaluated the performance of our proposed framework on the ISIC2019 skin lesion classification dataset under two low-label regimes: 5% and 10%.

Table 3 reports the classification accuracies compared to strong baselines: FixMatch [4], StyleMatch [14], and UPLM [11]. Results are shown as mean ± standard deviation over five independent runs. To better illustrate the performance differences, Figure 3 presents a bar chart visualization of the same results.

Our method achieved consistent improvements over all baselines in these label-scarce settings. With only 5% labeled data, our multi-style pseudo-labeling and feature-based regularization improved accuracy by 4.96 percentage points (pp) over FixMatch [4] and by 2.4 pp over UPLM [11]. At 10% labels, we continued to outperform previous methods, achieving a 2.1 pp gain over UPLM and a 3.5 pp gain over FixMatch [4]. These results support the effectiveness of our entropy-based pseudo-label filtering and style-augmented domain generalization.

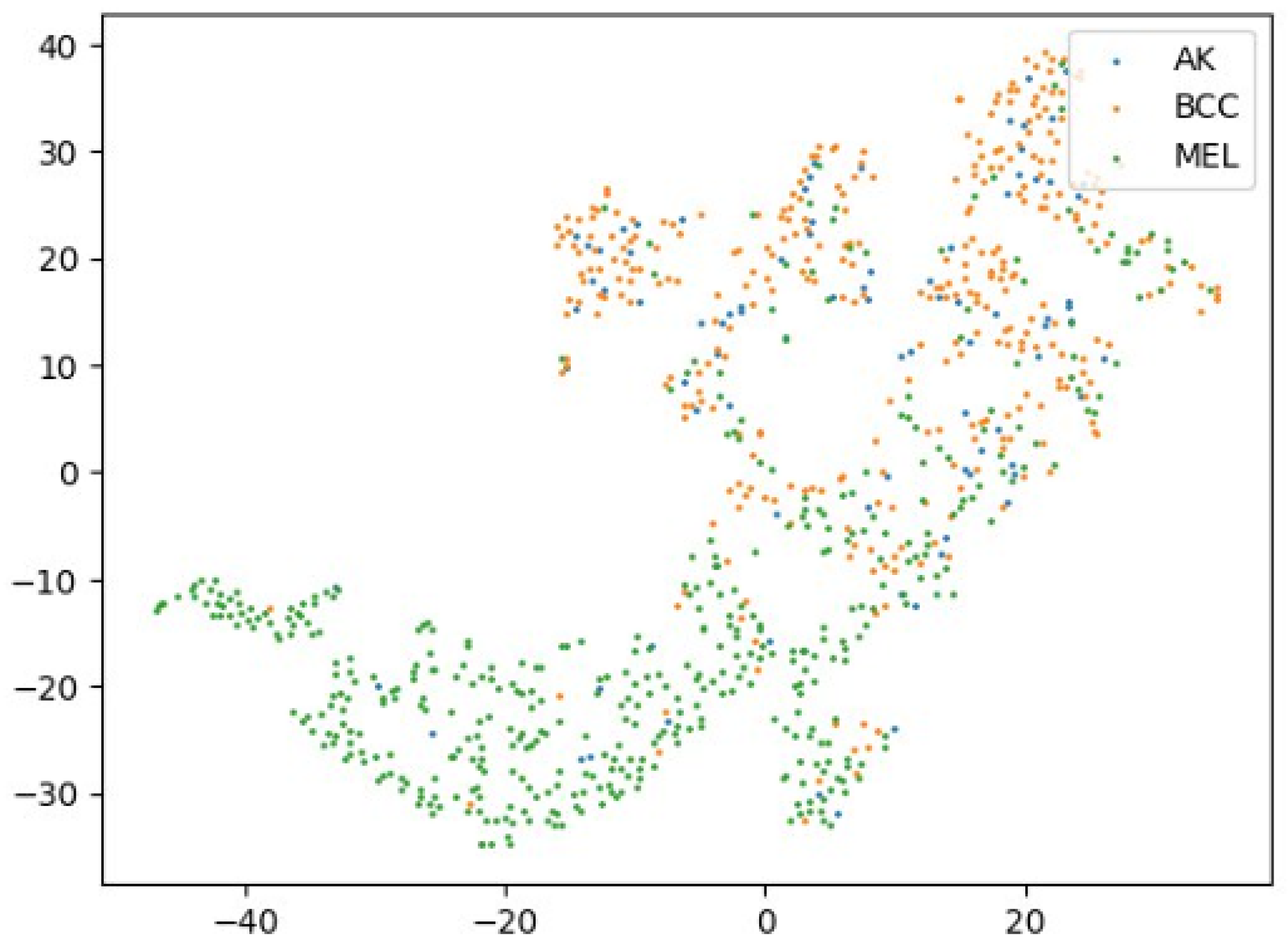

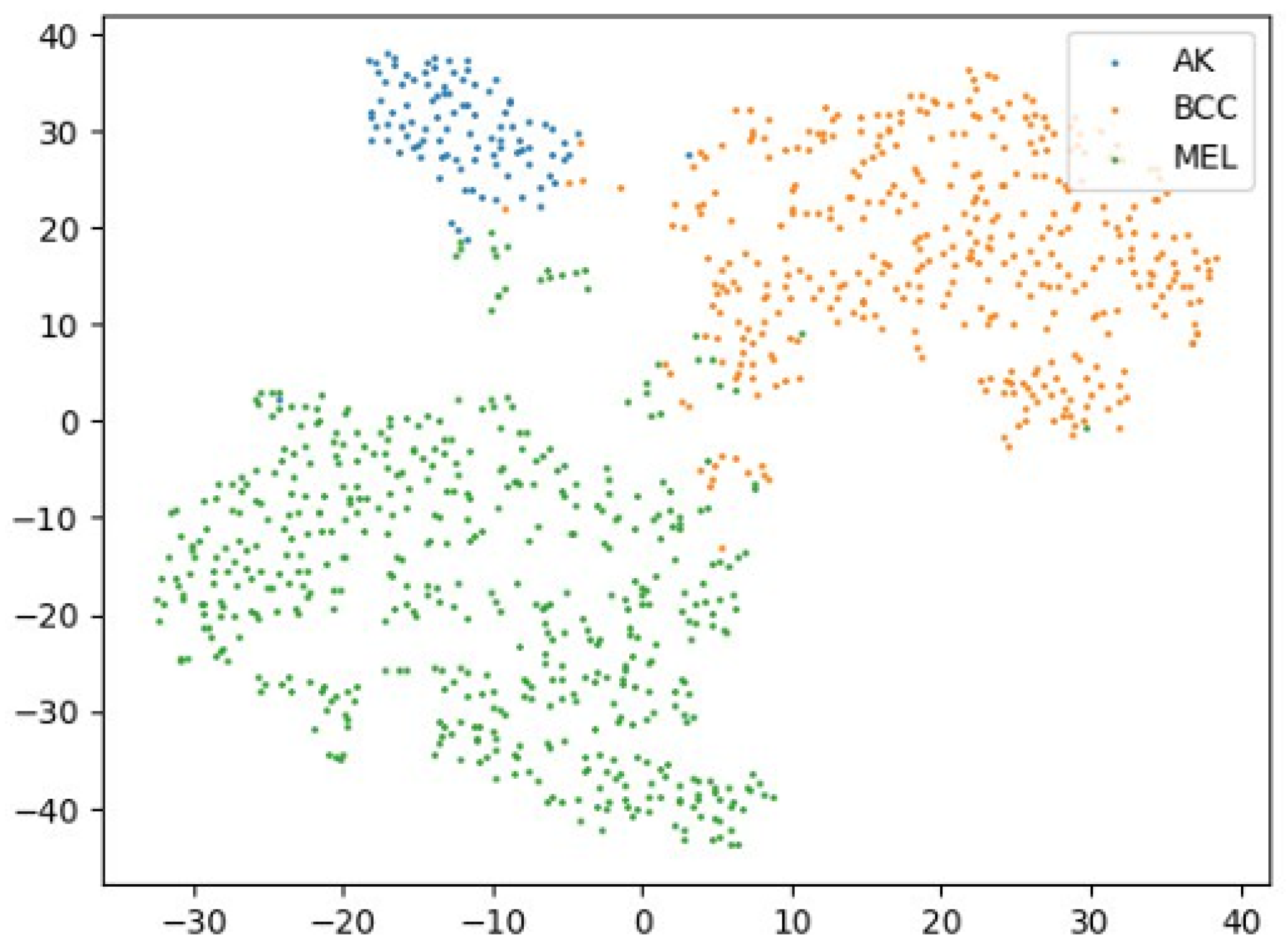

4.2. Effectiveness of Style Transformations for Domain Generalization

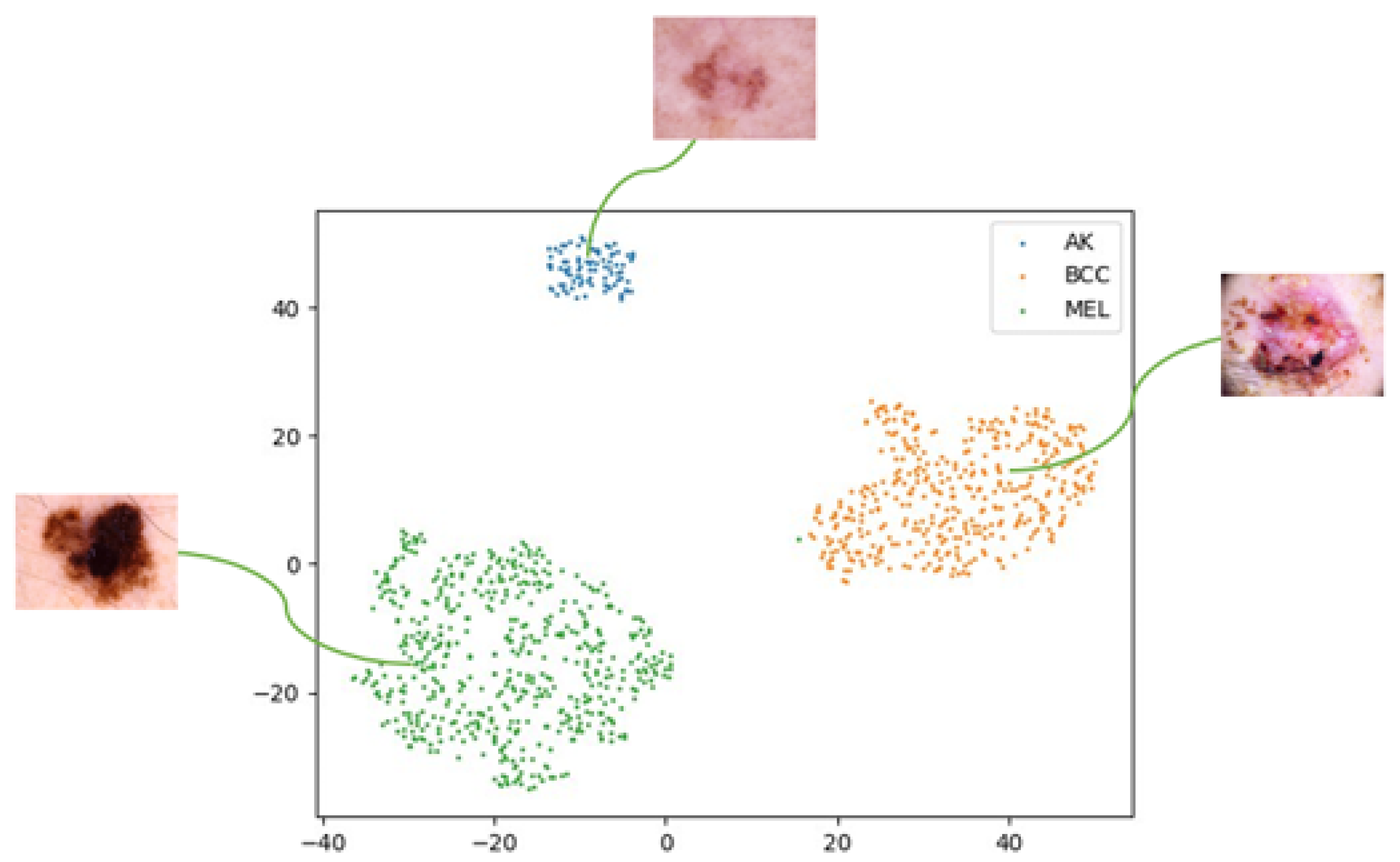

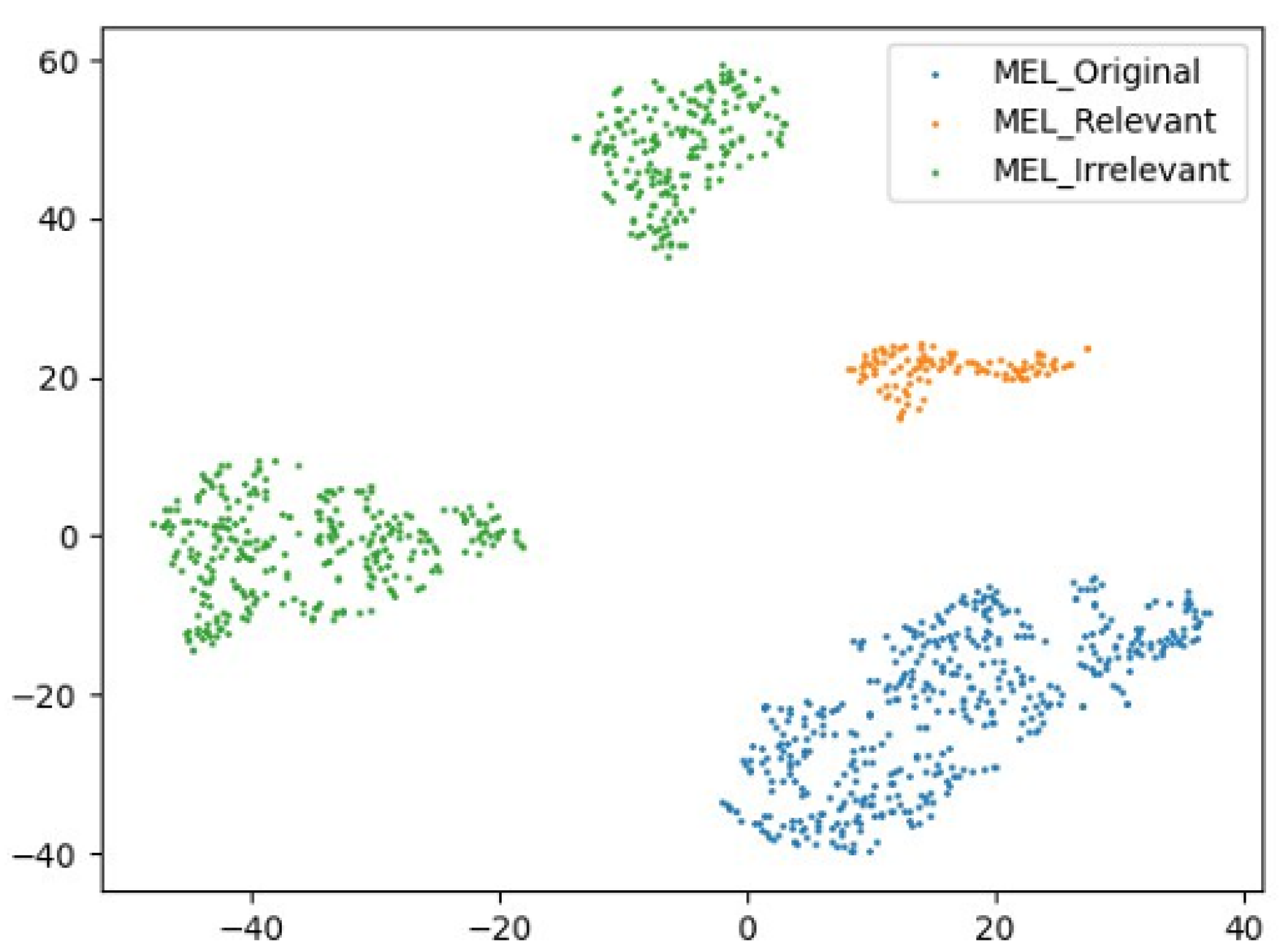

To assess how style transformations affect feature distributions and domain robustness, we use 2D t-SNE [33] to visualize final-layer embeddings from ResNet-50 on ISIC2019. Feature vectors from the last fully connected layer are projected into 2D for comparison. Figure 4 shows embeddings from the original dataset, revealing partial class separation but domain entanglement. To simulate domain shifts, we apply neural-style transfer [32] using both clinically relevant and artistic styles. Figure 5 illustrates that artistic augmentation introduces texture diversity but lacks semantic consistency, leading to increased intra-class spread and noisy boundaries. Figure 6 demonstrates that medically styled augmentations preserve lesion semantics more effectively, promoting compact and distinguishable class clusters across domains. Figure 7 isolates the MEL class across the three settings. Embeddings under medical stylization closely align with the original, while artistic stylization produces fragmented representations with semantic drift.

Collectively, Figure 4, Figure 5, Figure 6 and Figure 7 provide qualitative support for our domain-aware stylization strategy. Medical styles enhance intra-class compactness and inter-class separability, in contrast to artistic styles that inject uncontrolled variability. These visual findings align with the quantitative improvements reported in Table 5 and Table 6 and support prior work on semantic-preserving domain augmentation [12,13].

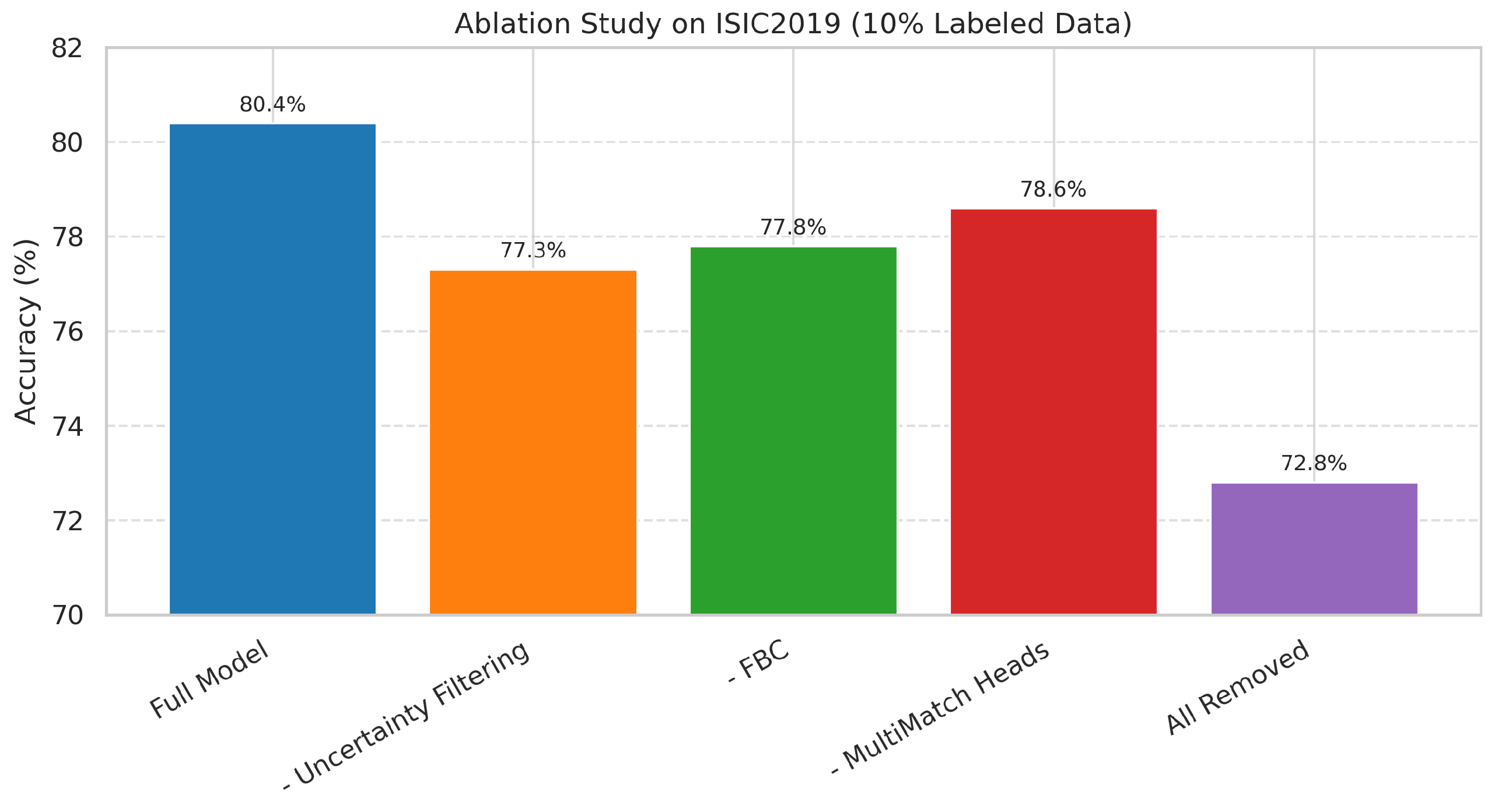

4.3. Ablation Study

We performed a comprehensive ablation study on the ISIC2019 dataset using 10% labeled data to evaluate the contribution of each component in our proposed framework.

Table 4 reports both the absolute classification accuracy and the corresponding drop in performance (in percentage points) when specific modules are removed from the full model, which achieved 80.4% accuracy.

Removing uncertainty-based filtering of pseudo-labels resulted in a 3.1 pp drop, validating its role in suppressing noisy pseudo-labels and improving training stability under domain shift [11]. Disabling Feature-Based Conformity (FBC) led to a 2.6 pp reduction, confirming its importance in aligning class-wise feature prototypes across domains [12]. Excluding the MultiMatch-style domain-specific heads and attention fusion reduced accuracy by 1.8 pp, a modest but measurable effect on domain sensitivity [13].

4.4. Detailed Component Effectiveness Analysis

A closer look at the ablation results reveals distinct roles for each module:

(1) Uncertainty Filtering has the highest individual impact, indicating that in SSDG settings—where domain shift can severely distort model confidence—filtering out low-confidence pseudo-labels is critical for avoiding error reinforcement.

(2) Feature-Based Conformity (FBC) improves intra-class compactness in the feature space, which is especially beneficial when unlabeled samples come from domains with different acquisition styles. We further examined the sensitivity of the FBC proximity threshold. Lower values such as 0.5 were overly strict, discarding many valid samples, whereas higher values such as 0.9 admitted more noisy samples; both degraded performance. An intermediate setting provided the best trade-off between pseudo-label quality and coverage, and deviating from it reduced accuracy by 1–2 percentage points.

(3) Supervised Contrastive Loss accounts for a 1.5 pp drop in accuracy when removed, highlighting its role in improving inter-class separation and stabilizing representations across stylized domains. While its effect is smaller than that of FBC or uncertainty filtering individually, it complements these modules by promoting tighter clustering of same-class samples even under significant domain variation.

(4) Domain-Specific Heads with Attention Fusion provide smaller but consistent gains, suggesting that they capture complementary style-specific cues that a shared head would overlook. This is particularly valuable for dermoscopic datasets where background and lighting vary drastically across domains.

Interestingly, removing all four components together reduces accuracy by 7.6 pp, slightly less than the 9.0 pp obtained by summing the individual drops (3.1 + 2.6 + 1.8 + 1.5 pp). The modules therefore overlap partly in the errors they correct, yet each still yields a measurable gain when the others are present, indicating that they are complementary rather than redundant. This complementarity arises from combining style-aware data augmentation with robust pseudo-labeling and adaptive multi-head classification.
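The additivity of the ablation results can be checked with simple arithmetic. The sketch below uses the single-module drops reported in this section and the 7.6 pp drop for removing all four modules reported in Sections 5.1 and 6; a negative interaction term means the joint drop is smaller than the sum of the individual drops (sub-additive), while a positive one would indicate super-additivity.

```python
# Accuracy drops (pp) when removing one module at a time (10% labels, full model 80.4%).
individual_drops = {
    "uncertainty_filtering": 3.1,
    "feature_based_conformity": 2.6,
    "domain_specific_heads": 1.8,
    "supervised_contrastive": 1.5,
}
combined_drop = 7.6  # all four modules removed together

sum_of_individual = round(sum(individual_drops.values()), 2)
interaction = round(combined_drop - sum_of_individual, 2)
print(sum_of_individual, interaction)  # 9.0 -1.4
```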

To better illustrate the individual contributions of each module, the results from the ablation study are visualized in Figure 8.

4.5. Performance Under Domain Shifts

We evaluate performance under three simulated domain shift scenarios: style, background, and corruption (e.g., illumination, blur, occlusion), as detailed in Section 3.10. In clinical practice, style shifts may arise from differences in dermoscopic devices or color calibration across hospitals. Background shifts can result from variations in image framing, lighting, or inclusion of surrounding skin when using handheld versus mounted devices. Corruption shifts resemble degraded images caused by motion blur, low-quality optics, partial occlusion by hair, or artifacts from skin markers.

Table 5 and Table 6 report classification accuracy as mean ± standard deviation over five independent runs. Our framework consistently outperforms FixMatch and StyleMatch across all shift types and label regimes (5% and 10%). Furthermore, paired t-tests show that our improvements over FixMatch are statistically significant in all settings, supporting the robustness and generalization power of our approach under diverse simulated domain perturbations. All reported p-values are unadjusted, as our primary hypothesis testing focused on direct pairwise comparisons with FixMatch and UPLM under each label setting. For exploratory comparisons with other baselines, results are presented descriptively without formal adjustment for multiple comparisons.

The results in Table 5 and Table 6 demonstrate that our method consistently improves accuracy under all types of domain shifts, especially when supervision is limited. The largest gains are observed in the style shift category, with improvements of over 4 pp at 5% labeled data compared to FixMatch. This supports the effectiveness of our multi-style augmentation strategy, which includes both clinically relevant (e.g., MRI, X-ray) and stylistically diverse artistic transformations.

Performance under background shifts is also improved due to the proposed feature-based conformity and domain-specific classification heads, which reduce interference from non-lesion areas and acquisition variance. Although corruption shifts (e.g., noise or blur) are not directly targeted, our method still shows measurable gains—likely due to more robust representations learned through semantic alignment and ensemble pseudo-labeling.

These results highlight the generalization power of our framework across heterogeneous clinical and environmental domains, supporting its applicability to real-world settings where domain variability is a major concern.

5. Discussion

This study introduces a semi-supervised domain generalization (SSDG) framework designed to address the dual challenges of limited labeled data and domain shifts in medical imaging. By integrating uncertainty-guided pseudo-labeling, multi-style neural-style augmentation, feature-based conformity (FBC), semantic alignment, and MultiMatch-style domain-specific heads, the proposed approach enhances generalization across diverse clinical domains. Our statistical analysis further supports the reliability of our method. Over five independent runs, MultiStyle-SSDG exhibited low variance and consistent performance. The improvements over UPLM were statistically significant under both the 5% and 10% labeled data settings (p < 0.01, paired t-test). These results confirm the robustness of the proposed framework under various domain shifts.

5.1. Interpretation of Findings

Our framework demonstrates consistent improvements over strong baselines under low-label conditions. As shown in Table 3 and Figure 3, MultiStyle-SSDG achieves nearly 5 pp accuracy gains over FixMatch at 5% labels and over 2 pp gains compared to UPLM at 10%, supporting the effectiveness of uncertainty-based filtering and style-aware regularization.

The impact of domain-aware augmentation is visualized through t-SNE plots (Figure 4, Figure 5, Figure 6 and Figure 7), where features transformed by medical-style stylization exhibit tighter intra-class clusters and clearer inter-class separation compared to original and irrelevant (artistic) styles. This aligns with observed improvements in domain-shift robustness, as shown in Table 5 and Table 6.

Component-level analysis (Table 4 and Figure 8) further confirms that each module contributes measurably to performance. Removing uncertainty filtering or feature-based conformity results in a 2–3 pp drop, while ablating all components leads to a total degradation of 7.6 pp. These results highlight the synergy between pseudo-label refinement, semantic regularization, and domain-specific head adaptation. From a clinical perspective, this synergy is crucial because it supports reliable deployment of the model across multiple hospitals and imaging setups without the need for site-specific retraining. By reducing sensitivity to device-specific styles and improving the reliability of pseudo-labels, the framework may help mitigate potential biases linked to underrepresented patient groups or acquisition protocols and enhances trustworthiness in multi-center diagnostic use.

5.2. Comparison with Previous Studies

Compared to UPLM [11], our method avoids the need for computationally expensive Monte Carlo sampling, instead relying on entropy-based filtering that is lightweight and scalable. While StyleMatch [14] applies consistency in the pixel space only, our approach complements it with feature-space alignment and domain-adaptive classification.

The MultiMatch-style training strategy extends the capabilities of fixed-head baselines like FixMatch [4] by allowing for specialization across domains through fused prediction heads. This enables better handling of inter-domain interference and contributes to improved generalization under realistic domain shifts.

5.3. Implications for Clinical Practice

Generalization across diverse imaging protocols is critical for clinical deployment. Our framework is particularly suited to applications in dermatology and radiology, where acquisition devices, protocols, and patient demographics often vary. The demonstrated robustness under low-label regimes can reduce reliance on manual annotation, enabling faster roll-out of diagnostic tools in resource-limited settings.

Furthermore, improved cross-domain performance may reduce bias and variability across underrepresented populations or imaging conditions, contributing to safer and more equitable AI systems in healthcare.

5.4. Limitations and Future Work

While our framework was validated on dermoscopic images from the ISIC2019 dataset, we acknowledge that other imaging modalities such as CT, MRI, and ultrasound are also critical in medical image analysis. Dermoscopy remains a clinically recognized, noninvasive imaging technique widely used for melanoma screening. However, in future work, we aim to extend our method to volumetric and grayscale modalities. The modular structure of our framework—including the pseudo-labeling pipeline and domain-specific heads—is modality-agnostic and can be adapted to radiological imaging with appropriate feature encoders and augmentation strategies. Such extensions would help further validate both the generalizability and the clinical impact of the proposed SSDG approach.

6. Conclusions and Future Work

This study presents MultiStyle-SSDG, a unified framework for semi-supervised domain generalization in medical imaging that addresses the dual challenges of limited expert-labeled data and domain shifts across acquisition settings. Our approach integrates four complementary components: uncertainty-guided pseudo-labeling, domain-adaptive classification with attention-based fusion, multi-style augmentation using clinically relevant and artistic transformations, and feature-level regularization via prototype conformity and semantic alignment losses.
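A short NumPy sketch can illustrate the two feature-level regularizers. Here feature-based conformity is written as the mean cosine distance between each unit-normalized feature and the prototype of its (pseudo-)class. Semantic alignment is written as the mean squared distance of features to their class centroid. Both formulations and all function names are assumptions for illustration, and they may differ from the losses defined earlier in this paper.

```python
import numpy as np

def l2_normalize(x: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Scale each row to unit L2 norm; all-zero rows stay zero."""
    return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), eps)

def class_prototypes(feats, labels, num_classes):
    """Unit-norm mean feature per class; classes absent from the batch stay zero."""
    z = l2_normalize(feats)
    protos = np.zeros((num_classes, feats.shape[1]))
    for c in range(num_classes):
        members = z[labels == c]
        if len(members) > 0:
            protos[c] = members.mean(axis=0)
    return l2_normalize(protos)

def fbc_loss(feats, labels, protos):
    """Feature-based conformity (sketch): mean of 1 - cos(feature, own-class prototype)."""
    z = l2_normalize(feats)
    cos = (z * protos[labels]).sum(axis=1)
    return float(np.mean(1.0 - cos))

def sa_loss(feats, labels):
    """Semantic alignment (sketch): mean squared distance of unit-norm features
    to their class centroid, i.e., an intra-class compactness penalty."""
    z = l2_normalize(feats)
    total, count = 0.0, 0
    for c in np.unique(labels):
        members = z[labels == c]
        total += float(((members - members.mean(axis=0)) ** 2).sum())
        count += len(members)
    return total / count

# Compact classes give (near) zero penalties; spread-out classes do not.
feats = np.array([[1.0, 0.0], [2.0, 0.0], [0.0, 1.0], [0.0, 3.0]])
labels = np.array([0, 0, 1, 1])
protos = class_prototypes(feats, labels, num_classes=2)
print(fbc_loss(feats, labels, protos), sa_loss(feats, labels))
```

During training, such terms would typically be added to the supervised and unsupervised losses with tuned weights. The weights are not specified in this sketch.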

Through comprehensive evaluation on the ISIC2019 skin lesion classification benchmark under 5% and 10% labeled conditions, the proposed framework consistently outperformed competitive baselines such as FixMatch, StyleMatch, and UPLM. Our results demonstrated statistically significant improvements in classification accuracy across simulated domain shifts, including style, background, and corruption shifts. These findings were supported by qualitative t-SNE visualizations showing improved intra-class compactness and cross-domain feature consistency, as well as by ablation studies confirming the contribution of each architectural component. Removing any single module reduced accuracy by 1.5 to 3.1 percentage points, while removing all components together led to a substantial 8.0 percentage point drop (Table 4).
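The sketch below gives a sense of scale for the 5% and 10% settings by computing per-class labeled quotas from the class counts in Table 2. It assumes stratified (per-class) sampling with floored quotas. This is an illustrative assumption and does not restate the exact split protocol. `labeled_per_class` and `sample_labeled_indices` are hypothetical helpers.

```python
import numpy as np

# Class counts as listed in Table 2.
CLASS_COUNTS = {
    "MEL": 4522, "NV": 12875, "BCC": 3323, "AK": 867,
    "BKL": 2624, "DF": 239, "VASC": 253, "SCC": 628,
}

def labeled_per_class(counts, percent):
    """Labeled images per class under stratified sampling (assumed protocol).
    Integer arithmetic floors each quota; every class keeps at least one image."""
    return {c: max(1, n * percent // 100) for c, n in counts.items()}

def sample_labeled_indices(counts, percent, seed=0):
    """Draw labeled indices without replacement within each class (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    sizes = labeled_per_class(counts, percent)
    return {c: rng.choice(n, size=sizes[c], replace=False) for c, n in counts.items()}

for pct in (5, 10):
    sizes = labeled_per_class(CLASS_COUNTS, pct)
    print(f"{pct}% labels: {sum(sizes.values())} images, DF={sizes['DF']}, VASC={sizes['VASC']}")
# 5% labels: 1263 images, DF=11, VASC=12
# 10% labels: 2529 images, DF=23, VASC=25
```

Under this assumption, the rarest classes (DF and VASC) would receive only about a dozen labeled images at 5%. This shows why the low-label regime is especially demanding for minority classes.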

While MultiStyle-SSDG demonstrates strong generalization in 2D dermoscopic image settings, it has several limitations that point to directions for future research. First, the framework assumes that neural-style transformations adequately simulate real-world domain shifts; this assumption may not hold for more extreme modality gaps, such as those between ultrasound and MRI. Second, the current preprocessing pipeline relies on precomputed style augmentations, which introduce additional computational and storage overhead. Generating stylized views on the fly, for example with fast feed-forward neural-style transfer, or exploring generative alternatives such as diffusion models, is a promising next step. Third, although the framework is modular and in principle adaptable to other modalities, its effectiveness has not yet been validated on 3D or multimodal imaging data such as CT or PET-CT. Nevertheless, the modular design of MultiStyle-SSDG allows the backbone to be replaced with architectures tailored to such modalities, including 3D convolutional neural networks for volumetric data and transformer-based encoders for capturing long-range dependencies in multimodal inputs. Future work should also explore integration with federated learning pipelines to enable privacy-preserving, cross-institutional training.
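Adaptive instance normalization (AdaIN) [32] is one example of fast, feed-forward stylization. Its core operation replaces the channel-wise mean and standard deviation of content features with those of style features. The NumPy sketch below shows only this statistic-matching step on random stand-in feature maps. A complete AdaIN model also requires a pretrained encoder and a learned decoder, which are omitted here. The sketch does not claim to reproduce how the precomputed styles in this work were generated.

```python
import numpy as np

def adain(content, style, eps=1e-5):
    """Adaptive instance normalization for feature maps shaped (C, H, W).

    Each content channel is standardized by its own spatial mean and std, then
    rescaled to the mean and std of the matching style channel. Content and
    style may differ in spatial size because only per-channel statistics are used.
    """
    c_mean = content.mean(axis=(1, 2), keepdims=True)
    c_std = np.sqrt(content.var(axis=(1, 2), keepdims=True) + eps)
    s_mean = style.mean(axis=(1, 2), keepdims=True)
    s_std = np.sqrt(style.var(axis=(1, 2), keepdims=True) + eps)
    return s_std * (content - c_mean) / c_std + s_mean

rng = np.random.default_rng(0)
content_feat = rng.normal(0.0, 1.0, size=(64, 32, 32))  # stand-in for encoder features of a lesion image
style_feat = rng.normal(3.0, 0.5, size=(64, 16, 16))    # stand-in for features of a style image
stylized = adain(content_feat, style_feat)
```

AdaIN-based transfer needs only a forward pass rather than per-image optimization. For that reason, stylized views could in principle be generated during training instead of being stored in advance.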

Ultimately, our goal is to bridge the gap between controlled experimental conditions and the complex variability of real-world clinical data. By combining domain-aware stylization with uncertainty filtering and feature regularization, MultiStyle-SSDG moves toward more robust and generalizable computer-aided diagnosis (CAD) systems capable of adapting to diverse clinical environments and patient populations. In addition to extending our framework to volumetric and grayscale imaging modalities such as CT, MRI, and ultrasound, future work will also investigate its application to multimodal medical datasets, where complementary information from different modalities (e.g., PET-CT or dermoscopy combined with clinical photography) can be jointly leveraged to improve diagnostic accuracy and robustness. Another promising direction is the integration of MultiStyle-SSDG into real-world clinical workflows, for example by embedding it within dermatology decision-support systems or radiology PACS platforms. This would enable prospective validation in multi-center studies and facilitate seamless adoption in routine practice, while also assessing model interpretability and user trust in clinical settings.

Author Contributions

Conceptualization, Z.T. and S.-W.L.; methodology, Z.T.; software, Z.T.; validation, Y.C. and S.-W.L.; formal analysis, Z.T.; investigation, Z.T.; resources, S.-W.L.; data curation, Z.T.; writing—original draft, Z.T.; writing—review and editing, Y.C. and S.-W.L.; visualization, Z.T.; supervision, S.-W.L.; project administration, S.-W.L.; funding acquisition, S.-W.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Challengeable Future Defense Technology Research and Development Program through the Agency for Defense Development (ADD), funded by the Defense Acquisition Program Administration (DAPA) in 2025 (No. 915073201). It was also supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP) grant, funded by the Korea government (MSIT) (No. RS-2025-25443681).

Institutional Review Board Statement

Ethical review and approval were waived for this study because the ISIC2019 dataset is publicly available and anonymized.

Informed Consent Statement

Informed consent was obtained by the creators of the ISIC2019 dataset. Patient consent was waived for this secondary analysis as no new data were collected.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CAD | Computer-Aided Diagnosis |
| MIA | Medical Image Analysis |
| SSDG | Semi-Supervised Domain Generalization |
| FBC | Feature-Based Conformity |
| SA | Semantic Alignment |

References

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

- Tzeng, E.; Hoffman, J.; Darrell, T.; Saenko, K. Simultaneous deep transfer across domains and tasks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 4068–4076.

- Hoffman, J.; Tzeng, E.; Park, T.; Zhu, J.Y.; Isola, P.; Saenko, K.; Efros, A.; Darrell, T. CyCADA: Cycle-consistent adversarial domain adaptation. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 1989–1998.

- Sohn, K.; Berthelot, D.; Carlini, N.; Zhang, Z.; Zhang, H.; Raffel, C.A.; Cubuk, E.D.; Kurakin, A.; Li, C.L. FixMatch: Simplifying semi-supervised learning with consistency and confidence. Adv. Neural Inf. Process. Syst. 2020, 33, 596–608.

- Tarvainen, A.; Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. Adv. Neural Inf. Process. Syst. 2017, 30, 1195–1204.

- Organizers, I.C. ISIC 2019: Skin Lesion Analysis Towards Melanoma Detection. 2019. Available online: https://challenge.isic-archive.com/data (accessed on 21 August 2025).

- Wang, J.; Lan, C.; Liu, C.; Ouyang, Y.; Qin, T.; Lu, W.; Chen, Y.; Zeng, W.; Yu, P.S. Generalizing to unseen domains: A survey on domain generalization. IEEE Trans. Knowl. Data Eng. 2022, 35, 8052–8072.

- Jackson, P.N.; Atapattu, T.; Fernando, B.; Denman, S.; Sridharan, S.; Fookes, C. Style augmentation: Data augmentation via style randomization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019.

- Zhou, K.; Yang, Y.; Qiao, Y.; Xiang, T. Domain generalization with MixStyle. arXiv 2021, arXiv:2104.02008.

- Li, D.; Yang, Y.; Song, Y.Z.; Hospedales, T.M. Deeper, broader and artier domain generalization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5542–5550.

- Khan, A.; Shaaban, M.A.; Khan, M.H. Improving pseudo-labelling and enhancing robustness for semi-supervised domain generalization. arXiv 2024, arXiv:2401.13965.

- Galappaththige, C.J.; Baliah, S.; Gunawardhana, M.; Khan, M.H. Towards generalizing to unseen domains with few labels. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 23691–23700.

- Qi, L.; Yang, H.; Shi, Y.; Geng, X. MultiMatch: Multi-task learning for semi-supervised domain generalization. ACM Trans. Multimed. Comput. Commun. Appl. 2024, 20, 1–21.

- Zhou, K.; Loy, C.C.; Liu, Z. Semi-supervised domain generalization with stochastic StyleMatch. Int. J. Comput. Vis. 2023, 131, 2377–2387.

- Zhou, K.; Loy, C.; Liu, Z. Domain Adaptive Ensemble Learning (DAEL). IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3009–3021.

- Rahman, T.; Zhang, Y.; Chen, J.; Liu, H.; Wang, Q. Style-driven domain generalization for medical image segmentation. arXiv 2024, arXiv:2406.00298.

- Grandvalet, Y.; Bengio, Y. Semi-supervised learning by entropy minimization. Adv. Neural Inf. Process. Syst. 2004, 17, 529–536.

- Laine, S.; Aila, T. Temporal ensembling for semi-supervised learning. arXiv 2016, arXiv:1610.02242.

- Lee, D.H. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In Proceedings of the Workshop on Challenges in Representation Learning, ICML, Atlanta, GA, USA, 17–19 June 2013; Volume 3, p. 896.

- Berthelot, D.; Carlini, N.; Goodfellow, I.; Papernot, N.; Oliver, A.; Raffel, C.A. MixMatch: A holistic approach to semi-supervised learning. Adv. Neural Inf. Process. Syst. 2019, 32, 5050–5060.

- Yu, Z.; Chen, L.; Cheng, Z.; Luo, J. TransMatch: A transfer-learning scheme for semi-supervised few-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12856–12864.

- Zhang, Q.; Wang, H.; Li, X. S&D Messenger: Exchanging semantic and domain knowledge for generic semi-supervised medical image segmentation. IEEE Trans. Med. Imaging 2025, 44, 1201–1213.

- Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; Lempitsky, V. Domain-adversarial training of neural networks. J. Mach. Learn. Res. 2016, 17, 1–35.

- Muandet, K.; Balduzzi, D.; Schölkopf, B. Domain generalization via invariant feature representation. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 17–19 June 2013; pp. 10–18.

- Volpi, R.; Namkoong, H.; Sener, O.; Duchi, J.C.; Murino, V.; Savarese, S. Generalizing to unseen domains via adversarial data augmentation. Adv. Neural Inf. Process. Syst. 2018, 31, 5339–5349.

- Li, D.; Yang, Y.; Song, Y.Z.; Hospedales, T. Learning to generalize: Meta-learning for domain generalization. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Khosla, P.; Teterwak, P.; Wang, C.; Sarna, A.; Tian, Y.; Isola, P.; Maschinot, A.; Liu, C.; Krishnan, D. Supervised contrastive learning. Adv. Neural Inf. Process. Syst. 2020, 33, 18661–18673.

- Dou, Q.; Coelho de Castro, D.; Kamnitsas, K.; Glocker, B. Domain generalization via model-agnostic learning of semantic features. Adv. Neural Inf. Process. Syst. 2019, 32, 6450–6461.

- Zhou, S.; Zhao, H.; Sun, D. Bidirectional Uncertainty-Aware Region Learning for Semi-Supervised Medical Image Segmentation. arXiv 2025, arXiv:2502.07457.

- Qiu, Z.; Gan, W.; Yang, Z.; Zhou, R.; Gan, H. Dual Uncertainty-Guided Multi-Model Pseudo-Label Learning for Semi-Supervised Medical Image Segmentation. Math. Biosci. Eng. 2024, 21, 2212–2232.

- Codella, N.; Rotemberg, V.; Tschandl, P.; Celebi, M.E.; Dusza, S.; Gutman, D.; Helba, B.; Kalloo, A.; Liopyris, K.; Marchetti, M.; et al. Skin lesion analysis toward melanoma detection 2018: A challenge hosted by the international skin imaging collaboration (ISIC). arXiv 2019, arXiv:1902.03368.

- Huang, X.; Belongie, S. Arbitrary style transfer in real-time with adaptive instance normalization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1501–1510.

- Maaten, L.v.d.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

Figure 1.

Overview of the proposed MultiStyle-SSDG framework for semi-supervised domain generalization. (a) The supervised branch trains on labeled images using the cross-entropy loss . (b) In the unlabeled branch, input images undergo both clinically relevant and irrelevant style augmentations. Features are extracted using a shared CNN encoder and projection head. Pseudo-labels are computed in two steps: (1) entropy-based filtering discards uncertain predictions, and (2) the filtered predictions are combined using a weighted Multi-Style ensemble. This path contributes to the unsupervised loss through a shared classifier. (c) For regularization, two feature-level constraints are applied: feature-based conformity (FBC), which aligns representations to class prototypes via , and semantic alignment (SA), which promotes intra-class compactness using .
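The two-step pseudo-labeling in panel (b) can be sketched as follows, assuming each unlabeled image has softmax predictions from several stylized views. Views whose normalized entropy reaches a threshold are discarded, and the remaining views are averaged with per-style weights. The threshold, the weight values, the rule that an image with no surviving view receives no pseudo-label, and the function names are all illustrative assumptions.

```python
import numpy as np

def normalized_entropy(probs: np.ndarray) -> np.ndarray:
    """Entropy along the last axis divided by log(num_classes), in [0, 1]."""
    ent = -(probs * np.log(np.clip(probs, 1e-12, 1.0))).sum(axis=-1)
    return ent / np.log(probs.shape[-1])

def multistyle_pseudo_labels(view_probs, style_weights, tau=0.5):
    """view_probs: (N, V, C) softmax outputs for V stylized views of N images.
    style_weights: (V,) non-negative weights, e.g., favoring clinically relevant styles.

    Step 1: drop views whose normalized entropy is >= tau.
    Step 2: weighted average of the surviving views, then argmax.
    Returns labels (N,) and a mask (N,) marking images with at least one surviving view.
    """
    keep = normalized_entropy(view_probs) < tau              # (N, V) boolean
    w = keep * style_weights[None, :]                        # dropped views get zero weight
    w_sum = w.sum(axis=1, keepdims=True)                     # (N, 1)
    has_label = w_sum[:, 0] > 0
    # Renormalize weights; images whose views were all dropped keep zero weights.
    w_norm = np.divide(w, w_sum, out=np.zeros_like(w), where=w_sum > 0)
    ensemble = np.einsum("nv,nvc->nc", w_norm, view_probs)
    return ensemble.argmax(axis=1), has_label

view_probs = np.array([
    [[0.90, 0.05, 0.05], [0.80, 0.10, 0.10]],  # image 0: second view too uncertain, dropped
    [[1/3, 1/3, 1/3], [1/3, 1/3, 1/3]],        # image 1: both views dropped, no pseudo-label
    [[0.10, 0.85, 0.05], [0.05, 0.05, 0.90]],  # image 2: both kept, weights decide
])
style_weights = np.array([0.7, 0.3])  # hypothetical: relevant (medical) style, artistic style
labels, has_label = multistyle_pseudo_labels(view_probs, style_weights, tau=0.5)
```

With equal weights, image 2 would instead be assigned class 2. The style weights can therefore change the final pseudo-label when views disagree.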


Figure 2.

Multi-style neural-style transfer for domain generalization. (A) Irrelevant artistic style transfer: Skin lesion content images are transformed using unrelated painting styles to simulate out-of-domain noise. (B) Relevant medical style transfer: Lesion images are augmented using style features from other medical cases to simulate inter-institutional variability. These augmentations help train domain-invariant representations while preserving diagnostic semantics.


Figure 3.

Comparison of classification accuracy across methods on ISIC2019 under 5% and 10% labeled data. Our proposed method (MultiStyle-SSDG) consistently outperforms all baselines, especially in the low-label regime.


Figure 4.

A 2D t-SNE visualization of features from three classes (AK, BCC, MEL) extracted from the original ISIC2019 dataset. While some separation is visible, significant overlap remains due to intra-domain variance.


Figure 5.

A 2D t-SNE visualization of data stylized with irrelevant, artistic (non-medical) style transformations. Clusters remain loosely defined and spread out, suggesting limited benefit for feature discrimination.


Figure 6.

A 2D t-SNE visualization of data stylized with relevant, medical imaging-inspired styles. Domain-specific clusters are noticeably more compact and isolated, supporting the value of medically meaningful augmentations.


Figure 7.

A 2D t-SNE comparison of the MEL class across three stylization types: original data, medical-relevant augmentation, and artistic stylization. The relevant stylization maintains semantic proximity, while irrelevant styles introduce domain noise.


Figure 8.

Ablation study of MultiStyle-SSDG components under 5% labeled data. Bars represent mean ± standard deviation across five independent runs. Removing Uncertainty Filtering produces the largest performance drop, underscoring its importance in suppressing noisy pseudo-labels in cross-domain settings. Feature-Based Conformity (FBC) improves intra-class compactness, while Domain-Specific Heads with Attention Fusion provide complementary style-specific cues that a shared head cannot capture. When all three components are removed, the drop in accuracy exceeds the sum of individual effects, illustrating a clear synergy: these modules are most effective when integrated together.


Table 1.

Comparison of recent SSDG methods in medical imaging. ✓ indicates the presence of a component, and ✗ indicates its absence. Our proposed method is shown in bold.


| Method | Style Aug. | Uncertainty Filtering | Domain-Specific Heads | Feature Regularization | Modular Design |
|---|---|---|---|---|---|
| FixMatch [4] | ✗ | ✗ | ✗ | ✗ | ✗ |
| StyleMatch [14] | ✓ (Stochastic Style) | ✗ | ✗ | ✗ | ✗ |
| UPLM [11] | ✗ | ✓ (MC Dropout) | ✗ | ✓ | ✗ |
| MultiMatch [13] | ✗ | ✗ | ✓ (Static Heads) | ✗ | ✗ |
| Few-Labels [12] | ✗ | ✗ | ✗ | ✓ (Prototypes & SA) | ✗ |
| **MultiStyle-SSDG (Ours)** | ✓ (Dual-Level Style) | ✓ (Entropy) | ✓ (Attention-Fused) | ✓ (FBC + SA) | ✓ |

Table 2.

Class-wise distribution of the ISIC2019 dataset.


| Class | Abbreviation | Count |
|---|---|---|
| Melanoma | MEL | 4522 |
| Melanocytic nevus | NV | 12,875 |
| Basal cell carcinoma | BCC | 3323 |
| Actinic keratosis | AK | 867 |
| Benign keratosis-like lesions | BKL | 2624 |
| Dermatofibroma | DF | 239 |
| Vascular lesions | VASC | 253 |
| Squamous cell carcinoma | SCC | 628 |
| Total | – | 25,331 |

Table 3.

Semi-supervised classification accuracy (%) on ISIC2019 under 5% and 10% label settings. Bold values indicate the best performance in each setting.


| Method | 5% Labels (Mean ± std) | 10% Labels (Mean ± std) |
|---|---|---|
| FixMatch [4] | 73.64 ± 0.72 | 75.03 ± 0.64 |
| StyleMatch [14] | 75.10 ± 0.69 | 76.90 ± 0.61 |
| UPLM [11] | 76.20 ± 0.15 | 78.30 ± 0.11 |
| Ours (MultiStyle-SSDG) | **78.62 ± 0.18** | **80.36 ± 0.16** |

Table 4.

Ablation study on ISIC2019 using 10% labeled data. Accuracy drop is relative to the full model baseline (80.4%). Statistical significance compared to the full model is marked with ** (). Bold values indicate the best performance.


| Component Removed | Accuracy (%) | Drop (pp) |
|---|---|---|
| None (Full Model) | 80.4 ± 0.7 | – |
| Uncertainty Filtering | 77.3 ± 0.9 ** | 3.1 |
| Feature-Based Conformity | 77.8 ± 0.8 ** | 2.6 |
| MultiMatch-style Heads | 78.6 ± 0.6 | 1.8 |
| Supervised Contrastive Loss () | 78.9 ± 0.7 ** | 1.5 |
| All Components Removed | 72.4 ± 1.2 ** | 8.0 |

Table 5.

Accuracy (%) under different domain shift types on ISIC2019 using 5% labeled data. Statistical significance compared to the full model is marked with ** (). Bold values indicate the best performance.


| Method | Style Shifts | Background Shifts | Corruption Shifts |
|---|---|---|---|
| FixMatch [4] | 73.64 ± 0.9 | 70.50 ± 1.2 | 68.90 ± 1.1 |
| StyleMatch [14] | 75.10 ± 0.8 ** | 71.30 ± 1.0 ** | 69.20 ± 0.9 |
| Proposed Method | 78.20 ± 0.7 ** | 74.40 ± 0.8 ** | 71.30 ± 0.7 ** |

Table 6.

Accuracy (%) under different domain shift types on ISIC2019 using 10% labeled data. Statistical significance compared to the full model is marked with ** (). Bold values indicate the best performance.


| Method | Style Shifts | Background Shifts | Corruption Shifts |
|---|---|---|---|
| FixMatch [4] | 75.03 ± 0.8 | 72.00 ± 1.0 | 70.00 ± 1.1 |
| StyleMatch [14] | 76.90 ± 0.9 ** | 73.20 ± 1.0 ** | 71.00 ± 0.8 |
| Proposed Method | 80.10 ± 0.6 ** | 76.30 ± 0.7 ** | 74.00 ± 0.9 ** |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).